Unidimensional ACGAN Applied to Link Establishment Behaviors Recognition of a Short-Wave Radio Station

Abstract

1. Introduction

- A method based on ACGAN+DenseNet was proposed to recognize radio stations’ LE behaviors without knowledge of the communication protocol standard, which is of considerable value in the military field;

- A new ACGAN, called unidimensional ACGAN, was presented to generate more LE behavior signals. The presented ACGAN was able to directly process and generate unidimensional electromagnetic signals, whereas the original ACGAN is mostly applied to images in the field of computer vision rather than to unidimensional signals;

- We used a unidimensional Convolutional Auto-Encoder to represent deep features of the generated samples, which provided a novel way to verify the reliability of ACGAN when applied in the generation of electromagnetic signals.

2. Methods

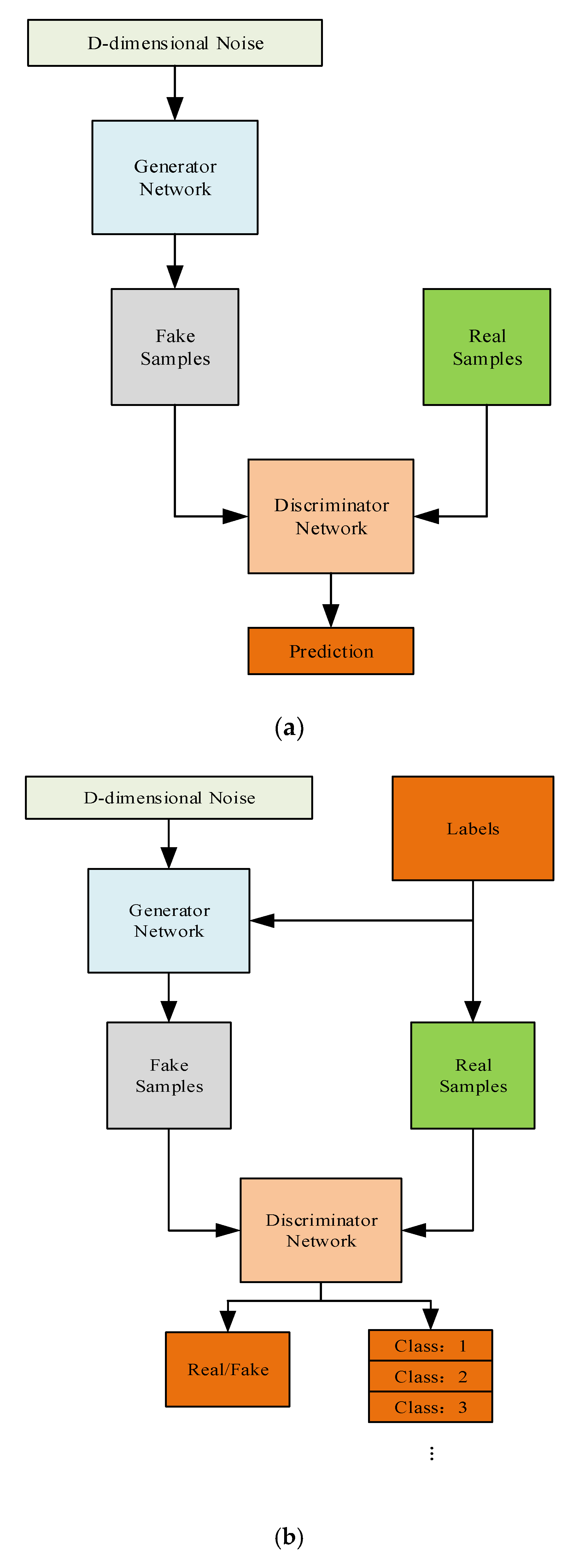

2.1. ACGAN

2.2. Unidimensional ACGAN

2.3. LE Behavior Recognition Algorithm Based on Unidimensional ACGAN+DenseNet

3. Experimental Results and Analysis

3.1. LE Behavior Signals Dataset

3.2. Unidimensional ACGAN Generates LE Behavior Signals

3.3. The LE Behavior Recognition Performance Based on Unidimensional ACGAN + DenseNet

3.4. Comparison Experiment

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Layer | Input Size | Output Size |
|---|---|---|
| Input | 500 (noise) + 1 (label) | 500 |
| Fully Connected | 500 | 1472 * 30 |
| Reshape | 1472 * 30 | (1472, 30) |
| BN(0.8) | (1472, 30) | (1472, 30) |
| UpSampling1D | (1472, 30) | (2944, 30) |
| Conv1D(KS = 3, S = 1) | (2944, 30) | (2944, 30) |
| Activation(“ReLU”) + BN(0.8) | (2944, 30) | (2944, 30) |
| UpSampling1D | (2944, 30) | (5888, 30) |
| Conv1D(KS = 3, S = 1) | (5888, 30) | (5888, 20) |
| Activation(“ReLU”) + BN(0.8) | (5888, 20) | (5888, 20) |
| Conv1D(KS = 3, S = 1) | (5888, 20) | (5888, 1) |
| Output(Activation(“tanh”)) | (5888, 1) | (5888, 1) |
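
The shape bookkeeping in the table above can be checked with a few lines of plain Python. This is only a sketch, not the authors' implementation: the function name `trace_generator` is hypothetical, Conv1D is assumed to use "same" padding (so a stride of 1 keeps the length), and the table does not say how the label is combined with the noise, so the input is simply treated as a 500-element vector.

```python
# Shape bookkeeping for the generator table (pure Python, no DL framework).
# Assumption: Conv1D uses "same" padding, so stride 1 keeps the length.

def trace_generator(latent_dim=500):
    """Return a list of (layer name, output shape) pairs."""
    steps = []
    shape = (latent_dim,)                  # noise combined with the label
    steps.append(("Input", shape))

    shape = (1472 * 30,)                   # Fully Connected
    steps.append(("Fully Connected", shape))

    shape = (1472, 30)                     # Reshape to (length, channels)
    steps.append(("Reshape", shape))

    # Two UpSampling1D + Conv1D stages; BN and ReLU leave shapes unchanged.
    for filters in (30, 20):
        shape = (shape[0] * 2, shape[1])   # UpSampling1D doubles the length
        steps.append(("UpSampling1D", shape))
        shape = (shape[0], filters)        # Conv1D, kernel 3, stride 1
        steps.append(("Conv1D", shape))

    shape = (shape[0], 1)                  # final Conv1D, followed by tanh
    steps.append(("Output", shape))
    return steps


if __name__ == "__main__":
    for name, shape in trace_generator():
        print(f"{name:16s} {shape}")
```

The two upsampling stages account for the whole change in length (1472 to 2944 to 5888); the convolutions only change the channel count.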

| Layer | Input Size | Output Size |
|---|---|---|
| Input | (5888, 1) | (5888, 1) |
| Conv1D(KS = 3, S = 2) | (5888, 1) | (2944, 20) |
| Activation(“LeakyReLU(0.2)”) | (2944, 20) | (2944, 20) |
| Dropout(0.25) | (2944, 20) | (2944, 20) |
| Conv1D(KS = 3, S = 2) | (2944, 20) | (1472, 20) |
| Activation(“LeakyReLU(0.2)”) | (1472, 20) | (1472, 20) |
| Dropout(0.25) | (1472, 20) | (1472, 20) |
| Conv1D(KS = 3, S = 2) | (1472, 20) | (736, 30) |
| Activation(“LeakyReLU(0.2)”) | (736, 30) | (736, 30) |
| Dropout(0.25) | (736, 30) | (736, 30) |
| Conv1D(KS = 3, S = 2) | (736, 30) | (368, 30) |
| Activation(“LeakyReLU(0.2)”) | (368, 30) | (368, 30) |
| Dropout(0.25) | (368, 30) | (368, 30) |
| Flatten | (368, 30) | 368 * 30 |
| Output | 368 * 30 | 1 (real/fake); 7 (class) |
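
The halving of the sequence length at each stride-2 convolution, and the size of the flattened feature vector that feeds the two outputs, can be reproduced with the following sketch. It rests on assumptions that the table does not state: "same" padding, a bias term in every layer, and two dense heads attached directly to the flattened features. The helper names are hypothetical, and the parameter count is illustrative only.

```python
import math


def conv1d_same_length(length, stride):
    # With "same" padding (assumed), output length is ceil(length / stride).
    return math.ceil(length / stride)


def conv1d_params(kernel_size, in_channels, filters):
    # Kernel weights plus one bias per filter (bias terms are an assumption).
    return kernel_size * in_channels * filters + filters


def trace_discriminator(length=5888, channels=1, n_classes=7):
    """Return (final conv shape, flattened size, parameter count)."""
    total_params = 0
    for filters in (20, 20, 30, 30):       # four Conv1D blocks, stride 2
        total_params += conv1d_params(3, channels, filters)
        length, channels = conv1d_same_length(length, 2), filters
    flat = length * channels               # Flatten
    # Two dense heads on the flattened features: real/fake and class label.
    total_params += (flat + 1) * 1 + (flat + 1) * n_classes
    return (length, channels), flat, total_params


if __name__ == "__main__":
    shape, flat, params = trace_discriminator()
    print(shape, flat, params)
```

LeakyReLU and Dropout are omitted from the trace because they change neither the shape nor the number of trainable parameters.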

| Layer (Encoder) | Input Size | Output Size |
|---|---|---|
| Input Layer | (5888, 1) | (5888, 1) |
| Conv1D, S = 1, KS = 3 | (5888, 1) | (5888, 20) |
| MaxPooling1D(2) | (5888, 20) | (2944, 20) |
| Conv1D, S = 1, KS = 3 | (2944, 20) | (2944, 20) |
| MaxPooling1D(2) | (2944, 20) | (1472, 20) |
| Flatten | (1472, 20) | 29440 |
| Fully Connected | 29440 | 256 |
| (Out Layer) Fully Connected | 256 | 2 |

| Layer (Decoder) | Input Size | Output Size |
|---|---|---|
| Input Layer | 2 | 2 |
| Fully Connected | 2 | 256 |
| Fully Connected | 256 | 29440 |
| Reshape | 29440 | (1472, 20) |
| UpSampling1D | (1472, 20) | (2944, 20) |
| Conv1D, S = 1, KS = 3 | (2944, 20) | (2944, 20) |
| UpSampling1D | (2944, 20) | (5888, 20) |
| Conv1D, S = 1, KS = 3 | (5888, 20) | (5888, 20) |
| (Out Layer) Conv1D, S = 1, KS = 3 | (5888, 20) | (5888, 1) |
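
The encoder and decoder tables mirror each other around a two-element code. A minimal sketch of that symmetry, again in plain Python with hypothetical function names and assuming "same" padding for the stride-1 convolutions, is:

```python
def trace_encoder(shape=(5888, 1)):
    """Shapes after the pooling stages, Flatten, and the two dense layers."""
    length, _ = shape
    channels = 20                   # both Conv1D layers output 20 channels
    for _ in range(2):              # Conv1D (stride 1), then MaxPooling1D(2)
        length //= 2                # pooling halves the length
    flat = length * channels        # Flatten
    return [(length, channels), (flat,), (256,), (2,)]


def trace_decoder(code_dim=2):
    """Shapes from the code vector back to a one-channel signal."""
    shapes = [(code_dim,), (256,), (29440,), (1472, 20)]
    length, channels = shapes[-1]
    for _ in range(2):              # UpSampling1D, then Conv1D (stride 1)
        length *= 2                 # upsampling doubles the length
        shapes.append((length, channels))
    shapes.append((length, 1))      # output Conv1D restores one channel
    return shapes


if __name__ == "__main__":
    print(trace_encoder())
    print(trace_decoder())
```

The decoder output has the same shape as the encoder input, (5888, 1), so each signal is compressed to two values and then reconstructed at full length.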

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, Z.; Chen, H.; Lei, Y. Unidimensional ACGAN Applied to Link Establishment Behaviors Recognition of a Short-Wave Radio Station. Sensors 2020, 20, 4270. https://doi.org/10.3390/s20154270