Color Image Generation from Range and Reflection Data of LiDAR

Abstract

1. Introduction

2. Proposed Method

2.1. Transformation of 3D LiDAR Point Cloud

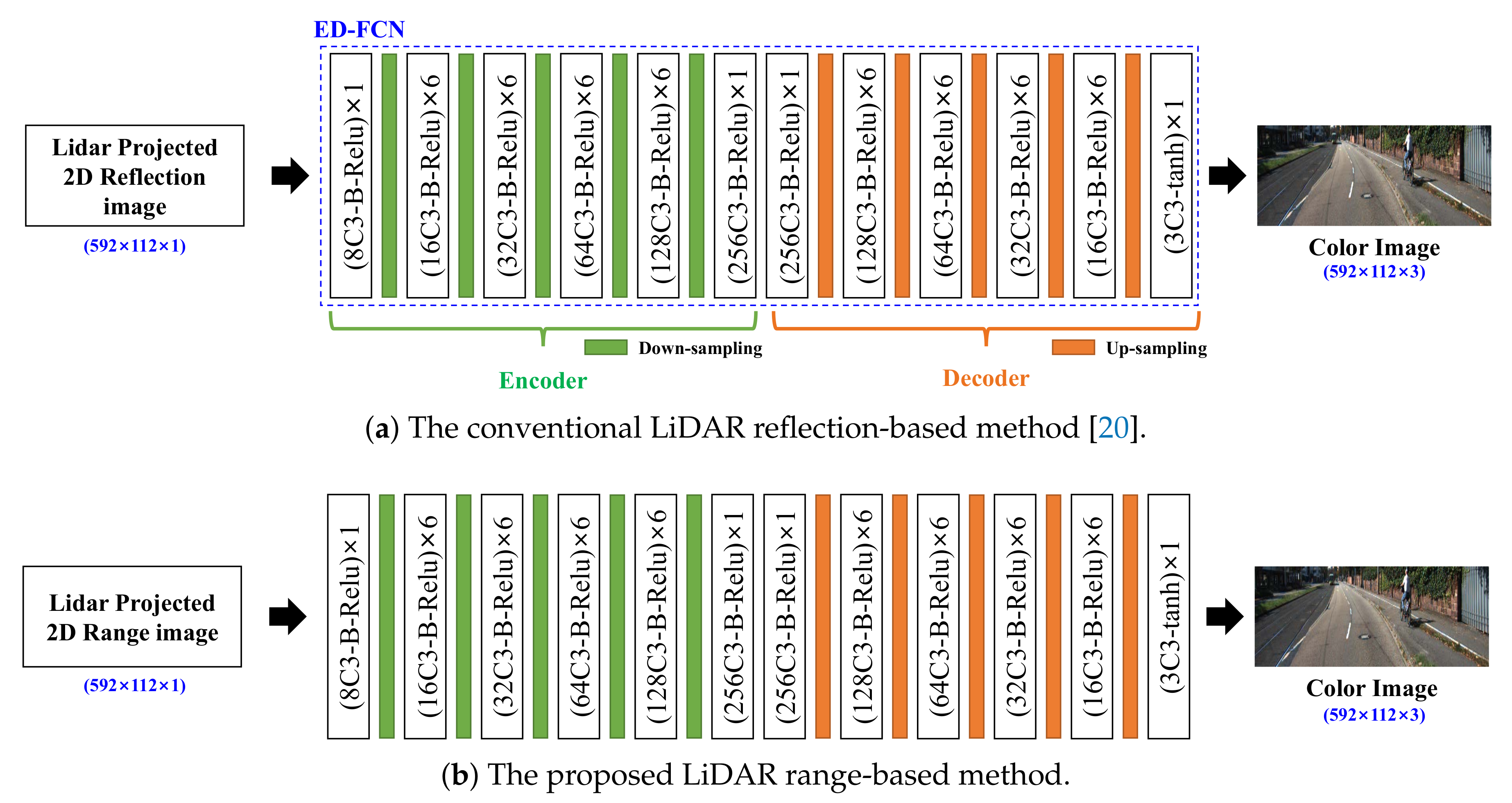

2.2. Single-Input-Based Color Image Generation

2.2.1. LiDAR Reflection-Based Method

2.2.2. LiDAR Range-Based Method

2.3. Proposed Multi-Input-Based Color Image Generation

2.3.1. Early Fusion-Based LiDAR to Color Image Generation Network

2.3.2. Mid Fusion-Based LiDAR to Color Image Generation Network

2.3.3. Last Fusion-Based LiDAR to Color Image Generation Network

2.4. Training and Inference Processes

3. Experimental Results

3.1. Simulation Environment

3.1.1. Evaluation Dataset

3.1.2. Measurement Metrics

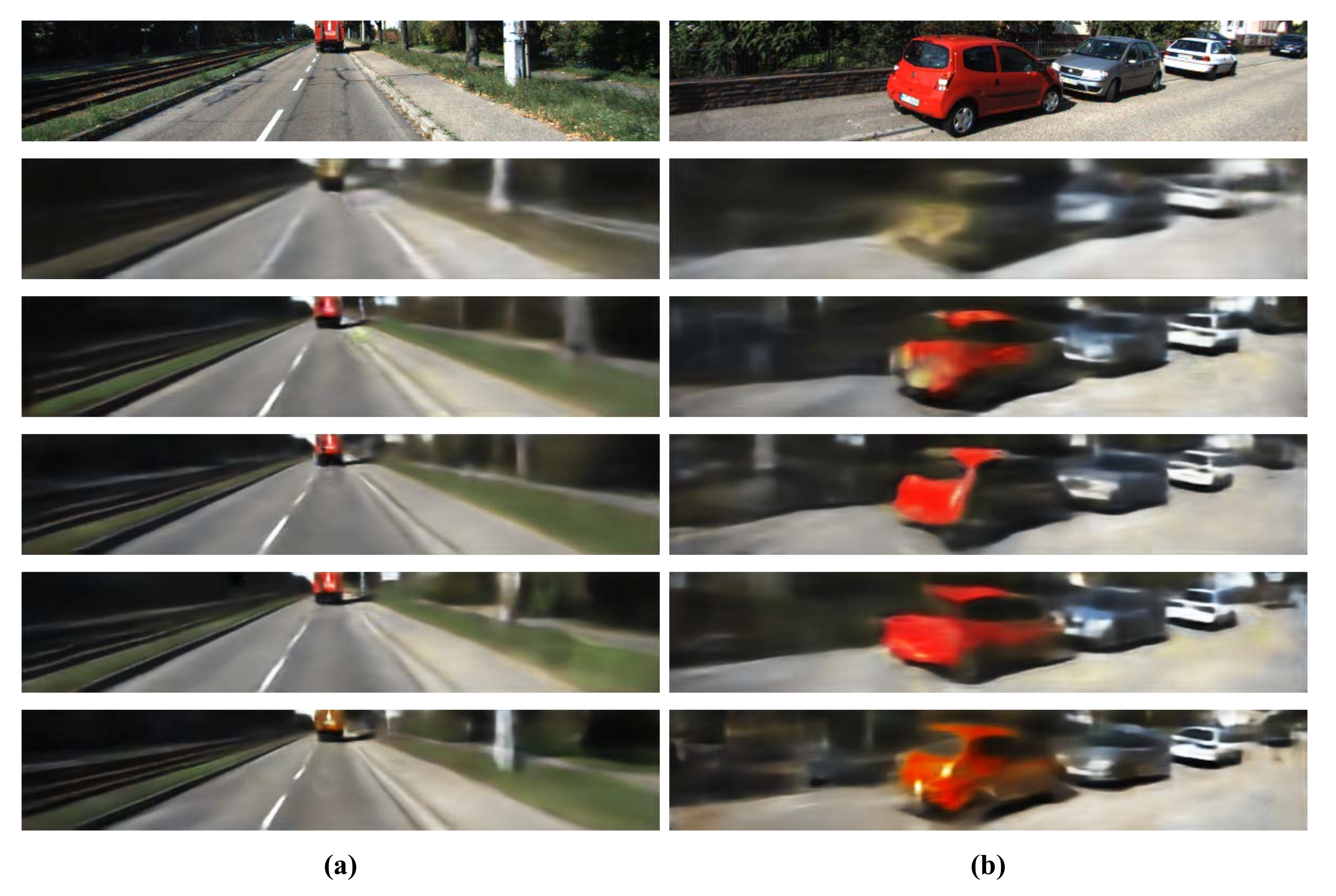
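As a point of reference for the PSNR values reported in the result tables, the following is a minimal sketch of the standard peak signal-to-noise ratio between a reference camera image and a generated image. It is not taken from the authors' code: the function name `psnr`, the `max_value=255` default (which assumes 8-bit images), and the use of NumPy are assumptions of this sketch, and it does not distinguish between the two PSNR variants listed in the tables.

```python
import numpy as np


def psnr(reference: np.ndarray, generated: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    max_value is the largest possible pixel value (255 assumes 8-bit images).
    """
    ref = reference.astype(np.float64)
    gen = generated.astype(np.float64)
    if ref.shape != gen.shape:
        raise ValueError("images must have the same shape")

    # Mean squared error over every pixel and channel.
    mse = float(np.mean((ref - gen) ** 2))
    if mse == 0.0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return 10.0 * float(np.log10(max_value ** 2 / mse))
```

A higher value means the generated image is closer to the reference; identical images give an infinite PSNR.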

3.2. Performances of Single-Input-Based Methods

3.3. Performances of Proposed Multi-Input-Based Methods

3.4. Subjective Image Quality Evaluation

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Input Data | Validation PSNR | Validation PSNR | Validation SSIM | Test PSNR | Test PSNR | Test SSIM |
|---|---|---|---|---|---|---|
| LiDAR reflection | 19.57 (±2.49) | 19.18 (±2.36) | 0.51 (±0.11) | 19.53 (±2.47) | 19.15 (±2.34) | 0.51 (±0.11) |
| LiDAR range | 18.90 (±2.01) | 18.55 (±1.95) | 0.46 (±0.09) | 18.86 (±2.00) | 18.51 (±1.94) | 0.46 (±0.09) |

| Input Data | Number of Weights | Average Processing Time [ms] |
|---|---|---|
| LiDAR reflection | 3,350,243 | 6.91 |
| LiDAR range | 3,350,243 | 6.91 |

| Fusion-Based Network | Validation PSNR | Validation PSNR | Validation SSIM | Test PSNR | Test PSNR | Test SSIM |
|---|---|---|---|---|---|---|
| Early fusion-based network | 19.79 (±2.51) | 19.37 (±2.37) | 0.52 (±0.11) | 19.71 (±2.52) | 19.31 (±2.37) | 0.52 (±0.11) |
| Mid fusion-based network | 19.96 (±2.46) | 19.55 (±2.34) | 0.52 (±0.11) | 19.91 (±2.44) | 19.50 (±2.30) | 0.52 (±0.11) |
| Last fusion-based network | 20.10 (±2.62) | 19.68 (±2.44) | 0.53 (±0.11) | 20.06 (±2.61) | 19.64 (±2.42) | 0.53 (±0.11) |

| Fusion-Based Network | Number of Weights | Average Processing Time [ms] |
|---|---|---|
| Early fusion-based network | 3,550,315 | 7.03 |
| Mid fusion-based network | 4,863,219 | 9.93 |
| Last fusion-based network | 6,700,531 | 13.56 |
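The tables above report image quality and computational cost separately. A short sketch such as the following can put the two side by side. The numbers are copied from the tables; choosing the first test PSNR column and the single-input LiDAR reflection network as the baseline is an assumption of this sketch, and the names `BASELINE`, `FUSION`, and `tradeoff` are illustrative only.

```python
# (first test PSNR column in dB, average processing time in ms), copied from the tables.
BASELINE = (19.53, 6.91)  # single-input LiDAR reflection network (assumed baseline)
FUSION = {
    "early": (19.71, 7.03),
    "mid": (19.91, 9.93),
    "last": (20.06, 13.56),
}


def tradeoff(baseline, candidate):
    """Return (PSNR gain in dB, processing-time ratio) of candidate vs. baseline."""
    psnr_gain = candidate[0] - baseline[0]
    time_ratio = candidate[1] / baseline[1]
    return round(psnr_gain, 2), round(time_ratio, 2)


summary = {name: tradeoff(BASELINE, values) for name, values in FUSION.items()}
for name, (gain, ratio) in summary.items():
    print(f"{name} fusion: +{gain:.2f} dB PSNR at {ratio:.2f}x processing time")
```

On the reported numbers, the last fusion-based network gains about 0.53 dB over the reflection-only baseline at roughly 1.96 times the processing time, while the early fusion-based network gains about 0.18 dB at nearly unchanged cost.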

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, H.-K.; Yoo, K.-Y.; Jung, H.-Y. Color Image Generation from Range and Reflection Data of LiDAR. Sensors 2020, 20, 5414. https://doi.org/10.3390/s20185414