Evaluating Convolutional Neural Networks for Cage-Free Floor Egg Detection

Abstract

1. Introduction

2. Materials and Methods

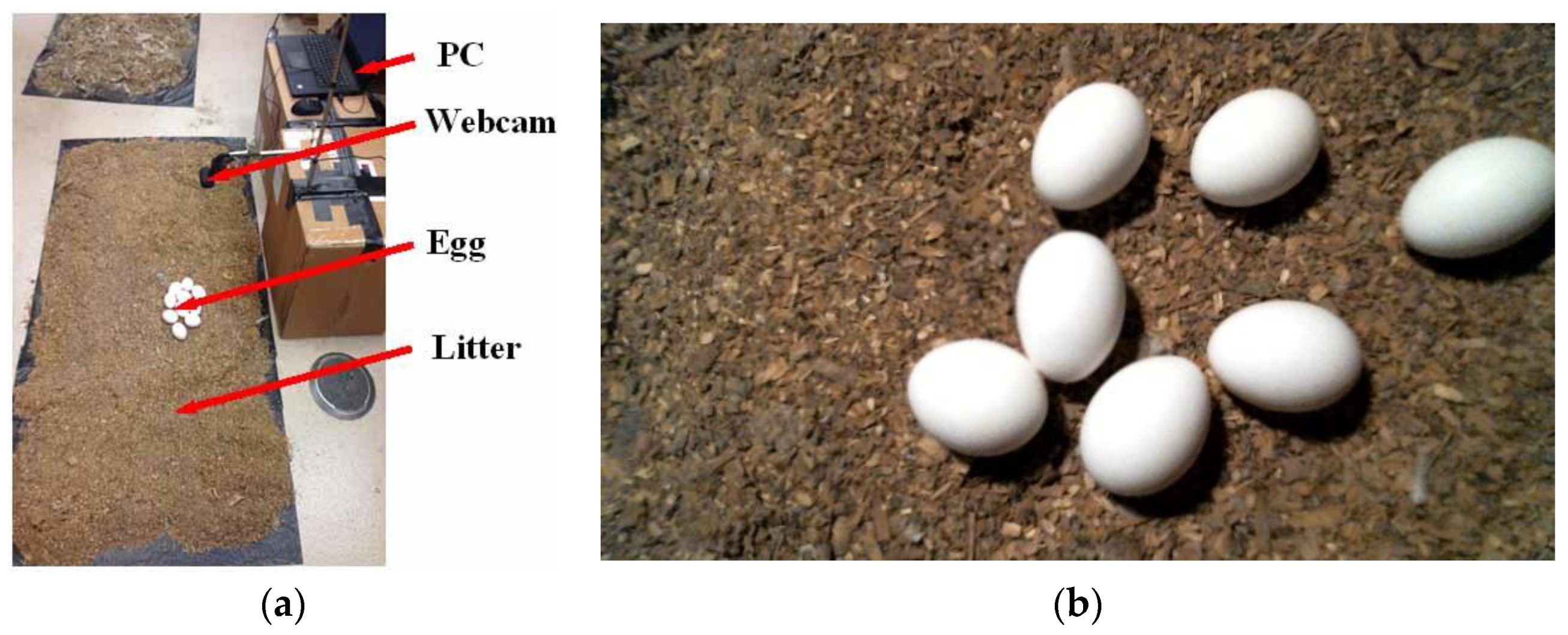

2.1. System Description

2.2. Network Description

2.3. General Workflow of Detector Training, Validation, and Testing

2.4. Development of CNN Floor-Egg Detectors

2.4.1. Preparation of Development Environment

- Install Python and the required libraries, including Pillow, lxml, Cython, Matplotlib, pandas, OpenCV, and TensorFlow-GPU. This step sets up the virtual environment used for detector training, validation, and testing.

- Label eggs in images and create XML files. The Python-based annotation tool LabelImg is used to draw a rectangular bounding box around each egg. The labels are saved as XML files in Pascal Visual Object Classes (VOC) format; each file records the file name, file path, image size (width, height, and depth), object class, and pixel coordinates (xmin, ymin, xmax, and ymax) of every bounding box. Each image has one corresponding XML file.

- Create CSV and TFRecord files. For every bounding box in each image, the CSV files list the image name, image size (width, height, and depth), object class, and pixel coordinates (xmin, ymin, xmax, and ymax). The CSV files are then converted into TFRecord files, TensorFlow’s binary storage format.

- Download the pretrained CNN object detectors from the TensorFlow detection model zoo [18] and install them. The versions used in this study were “ssd_mobilenet_v1_coco_2018_01_28” for the SSD detector, “faster_rcnn_inception_v2_coco_2018_01_28” for the faster R-CNN detector, and “rfcn_resnet101_coco_2018_01_28” for the R-FCN detector.
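To make the XML-to-CSV step concrete, here is a minimal sketch using only Python's standard library. It is not the authors' script: the function names `voc_xml_to_rows` and `rows_to_csv`, the CSV column order, and the class label `egg` are illustrative assumptions. The element names (`filename`, `size`, `object`, `name`, `bndbox`) follow the Pascal VOC layout that LabelImg writes. The subsequent CSV-to-TFRecord conversion, which requires TensorFlow, is not shown.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Assumed column order for the per-box CSV (mirrors the fields listed above).
CSV_COLUMNS = ["filename", "width", "height", "depth",
               "class", "xmin", "ymin", "xmax", "ymax"]


def voc_xml_to_rows(xml_text):
    """Flatten one Pascal VOC annotation into one row per bounding box."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    depth = int(size.findtext("depth"))
    rows = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        rows.append([
            filename, width, height, depth,
            obj.findtext("name"),
            # float() before int() tolerates coordinates written as "12.0".
            int(float(box.findtext("xmin"))),
            int(float(box.findtext("ymin"))),
            int(float(box.findtext("xmax"))),
            int(float(box.findtext("ymax"))),
        ])
    return rows


def rows_to_csv(rows):
    """Serialize rows, preceded by a header line, to CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(CSV_COLUMNS)
    writer.writerows(rows)
    return buf.getvalue()


# Usage with a hypothetical two-egg annotation.
sample = """<annotation>
  <filename>egg_0001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>egg</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>160</xmax><ymax>170</ymax></bndbox>
  </object>
  <object><name>egg</name>
    <bndbox><xmin>300</xmin><ymin>200</ymin><xmax>352</xmax><ymax>246</ymax></bndbox>
  </object>
</annotation>"""
print(rows_to_csv(voc_xml_to_rows(sample)))
```

One row per box (rather than per image) keeps the CSV flat; rows sharing a file name are grouped back into a single record when each image is written to TFRecord.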

2.4.2. Development of the Floor-Egg Detectors (Network Training)

2.5. Validation

2.5.1. Validation Strategy

2.5.2. Evaluation and Performance Metrics

2.6. Comparison of Convolutional Neural Network (CNN) Floor-Egg Detectors

2.7. Evaluation of the Optimal Floor-Egg Detector under Different Settings

2.8. Generalizability of the Optimal CNN Floor-Egg Detector

3. Results

3.1. Floor Egg Detection Using the CNN Floor-Egg Detectors

3.2. Performance of the Three CNN Floor-Egg Detectors

3.3. Performance of the Optimal Convolutional Neural Network (CNN) Floor-Egg Detector

3.3.1. Detector Performance with Different Camera Settings

3.3.2. Detector Performance with Different Environmental Settings

3.3.3. Detector Performance with Different Egg Settings

3.4. Performance of the Faster R-CNN Detector under Random Settings

4. Discussion

4.1. Performance of the Three CNN Floor-Egg Detectors

4.2. Performance of the Faster R-CNN Detector under Different Settings

4.3. Performance of the Faster R-CNN Detector under Random Settings

5. Conclusions

- Compared with the SSD and R-FCN detectors, the faster R-CNN detector had the highest accuracy (98.1 ± 0.3%) for detecting floor eggs under a wide range of commercial conditions and system setups, and its recall (98.4 ± 0.4%) was far higher than that of the SSD detector (72.1 ± 7.2%) and comparable to that of the R-FCN detector (98.5 ± 0.5%). It also had an acceptable processing speed (201.5 ± 2.3 ms·image⁻¹), high precision (99.7 ± 0.2%), and low RMSE (0.8–1.1 mm).

- The faster R-CNN detector performed well in detecting floor eggs under a range of common cage-free (CF) housing conditions; the exception was brown eggs at a light intensity of 1 lux, for which recall dropped to 34.4 ± 7.9%. Otherwise, its precision, recall, and accuracy were not affected by camera height, camera tilting angle, light intensity, litter condition, egg color, buried depth, egg number in an image, egg proportion in an image, eggshell cleanness, or egg contact in images, although the localization RMSE increased with camera height (RMSExy of 8.0–8.9 mm at 70 cm).

- The precision, recall, and accuracy of the faster R-CNN detector in floor egg detection were 91.9%–100% under random settings, suggesting good generalizability.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Hartcher, K.; Jones, B. The welfare of layer hens in cage and cage-free housing systems. World’s Poult. Sci. J. 2017, 73, 767–782.

2. Lentfer, T.L.; Gebhardt-Henrich, S.G.; Fröhlich, E.K.; von Borell, E. Influence of nest site on the behaviour of laying hens. Appl. Anim. Behav. Sci. 2011, 135, 70–77.

3. Oliveira, J.L.; Xin, H.; Chai, L.; Millman, S.T. Effects of litter floor access and inclusion of experienced hens in aviary housing on floor eggs, litter condition, air quality, and hen welfare. Poult. Sci. 2018, 98, 1664–1677.

4. Vroegindeweij, B.A.; Blaauw, S.K.; IJsselmuiden, J.M.; van Henten, E.J. Evaluation of the performance of PoultryBot, an autonomous mobile robotic platform for poultry houses. Biosyst. Eng. 2018, 174, 295–315.

5. Jones, D.; Anderson, K. Housing system and laying hen strain impacts on egg microbiology. Poult. Sci. 2013, 92, 2221–2225.

6. Abrahamsson, P.; Tauson, R. Performance and egg quality of laying hens in an aviary system. J. Appl. Poult. Res. 1998, 7, 225–232.

7. Bac, C.; Hemming, J.; Van Henten, E. Robust pixel-based classification of obstacles for robotic harvesting of sweet-pepper. Comput. Electron. Agric. 2013, 96, 148–162.

8. Bac, C.W.; Hemming, J.; Van Tuijl, B.; Barth, R.; Wais, E.; van Henten, E.J. Performance evaluation of a harvesting robot for sweet pepper. J. Field Robot. 2017, 34, 1123–1139.

9. Hiremath, S.; van Evert, F.; Heijden, V.; ter Braak, C.; Stein, A. Image-based particle filtering for robot navigation in a maize field. In Proceedings of the Workshop on Agricultural Robotics (IROS 2012), Vilamoura, Portugal, 7–12 October 2012; pp. 7–12.

10. Vroegindeweij, B.A.; Kortlever, J.W.; Wais, E.; van Henten, E.J. Development and test of an egg collecting device for floor eggs in loose housing systems for laying hens. Presented at the International Conference of Agricultural Engineering AgEng 2014, Zurich, Switzerland, 6–10 July 2014.

11. Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7310–7311.

12. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

13. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016; pp. 379–387.

14. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

15. Wang, D.; Tang, J.; Zhu, W.; Li, H.; Xin, J.; He, D. Dairy goat detection based on Faster R-CNN from surveillance video. Comput. Electron. Agric. 2018, 154, 443–449.

16. Yang, Q.; Xiao, D.; Lin, S. Feeding behavior recognition for group-housed pigs with the Faster R-CNN. Comput. Electron. Agric. 2018, 155, 453–460.

17. Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.-H.; Olsson, A.-C.; Müller, S.; Hensel, O. Deep Learning and Machine Vision Approaches for Posture Detection of Individual Pigs. Sensors 2019, 19, 3738.

18. Huang, J.; Rathod, V.; Chow, D.; Sun, C.; Zhu, M.; Fathi, A.; Lu, Z. TensorFlow Object Detection API. Available online: https://github.com/tensorflow/models/tree/master/research/object_detection (accessed on 5 May 2019).

19. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

20. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

22. Google Cloud. Creating an Object Detection Application Using TensorFlow. Available online: https://cloud.google.com/solutions/creating-object-detection-application-tensorflow (accessed on 22 July 2019).

23. Japkowicz, N. Why question machine learning evaluation methods. In Proceedings of the AAAI Workshop on Evaluation Methods for Machine Learning, Boston, MA, USA, 16–17 July 2006; pp. 6–11.

24. Gunawardana, A.; Shani, G. A survey of accuracy evaluation metrics of recommendation tasks. J. Mach. Learn. Res. 2009, 10, 2935–2962.

25. Wang, J.; Yu, L.-C.; Lai, K.R.; Zhang, X. Dimensional sentiment analysis using a regional CNN-LSTM model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers); Association for Computational Linguistics: Berlin, Germany, 2016; pp. 225–230.

26. Zhang, T.; Liu, L.; Zhao, K.; Wiliem, A.; Hemson, G.; Lovell, B. Omni-supervised joint detection and pose estimation for wild animals. Pattern Recognit. Lett. 2018.

27. Pacha, A.; Choi, K.-Y.; Coüasnon, B.; Ricquebourg, Y.; Zanibbi, R.; Eidenberger, H. Handwritten music object detection: Open issues and baseline results. In Proceedings of the 2018 13th IAPR International Workshop on Document Analysis Systems (DAS), Vienna, Austria, 24–27 April 2018; pp. 163–168.

28. Korolev, S.; Safiullin, A.; Belyaev, M.; Dodonova, Y. Residual and plain convolutional neural networks for 3D brain MRI classification. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 835–838.

29. Adam, C. Egg Lab Results. Available online: https://adamcap.com/schoolwork/1407/ (accessed on 13 August 2019).

30. Okafor, E.; Berendsen, G.; Schomaker, L.; Wiering, M. Detection and Recognition of Badgers Using Deep Learning. In International Conference on Artificial Neural Networks; Springer: Cham, Switzerland, 2018; pp. 554–563.

31. Vanhoucke, V.; Senior, A.; Mao, M.Z. Improving the speed of neural networks on CPUs. In Proceedings of the 24th Annual Conference on Neural Information Processing Systems (NIPS 2010), Whistler, BC, Canada, 10 December 2011.

32. Christiansen, P.; Steen, K.; Jørgensen, R.; Karstoft, H. Automated detection and recognition of wildlife using thermal cameras. Sensors 2014, 14, 13778–13793.

| Setting category | Setting | Levels |
|---|---|---|
| Camera settings | Camera height | 30, 50, and 70 cm |
| | Camera tilting angle | 0, 30, and 60° |
| Environmental settings | Light intensity | 1, 5, 10, 15, and 20 lux |
| | Litter condition | with and without feather |
| Egg settings | Buried depth | 0, 2, 3, and 4 cm |
| | Egg number in an image | 0, 1, 2, 3, 4, 5, 6, and 7 |
| | Egg proportion in an image | 30%, 50%, 70%, and 100% |
| | Eggshell cleanness | with and without litter |
| | Egg contact in an image | contacted and separated |

| Parameter | SSD | Faster R-CNN | R-FCN |
|---|---|---|---|
| Batch size | 24 | 1 | 1 |
| Initial learning rate | 4.0 × 10⁻³ | 2.0 × 10⁻⁴ | 3.0 × 10⁻⁴ |
| Learning rate at 90,000 steps | 3.6 × 10⁻³ | 2.0 × 10⁻⁵ | 3.0 × 10⁻⁵ |
| Learning rate at 120,000 steps | 3.2 × 10⁻³ | 2.0 × 10⁻⁶ | 2.0 × 10⁻⁶ |
| Momentum optimizer value | 0.9 | 0.9 | 0.9 |
| Epsilon value | 1.0 | – | – |
| Gradient clipping by norm | – | 10.0 | 10.0 |
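The learning-rate rows of the table above read as a piecewise-constant (step) schedule: each value holds until the next listed step. A minimal Python sketch of that reading follows. The table does not say how the schedule was configured, so the assumption that a new rate takes effect exactly at step 90,000 and step 120,000 is ours, and `learning_rate`, `SCHEDULES`, and `BOUNDARIES` are hypothetical names; the rate values are copied from the table.

```python
import bisect

# Step boundaries named in the table rows (assumed to be inclusive starts).
BOUNDARIES = [90_000, 120_000]

# Rates copied from the table: [initial, at 90,000 steps, at 120,000 steps].
SCHEDULES = {
    "SSD": [4.0e-3, 3.6e-3, 3.2e-3],
    "Faster R-CNN": [2.0e-4, 2.0e-5, 2.0e-6],
    "R-FCN": [3.0e-4, 3.0e-5, 2.0e-6],
}


def learning_rate(detector, step):
    """Return the piecewise-constant learning rate for a detector at a training step."""
    values = SCHEDULES[detector]
    # bisect_right counts the boundaries <= step, which is the index of the active rate.
    return values[bisect.bisect_right(BOUNDARIES, step)]


for step in (0, 89_999, 90_000, 120_000):
    print(step, learning_rate("Faster R-CNN", step))
```

For the faster R-CNN and R-FCN detectors each step drops the rate by roughly an order of magnitude, whereas the SSD rate decreases only by about 10% of its initial value at each step.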

| Detector | Processing speed (ms·image⁻¹) | PRC (%) | RCL (%) | ACC (%) | RMSEx (mm) | RMSEy (mm) | RMSExy (mm) |
|---|---|---|---|---|---|---|---|
| SSD | 125.1 ± 2.7 | 99.9 ± 0.1 | 72.1 ± 7.2 | 72.0 ± 7.2 | 1.0 ± 0.1 | 1.0 ± 0.1 | 1.4 ± 0.1 |
| Faster R-CNN | 201.5 ± 2.3 | 99.7 ± 0.2 | 98.4 ± 0.4 | 98.1 ± 0.3 | 0.8 ± 0.1 | 0.8 ± 0.1 | 1.1 ± 0.1 |
| R-FCN | 243.2 ± 1.0 | 93.3 ± 2.4 | 98.5 ± 0.5 | 92.0 ± 2.5 | 0.8 ± 0.1 | 0.8 ± 0.1 | 1.1 ± 0.1 |
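The metric columns in the table above can be computed from raw detection counts and coordinates. The sketch below uses the standard definitions of precision, TP/(TP + FP), recall, TP/(TP + FN), and accuracy, (TP + TN)/(TP + FP + FN + TN); these are assumptions, because the paper's own equations in Section 2.5.2 are not included in this copy. Counting an egg-free image with no detections as a true negative is likewise an assumption, suggested by the zero-egg rows that report only ACC. For the combined error, the root of the mean squared Euclidean offset equals sqrt(RMSEx² + RMSEy²), which agrees with the table (0.8 and 0.8 mm give 1.1 mm). All function names are hypothetical.

```python
import math


def detection_metrics(tp, fp, fn, tn=0):
    """Return (precision, recall, accuracy) in percent from detection counts.

    tn counts egg-free images in which nothing was detected (assumed definition).
    A metric is NaN when its denominator is zero.
    """
    precision = 100.0 * tp / (tp + fp) if tp + fp else math.nan
    recall = 100.0 * tp / (tp + fn) if tp + fn else math.nan
    total = tp + fp + fn + tn
    accuracy = 100.0 * (tp + tn) / total if total else math.nan
    return precision, recall, accuracy


def rmse(predicted, actual):
    """Root-mean-square error between paired predicted and ground-truth coordinates."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


def rmse_xy(rmse_x, rmse_y):
    """Combined 2-D RMSE: sqrt(mean(dx^2 + dy^2)) equals sqrt(RMSEx^2 + RMSEy^2)."""
    return math.hypot(rmse_x, rmse_y)


# Usage: the faster R-CNN row of the table (RMSEx = RMSEy = 0.8 mm).
print(round(rmse_xy(0.8, 0.8), 1))  # 1.1
```

Because precision ignores missed eggs, a detector can combine high precision with low recall, which is the pattern of the SSD row (PRC 99.9%, RCL 72.1%).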

| Setting | Level | Brown PRC (%) | Brown RCL (%) | Brown ACC (%) | Brown RMSEx (mm) | Brown RMSEy (mm) | Brown RMSExy (mm) | White PRC (%) | White RCL (%) | White ACC (%) | White RMSEx (mm) | White RMSEy (mm) | White RMSExy (mm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Camera height (cm) | 30 | 99.6 ± 0.7 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.6 ± 0.1 | 1.2 ± 0.1 | 97.6 ± 2.7 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| | 50 | 99.8 ± 0.2 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.7 ± 0.3 | 1.4 ± 0.4 | 2.0 ± 0.6 | 99.6 ± 0.5 | 99.9 ± 0.1 | 99.9 ± 0.1 | 2.0 ± 0.6 | 1.5 ± 0.7 | 3.3 ± 0.9 |
| | 70 | 99.7 ± 0.4 | 99.9 ± 0.1 | 99.9 ± 0.1 | 4.9 ± 0.9 | 5.8 ± 1.1 | 8.0 ± 0.9 | 98.9 ± 1.0 | 99.9 ± 0.1 | 99.9 ± 0.1 | 6.5 ± 1.1 | 6.1 ± 0.9 | 8.9 ± 1.0 |
| Camera tilting angle (°) | 0 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.6 ± 0.1 | 0.9 ± 0.2 | 99.8 ± 0.4 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.2 |
| | 30 | 99.7 ± 0.5 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.4 ± 0.1 | 0.8 ± 0.1 | 1.6 ± 0.2 | 99.1 ± 0.9 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.1 | 0.7 ± 0.1 | 1.3 ± 0.2 |
| | 60 | 99.8 ± 0.4 | 99.7 ± 0.5 | 99.7 ± 0.5 | 1.9 ± 0.1 | 1.9 ± 0.1 | 2.5 ± 0.2 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.5 ± 0.1 | 1.0 ± 0.1 | 1.8 ± 0.2 |

| Setting | Level | Brown PRC (%) | Brown RCL (%) | Brown ACC (%) | Brown RMSEx (mm) | Brown RMSEy (mm) | Brown RMSExy (mm) | White PRC (%) | White RCL (%) | White ACC (%) | White RMSEx (mm) | White RMSEy (mm) | White RMSExy (mm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Light intensity (lux) | 1 | 98.2 ± 1.8 | 34.4 ± 7.9 | 34.4 ± 7.9 | 2.3 ± 0.4 | 2.9 ± 0.4 | 4.5 ± 0.6 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.4 | 1.3 ± 0.3 | 1.5 ± 0.6 |
| | 5 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.0 ± 0.1 | 1.0 ± 0.1 | 1.4 ± 0.2 | 99.6 ± 0.9 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 | 1.4 ± 0.2 |
| | 10 | 98.8 ± 1.6 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 1.1 ± 0.1 | 99.8 ± 0.3 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.5 ± 0.1 | 0.9 ± 0.2 |
| | 15 | 99.8 ± 0.3 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.7 ± 0.1 | 1.2 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.1 | 0.7 ± 0.1 | 1.1 ± 0.1 |
| | 20 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.1 | 0.5 ± 0.1 | 1.1 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.7 ± 0.1 | 1.1 ± 0.1 |
| Litter condition | w/feather | 99.0 ± 1.3 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.4 ± 0.3 | 1.2 ± 0.2 | 1.7 ± 0.3 | 99.6 ± 0.7 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.8 ± 0.1 | 1.1 ± 0.1 |
| | w/o feather | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.1 | 0.8 ± 0.1 | 1.1 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.5 ± 0.1 | 0.9 ± 0.1 | 1.0 ± 0.1 |

| Setting | Level | Brown PRC (%) | Brown RCL (%) | Brown ACC (%) | Brown RMSEx (mm) | Brown RMSEy (mm) | Brown RMSExy (mm) | White PRC (%) | White RCL (%) | White ACC (%) | White RMSEx (mm) | White RMSEy (mm) | White RMSExy (mm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Buried depth (cm) | 0 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 |
| | 2 | 99.7 ± 0.7 | 99.7 ± 0.5 | 99.9 ± 0.1 | 0.6 ± 0.1 | 1.5 ± 0.1 | 1.8 ± 0.1 | 98.6 ± 1.5 | 99.6 ± 0.8 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.9 ± 0.1 | 1.1 ± 0.1 |
| | 3 | 99.9 ± 0.1 | 99.8 ± 0.2 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.8 ± 0.1 | 1.2 ± 0.1 | 99.3 ± 0.6 | 99.6 ± 0.7 | 99.9 ± 0.1 | 0.9 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| | 4 | 99.9 ± 0.1 | 99.2 ± 1.1 | 99.6 ± 0.8 | 1.6 ± 0.2 | 1.3 ± 0.2 | 2.1 ± 0.2 | 99.6 ± 0.9 | 99.8 ± 0.4 | 99.9 ± 0.1 | 0.9 ± 0.1 | 1.6 ± 0.1 | 1.9 ± 0.2 |
| Egg number in an image | 0 | – | – | 99.9 ± 0.1 | – | – | – | – | – | 99.9 ± 0.1 | – | – | – |
| | 1 | 98.0 ± 4.4 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.3 | 0.9 ± 0.3 | 1.1 ± 0.4 | 97.3 ± 3.6 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.0 ± 0.2 | 0.9 ± 0.2 | 1.1 ± 0.3 |
| | 2 | 99.1 ± 1.9 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.2 | 0.6 ± 0.1 | 1.1 ± 0.2 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| | 3 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.8 ± 0.1 | 1.1 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.2 | 0.6 ± 0.1 | 1.1 ± 0.1 |
| | 4 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| | 5 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.4 ± 0.1 | 0.9 ± 0.1 |
| | 6 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.8 ± 0.1 | 1.1 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.5 ± 0.1 | 0.6 ± 0.1 | 0.8 ± 0.1 |
| | 7 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.5 ± 0.1 | 0.9 ± 0.1 | 1.7 ± 0.2 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.9 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| Egg proportion in an image (%) | 30 | 99.8 ± 0.5 | 99.3 ± 0.9 | 99.3 ± 0.9 | 1.7 ± 0.3 | 1.2 ± 0.1 | 2.1 ± 0.3 | 99.6 ± 0.3 | 99.5 ± 0.4 | 99.6 ± 0.6 | 0.7 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| | 50 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 1.0 ± 0.2 | 0.9 ± 0.1 | 1.3 ± 0.2 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.6 ± 0.1 | 1.0 ± 0.1 |
| | 70 | 99.9 ± 0.1 | 99.3 ± 0.9 | 99.9 ± 0.1 | 0.8 ± 0.3 | 0.8 ± 0.1 | 1.0 ± 0.1 | 99.9 ± 0.1 | 99.6 ± 0.5 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 0.9 ± 0.1 |
| | 100 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.5 ± 0.1 | 0.6 ± 0.1 | 0.8 ± 0.2 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 |
| Eggshell cleanness | w/litter | 99.9 ± 0.1 | 99.5 ± 0.8 | 99.9 ± 0.1 | 0.9 ± 0.1 | 1.2 ± 0.2 | 1.6 ± 0.2 | 99.9 ± 0.1 | 99.8 ± 0.4 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 |
| | w/o litter | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.7 ± 0.1 | 1.0 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 99.9 ± 0.1 | 0.8 ± 0.1 | 0.7 ± 0.1 | 1.1 ± 0.1 |
| Egg contact in an image | contacted | 99.9 ± 0.1 | 99.9 ± 0.2 | 99.9 ± 0.1 | 0.8 ± 0.2 | 0.8 ± 0.1 | 1.2 ± 0.1 | 99.6 ± 0.8 | 99.2 ± 1.6 | 99.2 ± 1.8 | 0.9 ± 0.1 | 0.7 ± 0.1 | 1.1 ± 0.1 |
| | separated | 99.9 ± 0.1 | 99.8 ± 0.4 | 99.9 ± 0.1 | 0.8 ± 0.1 | 1.4 ± 0.1 | 1.6 ± 0.2 | 99.9 ± 0.1 | 99.6 ± 0.8 | 99.9 ± 0.1 | 0.7 ± 0.1 | 0.8 ± 0.1 | 0.9 ± 0.1 |

| Brown PRC (%) | Brown RCL (%) | Brown ACC (%) | Brown RMSEx (mm) | Brown RMSEy (mm) | Brown RMSExy (mm) | White PRC (%) | White RCL (%) | White ACC (%) | White RMSEx (mm) | White RMSEy (mm) | White RMSExy (mm) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 94.7 | 99.8 | 94.5 | 1.0 | 1.1 | 1.4 | 91.9 | 100.0 | 91.9 | 0.9 | 0.9 | 1.3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, G.; Xu, Y.; Zhao, Y.; Du, Q.; Huang, Y. Evaluating Convolutional Neural Networks for Cage-Free Floor Egg Detection. Sensors 2020, 20, 332. https://doi.org/10.3390/s20020332


Chicago/Turabian Style: Li, Guoming, Yan Xu, Yang Zhao, Qian Du, and Yanbo Huang. 2020. "Evaluating Convolutional Neural Networks for Cage-Free Floor Egg Detection" Sensors 20, no. 2: 332. https://doi.org/10.3390/s20020332