2.1.1. Features Extracted from the Electroencephalograms

The empirical mode decomposition assumes that a signal can be represented as the sum of a finite number of intrinsic mode functions. The intrinsic mode functions are obtained using the following procedure:

- Step 1:

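Initialization: let $r_0(t) = x(t)$, where $x(t)$ is the signal to be decomposed, let $i = 1$, and set the threshold value to 0.3.

- Step 2:

Let the $i$th intrinsic mode function be $c_i(t)$. It can be obtained as follows:

- (a)

Initialization: let $h_{i,0}(t) = r_{i-1}(t)$ and $k = 1$.

- (b)

Find all the maxima and minima of $h_{i,k-1}(t)$.

- (c)

Denote the upper envelope and the lower envelope of $h_{i,k-1}(t)$ as $e_{\max}(t)$ and $e_{\min}(t)$, respectively. Obtain $e_{\max}(t)$ and $e_{\min}(t)$ by cubic spline interpolation through the maxima and the minima of $h_{i,k-1}(t)$, respectively.

- (d)

Let the mean of the upper envelope and the lower envelope of $h_{i,k-1}(t)$ be $m_{i,k-1}(t) = \frac{e_{\max}(t) + e_{\min}(t)}{2}$.

- (e)

Define $h_{i,k}(t) = h_{i,k-1}(t) - m_{i,k-1}(t)$.

- (f)

Compute $\mathrm{SD} = \sum_{t} \frac{\left(h_{i,k-1}(t) - h_{i,k}(t)\right)^2}{h_{i,k-1}^2(t)}$. If SD is not greater than the given threshold, then set $c_i(t) = h_{i,k}(t)$. Otherwise, increment $k$ and go back to Step (b).

- Step 3:

Set $r_i(t) = r_{i-1}(t) - c_i(t)$. If $r_i(t)$ satisfies the properties of an intrinsic mode function or is a monotonic function, then the decomposition is complete. Otherwise, increment $i$ and go back to Step 2.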
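The sifting procedure above can be sketched in pure Python as follows. This is a minimal, non-authoritative sketch. It rests on several assumptions that the text does not state:

- The envelopes are natural cubic splines.
- The first and last samples are used as extra knots for both envelopes, which is a crude boundary treatment.
- A small constant `eps` is added to the SD denominator to avoid division by zero.
- Sifting is capped at a hypothetical `max_iter` iterations.
- The decomposition stops once the residue has fewer than two maxima or two minima. This covers the monotonic case of Step 3 but does not test the other intrinsic mode function (IMF) properties.

All function names are illustrative.

```python
import math


def natural_cubic_spline(xs, ys, query):
    """Evaluate the natural cubic spline through knots (xs, ys) at sorted query points."""
    n = len(xs)
    h = [xs[i + 1] - xs[i] for i in range(n - 1)]
    # Tridiagonal system for the interior second derivatives;
    # natural boundary conditions fix the end second derivatives to 0.
    m = n - 2
    sub = [h[k] for k in range(m)]
    diag = [2.0 * (h[k] + h[k + 1]) for k in range(m)]
    sup = [h[k + 1] for k in range(m)]
    rhs = [6.0 * ((ys[k + 2] - ys[k + 1]) / h[k + 1] - (ys[k + 1] - ys[k]) / h[k])
           for k in range(m)]
    for k in range(1, m):  # Thomas algorithm: forward elimination
        w = sub[k] / diag[k - 1]
        diag[k] -= w * sup[k - 1]
        rhs[k] -= w * rhs[k - 1]
    second = [0.0] * n
    for k in range(m - 1, -1, -1):  # back substitution
        second[k + 1] = (rhs[k] - sup[k] * second[k + 2]) / diag[k]
    out, j = [], 0
    for t in query:
        while j < n - 2 and t > xs[j + 1]:  # advance to the interval containing t
            j += 1
        a, b, hj = xs[j + 1] - t, t - xs[j], h[j]
        out.append(second[j] * a ** 3 / (6 * hj) + second[j + 1] * b ** 3 / (6 * hj)
                   + (ys[j] / hj - second[j] * hj / 6) * a
                   + (ys[j + 1] / hj - second[j + 1] * hj / 6) * b)
    return out


def local_extrema(x):
    """Indices of the interior local maxima and minima of x."""
    maxima = [t for t in range(1, len(x) - 1) if x[t - 1] < x[t] >= x[t + 1]]
    minima = [t for t in range(1, len(x) - 1) if x[t - 1] > x[t] <= x[t + 1]]
    return maxima, minima


def envelope(h, idx):
    """Spline through h at the extrema idx, plus both end samples (assumed boundary rule)."""
    knots = [0] + idx + [len(h) - 1]
    return natural_cubic_spline(knots, [h[t] for t in knots], range(len(h)))


def sift(r, threshold=0.3, max_iter=50, eps=1e-12):
    """Steps 2(a)-(f): extract one IMF from the current residue r."""
    h = list(r)  # (a) start from the current residue
    for _ in range(max_iter):
        maxima, minima = local_extrema(h)  # (b)
        if len(maxima) < 2 or len(minima) < 2:
            break
        upper, lower = envelope(h, maxima), envelope(h, minima)  # (c)
        mean = [(u + l) / 2 for u, l in zip(upper, lower)]  # (d)
        new = [v - mu for v, mu in zip(h, mean)]  # (e)
        sd = sum((v - w) ** 2 / (v * v + eps) for v, w in zip(h, new))  # (f)
        h = new
        if sd <= threshold:
            break
    return h


def emd(x, threshold=0.3, max_imfs=10):
    """Return (imfs, residue); the IMFs plus the residue add back up to x."""
    residue, imfs = list(x), []
    while len(imfs) < max_imfs:
        maxima, minima = local_extrema(residue)
        if len(maxima) < 2 or len(minima) < 2:  # simplified Step 3 stopping rule
            break
        imf = sift(residue, threshold)
        imfs.append(imf)
        residue = [r - c for r, c in zip(residue, imf)]  # Step 3: remove the IMF
    return imfs, residue
```

For a signal that is a fast sinusoid plus a slower one, the first IMF returned by `emd` should track the fast component. This matches the ordering of frequency bands discussed next. For long recordings, a vectorized spline (for example, a library cubic-spline routine with natural boundary conditions) would be preferable to this loop-based version.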

The details of the empirical mode decomposition can be found in [

8,

9,

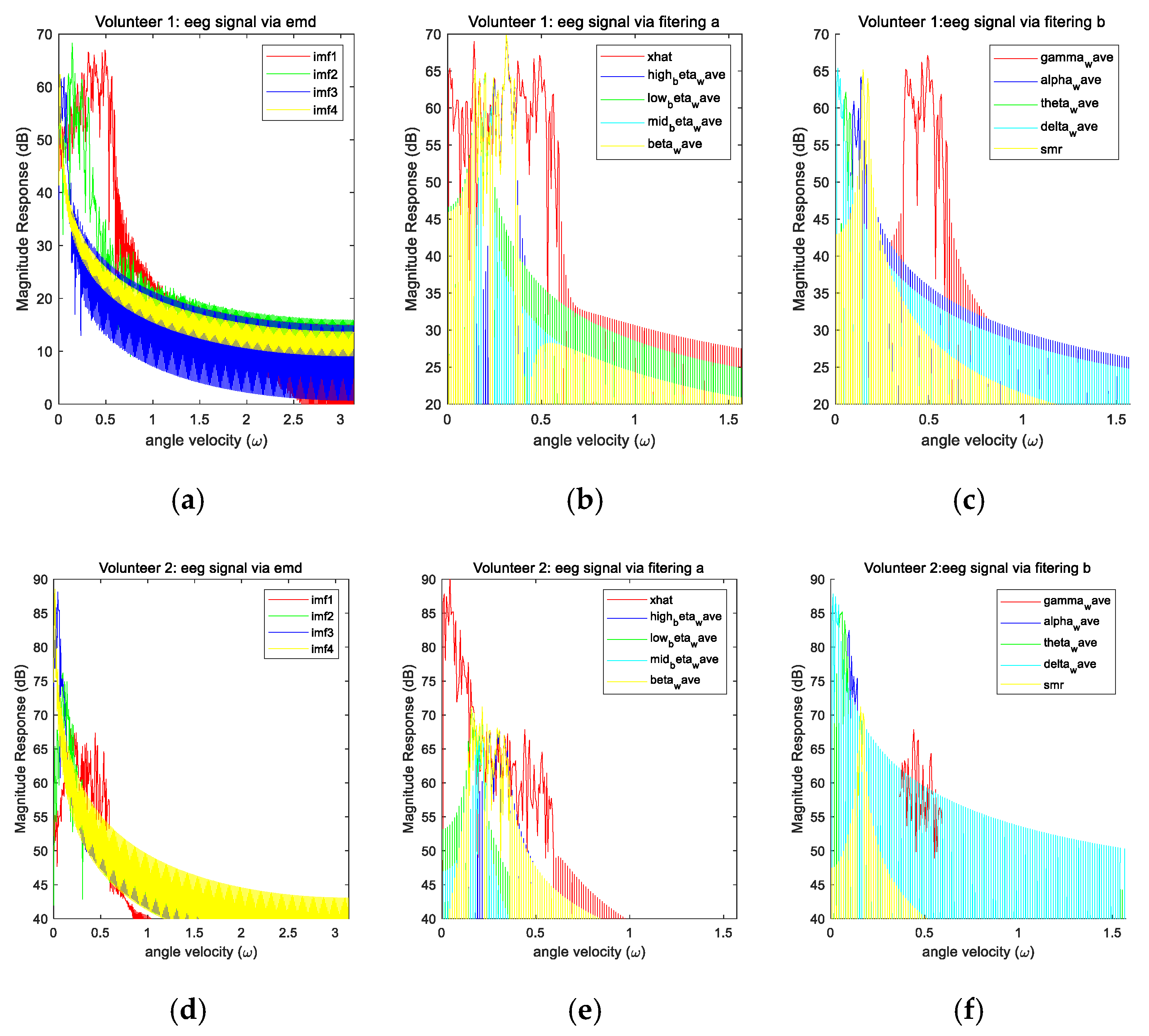

10]. Because a signal with more extrema will contain more high-frequency components, the intrinsic mode functions with the lower indices will be localized in the higher frequency bands. Hence, the empirical mode decomposition is a kind of time frequency analysis. Because of these desirable properties, this paper applies empirical mode decomposition to decompose the electroencephalograms into various intrinsic mode functions.

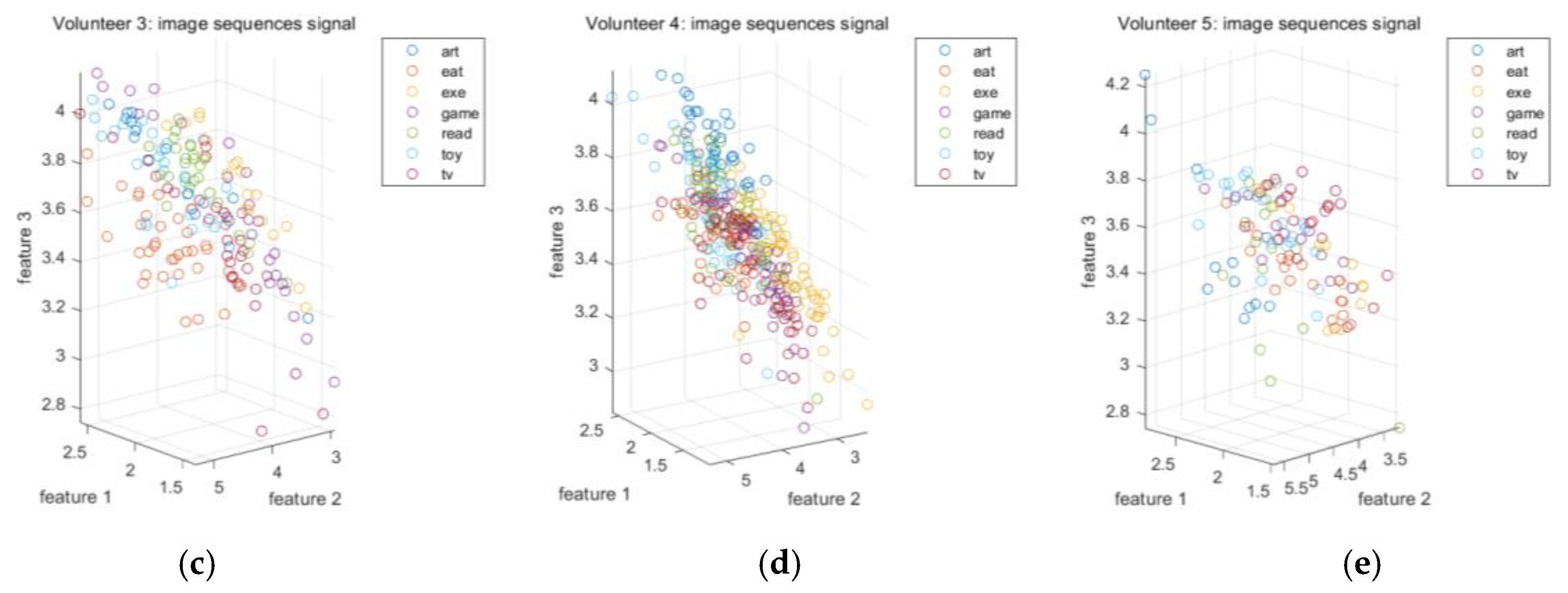

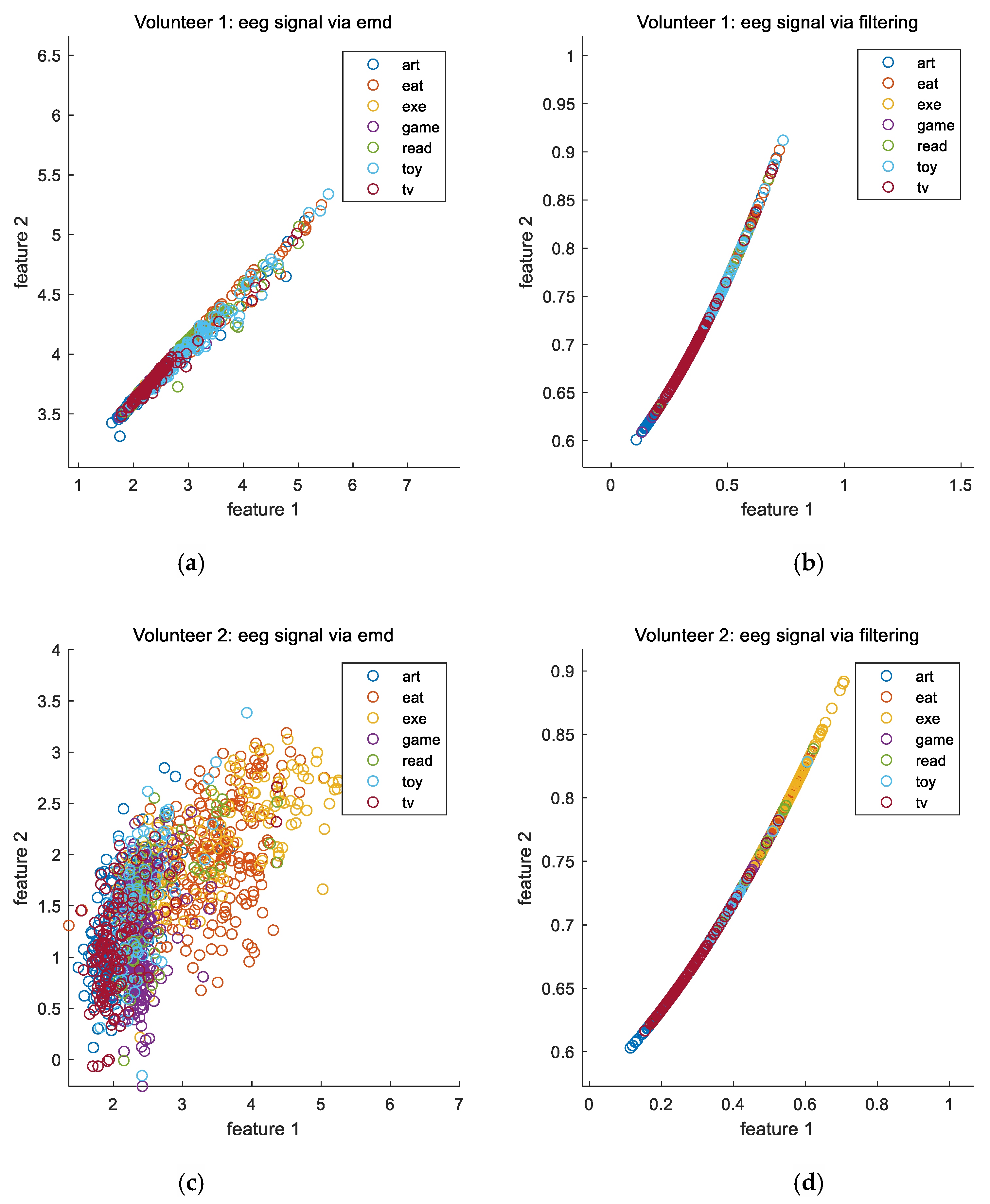

However, the total number of intrinsic mode functions is determined automatically by the above algorithm. This makes it difficult to obtain fixed-length feature vectors for activity recognition. To tackle this difficulty, the intrinsic mode functions are grouped. Because most of the electroencephalograms yield four to eight intrinsic mode functions, the intrinsic mode functions are categorized into four groups. Let $c_i$ denote the $i$th intrinsic mode function, and let $G_1$, $G_2$, $G_3$, and $G_4$ be the sets of the first, second, third, and fourth groups of intrinsic mode functions, respectively. The grouping depends on the number of intrinsic mode functions obtained in the empirical mode decomposition:

- Four intrinsic mode functions: each set contains one intrinsic mode function, that is, $G_1 = \{c_1\}$, $G_2 = \{c_2\}$, $G_3 = \{c_3\}$, and $G_4 = \{c_4\}$.
- Five intrinsic mode functions: the third and fourth intrinsic mode functions are combined into one group, that is, $G_1 = \{c_1\}$, $G_2 = \{c_2\}$, $G_3 = \{c_3, c_4\}$, and $G_4 = \{c_5\}$.
- Six intrinsic mode functions: the second and third intrinsic mode functions are combined into one group, and the fourth and fifth are combined into another group, that is, $G_1 = \{c_1\}$, $G_2 = \{c_2, c_3\}$, $G_3 = \{c_4, c_5\}$, and $G_4 = \{c_6\}$.
- Seven intrinsic mode functions: the first and second, the third and fourth, and the fifth and sixth intrinsic mode functions are combined pairwise into three groups, that is, $G_1 = \{c_1, c_2\}$, $G_2 = \{c_3, c_4\}$, $G_3 = \{c_5, c_6\}$, and $G_4 = \{c_7\}$.
- Eight intrinsic mode functions: the first and second, the third and fourth, the fifth and sixth, and the seventh and eighth intrinsic mode functions are combined pairwise into four groups, that is, $G_1 = \{c_1, c_2\}$, $G_2 = \{c_3, c_4\}$, $G_3 = \{c_5, c_6\}$, and $G_4 = \{c_7, c_8\}$.
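The grouping rule above amounts to a lookup table from the number of intrinsic mode functions (IMFs) to four groups of 1-based indices. A minimal sketch follows, with three assumptions:

- The function names are hypothetical.
- Raising an error outside the four-to-eight range is assumed, because the text does not say how such cases are handled.
- `combine_groups` sums the members of a group sample by sample. This is also an assumption, since the text does not specify how a two-member group is reduced to a single signal.

```python
# Index groups for each supported IMF count, transcribed from the cases above.
GROUPS = {
    4: [[1], [2], [3], [4]],
    5: [[1], [2], [3, 4], [5]],
    6: [[1], [2, 3], [4, 5], [6]],
    7: [[1, 2], [3, 4], [5, 6], [7]],
    8: [[1, 2], [3, 4], [5, 6], [7, 8]],
}


def group_imfs(num_imfs):
    """Return the 1-based IMF indices of the four groups for num_imfs IMFs."""
    if num_imfs not in GROUPS:
        raise ValueError(f"grouping is only defined for 4 to 8 IMFs, got {num_imfs}")
    return GROUPS[num_imfs]


def combine_groups(imfs):
    """Sum the IMFs of each group sample by sample (assumed combination rule)."""
    return [[sum(vals) for vals in zip(*(imfs[i - 1] for i in group))]
            for group in group_imfs(len(imfs))]
```

Every case uses each index exactly once and in order, so adjacent IMFs, which occupy neighbouring frequency bands, end up in the same group.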

Because the magnitudes of various brain waves differ across activities, the magnitudes and the energies of various brain waves are commonly employed as features for activity recognition. This paper employs a larger set of similar physical quantities as features. In particular, the entropy, mean, interquartile range, mean absolute deviation, range, variance, skewness, kurtosis, $L_2$ norm, $L_1$ norm, and $L_\infty$ norm of each group of intrinsic mode functions are computed and employed as the features [11,12]. Each electroencephalogram yields four groups of intrinsic mode functions, and 11 features are extracted from each group. Hence, the length of each feature vector is $4 \times 11 = 44$.
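The eleven features listed above can be computed per group with the Python standard library. This is a sketch under the following assumptions:

- The text does not define the entropy. The Shannon entropy (in bits) of the normalized energy distribution $p_t = x_t^2 / \sum_t x_t^2$ is used here as one plausible choice.
- Variance, skewness, and kurtosis use population moments, with kurtosis in the non-excess (Pearson) form.
- The interquartile range uses linearly interpolated quartiles.
- Each group has already been reduced to a single, non-constant sequence of samples.

Function names are hypothetical.

```python
import math
import statistics


def group_features(x):
    """Eleven features of one IMF group; x is a non-constant list of samples."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n  # population variance
    std = math.sqrt(var)
    q1, _, q3 = statistics.quantiles(x, n=4, method="inclusive")
    energy = sum(v * v for v in x)
    # Assumed entropy: Shannon entropy (bits) of the normalized energy distribution.
    probs = [v * v / energy for v in x]
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)
    return [
        entropy,
        mean,
        q3 - q1,                                            # interquartile range
        sum(abs(v - mean) for v in x) / n,                  # mean absolute deviation
        max(x) - min(x),                                    # range
        var,
        sum((v - mean) ** 3 for v in x) / (n * std ** 3),   # skewness
        sum((v - mean) ** 4 for v in x) / (n * var ** 2),   # kurtosis (non-excess)
        math.sqrt(energy),                                  # L2 norm
        sum(abs(v) for v in x),                             # L1 norm
        max(abs(v) for v in x),                             # L-infinity norm
    ]


def eeg_feature_vector(groups):
    """Concatenate the features of the four groups into one 44-element vector."""
    if len(groups) != 4:
        raise ValueError("expected exactly four IMF groups")
    return [f for g in groups for f in group_features(g)]
```

For example, `group_features([1, 2, 3, 4])` gives a mean of 2.5, an interquartile range of 1.5, a variance of 1.25, and zero skewness.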

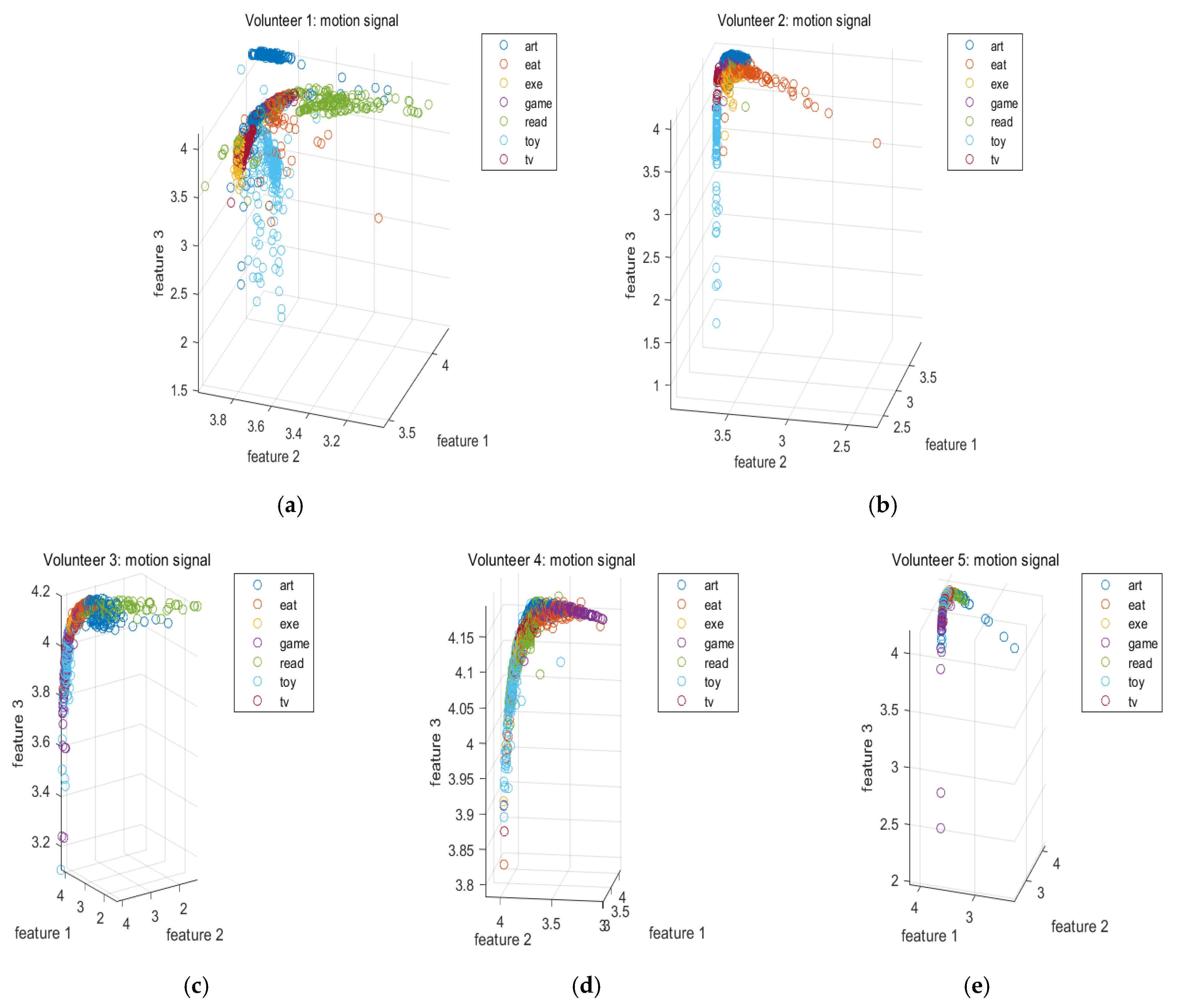

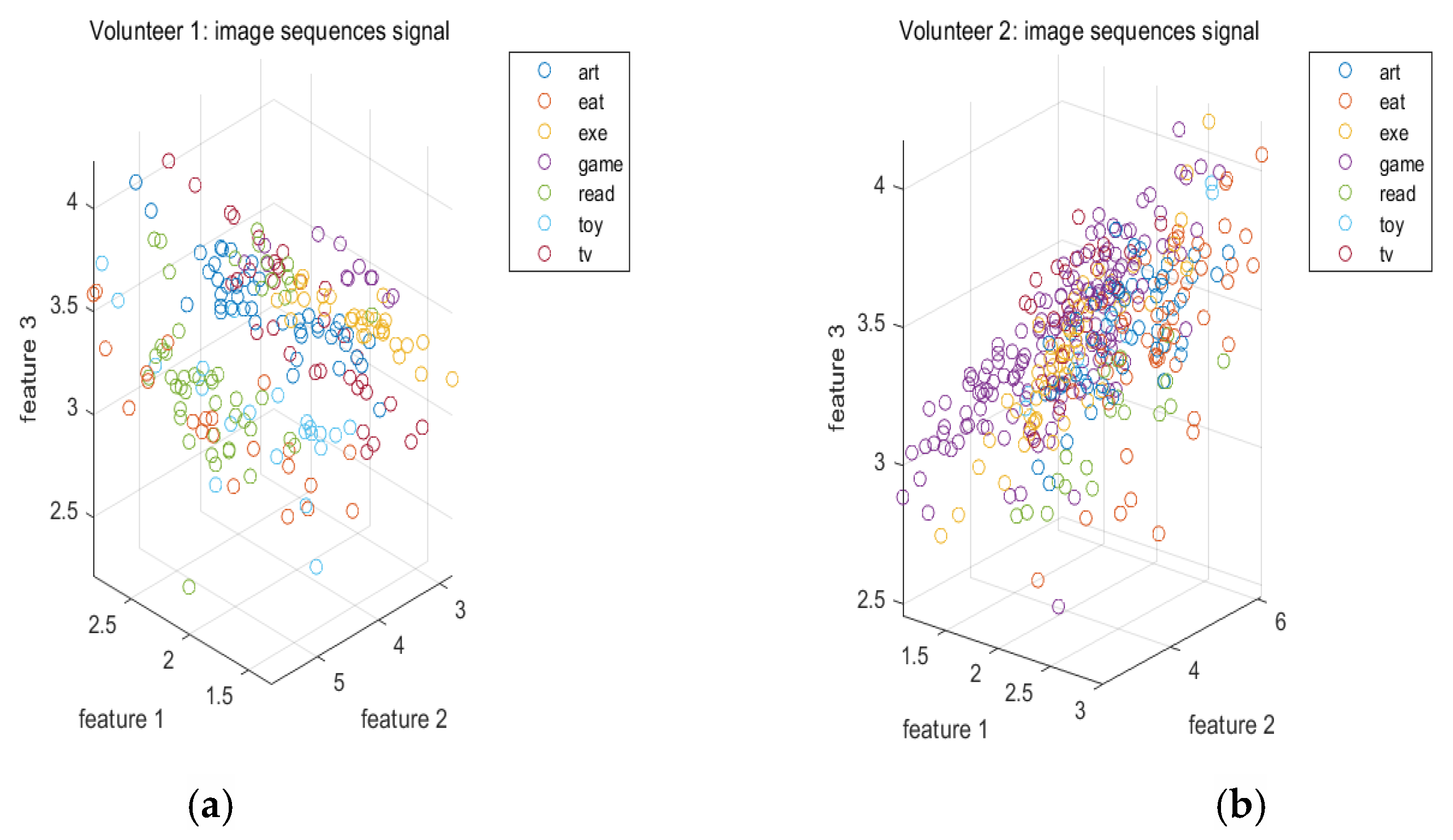

2.1.2. Features Extracted from the Image Sequences

Because different activities involve different objects, the objects are segmented from each image. Because the subjects move, the camera rotates and translates, so the same object may appear at different sizes in two consecutive images. To address this difficulty, the discrete cosine transform, which can be used to resize objects, is first applied to the objects. Next, the sizes of the matrices of the discrete cosine transform coefficients of the objects in two consecutive images are compared [13]. Then, zeros are placed into the coefficient matrix of the smaller object so that the zero-filled matrix has the same size as the coefficient matrix of the larger object [14]. The resulting matrix of the discrete cosine transform coefficients of the object in the $n$th image, either zero-filled or unchanged, is denoted $\mathbf{D}_n$.
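The size-matching step above can be illustrated with an orthonormal two-dimensional DCT-II computed directly from its definition, followed by zero-padding. This sketch makes two assumptions that the text does not specify:

- The zeros are appended at the bottom and right of the smaller matrix, that is, at the high-frequency positions. This is the usual way to enlarge an image in the DCT domain.
- Both matrices are padded to the larger row count and the larger column count.

No amplitude rescaling is applied, since the text mentions none. Function names are hypothetical.

```python
import math


def dct_1d(v):
    """Orthonormal DCT-II of a 1-D sequence, computed from the definition."""
    n = len(v)
    out = []
    for k in range(n):
        scale = math.sqrt((1 if k == 0 else 2) / n)
        out.append(scale * sum(v[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i in range(n)))
    return out


def dct_2d(block):
    """Separable 2-D DCT-II: transform every row, then every column."""
    rows = [dct_1d(r) for r in block]
    cols = [dct_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def zero_pad(coeffs, rows, cols):
    """Append zeros on the right and bottom (high-frequency side) up to rows x cols."""
    padded = [list(r) + [0.0] * (cols - len(r)) for r in coeffs]
    return padded + [[0.0] * cols for _ in range(rows - len(coeffs))]


def match_sizes(a, b):
    """Zero-pad two coefficient matrices to a common size (assumed: element-wise max)."""
    rows, cols = max(len(a), len(b)), max(len(a[0]), len(b[0]))
    return zero_pad(a, rows, cols), zero_pad(b, rows, cols)
```

The direct transform is slow for large patches. In practice, a library routine such as SciPy's `scipy.fft.dctn` with `norm="ortho"` computes the same orthonormal transform much faster.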

It is worth noting that the objects in the image change at different rates for different activities. For example, the objects on the computer screen change faster when playing electronic games than when performing online exercises. This implies that the changes in the positions of the objects between two consecutive images can be employed as features for activity recognition. Let $x_{\min,n}$, $x_{\max,n}$, $y_{\min,n}$, and $y_{\max,n}$ be the minimum x-coordinate, the maximum x-coordinate, the minimum y-coordinate, and the maximum y-coordinate of the object in the $n$th image, respectively. The midpoints of the x-coordinates and the y-coordinates of the object in the $n$th image are defined as $x_{\mathrm{mid},n} = \frac{x_{\min,n} + x_{\max,n}}{2}$ and $y_{\mathrm{mid},n} = \frac{y_{\min,n} + y_{\max,n}}{2}$, respectively. Here, , , , , , , and are employed as the features. In addition to these spatial-domain features, this paper also extracts features based on the differences of the discrete cosine transform coefficients of the objects between two consecutive images. In particular, the mean, median, variance, skewness, and kurtosis of all of the entries of the difference between the discrete cosine transform coefficient matrices of the object in two consecutive images are also employed as features. Hence, the length of each feature vector is $7 + 5 = 12$.
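The spatial quantities and the DCT-domain statistics described above can be sketched as follows. The sketch computes the minimum and maximum coordinates, the two midpoints, and the five difference statistics. It does not assemble the full 12-element vector. It assumes the following:

- The object is given as a binary mask, a list of rows of 0/1 values, with x as the column index and y as the row index.
- The two coefficient matrices have already been zero-padded to the same size.
- Skewness and kurtosis use population moments, with non-excess kurtosis.
- Both skewness and kurtosis are returned as 0 when all differences are equal. This is a convention chosen here, not one taken from the text.

Function names are hypothetical.

```python
import statistics


def bounding_box(mask):
    """Return (x_min, x_max, y_min, y_max, x_mid, y_mid) of the nonzero pixels."""
    xs = [x for row in mask for x, v in enumerate(row) if v]
    ys = [y for y, row in enumerate(mask) for v in row if v]
    x_min, x_max, y_min, y_max = min(xs), max(xs), min(ys), max(ys)
    return x_min, x_max, y_min, y_max, (x_min + x_max) / 2, (y_min + y_max) / 2


def dct_difference_stats(cur, prev):
    """Mean, median, variance, skewness, kurtosis of all entries of cur - prev."""
    diff = [c - p for rc, rp in zip(cur, prev) for c, p in zip(rc, rp)]
    n = len(diff)
    mean = sum(diff) / n
    var = sum((d - mean) ** 2 for d in diff) / n  # population variance
    if var == 0:  # identical matrices: convention chosen here, not from the text
        skew = kurt = 0.0
    else:
        skew = sum((d - mean) ** 3 for d in diff) / (n * var ** 1.5)
        kurt = sum((d - mean) ** 4 for d in diff) / (n * var ** 2)  # non-excess
    return [mean, statistics.median(diff), var, skew, kurt]
```

The statistics are computed over the flattened difference matrix. The spatial layout of the coefficients therefore does not affect these five features.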