An Automatic Instrument Integration Scheme for Interoperable Ocean Observatories

Abstract

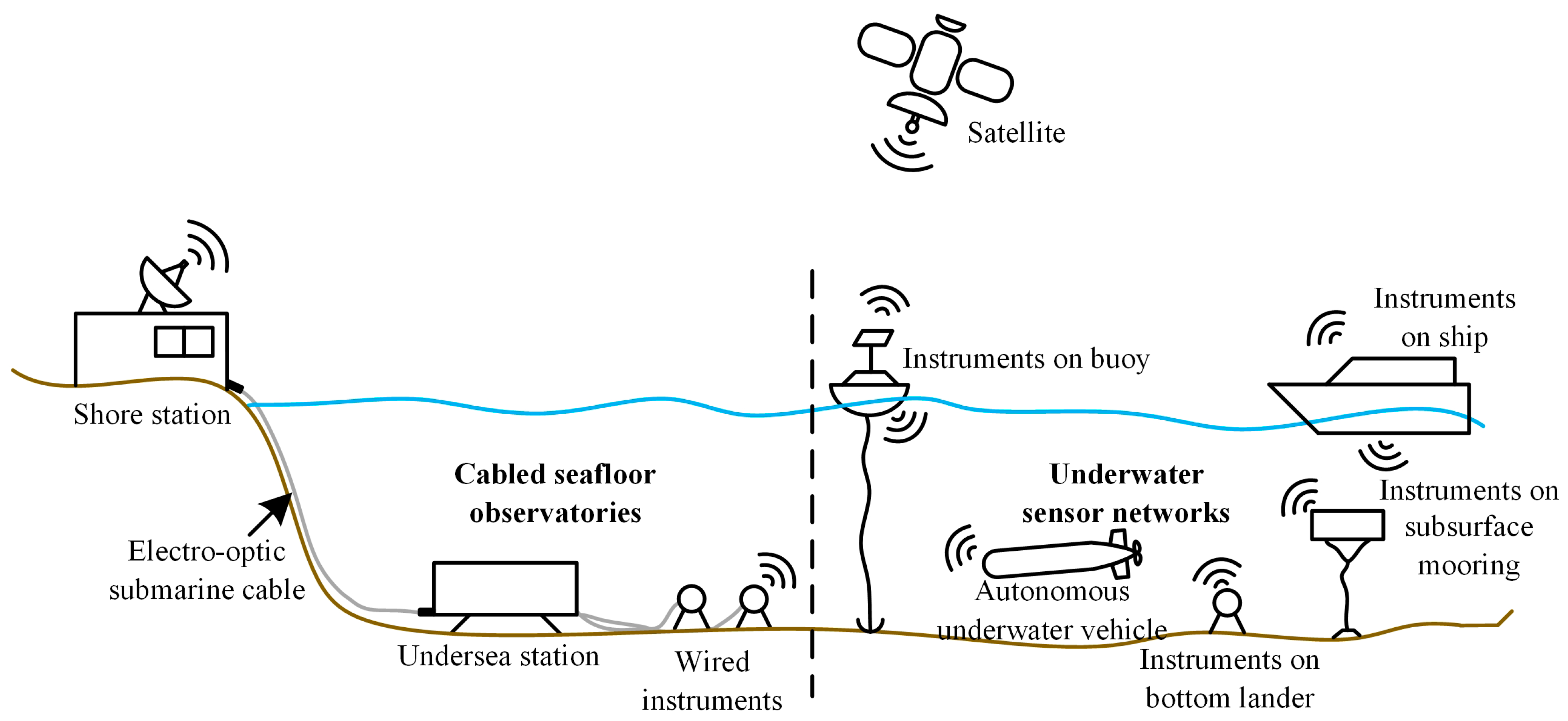

1. Introduction

2. Design of the Automatic Instrument Integration Scheme

2.1. Challenges and Requirements

- (1) The architecture of the scheme should standardize instruments that have different levels of intelligence and different native protocols.

- (2) The scheme should enable reliable networking without external configuration information: each instrument should be allocated a valid network address automatically, and that address should be resolvable from the instrument's domain name, even when no DHCP or DNS server is present on the local network.

- (3) Instruments on the same network should be able to discover one another's services without external assistance.

- (4) Each instrument should be uniquely identifiable throughout its lifetime, so that it can be recognized after it is physically removed or added, or after its network address changes.

- (5) Entities in an ocean observatory should interact through standardized, uniform procedures, so that software and hardware modules can be reused to reduce cost.

- (6) Given the limited resources and capabilities of the embedded devices used in ocean observatories, together with limited power availability, low-bandwidth communications, and high maintenance costs, the adopted protocols should be energy- and bandwidth-efficient.

- (7) To improve interoperability among instruments, existing standard protocols should be used wherever possible.
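Requirement (2) is commonly met with IPv4 link-local addressing, one part of the Zeroconf suite named in Section 2.2.2. The sketch below is illustrative only and is not the authors' implementation. It assumes the RFC 3927 address pool (169.254.1.0 to 169.254.254.255) and derives a repeatable candidate from the instrument's MAC address, as RFC 3927 suggests for seeding the pseudo-random choice, so an instrument tends to reclaim the same address after a reboot. The ARP probing that a real host performs to detect conflicts is replaced by a hypothetical `in_use` set, and the function names `candidate_link_local` and `claim_address` are hypothetical.

```python
import hashlib
import ipaddress

# RFC 3927 reserves 169.254.0.x and 169.254.255.x; hosts pick from the range in between.
LINK_LOCAL_FIRST = int(ipaddress.IPv4Address("169.254.1.0"))
LINK_LOCAL_LAST = int(ipaddress.IPv4Address("169.254.254.255"))
POOL_SIZE = LINK_LOCAL_LAST - LINK_LOCAL_FIRST + 1  # 254 * 256 = 65024 addresses


def candidate_link_local(mac: str, attempt: int = 0) -> ipaddress.IPv4Address:
    """Map (MAC address, attempt number) to a repeatable link-local candidate.

    Seeding from the MAC keeps the first choice stable across reboots, while
    the attempt counter yields a different candidate after each conflict.
    SHA-256 is an illustrative choice of pseudo-random function (assumption).
    """
    seed = f"{mac.lower()}#{attempt}".encode("ascii")
    digest = hashlib.sha256(seed).digest()
    offset = int.from_bytes(digest[:4], "big") % POOL_SIZE
    return ipaddress.IPv4Address(LINK_LOCAL_FIRST + offset)


def claim_address(mac: str, in_use: set, max_attempts: int = 10) -> ipaddress.IPv4Address:
    """Return the first candidate that is not already in use on the link.

    `in_use` is a hypothetical stand-in for the ARP probing a real host
    would perform before claiming an address.
    """
    for attempt in range(max_attempts):
        candidate = candidate_link_local(mac, attempt)
        if candidate not in in_use:
            return candidate
    raise RuntimeError("no free link-local address found")
```

For example, `claim_address("00:1a:2b:3c:4d:5e", set())` returns the same address on every call, and passing that address back in `in_use` makes the instrument move on to its next candidate. Resolving the address by name without a DNS server, the second half of requirement (2), would be handled by multicast DNS, which is not shown here.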

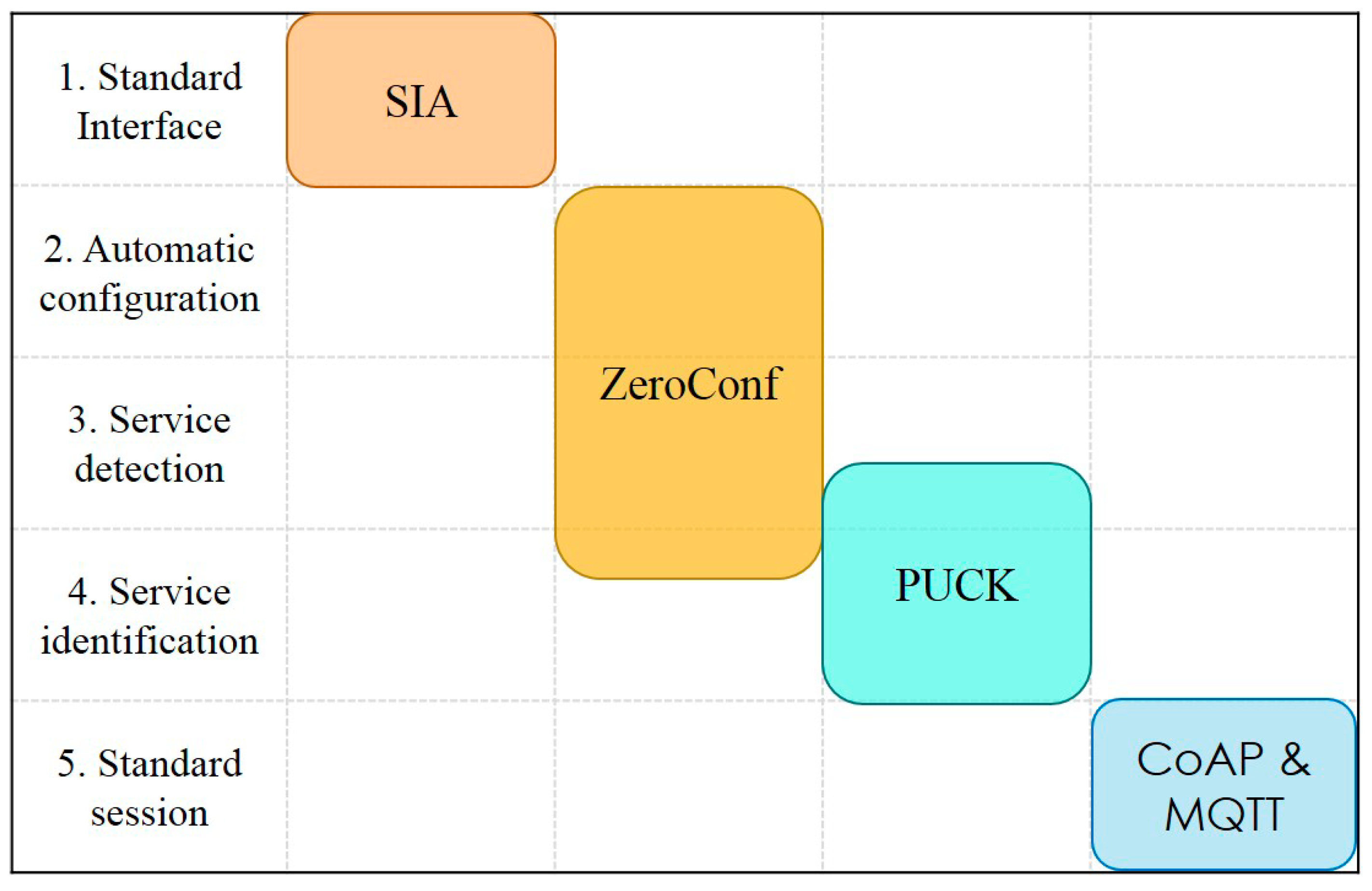

- (1) Standard Interface: Nonstandard instruments should be converted to a standard interface.

- (2) Automatic Configuration: Instruments should complete network configuration on their own so that they can communicate with one another in a temporary local network.

- (3) Service Detection: Once instruments are ready to communicate, they should be able to announce their own services and find out where the services of others are provided.

- (4) Service Identification: Instruments should be able to obtain the description of a service, both to learn what the service is and to recognize the same service after its network address changes.

- (5) Standard Session: Instruments should follow standard procedures to establish sessions with peers to exchange data or send and receive commands. Both request/response and subscribe/publish modes should be supported.
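To make the request/response mode of the Standard Session function concrete, and to show why CoAP (Section 2.2.4) fits the bandwidth constraint of requirement (6), the sketch below encodes a confirmable CoAP GET request following the RFC 7252 message layout: a 4-byte fixed header, an optional token of up to 8 bytes, and delta-encoded options. It is a minimal illustration under stated assumptions, not the authors' code: only the Uri-Path option (number 11) is handled, no payload is sent, and the helper names `encode_get` and `encode_option` and the example path `/sensors/temp` are hypothetical.

```python
import struct

COAP_VERSION = 1
TYPE_CON = 0          # confirmable message
CODE_GET = 0x01       # code 0.01
OPTION_URI_PATH = 11


def _encode_nibble(value: int) -> tuple:
    """Return (4-bit field, extended bytes) for an option delta or length."""
    if value < 13:
        return value, b""
    if value < 269:
        return 13, bytes([value - 13])
    return 14, struct.pack("!H", value - 269)


def encode_option(delta: int, value: bytes) -> bytes:
    """Encode one option: delta/length nibbles, extended fields, then the value."""
    d_nib, d_ext = _encode_nibble(delta)
    l_nib, l_ext = _encode_nibble(len(value))
    return bytes([(d_nib << 4) | l_nib]) + d_ext + l_ext + value


def encode_get(path: str, message_id: int, token: bytes = b"") -> bytes:
    """Build a confirmable GET request with one Uri-Path option per path segment."""
    if len(token) > 8:
        raise ValueError("CoAP tokens are at most 8 bytes")
    first_byte = (COAP_VERSION << 6) | (TYPE_CON << 4) | len(token)
    header = struct.pack("!BBH", first_byte, CODE_GET, message_id)
    options = b""
    last_number = 0
    for segment in path.strip("/").split("/"):
        if segment:
            # Option numbers are delta-encoded relative to the previous option.
            options += encode_option(OPTION_URI_PATH - last_number, segment.encode("utf-8"))
            last_number = OPTION_URI_PATH
    return header + token + options
```

With these assumptions, `encode_get("/sensors/temp", 0x1234, b"\x01")` produces an 18-byte request, which illustrates how little overhead a request/response exchange needs on a constrained link. The subscribe/publish mode would rely on MQTT (Section 2.2.5) and is not sketched here.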

2.2. Protocols and Standards

2.2.1. SIA

2.2.2. Zeroconf

2.2.3. PUCK

2.2.4. CoAP

2.2.5. MQTT

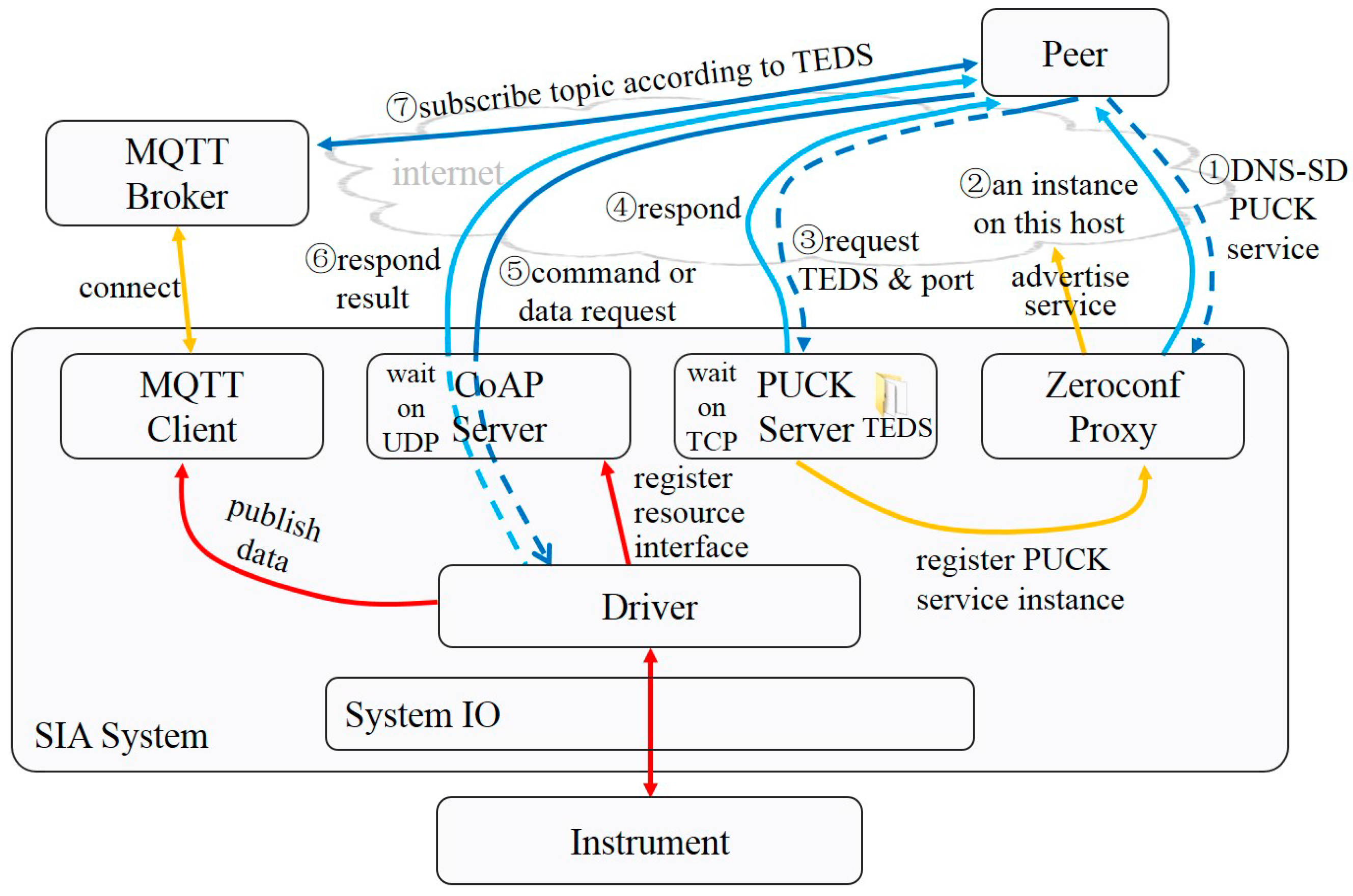

2.3. Scheme Design

3. Implementation of the Scheme for Interoperable Ocean Observatories

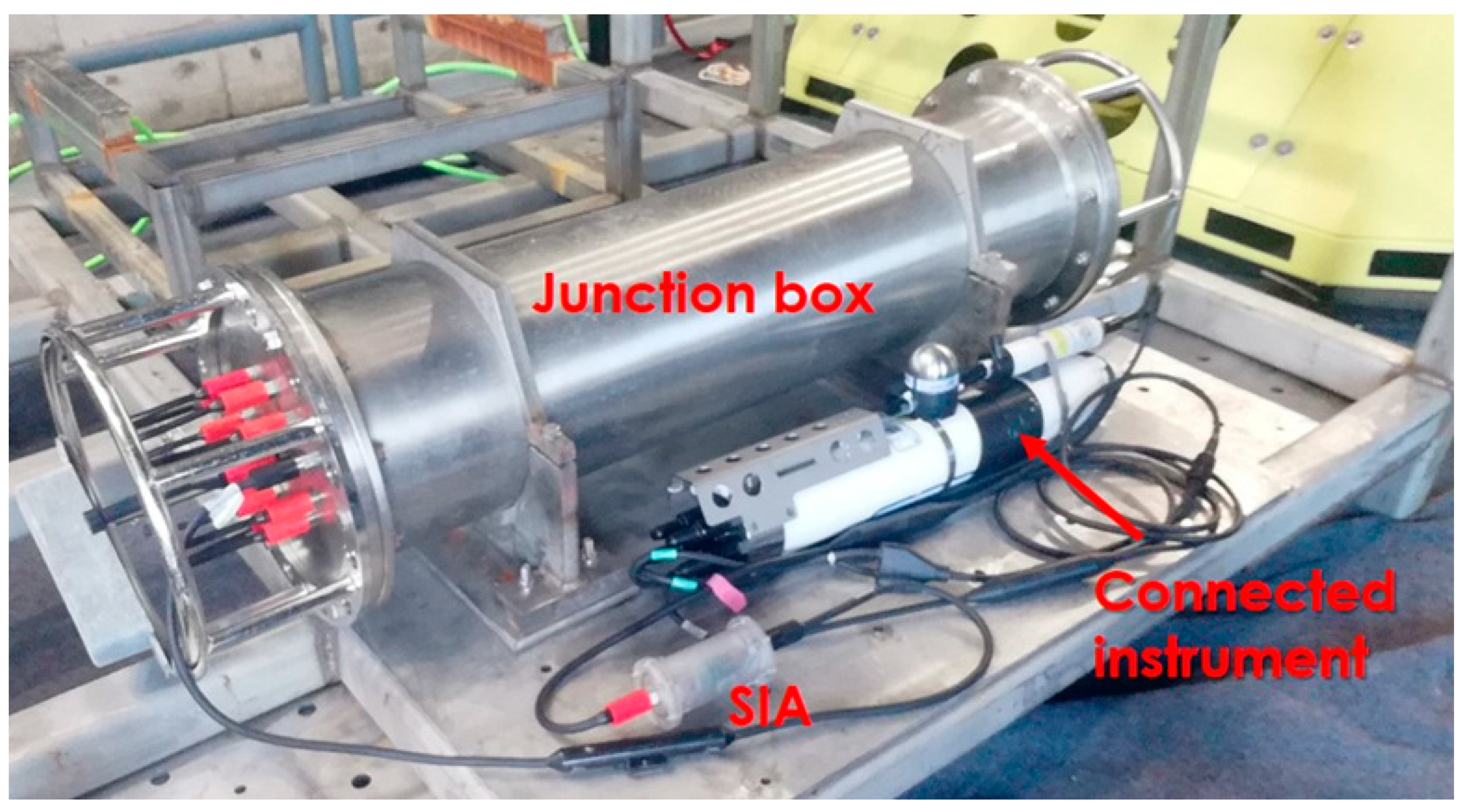

3.1. Hardware Implementation

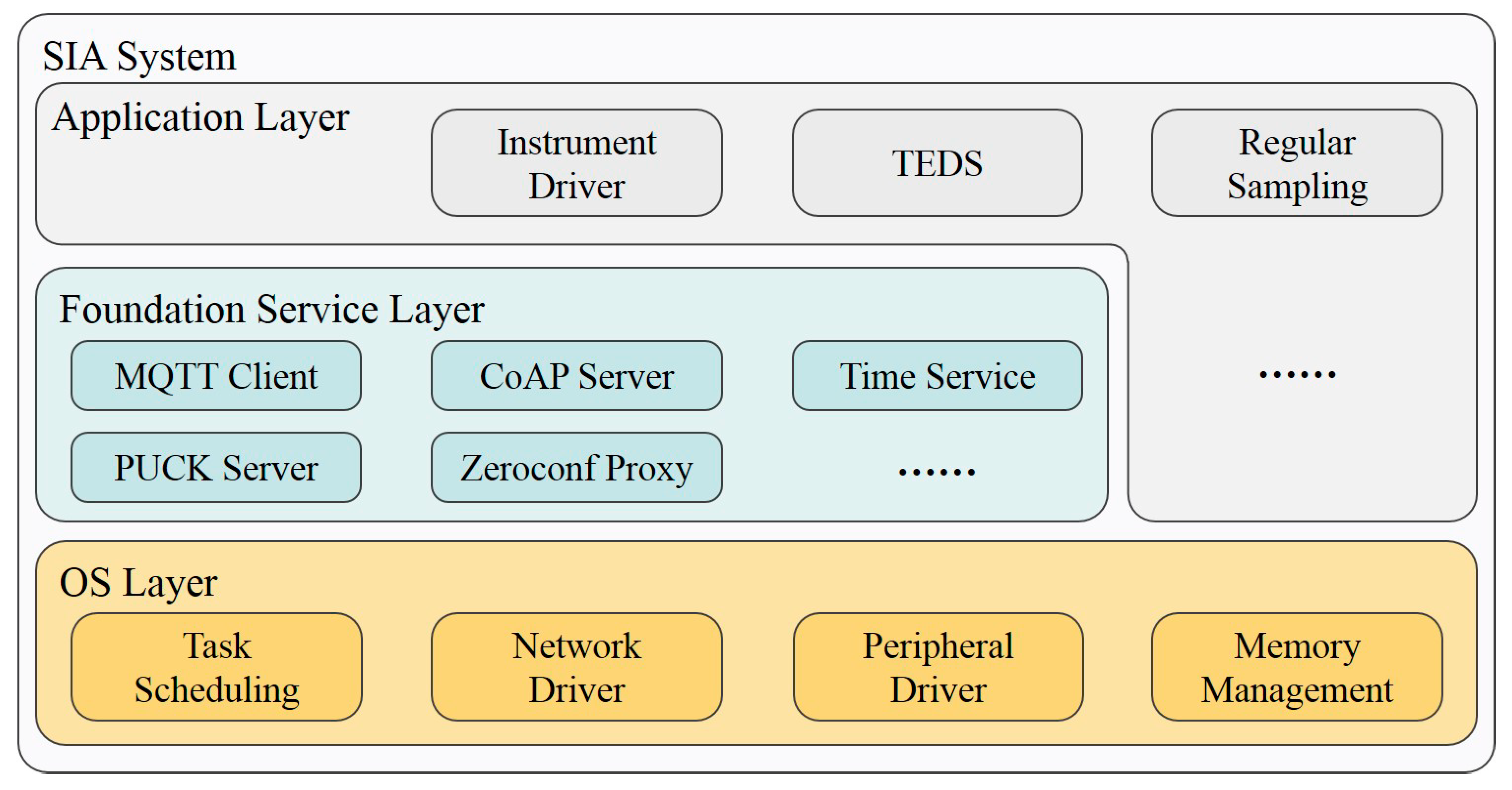

3.2. Software Implementation

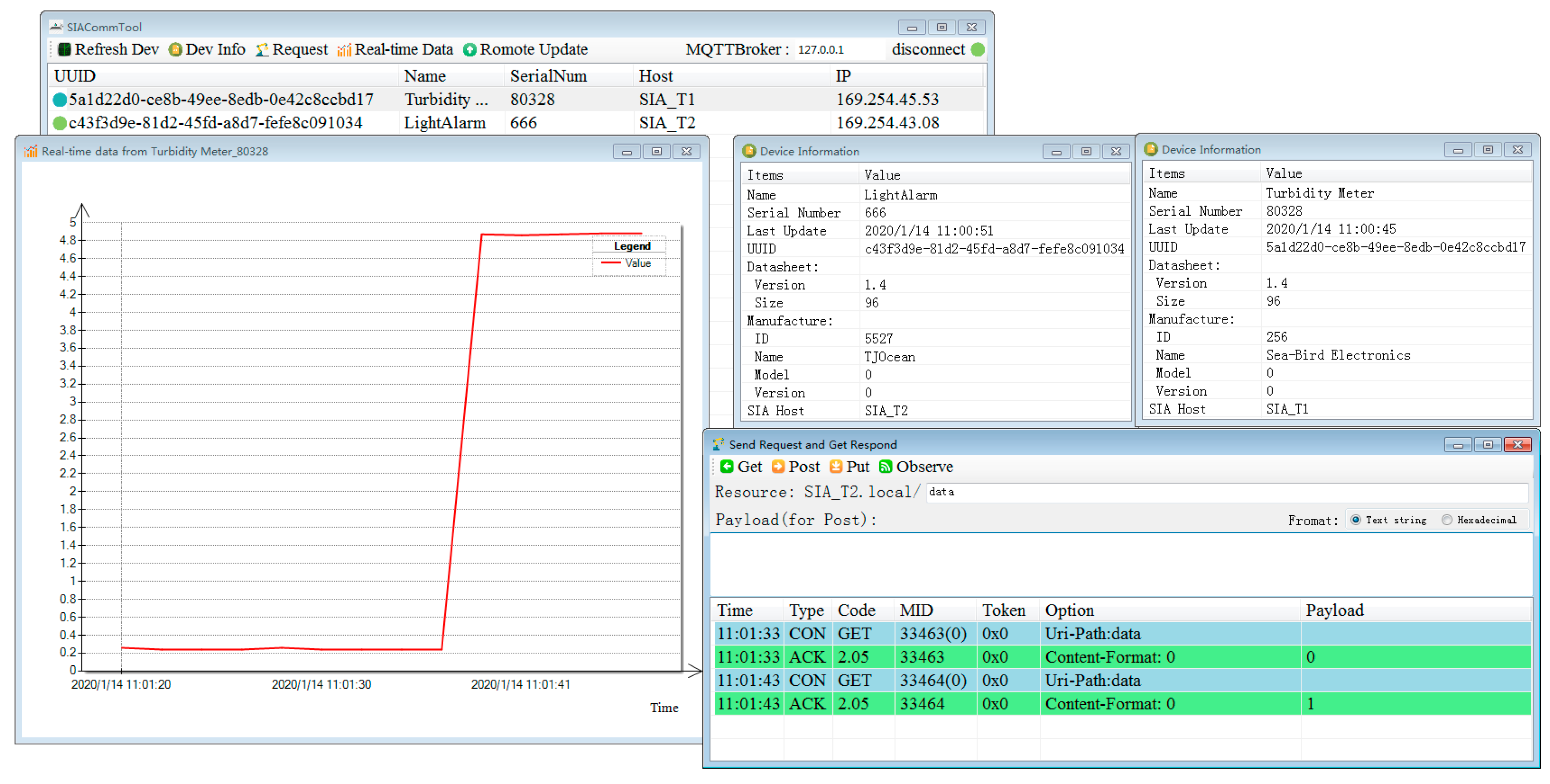

3.3. Laboratory Test

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Items | PUCK Firmware | SEISI | SIA |
|---|---|---|---|
| Metadata standards | PUCK, with others as needed | PUCK, Sensor Model Language (SensorML), and Observations and Measurements (O&M) | PUCK, with others as needed |
| Interaction standards after metadata are obtained | Undefined; communication passes through to the native protocol | OGC Sensor Web Enablement (SWE) standards | Constrained Application Protocol (CoAP) and Message Queuing Telemetry Transport (MQTT) |
| Electrical interface | EIA-232 or Ethernet | Mixed | Mixed (Ethernet in this paper) |
| Where standardization is achieved | Host side | Instrument side or host side | Instrument side |
| MCU resource requirement | Low | Relatively high | Relatively low |
| Bandwidth requirement | Low | Relatively high | Relatively low |
| Intelligence | Little; essentially a storage device | Customizable | Customizable |
| Integration mode | Passive | Passive | Passive or active; supports direct interaction between SIAs |

| Items | Centralized Architecture | Service-Oriented Architecture (SOA) |
|---|---|---|
| Reliability of the whole system | Low; depends entirely on the reliability and connectivity of the master node | High; the failure of one node does not crash the whole system |
| Controllability | Highly centralized | Distributed |
| Scalability | Low | High |
| Performance requirement of controller | Extremely high for the master node, low for slave nodes | Higher than that of a slave node in the centralized architecture |
| Connectivity requirement | Every node must always be connected to the master node | Connectivity between any two nodes |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, S.; Lyu, F.; Nie, H. An Automatic Instrument Integration Scheme for Interoperable Ocean Observatories. Sensors 2020, 20, 1990. https://doi.org/10.3390/s20071990
