Agricultural Robotics for Field Operations

Abstract

1. Introduction

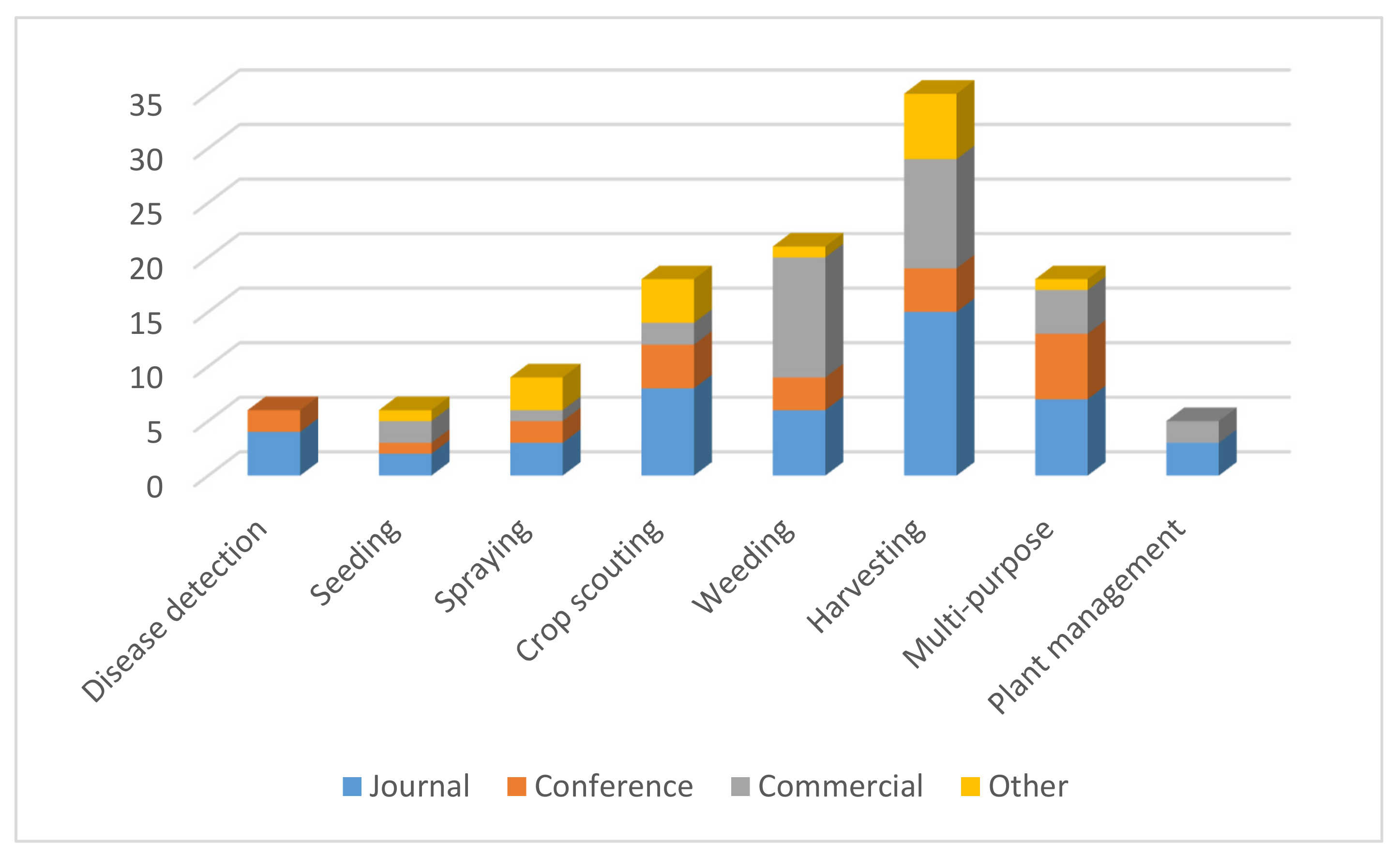

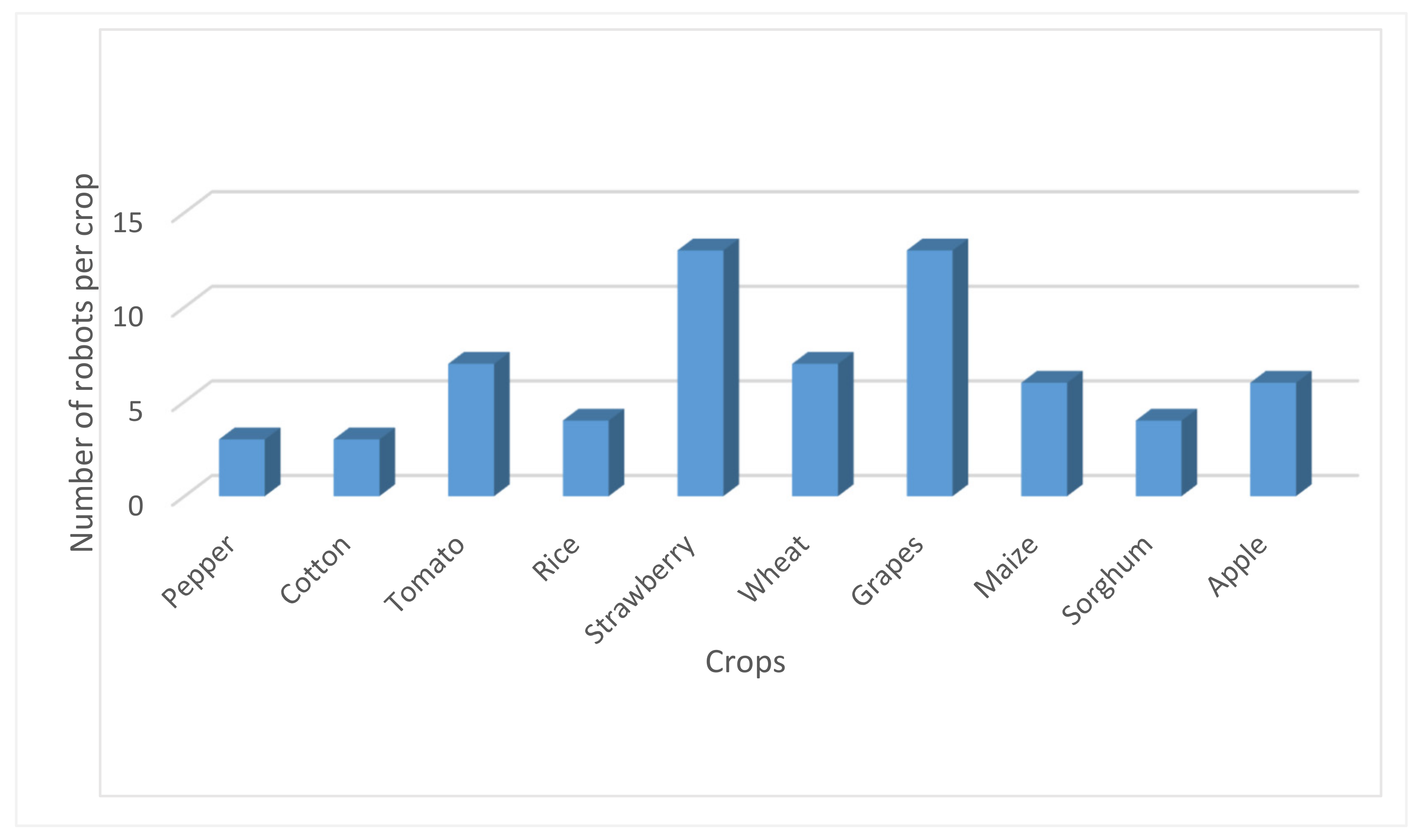

2. Review Framework

3. Operational Classification and Analysis

3.1. Weeding Robotic Systems

3.2. Seeding

3.3. Disease and Insect Detection

3.4. Crop Scouting

3.4.1. Plant Vigor Monitoring

3.4.2. Phenotyping

3.5. Spraying

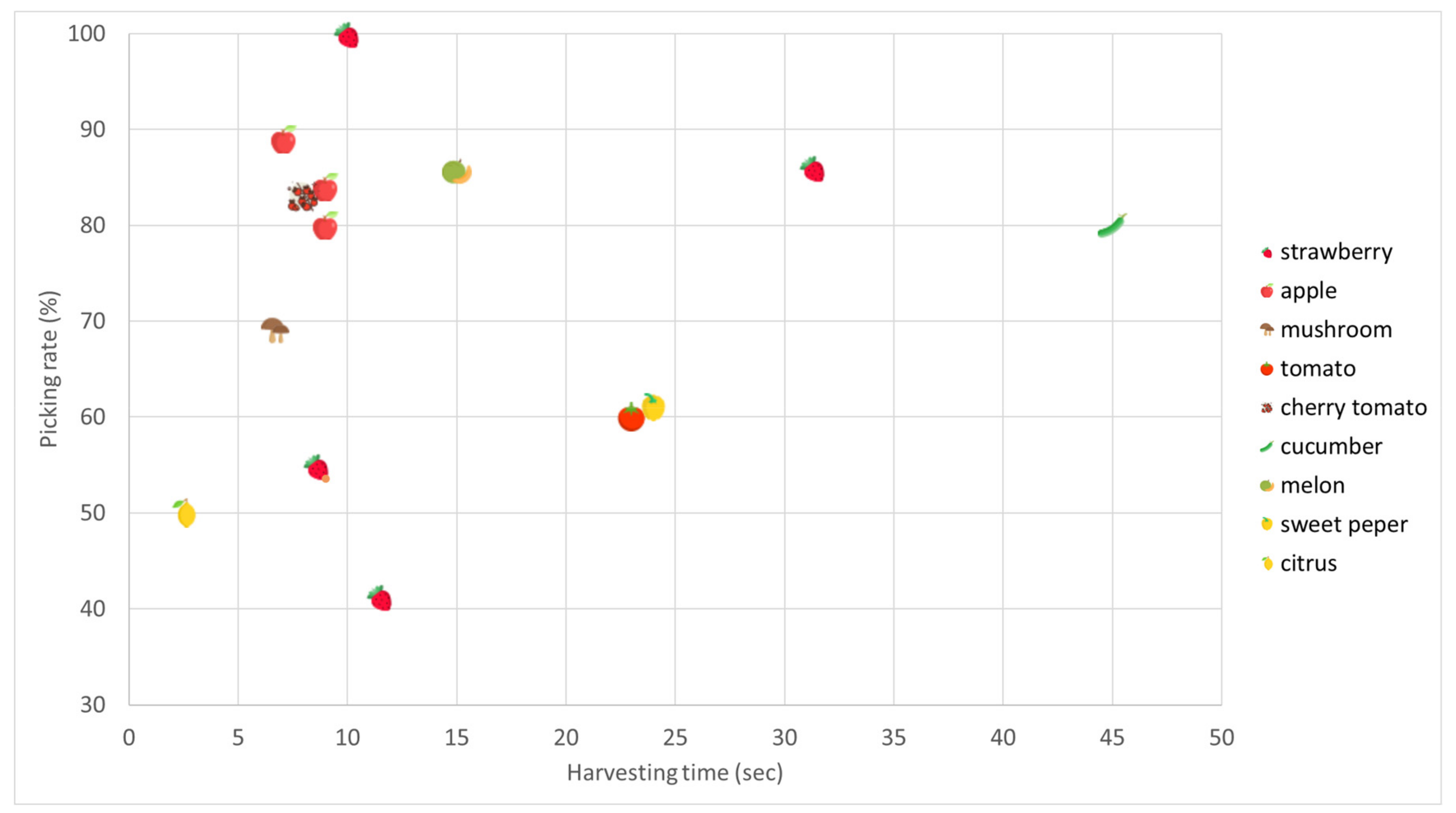

3.6. Harvesting Robotic Systems

3.7. Plant Management Robots

3.8. Multi-Purpose Robotic Systems

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Marinoudi, V.; Sørensen, C.G.; Pearson, S.; Bochtis, D. Robotics and labour in agriculture. A context consideration. Biosyst. Eng. 2019, 184, 111–121.

2. Pedersen, S.M.; Fountas, S.; Have, H.; Blackmore, B.S. Agricultural robots—System analysis and economic feasibility. Precis. Agric. 2006, 7, 295–308.

3. Pedersen, S.M.; Fountas, S.; Sørensen, C.G.; Van Evert, F.K.; Blackmore, B.S. Robotic seeding: Economic perspectives. In Precision Agriculture: Technology and Economic Perspectives; Pedersen, S.M., Lind, K.M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 167–179. ISBN 978-3-319-68715-5.

4. Blackmore, B.S.; Fountas, S.; Gemtos, T.A.; Griepentrog, H.W. A specification for an autonomous crop production mechanization system. In Proceedings of the International Symposium on Application of Precision Agriculture for Fruits and Vegetables, Orlando, FL, USA, 1 April 2009; Volume 824, pp. 201–216.

5. Fountas, S.; Gemtos, T.A.; Blackmore, S. Robotics and Sustainability in Soil Engineering. In Soil Engineering; Springer: Berlin, Germany, 2010; pp. 69–80.

6. Fountas, S.; Blackmore, B.; Vougioukas, S.; Tang, L.; Sørensen, C.; Jørgensen, R. Decomposition of agricultural tasks into robotic behaviours. Available online: https://cigrjournal.org/index.php/Ejounral/article/viewFile/901/895 (accessed on 6 May 2020).

7. Reina, G.; Milella, A.; Galati, R. Terrain assessment for precision agriculture using vehicle dynamic modelling. Biosyst. Eng. 2017, 162, 124–139.

8. Fernandes, H.R.; Garcia, A.P. Design and control of an active suspension system for unmanned agricultural vehicles for field operations. Biosyst. Eng. 2018, 174, 107–114.

9. Bochtis, D.D.; Vougioukas, S.G.; Griepentrog, H.W. A mission planner for an autonomous tractor. Trans. ASABE 2009, 52, 1429–1440.

10. Bochtis, D.; Griepentrog, H.W.; Vougioukas, S.; Busato, P.; Berruto, R.; Zhou, K. Route planning for orchard operations. Comput. Electron. Agric. 2015, 113, 51–60.

11. Yang, L.; Noguchi, N. Human detection for a robot tractor using omni-directional stereo vision. Comput. Electron. Agric. 2012, 89, 116–125.

12. Noguchi, N.; Will, J.; Reid, J.; Zhang, Q. Development of a master-slave robot system for farm operations. Comput. Electron. Agric. 2004, 44, 1–19.

13. Zhang, C.; Noguchi, N.; Yang, L. Leader-follower system using two robot tractors to improve work efficiency. Comput. Electron. Agric. 2016, 121, 269–281.

14. Bechar, A.; Vigneault, C. Agricultural robots for field operations. Part 2: Operations and systems. Biosyst. Eng. 2017, 153, 110–128.

15. Aravind, K.R.; Raja, P.; Pérez-Ruiz, M. Task-based agricultural mobile robots in arable farming: A review. Span. J. Agric. Res. 2017, 15, 1–16.

16. Shamshiri, R.R.; Weltzien, C.; Hameed, I.A.; Yule, I.J.; Grift, T.E.; Balasundram, S.K.; Pitonakova, L.; Ahmad, D.; Chowdhary, G. Research and development in agricultural robotics: A perspective of digital farming. Int. J. Agric. Biol. Eng. 2018, 11, 1–14.

17. Tsolakis, N.; Bechtsis, D.; Bochtis, D. Agros: A robot operating system based emulation tool for agricultural robotics. Agronomy 2019, 9, 403.

18. Slaughter, D.C.; Giles, D.K.; Downey, D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008, 61, 63–78.

19. Defterli, S.G.; Shi, Y.; Xu, Y.; Ehsani, R. Review of robotic technology for strawberry production. Appl. Eng. Agric. 2016, 32, 301–318.

20. FAO Recommendations for improved weed management. Plant Prod. Prot. Div. Rome 2006, 1–56.

21. Oerke, E.C. Crop losses to pests. J. Agric. Sci. 2006, 144, 31–43.

22. Utstumo, T.; Urdal, F.; Brevik, A.; Dørum, J.; Netland, J.; Overskeid, Ø.; Berge, T.W.; Gravdahl, J.T. Robotic in-row weed control in vegetables. Comput. Electron. Agric. 2018, 154, 36–45.

23. Dino. Available online: https://www.naio-technologies.com/en/agricultural-equipment/large-scale-vegetable-weeding-robot/ (accessed on 6 May 2020).

24. Bakker, T.; Van Asselt, K.; Bontsema, J.; Müller, J.; Van Straten, G. An autonomous weeding robot for organic farming. In Field and Service Robotics; Springer: Berlin, Germany, 2006; Volume 25, pp. 579–590.

25. Kim, G.H.; Kim, S.C.; Hong, Y.K.; Han, K.S.; Lee, S.G. A robot platform for unmanned weeding in a paddy field using sensor fusion. In Proceedings of the IEEE International Conference on Automation Science and Engineering, Seoul, Korea, 20–24 August 2012; pp. 904–907.

26. Vitirover. Available online: https://www.vitirover.fr/en-home (accessed on 6 May 2020).

27. Jørgensen, R.N.; Sørensen, C.G.; Pedersen, J.M.; Havn, I.; Jensen, K.; Søgaard, H.T.; Sorensen, L.B. Hortibot: A system design of a robotic tool carrier for high-tech plant nursing. Available online: https://ecommons.cornell.edu/bitstream/handle/1813/10601/ATOE%2007%20006%20Jorgensen%2011July2007.pdf?sequence=1&isAllowed=y (accessed on 6 May 2020).

28. Bochtis, D.D.; Sørensen, C.G.; Jørgensen, R.N.; Nørremark, M.; Hameed, I.A.; Swain, K.C. Robotic weed monitoring. Acta Agric. Scand. Sect. B Soil Plant Sci. 2011, 61, 201–208.

29. OZ. Available online: https://www.naio-technologies.com/en/agricultural-equipment/weeding-robot-oz/ (accessed on 6 May 2020).

30. Reiser, D.; Sehsah, E.S.; Bumann, O.; Morhard, J.; Griepentrog, H.W. Development of an autonomous electric robot implement for intra-row weeding in vineyards. Agriculture 2019, 9, 18.

31. Gokul, S.; Dhiksith, R.; Sundaresh, S.A.; Gopinath, M. Gesture controlled wireless agricultural weeding robot. In Proceedings of the 2019 5th International Conference on Advanced Computing and Communication Systems, San Mateo, CA, USA, 4–8 November 2019; pp. 926–929.

32. TED. Available online: https://www.naio-technologies.com/en/agricultural-equipment/vineyard-weeding-robot/ (accessed on 6 May 2020).

33. Anatis. Available online: https://www.carre.fr/entretien-des-cultures-et-prairies/anatis/?lang=en (accessed on 6 May 2020).

34. Tertill. Available online: https://www.franklinrobotics.com (accessed on 6 May 2020).

35. Asterix. Available online: https://www.adigo.no/portfolio/asterix/?lang=en (accessed on 6 May 2020).

36. Van Evert, F.K.; Van Der Heijden, G.W.A.M.; Lotz, L.A.P.; Polder, G.; Lamaker, A.; De Jong, A.; Kuyper, M.C.; Groendijk, E.J.K.; Neeteson, J.J.; Van Der Zalm, T. A mobile field robot with vision-based detection of volunteer potato plants in a corn crop. Weed Technol. 2006, 20, 853–861.

37. EcoRobotix. Available online: https://www.ecorobotix.com/en/ (accessed on 6 May 2020).

38. Klose, R.; Thiel, M.; Ruckelshausen, A.; Marquering, J. Weedy—A sensor fusion based autonomous field robot for selective weed control. In Proceedings of the Conference: Agricultural Engineering—Land-Technik 2008: Landtechnik regional und International, Stuttgart-Hohenheim, Germany, 25–26 September 2008; pp. 167–172.

39. Ibex. Available online: http://www.ibexautomation.co.uk/ (accessed on 6 May 2020).

40. BlueRiver. Available online: http://www.bluerivertechnology.com (accessed on 6 May 2020).

41. RIPPA & VIPPA. Available online: https://confluence.acfr.usyd.edu.au/display/AGPub/Our+Robots (accessed on 6 May 2020).

42. Lee, W.S.; Slaughter, D.C.; Giles, D.K. Robotic weed control system for tomatoes. Precis. Agric. 1999, 1, 95–113.

43. Testa, G.; Reyneri, A.; Blandino, M. Maize grain yield enhancement through high plant density cultivation with different inter-row and intra-row spacings. Eur. J. Agron. 2016, 72, 28–37.

44. Haibo, L.; Shuliang, D.; Zunmin, L.; Chuijie, Y. Study and experiment on a wheat precision seeding robot. J. Robot. 2015.

45. Ruangurai, P.; Ekpanyapong, M.; Pruetong, C.; Watewai, T. Automated three-wheel rice seeding robot operating in dry paddy fields. Maejo Int. J. Sci. Technol. 2015, 9, 403–412.

46. Sunitha, K.A.; Suraj, G.S.G.S.; Sowrya, C.H.P.N.; Sriram, G.A.; Shreyas, D.; Srinivas, T. Agricultural robot designed for seeding mechanism. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2017; Volume 197.

47. Xaver. Available online: https://www.fendt.com/int/xaver (accessed on 6 May 2020).

48. Mars. Available online: https://www.fendt.com/int/fendt-mars (accessed on 6 May 2020).

49. Katupitiya, J. An autonomous seeder for broad acre crops. In Proceedings of the American Society of Agricultural and Biological Engineers Annual International Meeting 2014, St Joseph, MI, USA, 13–16 July 2014; Volume 1, pp. 169–176.

50. Rehman, T.U.; Mahmud, M.S.; Chang, Y.K.; Jin, J.; Shin, J. Current and future applications of statistical machine learning algorithms for agricultural machine vision systems. Comput. Electron. Agric. 2019, 156, 585–605.

51. Schor, N.; Bechar, A.; Ignat, T.; Dombrovsky, A.; Elad, Y.; Berman, S. Robotic disease detection in greenhouses: Combined detection of powdery mildew and tomato spotted wilt virus. IEEE Robot. Autom. Lett. 2016, 1, 354–360.

52. Schor, N.; Berman, S.; Dombrovsky, A.; Elad, Y.; Ignat, T.; Bechar, A. Development of a robotic detection system for greenhouse pepper plant diseases. Precis. Agric. 2017, 18, 394–409.

53. Rey, B.; Aleixos, N.; Cubero, S.; Blasco, J. XF-ROVIM. A field robot to detect olive trees infected by Xylella fastidiosa using proximal sensing. Remote Sens. 2019, 11, 221.

54. Sultan Mahmud, M.; Zaman, Q.U.; Esau, T.J.; Price, G.W.; Prithiviraj, B. Development of an artificial cloud lighting condition system using machine vision for strawberry powdery mildew disease detection. Comput. Electron. Agric. 2019, 158, 219–225.

55. Liu, B.; Hu, Z.; Zhao, Y.; Bai, Y.; Wang, Y. Recognition of pyralidae insects using intelligent monitoring autonomous robot vehicle in natural farm scene. arXiv 2019, arXiv:1903.10827.

56. Pilli, S.K.; Nallathambi, B.; George, S.J.; Diwanji, V. EAGROBOT—A robot for early crop disease detection using image processing. In Proceedings of the 2nd International Conference on Electronics and Communication Systems, Coimbatore, India, 26–27 February 2015; pp. 1684–1689.

57. Zheng, Y.-Y.; Kong, J.-L.; Jin, X.-B.; Wang, X.-Y.; Su, T.-L.; Zuo, M. CropDeep: The crop vision dataset for deep-learning-based classification and detection in precision agriculture. Sensors 2019, 19, 1058.

58. Barth, R.; IJsselmuiden, J.; Hemming, J.; Van Henten, E.J. Data synthesis methods for semantic segmentation in agriculture: A Capsicum annuum dataset. Comput. Electron. Agric. 2018, 144, 284–296.

59. Bayati, M.; Fotouhi, R. A mobile robotic platform for crop monitoring. Adv. Robot. Autom. 2018, 7, 2.

60. Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. Sensors 2017.

61. Wang, Y.; Hu, X.; Hou, Z.; Ning, J.; Zhang, Z. Discrimination of nitrogen fertilizer levels of tea plant (Camellia sinensis) based on hyperspectral imaging. J. Sci. Food Agric. 2018, 98, 4659–4664.

62. Bietresato, M.; Carabin, G.; Vidoni, R.; Gasparetto, A.; Mazzetto, F. Evaluation of a LiDAR-based 3D-stereoscopic vision system for crop-monitoring applications. Comput. Electron. Agric. 2016, 124, 1–13.

63. Vidoni, R.; Gallo, R.; Ristorto, G.; Carabin, G.; Mazzetto, F.; Scalera, L.; Gasparetto, A. Byelab: An agricultural mobile robot prototype for proximal sensing and precision farming. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Tampa, FL, USA, 3–9 November 2017; Volume 4A-2017.

64. OUVA. Available online: https://www.polariks.com/text?rq=ouva (accessed on 6 May 2020).

65. Vinbot. Available online: http://vinbot.eu/ (accessed on 6 May 2020).

66. Vinescout. Available online: http://vinescout.eu/web/ (accessed on 6 May 2020).

67. Grape. Available online: http://www.grape-project.eu/home/ (accessed on 6 May 2020).

68. Dos Santos, F.N.; Sobreira, H.; Campos, D.; Morais, R.; Moreira, A.P.; Contente, O. Towards a reliable monitoring robot for mountain vineyards. In Proceedings of the 2015 IEEE International Conference on Autonomous Robot Systems and Competitions, ICARSC 2015, Vila Real, Portugal, 8–10 April 2015; pp. 37–43.

69. Terrasentia. Available online: https://www.earthsense.co (accessed on 6 May 2020).

70. Vaeljaots, E.; Lehiste, H.; Kiik, M.; Leemet, T. Soil sampling automation case-study using unmanned ground vehicle. Eng. Rural Dev. 2018, 17, 982–987.

71. Iida, M.; Kang, D.; Taniwaki, M.; Tanaka, M.; Umeda, M. Localization of CO2 source by a hexapod robot equipped with an anemoscope and a gas sensor. Comput. Electron. Agric. 2008, 63, 73–80.

72. Deery, D.; Jimenez-Berni, J.; Jones, H.; Sirault, X.; Furbank, R. Proximal remote sensing buggies and potential applications for field-based phenotyping. Agronomy 2014, 4, 349–379.

73. Mueller-Sim, T.; Jenkins, M.; Abel, J.; Kantor, G. The Robotanist: A ground-based agricultural robot for high-throughput crop phenotyping. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 3634–3639.

74. Benet, B.; Dubos, C.; Maupas, F.; Malatesta, G.; Lenain, R. Development of autonomous robotic platforms for sugar beet crop phenotyping using artificial vision. Available online: https://hal.archives-ouvertes.fr/hal-02155159/document (accessed on 6 May 2020).

75. Ruckelshausen, A.; Biber, P.; Dorna, M.; Gremmes, H.; Klose, R.; Linz, A.; Rahe, R.; Resch, R.; Thiel, M.; Trautz, D.; et al. BoniRob: An autonomous field robot platform for individual plant phenotyping. In Proceedings of the Precision Agriculture 2009—Papers Presented at the 7th European Conference on Precision Agriculture, Wageningen, The Netherlands, 6–8 July 2009; pp. 841–847.

76. Virlet, N.; Sabermanesh, K.; Sadeghi-Tehran, P.; Hawkesford, M.J. Field Scanalyzer: An automated robotic field phenotyping platform for detailed crop monitoring. Funct. Plant Biol. 2017, 44, 143–153.

77. Bao, Y.; Tang, L.; Breitzman, M.W.; Salas Fernandez, M.G.; Schnable, P.S. Field-based robotic phenotyping of sorghum plant architecture using stereo vision. J. Field Robot. 2019, 36, 397–415.

78. Shafiekhani, A.; Kadam, S.; Fritschi, F.B.; Desouza, G.N. Vinobot and vinoculer: Two robotic platforms for high-throughput field phenotyping. Sensors 2017, 17, 214.

79. Young, S.N.; Kayacan, E.; Peschel, J.M. Design and field evaluation of a ground robot for high-throughput phenotyping of energy sorghum. Precis. Agric. 2019, 20, 697–722.

80. Zhang, S.; Wang, H.; Huang, W.; You, Z. Plant diseased leaf segmentation and recognition by fusion of superpixel, K-means and PHOG. Optik 2018, 157, 866–872.

81. Vacavant, A.; Lebre, M.A.; Rositi, H.; Grand-Brochier, M.; Strand, R. New definition of quality-scale robustness for image processing algorithms, with generalized uncertainty modeling, applied to denoising and segmentation. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Beijing, China, 2019; Volume 11455 LNCS, pp. 138–149.

82. Rajbhoj, A.; Deshpande, S.; Gubbi, J.; Kulkarni, V.; Balamuralidhar, P. A System for semi-automatic construction of image processing pipeline for complex problems. Springer: Rome, Italy, 2019; Volume 352, pp. 295–310.

83. Sammons, P.J.; Furukawa, T.; Bulgin, A. Autonomous pesticide spraying robot for use in a greenhouse. In Proceedings of the 2005 Australasian Conference on Robotics and Automation, Sydney, Australia, 5–7 December 2005.

84. Holland Green Machine. Available online: http://www.hollandgreenmachine.com/sprayrobot/product-information-spray-robot-s55/ (accessed on 6 May 2020).

85. Sanchez-Hermosilla, J.; Rodriguez, F.; Gonzalez, R.; Luis, J.; Berenguel, M. A mechatronic description of an autonomous mobile robot for agricultural tasks in greenhouses. In Mobile Robots Navigation; INTECH Open Access Publisher: London, UK, 2010.

86. Mahmud, M.S.A.; Abidin, M.S.Z.; Mohamed, Z.; Rahman, M.K.I.A.; Iida, M. Multi-objective path planner for an agricultural mobile robot in a virtual greenhouse environment. Comput. Electron. Agric. 2019, 157, 488–499.

87. Singh, S.; Burks, T.F.; Lee, W.S. Autonomous robotic vehicle development for greenhouse spraying. Trans. Am. Soc. Agric. Eng. 2005, 48, 2355–2361.

88. Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C.; Ulbrich, H. Selective spraying of grapevine’s diseases by a modular agricultural robot. J. Agric. Eng. 2013, 44, 149–153.

89. Underwood, J.P.; Calleija, M.; Taylor, Z.; Hung, C.; Nieto, J.; Fitch, R.; Sukkarieh, S. Real-time target detection and steerable spray for vegetable crops. In Proceedings of the International Conference on Robotics and Automation: Robotics in Agriculture Workshop, Seattle, WA, USA, 26–30 May 2015.

90. Søgaard, H.T.; Lund, I. Application accuracy of a machine vision-controlled robotic micro-dosing system. Biosyst. Eng. 2007, 96, 315–322.

91. Ogawa, Y.; Kondo, N.; Monta, M.; Shibusawa, S. Spraying robot for grape production. Springer Tracts Adv. Robot. 2006, 24, 539–548.

92. Hayashi, S.; Yamamoto, S.; Tsubota, S.; Ochiai, Y.; Kobayashi, K.; Kamata, J.; Kurita, M.; Inazumi, H.; Peter, R. Automation technologies for strawberry harvesting and packing operations in Japan. J. Berry Res. 2014, 4, 19–27.

93. Feng, Q.; Zheng, W.; Qiu, Q.; Jiang, K.; Guo, R. Study on strawberry robotic harvesting system. In Proceedings of the CSAE 2012 IEEE International Conference on Computer Science and Automation Engineering, Zhangjiajie, China, 25–27 May 2012; Volume 1, pp. 320–324.

94. Qingchun, F.; Xiu, W.; Wengang, Z.; Quan, Q.; Kai, J. New strawberry harvesting robot for elevated-trough culture. Int. J. Agric. Biol. Eng. 2012, 5, 1–8.

95. Xiong, Y.; Peng, C.; Grimstad, L.; From, P.J.; Isler, V. Development and field evaluation of a strawberry harvesting robot with a cable-driven gripper. Comput. Electron. Agric. 2019, 157, 392–402.

96. Harvest Croo Robotics. Available online: https://harvestcroo.com/ (accessed on 6 May 2020).

97. Arima, S.; Kondo, N.; Monta, M. Strawberry harvesting robot on table-top culture. In 2004 ASAE Annual Meeting; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2004; p. 1.

98. Hayashi, S.; Shigematsu, K.; Yamamoto, S.; Kobayashi, K.; Kohno, Y.; Kamata, J.; Kurita, M. Evaluation of a strawberry-harvesting robot in a field test. Biosyst. Eng. 2010, 105, 160–171.

99. Shiigi, T.; Kondo, N.; Kurita, M.; Ninomiya, K.; Rajendra, P.; Kamata, J.; Hayashi, S.; Kobayashi, K.; Shigematsu, K.; Kohno, Y. Strawberry harvesting robot for fruits grown on table top culture. In Proceedings of the American Society of Agricultural and Biological Engineers Annual International Meeting 2008, Providence, RI, USA, 29 June–2 July 2008; Volume 5, pp. 3139–3148.

100. Dogtooth. Available online: https://dogtooth.tech/ (accessed on 6 May 2020).

101. Agrobot E-Series. Available online: http://agrobot.com/ (accessed on 6 May 2020).

102. Octinion. Available online: http://octinion.com/products/agricultural-robotics/rubion (accessed on 6 May 2020).

103. Silwal, A.; Davidson, J.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Effort towards robotic apple harvesting in Washington State. In Proceedings of the 2016 American Society of Agricultural and Biological Engineers Annual International Meeting, Orlando, FL, USA, 17–20 July 2016.

104. Baeten, J.; Donné, K.; Boedrij, S.; Beckers, W.; Claesen, E. Autonomous fruit picking machine: A robotic apple harvester. In Field and Service Robotics; Springer: Berlin, Germany, 2008; Volume 42, pp. 531–539.

105. Bulanon, D.; Kataoka, T. Fruit detection system and an end effector for robotic harvesting of Fuji apples. Agric. Eng. Int. CIGR J. 2010, 12, 203–210.

106. FF Robotics. Available online: https://www.ffrobotics.com/ (accessed on 6 May 2020).

107. Yaguchi, H.; Nagahama, K.; Hasegawa, T.; Inaba, M. Development of an autonomous tomato harvesting robot with rotational plucking gripper. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Daejeon, Korea, 9–14 October 2016; pp. 652–657.

108. Wang, L.L.; Zhao, B.; Fan, J.W.; Hu, X.A.; Wei, S.; Li, Y.S.; Zhou, Q.B.; Wei, C.F. Development of a tomato harvesting robot used in greenhouse. Int. J. Agric. Biol. Eng. 2017, 10, 140–149.

109. Feng, Q.; Zou, W.; Fan, P.; Zhang, C.; Wang, X. Design and test of robotic harvesting system for cherry tomato. Int. J. Agric. Biol. Eng. 2018, 11, 96–100.

110. Metomotion. Available online: https://metomotion.com (accessed on 6 May 2020).

111. Root-AI. Available online: https://root-ai.com (accessed on 6 May 2020).

112. Energid. Available online: https://www.energid.com/industries/agricultural-robotics (accessed on 6 May 2020).

113. Tanigaki, K.; Fujiura, T.; Akase, A.; Imagawa, J. Cherry-harvesting robot. Comput. Electron. Agric. 2008, 63, 65–72.

114. Ceres, R.; Pons, J.L.; Jiménez, A.R.; Martín, J.M.; Calderón, L. Design and implementation of an aided fruit-harvesting robot (Agribot). Ind. Rob. 1998, 25, 337–346.

115. Van Henten, E.J.; Hemming, J.; Van Tuijl, B.A.J.; Kornet, J.G.; Meuleman, J.; Bontsema, J.; Van Os, E.A. An autonomous robot for harvesting cucumbers in greenhouses. Auton. Robot. 2002, 13, 241–258.

116. Hayashi, S.; Ganno, K.; Ishii, Y.; Tanaka, I. Robotic harvesting system for eggplants. Jpn. Agric. Res. Q. 2002, 36, 163–168.

117. Sweeper. Available online: http://www.sweeper-robot.eu (accessed on 6 May 2020).

118. Irie, N.; Taguchi, N.; Horie, T.; Ishimatsu, T. Asparagus harvesting robot coordinated with 3-D vision sensor. In Proceedings of the IEEE International Conference on Industrial Technology, Gippsland, Australia, 10–13 February 2009.

119. Cerescon. Available online: https://www.cerescon.com/EN/sparter (accessed on 6 May 2020).

120. Roshanianfard, A.R.; Noguchi, N. Kinematics analysis and simulation of a 5DOF articulated robotic arm applied to heavy products harvesting. Tarım Bilim. Derg. 2018, 24, 91–104.

121. Edan, Y.; Rogozin, D.; Flash, T.; Miles, G.E. Robotic melon harvesting. IEEE Trans. Robot. Autom. 2000, 16, 831–835.

122. Umeda, M.; Kubota, S.; Iida, M. Development of “STORK”, a watermelon-harvesting robot. Artif. Life Robot. 1999, 3, 143–147.

123. Pilarski, T.; Happold, M.; Pangels, H.; Ollis, M.; Fitzpatrick, K.; Stentz, A. The Demeter system for automated harvesting. Auton. Robots 2002, 13, 9–20.

124. Rowley, J.H. Developing Flexible Automation for Mushroom Harvesting (Agaricus Bisporus): Innovation Report; University of Warwick: Coventry, UK, 2009.

125. Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Springer: Berlin, Germany, 2016; ISBN 3319325523.

126. Abundant Robotics. Available online: https://www.abundantrobotics.com/ (accessed on 6 May 2020).

127. Zapotezny-Anderson, P.; Lehnert, C. Towards active robotic vision in agriculture: A deep learning approach to visual servoing in occluded and unstructured protected cropping environments. arXiv 2019, arXiv:1908.01885.

128. Mu, L.; Cui, G.; Liu, Y.; Cui, Y.; Fu, L.; Gejima, Y. Design and simulation of an integrated end-effector for picking kiwifruit by robot. Inf. Process. Agric. 2019, 7, 58–71.

129. Harvest Automation. Available online: https://www.public.harvestai.com/ (accessed on 6 May 2020).

130. Vision Robotics. Available online: https://www.visionrobotics.com/vr-grapevine-pruner (accessed on 6 May 2020).

131. Botterill, T.; Paulin, S.; Green, R.; Williams, S.; Lin, J.; Saxton, V.; Mills, S.; Chen, X.; Corbett-Davies, S. A robot system for pruning grape vines. J. Field Robot. 2017, 34, 1100–1122.

132. Zahid, A.; He, L.; Zeng, L. Development of a robotic end effector for apple tree pruning. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019.

133. He, L.; Zhou, J.; Zhang, Q.; Charvet, H.J. A string twining robot for high trellis hop production. Comput. Electron. Agric. 2016, 121, 207–214.

134. Grimstad, L.; From, P.J. The Thorvald II agricultural robotic system. Robotics 2017, 6, 24.

135. Xue, J.; Fan, B.; Zhang, X.; Feng, Y. An agricultural robot for multipurpose operations in a greenhouse. DEStech Trans. Eng. Technol. Res. 2017.

136. Narendran, V. Autonomous robot for E-farming based on fuzzy logic reasoning. Int. J. Pure Appl. Math. 2018, 118, 3811–3821.

137. Robotti. Available online: http://agrointelli.com/robotti-diesel.html#rob.diesel (accessed on 6 May 2020).

138. Durmus, H.; Gunes, E.O.; Kirci, M.; Ustundag, B.B. The design of general purpose autonomous agricultural mobile-robot: “AGROBOT”. In Proceedings of the 2015 4th International Conference on Agro-Geoinformatics, Agro-Geoinformatics 2015, Istanbul, Turkey, 20–24 July 2015; pp. 49–53.

139. Peña, C.; Riaño, C.; Moreno, G. RobotGreen: A teleoperated agricultural robot for structured environments. J. Eng. Sci. Technol. Rev. 2018, 11, 87–98.

140. Murakami, N.; Ito, A.; Will, J.D.; Steffen, M.; Inoue, K.; Kita, K.; Miyaura, S. Development of a teleoperation system for agricultural vehicles. Comput. Electron. Agric. 2008, 63, 81–88.

141. Jasiński, M.; Mączak, J.; Radkowski, S.; Korczak, S.; Rogacki, R.; Mac, J.; Szczepaniak, J. Autonomous agricultural robot—Conception of inertial navigation system. In International Conference on Automation; Springer: Cham, Switzerland, 2016; Volume 440, pp. 669–679.

142. Chang, C.L.; Lin, K.M. Smart agricultural machine with a computer vision-based weeding and variable-rate irrigation scheme. Robotics 2018, 7, 38.

143. Moshou, D.; Bravo, C.; Oberti, R.; West, J.S.; Ramon, H.; Vougioukas, S.; Bochtis, D. Intelligent multi-sensor system for the detection and treatment of fungal diseases in arable crops. Biosyst. Eng. 2011, 108, 311–321.

144. Quan, L.; Feng, H.; Lv, Y.; Wang, Q.; Zhang, C.; Liu, J.; Yuan, Z. Maize seedling detection under different growth stages and complex field environments based on an improved Faster R-CNN. Biosyst. Eng. 2019, 184, 1–23.

145. Gollakota, A.; Srinivas, M.B. Agribot—A multipurpose agricultural robot. In Proceedings of the 2011 Annual IEEE India Conference, Hyderabad, India, 16–18 December 2011; pp. 1–4.

146. Praveena, R.; Srimeena, R. Agricultural robot for automatic ploughing and seeding. In Proceedings of the 2015 IEEE Technological Innovation in ICT for Agriculture and Rural Development (TIAR), Chennai, India, 10–12 July 2015; pp. 17–23.

147. Sowjanya, K.D.; Sindhu, R.; Parijatham, M.; Srikanth, K.; Bhargav, P. Multipurpose autonomous agricultural robot. In Proceedings of the International Conference on Electronics, Communication and Aerospace Technology, Coimbatore, India, 20–22 April 2017; pp. 696–699.

148. Ranjitha, B.; Nikhitha, M.N.; Aruna, K.; Murthy, B.T.V. Solar powered autonomous multipurpose agricultural robot using bluetooth/android app. In Proceedings of the 2019 3rd International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 12–14 June 2019; pp. 872–877.

149. Wall-ye (MYCE_Agriculture). Available online: http://wall-ye.com/index-6.html (accessed on 6 May 2020).

150. Wall-ye (Myce_Vigne). Available online: http://wall-ye.com/index.html (accessed on 6 May 2020).

151. Green, O.; Schmidt, T.; Pietrzkowski, R.P.; Jensen, K.; Larsen, M.; Jørgensen, R.N. Commercial autonomous agricultural platform. In Proceedings of the Second International Conference on Robotics and associated High-technologies and Equipment for Agriculture and Forestry, Bergamo, Italy, 20–23 October 2014; pp. 351–356.

152. TrimBot 2020. Available online: http://trimbot2020.webhosting.rug.nl/ (accessed on 6 May 2020).

153. Bak, T.; Jakobsen, H. Agricultural robotic platform with four wheel steering for weed detection. Biosyst. Eng. 2004, 87, 125–136.

| Crop | Perception Sensors | Weed Detection | Weed Control | Results | Cited Work |
|---|---|---|---|---|---|
| Maize | Cameras, optical and acoustic distance sensors | Yes | Chemical | No performance metrics provided | [38] |
| Carrot | RGB infrared camera | Partly | Chemical | 100% effectiveness with the drop-on-demand (DoD) system | [22] |
| Potato, corn | Webcam, solid-state gyroscope | Partly | Chemical | 98% and 89% detection accuracy | [36] |
| Sugar beet | Color camera | Yes | Mechanical | Row detection precision < 25 mm; > 90% in-row weed removal | [24] |
| N/A | Stereo vision system, laser | No | Mechanical | Precision < 3 cm | [27,29] |
| Rice | Laser range finder, IMU | No | Mechanical | Precision < 62 mm | [25] |
| Beetroot | Color camera, artificial vision, compass | Yes | Chemical | > 85% detection and destruction; precision < 2 cm | [37] |
| Grapes | IMU, Hall sensors, electromechanical sensor, sonar sensor | No | Mechanical | Average performance: 65% (feeler) & 82% (sonar) | [30] |
| N/A | Accelerometer, gyroscope, flex sensor | No | Mechanical | No performance metrics provided | [31] |
| Tomato | Color camera, SensorWatch | Partly | Chemical | 24.2% incorrectly identified and sprayed; 52.4% of weeds not sprayed | [42] |

| Crop | Perception | Results | Cited Work |
|---|---|---|---|
| Wheat | Force sensor, displacement sensor, angle sensor | Path tracking error ±5 cm; angle error close to zero | [49] |
| Wheat | Signal sensor, angle, pressure & infrared sensors | Qualified rate: 93.3% | [44] |
| Rice | Compass, wheel encoder | 92% accuracy & 5 cm error in the dropping position | [45] |

| Crop | Perception | Detected Disease | Highest Accuracy | Cited Work |
|---|---|---|---|---|
| Bell pepper | RGB camera, multispectral camera, laser sensor | Powdery mildew & tomato spotted wilt virus | 95% & 90% | [51,52] |
| Cotton, groundnut | RGB camera | Cotton (bacterial blight, magnesium deficiency), groundnut (leaf spot & anthracnose) | ~90%, 83–96% | [56] |
| Olive tree | Two DSLR cameras (one in BNDVI mode), a multispectral camera, a hyperspectral system in the visible and NIR range, a thermal camera, LiDAR, an IMU sensor (*) | Xylella fastidiosa bacterium | N/A | [53] |
| Tomato, rice | RGB camera | Pyralidae insect | 94.3% | [55] |
| Strawberry | RGB camera | Powdery mildew | 72–95% | [54] |

| Crop | Perception | Results | Cited Work |
|---|---|---|---|
| No specific crop | High-resolution stereo cameras, 3D LiDAR | Soil sampling. No performance metrics provided. | [70] |
| No specific crop | CO2 gas sensor, anemoscope, IR distance measuring sensor | Gas source tracking. CO2 concentration levels up to 2500 ppm were recorded while the robot was moving at 2 m/min. | [71] |
| Orchards | LiDAR, luxmeter | Canopy volume estimation. The system is independent of lighting conditions, highly reliable, and its data processing is very fast. | [62] |
| Grapes | RGB & IR camera, laser range finder, IMU, pressure sensor, etc. | Crop monitoring tasks. No performance metrics provided. | [68] |
| Orchards and vineyards | LiDAR, OptRX sensor | Monitoring of health status and canopy thickness. Terrestrial Laser Scanning (TLS): 2 mm distance accuracy | [63] |
| Canola | Ultrasonic sensors, NDVI sensors, IR thermometers, RGB camera | Phenotypic data collection. Maximum measurement error: 2.5% | [59] |

| Crop | Perception | Autonomy Level | Results | Cited Work |
|---|---|---|---|---|
| Maize & wheat | Cameras, spectral imaging systems, laser sensors, 3D time-of-flight cameras | Autonomous | No performance metrics provided | [75] |
| Cotton | Stereo RGB & IR thermal camera, temperature, humidity and light intensity sensors, pyranometer, quantum sensor, LiDAR | Semi-autonomous | RMS error: plant height < 0.5 cm (Vinobot); RGB-to-IR calibration 2.5 px (Vinoculer); temperature < 1 °C (Vinoculer) | [78] |
| Sorghum | Stereo camera, RGB camera with fisheye lenses, penetrometer | Autonomous | Stalk detection: 96% | [73] |
| Rice, maize & wheat | RGB camera, chlorophyll fluorescence camera, NDVI camera, thermal infrared camera, hyperspectral camera, 3D laser scanner | Fixed-site, fully automated | Plant height RMS error: 1.88 cm | [76] |
| Sugar beet | Mobile robot: webcam, Gigabit Ethernet camera; Bettybot: color camera, hyperspectral camera | Autonomous | No performance metrics provided | [74] |
| Sorghum | Stereo imaging system consisting of color cameras | Autonomous (based on a commercial tractor) | The image-derived measurements were highly repeatable and correlated highly with manual measurements | [77] |
| Energy sorghum | Stereo camera, time-of-flight depth sensor, IR camera | Semi-autonomous | Average absolute error for stem width and plant height: 13% and 15%, respectively | [79] |
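
Two accuracy measures recur in the phenotyping results above: root-mean-square (RMS) error in absolute units (e.g., a plant height RMS error of 1.88 cm [76]) and an average absolute error expressed as a percentage (13% and 15% [79]). The sketch below shows how each could be computed from paired sensor-derived and manual measurements. It assumes the percentage figure is the mean absolute error relative to the manual reference value; the function names and sample heights are hypothetical and are not taken from the cited studies.

```python
import math
from typing import Sequence


def rms_error(estimated: Sequence[float], reference: Sequence[float]) -> float:
    """Root-mean-square error between paired estimates and reference values."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))


def mean_absolute_relative_error(estimated: Sequence[float], reference: Sequence[float]) -> float:
    """Mean of |estimate - reference| / |reference|, returned as a percentage.

    Assumption: this is how an 'average absolute error' quoted in % is defined.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    ratios = [abs(e - r) / abs(r) for e, r in zip(estimated, reference)]
    return 100.0 * sum(ratios) / len(ratios)


if __name__ == "__main__":
    # Hypothetical plant heights in cm: image-derived values vs. manual ruler readings.
    image_cm = [101.0, 98.0, 120.0, 87.0]
    manual_cm = [100.0, 100.0, 118.0, 88.0]
    print(f"RMS error: {rms_error(image_cm, manual_cm):.2f} cm")
    print(f"Mean absolute relative error: {mean_absolute_relative_error(image_cm, manual_cm):.1f}%")
```

RMS error weights large deviations more heavily than the relative measure, so values reported with the two metrics are not directly comparable across rows.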

| Crop | Perception | Real-time Detection | Results | Cited Work |
|---|---|---|---|---|
| Cantaloupe | Robot controller | No | NSGA-II execution time was 1.5–7% better than NSGA-III for the same test cases (*) | [86] |
| N/A | Web camera | Yes | 27% off-target shots; 99.8% of the targets were sprayed by at least one shot | [90] |
| Cucumber | Bump sensors, infrared sensors, induction sensors | No | Tests 1 & 2: run success 90% & 95%; topside leaf coverage 95% & 90%; underside leaf coverage 95% & 80%; over-spray 20% & 10% | [83] |
| N/A | Ultrasonic sensors | No | Maximum error: 1.2–4.5 cm in self-contained mode on concrete; 2.2–4.9 cm in trailer mode | [87] |
| Grapevine | Ultrasonic sensor, color TV camera | No | No performance metrics provided | [91] |
| N/A | Middle-range sonar, short-range sonar, radar, compass | No | Longitudinal, lateral and orientation errors are close to zero, with slip varying from 10% to 30% included | [85] |
| Grapevine | RGB camera, R-G-NIR multispectral camera | Yes | Sensitivity of the robotic selective spraying: 85%; selectivity: 92% (8% of the total healthy area was sprayed unnecessarily) | [88] |
| Vegetable crops | Hyperspectral camera, stereo vision, thermal IR camera, monocular color camera | Yes | The greedy sort and raster methods are substantially faster than back-to-front scheduling, taking only 68% to 77% of the time | [89] |
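
For the grapevine selective sprayer [88], the table pairs a selectivity of 92% with 8% of the healthy area being sprayed unnecessarily, which is consistent with selectivity = 1 − (sprayed healthy area / total healthy area). The minimal sketch below computes both metrics from canopy areas. It assumes sensitivity is the fraction of diseased area that receives spray (the usual true-positive rate); that definition, the class name, and the sample areas are illustrative assumptions rather than details taken from [88].

```python
from dataclasses import dataclass


@dataclass
class SprayOutcome:
    """Canopy areas (any consistent unit, e.g. cm^2) split by disease status and spray action.

    Hypothetical container for illustration only.
    """
    diseased_sprayed: float
    diseased_missed: float
    healthy_sprayed: float
    healthy_unsprayed: float

    def sensitivity(self) -> float:
        """Fraction of diseased area that received spray (assumed definition)."""
        diseased = self.diseased_sprayed + self.diseased_missed
        return self.diseased_sprayed / diseased

    def selectivity(self) -> float:
        """Fraction of healthy area left unsprayed, i.e. 1 minus the unnecessary-spray fraction."""
        healthy = self.healthy_sprayed + self.healthy_unsprayed
        return self.healthy_unsprayed / healthy


if __name__ == "__main__":
    # Hypothetical areas chosen so the metrics mirror the 85% / 92% figures in the table.
    outcome = SprayOutcome(diseased_sprayed=85.0, diseased_missed=15.0,
                           healthy_sprayed=80.0, healthy_unsprayed=920.0)
    print(f"sensitivity = {outcome.sensitivity():.2f}, selectivity = {outcome.selectivity():.2f}")
```

The two metrics pull in opposite directions: spraying more generously raises sensitivity but wastes agrochemical on healthy canopy and lowers selectivity.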

| Crop | Perception | Fastest Picking Speed | Highest Picking Rate | Cited Work |
|---|---|---|---|---|
| Alfalfa, Sudan grass | Color camera, gyroscope | 2 ha/h (alfalfa) | N/A | [123] |
| Apple tree | Color camera, time-of-flight-based three-dimensional camera | 7.5 sec/fruit | 84% | [103] |
| Apple tree | Color CCD (charge-coupled device) camera, laser range sensor | 7.1 sec/fruit | 89% | [105] |
| Apple tree | High-frequency light, camera | 9 sec/fruit | 80% | [104] |
| Cherry | 3D vision sensor with red and IR laser diodes, pressure sensor | 14 sec/fruit | N/A | [113] |
| Mushroom | Laser sensor, vision sensor | 6.7 sec/mushroom | 69% | [124] |
| Asparagus | 3D vision sensor with two sets of slit laser projectors & a TV camera | 13.7 sec/asparagus | N/A | [118] |
| Strawberry | Sonar camera sensor, binocular camera | 31.3 sec/fruit | 86% | [94] |
| Strawberry | Color CCD cameras, reflection-type photoelectric sensor | 8.6 sec/fruit | 54.9% | [92] |
| Strawberry | LED light source, three-color CCD cameras, photoelectric sensor, suction device | 11.5 sec/fruit | 41.3% with a suction device, 34.9% without it | [98] |
| Strawberry | Color CCD camera, visual sensor | 10 sec/fruit | N/A | [97] |
| Strawberry | Three VGA (Video Graphics Array) class CCD color cameras (stereo vision system and center camera) | N/A | 46% | [99] |
| Strawberry | RGB-D camera, 3 IR sensors | 10.6 sec/fruit | 53.6% | [95] |
| Tomato | Stereo camera, PlayStation camera | 23 sec/tomato | 60% | [107] |
| Tomato | Binocular stereo vision system, laser sensor | 15 sec/tomato | 86% | [108] |
| Cherry tomato | Camera, laser sensor | 8 sec/tomato bunch | 83% | [109] |
| Cucumber | Two synchronized CCD cameras | 45 sec/cucumber | 80% | [115] |
| Various fruits | Pressure sensor, 2 convergent IR sensors, telemeter, cameras | 2 sec/fruit (grasp & detach only) | N/A | [114] |
| Melon | Two black-and-white CCD cameras, proximity sensor, far and near vision sensors | 15 sec/fruit | 85.67% | [121] |
| Eggplant | Single CCD camera, photoelectric sensor | 64.1 sec/eggplant | 62.5% | [116] |
| Watermelon | Two CCD cameras, vacuum sensor | N/A | 66.7% | [122] |
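
The harvesting results above report a cycle time per fruit and a picking (success) rate separately, which makes rows hard to compare at a glance. One rough way to combine them is the expected number of successfully picked fruits per hour, 3600 / t × p, where t is the cycle time in seconds and p the success rate. This is a minimal sketch under strong simplifying assumptions that the cited works do not make: every attempt, successful or not, takes the full cycle time, and attempts run back-to-back with no travel, search, or idle time. The function name is hypothetical.

```python
def fruits_per_hour(cycle_time_s: float, success_rate: float) -> float:
    """Expected successful picks per hour of continuous operation.

    Assumes every attempt (successful or not) takes `cycle_time_s` seconds and
    that attempts are made back-to-back with no travel or idle time.
    """
    if cycle_time_s <= 0:
        raise ValueError("cycle time must be positive")
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success rate must be in [0, 1]")
    attempts_per_hour = 3600.0 / cycle_time_s
    return attempts_per_hour * success_rate


if __name__ == "__main__":
    # (crop, seconds per fruit, success rate, cited work) taken from rows of the table above.
    rows = [
        ("Apple tree", 7.1, 0.89, "[105]"),
        ("Strawberry", 8.6, 0.549, "[92]"),
        ("Eggplant", 64.1, 0.625, "[116]"),
    ]
    for crop, cycle_s, rate, ref in rows:
        print(f"{crop} {ref}: ~{fruits_per_hour(cycle_s, rate):.0f} fruits/h")
```

Because real systems also spend time navigating between plants and locating fruit, these figures are upper bounds useful only for relative comparison between rows.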

| Crop | Perception | Operation | Cited Work |
|---|---|---|---|
| Apple tree | Force sensor | Pruning | [132] |
| Hop | Camera, wire detecting bar | String twining | [133] |
| Grape | Three cameras | Pruning | [131] |

| Working Environment | Perception | Operation(s) | Cited Work |
|---|---|---|---|
| Arable crops | N/A | Ploughing, seeding | [145] |
| Arable crops | Sonar sensor, temperature sensor | Monitoring, spraying, fertilization, disease detection | [138] |
| Arable crops | Three cameras with IR filter, humidity & ultrasonic sensors | Ploughing, seeding, harvesting, spraying | [146] |
| Arable crops, polytunnels, greenhouse | 2D LiDAR, ultrasonic sensors, RGB camera, monochromatic IR camera | Harrowing, soil sampling, phenotyping, additional tasks by combining modules | [135] |
| Arable crops | Soil sensors | Ploughing, irrigation, seeding | [147] |
| Greenhouse | Two color cameras, machine vision sensor | Spraying, weeding, additional tasks by adding or removing components | [134] |
| Arable crops | Humidity and temperature air sensor, IR sensors | Ploughing, seeding, irrigation, spraying, monitoring | [136] |
| Urban crops | Color camera, temperature, humidity and luminosity sensors | Sowing, irrigation, fumigation, pruning | [139] |
| Arable crops | Voltage sensors | Seeding, spraying, ploughing, mowing | [148] |
| Arable crops | 2D LiDAR, ultrasonic sensor, IR camera | Seeding, weeding, ploughing | [141] |
| Arable crops | N/A | Sowing, sprinkling, weeding, harvesting | [149] |
| Arable crops | CloverCam, RoboWeedCamRT | Seeding, weeding | [137] |
| Vineyards | N/A | Pruning, weeding, mowing | [150] |
| Arable crops | N/A | Precision seeding, ridging discs & mechanical row crop cleaning | [151] |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fountas, S.; Mylonas, N.; Malounas, I.; Rodias, E.; Hellmann Santos, C.; Pekkeriet, E. Agricultural Robotics for Field Operations. Sensors 2020, 20, 2672. https://doi.org/10.3390/s20092672

Fountas S, Mylonas N, Malounas I, Rodias E, Hellmann Santos C, Pekkeriet E. Agricultural Robotics for Field Operations. Sensors. 2020; 20(9):2672. https://doi.org/10.3390/s20092672

Chicago/Turabian Style: Fountas, Spyros, Nikos Mylonas, Ioannis Malounas, Efthymios Rodias, Christoph Hellmann Santos, and Erik Pekkeriet. 2020. "Agricultural Robotics for Field Operations" Sensors 20, no. 9: 2672. https://doi.org/10.3390/s20092672