Development of a Guidance System for Motor Imagery Enhancement Using the Virtual Hand Illusion

Abstract

1. Introduction

2. Methods

2.1. Proposed Guidance System for MI Enhancement

2.2. Experimental Designs

2.3. Apparatus

2.4. Experimental Protocols

- (1) RHI-P: I felt as if the fake hand in RHI-P were my hand more than that in VHI-P;

- (2) VHI-P: I felt as if the fake hand in VHI-P were my hand more than that in RHI-P;

- (3) Both: I felt as if the fake hands in both RHI-P and VHI-P were my hand similarly;

- (4) None: I could not feel as if either the fake hand in RHI-P or VHI-P were my hand.

2.5. Data Acquisition & Analysis

3. Results

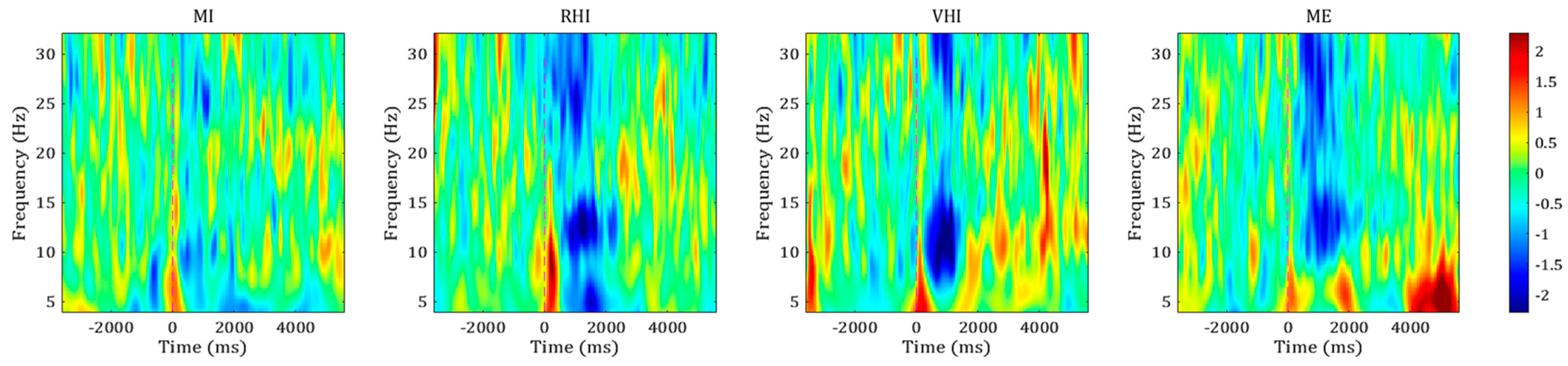

3.1. ERSP Map and Topographical Distribution

3.2. Analysis of Relative ERD

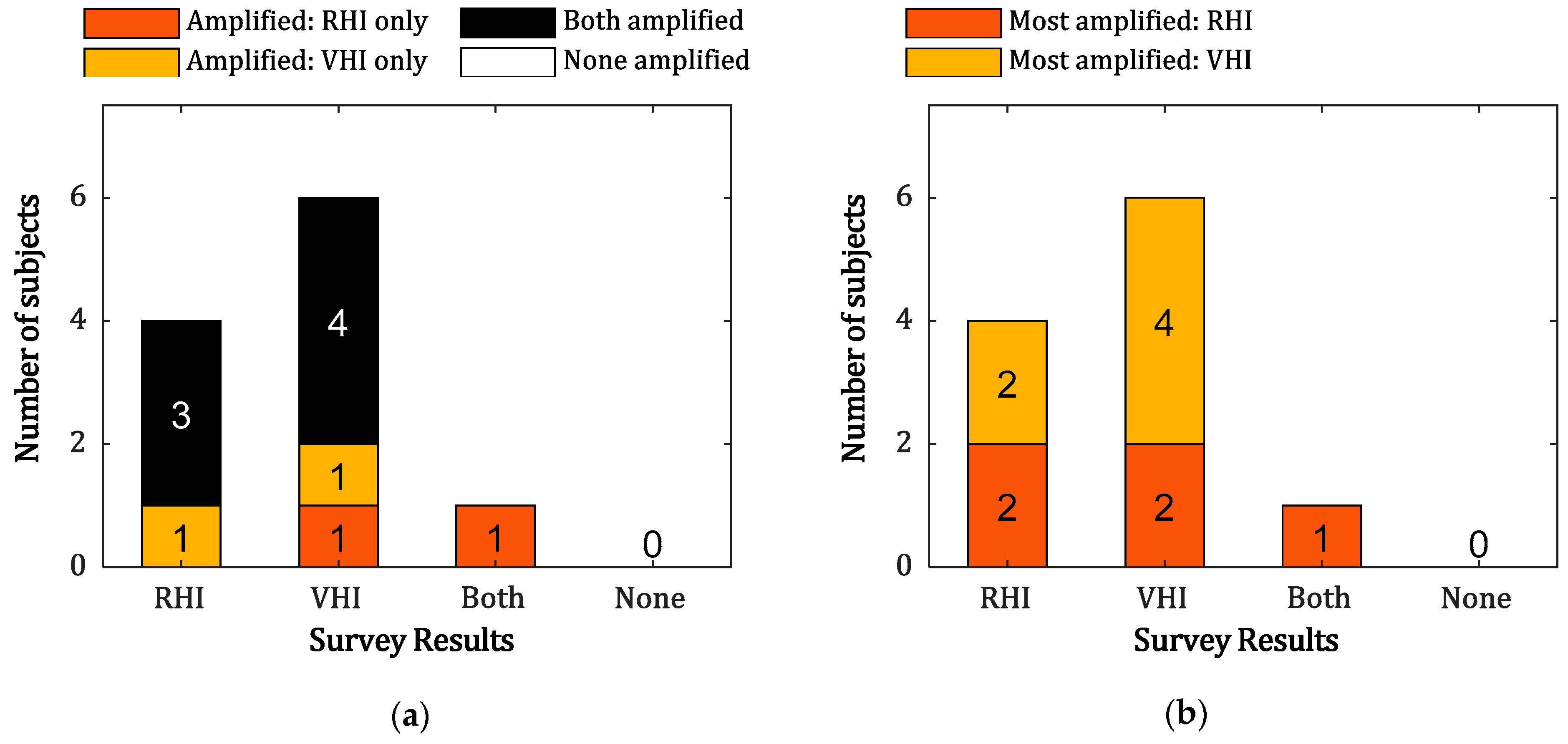
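Section 3.2 analyzes relative ERD, and the statistics table in this article compares its peak amplitude and latency across conditions. The following is a minimal Python sketch, assuming the classical percentage definition of ERD, ERD% = (A - R) / R × 100, where A is the band power at a given time point and R is the mean band power in a reference (baseline) interval, so that negative values indicate desynchronization. It further assumes that the peak ERD amplitude is the most negative relative-ERD value inside the task window and that the latency is the time of that minimum. The function names and the baseline and task windows are hypothetical illustrations, not the authors' processing pipeline.

```python
import numpy as np


def relative_erd(power, times, baseline=(-2.0, 0.0)):
    """Relative ERD (%) of a trial-averaged band-power time course.

    Assumption: classical definition ERD% = (A - R) / R * 100, where R is the
    mean power inside the hypothetical baseline window [start, end).
    """
    power = np.asarray(power, dtype=float)
    times = np.asarray(times, dtype=float)
    in_baseline = (times >= baseline[0]) & (times < baseline[1])
    reference = power[in_baseline].mean()
    return (power - reference) / reference * 100.0


def peak_erd(erd, times, task=(0.0, 4.0)):
    """Return (peak ERD amplitude, latency) inside the hypothetical task window.

    Peak amplitude is taken as the most negative relative-ERD value and
    latency as the time at which that value occurs.
    """
    erd = np.asarray(erd, dtype=float)
    times = np.asarray(times, dtype=float)
    in_task = (times >= task[0]) & (times <= task[1])
    idx = np.argmin(erd[in_task])
    return erd[in_task][idx], times[in_task][idx]


# Toy example with made-up values (not data from this study).
times = np.array([-2.0, -1.0, 0.0, 1.0, 2.0, 3.0])
power = np.array([10.0, 10.0, 8.0, 5.0, 7.0, 9.0])
erd = relative_erd(power, times)           # approximately [0, 0, -20, -50, -30, -10]
amplitude, latency = peak_erd(erd, times)  # -50.0 at t = 1.0
```

Taking the minimum rather than the maximum follows from the sign convention: under this definition a stronger desynchronization produces a more negative percentage.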

3.3. Questionnaire

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Channel | Parameter | Friedman test p-value | Wilcoxon p-value: MI-RHI | Wilcoxon p-value: MI-VHI | Wilcoxon p-value: MI-ME | Wilcoxon p-value: RHI-VHI | Wilcoxon p-value: RHI-ME | Wilcoxon p-value: VHI-ME |
|---|---|---|---|---|---|---|---|---|
| Contralateral channel | Peak ERD amplitude | 0.008 ** | 0.016 * | 0.016 * | 0.003 ** | 0.722 | 0.790 | 0.534 |
| Contralateral channel | Latency | 0.032 * | 0.155 | 0.041 * | 0.050 * | 0.016 * | 0.004 ** | 0.248 |
| Ipsilateral channel | Peak ERD amplitude | 0.058 | 0.091 | 0.594 | 0.033 * | 0.722 | 0.182 | 0.110 |
| Ipsilateral channel | Latency | 0.025 * | 0.114 | 0.026 * | 0.013 * | 0.026 * | 0.091 | 0.477 |
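The table reports a Friedman test across the four conditions followed by pairwise Wilcoxon signed-rank tests. Below is a minimal SciPy sketch of such a two-stage comparison, assuming one value per participant and condition (for example, peak ERD amplitude or latency) with participants in the same order in every array. The condition labels follow the table; the function name `compare_conditions` is hypothetical, and the sketch applies no multiple-comparison correction because the table does not state whether one was used.

```python
from itertools import combinations

import numpy as np
from scipy.stats import friedmanchisquare, wilcoxon

# Condition labels as they appear in the table.
CONDITIONS = ("MI", "RHI", "VHI", "ME")


def compare_conditions(values):
    """Friedman omnibus test followed by pairwise Wilcoxon signed-rank tests.

    values: dict mapping each label in CONDITIONS to a 1-D array holding one
    measurement per participant, in the same participant order.
    Returns (Friedman p-value, {"A-B": Wilcoxon p-value, ...}).
    No multiple-comparison correction is applied.
    """
    samples = [np.asarray(values[c], dtype=float) for c in CONDITIONS]
    _, p_friedman = friedmanchisquare(*samples)
    pairwise = {}
    for (name_a, a), (name_b, b) in combinations(zip(CONDITIONS, samples), 2):
        _, p = wilcoxon(a, b)  # paired test, two-sided by default
        pairwise[f"{name_a}-{name_b}"] = float(p)
    return float(p_friedman), pairwise
```

Because `itertools.combinations` yields pairs in input order, the keys come out as MI-RHI, MI-VHI, MI-ME, RHI-VHI, RHI-ME, VHI-ME, which matches the column order of the table.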

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jeong, H.; Kim, J. Development of a Guidance System for Motor Imagery Enhancement Using the Virtual Hand Illusion. Sensors 2021, 21, 2197. https://doi.org/10.3390/s21062197