An Enhanced Intrusion Detection Model Based on Improved kNN in WSNs

Abstract

1. Introduction

- We propose an intelligent intrusion detection model, based on edge intelligence, that is deployed at the edge of the WSN nodes;

- We propose a parallelized arithmetic optimization algorithm that achieves better results than the algorithms it is compared with;

- Tests on a standard data set show that our edge-intelligent intrusion detection model performs well in detecting DoS attacks.

2. Related Works

2.1. Arithmetic Optimization Algorithm (AOA)
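The original AOA (Abualigah et al., 2021) drives each candidate with two schedules: the Math Optimizer Accelerated (MOA) function, which rises linearly over the iterations and decides between exploration and exploitation, and the Math Optimizer Probability (MOP), which decays and scales the step. The minimal Python/NumPy sketch below shows one position update. It assumes the commonly used defaults (MOA from 0.2 to 1.0, α = 5, μ = 0.499) and array-valued bounds; the helper names `moa`, `mop`, and `aoa_update` are hypothetical, and the exact equations used in this paper may differ.

```python
import numpy as np

def moa(t, T, lo=0.2, hi=1.0):
    """Math Optimizer Accelerated: rises linearly from lo to hi over T iterations."""
    return lo + t * (hi - lo) / T

def mop(t, T, alpha=5.0):
    """Math Optimizer Probability: decays from 1 to 0 over T iterations."""
    return 1.0 - (t / T) ** (1.0 / alpha)

def aoa_update(best, lb, ub, t, T, rng, mu=0.499, eps=1e-12):
    """Build one new candidate from the best solution so far (illustrative sketch).

    best, lb and ub are 1-D arrays of the same length (assumed).
    """
    scale = (ub - lb) * mu + lb
    m_acc, m_prob = moa(t, T), mop(t, T)
    new = np.empty_like(best, dtype=float)
    for j in range(best.size):
        r1, r2, r3 = rng.random(3)
        if r1 > m_acc:
            # Exploration with the high-dispersion operators: division or multiplication.
            new[j] = best[j] / (m_prob + eps) * scale[j] if r2 < 0.5 else best[j] * m_prob * scale[j]
        else:
            # Exploitation with the low-dispersion operators: subtraction or addition.
            new[j] = best[j] - m_prob * scale[j] if r3 < 0.5 else best[j] + m_prob * scale[j]
    return np.clip(new, lb, ub)  # keep the candidate inside the search box

rng = np.random.default_rng(0)
lb, ub = np.full(5, -10.0), np.full(5, 10.0)
candidate = aoa_update(np.ones(5), lb, ub, t=10, T=100, rng=rng)
```

Early on, MOA is small, so most coordinates take the wide division/multiplication moves; as MOA approaches 1, almost every coordinate switches to the fine subtraction/addition moves around the best solution.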

2.2. K-Nearest Neighbor (kNN)
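kNN assigns a sample the majority label among its k closest training samples. The minimal sketch below assumes Euclidean distance and an unweighted vote; `knn_predict` is a hypothetical helper, and the distance measure is an illustrative choice, not necessarily the one used in the paper.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x, k=3):
    """Classify one sample x by majority vote among its k nearest training samples."""
    dists = np.linalg.norm(X_train - x, axis=1)   # Euclidean distance to every training point
    nearest = np.argsort(dists)[:k]               # indices of the k closest points
    votes = Counter(y_train[nearest].tolist())    # count labels among the neighbors
    return votes.most_common(1)[0][0]

X = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])
label = knn_predict(X, y, np.array([0.2, 0.3]), k=3)
```

Choosing an odd k avoids ties in two-class problems; with more classes, ties are resolved here by whichever label `Counter` encountered first.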

3. Improved AOA

3.1. Lévy AOA

Lévy Flight

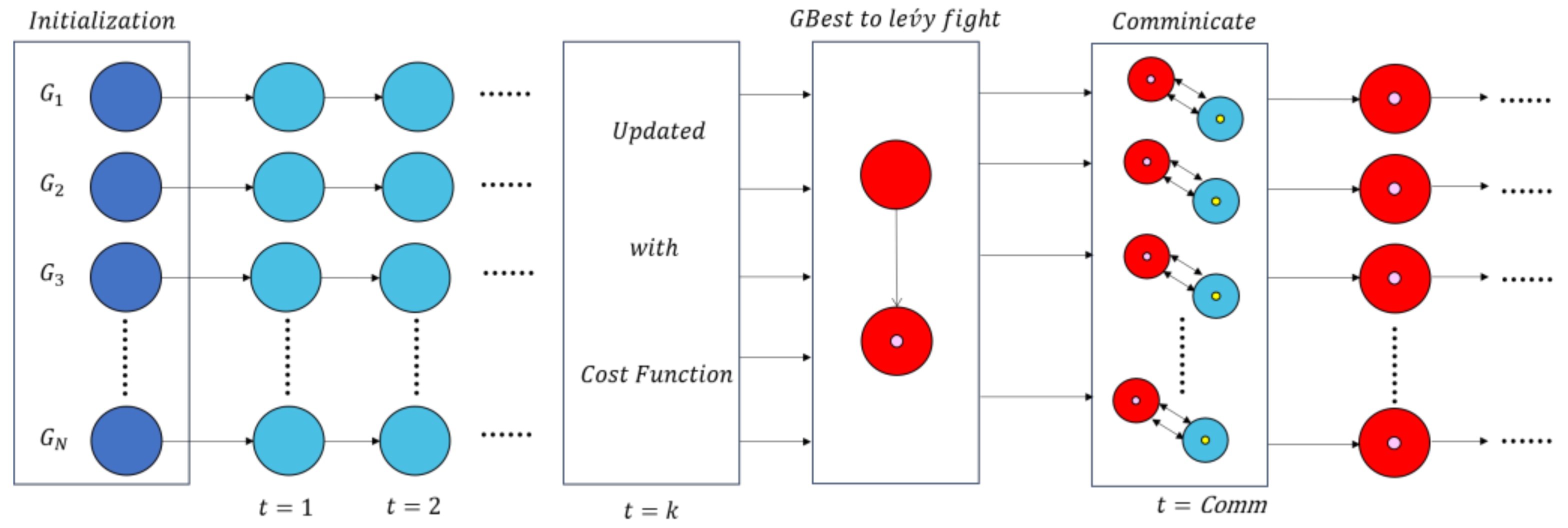
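A Lévy flight mixes many short steps with occasional very long jumps. A common way to draw such steps, used for example in Cuckoo Search (Yang and Deb, 2009), is Mantegna's algorithm: step = u / |v|^(1/β), with u ~ N(0, σ_u²) and v ~ N(0, 1). The sketch below assumes β = 1.5; `mantegna_sigma` and `levy_step` are hypothetical names, and the paper's own Lévy equation is not preserved in this section.

```python
import math
import numpy as np

def mantegna_sigma(beta=1.5):
    """Standard deviation of the numerator Gaussian u in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_step(dim, beta=1.5, rng=None):
    """Draw a dim-dimensional step with an approximately Lévy-stable (index beta) length."""
    rng = np.random.default_rng() if rng is None else rng
    u = rng.normal(0.0, mantegna_sigma(beta), dim)   # heavy tail comes from dividing by |v|
    v = rng.normal(0.0, 1.0, dim)
    return u / np.abs(v) ** (1 / beta)

rng = np.random.default_rng(1)
step = levy_step(7, rng=rng)
```

A typical (hypothetical) use perturbs a candidate `x` relative to the current best, e.g. `x + 0.01 * levy_step(x.size, rng=rng) * (x - best)`; the rare long jumps help the search escape local optima.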

3.2. Parallel Lévy AOA (PL-AOA)

Parallel Strategy Based on Lévy AOA

- (1) Initialization:

- (2) Evaluation:

- (3) Update:

- (4) Communication:

- (5) Termination:
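The grouping and communication steps of such a parallel strategy can be sketched as follows, assuming minimization and four groups. The communication rule shown, overwriting each group's worst individual with the best individual found across all groups, is similar to the strategies used in parallel PSO by Chang et al. (2005); whether PL-AOA uses exactly this rule cannot be recovered from this section. `split_groups` and `communicate` are hypothetical helpers.

```python
import numpy as np

def split_groups(pop, n_groups=4):
    """Divide the population (one individual per row) into n_groups independent groups."""
    return [g.copy() for g in np.array_split(pop, n_groups)]

def communicate(groups, fitness):
    """Overwrite the worst individual of every group with the global best (minimization)."""
    fits = [np.array([fitness(x) for x in g]) for g in groups]
    src = min(range(len(groups)), key=lambda i: fits[i].min())   # group holding the global best
    best = groups[src][fits[src].argmin()].copy()
    for g, f in zip(groups, fits):
        g[f.argmax()] = best          # migrate the global best into each group
    return groups

pop = np.arange(16, dtype=float).reshape(8, 2)
groups = split_groups(pop, 4)
groups = communicate(groups, lambda x: float(np.sum(x ** 2)))
```

Between communication steps each group evolves on its own, which keeps diversity high; the periodic migration then shares the best information so that weaker groups do not stall.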

| Algorithm 1 Pseudocode of PL-AOA |
|---|
| 1: Initialize the parameters related to the algorithm: . |
| 2: Generate initial population containing individuals . |
| 3: Divide into 4 groups. |
| 4: Do |
| 5: if |
| 6: Update the by Equation (1). |
| 7: else |
| 8: Update the by Equation (2). |
| 9: for i = 1: |
| 10: for i = 1: |
| 11: if |
| 12: Update the best solution obtained so far. |
| 13: Change flight status according to iteration. |
| 14: end |
| 15: if |
| 16: Update the by Equation (6) and calculate its fitness value. |
| 17: if |
| 18: Update the best solution obtained so far. |
| 19: Change flight status according to iteration. |
| 20: end |
| 21: end |
| 22: While ( |
| 23: Return the best solution obtained so far as the global optimum. |
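The conditions and symbols of Algorithm 1 did not survive extraction, so its exact logic cannot be reproduced here. Under stated assumptions, its overall control flow (initialize, divide into four groups, update each group with AOA operators, keep improvements, communicate periodically, stop after a fixed budget) can be sketched as the hypothetical `grouped_aoa_sketch` below. It omits the Lévy flight and the flight-status switch, assumes greedy replacement, a fixed communication interval `comm_every`, and the standard AOA defaults; it is not the authors' PL-AOA.

```python
import numpy as np

def grouped_aoa_sketch(f, lb, ub, n_pop=40, n_groups=4, max_iter=200, comm_every=20, seed=0):
    """Minimize f over the box [lb, ub] with a grouped AOA-style search (hypothetical)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    mu, alpha, eps = 0.499, 5.0, 1e-12                    # assumed AOA defaults
    scale = (ub - lb) * mu + lb
    # Initialization: random population, divided into groups.
    pop = lb + rng.random((n_pop, dim)) * (ub - lb)
    groups = [g.copy() for g in np.array_split(pop, n_groups)]
    fits = [np.array([f(x) for x in g]) for g in groups]
    best_x = [g[fit.argmin()].copy() for g, fit in zip(groups, fits)]
    best_f = [fit.min() for fit in fits]
    for t in range(1, max_iter + 1):
        moa = 0.2 + t * (1.0 - 0.2) / max_iter             # rises from 0.2 to 1.0
        mop = 1.0 - (t / max_iter) ** (1.0 / alpha)        # decays from 1 to 0
        for k, g in enumerate(groups):
            for i in range(len(g)):
                r1, r2, r3 = rng.random((3, dim))
                b = best_x[k]
                explore = np.where(r2 < 0.5, b / (mop + eps) * scale, b * mop * scale)
                exploit = np.where(r3 < 0.5, b - mop * scale, b + mop * scale)
                cand = np.clip(np.where(r1 > moa, explore, exploit), lb, ub)
                fc = f(cand)
                if fc < fits[k][i]:                          # greedy replacement
                    g[i], fits[k][i] = cand, fc
                    if fc < best_f[k]:                       # update the group best
                        best_x[k], best_f[k] = cand.copy(), fc
        if t % comm_every == 0:                              # communication between groups
            j = int(np.argmin(best_f))
            for k in range(n_groups):
                w = int(fits[k].argmax())
                groups[k][w], fits[k][w] = best_x[j], best_f[j]
                if best_f[j] < best_f[k]:
                    best_x[k], best_f[k] = best_x[j].copy(), best_f[j]
    j = int(np.argmin(best_f))                               # termination: global best
    return best_x[j], best_f[j]

sphere = lambda x: float(np.sum(x ** 2))
x_best, f_best = grouped_aoa_sketch(sphere, np.full(5, -10.0), np.full(5, 10.0), max_iter=50)
```

Because only improvements are accepted and migrants are copies of existing solutions, the returned fitness can never be worse than the best individual of the initial population.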

4. An Edge-Intelligent WSN Intrusion Detection System

4.1. Weighted kNN
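Weighted kNN lets some neighbors or features count more than others. Two common forms are distance-weighted voting, where each neighbor votes with weight 1/d, and feature-weighted distance, where each feature's squared difference is multiplied by a weight. The sketch below combines both under those assumptions; `weighted_knn_predict` and its `feat_w` parameter are hypothetical, and the paper's own weighting scheme is not preserved in this section.

```python
import numpy as np
from collections import defaultdict

def weighted_knn_predict(X_train, y_train, x, k=5, feat_w=None, eps=1e-9):
    """Distance-weighted kNN vote using a feature-weighted Euclidean distance."""
    if feat_w is None:
        feat_w = np.ones(X_train.shape[1])
    feat_w = np.asarray(feat_w, dtype=float)
    d = np.sqrt((((X_train - x) ** 2) * feat_w).sum(axis=1))   # feature-weighted distance
    nearest = np.argsort(d, kind="stable")[:k]
    score = defaultdict(float)
    for idx in nearest:
        score[y_train[idx]] += 1.0 / (d[idx] + eps)            # closer neighbors weigh more
    return max(score, key=score.get)

# One close class-1 point outvotes three distant class-0 points.
X = np.array([[0.0, 0.0], [3.0, 0.0], [3.2, 0.0], [3.4, 0.0]])
y = np.array([1, 0, 0, 0])
pred = weighted_knn_predict(X, y, np.array([0.5, 0.0]), k=4)
```

With an unweighted majority vote the same query would be labeled 0 (three votes to one); inverse-distance weighting makes the result less sensitive to the exact choice of k.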

4.2. PL-AOA Combined with kNN
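When a metaheuristic tunes kNN, each candidate solution must be decoded into classifier parameters and scored. A minimal sketch follows, assuming a hypothetical encoding in which the first coordinate selects an odd k and the remaining coordinates are feature weights in [0, 1], and assuming fitness = 1 − validation accuracy (to be minimized). `decode` and `knn_error` are illustrative names, and labels must be non-negative integers for `np.bincount`.

```python
import numpy as np

def decode(vec, k_max=15):
    """Map vec[0] in [0, 1] to an odd k in [1, k_max]; vec[1:] become feature weights in [0, 1]."""
    k = 1 + 2 * int(round(float(np.clip(vec[0], 0.0, 1.0)) * (k_max - 1) / 2))
    w = np.clip(np.asarray(vec[1:], dtype=float), 0.0, 1.0)
    return k, w

def knn_error(vec, X_tr, y_tr, X_val, y_val, k_max=15):
    """Fitness of one candidate: validation error of a feature-weighted kNN."""
    k, w = decode(vec, k_max)
    d = np.sqrt((((X_val[:, None, :] - X_tr[None, :, :]) ** 2) * w).sum(axis=2))
    nn = np.argsort(d, axis=1, kind="stable")[:, :k]           # k nearest per validation row
    preds = np.array([np.bincount(y_tr[row]).argmax() for row in nn])
    return 1.0 - np.mean(preds == y_val)

# Feature 0 separates the classes; feature 1 is noise.
X_tr = np.array([[0.0, 0.0], [0.2, 10.0], [5.0, 1.0], [5.2, 11.0]])
y_tr = np.array([0, 0, 1, 1])
X_val = np.array([[0.1, 10.0], [5.1, 0.0]])
y_val = np.array([0, 1])
err_good = knn_error(np.array([0.0, 1.0, 0.0]), X_tr, y_tr, X_val, y_val)   # keep feature 0
err_bad = knn_error(np.array([0.0, 0.0, 1.0]), X_tr, y_tr, X_val, y_val)    # keep feature 1
```

An optimizer then only needs to minimize `knn_error` over the box [0, 1]^(1+n_features); weighting down noisy features lowers the error, as the two example candidates show.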

4.3. WSN Intrusion Detection System

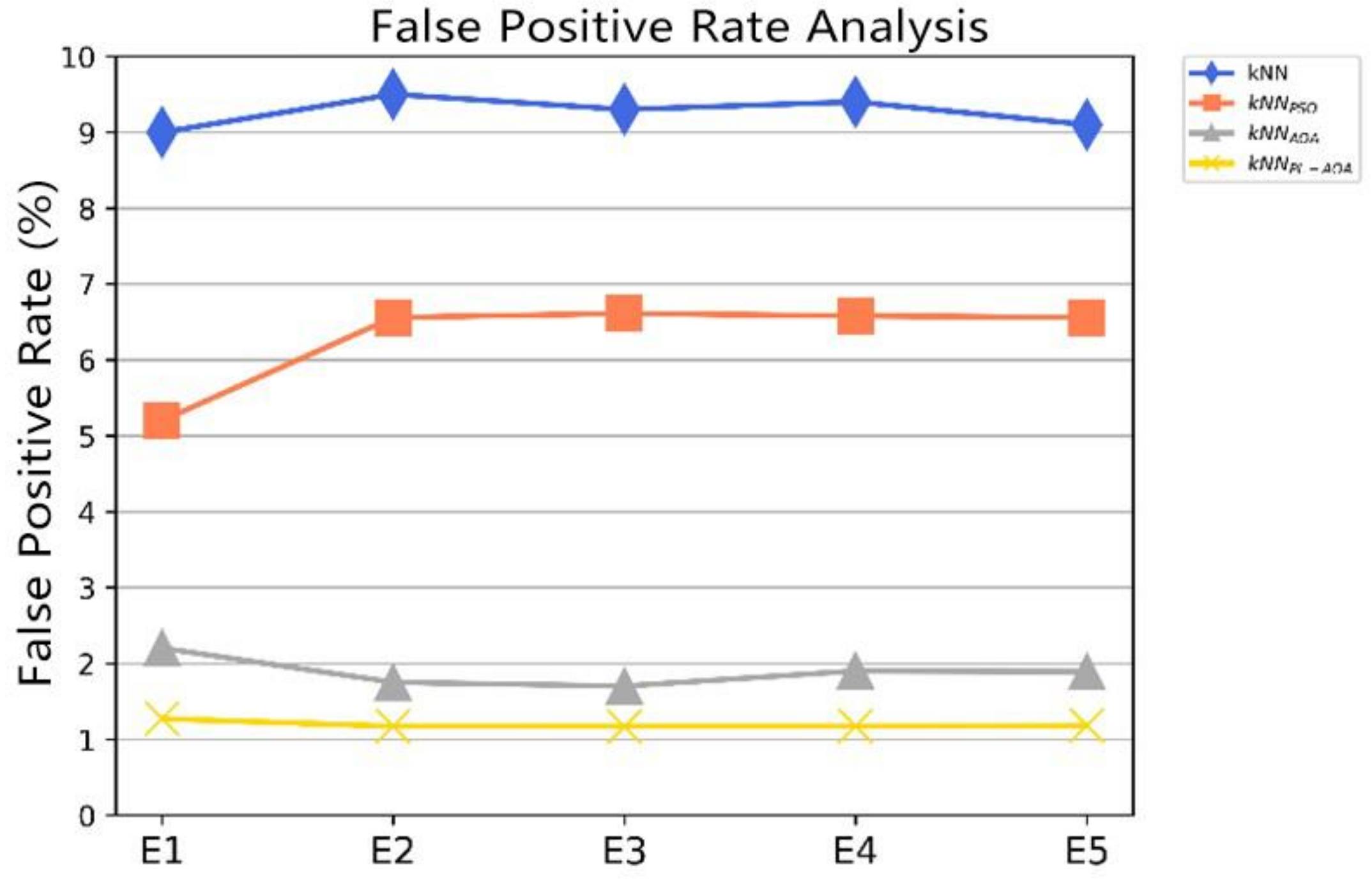

4.4. Performance Evaluation of Intrusion Detection System
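The results below are reported as accuracy (ACC), detection rate (DR), and false positive rate (FPR). With attacks as the positive class, the standard definitions are ACC = (TP + TN) / (TP + TN + FP + FN), DR = TP / (TP + FN), and FPR = FP / (FP + TN). The sketch below assumes a binary normal-versus-attack labeling; `ids_metrics` is a hypothetical helper, and the paper's exact formulas in this subsection are not preserved.

```python
def ids_metrics(y_true, y_pred, attack=1):
    """Return (ACC, DR, FPR), treating `attack` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == attack and p == attack for t, p in pairs)
    fn = sum(t == attack and p != attack for t, p in pairs)
    fp = sum(t != attack and p == attack for t, p in pairs)
    tn = sum(t != attack and p != attack for t, p in pairs)
    acc = (tp + tn) / len(pairs)
    dr = tp / (tp + fn) if tp + fn else 0.0    # share of attacks that were detected
    fpr = fp / (fp + tn) if fp + tn else 0.0   # share of normal traffic wrongly flagged
    return acc, dr, fpr

result = ids_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 1, 0])
# → (0.75, 0.6666666666666666, 0.2)
```

On a heavily imbalanced data set, ACC alone can look high even when many attacks are missed, which is why DR and FPR are reported alongside it.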

5. Simulation Experiment and Analysis

5.1. The Experimental Results and Conclusions of PL-AOA

5.2. The Experimental Results and Conclusions of WSN Intrusion Detection System

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dim | Optimum |
|---|---|
| 30 | 0 |
| 30 | 0 |
| 30 | 0 |
| 30 | 0 |
| 30 | 0 |
| 30 | 0 |
| 30 | 0 |
| 2 | 0 |
| 2 | 3 |
| 4 | |
| 4 | |
| 4 | |

| Function | Algorithm | Best Value | AVG | STD |
|---|---|---|---|---|
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |
| | PL-AOA | | | |
| | AOA | | | |
| | SCA | | | |
| | MVO | | | |

Comparison of the four algorithms (number of wins):

| Algorithm | Wins (Best Value) | Wins (AVG) | Wins (STD) |
|---|---|---|---|
| PL-AOA | 9 | 9 | 8 |
| AOA | 0 | 0 | 1 |
| SCA | 0 | 0 | 0 |
| MVO | 1 | 1 | 1 |

| Type of Data | Normal | Blackhole | Grayhole | Flooding | Scheduling Attacks |
|---|---|---|---|---|---|
| Number | 340,066 | 10,049 | 14,596 | 3,312 | 6,638 |

| Model | ACC | DR | FPR |
|---|---|---|---|
| | 0.91162 | 0.95291 | 0.51429 |
| | 0.92893 | 0.94226 | 0.035714 |
| | 0.97727 | 0.97861 | 0.045455 |
| | 0.99721 | 0.99171 | 0.068966 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, G.; Zhao, H.; Fan, F.; Liu, G.; Xu, Q.; Nazir, S. An Enhanced Intrusion Detection Model Based on Improved kNN in WSNs. Sensors 2022, 22, 1407. https://doi.org/10.3390/s22041407
