RANSAC for Robotic Applications: A Survey

Abstract

1. Introduction

2. RANSAC

Algorithm 1: RANSAC algorithm
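
As an illustration of the generic loop that Algorithm 1 names, a minimal sketch for fitting a 2D line might look as follows. The function names (`fit_line`, `ransac_line`), the choice of a line model, and the fixed iteration count are assumptions made for this example; they are not taken from the survey's listing.

```python
import random


def fit_line(p, q):
    """Return (a, b, c) for the line a*x + b*y + c = 0 through p and q.

    The coefficients are normalized so that |a*x + b*y + c| is the
    point-to-line distance. Returns None for a degenerate sample.
    """
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = (a * a + b * b) ** 0.5
    if norm == 0.0:
        return None  # both sample points coincide
    c = -(a * x1 + b * y1)
    return a / norm, b / norm, c / norm


def ransac_line(points, threshold, iterations, seed=0):
    """Hypothetical helper: keep the line with the largest consensus set."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        # 1. Draw a minimal sample (two points define a line).
        model = fit_line(*rng.sample(points, 2))
        if model is None:
            continue
        a, b, c = model
        # 2. Collect the points whose distance to the line is within the threshold.
        inliers = [(x, y) for x, y in points if abs(a * x + b * y + c) <= threshold]
        # 3. Keep the hypothesis supported by the most points.
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers
```

A common follow-up, omitted here for brevity, is to refit the model on the final consensus set with least squares.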

2.1. Matching Images

2.2. Finding 2D/3D Shapes

3. RANSAC Variants

3.1. Accuracy-Focused Variants

3.1.1. MSAC (M-Estimator SAC)

3.1.2. MLESAC (Maximum Likelihood SAC)

3.1.3. MAPSAC (Maximum A Posteriori Estimation SAC)

3.1.4. LO-RANSAC (Locally Optimized RANSAC)

1. Define a threshold and a number of optimization iterations I.

2. In each step of the RANSAC method, the samples are selected only from the data points that are consistent with the model created in the previous step.

3. Take all data points with error smaller than and compute new model parameters according to a linear algorithm. Reduce I by one and iterate until the threshold is .
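
The iterative refit in the last step can be sketched as below. This is only a sketch under stated assumptions: the names `theta` (final inlier threshold) and `k` (initial threshold multiplier), the linear shrinking schedule, the line model, and the use of vertical residuals are all choices made for this example, and the inner resampling from the previous inliers (step 2) is left out.

```python
def least_squares_line(points):
    """Ordinary least-squares fit y = m*x + q; plays the role of the linear algorithm."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    denom = n * sxx - sx * sx
    if n < 2 or denom == 0.0:
        return None  # not enough support, or all x values identical
    m = (n * sxy - sx * sy) / denom
    q = (sy - m * sx) / n
    return m, q


def local_optimization(points, model, theta, k=3.0, steps=4):
    """Refit on all points within a threshold that shrinks from k*theta to theta.

    `theta`, `k` and `steps` are hypothetical parameters of this sketch.
    """
    for i in range(steps):
        # Assumed linear schedule: k*theta at i = 0, theta at i = steps - 1.
        t = theta * (k - (k - 1.0) * i / max(steps - 1, 1))
        m, q = model
        # Vertical residual is used as the error measure in this sketch.
        support = [(x, y) for x, y in points if abs(y - (m * x + q)) <= t]
        refit = least_squares_line(support)
        if refit is None:
            break
        model = refit
    return model
```

Starting from a rough hypothesis returned by the sampling loop, `local_optimization(points, (m0, q0), theta)` returns the refined `(m, q)` pair.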

3.1.5. QDEGSAC (RANSAC for Quasi-Degenerate Data)

3.1.6. Graph-Cut RANSAC

3.2. Speed-Focused Variants

3.2.1. NAPSAC (N Adjacent Points SAmple Consensus)

3.2.2. Randomized RANSAC with $T_{d,d}$ Test

3.2.3. Guided-MLESAC

3.2.4. RANSAC with Bail-Out Test

3.2.5. Randomized RANSAC with Sequential Probability Ratio Test

3.2.6. PROSAC (Progressive Sample Consensus)

3.2.7. GASAC (Genetic Algorithm SAC)

3.2.8. One-Point RANSAC

3.2.9. GCSAC (Geometrical Constraint SAmple Consensus)

3.2.10. Latent RANSAC

3.3. Robustness-Focused Variants

3.3.1. AMLESAC

3.3.2. u-MLESAC

3.3.3. Recursive RANSAC

3.3.4. SC-RANSAC (Spatial Consistency RANSAC)

3.3.5. NG-RANSAC (Neural-Guided RANSAC)

3.3.6. LP-RANSAC (Locality-Preserving RANSAC)

3.4. Optimality-Focused Variants

3.4.1. Optimal Randomized RANSAC

3.4.2. Optimal RANSAC

4. Applications

4.1. Image Matching

4.2. Shape Detection

4.3. Hardware Acceleration

5. Software

5.1. OpenCV

5.2. Point Cloud Library

5.3. Other Software

6. Discussion and Conclusions

1. RANSAC is a good alternative to deep learning approaches when the model whose parameters we want to estimate is known in advance, as is the case, e.g., for shape matching of simple objects in many robotic applications.

2. Theoretical analysis of the probability of estimating the model parameters is possible, and this can lead to optimal use of resources in embedded devices or real-time applications.

3. Open-source implementations of RANSAC variants are available for the robotics community.

4. Research on parallelization and on hybrid approaches that combine RANSAC with deep learning methods could be promising.
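
The kind of theoretical analysis mentioned in the second point can be illustrated with the standard estimate of how many random samples are needed: if a fraction w of the data are inliers and each minimal sample has s points, then N samples contain at least one all-inlier sample with probability 1 - (1 - w^s)^N. A minimal sketch that solves this for N, with a hypothetical function name and parameter names chosen for this example:

```python
import math


def ransac_iterations(success_prob, inlier_ratio, sample_size):
    """Smallest N such that 1 - (1 - w**s)**N >= p.

    p = success_prob, w = inlier_ratio, s = sample_size. Assumes samples
    are drawn independently and that w is known or estimated.
    """
    if not 0.0 < success_prob < 1.0:
        raise ValueError("success_prob must be in (0, 1)")
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    if sample_size < 1:
        raise ValueError("sample_size must be at least 1")
    good_sample = inlier_ratio ** sample_size
    if good_sample == 1.0:
        return 1  # every sample is outlier-free
    return math.ceil(math.log(1.0 - success_prob) / math.log(1.0 - good_sample))
```

Because the count grows quickly with the sample size and with the outlier ratio, such a bound gives a rough way to budget computation on an embedded or real-time platform before running the estimator.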

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

- Choi, S.; Kim, T.; Yu, W. Performance evaluation of RANSAC family. In Proceedings of the British Machine Vision Conference, London, UK, 7–10 September 2009; pp. 81.1–81.12.

- Rousseeuw, P.J. Least median of squares regression. J. Am. Stat. Assoc. 1984, 79, 871–880.

- Subbarao, R.; Meer, P. Subspace estimation using projection based M-estimators over Grassmann manifolds. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 301–312.

- Zeineldin, R.A.; El-Fishawy, N.A. A survey of RANSAC enhancements for plane detection in 3D point clouds. Menoufia J. Electron. Eng. Res. 2017, 26, 519–537.

- Strandmark, P.; Gu, I.Y. Joint random sample consensus and multiple motion models for robust video tracking. In Proceedings of the Scandinavian Conference on Image Analysis, Oslo, Norway, 15–18 June 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 450–459.

- Vedaldi, A.; Jin, H.; Favaro, P.; Soatto, S. KALMANSAC: Robust filtering by consensus. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 1, pp. 633–640.

- Derpanis, K.G. Overview of the RANSAC Algorithm. Image Rochester NY 2010, 4, 2–3.

- Hoseinnezhad, R.; Bab-Hadiashar, A. An M-estimator for high breakdown robust estimation in computer vision. Comput. Vis. Image Underst. 2011, 115, 1145–1156.

- Shapira, G.; Hassner, T. Fast and accurate line detection with GPU-based least median of squares. J. Real-Time Image Process. 2020, 17, 839–851.

- Korman, S.; Litman, R. Latent RANSAC. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6693–6702.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Torr, P.H.; Zisserman, A. Robust parameterization and computation of the trifocal tensor. Image Vis. Comput. 1997, 15, 591–605.

- Torr, P.H.; Zisserman, A. MLESAC: A new robust estimator with application to estimating image geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Torr, P.H.S. Bayesian model estimation and selection for epipolar geometry and generic manifold fitting. Int. J. Comput. Vis. 2002, 50, 35–61.

- Chum, O.; Matas, J.; Kittler, J. Locally optimized RANSAC. In Proceedings of the Joint Pattern Recognition Symposium, Magdeburg, Germany, 10–12 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 236–243.

- Frahm, J.M.; Pollefeys, M. RANSAC for (quasi-) degenerate data (QDEGSAC). In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 1, pp. 453–460.

- Barath, D.; Matas, J. Graph-cut RANSAC. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6733–6741.

- Myatt, D.R.; Torr, P.H.; Nasuto, S.J.; Bishop, J.M. NAPSAC: High noise, high dimensional robust estimation-it’s in the bag. In Proceedings of the British Machine Vision Conference (BMVC), Cardiff, UK, 2–5 September 2002; Volume 2, p. 3.

- Matas, J.; Chum, O. Randomized RANSAC with Td, d test. Image Vis. Comput. 2004, 22, 837–842.

- Tordoff, B.J.; Murray, D.W. Guided-MLESAC: Faster image transform estimation by using matching priors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1523–1535.

- Capel, D.P. An Effective Bail-out Test for RANSAC Consensus Scoring. In Proceedings of the British Machine Vision Conference (BMVC), Oxford, UK, 5–8 September 2005; Volume 1, p. 2.

- Matas, J.; Chum, O. Randomized RANSAC with sequential probability ratio test. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 2, pp. 1727–1732.

- Chum, O.; Matas, J. Matching with PROSAC-progressive sample consensus. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 220–226.

- Rodehorst, V.; Hellwich, O. Genetic algorithm sample consensus (GASAC)-a parallel strategy for robust parameter estimation. In Proceedings of the 2006 IEEE Conference on Computer Vision and Pattern Recognition Workshop (CVPRW’06), New York, NY, USA, 17–22 June 2006; p. 103.

- Civera, J.; Grasa, O.G.; Davison, A.J.; Montiel, J.M. 1-Point RANSAC for extended Kalman filtering: Application to real-time structure from motion and visual odometry. J. Field Robot. 2010, 27, 609–631.

- Le, V.H.; Vu, H.; Nguyen, T.T.; Le, T.L.; Tran, T.H. Acquiring qualified samples for RANSAC using geometrical constraints. Pattern Recognit. Lett. 2018, 102, 58–66.

- Konouchine, A.; Gaganov, V.; Veznevets, V. AMLESAC: A new maximum likelihood robust estimator. In Proceedings of the GraphiCon, Novosibirsk, Russia, 20–24 June 2005; Volume 5, pp. 93–100.

- Choi, S.; Kim, J.H. Robust regression to varying data distribution and its application to landmark-based localization. In Proceedings of the 2008 IEEE International Conference on Systems, Man and Cybernetics, Singapore, 12–15 October 2008; pp. 3465–3470.

- Niedfeldt, P.C.; Beard, R.W. Recursive RANSAC: Multiple signal estimation with outliers. IFAC Proc. Vol. 2013, 46, 430–435.

- Fotouhi, M.; Hekmatian, H.; Kashani-Nezhad, M.A.; Kasaei, S. SC-RANSAC: Spatial consistency on RANSAC. Multimed. Tools Appl. 2019, 78, 9429–9461.

- Brachmann, E.; Rother, C. Neural-guided RANSAC: Learning where to sample model hypotheses. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4322–4331.

- Wang, G.; Sun, X.; Shang, Y.; Wang, Z.; Shi, Z.; Yu, Q. Two-view geometry estimation using RANSAC with locality preserving constraint. IEEE Access 2020, 8, 7267–7279.

- Chum, O.; Matas, J. Optimal randomized RANSAC. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1472–1482.

- Hast, A.; Nysjö, J.; Marchetti, A. Optimal RANSAC-towards a repeatable algorithm for finding the optimal set. J. WSCG 2013, 21, 21–30.

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–723.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Rissanen, J. Modeling by shortest data description. Automatica 1978, 14, 465–471.

- Tordoff, B.; Murray, D.W. Guided sampling and consensus for motion estimation. In Proceedings of the European Conference on Computer Vision, Copenhagen, Denmark, 28–31 May 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 82–96.

- Boykov, Y.; Veksler, O. Graph cuts in vision and graphics: Theories and applications. In Handbook of Mathematical Models in Computer Vision; Springer: Boston, MA, USA, 2006; pp. 79–96.

- Barath, D.; Valasek, G. Space-Partitioning RANSAC. arXiv 2021, arXiv:2111.12385.

- Barath, D.; Matas, J. Graph-cut RANSAC: Local optimization on spatially coherent structures. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4961–4974.

- Raguram, R.; Chum, O.; Pollefeys, M.; Matas, J.; Frahm, J.M. USAC: A universal framework for random sample consensus. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2022–2038.

- Barath, D.; Noskova, J.; Ivashechkin, M.; Matas, J. MAGSAC++, a fast, reliable and accurate robust estimator. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1304–1312.

- Wald, A. Sequential Analysis; Courier Corporation: New York, NY, USA, 1947.

- Ribeiro, M.I. Kalman and extended Kalman filters: Concept, derivation and properties. Inst. Syst. Robot. 2004, 43, 46.

- Xu, L.; Oja, E.; Kultanen, P. A new curve detection method: Randomized Hough transform (RHT). Pattern Recognit. Lett. 1990, 11, 331–338.

- Aiger, D.; Kokiopoulou, E.; Rivlin, E. Random grids: Fast approximate nearest neighbors and range searching for image search. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 3471–3478.

- Torr, P.; Zisserman, A. Robust computation and parametrization of multiple view relations. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271), Bombay, India, 7 January 1998; pp. 727–732.

- Moon, T.K. The expectation-maximization algorithm. IEEE Signal Process. Mag. 1996, 13, 47–60.

- Engel, Y.; Mannor, S.; Meir, R. The kernel recursive least-squares algorithm. IEEE Trans. Signal Process. 2004, 52, 2275–2285.

- Illingworth, J.; Kittler, J. A survey of the Hough transform. Comput. Vis. Graph. Image Process. 1988, 44, 87–116.

- Shan, Y.; Matei, B.; Sawhney, H.S.; Kumar, R.; Huber, D.; Hebert, M. Linear model hashing and batch RANSAC for rapid and accurate object recognition. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004. CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; Volume 2, p. II.

- Ma, J.; Zhao, J.; Jiang, J.; Zhou, H.; Guo, X. Locality preserving matching. Int. J. Comput. Vis. 2019, 127, 512–531.

- Macario Barros, A.; Michel, M.; Moline, Y.; Corre, G.; Carrel, F. A comprehensive survey of visual SLAM algorithms. Robotics 2022, 11, 24.

- Bahraini, M.S.; Bozorg, M.; Rad, A.B. SLAM in dynamic environments via ML-RANSAC. Mechatronics 2018, 49, 105–118.

- Bahraini, M.S.; Rad, A.B.; Bozorg, M. SLAM in dynamic environments: A deep learning approach for moving object tracking using ML-RANSAC algorithm. Sensors 2019, 19, 3699.

- Zhang, D.; Zhu, J.; Wang, F.; Hu, X.; Ye, X. GMS-RANSAC: A Fast Algorithm for Removing Mismatches Based on ORB-SLAM2. Symmetry 2022, 14, 849.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Bian, J.; Lin, W.Y.; Matsushita, Y.; Yeung, S.K.; Nguyen, T.D.; Cheng, M.M. GMS: Grid-based motion statistics for fast, ultra-robust feature correspondence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4181–4190.

- Kroeger, T.; Dai, D.; Van Gool, L. Joint vanishing point extraction and tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2449–2457.

- Wu, J.; Zhang, L.; Liu, Y.; Chen, K. Real-time vanishing point detector integrating under-parameterized RANSAC and Hough transform. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3732–3741.

- Shen, X.; Darmon, F.; Efros, A.A.; Aubry, M. RANSAC-flow: Generic two-stage image alignment. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 618–637.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A benchmark and evaluation of handcrafted and learned local descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 5173–5182.

- Thomee, B.; Shamma, D.A.; Friedland, G.; Elizalde, B.; Ni, K.; Poland, D.; Borth, D.; Li, L.J. YFCC100M: The new data in multimedia research. Commun. ACM 2016, 59, 64–73.

- Merlet, J.P. Parallel Robots; Springer Science & Business Media: Dordrecht, The Netherlands, 2005; Volume 128.

- Gao, G.Q.; Zhang, Q.; Zhang, S. Pose detection of parallel robot based on improved RANSAC algorithm. Meas. Control 2019, 52, 855–868.

- Zhao, Q.; Zhao, D.; Wei, H. Harris-SIFT algorithm and its application in binocular stereo vision. J. Univ. Electron. Sci. Technol. China Pap. 2010, 4, 2–16.

- Li, X.; Ren, C.; Zhang, T.; Zhu, Z.; Zhang, Z. Unmanned aerial vehicle image matching based on improved RANSAC algorithm and SURF algorithm. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 42, 67–70.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Zheng, J.; Peng, W.; Wang, Y.; Zhai, B. Accelerated RANSAC for accurate image registration in aerial video surveillance. IEEE Access 2021, 9, 36775–36790.

- Wang, Y.; Zheng, J.; Xu, Q.Z.; Li, B.; Hu, H.M. An improved RANSAC based on the scale variation homogeneity. J. Vis. Commun. Image Represent. 2016, 40, 751–764.

- Petersen, M.; Samuelson, C.; Beard, R.W. Target Tracking and Following from a Multirotor UAV. Curr. Robot. Rep. 2021, 2, 285–295.

- Salehi, B.; Jarahizadeh, S. Improving the UAV-derived DSM by introducing a modified RANSAC algorithm. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 147–152.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Cherian, A.K.; Poovammal, E. Image Augmentation Using Hybrid RANSAC Algorithm. Webology 2021, 18, 237–254.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ali, W.; Abdelkarim, S.; Zidan, M.; Zahran, M.; El Sallab, A. YOLO3D: End-to-end real-time 3d oriented object bounding box detection from lidar point cloud. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops (Part III), Munich, Germany, 8–14 September 2018; pp. 718–728.

- Takahashi, M.; Ji, Y.; Umeda, K.; Moro, A. Expandable YOLO: 3D object detection from RGB-D images. In Proceedings of the 2020 21st IEEE International Conference on Research and Education in Mechatronics (REM), Cracow, Poland, 9–11 December 2020; pp. 1–5.

- Simon, M.; Milz, S.; Amende, K.; Gross, H.M. Complex-YOLO: An Euler-region-proposal for real-time 3D object detection on point clouds. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops (Part I), Munich, Germany, 8–14 September 2018; pp. 197–209.

- Han, X.F.; Jin, J.S.; Xie, J.; Wang, M.J.; Jiang, W. A comprehensive review of 3D point cloud descriptors. arXiv 2018, arXiv:1802.02297.

- Chen, J.; Fang, Y.; Cho, Y.K. Performance evaluation of 3D descriptors for object recognition in construction applications. Autom. Constr. 2018, 86, 44–52.

- Kasaei, S.H.; Ghorbani, M.; Schilperoort, J.; van der Rest, W. Investigating the importance of shape features, color constancy, color spaces, and similarity measures in open-ended 3D object recognition. Intell. Serv. Robot. 2021, 14, 329–344.

- Liang, M.; Yang, B.; Wang, S.; Urtasun, R. Deep continuous fusion for multi-sensor 3D object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 641–656.

- Qi, S.; Ning, X.; Yang, G.; Zhang, L.; Long, P.; Cai, W.; Li, W. Review of multi-view 3D object recognition methods based on deep learning. Displays 2021, 69, 102053.

- Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Lu, Y.; Zhou, D.; Le, Q.V.; et al. Deepfusion: Lidar-camera deep fusion for multi-modal 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 17182–17191.

- Giulietti, N.; Allevi, G.; Castellini, P.; Garinei, A.; Martarelli, M. Rivers’ Water Level Assessment Using UAV Photogrammetry and RANSAC Method and the Analysis of Sensitivity to Uncertainty Sources. Sensors 2022, 22, 5319.

- Tittmann, P.; Shafii, S.; Hartsough, B.; Hamann, B. Tree detection and delineation from LiDAR point clouds using RANSAC. In Proceedings of the SilviLaser, Hobart, TAS, Australia, 16–20 October 2011; pp. 1–23.

- Hardy, R.L. Multiquadric equations of topography and other irregular surfaces. J. Geophys. Res. 1971, 76, 1905–1915.

- Gönültaş, F.; Atik, M.E.; Duran, Z. Extraction of roof planes from different point clouds using RANSAC algorithm. Int. J. Environ. Geoinform. 2020, 7, 165–171.

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

- Borkar, A.; Hayes, M.; Smith, M.T. Robust lane detection and tracking with RANSAC and Kalman filter. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 3261–3264.

- Lopez, A.; Canero, C.; Serrat, J.; Saludes, J.; Lumbreras, F.; Graf, T. Detection of lane markings based on ridgeness and RANSAC. In Proceedings of the 2005 IEEE Intelligent Transportation Systems, Vienna, Austria, 16 September 2005; pp. 254–259.

- López, A.; Serrat, J.; Canero, C.; Lumbreras, F.; Graf, T. Robust lane markings detection and road geometry computation. Int. J. Automot. Technol. 2010, 11, 395–407.

- Tan, H.; Zhou, Y.; Zhu, Y.; Yao, D.; Wang, J. Improved river flow and random sample consensus for curve lane detection. Adv. Mech. Eng. 2015, 7, 1687814015593866.

- Xing, Y.; Lv, C.; Chen, L.; Wang, H.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Advances in vision-based lane detection: Algorithms, integration, assessment, and perspectives on ACP-based parallel vision. IEEE/CAA J. Autom. Sin. 2018, 5, 645–661.

- Yang, K.; Yu, L.; Xia, M.; Xu, T.; Li, W. Nonlinear RANSAC with crossline correction: An algorithm for vision-based curved cable detection system. Opt. Lasers Eng. 2021, 141, 106417.

- Ding, L.; Goshtasby, A. On the Canny edge detector. Pattern Recognit. 2001, 34, 721–725.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Gallo, O.; Manduchi, R.; Rafii, A. CC-RANSAC: Fitting planes in the presence of multiple surfaces in range data. Pattern Recognit. Lett. 2011, 32, 403–410.

- Qian, X.; Ye, C. NCC-RANSAC: A fast plane extraction method for 3-D range data segmentation. IEEE Trans. Cybern. 2014, 44, 2771–2783.

- Choi, S.; Park, J.; Byun, J.; Yu, W. Robust ground plane detection from 3D point clouds. In Proceedings of the 2014 14th IEEE International Conference on Control, Automation and Systems (ICCAS 2014), Gyeonggi-do, Republic of Korea, 22–25 October 2014; pp. 1076–1081.

- Yue, W.; Lu, J.; Zhou, W.; Miao, Y. A new plane segmentation method of point cloud based on Mean Shift and RANSAC. In Proceedings of the 2018 IEEE Chinese Control And Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 1658–1663.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

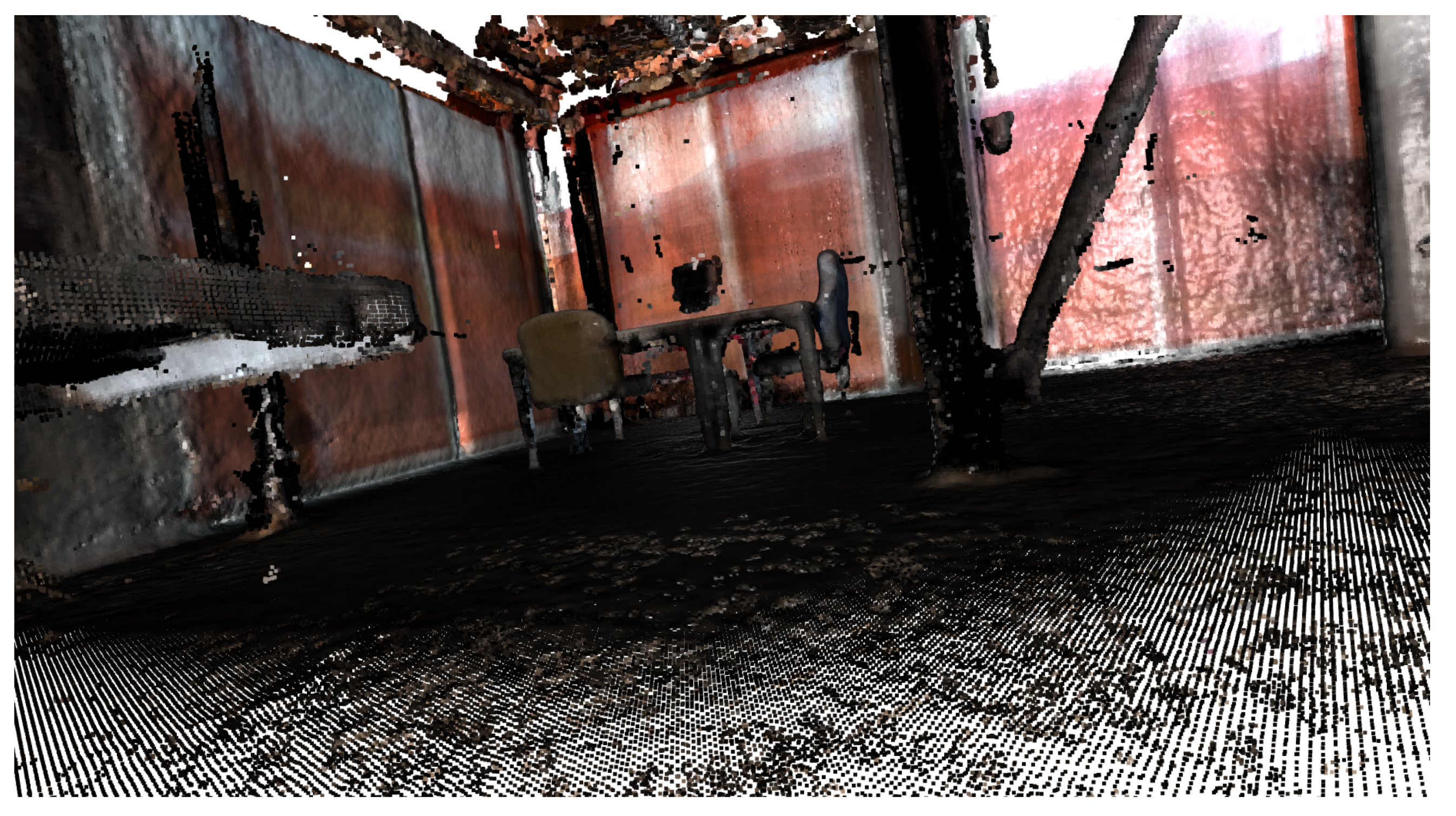

- Martínez-Otzeta, J.M.; Mendialdua, I.; Rodríguez-Moreno, I.; Rodriguez, I.R.; Sierra, B. An Open-source Library for Processing of 3D Data from Indoor Scenes. In Proceedings of the 11th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2022), Online, 3–5 February 2022; pp. 610–615.

- Wu, Y.; Li, G.; Xian, C.; Ding, X.; Xiong, Y. Extracting POP: Pairwise orthogonal planes from point cloud using RANSAC. Comput. Graph. 2021, 94, 43–51.

- Moré, J.J. The Levenberg-Marquardt algorithm: Implementation and theory. In Numerical Analysis; Springer: Berlin/Heidelberg, Germany, 1978; pp. 105–116.

- Armeni, I.; Sax, S.; Zamir, A.R.; Savarese, S. Joint 2D-3D-semantic data for indoor scene understanding. arXiv 2017, arXiv:1702.01105.

- Xiong, X.; Adan, A.; Akinci, B.; Huber, D. Automatic creation of semantically rich 3D building models from laser scanner data. Autom. Constr. 2013, 31, 325–337.

- Capocchiano, F.; Ravanelli, R. An original algorithm for BIM generation from indoor survey point clouds. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Enschede, The Netherlands, 10–14 June 2019; pp. 769–776.

- Khoshelham, K.; Tran, H.; Díaz-Vilariño, L.; Peter, M.; Kang, Z.; Acharya, D. An evaluation framework for benchmarking indoor modelling methods. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 297–302.

- Wang, Y.E.; Wei, G.Y.; Brooks, D. Benchmarking TPU, GPU, and CPU platforms for deep learning. arXiv 2019, arXiv:1907.10701.

- Kuon, I.; Tessier, R.; Rose, J. FPGA architecture: Survey and challenges. Found. Trends Electron. Des. Autom. 2008, 2, 135–253.

- Dung, L.R.; Huang, C.M.; Wu, Y.Y. Implementation of RANSAC algorithm for feature-based image registration. J. Comput. Commun. 2013, 1, 46–50.

- Gentleman, W.M.; Kung, H. Matrix triangularization by systolic arrays. In Proceedings of the Real-Time Signal Processing IV, Arlington, VA, USA, 4–7 May 1982; SPIE: Philadelphia, PA, USA, 1982; Volume 298, pp. 19–26.

- Tang, J.W.; Shaikh-Husin, N.; Sheikh, U.U. FPGA implementation of RANSAC algorithm for real-time image geometry estimation. In Proceedings of the 2013 IEEE Student Conference on Research and Development, Putrajaya, Malaysia, 16–17 December 2013; pp. 290–294.

- Dantsker, O.D.; Caccamo, M.; Vahora, M.; Mancuso, R. Flight & ground testing data set for an unmanned aircraft: Great planes avistar elite. In Proceedings of the AIAA Scitech 2020 Forum, Orlando, FL, USA, 6–10 January 2020; p. 780.

- Vourvoulakis, J.; Lygouras, J.; Kalomiros, J. Acceleration of RANSAC algorithm for images with affine transformation. In Proceedings of the 2016 IEEE International Conference on Imaging Systems and Techniques (IST), Chania, Greece, 4–6 October 2016; pp. 60–65.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Vourvoulakis, J.; Kalomiros, J.; Lygouras, J. FPGA-based architecture of a real-time SIFT matcher and RANSAC algorithm for robotic vision applications. Multimed. Tools Appl. 2018, 77, 9393–9415.

- Hidalgo-Paniagua, A.; Vega-Rodríguez, M.A.; Pavón, N.; Ferruz, J. A comparative study of parallel RANSAC implementations in 3D space. Int. J. Parallel Program. 2015, 43, 703–720.

- Barath, D.; Ivashechkin, M.; Matas, J. Progressive NAPSAC: Sampling from gradually growing neighborhoods. arXiv 2019, arXiv:1906.02295.

- Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 1–4.

- Zhou, Q.Y.; Park, J.; Koltun, V. Open3D: A modern library for 3D data processing. arXiv 2018, arXiv:1801.09847.

- Mariga, L. pyRANSAC-3D. 2022. Available online: https://github.com/leomariga/pyRANSAC-3D (accessed on 23 November 2022).

- Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools Prof. Program. 2000, 25, 120–123.

| Focus on | RANSAC Variant |
|---|---|
| Accuracy | MSAC (M-estimator SAC) [15] |
| | MLESAC (Maximum Likelihood SAC) [16] |
| | MAPSAC (Maximum A Posteriori Estimation SAC) [17] |
| | LO-RANSAC (Locally Optimized RANSAC) [18] |
| | QDEGSAC (RANSAC for Quasi-degenerate Data) [19] |
| | Graph-Cut RANSAC [20] |
| Speed | NAPSAC (N Adjacent Points SAmple Consensus) [21] |
| | Randomized RANSAC with $T_{d,d}$ test [22] |
| | Guided-MLESAC [23] |
| | RANSAC with bail-out test [24] |
| | Randomized RANSAC with Sequential Probability Ratio Test [25] |
| | PROSAC (Progressive Sample Consensus) [26] |
| | GASAC (Genetic Algorithm SAC) [27] |
| | 1-point RANSAC [28] |
| | GCSAC (Geometrical Constraint SAmple Consensus) [29] |
| | Latent RANSAC [13] |
| Robustness | AMLESAC [30] |
| | u-MLESAC [31] |
| | Recursive RANSAC [32] |
| | SC-RANSAC (Spatial Consistency RANSAC) [33] |
| | NG-RANSAC (Neural-Guided RANSAC) [34] |
| | LP-RANSAC (Locality-preserving RANSAC) [35] |
| Optimality | Optimal Randomized RANSAC [36] |
| | Optimal RANSAC [37] |

| API Name | Method |
|---|---|
| SAC_RANSAC | RANdom SAmple Consensus |
| SAC_LMEDS | Least Median of Squares |
| SAC_MSAC | M-Estimator SAmple Consensus |
| SAC_RRANSAC | Randomized RANSAC |
| SAC_MLESAC | Maximum LikeLihood Estimation SAmple Consensus |
| SAC_PROSAC | PROgressive SAmple Consensus |

| API Name | Model | Coefficients | Constraints |
|---|---|---|---|
| SACMODEL_PLANE | Plane | 4 | No |
| SACMODEL_LINE | Line | 6 | No |
| SACMODEL_CIRCLE2D | Circle | 3 | No |
| SACMODEL_CIRCLE3D | Circle | 7 | No |
| SACMODEL_SPHERE | Sphere | 4 | No |
| SACMODEL_CYLINDER | Cylinder | 7 | No |
| SACMODEL_CONE | Cone | 7 | No |
| SACMODEL_PARALLEL_LINE | Line | 6 | Yes |
| SACMODEL_PERPENDICULAR_PLANE | Plane | 4 | Yes |
| SACMODEL_NORMAL_PLANE | Plane | 4 | Yes |
| SACMODEL_NORMAL_SPHERE | Sphere | 4 | Yes |
| SACMODEL_PARALLEL_PLANE | Plane | 4 | Yes |
| SACMODEL_NORMAL_PARALLEL_PLANE | Plane | 4 | Yes |
| SACMODEL_STICK | Line | 6 | Yes |

| RANSAC Variant | Language | Code |
|---|---|---|
| GraphCut-RANSAC [44] | C++ | https://github.com/danini/graph-cut-ransac (accessed on 23 November 2022) |
| GCSAC [29] | C++ | http://mica.edu.vn/perso/Le-Van-Hung/GCSAC/index.html (accessed on 23 November 2022) |
| Latent RANSAC [13] | C++ | https://github.com/rlit/LatentRANSAC (accessed on 23 November 2022) |
| Optimal RANSAC [37] | Matlab | https://www.cb.uu.se/~aht/code.html (accessed on 23 November 2022) |
| RANSAC-Flow [65] | Python (PyTorch) | https://github.com/XiSHEN0220/RANSAC-Flow (accessed on 23 November 2022) |

| Library | Language | URL |
|---|---|---|
| OpenCV [130] | C++, Python | https://opencv.org/ (accessed on 23 November 2022) |
| PCL [127] | C++ | https://pointclouds.org/ (accessed on 23 November 2022) |
| Open3D [128] | C++, Python | http://www.open3d.org/ (accessed on 23 November 2022) |
| pyRANSAC-3D [129] | Python | https://github.com/leomariga/pyRANSAC-3D/ (accessed on 23 November 2022) |
| indoor3D [109] | Python | https://github.com/rsait/indoor3d (accessed on 23 November 2022) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Martínez-Otzeta, J.M.; Rodríguez-Moreno, I.; Mendialdua, I.; Sierra, B. RANSAC for Robotic Applications: A Survey. Sensors 2023, 23, 327. https://doi.org/10.3390/s23010327

Chicago/Turabian Style: Martínez-Otzeta, José María, Itsaso Rodríguez-Moreno, Iñigo Mendialdua, and Basilio Sierra. 2023. "RANSAC for Robotic Applications: A Survey" Sensors 23, no. 1: 327. https://doi.org/10.3390/s23010327