State-of-the-Art Deep Learning Methods for Objects Detection in Remote Sensing Satellite Images

Abstract

:1. Introduction

1.1. Complex Features of Remote Sensing Images

- Varying sizes, structures and resolutions: Objects in remotely sensed imagery are characterized by varying sizes and resolutions, high inter-class similarity, and intra-class diversity [14]. This presents a challenge for object detection methods, as they must accurately identify objects of different sizes and shapes within the image. Additionally, the high inter-class similarity and intra-class diversity make it difficult to distinguish between different object classes, further complicating the object detection process. Furthermore, a high degree of similarity may occur among objects in images that are intensely similar [11], making extracting similar features confusing to detectors, hence incorrect outcomes. Recent studies have focused on developing object detection methods specifically for satellite images. These include the use of multi-scale object detection techniques and deep learning-based methods that can handle complex object characteristics [14]. In this study, we established the effects of training models on a novel dataset created from environmental perception data characterized by diverse features and captured in multiple scenes and on the performance of such models.

- Challenging background: The background of an image can present challenges for object detection methods, as it causes difficulty in distinguishing between objects and their surroundings. This is particularly true in remotely sensed satellite imagery, where the background can be highly variable and contain similar texture and color patterns to the objects of interest. Various methods have been adopted to handle complex image backgrounds. These include the use of contextual information such as incorporating contextual features into the object detection process and the use of object proposal methods to pre-select potential object regions within the image. However, these methods are yet to achieve optimum performance [15,16].

- Limited labeled dataset: Instance annotation refers to the process of labeling individual objects within an image with their respective class labels and bounding boxes. However, this process can be complex and time-consuming, particularly in cases where there are large numbers of objects within the image or when the objects have complex shapes or occlusions [17]. This can lead to errors in the annotation process, which can negatively impact the accuracy of the object detection method. Inaccurate sample annotations were therefore established in this study as a major factor that increases the complexity of detection implementation. Applications such as urban monitoring, disaster prediction, and general environmental monitoring require an accurate and effective object detection approach.

1.2. Our Approach

- Design of a novel dataset from environmental perception data characterized with diverse features and captured in multiple scenes;

- Review of related works;

- Modeling R-CNN and YOLO-based algorithms on the newly created dataset;

- Conducting experiments to establish the object detection performance of the state-of-the-art object detection algorithms.

2. Review of Related Works

3. Methods

3.1. Methods Overview

- Dataset creation and preprocessing;

- Model architectures;

- Model training—transfer learning.

3.2. Dataset Creation

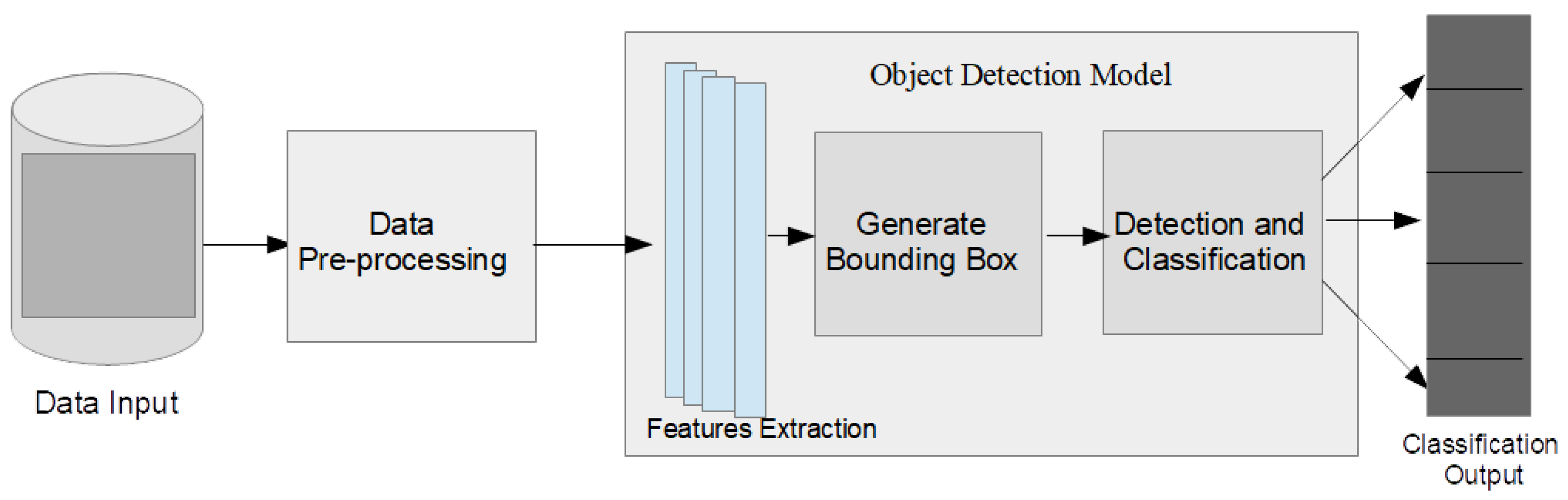

3.3. Generalized Objects Detection Systems Architecture

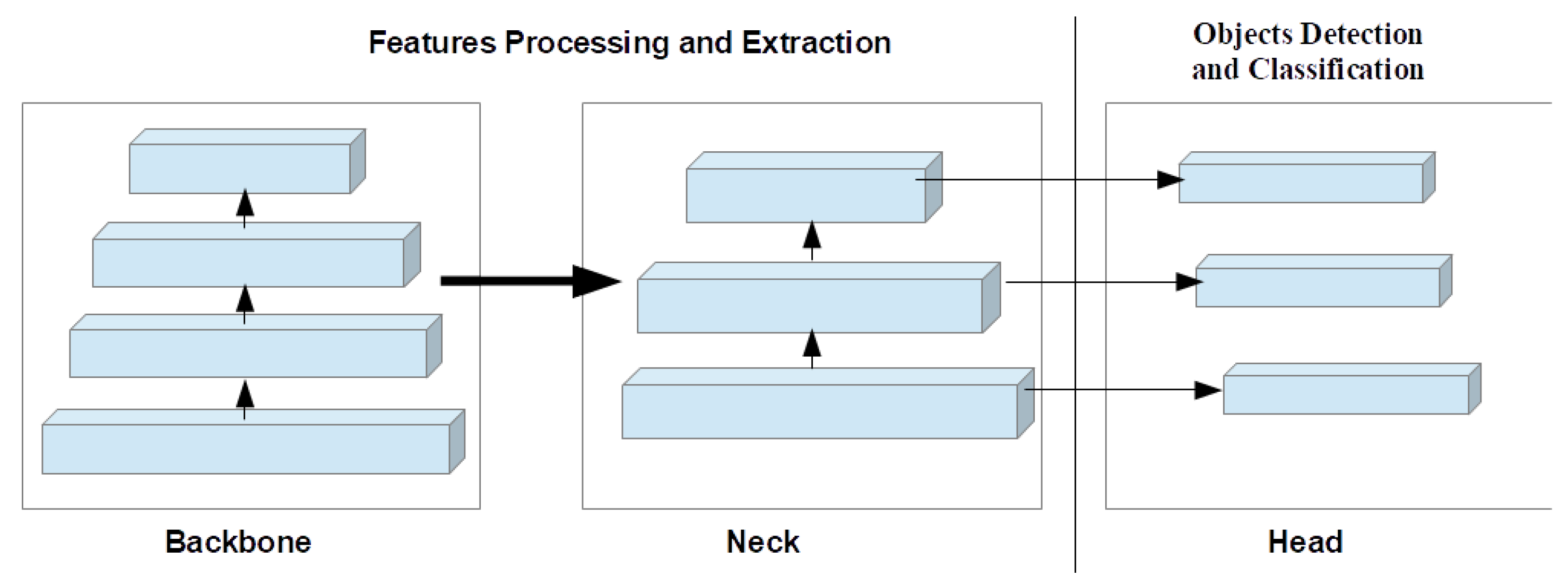

- Head: The head module is the final component of the architecture and is responsible for predicting the bounding boxes, class probabilities, and objectness scores for each object in the input image [42]. The head module takes the feature maps generated by the neck module and applies a set of convolutional filters to predict the locations and sizes of bounding boxes for each object. The head is composed of several fully connected layers that perform regression and classification tasks. The head section, for example, predicts the location and size of objects using anchor boxes and applies a softmax activation function to output class probabilities. The head module can also predict the class probabilities for each bounding box, indicating the object class corresponding to the bounding box. The head module predicts the objectness score for each bounding box, indicating the likelihood that the bounding box contains an object [43,44,45]. The output of the head module is always a set of bounding boxes, each with a corresponding class and objectness score.

- Backbone: The backbone is the core network architecture that processes the input image and extracts high-level features that are useful for detecting objects [46]. Typically, the backbone consists of several layers of CNNs, such as convolutional and pooling layers and activation functions, which are stacked on top of each other. These layers perform operations such as convolution, pooling, and activation to gradually reduce the spatial resolution of the input image while increasing its depth. The YOLO architecture [47], for example, uses popular backbones such as Darknet-53 or ResNet as the backbone. The output of the backbone is a set of feature maps that capture different levels of abstraction and spatial scale.

- Neck: The neck module connects the feature pyramids to the head of the architecture [48]. Feature pyramids are multi-scale feature maps that are generated by the backbone network [49]. These feature maps contain information about objects at different scales and resolutions, allowing the architecture to detect objects of different sizes. They are created by applying a set of convolutional filters and spatial pooling to the output of the backbone at different scales. The feature pyramids are used to detect objects of different sizes and scales. The neck region is composed of a series of convolutional and pooling layers, which are used to upsample an extracted features map from the backbone region. A common example of the neck region is the feature pyramids network [50].

3.4. Network Architectures

3.5. Model Network Training—Transfer Learning

4. Results and Discussion

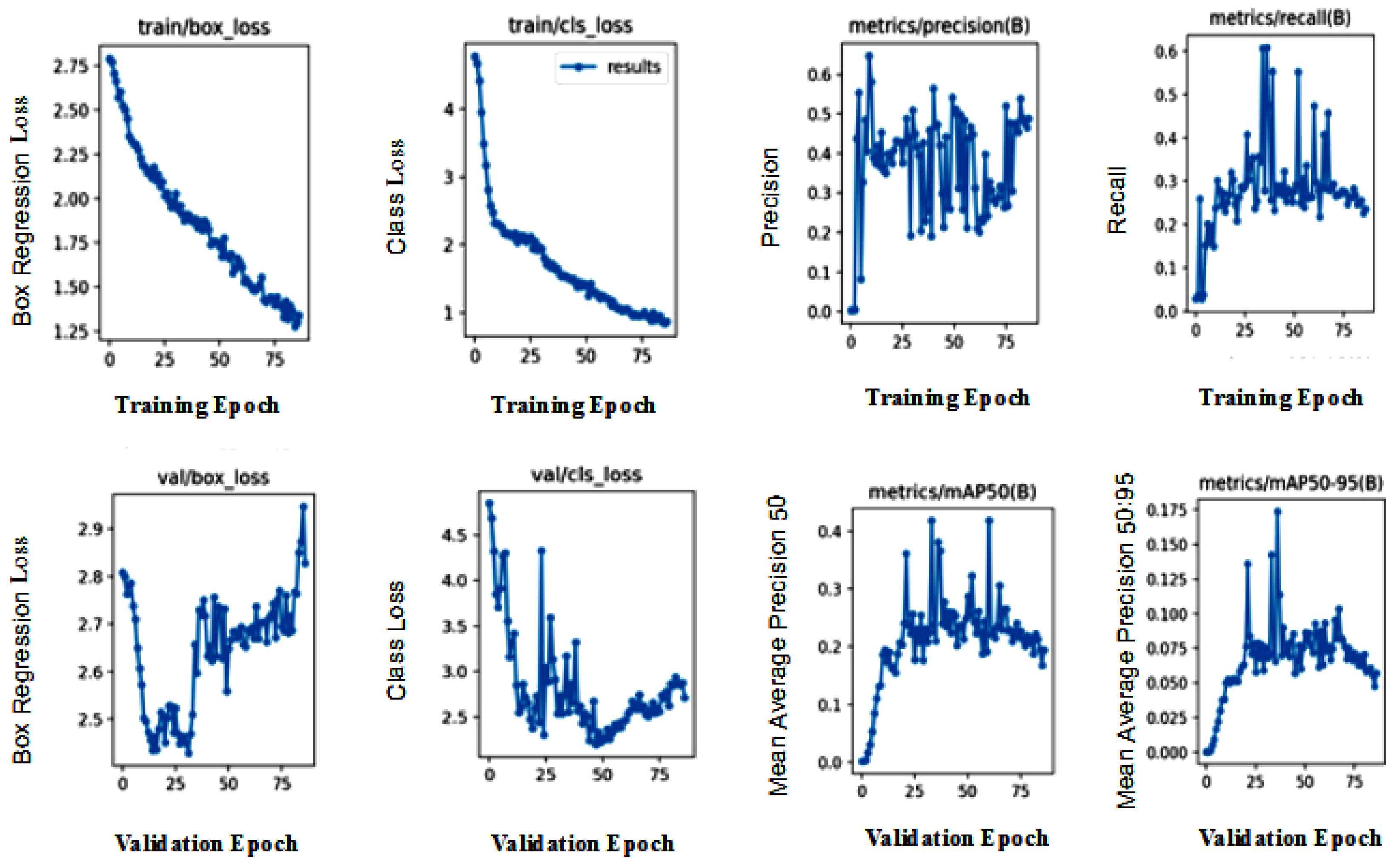

4.1. Evaluation Metrics

- Detection accuracy is a measure of how well a model can correctly identify objects within an image. There are several metrics used to evaluate the detection accuracy of a model; these include precision, recall, and F1-score;

- Precision refers to the proportion of true positives (correctly detected objects) out of all the objects detected by the model. A high precision indicates that the model has a low rate of false positives, meaning that most of the objects it detects are correct;

- Average precision (AP) is the average precision of the objects detected by the model;

- Mean average precision (mAP) is the overall mean value of the AP;

- Recall measures the proportion of true positives detected by the model out of all the actual objects in the image. A high recall indicates that the model can detect most of the objects in the image.

4.2. Results Discussion

4.2.1. Experimental Analysis of State-of-the-Art

4.2.2. Class-Wise Detection Performance

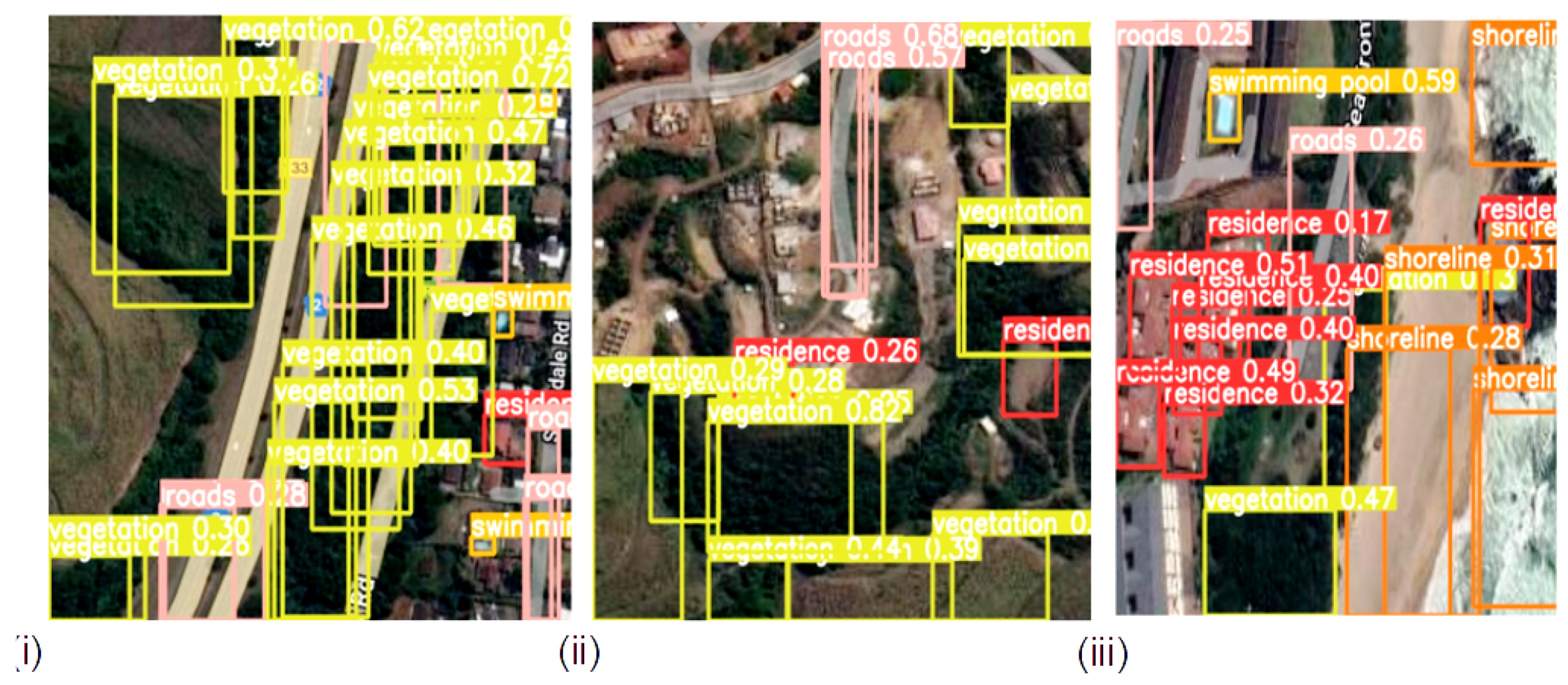

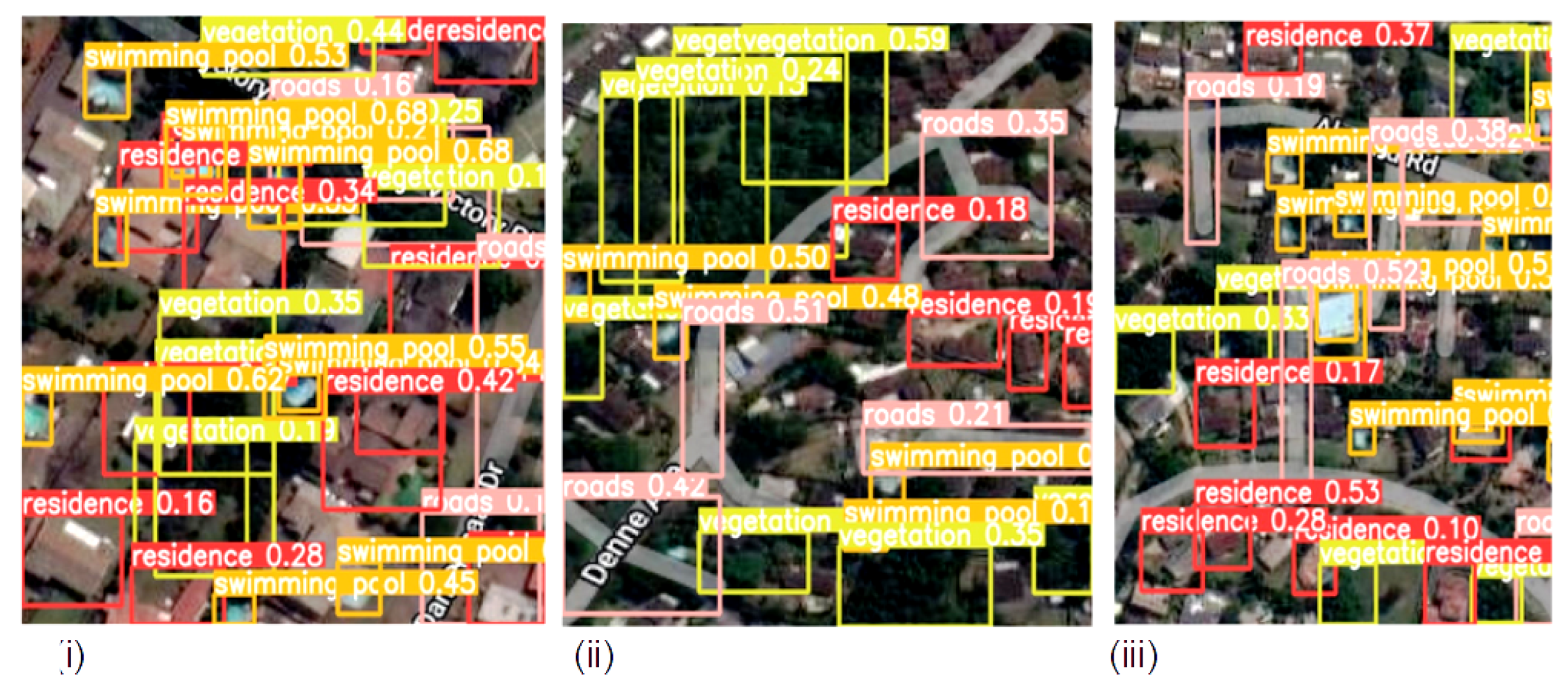

4.2.3. Visualization Analysis of Detection Results Output

4.2.4. Comparison Analysis of the Models on Publicly Available Datasets: Visdrones and Pascalvoc

4.3. Summary and Future Works

4.3.1. Summary

- Varying sizes, structures, and resolutions:

- –

- In this paper, to address the challenges of varying sizes, structures, and resolutions, we proposed a newly-created dataset of diverse images characterized by objects of varying sizes and resolutions acquired under real-life conditions and quality, and with high inter-class similarity and intra-class diversity. We established the effects of training models on a novel dataset created from environmental perception data characterized by diverse features and captured in multiple scenes on the performance of the models.

- –

- The data augmentation approach employed during training enabled the model to efficiently handle objects with varying sizes, structures, and resolutions.

- –

- Multi-scale training: The proposed system also employed a multi-scale training strategy that enables the model to learn representations at different scales. This enhances the ability to handle objects of varying sizes, structures, and resolutions.

- Challenging background: The research addresses the issue of challenging backgrounds through the following approaches:

- –

- Data augmentation: Data augmentation approaches employed expose the model to a wider range of backgrounds and help the object detecting model to generalize better and improve its ability to handle challenging backgrounds during inference.

- –

- The object detecting system adopted in this paper has numerous layers and components that learn to recognize patterns and objects of varying complexity and challenging background. For example, the backbone has a series of convolutional layers that extract relevant features from the input image, SPPF layer, and the subsequent convolution layers that process features at a variety of scales. The C2f module combines the high-level features extracted with contextual information to improve detection accuracy. The C2f component in the head structure is succeeded by two decoupled segmentation heads, which learn to predict the semantic segmentation masks for the input image. The Detection module uses a set of convolution and linear layers to map the high-dimensional features to the output bounding boxes and object classes.

- Limited labeled dataset:

- –

- The dataset was subjected to data augmentation, to increase the diversity of training data. The approach employs geometric augmentations and transformations that change the spatial orientation of images. This helps to diversify the training set and make the models more resilient to changes in perspective or orientation. It involves flipping the image horizontally to create a mirror image and flipping it vertically to invert the image. It also involves rotating images by 90, 180, or 270 degrees to simulate different viewing angles of an object in the image. These processes were performed repeatedly to increase the quantity of the training images by 100 times.

- –

- This study also employed a transfer learning and dynamic data fusion approach for modeling the object detection method on the newly created dataset for improved performance. The transfer learning approach involves using pre-trained models on large-scale datasets to improve the performance of object detection and recognition in remote sensing satellite images.

4.3.2. Future Works

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Simelane, S.P.; Hansen, C.; Munghemezulu, C. The use of remote sensing and GIS for land use and land cover mapping in Eswatini: A Review. S. Afr. J. Geomat. 2022, 10, 181–206. [Google Scholar] [CrossRef]

- Bhuyan, K.; Van Westen, C.; Wang, J.; Meena, S.R. Mapping and characterising buildings for flood exposure analysis using open-source data and artificial intelligence. Nat. Hazards 2022, 1–31. [Google Scholar] [CrossRef]

- Qi, W. Object detection in high resolution optical image based on deep learning technique. Nat. Hazards Res. 2022, 2, 384–392. [Google Scholar] [CrossRef]

- Vemuri, R.K.; Reddy, P.C.S.; Kumar, B.S.P.; Ravi, J.; Sharma, S.; Ponnusamy, S. Deep learning based remote sensing technique for environmental parameter retrieval and data fusion from physical models. Arab. J. Geosci. 2021, 14, 1230. [Google Scholar] [CrossRef]

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307. [Google Scholar] [CrossRef]

- Li, Z.; Wang, Y.; Zhang, N.; Zhang, Y.; Zhao, Z.; Xu, D.; Ben, G.; Gao, Y. Deep Learning-Based Object Detection Techniques for Remote Sensing Images: A Survey. Remote Sens. 2022, 14, 2385. [Google Scholar] [CrossRef]

- Karim, S.; Zhang, Y.; Yin, S.; Bibi, I.; Brohi, A.A. A brief review and challenges of object detection in optical remote sensing imagery. Multiagent Grid Syst. 2020, 16, 227–243. [Google Scholar] [CrossRef]

- Pham, M.-T.; Courtrai, L.; Friguet, C.; Lefèvre, S.; Baussard, A. YOLO-Fine: One-Stage Detector of Small Objects under Various Backgrounds in Remote Sensing Images. Remote Sens. 2020, 12, 2501. [Google Scholar] [CrossRef]

- Nawaz, S.A.; Li, J.; Bhatti, U.A.; Shoukat, M.U.; Ahmad, R.M. AI-based object detection latest trends in remote sensing, multimedia and agriculture applications. Front. Plant Sci. 2022, 13, 1041514. [Google Scholar] [CrossRef]

- Liu, J.; Yang, D.; Hu, F. Multiscale object detection in remote sensing images combined with multi-receptive-field features and relation-connected attention. Remote Sens. 2022, 14, 427. [Google Scholar] [CrossRef]

- Ahmed, M.; Hashmi, K.A.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. Survey and performance analysis of deep learning based object detection in challenging environments. Sensors 2021, 21, 5116. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Proceedings, Part V 13, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: New York, NY, USA, 2014; pp. 740–755. [Google Scholar]

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef] [Green Version]

- Zhang, X.; Han, L.; Han, L.; Zhu, L. How well do deep learning-based methods for land cover classification and object detection perform on high resolution remote sensing imagery? Remote Sens. 2020, 12, 417. [Google Scholar] [CrossRef] [Green Version]

- Wang, Y.; Xu, C.; Liu, C.; Li, Z. Context Information Refinement for Few-Shot Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 3255. [Google Scholar] [CrossRef]

- Chang, R.-I.; Ting, C.; Wu, S.; Yin, P. Context-Dependent Object Proposal and Recognition. Symmetry 2020, 12, 1619. [Google Scholar] [CrossRef]

- Saito, S.; Yamashita, T.; Aoki, Y. Multiple object extraction from aerial imagery with convolutional neural networks. Electron. Imaging 2016, 2016, 010402-1–010402-9. [Google Scholar]

- Xiaolin, F.; Fan, H.; Ming, Y.; Tongxin, Z.; Ran, B.; Zenghui, Z.; Zhiyuan, G. Small object detection in remote sensing images based on super-resolution. Pattern Recognit. Lett. 2022, 153, 107–112. [Google Scholar] [CrossRef]

- Tong, K.; Wu, Y.; Zhou, F. Recent advances in small object detection based on deep learning: A review. Image Vis. Comput. 2020, 2, 103910. [Google Scholar] [CrossRef]

- Li, M.; Zhang, Z.; Lei, L.; Wang, X.; Guo, X. Agricultural greenhouses detection in high-resolution satellite images based on convolutional neural networks: Comparison of faster R-CNN, YOLO v3 and SSD. Sensors 2020, 2, 4938. [Google Scholar] [CrossRef]

- Wan, D.; Lu, R.; Wang, S.; Shen, S.; Xu, T.; Lang, X. YOLO-HR: Improved YOLOv5 for Object Detection in High-Resolution Optical Remote Sensing Images. Remote Sens. 2023, 2, 614. [Google Scholar] [CrossRef]

- Wang, Y.; Gu, L.; Li, X.; Ren, R. Building extraction in multitemporal high-resolution remote sensing imagery using a multifeature LSTM network. IEEE Geosci. Remote Sens. Lett. 2020, 2, 1645–1649. [Google Scholar] [CrossRef]

- Gao, F.; He, Y.; Wang, J.; Hussain, A.; Zhou, H. Anchor-free convolutional network with dense attention feature aggregation for ship detection in SAR images. Remote Sens. 2020, 2, 2619. [Google Scholar] [CrossRef]

- Gan, Y.; You, S.; Luo, Z.; Liu, K.; Zhang, T.; Du, L. Object detection in remote sensing images with mask R-CNN. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; Volume 1673, p. 012040. [Google Scholar]

- Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate object localization in remote sensing images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 2, 2486–2498. [Google Scholar] [CrossRef]

- Van Etten, A. Satellite imagery multiscale rapid detection with windowed networks. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 735–743. [Google Scholar]

- Van Etten, A. You only look twice: Rapid multi-scale object detection in satellite imagery. arXiv 2018, arXiv:1805.09512. [Google Scholar]

- Ku, B.; Kim, K.; Jeong, J. Real-Time ISR-YOLOv4 Based Small Object Detection for Safe Shop Floor in Smart Factories. Electronics 2022, 2, 2348. [Google Scholar] [CrossRef]

- Sun, B.; Wang, X.; Oad, A.; Pervez, A.; Dong, F. Automatic Ship Object Detection Model Based on YOLOv4 with Transformer Mechanism in Remote Sensing Images. Appl. Sci. 2023, 2, 2488. [Google Scholar] [CrossRef]

- Yu, L.; Wu, H.; Liu, L.; Hu, H.; Deng, Q. TWC-AWT-Net: A transformer-based method for detecting ships in noisy SAR images. Remote Sens. Lett. 2023, 2, 512–521. [Google Scholar] [CrossRef]

- Gao, X.; Sun, W. Ship object detection in one-stage framework based on Swin-Transformer. In Proceedings of the 2022 5th International Conference on Signal Processing and Machine Learning, Dalian, China, 4–6 August 2022; pp. 189–196. [Google Scholar]

- Zhang, Y.; Er, M.J.; Gao, W.; Wu, J. High Performance Ship Detection via Transformer and Feature Distillation. In 2022 5th International Conference on Intelligent Autonomous Systems (ICoIAS), Dalian, China, 23–25 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 31–36. [Google Scholar]

- Chen, Z.; Liu, C.; Filaretov, V.; Yukhimets, D.A. Multi-Scale Ship Detection Algorithm Based on YOLOv7 for Complex Scene SAR Images. Remote Sens. 2023, 2, 2071. [Google Scholar] [CrossRef]

- Patel, K.; Bhatt, C.; Mazzeo, P.L. Deep learning-based automatic detection of ships: An experimental study using satellite images. J. Imaging 2022, 2, 182. [Google Scholar] [CrossRef]

- Pang, L.; Li, B.; Zhang, F.; Meng, X.; Zhang, L. A Lightweight YOLOv5-MNE Algorithm for SAR Ship Detection. Sensors 2022, 2, 7088. [Google Scholar] [CrossRef]

- Nambiar, A.; Vaigandla, A.; Rajendran, S. Efficient Ship Detection in Synthetic Aperture Radar Images and Lateral Images using Deep Learning Techniques. In Proceedings of the OCEANS 2022, Hampton Roads, VA, USA, 17–20 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–10. [Google Scholar]

- Lang, L.; Xu, K.; Zhang, Q.; Wang, D. Fast and accurate object detection in remote sensing images based on lightweight deep neural network. Sensors 2021, 2, 5460. [Google Scholar] [CrossRef] [PubMed]

- Deng, J.; Xuan, X.; Wang, W.; Li, Z.; Yao, H.; Wang, Z. A review of research on object detection based on deep learning. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; Volume 1684, p. 012028. [Google Scholar]

- Kumar, L.; Mutanga, O. Google Earth Engine applications since inception: Usage, trends, and potential. Remote Sens. 2018, 2, 1509. [Google Scholar] [CrossRef] [Green Version]

- Google Earth Engine. 2023. Available online: https://earthengine.google.com/ (accessed on 1 June 2023).

- Roboflow. 2022. Available online: https://roboflow.com/ (accessed on 1 June 2023).

- Kaur, J.; Singh, W. Tools, techniques, datasets and application areas for object detection in an image: A review. Multimed. Tools Appl. 2022, 2, 38297–38351. [Google Scholar] [CrossRef]

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Detecting apples in orchards using YOLOv3 and YOLOv5 in general and close-up images. In Proceedings of the Advances in Neural Networks–ISNN 2020: 17th International Symposium on Neural Networks, ISNN 2020, Proceedings 17, Cairo, Egypt, 4–6 December 2020; Springer International Publishing: New York, NY, USA; pp. 233–243. [Google Scholar]

- Singh, B.; Li, H.; Sharma, A.; Davis, L.S. R-fcn-3000 at 30fps: Decoupling detection and classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1081–1090. [Google Scholar]

- Choi, H.; Kang, M.; Kwon, Y.; Yoon, S.-E. An objectness score for accurate and fast detection during navigation. arXiv 2019, arXiv:1909.05626. [Google Scholar]

- Elharrouss, O.; Akbari, Y.; Almaadeed, N.; Al-Maadeed, S. Backbones-review: Feature extraction networks for deep learning and deep reinforcement learning approaches. arXiv 2022, arXiv:2206.08016. [Google Scholar]

- Mahasin, M.; Dewi, I.A. Comparison of CSPDarkNet53, CSPResNeXt-50, and EfficientNet-B0 Backbones on YOLO V4 as Object Detector. Int. J. Eng. Sci. Inf. Technol. 2022, 2, 64–72. [Google Scholar] [CrossRef]

- Picron, C.; Tuytelaars, T.; ESAT-PSI, K.U. Trident Pyramid Networks for Object Detection. arXiv 2022, arXiv:2110.04004v3. [Google Scholar]

- Zhang, Z.; Qiu, X.; Li, Y. Sefpn: Scale-equalizing feature Pyramid network for object detection. Sensors 2021, 2, 7136. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Pham, V.; Pham, C.; Dang, T. Road damage detection and classification with detectron2 and faster r-cnn. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; IEEE: Piscataway, NJ, USA; pp. 5592–5601. [Google Scholar]

- Olorunshola, O.E.; Irhebhude, M.E.; Evwiekpaefe, A.E. A Comparative Study of YOLOv5 and YOLOv7 Object Detection Algorithms. J. Comput. Soc. Inform. 2023, 2, 1–12. [Google Scholar] [CrossRef]

- Github: Yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 June 2023).

- Li, C.; Li, L.; Geng, Y.; Jiang, H.; Cheng, M.; Zhang, B.; Ke, Z.; Xu, X.; Chu, X. YOLOv6 v3. 0: A Full-Scale Reloading. arXiv 2023, arXiv:2301.05586. [Google Scholar]

- Terven, J.; Cordova-Esparza, D. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. arXiv 2023, arXiv:2304.00501. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696. [Google Scholar]

- Wang, C.-Y.; Liao, H.-Y.M.; Yeh, I.-H. Designing Network Design Strategies Through Gradient Path Analysis. arXiv 2022, arXiv:2211.04800. [Google Scholar]

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 30 March 2023).

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 2, 43–76. [Google Scholar] [CrossRef]

- Cao, Y.; He, Z.; Wang, L.; Wang, W.; Yuan, Y.; Zhang, D.; Zhang, J. VisDrone-DET2021: The vision meets drone object detection challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2847–2854. [Google Scholar]

- Lou, H.; Duan, X.; Guo, J.; Liu, H.; Gu, J.; Bi, L.; Chen, H. DC-YOLOv8: Small Size Object Detection Algorithm Based on Camera Sensor. Electronics 2023, 12, 2323. [Google Scholar] [CrossRef]

| Methods | Datasets | Results Obtained | Limitations |

|---|---|---|---|

| FasterR-CNN [38] | VOC2007 | mAP 76.4%, 5 frames per seconds (fps) | Difficulty in detecting small objects and real-time detection |

| SPP-Net [38] | VOC2007 | 54.2% mAP | Requires complicated multi-step training steps and large computational resources, lower detection rate and accuracy |

| R-CNN [38] | VOC2007 | 66%, 0.5 fps. | calculation efficiency is too low, may cause object distortion, lower detection rate |

| FasterR-CNN [20] | AGs-GF1 and 2 | 86.0%, 12 fps | Lower detection accuracy |

| YOLOv3 [20] | AGs-GF1 and 2 | 90.4%, 73 fps | Slower detection rate |

| SSD [20] | AGs-GF1 and 2 | 84.9%, 35 fps | Lower detection accuracy |

| YOLOv4 [19] | MS COCO | 43.5%, 65.7 fps | Requires larger computational resources |

| YOLOv5s [21] | SIMD | 5.8 ms, 62.8 mAP | Lower detection speed and accuracy |

| YOLO-HR [21] | SIMD | 67.31% mAP, 6.7 ms | Requires larger computational resources, Lower detection speed and accuracy |

| RetinaNet [23] | SAR | average precision 79% | Difficulty in detecting smaller objects |

| YOLOv3 [23] | SAR | 63% mAP | Requires larger computational resources, lower detection speed and accuracy |

| YOLT [26] | DigitalGlobe satellites, planet satellites, and aerial platforms. | 68% mAP, 44 fps | Difficulty in detecting small objects, lower detection speed and accuracy |

| YOLOv4-Tiny [37] | RSOD | 80.02% mAP, 285.3 fps | Lower detection speed s |

| YOLOv4 + CSPA + DE [37] | RSOD | 85.13% mAP, 227.9 fps | Challenges of obtaining biased anchors due to the large variation in object scales in remote sensing images. |

| Methods | Backbone Structure | Neck Structure | Head Structure |

|---|---|---|---|

| Detectron2 | The backbone network is responsible for extracting features from the input image. Detectron2 provides various backbone architectures such as ResNet, ResNeXt, and MobileNet. | Neck has FPN, a top-down architecture using multi-scale features, taking backbone output, and creating feature maps at different scales. It also contains RPN, generating object regions in an input image from feature maps. | The detection head takes the features extracted from ROI pooling and produces the final detection results. It consists of two sub-components: classification and regression. |

| YOLOv5 | YOLOv5 [52,53] utilizes a Cross Stage Partial hybridized with Dark-net53 (CSP-Darknet53) as the backbone. The structure aims at limiting the vanishing gradient challenge with a dense system. | The neck uses Spatial Pyramid Pooling (SPP) and Path Aggregation Network with BottleNeckCSP (PANet) [54] for enhanced information flow through information aggregation. | The head is made up of a series of convolution layers for the prediction of bounding boxes and object classes score. |

| YOLOv6 | It employs a backbone based on RepVGG called EfficientRep that uses a higher parallelism than previous YOLO backbones [55]. | The neck uses PAN enhanced with RepBlocks or CSPStackRep Blocks for the larger models [55]. | The head adopts new loss modules (VariFocal, SIoU, GIoU) and uses RepOptimizer-based quantization and channel-wise distillation for better detection [55]. |

| YOLOv7 | The backbone employs concatenation-based models (CBS) and Efficient Layer Aggregation Network (E-ELAN) algorithms [55] for feature extraction [56,57] and efficient learning and convergence. | The neck structure employs a PAN-based feature pyramid network. This allows the system to efficiently manage the transmission of both high-level and low-level features and enhance the accuracy. | YOLOv7 incorporates Deep Supervision, a technique that employs multiple heads, including the lead head responsible for the final output, and an auxiliary head that assists with training in middle layers. |

| YOLOv8 | The backbone [55,58] includes convolution layers, coarse-to-fine (C2f) modules, and spatial pyramid pooling faster (SPPF) modules. Bottleneck components extend to neck regions and feature maps; and are extracted from the backbone and neck regions. | Neck region concatenates features for fewer parameters and tensor size. YOLOv8 uses the C2f module in the neck region, replacing CSP and C3 modules with “f” denoting feature count. | The C2f component in the head structure is succeeded by two decoupled segmentation heads. The detection heads in YOLOv8 are composed of detection modules and a prediction layer and are also anchor-free detection. |

| Methods | P (%) | R (%) | mAP50 (%) | MAP50-95 (%) | Speed (ms) |

|---|---|---|---|---|---|

| Detectron2 | 50 | 32.7 | 16 | 24 | 0.9 |

| YOLOv5 | 53.4 | 49.7 | 27 | 18.4 | 0.5 |

| YOLOv6 | 53.2 | 47.4 | 32.1 | 16.6 | 0.4 |

| YOLOv7 | 54.5 | 46.2 | 34.1 | 25 | 0.3 |

| YOLOv8 | 68 | 60 | 43 | 17.5 | 0.2 |

| Class | P (%) | R (%) | mAP50 (%) | MAP50-95 (%) |

|---|---|---|---|---|

| Residence | 41.1 | 42.1 | 19.3 | 12.8 |

| Roads | 41.2 | 57.1 | 13.7 | 4.75 |

| Shorelines | 54.6 | 96.4 | 99.5 | 59.7 |

| Swimming Pool | 62.7 | 62.9 | 45.5 | 12.8 |

| Vegetation | 57.3 | 62.3 | 12.8 | 8.45 |

| Datasets | No of Images | Categories | Description |

|---|---|---|---|

| PascalVOC2007 [13] | 9963 | 20 |

|

| VisDrones [60] | 10,209 | 10 | pedestrian, tricycle, truck, van, car, person, motorcycle, bus, awning, and bicycle |

| Methods | mAP50 (%) |

|---|---|

| YOLOv3 | 38.8 |

| YOLOv5 | 38.1 |

| YOLOv7 | 30.7 |

| YOLOv8 | 39.0 |

| Methods | mAP50 (%) |

|---|---|

| YOLOv3 | 79.5 |

| YOLOv5 | 78 |

| YOLOv7 | 69.1 |

| YOLOv8 | 83.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Adegun, A.A.; Fonou Dombeu, J.V.; Viriri, S.; Odindi, J. State-of-the-Art Deep Learning Methods for Objects Detection in Remote Sensing Satellite Images. Sensors 2023, 23, 5849. https://doi.org/10.3390/s23135849

Adegun AA, Fonou Dombeu JV, Viriri S, Odindi J. State-of-the-Art Deep Learning Methods for Objects Detection in Remote Sensing Satellite Images. Sensors. 2023; 23(13):5849. https://doi.org/10.3390/s23135849

Chicago/Turabian StyleAdegun, Adekanmi Adeyinka, Jean Vincent Fonou Dombeu, Serestina Viriri, and John Odindi. 2023. "State-of-the-Art Deep Learning Methods for Objects Detection in Remote Sensing Satellite Images" Sensors 23, no. 13: 5849. https://doi.org/10.3390/s23135849