Automated Gait Analysis Based on a Marker-Free Pose Estimation Model

Abstract

1. Introduction

- A markerless pose estimation model, MediaPipe Pose, which requires fewer computational resources, was applied to extract body key points from healthy individuals, achieving promising accuracy with reduced inference time.

- An algorithm was devised to automate the assessment of gait parameters from the body key points extracted by MediaPipe Pose, eliminating the need for manual intervention.

2. Materials and Methods

2.1. Dataset

2.2. Video Data Collection

2.3. Reference Standard—Vicon 3D Motion Capture System

2.4. Pose Estimation Model for Gait Assessment

2.5. Gait Parameter Extraction

| Algorithm 1 Pseudo-code for the Proposed System |
|---|
| Input: Walking video of a healthy individual |
| Output: Gait parameter results in a CSV file |
| Begin |
| 1 Initialize the MediaPipe Pose estimator |
| 2 while (current video frame ≤ last video frame) do |
| 3 Identify the region of interest that contains the human pose |
| 4 Extract and save the positions of the body key points in the region of interest |
| 5 end while |
| 6 Gap-filled-body-keypoints = Gap-fill (body keypoints) |
| 7 Set up a 10th-order Butterworth low-pass filter with normalized cutoff frequency = 0.1752 |
| 8 Filtered-body-keypoints = Butterworth-low-pass-filter (Gap-filled-body-keypoints) |
| 9 Calculate the relative change in distance between the hip and foot index for the left and right legs over time |
| 10 Identify the peaks and minima of the relative change in distance between the hip and foot index for the left and right legs over time |
| 11 Heel-strike-event-timings-left-leg = Timings of peak occurrence (left leg) |
| 12 Heel-strike-event-timings-right-leg = Timings of peak occurrence (right leg) |
| 13 Toe-off-event-timings-left-leg = Timings of minima occurrence (left leg) |
| 14 Toe-off-event-timings-right-leg = Timings of minima occurrence (right leg) |
| 15 Stance-time = Time duration between heel strike and toe-off of the same leg |
| 16 Swing-time = Time duration between toe-off and heel strike of the same leg |
| 17 Step-time = Time duration between the heel strike of one leg and the next heel strike of the contralateral leg |
| 18 Double-support-time = Time duration between heel strike of one leg and toe-off of the contralateral leg |
| 19 Save Stance-time, Swing-time, Step-time, and Double-support-time in a CSV file |
| End |
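Steps 9 to 14 of Algorithm 1 can be sketched as a peak search on a hip-relative foot trajectory. This is one possible reading, not the authors' code: the "relative change in distance" is assumed here to be the signed horizontal displacement of the foot index from the hip in a side-on (sagittal) view. That displacement is largest when the foot is planted ahead of the body (heel strike) and most negative when it trails behind (toe-off). The function name `detect_gait_events` and the parameters `walking_direction` (+1 if the subject walks toward increasing image x, −1 otherwise) and `min_separation_s` (minimum spacing between two events of the same type) are hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks


def detect_gait_events(hip_x, foot_index_x, fps, walking_direction=1, min_separation_s=0.5):
    """Return (heel_strike_times, toe_off_times) in seconds for one leg.

    hip_x, foot_index_x: per-frame horizontal landmark coordinates,
        assumed to be already gap-filled and low-pass filtered.
    walking_direction: +1 if walking toward increasing x, -1 otherwise (assumption).
    min_separation_s: minimum spacing between events of the same type (hypothetical).
    """
    displacement = walking_direction * (
        np.asarray(foot_index_x, dtype=float) - np.asarray(hip_x, dtype=float)
    )
    min_distance = max(1, int(round(min_separation_s * fps)))
    # Foot furthest ahead of the hip -> heel strike (peak).
    heel_strike_idx, _ = find_peaks(displacement, distance=min_distance)
    # Foot furthest behind the hip -> toe-off (minimum, found as a peak of the negated signal).
    toe_off_idx, _ = find_peaks(-displacement, distance=min_distance)
    return heel_strike_idx / fps, toe_off_idx / fps
```

Because `find_peaks` ignores the first and last samples, events at the very start or end of a recording are not reported, which is usually acceptable for walking trials that begin and end mid-stride.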

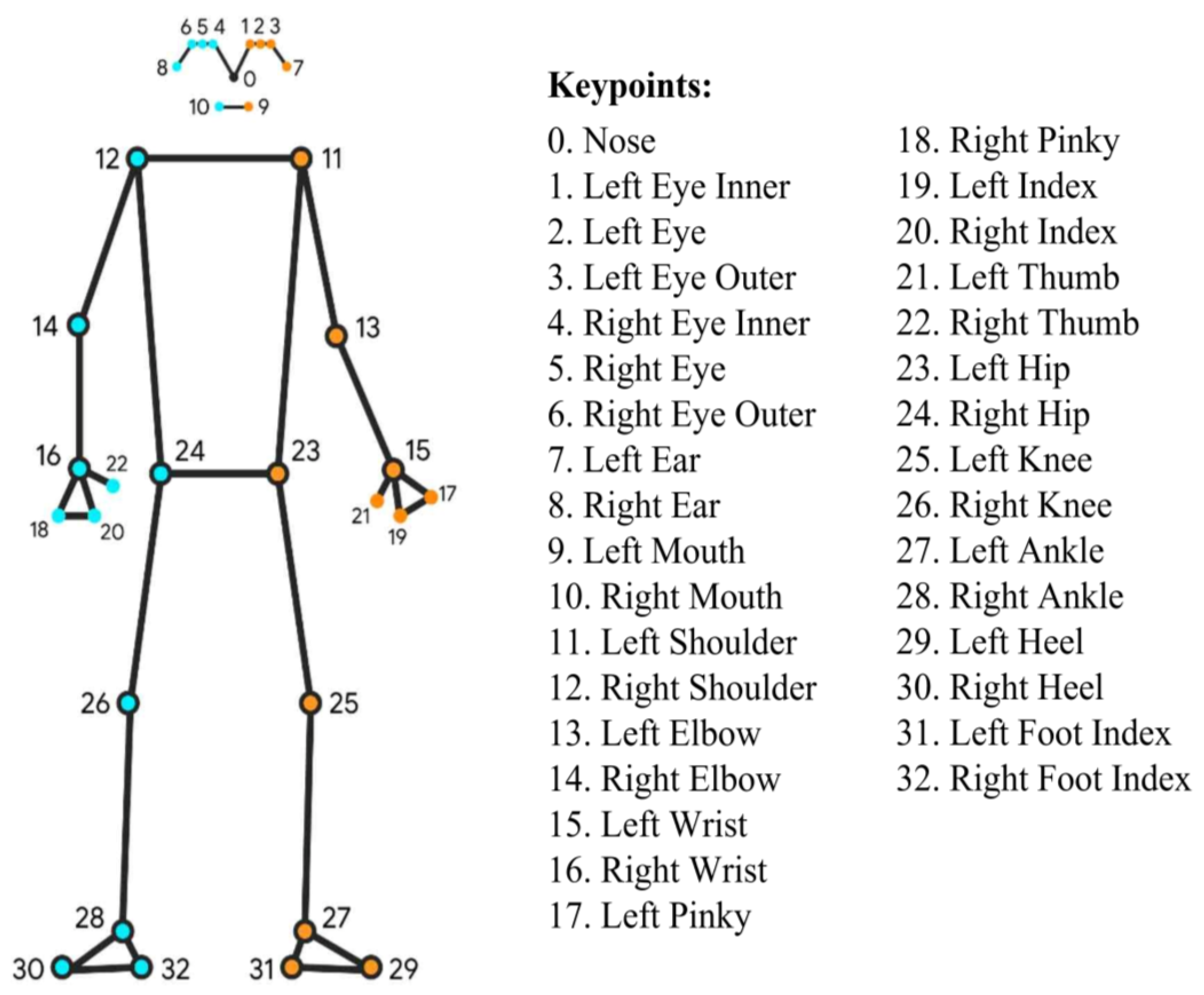

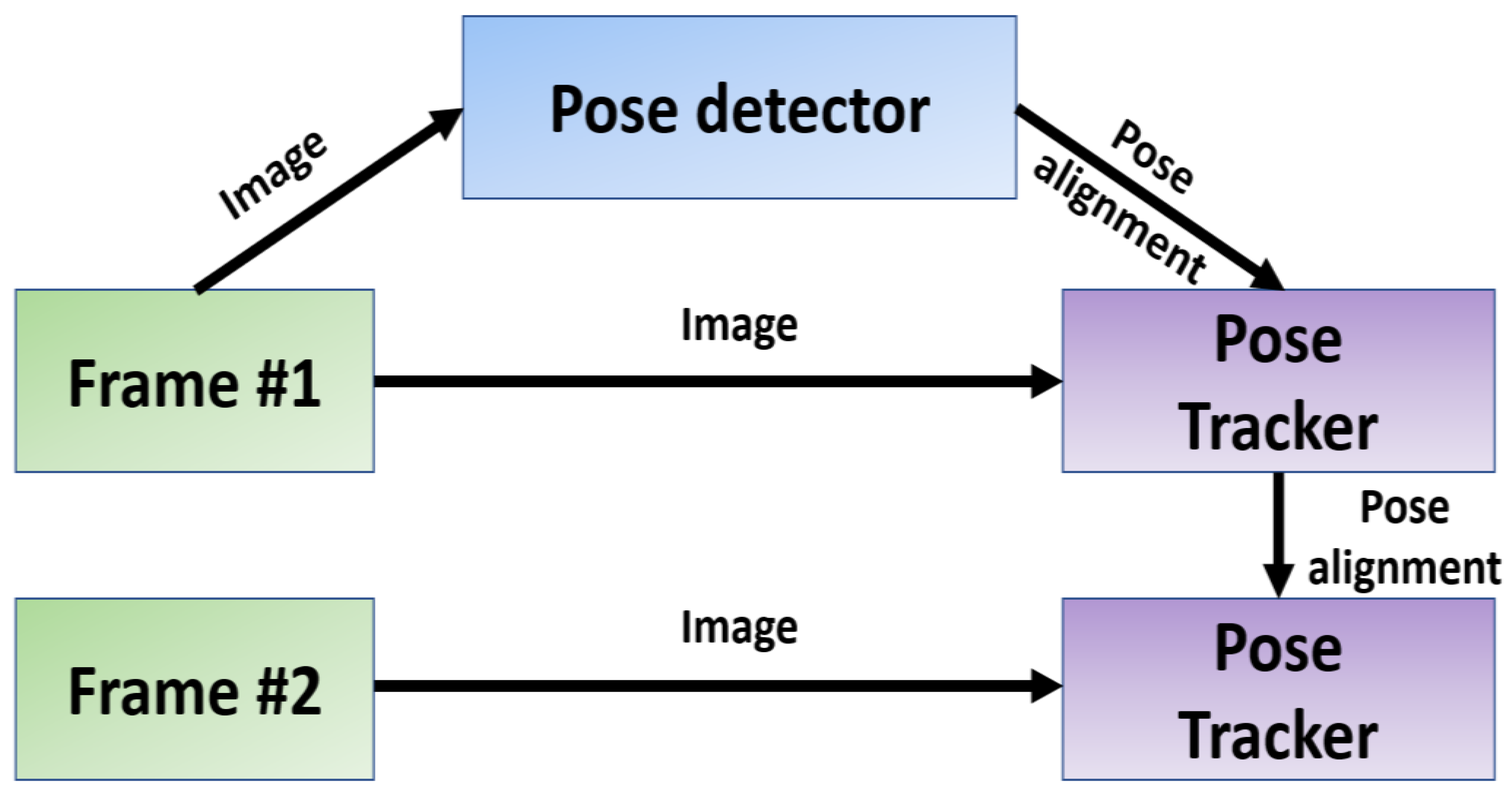

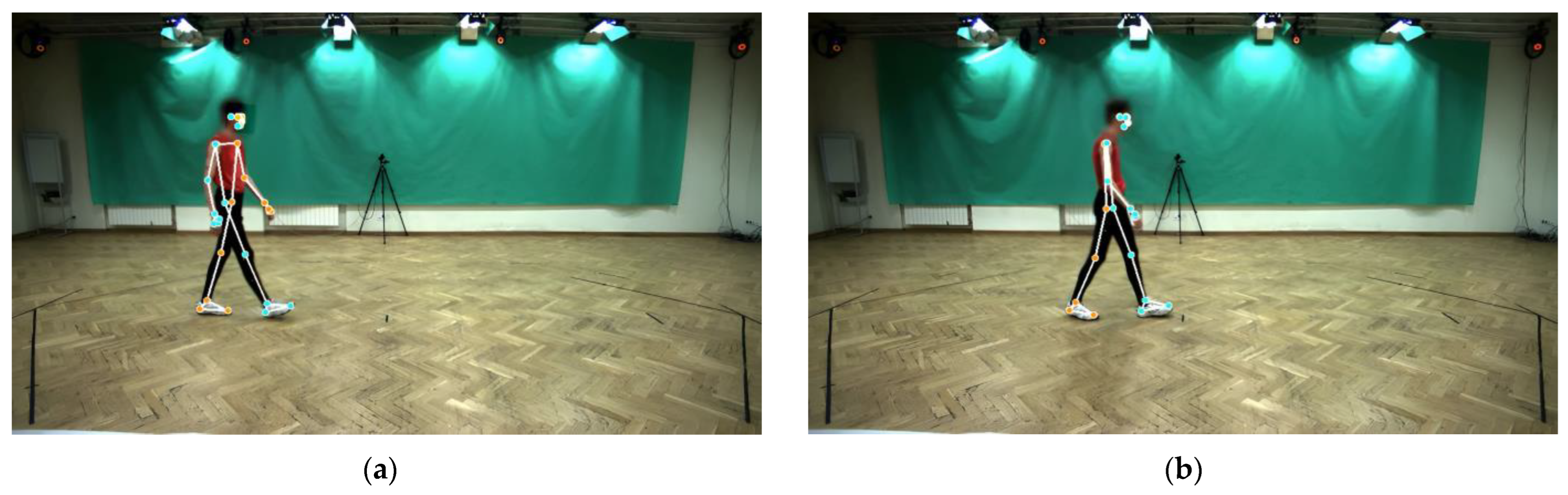

2.5.1. Pose Estimation Using MediaPipe Pose

- x and y: The coordinates of the key points, normalized to a range of [0.0, 1.0] based on the image width and height, respectively.

- z: The depth of the key points relative to the midpoint of the hips; smaller values indicate that a key point is closer to the camera. The scale of z is roughly comparable to that of x.

- Visibility: A value in the range [0.0, 1.0] indicating the likelihood that the key point is visible (present and not occluded) in the image.
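The landmark fields listed above can be read frame by frame with MediaPipe's Python API. The sketch below assumes the legacy `mediapipe.solutions.pose` interface and OpenCV for video decoding; within that solution, locating the person's region of interest (Algorithm 1, step 3) happens internally. The helper names `landmarks_to_array` and `extract_keypoints`, and the choice to store frames without a detected pose as NaN so that a later gap-filling step can repair them, are illustrative assumptions rather than the authors' implementation. Landmark indices 23/24 (hips) and 31/32 (foot index) follow the 33-point BlazePose topology.

```python
import numpy as np

# Indices in the 33-landmark BlazePose topology used by MediaPipe Pose.
N_LANDMARKS = 33
LEFT_HIP, RIGHT_HIP = 23, 24
LEFT_FOOT_INDEX, RIGHT_FOOT_INDEX = 31, 32


def landmarks_to_array(landmarks):
    """Pack landmark objects exposing x, y, z, visibility into a (33, 4) float array."""
    return np.array([[lm.x, lm.y, lm.z, lm.visibility] for lm in landmarks], dtype=float)


def extract_keypoints(video_path):
    """Run MediaPipe Pose on every frame of a video (hypothetical helper).

    Returns (keypoints, fps): keypoints has shape (n_frames, 33, 4) with columns
    x, y, z, visibility; frames without a detected pose are filled with NaN.
    """
    # Imported lazily so the array helper above works without these packages.
    import cv2
    import mediapipe as mp

    cap = cv2.VideoCapture(video_path)
    fps = cap.get(cv2.CAP_PROP_FPS)
    frames = []
    with mp.solutions.pose.Pose(static_image_mode=False) as pose:
        while True:
            ok, frame_bgr = cap.read()
            if not ok:  # end of video or read failure
                break
            # OpenCV decodes frames as BGR; MediaPipe expects RGB.
            results = pose.process(cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB))
            if results.pose_landmarks is None:
                frames.append(np.full((N_LANDMARKS, 4), np.nan))
            else:
                frames.append(landmarks_to_array(results.pose_landmarks.landmark))
    cap.release()
    keypoints = np.stack(frames) if frames else np.empty((0, N_LANDMARKS, 4))
    return keypoints, fps
```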

2.5.2. Data Preprocessing (Gap Filling and Low Pass Filtering)

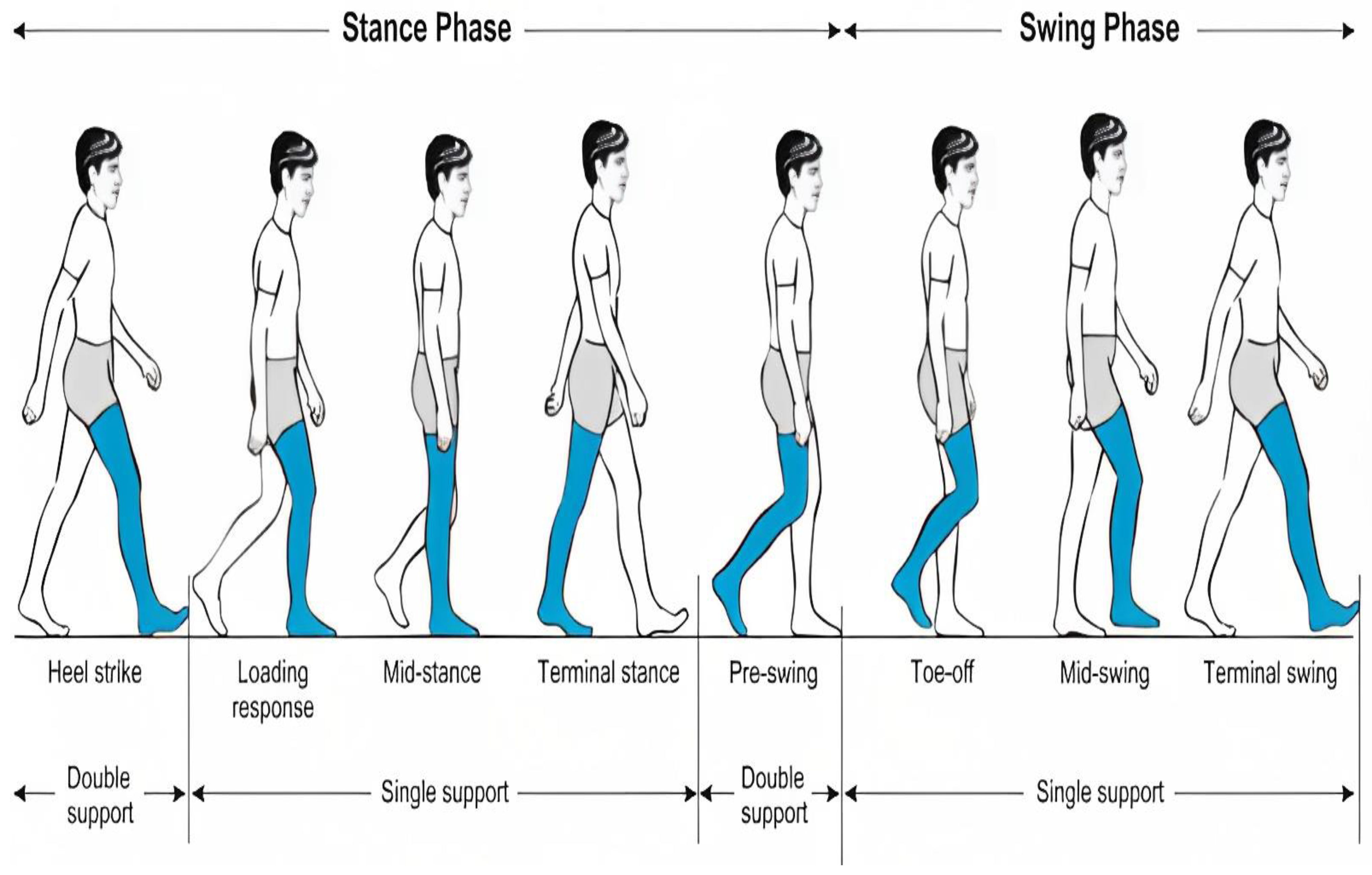
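A minimal sketch of the two preprocessing steps (Algorithm 1, steps 6 to 8), assuming NumPy and SciPy. Linear interpolation is an assumed gap-filling method, since this section does not name one. The cutoff 0.1752 is treated as a fraction of the Nyquist frequency (SciPy's convention when no sampling rate is passed); at the 25 fps used in this work that would correspond to about 0.1752 × 12.5 ≈ 2.19 Hz. Whether the original filter was run once or forward and backward is not stated here; the sketch uses zero-phase `sosfiltfilt`, which avoids shifting event timings but doubles the effective attenuation. Second-order sections keep a 10th-order design numerically stable. The names `gap_fill`, `butter_lowpass`, and `preprocess` are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def gap_fill(series):
    """Fill NaN gaps by linear interpolation; leading/trailing gaps take the nearest valid value."""
    series = np.asarray(series, dtype=float)
    valid = ~np.isnan(series)
    if not valid.any():
        raise ValueError("series has no valid samples to interpolate from")
    idx = np.arange(series.size)
    return np.interp(idx, idx[valid], series[valid])


def butter_lowpass(series, order=10, cutoff=0.1752):
    """Zero-phase Butterworth low-pass; cutoff is a fraction of the Nyquist frequency."""
    sos = butter(order, cutoff, btype="low", output="sos")
    return sosfiltfilt(sos, series)


def preprocess(keypoints):
    """Gap-fill, then low-pass filter, every track of an (n_frames, ...) keypoint array."""
    keypoints = np.asarray(keypoints, dtype=float)
    flat = keypoints.reshape(keypoints.shape[0], -1)  # one column per coordinate track
    filtered = np.empty_like(flat)
    for j in range(flat.shape[1]):
        filtered[:, j] = butter_lowpass(gap_fill(flat[:, j]))
    return filtered.reshape(keypoints.shape)
```

`sosfiltfilt` pads the signal before filtering, so each track must be longer than the default padding (33 samples for this 10th-order design); trials shorter than about 1.5 s at 25 fps would need a different approach.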

2.5.3. Temporal Gait Parameters Extraction: Identifying Key Gait Events

- (i) Stance time: the duration between heel strike and toe-off of the same leg.

- (ii) Swing time: the duration between toe-off and heel strike of the same leg.

- (iii) Step time: the duration between the heel strike of one leg and the next heel strike of the contralateral leg.

- (iv) Double support time: the duration between the heel strike of one leg and the toe-off of the contralateral leg.
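Given heel-strike and toe-off times for each leg, the four definitions above reduce to pairing each event with the next event of the required type. The sketch below illustrates this under stated assumptions: each event is paired with the first qualifying event strictly after it; left step time is taken as the interval from a right heel strike to the next left heel strike (and vice versa); and L2R/R2L double support are read as heel strike of the first leg to toe-off of the second, matching the labels in the results tables. A missed event would yield an over-long interval under this simple rule, so the optional `max_interval_s` cap (a hypothetical parameter) discards such pairs. The function names are illustrative.

```python
import numpy as np


def intervals_to_next(starts, ends, max_interval_s=np.inf):
    """For each start time, the time to the first end time strictly after it.

    Starts with no later end (e.g. at the end of a trial) or whose interval
    exceeds max_interval_s (likely a missed event) are dropped.
    """
    ends = np.sort(np.asarray(ends, dtype=float))
    out = []
    for s in np.asarray(starts, dtype=float):
        i = np.searchsorted(ends, s, side="right")  # index of first end > s
        if i < ends.size and ends[i] - s <= max_interval_s:
            out.append(ends[i] - s)
    return np.array(out)


def temporal_gait_parameters(hs_left, to_left, hs_right, to_right, max_interval_s=np.inf):
    """Stance, swing, step, and double support times (s) per leg from event times (s)."""
    def f(a, b):
        return intervals_to_next(a, b, max_interval_s)

    return {
        "stance_L": f(hs_left, to_left),    # heel strike -> toe-off, same leg
        "stance_R": f(hs_right, to_right),
        "swing_L": f(to_left, hs_left),     # toe-off -> heel strike, same leg
        "swing_R": f(to_right, hs_right),
        "step_L": f(hs_right, hs_left),     # contralateral heel strike -> heel strike (assumed)
        "step_R": f(hs_left, hs_right),
        "double_support_L2R": f(hs_left, to_right),  # heel strike -> contralateral toe-off
        "double_support_R2L": f(hs_right, to_left),
    }
```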

2.6. Statistics

3. Results

3.1. Descriptive Statistics for Key Gait Events (Heel Strike and Toe-Off)

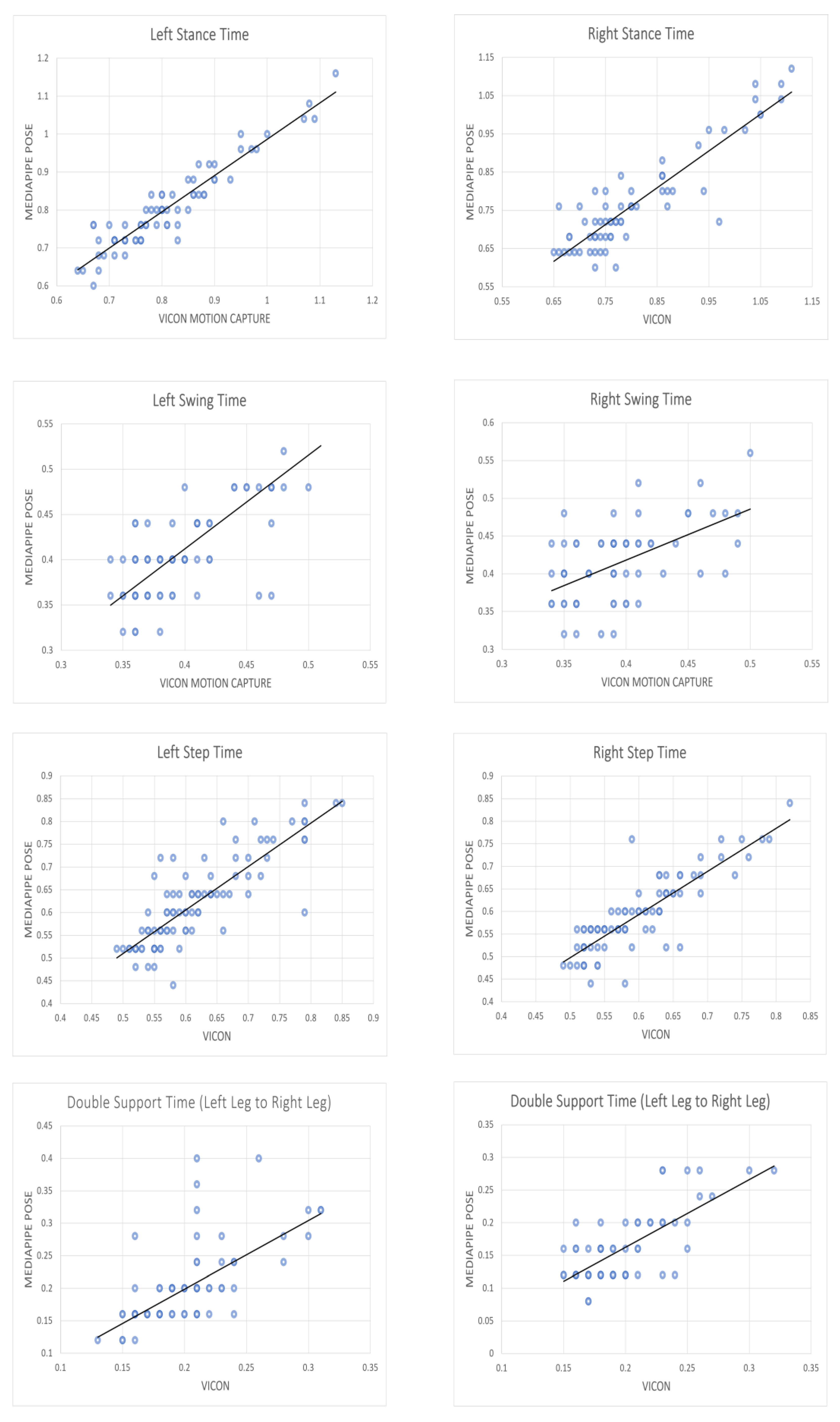

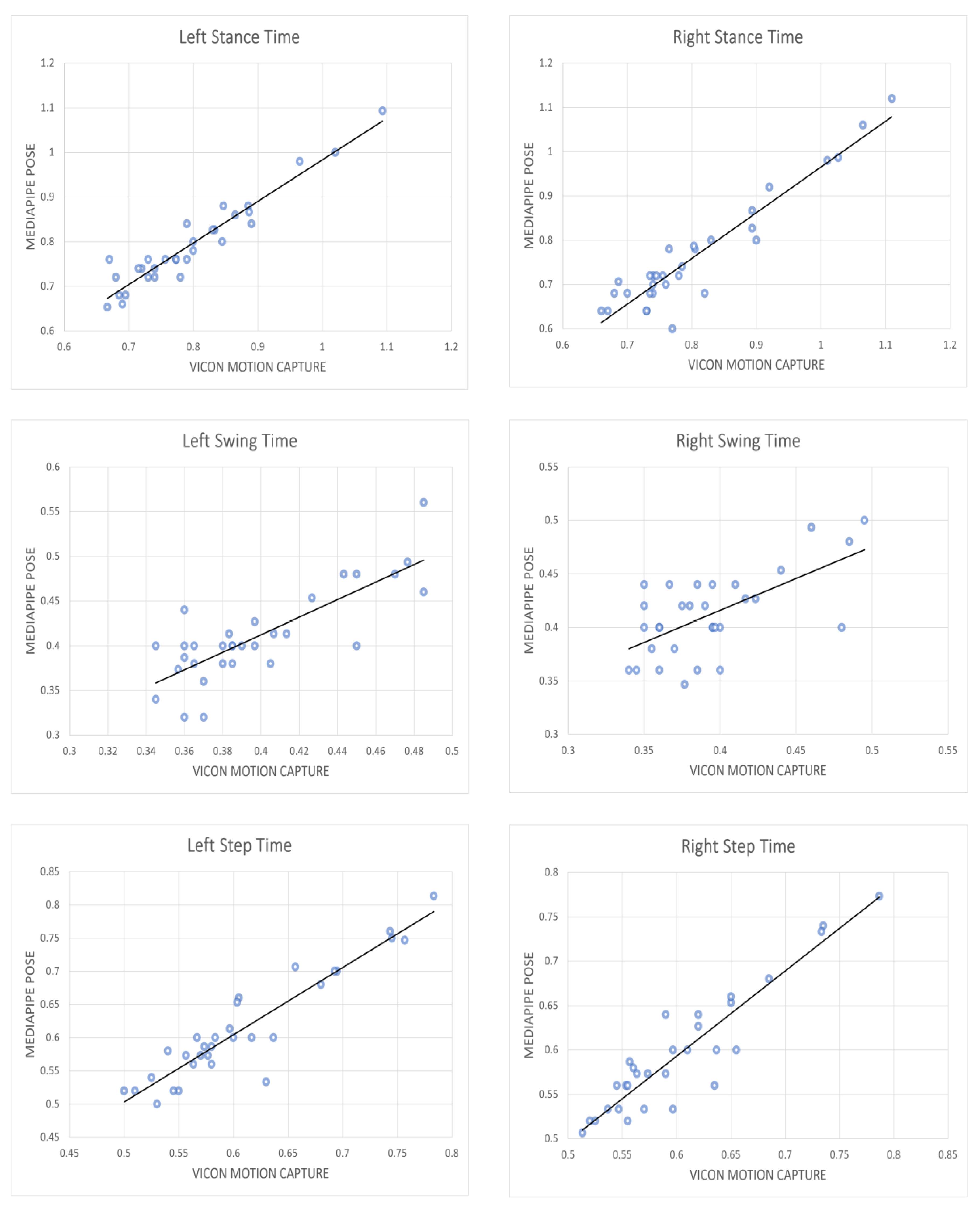

3.2. Statistical Analysis of Temporal Gait Parameters for All Gait Cycles

3.3. Statistical Analysis of Temporal Gait Parameters for the Means of Each Healthy Individual

4. Discussion

4.1. Performance of MediaPipe Pose

4.2. Temporal Gait Parameters Assessment

4.3. Qualitative Comparison with Other Works

In the columns from Stance Time to Stride Time, each spatiotemporal gait parameter is reported as relative error; ✕ marks a parameter that was not reported.

| Method | Video Resolution | Video Frame Rate | Stance Time (s) | Swing Time (s) | Step Time (s) | Double Support Time (s) | Step Length (m) | Step Width (m) | Stride Length (m) | Stride Time (s) | Year | Ref. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Azure Kinect | 3840 × 2160 px | 30 fps | ✕ | ✕ | ✕ | ✕ | −0.03 | −0.001 | −0.04 | 0.01 | 2022 | [36] |
| Azure Kinect | 3840 × 2160 px | 30 fps | ✕ | ✕ | 0.000 | ✕ | 0.00 | 0.040 | ✕ | 0.00 | 2020 | [37] |
| Kinect v2 | 1920 × 1080 px | 30 fps | ✕ | ✕ | 0.000 | ✕ | −0.05 | −0.070 | ✕ | 0.00 | 2020 | [37] |
| Our Work | 960 × 540 px | 25 fps | 0.02 | −0.02 | 0.001 | 0.02 | ✕ | ✕ | ✕ | ✕ | | |

4.4. Implications of the Proposed Approach in Clinical Settings

5. Conclusions

6. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Seckiner, D.; Mallett, X.; Maynard, P.; Meuwly, D.; Roux, C. Forensic gait analysis—Morphometric assessment from surveillance footage. Forensic Sci. Int. 2019, 296, 57–66.

2. Whittle, M.W. Clinical gait analysis: A review. Hum. Mov. Sci. 1996, 15, 369–387.

3. Peel, N.; Bartlett, H.; McClure, R. Healthy ageing: How is it defined and measured? Australas. J. Ageing 2004, 23, 115–119.

4. Inai, T.; Takabayashi, T.; Edama, M.; Kubo, M. Evaluation of factors that affect hip moment impulse during gait: A systematic review. Gait Posture 2018, 61, 488–492.

5. Nguyen, D.-P.; Phan, C.-B.; Koo, S. Predicting body movements for person identification under different walking conditions. Forensic Sci. Int. 2018, 290, 303–309.

6. Marin, J.; Marin, J.J.; Blanco, T.; de la Torre, J.; Salcedo, I.; Martitegui, E. Is My Patient Improving? Individualized Gait Analysis in Rehabilitation. Appl. Sci. 2020, 10, 8558.

7. Qiu, S.; Liu, L.; Zhao, H.; Wang, Z.; Jiang, Y. MEMS Inertial Sensors Based Gait Analysis for Rehabilitation Assessment via Multi-Sensor Fusion. Micromachines 2018, 9, 442.

8. Marín, J.; Blanco, T.; Marín, J.J.; Moreno, A.; Martitegui, E.; Aragüés, J.C. Integrating a gait analysis test in hospital rehabilitation: A service design approach. PLoS ONE 2019, 14, e0224409.

9. Akhtaruzzaman, M.; Shafie, A.A.; Khan, M.R. Gait analysis: Systems, technologies, and importance. J. Mech. Med. Biol. 2016, 16, 1630003.

10. van Mastrigt, N.M.; Celie, K.; Mieremet, A.L.; Ruifrok, A.C.C.; Geradts, Z. Critical review of the use and scientific basis of forensic gait analysis. Forensic Sci. Res. 2018, 3, 183–193.

11. Lord, S.E.; Halligan, P.W.; Wade, D.T. Visual gait analysis: The development of a clinical assessment and scale. Clin. Rehabil. 1998, 12, 107–119.

12. Raghu, S.L.; Conners, R.T.; Kang, C.; Landrum, D.B.; Whitehead, P.N. Kinematic analysis of gait in an underwater treadmill using land-based Vicon T 40s motion capture cameras arranged externally. J. Biomech. 2021, 124, 110553.

13. Goldfarb, N.; Lewis, A.; Tacescu, A.; Fischer, G.S. Open source Vicon Toolkit for motion capture and Gait Analysis. Comput. Methods Programs Biomed. 2021, 212, 106414.

14. Della Valle, G.; Caterino, C.; Aragosa, F.; Micieli, F.; Costanza, D.; Di Palma, C.; Piscitelli, A.; Fatone, G. Outcome after Modified Maquet Procedure in dogs with unilateral cranial cruciate ligament rupture: Evaluation of recovery limb function by use of force plate gait analysis. PLoS ONE 2021, 16, e0256011.

15. Papagiannis, G.I.; Triantafyllou, A.I.; Roumpelakis, I.M.; Zampeli, F.; Garyfallia Eleni, P.; Koulouvaris, P.; Papadopoulos, E.C.; Papagelopoulos, P.J.; Babis, G.C. Methodology of surface electromyography in gait analysis: Review of the literature. J. Med. Eng. Technol. 2019, 43, 59–65.

16. Park, S.W.; Das, P.S.; Park, J.Y. Development of wearable and flexible insole type capacitive pressure sensor for continuous gait signal analysis. Org. Electron. 2018, 53, 213–220.

17. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

18. Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. BlazePose: On-device Real-time Body Pose tracking. arXiv 2020, arXiv:2006.10204.

19. Maji, D.; Nagori, S.; Mathew, M.; Poddar, D. YOLO-Pose: Enhancing YOLO for Multi Person Pose Estimation Using Object Keypoint Similarity Loss. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2636–2645.

20. Abd Shattar, N.; Gan, K.B.; Abd Aziz, N.S. Experimental Setup for Markerless Motion Capture and Landmarks Detection using OpenPose During Dynamic Gait Index Measurement. In Proceedings of the 2021 7th International Conference on Space Science and Communication (IconSpace), Selangor, Malaysia, 23–24 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 286–289.

21. Latorre, J.; Colomer, C.; Alcañiz, M.; Llorens, R. Gait analysis with the Kinect v2: Normative study with healthy individuals and comprehensive study of its sensitivity, validity, and reliability in individuals with stroke. J. Neuroeng. Rehabil. 2019, 16, 97.

22. Ma, Y.; Mithraratne, K.; Wilson, N.; Wang, X.; Ma, Y.; Zhang, Y. The Validity and Reliability of a Kinect v2-Based Gait Analysis System for Children with Cerebral Palsy. Sensors 2019, 19, 1660.

23. Ma, Y.; Mithraratne, K.; Wilson, N.; Zhang, Y.; Wang, X. Kinect V2-Based Gait Analysis for Children with Cerebral Palsy: Validity and Reliability of Spatial Margin of Stability and Spatiotemporal Variables. Sensors 2021, 21, 2104.

24. Tanaka, R.; Takimoto, H.; Yamasaki, T.; Higashi, A. Validity of time series kinematical data as measured by a markerless motion capture system on a flatland for gait assessment. J. Biomech. 2018, 71, 281–285.

25. Siena, F.L.; Byrom, B.; Watts, P.; Breedon, P. Utilising the Intel RealSense Camera for Measuring Health Outcomes in Clinical Research. J. Med. Syst. 2018, 42, 53.

26. Viswakumar, A.; Rajagopalan, V.; Ray, T.; Parimi, C. Human Gait Analysis Using OpenPose. In Proceedings of the 2019 Fifth International Conference on Image Information Processing (ICIIP), Shimla, India, 15–17 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 310–314.

27. Wagner, J.; Szymański, M.; Błażkiewicz, M.; Kaczmarczyk, K. Methods for Spatiotemporal Analysis of Human Gait Based on Data from Depth Sensors. Sensors 2023, 23, 1218.

28. D’Antonio, E.; Taborri, J.; Palermo, E.; Rossi, S.; Patane, F. A markerless system for gait analysis based on OpenPose library. In Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Dubrovnik, Croatia, 25–28 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

29. Stenum, J.; Rossi, C.; Roemmich, R.T. Two-dimensional video-based analysis of human gait using pose estimation. PLoS Comput. Biol. 2021, 17, e1008935.

30. Viswakumar, A.; Rajagopalan, V.; Ray, T.; Gottipati, P.; Parimi, C. Development of a Robust, Simple, and Affordable Human Gait Analysis System Using Bottom-Up Pose Estimation with a Smartphone Camera. Front. Physiol. 2022, 12, 784865.

31. Tony Hii, C.S.; Gan, K.B.; Zainal, N.; Ibrahim, N.M.; Md Rani, S.A.; Shattar, N.A. Marker Free Gait Analysis using Pose Estimation Model. In Proceedings of the 2022 IEEE 20th Student Conference on Research and Development (SCOReD), Bangi, Malaysia, 8–9 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 109–113.

32. Kwolek, B.; Michalczuk, A.; Krzeszowski, T.; Switonski, A.; Josinski, H.; Wojciechowski, K. Calibrated and synchronized multi-view video and motion capture dataset for evaluation of gait recognition. Multimed. Tools Appl. 2019, 78, 32437–32465.

33. Pirker, W.; Katzenschlager, R. Gait disorders in adults and the elderly: A clinical guide. Wien. Klin. Wochenschr. 2017, 129, 81–95.

34. Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

35. Buckley, E.; Mazzà, C.; McNeill, A. A systematic review of the gait characteristics associated with Cerebellar Ataxia. Gait Posture 2018, 60, 154–163.

36. Guess, T.M.; Bliss, R.; Hall, J.B.; Kiselica, A.M. Comparison of Azure Kinect overground gait spatiotemporal parameters to marker based optical motion capture. Gait Posture 2022, 96, 130–136.

37. Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the Pose Tracking Performance of the Azure Kinect and Kinect v2 for Gait Analysis in Comparison with a Gold Standard: A Pilot Study. Sensors 2020, 20, 5104.

38. Vairis, A.; Boyak, J.; Brown, S.; Bess, M.; Bae, K.H.; Petousis, M. Gait Analysis Using Video for Disabled People in Marginalized Communities. In Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer International Publishing: Cham, Switzerland, 2021; Volume 12616, pp. 145–153. ISBN 9783030684518.

39. Cimorelli, A.; Patel, A.; Karakostas, T.; Cotton, R.J. Portable in-clinic video-based gait analysis: Validation study on prosthetic users. medRxiv 2022.

40. Gouelle, A.; Mégrot, F. Interpreting Spatiotemporal Parameters, Symmetry, and Variability in Clinical Gait Analysis. In Handbook of Human Motion; Müller, B., Wolf, S.I., Brueggemann, G.-P., Deng, Z., McIntosh, A., Miller, F., Selbie, W.S., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 1–20. ISBN 978-3-319-30808-1.

41. Haddas, R.; Patel, S.; Arakal, R.; Boah, A.; Belanger, T.; Ju, K.L. Spine and lower extremity kinematics during gait in patients with cervical spondylotic myelopathy. Spine J. 2018, 18, 1645–1652.

| Gait Event | Leg | N | TP | FP | Mean ± SD (V − MP) | Mean ± SD (\|V − MP\|) | Range (V − MP) |
|---|---|---|---|---|---|---|---|
| Heel strike time | L | 106 | 103 | 1 | −0.004 ± 0.03 | 0.02 ± 0.02 | [−0.13, 0.09] |
| | R | 102 | 101 | 0 | 0.02 ± 0.05 | 0.03 ± 0.04 | [−0.17, 0.20] |
| Toe-off time | L | 102 | 100 | 0 | −0.004 ± 0.03 | 0.02 ± 0.03 | [−0.10, 0.18] |
| | R | 105 | 100 | 1 | 0.005 ± 0.04 | 0.02 ± 0.03 | [−0.11, 0.17] |
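The TP/FP counts and V − MP statistics in the table above require pairing each MediaPipe (MP) event with a Vicon (V) reference event. The matching rule is not described in this section, so the sketch below assumes a simple greedy nearest-neighbour pairing within a hypothetical tolerance window (`tolerance_s`), counts unpaired MP events as false positives, and reports sample standard deviations (ddof = 1). All of these, and the function names, are illustrative choices rather than the authors' procedure.

```python
import numpy as np


def match_events(ref_times, est_times, tolerance_s=0.2):
    """Greedily pair each reference (V) event with the nearest unused estimate (MP).

    Returns (differences, n_true_positive, n_false_positive): differences are
    V - MP for matched pairs; unmatched estimates count as false positives.
    tolerance_s is a hypothetical matching window.
    """
    ref = np.sort(np.asarray(ref_times, dtype=float))
    est = np.sort(np.asarray(est_times, dtype=float))
    used = np.zeros(est.size, dtype=bool)
    diffs = []
    for r in ref:
        free = np.flatnonzero(~used)
        if free.size == 0:
            break
        j = free[np.argmin(np.abs(est[free] - r))]  # nearest unused estimate
        if abs(est[j] - r) <= tolerance_s:
            used[j] = True
            diffs.append(r - est[j])
    return np.array(diffs), len(diffs), int((~used).sum())


def summarize_differences(diffs):
    """Mean and sample SD of signed and absolute differences, plus the signed range."""
    d = np.asarray(diffs, dtype=float)
    a = np.abs(d)
    return {
        "mean": d.mean(), "sd": d.std(ddof=1),
        "abs_mean": a.mean(), "abs_sd": a.std(ddof=1),
        "range": (d.min(), d.max()),
    }
```

Greedy pairing is simple but order-dependent; an optimal one-to-one assignment (for example, minimizing total absolute time difference) could pair events differently when detections are closely spaced.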

| Temporal Gait Parameter | Leg | N | Mean ± SD (V) | Mean ± SD (MP) | Mean ± SD (V − MP) | Mean ± SD (\|V − MP\|) |
|---|---|---|---|---|---|---|
| Stance time | L | 71 | 0.81 ± 0.11 | 0.80 ± 0.11 | 0.01 ± 0.04 | 0.03 ± 0.02 |
| | R | 65 | 0.81 ± 0.12 | 0.77 ± 0.13 | 0.04 ± 0.06 | 0.05 ± 0.04 |
| Swing time | L | 69 | 0.40 ± 0.04 | 0.41 ± 0.06 | −0.01 ± 0.05 | 0.03 ± 0.04 |
| | R | 65 | 0.39 ± 0.04 | 0.41 ± 0.05 | −0.02 ± 0.05 | 0.04 ± 0.03 |
| Step time | L | 88 | 0.62 ± 0.09 | 0.62 ± 0.10 | −0.005 ± 0.05 | 0.04 ± 0.04 |
| | R | 82 | 0.60 ± 0.07 | 0.59 ± 0.08 | 0.01 ± 0.04 | 0.03 ± 0.03 |
| Double support time | L2R | 71 | 0.20 ± 0.04 | 0.20 ± 0.07 | 0.001 ± 0.05 | 0.03 ± 0.04 |
| | R2L | 62 | 0.20 ± 0.04 | 0.16 ± 0.05 | 0.04 ± 0.04 | 0.04 ± 0.03 |

| Temporal Gait Parameter | Leg | Significance (2-Tailed) | Pearson Correlation, r | ICC(2,1) |
|---|---|---|---|---|
| Stance time | L | 0.785 | 0.945 | 0.945 |
| | R | 0.075 | 0.900 | 0.857 |
| Swing time | L | 0.199 | 0.690 | 0.624 |
| | R | 0.020 | 0.522 | 0.469 |
| Step time | L | 0.737 | 0.839 | 0.832 |
| | R | 0.579 | 0.853 | 0.846 |
| Double support time | L2R | 0.891 | 0.625 | 0.552 |
| | R2L | <0.001 | 0.724 | 0.510 |
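The three agreement measures in the table above can be computed along the following lines. ICC(2,1) (two-way random effects, absolute agreement, single measurement; see Koo and Li) is derived from two-way ANOVA mean squares. The two-tailed significance is assumed here to come from a paired t-test, which this section does not state. Because ICC(2,1) penalizes a systematic offset between V (Vicon) and MP (MediaPipe) while Pearson's r does not, a row such as R2L double support (mean V − MP of 0.04) can show r = 0.724 but ICC(2,1) = 0.510. The function names `icc_2_1` and `agreement` are illustrative.

```python
import numpy as np
from scipy.stats import pearsonr, ttest_rel


def icc_2_1(ratings):
    """ICC(2,1) for an (n_subjects, k_raters) array: two-way random, absolute agreement."""
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)  # between-subject variation
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)  # between-method (systematic bias)
    ss_error = np.sum((x - grand) ** 2) - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def agreement(vicon, mediapipe):
    """Paired t-test p-value (assumed test), Pearson r, and ICC(2,1) between two methods."""
    v = np.asarray(vicon, dtype=float)
    m = np.asarray(mediapipe, dtype=float)
    r, _ = pearsonr(v, m)
    return {
        "p_two_tailed": ttest_rel(v, m).pvalue,
        "pearson_r": r,
        "icc_2_1": icc_2_1(np.column_stack([v, m])),
    }
```

Adding a constant to one method leaves r unchanged but lowers ICC(2,1) and the paired t-test p-value, which is the pattern visible in the R2L double support row.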

| Temporal Gait Parameter | Leg | N | Mean ± SD (V) | Mean ± SD (MP) | Mean ± SD (V − MP) | Mean ± SD (\|V − MP\|) |
|---|---|---|---|---|---|---|
| Stance time | L | 31 | 0.80 ± 0.10 | 0.79 ± 0.10 | 0.003 ± 0.03 | 0.02 ± 0.02 |
| | R | 31 | 0.81 ± 0.12 | 0.76 ± 0.13 | 0.04 ± 0.04 | 0.04 ± 0.04 |
| Swing time | L | 31 | 0.40 ± 0.04 | 0.41 ± 0.05 | −0.01 ± 0.03 | 0.03 ± 0.02 |
| | R | 31 | 0.39 ± 0.04 | 0.41 ± 0.04 | −0.02 ± 0.03 | 0.03 ± 0.02 |
| Step time | L | 31 | 0.61 ± 0.08 | 0.61 ± 0.08 | −0.005 ± 0.03 | 0.02 ± 0.02 |
| | R | 31 | 0.60 ± 0.07 | 0.59 ± 0.07 | 0.01 ± 0.03 | 0.02 ± 0.02 |
| Double support time | L2R | 31 | 0.20 ± 0.04 | 0.20 ± 0.05 | 0.001 ± 0.03 | 0.02 ± 0.02 |
| | R2L | 31 | 0.20 ± 0.03 | 0.16 ± 0.05 | 0.04 ± 0.03 | 0.04 ± 0.03 |

| Temporal Gait Parameter | Leg | Significance (2-Tailed) | Pearson Correlation, r | ICC(2,1) |
|---|---|---|---|---|
| Stance time | L | 0.922 | 0.955 | 0.956 |
| | R | 0.196 | 0.944 | 0.893 |
| Swing time | L | 0.306 | 0.802 | 0.765 |
| | R | 0.076 | 0.635 | 0.579 |
| Step time | L | 0.824 | 0.931 | 0.928 |
| | R | 0.692 | 0.926 | 0.923 |
| Double support time | L2R | 0.902 | 0.804 | 0.779 |
| | R2L | 0.001 | 0.805 | 0.551 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hii, C.S.T.; Gan, K.B.; Zainal, N.; Mohamed Ibrahim, N.; Azmin, S.; Mat Desa, S.H.; van de Warrenburg, B.; You, H.W. Automated Gait Analysis Based on a Marker-Free Pose Estimation Model. Sensors 2023, 23, 6489. https://doi.org/10.3390/s23146489
