Collaborative Perception—The Missing Piece in Realizing Fully Autonomous Driving

Abstract

1. Introduction

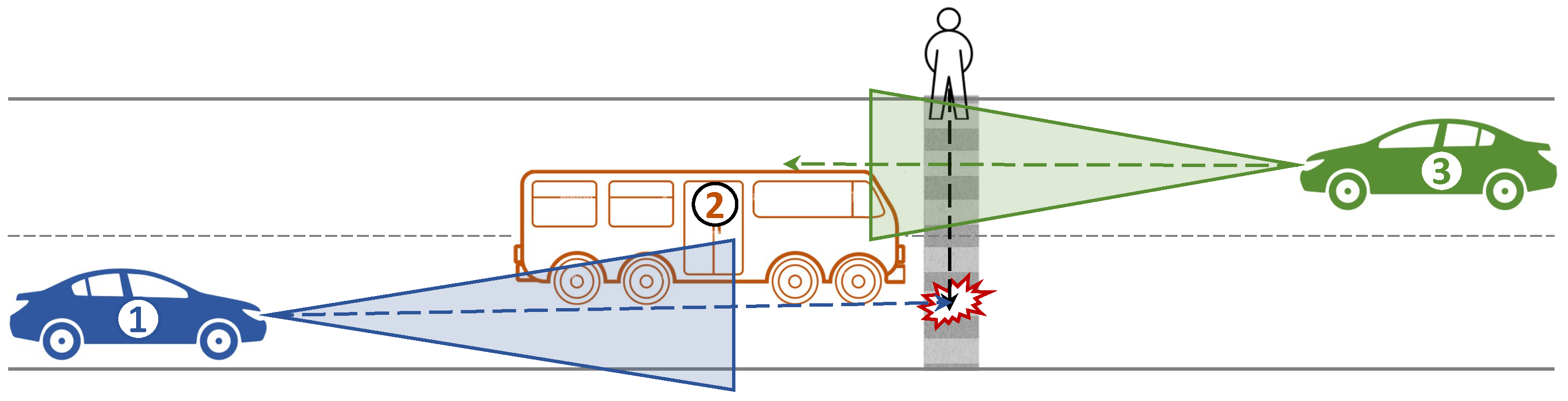

2. Transition from Classical Perception to Collaborative Perception

2.1. Perception Creation at the Vehicle Level

2.2. Perception Creation at the Tower Level

- Integration of multiple sensors: the need for integrating information from cameras, LiDAR, and radar sensors to create a comprehensive view of the environment;

- Collaborative perception: the need for collaboration among autonomous vehicles and eRSUs for creating an extended perception of the environment;

- Design and development of sophisticated algorithms: the need for developing algorithms that can effectively process the large amounts of data generated by the sensors, create a collaborative perception of the environment, and communicate the extended perception with AVs;

- Ensuring safe and efficient operation: the importance of having accurate and collaborative perceptions of the environment to ensure the safe and efficient operations of autonomous vehicles; and

- Addressing potential challenges: the potential challenges that may arise in the development of collaborative perception through the eRSUs and the importance of addressing these challenges to make the extended perception available to all relevant AVs.
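
One small step behind the "extended perception" named in the list above is geometric: objects reported by an eRSU or a neighboring AV are expressed in the sender's own frame and have to be re-expressed in the receiving vehicle's frame before they can be combined with on-board detections. The following is a minimal 2D sketch of that step, not the method of any particular system. The names `Pose2D`, `sender_to_map`, `map_to_receiver`, and `extend_perception` are hypothetical, and it assumes both poses are known in a shared map frame, with x pointing forward and y to the left in each local frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical pose of a sender or receiver in a shared map frame."""
    x: float    # metres
    y: float    # metres
    yaw: float  # radians, counter-clockwise from the map x-axis


def sender_to_map(pose: Pose2D, px: float, py: float) -> tuple[float, float]:
    """Rotate by the sender's yaw, then translate by its position."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return pose.x + c * px - s * py, pose.y + s * px + c * py


def map_to_receiver(pose: Pose2D, mx: float, my: float) -> tuple[float, float]:
    """Inverse transform: subtract the position, then rotate by -yaw."""
    dx, dy = mx - pose.x, my - pose.y
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return c * dx + s * dy, -s * dx + c * dy


def extend_perception(own_objects, shared_objects, sender_pose, receiver_pose):
    """Append the sender's objects, re-expressed in the receiver's frame,
    to the receiver's own object list (no de-duplication in this sketch)."""
    extended = list(own_objects)
    for px, py in shared_objects:
        mx, my = sender_to_map(sender_pose, px, py)
        extended.append(map_to_receiver(receiver_pose, mx, my))
    return extended
```

A real deployment would also have to account for pose uncertainty, timestamps, and communication delay, which this sketch ignores.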

3. Collaborative Perception Sharing

3.1. V2X Communication Technologies

3.1.1. Dedicated Short-Range Communication

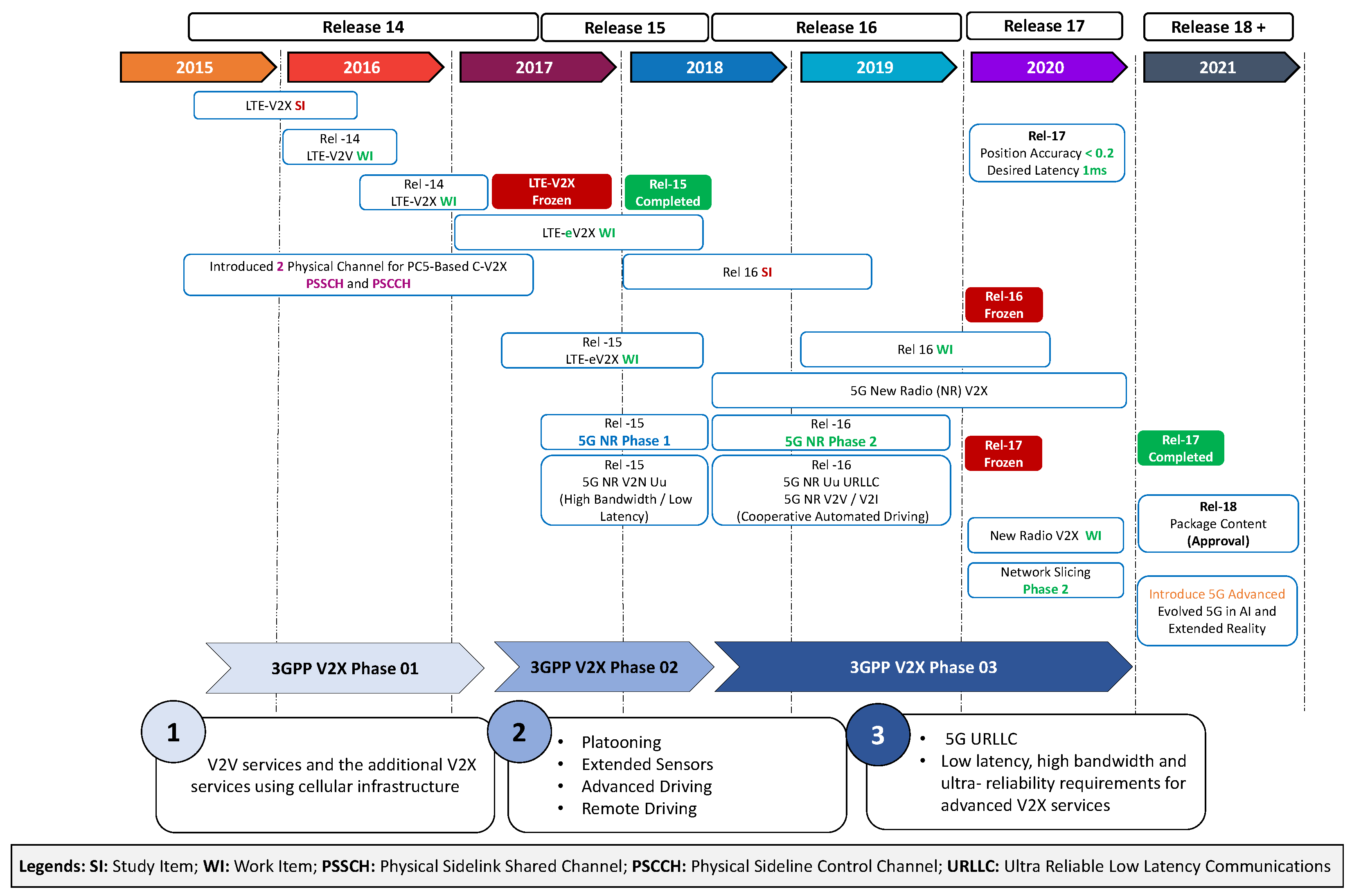

3.1.2. C-V2X Communication

3.2. Collaborative Perception Message-Sharing Models

3.2.1. Early Collaboration

3.2.2. Intermediate Collaboration

3.2.3. Late Collaboration

4. Challenges and Future Directions

4.1. Data Governance

4.2. Towards Model-Agnostic Perception Frameworks

4.3. Heterogeneous and Dynamic Collaboration

4.4. Value of Information Aware Collaborative Perception

4.5. Intelligent Context-Aware Fusion

4.6. Towards Multi-Modality Large-Scale Open Datasets

4.7. Information Redundancy Mitigation in V2X Collaborative Perception

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADAS | Advanced Driving Assistance System |
| AD | Autonomous Driving |
| AV | Autonomous Vehicle |
| CAD | Collaborative Autonomous Driving |
| CAV | Connected and Autonomous Vehicle |
| CP | Collaborative Perception |
| CPM | Cooperative Perception Message |
| CPS | Collective Perception Service |
| CSMA | Carrier Sense Multiple Access |
| DMS | Dynamic Message Signs |
| DRL | Deep Reinforcement Learning |
| DSRC | Dedicated Short-Range Communication |
| eRSU | Evolved Roadside Units |
| ETSI | European Telecommunications Standards Institute |
| ITS | Intelligent Transport System |
| POC | Perceived Object Container |
| OEM | Original Equipment Manufacturers |
| OFDM | Orthogonal Frequency Division Multiplexing |
| SAE | Society of Automotive Engineers |
| URLLC | Ultra-Reliable, Low-Latency Communications |
| V2I | Vehicle to Infrastructure |
| V2P | Vehicle to Pedestrian |
| V2V | Vehicle to Vehicle |
| V2X | Vehicle to Everything |

| Parameters | Camera | LiDAR | Radar | Ultrasonic | Fusion and V2X |
|---|---|---|---|---|---|
| Field of View | A | A | G | M | G |
| Edge Detection | G | G | W | W | G |
| Object Detection | A | A | A | A | G |
| Distance Estimation | A | G | G | M | G |
| Object Classification | G | M | A | M | G |
| Lane Tracking | G | W | W | W | G |
| Darkness/Light Disturbance | A | G | G | G | G |
| Visibility Range | A | A | G | M | G |
| Adverse Weather | M | M | A | M | G |
| Angular Resolution | G | M | A | W | G |
| Velocity Resolution | M | G | A | W | G |
| Range | G | G | G | W | G |

| Feature/Requirement | DSRC IEEE 802.11p | LTE-V2X | C-V2X | NR-V2X |
|---|---|---|---|---|
| Technology | Wi-Fi-based | LTE-based | LTE- and 5G-based | 5G-based |
| Communication Range | Up to a few hundred meters | Up to several kilometers | Up to several kilometers | Up to several kilometers |
| Frequency Band | 5.9 GHz | Licensed cellular bands | Licensed cellular bands | Licensed cellular bands |
| Communication Modes | V2V, V2I, V2P | V2V, V2I, V2P | V2V, V2I, V2P | V2V, V2I, V2P |
| Direct Communication | Yes | Yes | Yes | Yes |
| Network Connectivity | No | Yes | Yes | Yes |
| Latency | Low to medium | Medium to high | Medium to high | Low to medium |
| Data Rate | Medium to high | High | High | High |
| Modulation | OFDM | SC-FDMA | SC-FDMA | 256 QAM |
| Security & Privacy | Basic | Enhanced | Enhanced | Enhanced |
| Spectrum Efficiency | Moderate | High | High | High |
| Scalability | Limited scalability | High | High | High |
| Retransmission | No | Yes | Yes | Yes |

| Collaboration Type | Approach | Advantages | Disadvantages | Decision Challenges |
|---|---|---|---|---|
| Early | Deep Learning | 1. A comprehensive understanding of the environment is formed by sharing and collecting raw data. 2. A complete and accurate understanding of the environment enables AVs to make better decisions. | 1. Low tolerance for transmission delay and noise, and communication bandwidth is a constraint. 2. Implementing data-level fusion can be expensive as it requires significant computational resources. | These models face decision problems, especially in dynamic environments: 1. Who decides which vehicle provides which data? 2. Who decides which neighboring vehicles to receive data from? 3. How to intelligently select the right set of sensors (camera, LiDAR, radar) on neighboring vehicles to receive data from. 4. How to decide on a standardized data exchange and fusion process. |
| Intermediate | Deep Learning | 1. High tolerance for noise, delay, and variations in node and sensor models. 2. Reduces the computational demands on individual vehicles. 3. Reduces the complexity of the data and improves the overall perception. | 1. Needs training data, and finding a systematic approach to model design is difficult. 2. Features extracted from different sources may be inconsistent, making it difficult to combine them effectively. | |
| Late | Traditional | 1. Simple to develop and implement in real-world systems. 2. Better handling of data heterogeneity. | 1. Significantly constrained by incorrect perceptual outcomes or disparities in sources. 2. Can be computationally intensive, requiring significant processing power. 3. Can increase the latency of the system, potentially leading to delays in decision-making. | |
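
To make the "Late" row of the table concrete, the sketch below merges object lists that each agent has already produced on its own, which is why this style is simple to implement and also why it inherits any errors in the individual outputs. It is an illustrative sketch under stated assumptions, not the fusion rule of any cited system. The `Detection` class, the `late_fuse` function, and the 1.5 m `match_radius` are hypothetical; detections are assumed to be in a common frame already, with confidences in (0, 1].

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical object-level output shared in late collaboration."""
    x: float           # metres, common frame
    y: float           # metres, common frame
    confidence: float  # assumed to lie in (0, 1]
    label: str


def late_fuse(detection_lists, match_radius=1.5):
    """Greedily merge same-class detections that lie within match_radius."""
    # Visit the most confident detections first so they seed the clusters.
    candidates = sorted(
        (d for dets in detection_lists for d in dets),
        key=lambda d: d.confidence,
        reverse=True,
    )
    fused = []
    for det in candidates:
        for i, kept in enumerate(fused):
            dist_sq = (kept.x - det.x) ** 2 + (kept.y - det.y) ** 2
            if kept.label == det.label and dist_sq <= match_radius ** 2:
                # Confidence-weighted average of the two positions;
                # the merged detection keeps the higher confidence.
                w = kept.confidence + det.confidence
                fused[i] = Detection(
                    x=(kept.x * kept.confidence + det.x * det.confidence) / w,
                    y=(kept.y * kept.confidence + det.y * det.confidence) / w,
                    confidence=max(kept.confidence, det.confidence),
                    label=kept.label,
                )
                break
        else:
            # No existing cluster matched, so this is a new object.
            fused.append(det)
    return fused
```

Early and intermediate collaboration would instead exchange raw sensor data or learned features, so the merging step would sit inside the perception model rather than after it.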

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Malik, S.; Khan, M.J.; Khan, M.A.; El-Sayed, H. Collaborative Perception—The Missing Piece in Realizing Fully Autonomous Driving. Sensors 2023, 23, 7854. https://doi.org/10.3390/s23187854