Beyond Human Detection: A Benchmark for Detecting Common Human Posture

Abstract

1. Introduction

- We formulate a novel task, common human posture detection. It supports a variety of applications that need information about human posture but where traditional pose estimation is difficult, and it may also draw attention to more informative object detection methods that go beyond mere identification and localization.

- We introduce the CHP dataset, the first benchmark dedicated to common human posture detection.

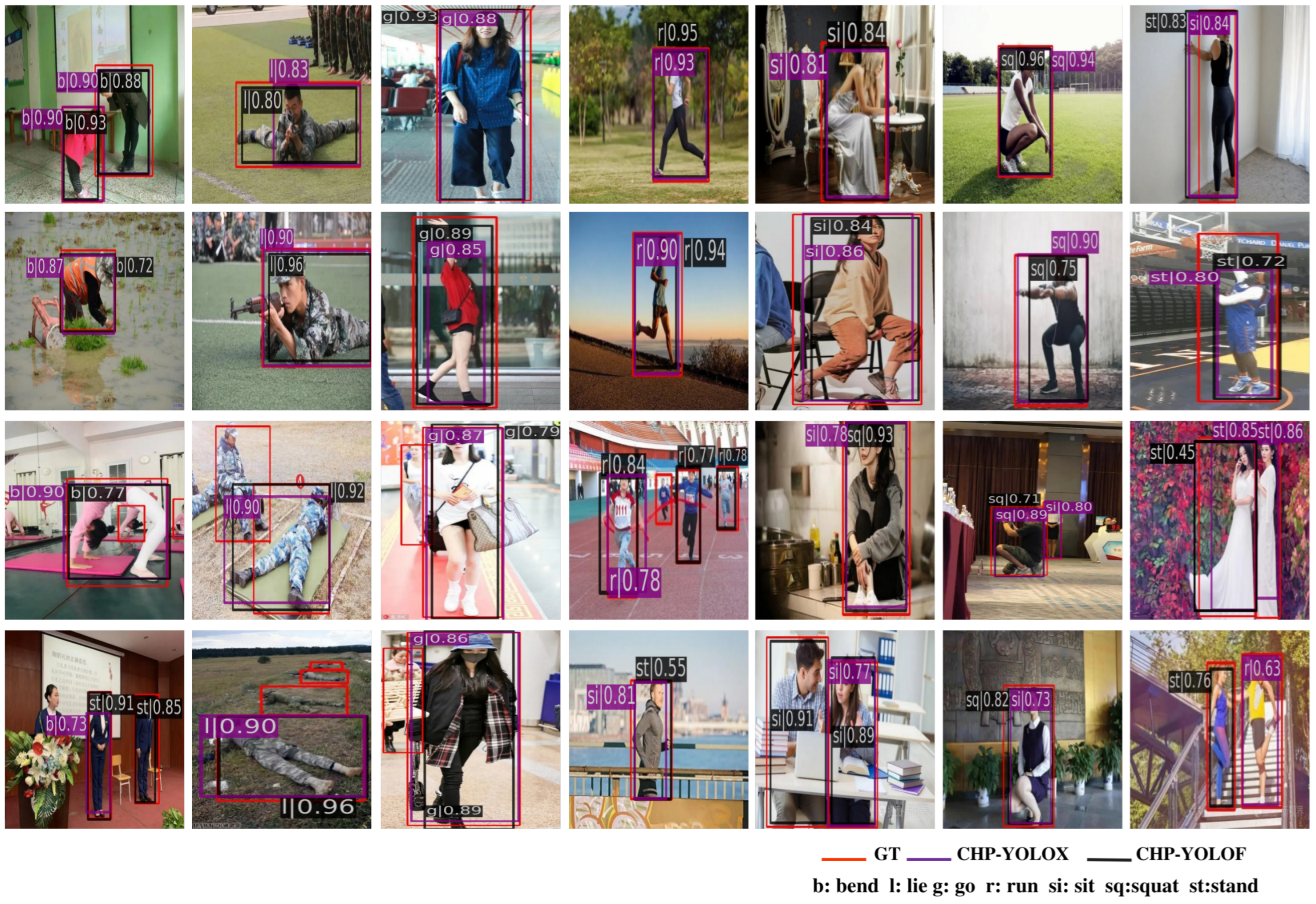

- We develop two baseline detectors, CHP-YOLOF and CHP-YOLOX, built on two identity-preserved human posture detectors, to support and stimulate further research on CHP.

2. Related Work

2.1. Traditional Human Detection

2.2. Deep Learning Methods for Human Detection

2.3. Human Pose Estimation

3. Detection Benchmark for Human Posture

3.1. Image Collection

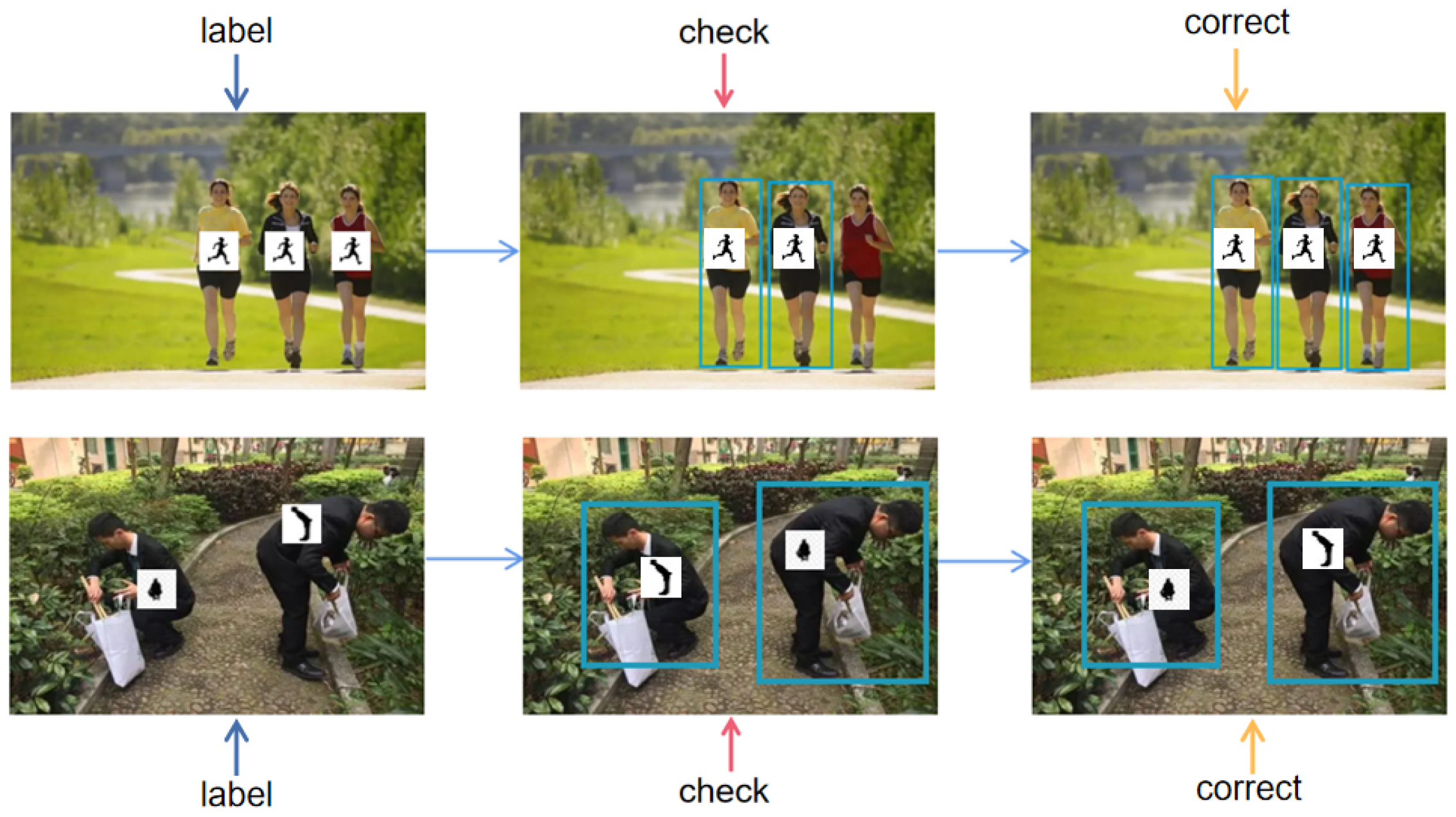

3.2. Annotation

- Category: human.

- Common human posture: one of ‘bending’, ‘lying’, ‘going’, ‘running’, ‘sitting’, ‘squatting’, and ‘standing’.

- Bounding box: an axis-aligned bounding box surrounding the visible extent of the human in the image.
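Each annotated person therefore carries three pieces of information: a category, one of seven posture labels, and an axis-aligned box. The sketch below shows one way to hold and validate such a record in Python. The class name `CHPAnnotation`, its field names and the `(x_min, y_min, x_max, y_max)` pixel convention are hypothetical choices for illustration; the released dataset's actual file format is not described in this section.

```python
from dataclasses import dataclass

# The seven posture labels listed in the annotation protocol, in that order.
POSTURES = ("bending", "lying", "going", "running", "sitting", "squatting", "standing")


@dataclass(frozen=True)
class CHPAnnotation:
    """One annotated person (hypothetical schema, not the official format).

    Box coordinates are assumed to be pixels in (x_min, y_min, x_max, y_max) order.
    """
    category: str
    posture: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self) -> None:
        # Reject anything outside the annotation protocol.
        if self.category != "human":
            raise ValueError(f"unexpected category: {self.category!r}")
        if self.posture not in POSTURES:
            raise ValueError(f"unknown posture: {self.posture!r}")
        if not (self.x_min < self.x_max and self.y_min < self.y_max):
            raise ValueError("box must have positive width and height")

    @property
    def posture_id(self) -> int:
        """Zero-based class index of the posture label."""
        return POSTURES.index(self.posture)


ann = CHPAnnotation("human", "sitting", 34.0, 50.0, 120.0, 210.0)
print(ann.posture_id)  # 4
```

Storing the posture as a string and deriving the index keeps the record readable while still giving a detector the integer class id it trains on.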

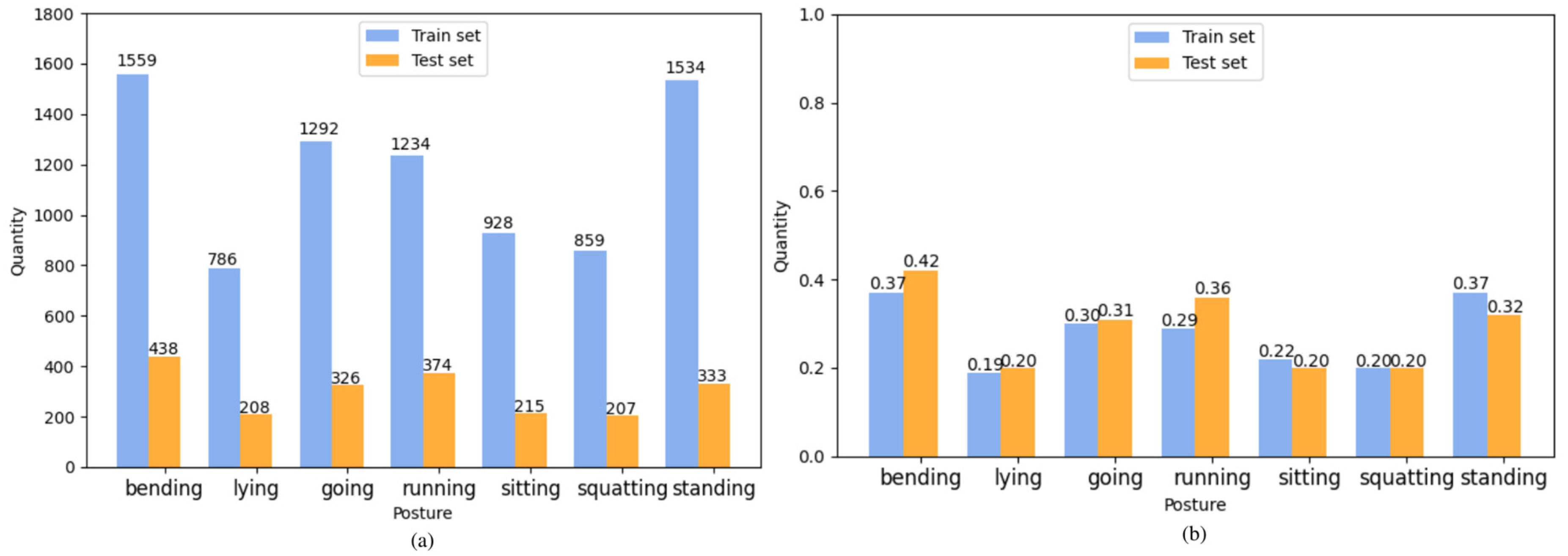

3.3. Dataset Statistics

4. Baseline Detectors for Detecting Common Human Postures

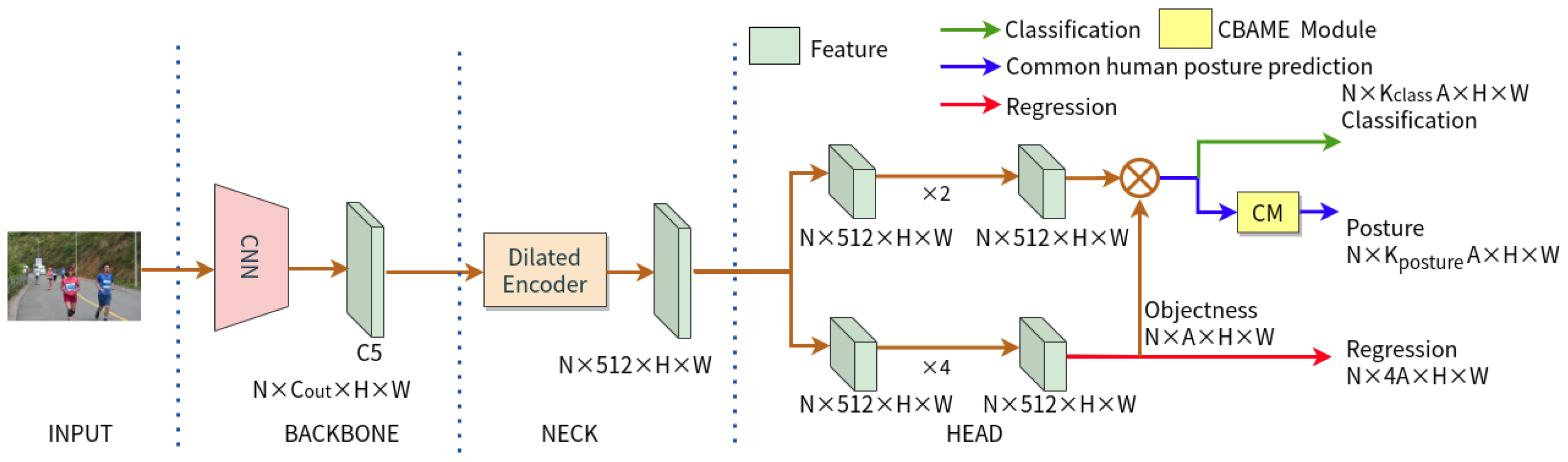

4.1. CHP-YOLOF

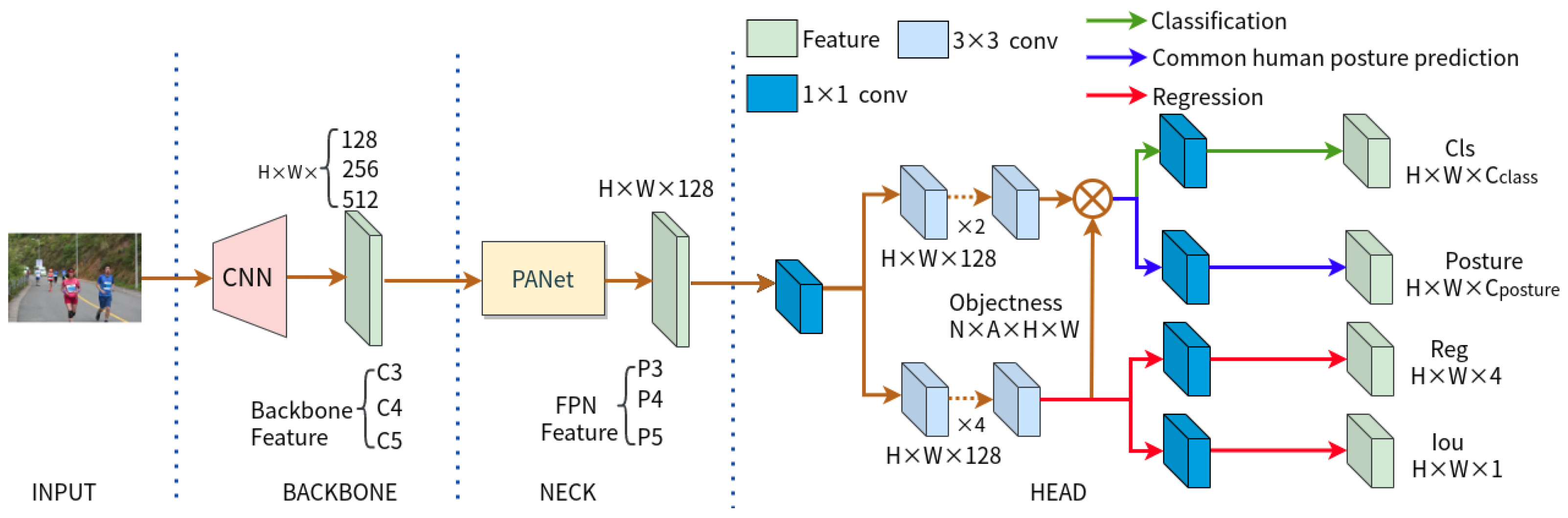

4.2. CHP-YOLOX

5. Evaluation

5.1. Evaluation Metrics
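The result tables report AP@0.5, AP@0.75 and mAP. As a reference point, the sketch below computes single-class box AP at a given IoU threshold and a COCO-style mAP averaged over IoU thresholds 0.50, 0.55, ..., 0.95. It assumes greedy matching of detections (highest score first) to the best unmatched ground truth and all-point interpolation of the precision/recall curve; COCO's official evaluator uses 101-point interpolation, so numbers can differ slightly. How posture labels enter the matching in this benchmark is not specified here, and the function names are hypothetical.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr):
    """Single-class AP at one IoU threshold.

    dets: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp = []
    for img, _score, box in sorted(dets, key=lambda d: -d[1]):
        # Match to the best-overlapping unmatched ground truth with IoU >= thr.
        best_iou, best_j = thr, -1
        for j, gt in enumerate(gts.get(img, [])):
            o = iou(box, gt)
            if not matched[img][j] and o >= best_iou:
                best_iou, best_j = o, j
        if best_j >= 0:
            matched[img][best_j] = True
        tp.append(1.0 if best_j >= 0 else 0.0)
    tp = np.asarray(tp, dtype=float)
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / n_gt
    precision = cum_tp / np.maximum(cum_tp + cum_fp, np.finfo(float).eps)
    # Make precision non-increasing in recall, then integrate (all-point interpolation).
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    steps = np.flatnonzero(r[1:] != r[:-1])
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def coco_style_map(dets, gts):
    """Mean AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = np.linspace(0.5, 0.95, 10)
    return float(np.mean([average_precision(dets, gts, t) for t in thresholds]))


gts = {"img0": [(0.0, 0.0, 10.0, 10.0)]}
dets = [("img0", 0.9, (0.0, 0.0, 10.0, 7.8))]  # IoU = 0.78 with the ground truth
print(average_precision(dets, gts, 0.5), average_precision(dets, gts, 0.75))  # 1.0 1.0
print(coco_style_map(dets, gts))  # 0.6
```

In the toy example the single detection passes the six thresholds up to 0.75 and fails the four from 0.80 upward, so mAP is 6/10. This is why mAP is always at most AP@0.5 and typically well below it, as in the tables.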

5.2. Evaluation Results

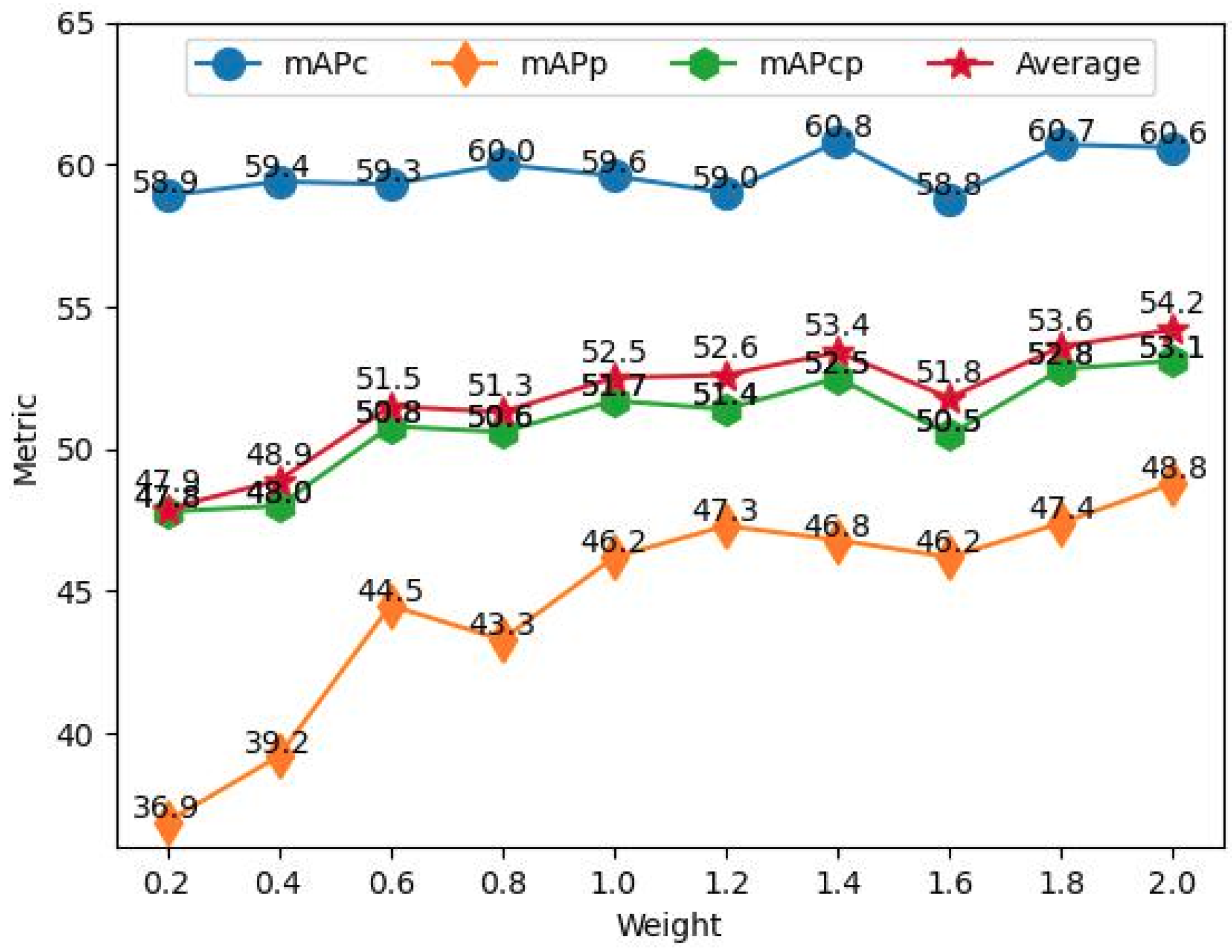

5.3. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | {AP@0.5, AP@0.75, mAP} | Model | {AP@0.5, AP@0.75, mAP} |
|---|---|---|---|
| YOLOv3 | (0.766, 0.595, 0.520) | YOLOF | (0.868, 0.718, 0.622) |
| YOLOv5 | (0.825, 0.669, 0.573) | YOLOX | (0.874, 0.723, 0.616) |

| Model | {AP, AP, AP}@0.5 | {AP, AP, AP}@0.75 | {mAP, mAP, mAP} |
|---|---|---|---|
| CHP-YOLOF | (0.818, 0.652, 0.708) | (0.720, 0.579, 0.632) | (0.608, 0.488, 0.531) |
| CHP-YOLOX | (0.828, 0.696, 0.727) | (0.692, 0.579, 0.612) | (0.588, 0.490, 0.516) |

| Model (mAP) | Bending | Lying | Going | Running | Sitting | Squatting | Standing |
|---|---|---|---|---|---|---|---|
| CHP-YOLOF | 0.482 | 0.429 | 0.559 | 0.546 | 0.545 | 0.490 | 0.363 |
| CHP-YOLOX | 0.498 | 0.452 | 0.536 | 0.572 | 0.511 | 0.493 | 0.389 |
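The per-posture mAP table can be summarized by its unweighted mean and by the easiest and hardest posture for each detector. The short computation below does this from the values in the table. The unweighted mean is only a summary statistic chosen here; it is not necessarily how the aggregated mAP figures elsewhere in the paper were computed.

```python
from statistics import mean

# Per-posture mAP values copied from the table above.
POSTURES = ["Bending", "Lying", "Going", "Running", "Sitting", "Squatting", "Standing"]
per_posture = {
    "CHP-YOLOF": [0.482, 0.429, 0.559, 0.546, 0.545, 0.490, 0.363],
    "CHP-YOLOX": [0.498, 0.452, 0.536, 0.572, 0.511, 0.493, 0.389],
}

summary = {}
for model, scores in per_posture.items():
    easiest = POSTURES[scores.index(max(scores))]
    hardest = POSTURES[scores.index(min(scores))]
    # (unweighted mean over the seven postures, best posture, worst posture)
    summary[model] = (round(mean(scores), 3), easiest, hardest)
    print(model, summary[model])
```

Both detectors average just under 0.5 across postures (0.488 for CHP-YOLOF, 0.493 for CHP-YOLOX), and both score lowest on ‘standing’.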

| Method | EM | CM | CT | IE | {AP, AP, AP}@0.5 | {AP, AP, AP}@0.75 | {mAP, mAP, mAP} |
|---|---|---|---|---|---|---|---|
| CHP-YOLOF | × | × | × | × | (0.790, 0.586, 0.659) | (0.704, 0.528, 0.596) | (0.590, 0.441, 0.499) |
| CHP-YOLOF | √ | × | × | × | (0.809, 0.636, 0.694) | (0.717, 0.574, 0.626) | (0.604, 0.481, 0.525) |
| CHP-YOLOF | × | √ | × | × | (0.818, 0.652, 0.708) | (0.720, 0.579, 0.632) | (0.606, 0.488, 0.531) |
| CHP-YOLOX | × | × | × | × | (0.817, 0.637, 0.677) | (0.673, 0.530, 0.561) | (0.577, 0.449, 0.478) |
| CHP-YOLOX | × | × | √ | × | (0.817, 0.659, 0.698) | (0.652, 0.553, 0.567) | (0.572, 0.459, 0.490) |
| CHP-YOLOX | × | × | × | √ | (0.828, 0.696, 0.727) | (0.692, 0.579, 0.612) | (0.588, 0.490, 0.516) |
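The ablation is easier to read as differences from the row of the same detector with all four components (EM, CM, CT, IE) disabled. The sketch below copies the table's triples and computes those differences; the dictionary layout and the helper name `delta_vs_baseline` are hypothetical, and the abbreviations are kept exactly as in the table.

```python
# Ablation rows: (AP@0.5 triple, AP@0.75 triple, mAP triple), copied from the table.
ablation = {
    ("CHP-YOLOF", "none"): ((0.790, 0.586, 0.659), (0.704, 0.528, 0.596), (0.590, 0.441, 0.499)),
    ("CHP-YOLOF", "EM"): ((0.809, 0.636, 0.694), (0.717, 0.574, 0.626), (0.604, 0.481, 0.525)),
    ("CHP-YOLOF", "CM"): ((0.818, 0.652, 0.708), (0.720, 0.579, 0.632), (0.606, 0.488, 0.531)),
    ("CHP-YOLOX", "none"): ((0.817, 0.637, 0.677), (0.673, 0.530, 0.561), (0.577, 0.449, 0.478)),
    ("CHP-YOLOX", "CT"): ((0.817, 0.659, 0.698), (0.652, 0.553, 0.567), (0.572, 0.459, 0.490)),
    ("CHP-YOLOX", "IE"): ((0.828, 0.696, 0.727), (0.692, 0.579, 0.612), (0.588, 0.490, 0.516)),
}


def delta_vs_baseline(model, module):
    """Element-wise change of every triple relative to the all-disabled row."""
    base = ablation[(model, "none")]
    row = ablation[(model, module)]
    return tuple(
        tuple(round(r - b, 3) for r, b in zip(row_t, base_t))
        for row_t, base_t in zip(row, base)
    )


for model, module in ablation:
    if module != "none":
        # Print only the mAP triple change for brevity.
        print(model, module, delta_vs_baseline(model, module)[2])
```

For example, CM raises the third mAP entry of CHP-YOLOF by 0.032 and IE raises that of CHP-YOLOX by 0.038, while CT slightly lowers the first mAP entry of CHP-YOLOX (by 0.005).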

| Model | Value | {AP, AP, AP}@0.5 | {AP, AP, AP}@0.75 | {mAP, mAP, mAP} |
|---|---|---|---|---|
| CHP-YOLOF | 0.2 | (0.790, 0.499, 0.627) | (0.703, 0.440, 0.576) | (0.589, 0.369, 0.478) |
| CHP-YOLOF | 0.4 | (0.790, 0.521, 0.623) | (0.703, 0.470, 0.571) | (0.594, 0.392, 0.480) |
| CHP-YOLOF | 0.6 | (0.790, 0.586, 0.673) | (0.704, 0.526, 0.604) | (0.593, 0.442, 0.508) |
| CHP-YOLOF | 0.8 | (0.800, 0.570, 0.665) | (0.713, 0.518, 0.605) | (0.600, 0.433, 0.506) |
| CHP-YOLOF | 1.0 | (0.800, 0.609, 0.682) | (0.703, 0.554, 0.615) | (0.596, 0.462, 0.517) |
| CHP-YOLOF | 1.2 | (0.790, 0.624, 0.676) | (0.704, 0.566, 0.615) | (0.590, 0.473, 0.514) |
| CHP-YOLOF | 1.4 | (0.819, 0.626, 0.699) | (0.720, 0.559, 0.624) | (0.608, 0.468, 0.525) |
| CHP-YOLOF | 1.6 | (0.799, 0.620, 0.678) | (0.700, 0.601, 0.603) | (0.588, 0.462, 0.505) |
| CHP-YOLOF | 1.8 | (0.818, 0.632, 0.701) | (0.720, 0.568, 0.633) | (0.607, 0.474, 0.528) |
| CHP-YOLOF | 2.0 | (0.818, 0.652, 0.708) | (0.720, 0.579, 0.632) | (0.606, 0.488, 0.531) |
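The last table sweeps one setting of CHP-YOLOF from 0.2 to 2.0; its name or symbol does not appear in the surviving header. The snippet below copies the mAP triples and reports, for each of the three entries, the setting that maximizes it. The variable names are illustrative.

```python
# Swept setting -> mAP triple, copied from the CHP-YOLOF sweep table.
sweep = {
    0.2: (0.589, 0.369, 0.478),
    0.4: (0.594, 0.392, 0.480),
    0.6: (0.593, 0.442, 0.508),
    0.8: (0.600, 0.433, 0.506),
    1.0: (0.596, 0.462, 0.517),
    1.2: (0.590, 0.473, 0.514),
    1.4: (0.608, 0.468, 0.525),
    1.6: (0.588, 0.462, 0.505),
    1.8: (0.607, 0.474, 0.528),
    2.0: (0.606, 0.488, 0.531),
}

# For each entry of the triple, pick the setting with the highest value.
best = [max(sweep, key=lambda v: sweep[v][i]) for i in range(3)]
print(best)  # [1.4, 2.0, 2.0]
```

The first mAP entry peaks at 1.4, while the other two peak at 2.0, the largest value tested, so the sweep does not show whether larger values would help further.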

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Wu, Y.; Chen, X.; Chen, H.; Kong, D.; Tang, H.; Li, S. Beyond Human Detection: A Benchmark for Detecting Common Human Posture. Sensors 2023, 23, 8061. https://doi.org/10.3390/s23198061
