Post Disaster Damage Assessment Using Ultra-High-Resolution Aerial Imagery with Semi-Supervised Transformers

Abstract

1. Introduction

2. Proposed Methods

2.1. Data Sources, Collection, and Preparation

2.1.1. UHRA Image Data

2.1.2. Satellite Image Data

2.2. Deep Learning Architecture

2.2.1. Supervised: Vision Transformer (ViT)

2.2.2. Semi-Supervised: Semi-ViT

3. Experiments

3.1. Semi-Supervised Learning with Unlabeled Data

3.2. Comparison of CNN and Transformer Model Architectures

3.3. Comparison of Satellite and UHRA Image Data Types

3.4. Classification Metrics

4. Results and Discussion

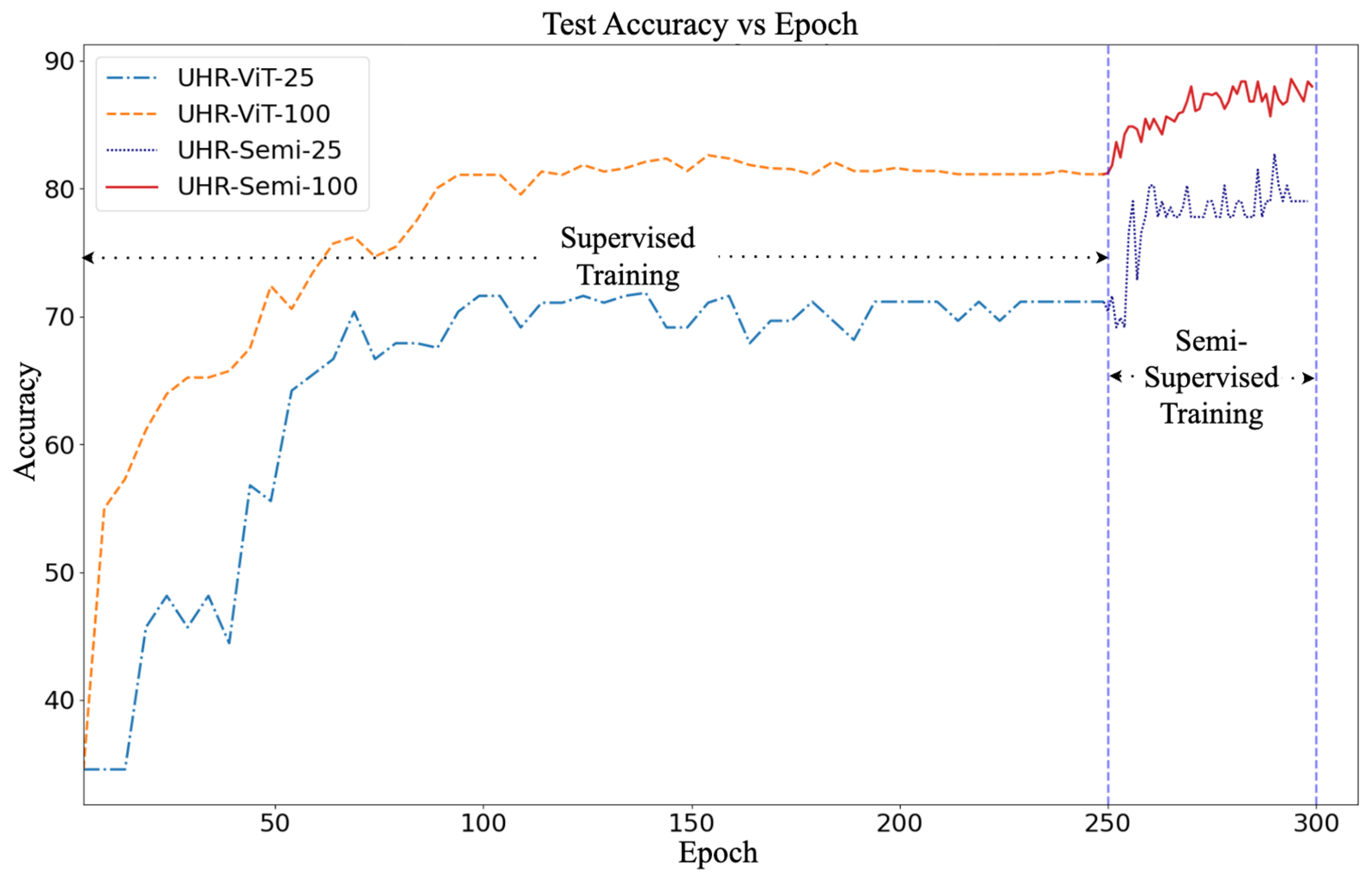

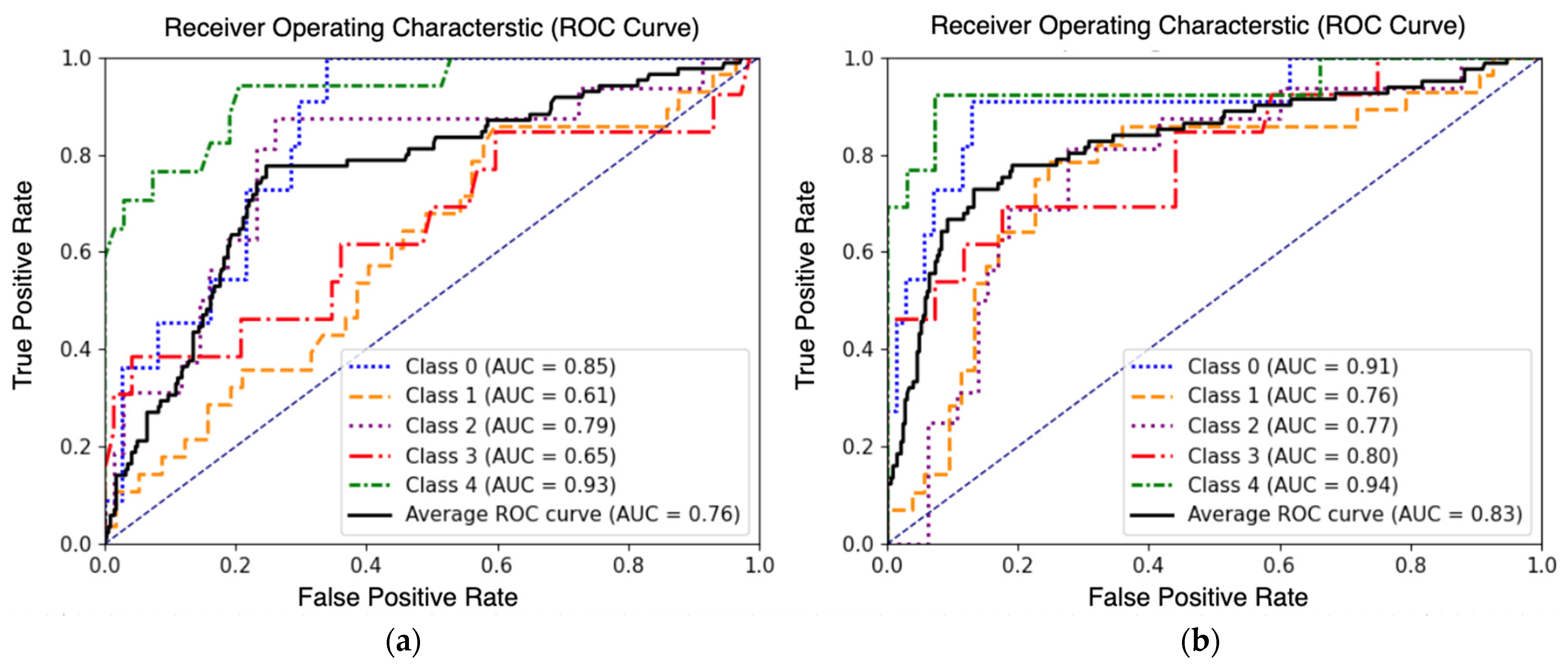

4.1. Semi-Supervised Learning with Unlabeled Data

4.2. Comparison of CNN and Transformer Model Architectures

4.3. Comparison of Satellite and UHRA Image Data Types

4.4. Limitations

- Above-Ground Structures Only: The methodology is tailored to above-ground structures and is not suitable for assessing subsurface structures.

- Cloud Cover Impact: UHRA images are captured from a flight altitude of approximately 2 km, so clouds below this altitude can obscure structures and significantly limit damage detection.

- Roof Damage Sensitivity: Sensitivity to roof damage is a valuable indicator for the PDA, but it may be less informative for disasters in which roof damage is not a good indicator of overall structural health.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Preliminary Damage Assessments | FEMA.gov. Available online: https://www.fema.gov/disaster/how-declared/preliminary-damage-assessments#report-guide (accessed on 29 June 2023).

2. Hurricane Costs. Available online: https://coast.noaa.gov/states/fast-facts/hurricane-costs.html (accessed on 29 June 2023).

3. Preliminary Damage Assessments | FEMA.gov. Available online: https://www.fema.gov/disaster/how-declared/preliminary-damage-assessments#resources (accessed on 5 July 2023).

4. FEMA. Preliminary Damage Assessment Guide. 2020. Available online: https://www.fema.gov/disaster/how-declared/preliminary-damage-assessments/guide (accessed on 29 September 2023).

5. Hurricane Ian Survivors Face Delays Getting FEMA Aid—The Washington Post. Available online: https://www.washingtonpost.com/nation/2022/10/04/hurricane-ian-fema-victims/ (accessed on 4 July 2023).

6. FEMA Delays Leave Many Hurricane Ian Victims Exasperated Nearly Five Months after the Disastrous Storm | CNN. Available online: https://www.cnn.com/2023/02/13/us/hurricane-ian-fema/index.html (accessed on 4 July 2023).

7. Taşkin, G.; Erten, E.; Alataş, E.O. A Review on Multi-Temporal Earthquake Damage Assessment Using Satellite Images. In Change Detection and Image Time Series Analysis 2: Supervised Methods; John Wiley & Sons: Hoboken, NJ, USA, 2021; Volume 22, pp. 155–221.

8. Canada Sends Troops to Help Clear Hurricane Fiona’s Devastation | Climate News | Al Jazeera. Available online: https://www.aljazeera.com/news/2022/9/25/canada-sends-troops-to-help-clear-hurricane-fionas-devastation (accessed on 4 July 2023).

9. Hong, Z.; Zhong, H.; Pan, H.; Liu, J.; Zhou, R.; Zhang, Y.; Han, Y.; Wang, J.; Yang, S.; Zhong, C. Classification of Building Damage Using a Novel Convolutional Neural Network Based on Post-Disaster Aerial Images. Sensors 2022, 22, 5920.

10. Berezina, P.; Liu, D. Hurricane Damage Assessment Using Coupled Convolutional Neural Networks: A Case Study of Hurricane Michael. Nat. Hazards Risk 2022, 13, 414–431.

11. Gerke, M.; Kerle, N. Automatic Structural Seismic Damage Assessment with Airborne Oblique Pictometry© Imagery. Photogramm. Eng. Remote Sens. 2011, 77, 885–898.

12. Yamazaki, F.; Matsuoka, M. Remote sensing technologies in post-disaster damage assessment. J. Earthq. Tsunami 2012, 01, 193–210.

13. Post-Disaster Damage Assessment of Bridge Systems—Rutgers CAIT. Available online: https://cait.rutgers.edu/research/post-disaster-damage-assessment-of-bridge-systems/ (accessed on 5 July 2023).

14. Spencer, B.F.; Hoskere, V.; Narazaki, Y. Advances in Computer Vision-Based Civil Infrastructure Inspection and Monitoring. Engineering 2019, 5, 199–222.

15. Hoskere, V.; Narazaki, Y.; Hoang, T.; Spencer, B., Jr. Vision-Based Structural Inspection Using Multiscale Deep Convolutional Neural Networks. arXiv 2018, arXiv:1805.01055.

16. Gao, Y.; Mosalam, K.M. Deep Transfer Learning for Image-Based Structural Damage Recognition. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 748–768.

17. Lu, C.H.; Ni, C.F.; Chang, C.P.; Yen, J.Y.; Chuang, R.Y. Coherence Difference Analysis of Sentinel-1 SAR Interferogram to Identify Earthquake-Induced Disasters in Urban Areas. Remote Sens. 2018, 10, 1318.

18. Matsuoka, M.; Yamazaki, F. Building Damage Mapping of the 2003 Bam, Iran, Earthquake Using Envisat/ASAR Intensity Imagery. Earthq. Spectra 2005, 21, 285–294.

19. Watanabe, M.; Thapa, R.B.; Ohsumi, T.; Fujiwara, H.; Yonezawa, C.; Tomii, N.; Suzuki, S. Detection of Damaged Urban Areas Using Interferometric SAR Coherence Change with PALSAR-2. Earth Planets Space 2016, 68, 131.

20. Matsuoka, M.; Yamazaki, F. Use of Satellite SAR Intensity Imagery for Detecting Building Areas Damaged Due to Earthquakes. Earthq. Spectra 2004, 20, 975–994.

21. Kim, M.; Park, S.E.; Lee, S.J. Detection of Damaged Buildings Using Temporal SAR Data with Different Observation Modes. Remote Sens. 2023, 15, 308.

22. Celik, T. Unsupervised Change Detection in Satellite Images Using Principal Component Analysis and k-Means Clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

23. Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Liu, Y.; Li, H. DASNet: Dual Attentive Fully Convolutional Siamese Networks for Change Detection in High-Resolution Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1194–1206.

24. Celik, T. Change Detection in Satellite Images Using a Genetic Algorithm Approach. IEEE Geosci. Remote Sens. Lett. 2010, 7, 386–390.

25. Ezequiel, C.A.F.; Cua, M.; Libatique, N.C.; Tangonan, G.L.; Alampay, R.; Labuguen, R.T.; Favila, C.M.; Honrado, J.L.E.; Canos, V.; Devaney, C.; et al. UAV Aerial Imaging Applications for Post-Disaster Assessment, Environmental Management and Infrastructure Development. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems, ICUAS 2014—Conference Proceedings, Orlando, FL, USA, 27–30 May 2014; pp. 274–283.

26. Chowdhury, T.; Murphy, R.; Rahnemoonfar, M. RescueNet: A High Resolution UAV Semantic Segmentation Benchmark Dataset for Natural Disaster Damage Assessment. arXiv 2022, arXiv:2202.12361.

27. Calantropio, A.; Chiabrando, F.; Codastefano, M.; Bourke, E. Deep Learning for Automatic Building Damage Assessment: Application in Post-Disaster Scenarios Using UAV Data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 5, 113–120.

28. Aicardi, I.; Nex, F.; Gerke, M.; Lingua, A.M. An Image-Based Approach for the Co-Registration of Multi-Temporal UAV Image Datasets. Remote Sens. 2016, 8, 779.

29. Mavroulis, S.; Andreadakis, E.; Spyrou, N.I.; Antoniou, V.; Skourtsos, E.; Papadimitriou, P.; Kassaras, I.; Kaviris, G.; Tselentis, G.A.; Voulgaris, N.; et al. UAV and GIS Based Rapid Earthquake-Induced Building Damage Assessment and Methodology for EMS-98 Isoseismal Map Drawing: The June 12, 2017 Mw 6.3 Lesvos (Northeastern Aegean, Greece) Earthquake. Int. J. Disaster Risk Reduct. 2019, 37, 101169.

30. Khajwal, A.B.; Cheng, C.S.; Noshadravan, A. Post-Disaster Damage Classification Based on Deep Multi-View Image Fusion. Comput.-Aided Civ. Infrastruct. Eng. 2023, 38, 528–544.

31. Hoskere, V.; Narazaki, Y.; Hoang, T.A.; Spencer, B.F. Towards Automated Post-Earthquake Inspections with Deep Learning-Based Condition-Aware Models. arXiv 2018, arXiv:1809.09195.

32. Narazaki, Y.; Hoskere, V.; Chowdhary, G.; Spencer, B.F. Vision-Based Navigation Planning for Autonomous Post-Earthquake Inspection of Reinforced Concrete Railway Viaducts Using Unmanned Aerial Vehicles. Autom. Constr. 2022, 137, 104214.

33. Bai, Y.; Hu, J.; Su, J.; Liu, X.; Liu, H.; He, X.; Meng, S.; Mas, E.; Koshimura, S. Pyramid Pooling Module-Based Semi-Siamese Network: A Benchmark Model for Assessing Building Damage from xBD Satellite Imagery Datasets. Remote Sens. 2020, 12, 4055.

34. Chen, T.Y. Interpretability in Convolutional Neural Networks for Building Damage Classification in Satellite Imagery. arXiv 2022, arXiv:2201.10523.

35. Abdi, G.; Jabari, S. A Multi-Feature Fusion Using Deep Transfer Learning for Earthquake Building Damage Detection. Can. J. Remote Sens. 2021, 47, 337–352.

36. Adams, B.; Ghosh, S.; Wabnitz, C.; Alder, J. Post-Tsunami Urban Damage Assessment in Thailand, Using Optical Satellite Imagery and the VIEWS™ Field Reconnaissance System. Geotech. Geol. Earthq. Eng. 2009, 7, 523–539.

37. Xu, J.Z.; Lu, W.; Li, Z.; Khaitan, P.; Zaytseva, V. Building Damage Detection in Satellite Imagery Using Convolutional Neural Networks. arXiv 2019, arXiv:1910.06444.

38. Matsuoka, M.; Nojima, N. Building Damage Estimation by Integration of Seismic Intensity Information and Satellite L-Band SAR Imagery. Remote Sens. 2010, 2, 2111–2126.

39. Liu, W.; Yamazaki, F. Extraction of Collapsed Buildings in the 2016 Kumamoto Earthquake Using Multi-Temporal PALSAR-2 Data. J. Disaster Res. 2017, 12, 241–250.

40. Grünthal, G.; Schwarz, J. European Macroseismic Scale 1998. In Cahiers du Centre Europeen de Geodynamique et du Seismologie; EMS-98; Le Bureau Central Sismologique Français: Strasbourg, France, 1998.

41. Yeum, C.M.; Dyke, S.J.; Ramirez, J. Visual Data Classification in Post-Event Building Reconnaissance. Eng. Struct. 2018, 155, 16–24.

42. Ghosh Mondal, T.; Jahanshahi, M.R.; Wu, R.T.; Wu, Z.Y. Deep Learning-Based Multi-Class Damage Detection for Autonomous Post-Disaster Reconnaissance. Struct. Control Health Monit. 2020, 27, e2507.

43. Jia, J.; Ye, W. Deep Learning for Earthquake Disaster Assessment: Objects, Data, Models, Stages, Challenges, and Opportunities. Remote Sens. 2023, 15, 4098.

44. Lombardo, F.T.; Wienhoff, Z.B.; Rhee, D.M.; Nevill, J.B.; Poole, C.A. An Approach for Assessing Misclassification of Tornado Characteristics Using Damage. J. Appl. Meteorol. Climatol. 2023, 62, 781–799.

45. Chen, J.; Tang, H.; Ge, J.; Pan, Y. Rapid Assessment of Building Damage Using Multi-Source Data: A Case Study of April 2015 Nepal Earthquake. Remote Sens. 2022, 14, 1358.

46. Yuan, X.; Tanksley, D.; Li, L.; Zhang, H.; Chen, G.; Wunsch, D. Faster Post-Earthquake Damage Assessment Based on 1D Convolutional Neural Networks. Appl. Sci. 2021, 11, 9844.

47. Feng, L.; Yi, X.; Zhu, D.; Xie, X.; Wang, Y. Damage Detection of Metro Tunnel Structure through Transmissibility Function and Cross Correlation Analysis Using Local Excitation and Measurement. Mech. Syst. Signal Process. 2015, 60, 59–74.

48. Wang, S.; Long, X.; Luo, H.; Zhu, H. Damage Identification for Underground Structure Based on Frequency Response Function. Sensors 2018, 18, 3033.

49. Schaumann, M.; Gamba, D.; Guinchard, M.; Scislo, L.; Wenninger, J. JACoW: Effect of Ground Motion Introduced by HL-LHC CE Work on LHC Beam Operation. In Proceedings of the 10th International Particle Accelerator Conference (IPAC2019), Melbourne, Australia, 19–24 May 2019; p. THPRB116.

50. Asokan, A.; Anitha, J. Change Detection Techniques for Remote Sensing Applications: A Survey. Earth Sci. Inform. 2019, 12, 143–160.

51. Lee, J.; Xu, J.Z.; Sohn, K.; Lu, W.; Berthelot, D.; Gur, I.; Khaitan, P.; Huang, K.-W.; Koupparis, K.; Kowatsch, B. Assessing Post-Disaster Damage from Satellite Imagery Using Semi-Supervised Learning Techniques. arXiv 2020, arXiv:2011.14004.

52. Doshi, J.; Basu, S.; Pang, G. From satellite imagery to disaster insights. arXiv 2018, arXiv:1812.07033.

53. Ishraq, A.; Lima, A.A.; Kabir, M.M.; Rahman, M.S.; Mridha, M.F. Assessment of Building Damage on Post-Hurricane Satellite Imagery Using Improved CNN. In Proceedings of the 2022 International Conference on Decision Aid Sciences and Applications, Chiangrai, Thailand, 23–25 March 2022; pp. 665–669.

54. Loos, S.; Barns, K.; Bhattacharjee, G.; Soden, R.; Herfort, B.; Eckle, M.; Giovando, C.; Girardot, B.; Saito, K.; Deierlein, G.; et al. Crowd-Sourced Remote Assessments of Regional-Scale Post-Disaster Damage. In Proceedings of the 11th US National Conference on Earthquake Engineering, Los Angeles, CA, USA, 25–29 June 2018.

55. Xia, J.; Yokoya, N.; Adriano, B. Building Damage Mapping with Self-Positive Unlabeled Learning. arXiv 2021, arXiv:2111.02586.

56. Varghese, S.; Hoskere, V. Unpaired Image-to-Image Translation of Structural Damage. Adv. Eng. Inform. 2023, 56, 101940.

57. Varghese, S.; Wang, R.; Hoskere, V. Image to Image Translation of Structural Damage Using Generative Adversarial Networks. In Structural Health Monitoring 2021: Enabling Next-Generation SHM for Cyber-Physical Systems—Proceedings of the 13th International Workshop on Structural Health Monitoring, IWSHM 2021, Stanford, CA, USA, 15–17 March 2022; DEStech Publications: Lancaster, PA, USA, 2021; pp. 610–618.

58. ImageNet Benchmark (Image Classification). Papers with Code. Available online: https://paperswithcode.com/sota/image-classification-on-imagenet (accessed on 11 July 2023).

59. Arkin, E.; Yadikar, N.; Muhtar, Y.; Ubul, K. A Survey of Object Detection Based on CNN and Transformer. In Proceedings of the 2021 IEEE 2nd International Conference on Pattern Recognition and Machine Learning, PRML 2021, Chengdu, China, 16–18 July 2021; pp. 99–108.

60. Pinto, F.; Torr, P.H.S.; Dokania, P.K. An Impartial Take to the CNN vs Transformer Robustness Contest. Comput. Sci. 2022, 13673, 466–480.

61. Announcing Post Disaster Aerial Imagery Free to Government Agencies • Vexcel Imaging. Available online: https://www.vexcel-imaging.com/announcing-post-disaster-aerial-imagery-free-to-government-agencies/ (accessed on 29 July 2023).

62. MV-HarveyNET: A Labelled Image Dataset from Hurricane Harvey for Damage Assessment of Residential Houses Based on Multi-View CNN | DesignSafe-CI. Available online: https://www.designsafe-ci.org/data/browser/public/designsafe.storage.published/PRJ-3692 (accessed on 23 July 2023).

63. Google Earth. Available online: https://earth.google.com/web/@30.73078914,-104.03088407,-856.33961182a,13314486.82114363d,35y,359.99966115h,0t,0r/data=Ci4SLBIgOGQ2YmFjYjU2ZDIzMTFlOThiNTM2YjMzNGRiYmRhYTAiCGxheWVyc18w (accessed on 29 July 2023).

64. Vexcel Imaging—Home of the UltraCam. Available online: https://www.vexcel-imaging.com/ (accessed on 23 July 2023).

65. Kijewski-Correa, T.; Jie, G.; Womble, A.; Kennedy, A.; Cai, S.C.S.; Cleary, J.; Dao, T.; Leite, F.; Liang, D.; Peterman, K.; et al. Hurricane Harvey (Texas) Supplement—Collaborative Research: Geotechnical Extreme Events Reconnaissance (GEER) Association: Turning Disaster into Knowledge. Forensic Eng. 2018, 1017–1027.

66. DesignSafe | DesignSafe-CI. Available online: https://www.designsafe-ci.org/ (accessed on 23 July 2023).

67. Hazus User & Technical Manuals | FEMA.gov. Available online: https://www.fema.gov/flood-maps/tools-resources/flood-map-products/hazus/user-technical-manuals (accessed on 4 July 2023).

68. Kijewski-Correa, T. Field Assessment Structural Team (FAST) Handbook; Frontiers Media SA: Lausanne, Switzerland, 2021.

69. Video: Why Vexcel Aerial Imagery Is Better | Vexcel Data Program. Available online: https://vexceldata.com/videos/video-vexcel-imagery-better/ (accessed on 27 July 2023).

70. Google Maps Platform Documentation | Geocoding API | Google for Developers. Available online: https://developers.google.com/maps/documentation/geocoding (accessed on 23 July 2023).

71. Gupta, R.; Hosfelt, R.; Sajeev, S.; Patel, N.; Goodman, B.; Doshi, J.; Heim, E.; Choset, H.; Gaston, M. xBD: A Dataset for Assessing Building Damage from Satellite Imagery. arXiv 2019, arXiv:1911.09296.

72. Cao, Q.D.; Choe, Y. Building Damage Annotation on Post-Hurricane Satellite Imagery Based on Convolutional Neural Networks. Nat. Hazards 2020, 103, 3357–3376.

73. Kaur, N.; Lee, C.C.; Mostafavi, A.; Mahdavi-Amiri, A. Large-scale Building Damage Assessment Using a Novel Hierarchical Transformer Architecture on Satellite Images. Comput.-Aided Civ. Infrastruct. Eng. 2022, 38, 2072–2091.

74. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

75. Cai, Z.; Ravichandran, A.; Favaro, P.; Wang, M.; Modolo, D.; Bhotika, R.; Tu, Z.; Soatto, S. Semi-Supervised Vision Transformers at Scale. Adv. Neural Inf. Process. Syst. 2022, 35, 25697–25710.

76. ImageNet. Available online: https://www.image-net.org/ (accessed on 23 July 2023).

77. Bouchard, I.; Rancourt, M.È.; Aloise, D.; Kalaitzis, F. On Transfer Learning for Building Damage Assessment from Satellite Imagery in Emergency Contexts. Remote Sens. 2022, 14, 2532.

78. Sohn, K.; Berthelot, D.; Li, C.-L.; Zhang, Z.; Carlini, N.; Cubuk, E.D.; Kurakin, A.; Zhang, H.; Raffel, C. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

79. Cui, J.; Zhong, Z.; Liu, S.; Yu, B.; Jia, J. Parametric Contrastive Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

80. Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random Erasing Data Augmentation. Proc. AAAI Conf. Artif. Intell. 2020, 34, 13001–13008.

81. Cheng, C.-S.; Khajwal, A.B.; Behzadan, A.H.; Noshadravan, A. A Probabilistic Crowd–AI Framework for Reducing Uncertainty in Postdisaster Building Damage Assessment. J. Eng. Mech. 2023, 149, 04023059.

82. Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Comput. Surv. 2021, 54, 1–41.

83. Maurício, J.; Domingues, I.; Bernardino, J. Comparing Vision Transformers and Convolutional Neural Networks for Image Classification: A Literature Review. Appl. Sci. 2023, 13, 5521.

84. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

85. Muhammad, M.B.; Yeasin, M. Eigen-CAM: Class Activation Map Using Principal Components. In Proceedings of the International Joint Conference on Neural Networks, Glasgow, UK, 19–24 July 2020.

86. How Does It Work with Vision Transformers—Advanced AI Explainability with Pytorch-Gradcam. Available online: https://jacobgil.github.io/pytorch-gradcam-book/vision_transformers.html?highlight=transformer (accessed on 2 August 2023).

| Proposed Scale | Individual Assessment (IA) | HAZUS | NHERI DesignSafe [68] | Description *: Roof Cover Damage | Description *: Sliding Damage | Description *: Door and Windows Failure | Description *: Roof Sheathing Failures |
|---|---|---|---|---|---|---|---|
| 0 | NA | No Damage | 0 | ≤10% | ≤1 Panel | None | None |
| 1 | Affected | Minor | 1 | >10% to ≤25% | >1% to ≤25% | 1 or 2 | None |
| 2 | Minor | Moderate | 2 | >25% | >25% | >2% to ≤50% | >0% to ≤25% |
| 3 | Major | Severe | 3 | >25% | >25% | >50% | >25% with minor connection failure |
| 4 | Destroyed | Destroyed | 4 | >25% | >25% | >50% | >25% with major connection failure |
| NA | Inaccessible | NA | NA | Damage to residence cannot be visually verified | | | |

| Hyperparameter | ViT | Semi-ViT |
|---|---|---|
| Optimizer | AdamW | AdamW |
| Base Learning Rate | 0.001 | 0.0025 |
| Weight Decay | 0.05 | 0.05 |
| Mixup | 0.8 | 0.8 |
| Cutmix | 1.0 | 1.0 |
| Epochs | 250 | 50 |
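
The Mixup entry in the table above can be illustrated with a minimal sketch. It assumes that the value 0.8 is the Beta-distribution parameter alpha, as is common in ViT training recipes; the source does not state this. The function name `mixup` and the toy two-element vectors are hypothetical and stand in for image tensors and one-hot damage labels.

```python
import random


def mixup(x_a, y_a, x_b, y_b, alpha=0.8, rng=random):
    """Blend two samples and their one-hot labels.

    Assumption: the mixing weight lam is drawn from Beta(alpha, alpha),
    with alpha taken from the "Mixup" row of the hyperparameter table.
    """
    lam = rng.betavariate(alpha, alpha)
    # Convex combination of the inputs (flattened pixel values here).
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    # The same combination applied to the one-hot labels gives a soft label.
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y, lam


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    x, y, lam = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], rng=rng)
    print(f"lam={lam:.3f}, mixed label={y}")
```

Because the labels are mixed with the same weight as the inputs, the soft label still sums to one, so it can be used directly with a cross-entropy loss over soft targets.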

| Experiment Name | Data Type | Model Architecture | Labeled (%) | Unlabeled (%) | Deep Learning Method |
|---|---|---|---|---|---|
| Sat-CNN-100 | Satellite | CNN | 100 | 0 | Supervised |
| Sat-ViT-100 | Satellite | Transformer (ViT) | 100 | 0 | Supervised |
| UHR-ViT-100 | UHRA | Transformer (ViT) | 100 | 0 | Supervised |
| UHR-ViT-25 | UHRA | Transformer (ViT) | 25 | 0 | Supervised |
| UHR-Semi-100 | UHRA | Transformer (Semi-ViT) | 100 | 100 | Semi-Supervised |
| UHR-Semi-25 | UHRA | Transformer (Semi-ViT) | 25 | 100 | Semi-Supervised |

| Model Name | Trained on | Tested on |
|---|---|---|
| ViT-UHR-UHR | UHRA (213) | UHRA (54) |
| ViT-UHR-Sat | UHRA (213) | Satellite (54) |
| ViT-Sat-Sat | Satellite (213) | Satellite (54) |
| ViT-Sat-UHR | Satellite (213) | UHRA (54) |

| Metric | Formula |
|---|---|
| Accuracy | (True Positives + True Negatives)/Total |
| Precision | True Positives/(True Positives + False Positives) |
| Recall | True Positives/(True Positives + False Negatives) |
| F1 Score | 2 × (Precision × Recall)/(Precision + Recall) |
| Average AUC-ROC | Average of Area Under the ROC Curve for all Classes |
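
The formulas in the table above are written for a binary setting. For a multi-class damage scale, one common convention is to compute precision, recall, and F1 per class (one-vs-rest) and take the unweighted mean. The sketch below assumes that macro-averaging convention, which the source does not confirm. The function name `macro_metrics` and the toy labels are illustrative, and the AUC-ROC row is not covered.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1.

    Assumption: per-class metrics are averaged without class weighting.
    """
    classes = sorted(set(y_true) | set(y_pred))
    if not classes:
        raise ValueError("y_true and y_pred must not be empty")

    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1   # correct prediction for class t
        else:
            fp[p] += 1   # predicted class p, but it was wrong
            fn[t] += 1   # true class t was missed

    def safe_div(num, den):
        return num / den if den else 0.0

    precisions, recalls, f1s = [], [], []
    for c in classes:
        prec = safe_div(tp[c], tp[c] + fp[c])
        rec = safe_div(tp[c], tp[c] + fn[c])
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(safe_div(2 * prec * rec, prec + rec))

    n = len(classes)
    return {
        # In the multi-class case, accuracy is correct predictions over total.
        "accuracy": safe_div(sum(tp.values()), len(y_true)),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    print(macro_metrics([0, 0, 1, 1], [0, 1, 1, 1]))
```

With macro averaging, every damage class contributes equally regardless of how many samples it has, which matters when severe-damage classes are rare.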

| Experiment Name | Accuracy (%) | Average F1 (%) | Precision (%) | Recall (%) | Average AUC-ROC (%) |
|---|---|---|---|---|---|
| UHR-Semi-100 | 88 | 88 | 89 | 88 | 96 |
| UHR-ViT-100 | 81 | 77 | 79 | 77 | 94 |
| UHR-Semi-25 | 81 | 83 | 84 | 82 | 91 |
| Sat-ViT-100 | 73 | 72 | 72 | 73 | 88 |
| UHR-ViT-25 | 71 | 68 | 70 | 68 | 91 |
| Sat-CNN-100 | 55 | 54 | 55 | 55 | 78 |
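
The comparisons implied by the table above reduce to differences in accuracy between experiments that differ in a single factor. The short sketch below tabulates them from the reported values. The dictionary, the choice of pairs, and the helper name `accuracy_gain` are illustrative and not part of the original analysis.

```python
# Accuracy (%) copied from the results table.
accuracy = {
    "UHR-Semi-100": 88,
    "UHR-ViT-100": 81,
    "UHR-Semi-25": 81,
    "Sat-ViT-100": 73,
    "UHR-ViT-25": 71,
    "Sat-CNN-100": 55,
}

# Pairs that differ in one factor only.
comparisons = [
    ("UHR-Semi-100", "UHR-ViT-100"),  # semi-supervised vs supervised, 100% labels
    ("UHR-Semi-25", "UHR-ViT-25"),    # semi-supervised vs supervised, 25% labels
    ("Sat-ViT-100", "Sat-CNN-100"),   # transformer vs CNN on satellite imagery
]


def accuracy_gain(better, baseline):
    """Difference in accuracy, in percentage points."""
    return accuracy[better] - accuracy[baseline]


if __name__ == "__main__":
    for better, baseline in comparisons:
        gain = accuracy_gain(better, baseline)
        print(f"{better} vs {baseline}: {gain:+d} points")
```

Read this way, the table shows that UHR-Semi-25 matches the accuracy of the fully supervised UHR-ViT-100 while using a quarter of the labels.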

| Model Name | Accuracy (%) | F1 (%) | Average AUC-ROC (%) |
|---|---|---|---|
| ViT-UHR-Sat | 58 | 62 | 83 |
| ViT-Sat-UHR | 41 | 39 | 76 |
| ViT-UHR-UHR | 71 | 68 | 91 |
| ViT-Sat-Sat | 67 | 66 | 91 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Singh, D.K.; Hoskere, V. Post Disaster Damage Assessment Using Ultra-High-Resolution Aerial Imagery with Semi-Supervised Transformers. Sensors 2023, 23, 8235. https://doi.org/10.3390/s23198235
