Deep Learning with Attention Mechanisms for Road Weather Detection

Abstract

1. Introduction

- A multi-label transport-related dataset consisting of seven weather conditions: sunny, cloudy, foggy, rainy, wet, clear, and snowy to be used for road weather detection research.

- Assessment of different state-of-the-art computer vision models in addressing multi-label road weather detection, using our dataset as a benchmark.

- Evaluation of the effectiveness of the focal loss function in increasing model accuracy for unbalanced classes and hard instances.

- Implementation of vision transformer models to assess the efficiency of their attention mechanism (assigning dynamic weights to pixels) in addressing road weather idiosyncrasies.

2. Background

2.1. Related Work

2.2. Loss Functions Explored in This Study to Deal with Data Predicaments

- Class Weighted Loss Function: The traditional cross-entropy loss does not take into account the imbalanced nature of the dataset. The inherent assumption that the data are balanced often leads to fallacious results. Since learning becomes biased towards the majority classes, the model fails to learn meaningful features for identifying the minority classes. To overcome these issues, the loss function can be modified by assigning weights so that more attention is given to minority classes during training. Weights are assigned to each class such that the smaller the number of instances in a class, the greater the weight assigned to that class. For each class, weight assigned to the class = total images in dataset / total images in that class. The weighted cross-entropy loss function is given by $$L = -\sum_{i=1}^{N}\sum_{c=1}^{C} w_c \, y_c \log(p_c),$$ where $L$ is the total loss, $c$ represents the class, $i$ represents the training instance, and $C$ and $N$ represent the total number of classes and instances, respectively. Here $y_c$ is the ground truth label for class $c$, $p_c$ is the predicted probability that the given image belongs to class $c$, and $w_c$ is the weight of class $c$.

- Focal Loss Function: The focal loss function is a dynamically scaled cross-entropy loss function. Focal loss forces the model to focus on hard, misclassified examples during training [17]. For any given instance, the scaling factor of the focal loss function decays to zero as the loss decreases, allowing the model to rapidly focus on hard examples instead of assigning similar weights to all instances. The focal loss function is given by $$FL(p_t) = -\alpha \, (1 - p_t)^{\gamma} \log(p_t),$$ where $p_t$ is the predicted probability of the ground truth label, and $\alpha$ and $\gamma$ are hyperparameters such that setting $\gamma$ greater than zero reduces the relative loss for examples that are easily classified. The hyperparameter $\gamma \geq 0$ controls the loss for easy and hard instances, while $\alpha$ lies in $[0, 1]$ and addresses the class imbalance problem.
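
The two loss functions described above can be illustrated with a short, framework-free Python sketch. It assumes the weighted loss sums $w_c \, y_c \log(p_c)$ over all instances and classes, and that the focal loss has the commonly used form $-\alpha (1 - p_t)^{\gamma} \log(p_t)$. The function names, the `EPS` guard, and the hyperparameter values in the usage lines are illustrative assumptions, not the implementation used in this study.

```python
import math

EPS = 1e-12  # assumption: small guard against log(0), not part of the text


def weighted_cross_entropy(y_true, p_pred, weights):
    """Return -sum_i sum_c w_c * y_c * log(p_c).

    y_true and p_pred are lists of per-instance rows with one entry per
    class; weights holds one weight per class.
    """
    total = 0.0
    for y_row, p_row in zip(y_true, p_pred):
        for y, p, w in zip(y_row, p_row, weights):
            total -= w * y * math.log(max(p, EPS))
    return total


def focal_loss(p_t, gamma, alpha):
    """Return -alpha * (1 - p_t)**gamma * log(p_t) for one prediction.

    p_t is the probability the model assigns to the ground truth label.
    """
    p_t = min(max(p_t, EPS), 1.0)
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    # Hypothetical values chosen only to show the effect of gamma.
    easy = focal_loss(0.9, gamma=2.0, alpha=0.25)  # confident, correct
    hard = focal_loss(0.1, gamma=2.0, alpha=0.25)  # confident, wrong
    print(f"easy example loss: {easy:.6f}")
    print(f"hard example loss: {hard:.6f}")
```

With `gamma=0` and `alpha=1` the focal loss in this sketch reduces to the ordinary cross-entropy term `-log(p_t)`, which is a convenient sanity check. Increasing `gamma` shrinks the loss of the easy example much faster than that of the hard one.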

2.3. Deep Learning Architectures Investigated

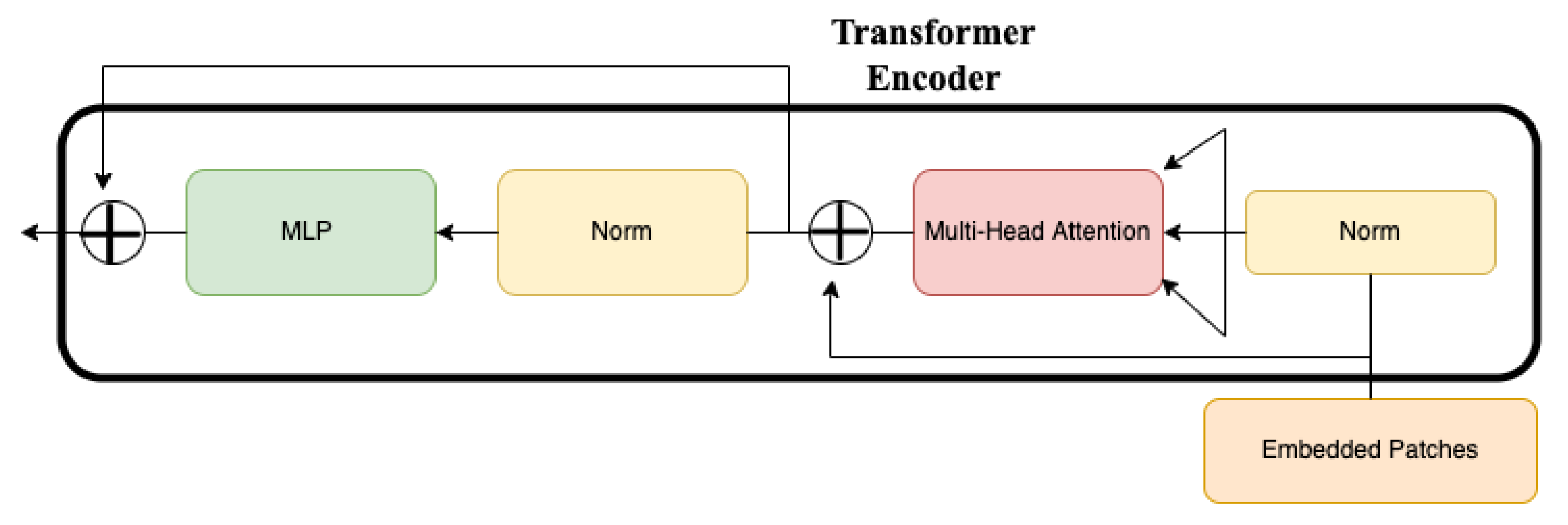

3. Vision Transformers

4. Experiments

4.1. Proposed Dataset Description

4.2. Vision Transformers Implemented

4.3. Experimental Design

- Stage 1: Pre-train the state-of-the-art CNN architectures on the ImageNet dataset.

- Stage 2: Re-train the architectures on our proposed road weather dataset using the cross-entropy loss function.

- Stage 3: Optimise the architectures using the class-weighted loss function.

- Stage 4: Optimise the architectures using the focal loss function.

- Stage 5: Pre-train the state-of-the-art vision transformer models on the ImageNet dataset.

- Stage 6: Re-train the transformer models on our proposed multi-label road weather dataset.

4.4. Evaluation Protocol

5. Results and Discussion

5.1. State-of-the-Art CNN Models

5.2. Vision Transformers

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mase, J.M.; Pekaslan, D.; Agrawal, U.; Mesgarpour, M.; Chapman, P.; Torres, M.T.; Figueredo, G.P. Contextual Intelligent Decisions: Expert Moderation of Machine Outputs for Fair Assessment of Commercial Driving. arXiv 2022, arXiv:2202.09816.

2. Perrels, A.; Votsis, A.; Nurmi, V.; Pilli-Sihvola, K. Weather conditions, weather information and car crashes. ISPRS Int. J. Geo Inf. 2015, 4, 2681–2703.

3. Kang, L.W.; Chou, K.L.; Fu, R.H. Deep Learning-based weather image recognition. In Proceedings of the 2018 International Symposium on Computer, Consumer and Control (IS3C), Taichung, Taiwan, 6–8 December 2018; pp. 384–387.

4. Zhang, Z.; Ma, H. Multi-class weather classification on single images. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4396–4400.

5. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

6. An, J.; Chen, Y.; Shin, H. Weather classification using convolutional neural networks. In Proceedings of the 2018 International SoC Design Conference (ISOCC), Daegu, Korea, 12–15 November 2018; pp. 245–246.

7. Khan, M.N.; Ahmed, M.M. Weather and surface condition detection based on road-side webcams: Application of pre-trained convolutional neural network. Int. J. Transp. Sci. Technol. 2021, 11, 468–483.

8. Guerra, J.C.V.; Khanam, Z.; Ehsan, S.; Stolkin, R.; McDonald-Maier, K. Weather Classification: A new multi-class dataset, data augmentation approach and comprehensive evaluations of Convolutional Neural Networks. In Proceedings of the 2018 NASA/ESA Conference on Adaptive Hardware and Systems (AHS), Edinburgh, UK, 6–9 August 2018; pp. 305–310.

9. Jabeen, S.; Malkana, A.; Farooq, A.; Khan, U.G. Weather Classification on Roads for Drivers Assistance using Deep Transferred Features. In Proceedings of the 2019 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 16–18 December 2019; pp. 221–2215.

10. Zhao, B.; Li, X.; Lu, X.; Wang, Z. A CNN-RNN architecture for multi-label weather recognition. Neurocomputing 2018, 322, 47–57.

11. Xia, J.; Xuan, D.; Tan, L.; Xing, L. ResNet15: Weather Recognition on Traffic Road with Deep Convolutional Neural Network. Adv. Meteorol. 2020, 2020, 6972826.

12. Toğaçar, M.; Ergen, B.; Cömert, Z. Detection of weather images by using spiking neural networks of deep learning models. Neural Comput. Appl. 2021, 33, 6147–6159.

13. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022.

14. Chen, M.; Radford, A.; Child, R.; Wu, J.; Jun, H.; Luan, D.; Sutskever, I. Generative pretraining from pixels. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 1691–1703.

15. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

16. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers); Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

17. Rengasamy, D.; Jafari, M.; Rothwell, B.; Chen, X.; Figueredo, G.P. Deep learning with dynamically weighted loss function for sensor-based prognostics and health management. Sensors 2020, 20, 723.

18. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

19. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

20. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

21. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

22. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

24. A Sample of HGV Dashcam Clips. 2021. Available online: https://youtu.be/-PfIjkiDozo (accessed on 1 October 2021).

25. Zooniverse Website. 2022. Available online: https://www.zooniverse.org/ (accessed on 28 March 2022).

26. Road Weather Dataset. 2022. Available online: https://drive.google.com/file/d/1e7NRaIVX6GNqHGC_aAqaib_DU_0eMVRz (accessed on 9 January 2023).

27. Deng, J. A large-scale hierarchical image database. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

28. Mafeni Mase, J.; Chapman, P.; Figueredo, G.P.; Torres Torres, M. Benchmarking deep learning models for driver distraction detection. In Proceedings of the International Conference on Machine Learning, Optimization, and Data Science, Siena, Italy, 19–23 July 2020; pp. 103–117.

29. Pytorch. Models and Pre-Trained Weights. 2022. Available online: https://pytorch.org/vision/stable/models.html (accessed on 15 March 2022).

| Model | Author | Year | Number of Layers | Input Image Size |
|---|---|---|---|---|
| VGG19 | Oxford University Researchers [18] | 2014 | 19 layers | 224 × 224 |
| GoogleNet | Researchers at Google [19] | 2015 | 22 layers | 224 × 224 |
| ResNet-152 | He et al. [20] | 2015 | 152 layers | 224 × 224 |
| Inception-v3 | Szegedy et al. [21] | 2016 | 48 layers | 299 × 299 |
| EfficientNet-B7 | Tan et al. [22] | 2019 | 813 layers | 600 × 600 |

| Class | Number of Instances |
|---|---|
| Clear | 1299 |
| Sunny | 1184 |
| Cloudy | 626 |
| Wet | 369 |
| Snowy | 147 |
| Rainy | 84 |
| Foggy | 78 |
| Icy | 3 |
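
To make the class imbalance in this table concrete, the sketch below applies the weighting rule from Section 2.2 (weight = total images in dataset / total images in that class) to the listed counts. Because the total number of images is a common factor in every weight, the sketch also reports weights relative to the most frequent class, which depend only on the counts shown here. The function names are hypothetical and the total passed to `class_weights` must be supplied by the reader; this is an illustration, not the authors' code.

```python
# Class counts copied from the class-distribution table above.
CLASS_COUNTS = {
    "Clear": 1299,
    "Sunny": 1184,
    "Cloudy": 626,
    "Wet": 369,
    "Snowy": 147,
    "Rainy": 84,
    "Foggy": 78,
    "Icy": 3,
}


def class_weights(counts, total_images):
    """Weight per class = total images in dataset / images in that class."""
    if total_images <= 0:
        raise ValueError("total_images must be positive")
    return {name: total_images / n for name, n in counts.items()}


def relative_weights(counts):
    """Weights rescaled so that the most frequent class has weight 1.

    The dataset total cancels out, so only the per-class counts are needed.
    """
    largest = max(counts.values())
    return {name: largest / n for name, n in counts.items()}


if __name__ == "__main__":
    for name, weight in relative_weights(CLASS_COUNTS).items():
        print(f"{name:<7} {weight:8.2f}")
```

Under this rule the rarest class receives a weight several hundred times larger than the most frequent one, which illustrates how strongly the weighted loss emphasises minority classes.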

| Model | Avg Training Accuracy | Training SD | Avg Validation Accuracy | Validation SD | Avg F-Score | F-Score SD |
|---|---|---|---|---|---|---|
| VGG19 | 84.19 | 0.005 | 85.14 | 0.002 | 58.50 | 0.008 |
| GoogleNet | 84.42 | 0.009 | 85.08 | 0.006 | 50.52 | 0.012 |
| ResNet-152 | 87.58 | 0.003 | 87.73 | 0.005 | 64.22 | 0.014 |
| Inception-v3 | 84.23 | 0.008 | 84.80 | 0.006 | 50.56 | 0.004 |
| EfficientNet-B7 | 85.11 | 0.003 | 86.03 | 0.003 | 56.09 | 0.007 |
| ViT-B/16 | 93.52 | 0.0118 | 91.92 | 0.0088 | 81.22 | 0.0182 |
| ViT-B/32 | 94.65 | 0.0262 | 91.45 | 0.0065 | 80.48 | 0.0115 |

| Model | Avg Training Accuracy | Training SD | Avg Validation Accuracy | Validation SD | Avg F-Score | F-Score SD |
|---|---|---|---|---|---|---|
| VGG19 | 84.48 | 0.002 | 85.35 | 0.005 | 64.21 | 0.015 |
| GoogleNet | 86.79 | 0.002 | 87.19 | 0.003 | 63.54 | 0.010 |
| ResNet-152 | 88.98 | 0.001 | 88.84 | 0.003 | 71.00 | 0.011 |
| Inception-v3 | 85.95 | 0.004 | 86.87 | 0.004 | 62.52 | 0.009 |
| EfficientNet-B7 | 86.82 | 0.002 | 87.24 | 0.005 | 63.38 | 0.007 |
| ViT-B/16 | 95.97 | 0.3579 | 90.95 | 0.0076 | 79.18 | 0.0211 |
| ViT-B/32 | 98.66 | 0.0178 | 90.48 | 0.0043 | 77.912 | 0.0073 |

| Model | Avg Training Accuracy | Training SD | Avg Validation Accuracy | Validation SD | Avg F-Score | F-Score SD |
|---|---|---|---|---|---|---|
| VGG19 | 83.90 | 0.003 | 84.85 | 0.005 | 66.28 | 0.012 |
| GoogleNet | 87.22 | 0.002 | 87.63 | 0.004 | 67.99 | 0.014 |
| ResNet-152 | 89.44 | 0.004 | 88.71 | 0.007 | 74.40 | 0.010 |
| Inception-v3 | 85.91 | 0.002 | 87.26 | 0.002 | 66.29 | 0.006 |
| EfficientNet-B7 | 87.48 | 0.001 | 87.72 | 0.005 | 66.15 | 0.008 |
| ViT-B/16 | 93.95 | 0.02942 | 91.26 | 0.0059 | 80.23 | 0.0077 |
| ViT-B/32 | 94.80 | 0.3387 | 91.23 | 0.0050 | 80.25 | 0.0125 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Samo, M.; Mafeni Mase, J.M.; Figueredo, G. Deep Learning with Attention Mechanisms for Road Weather Detection. Sensors 2023, 23, 798. https://doi.org/10.3390/s23020798
