MIC: Microwave Imaging Curtain for Dynamic and Automatic Detection of Weapons and Explosive Belts

Abstract

1. Introduction

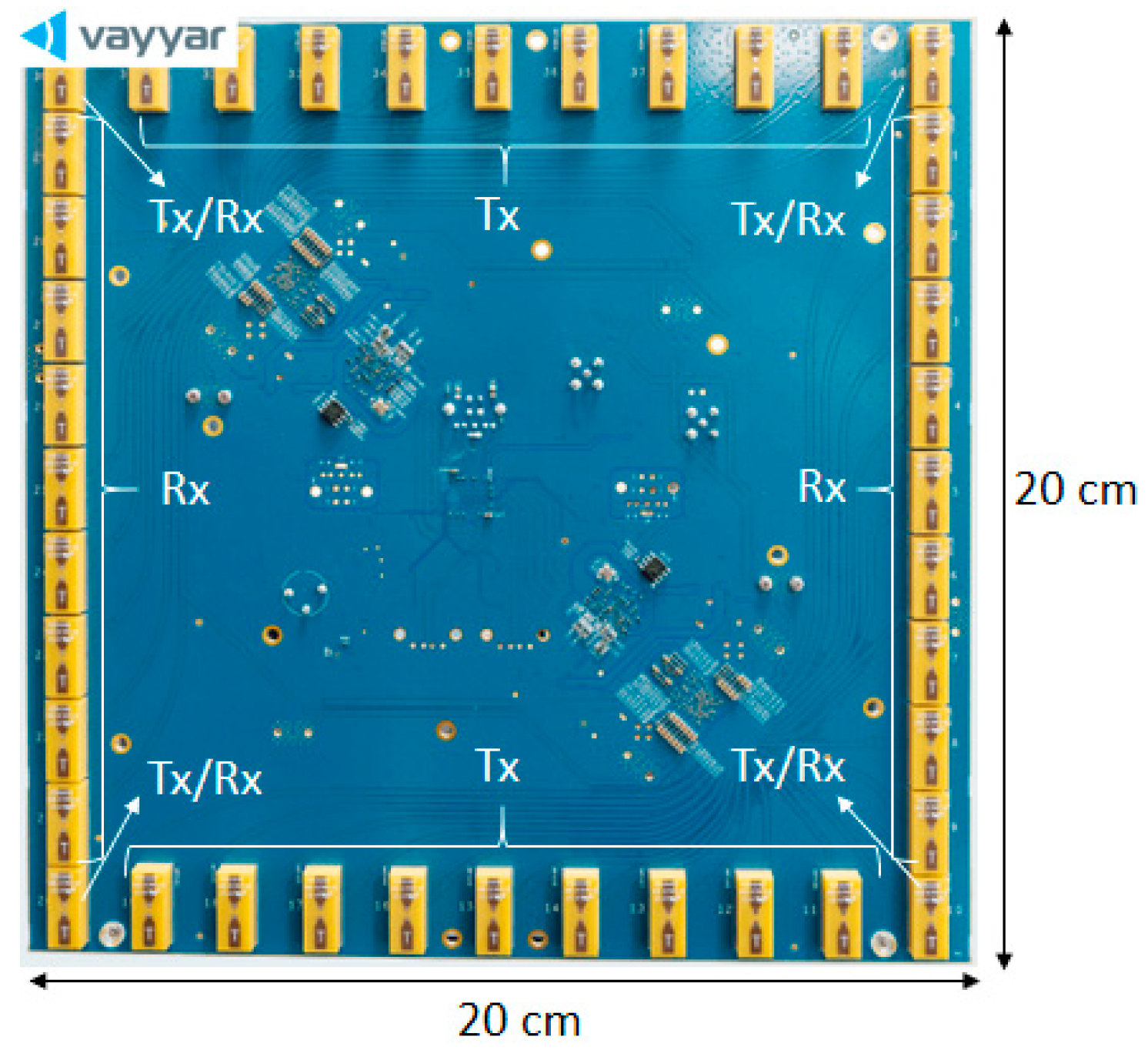

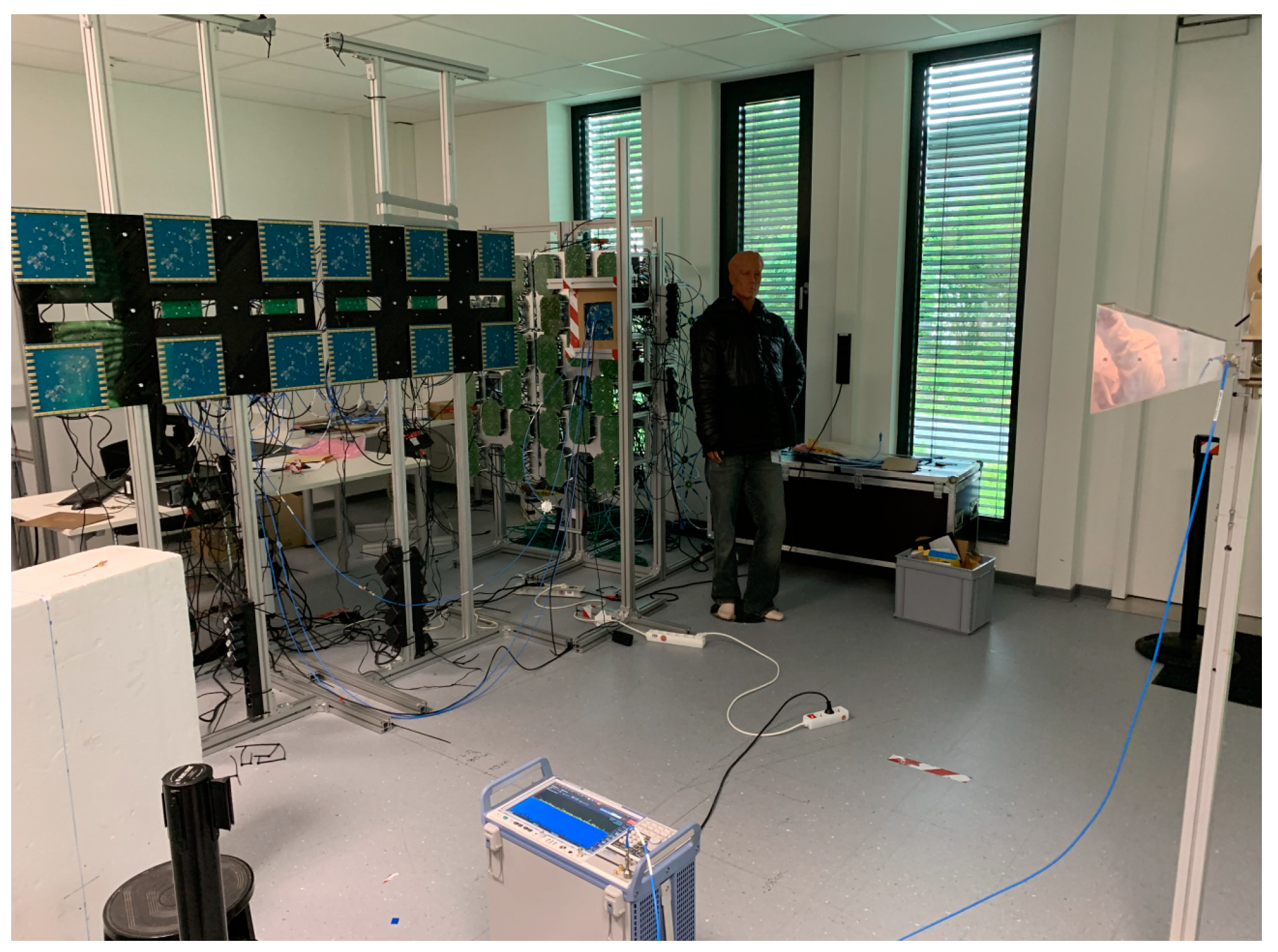

2. Description of the Imaging System Hardware

2.1. System Modular Structure

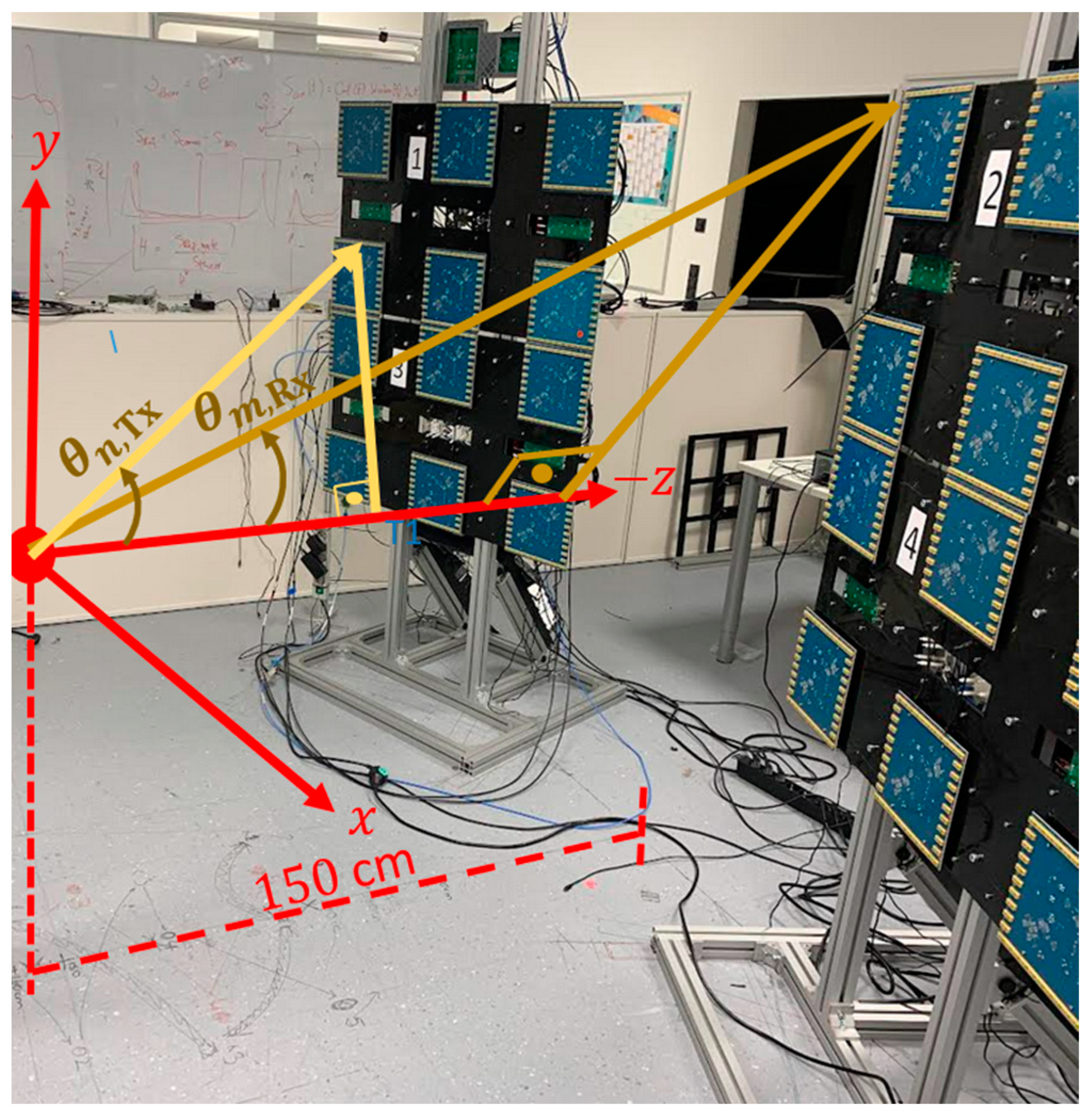

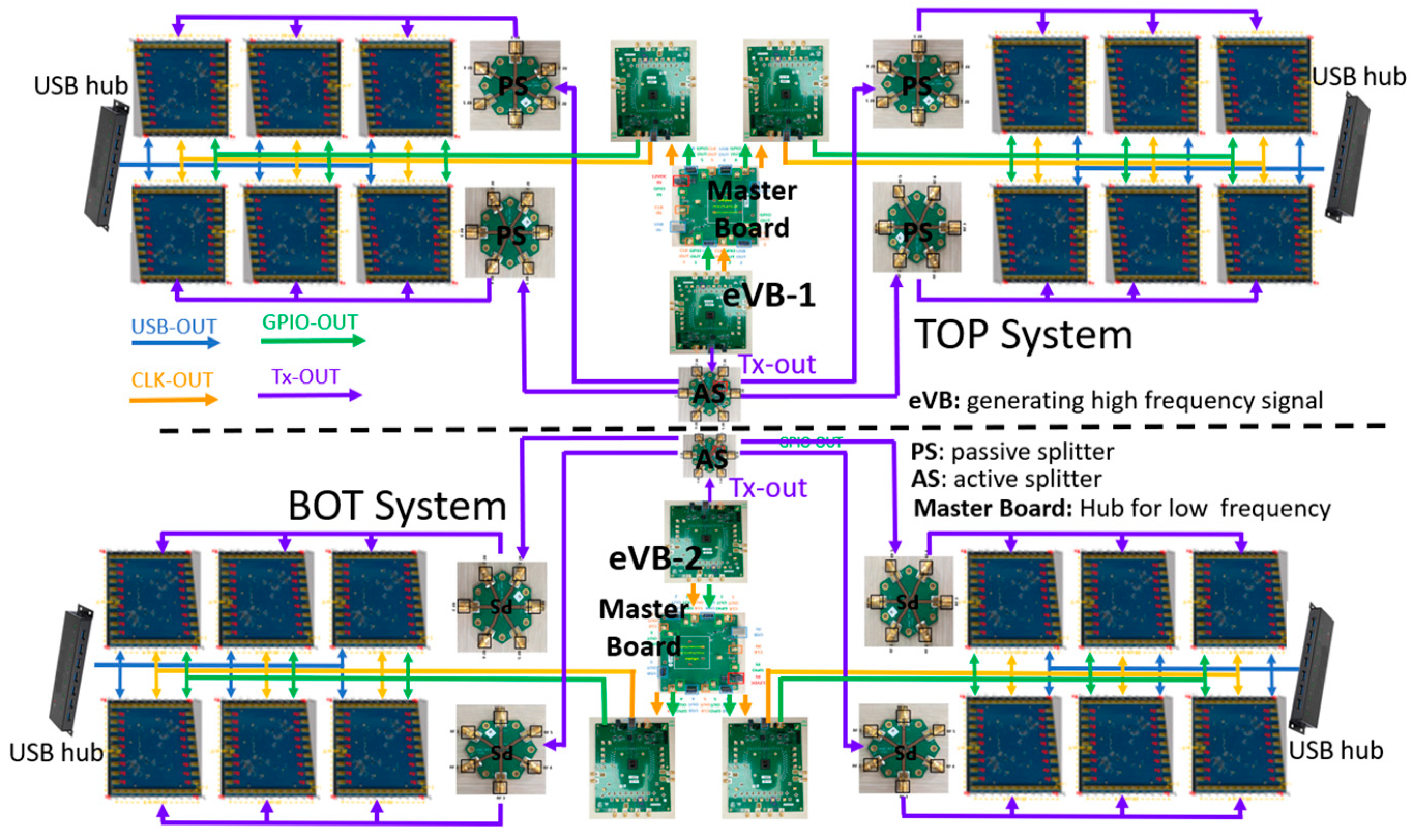

2.2. The MIC System Configuration

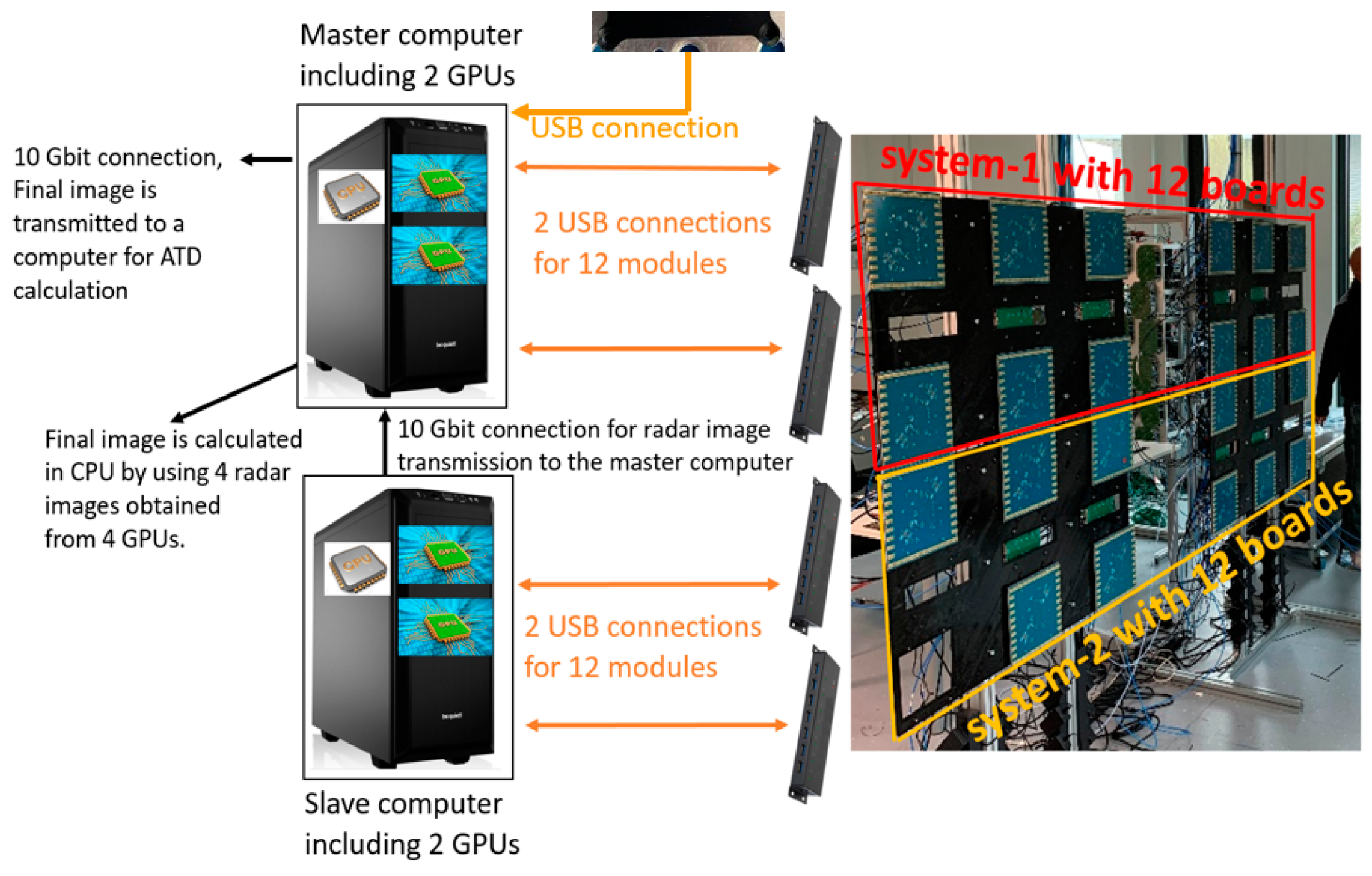

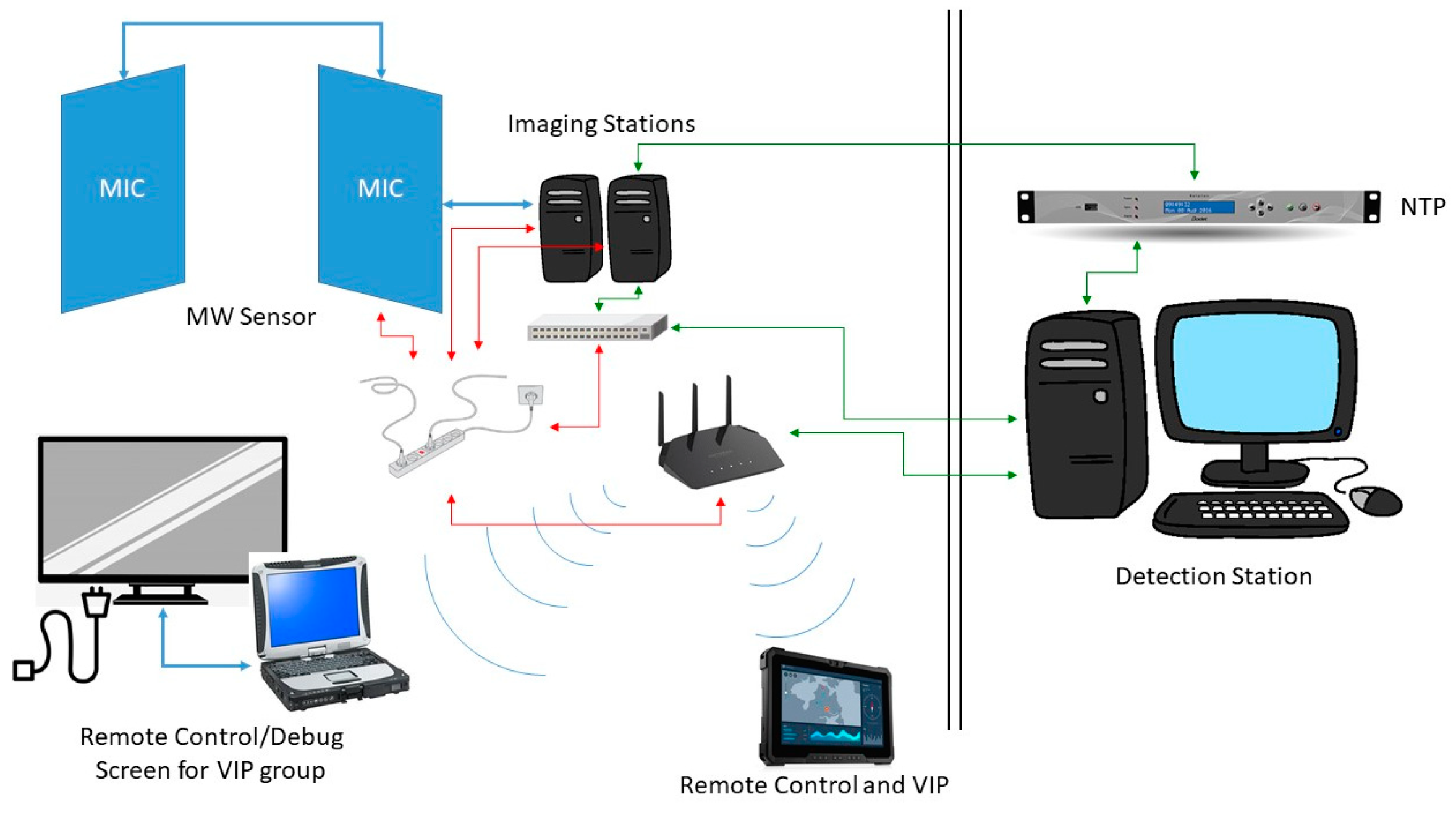

2.3. The MIC System Connection to Workstations

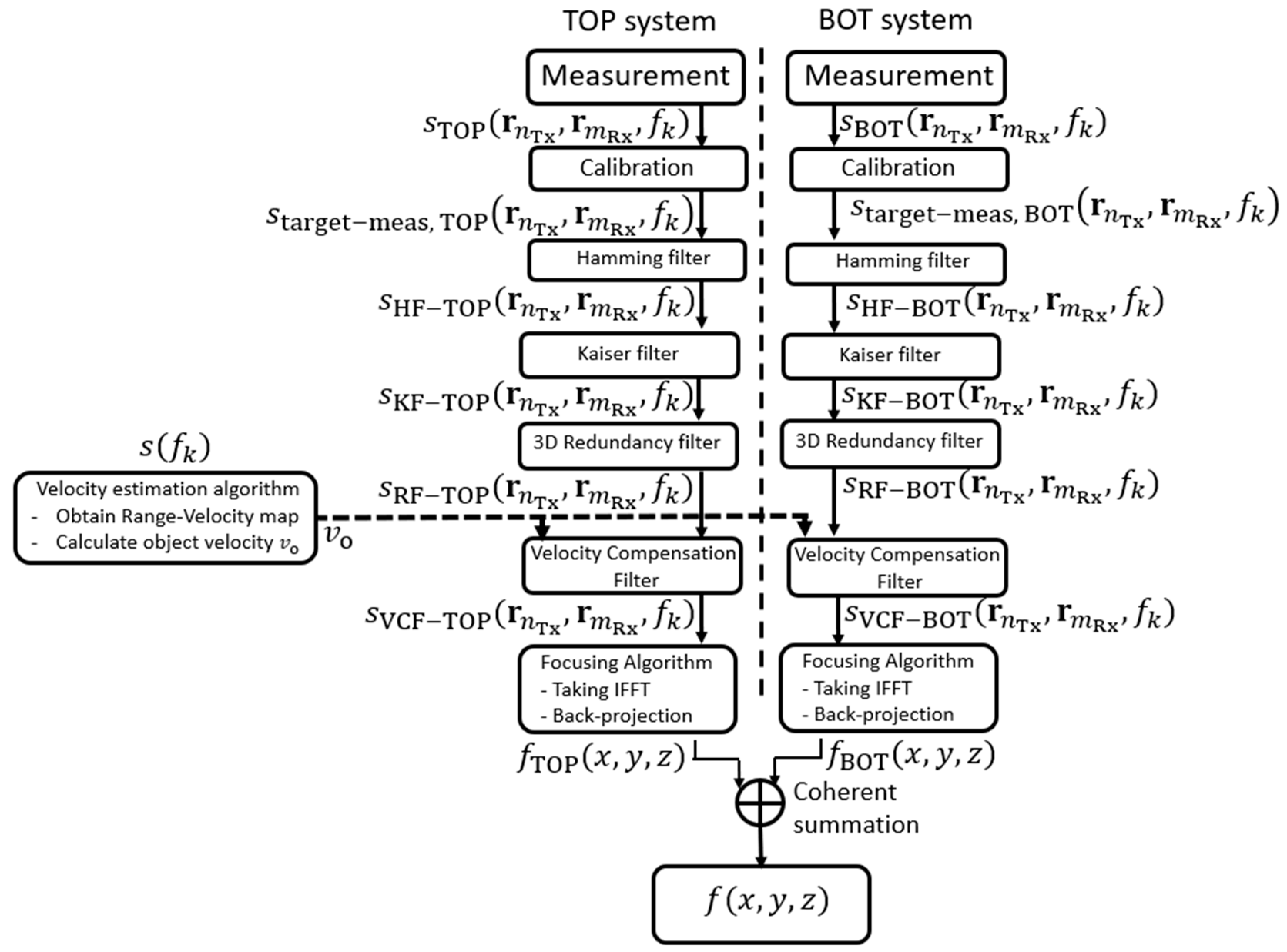

2.4. The Imaging System Algorithm

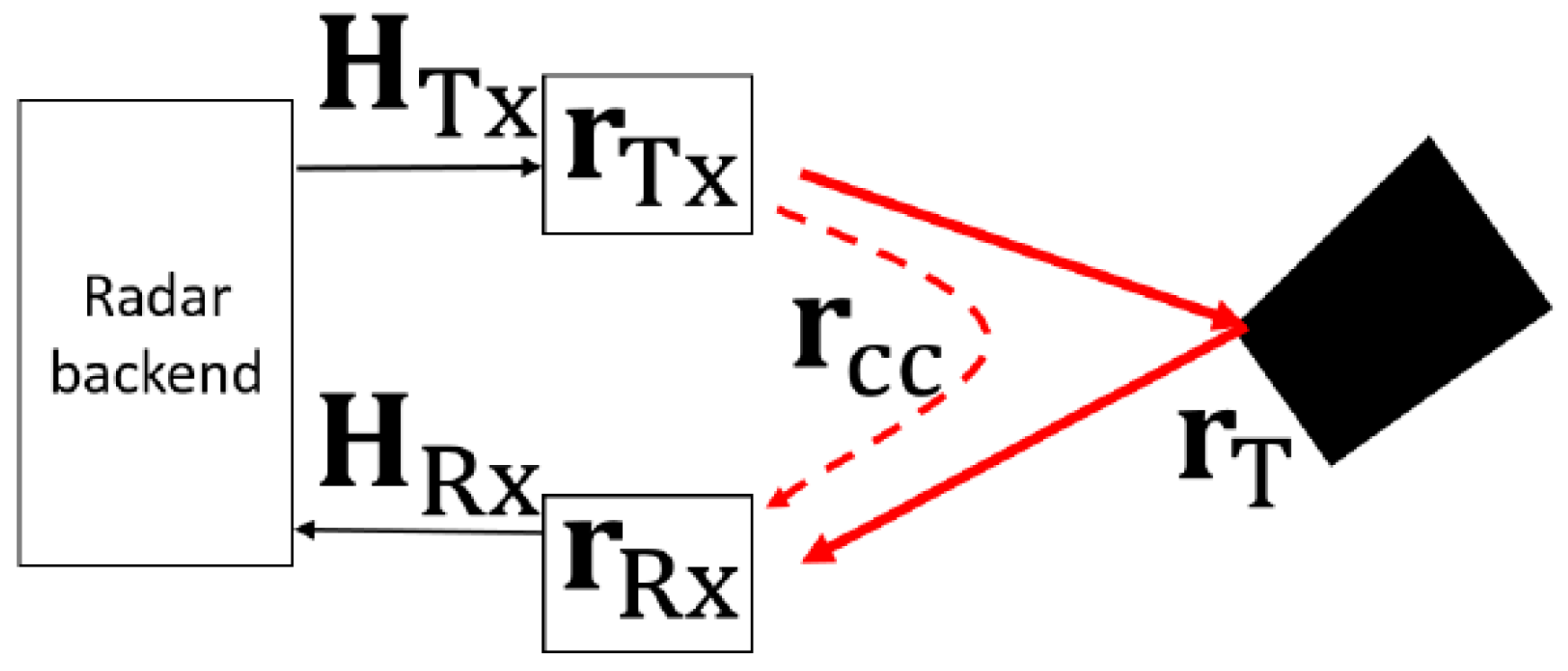

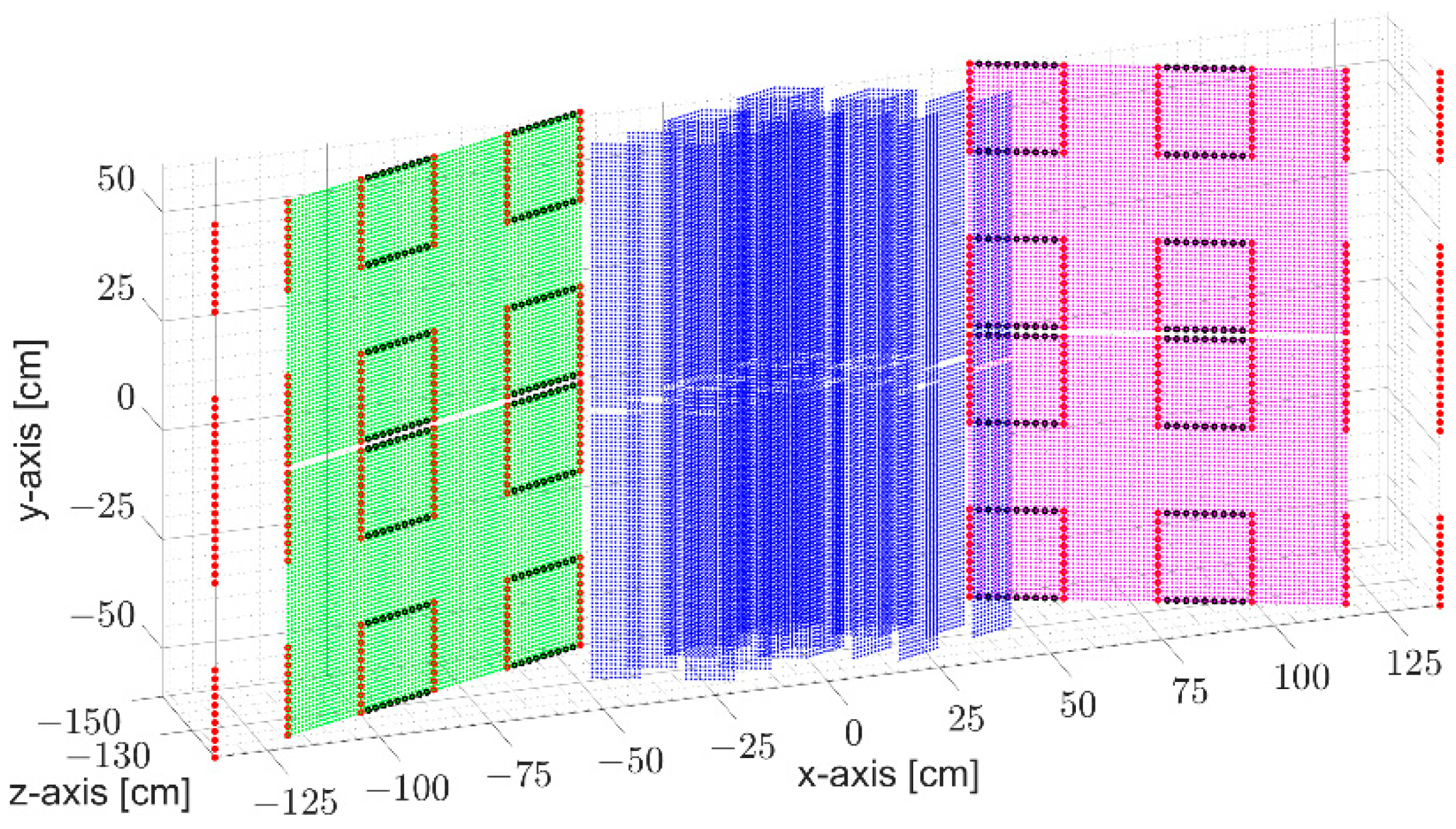

2.4.1. Imaging Algorithm

- Calibration: Signal processing errors appear in a radar image as a raised background level, i.e., a higher noise floor. To reduce this noise level, a calibration procedure based on [9] was developed. Calibration is the first filter in the imaging algorithm; it compensates the channel responses and therefore largely determines the quality of the radar image. If it is not performed rigorously, the image quality degrades noticeably. More details are provided in Section 2.4.2.

- Hamming Filter: The Hamming filter is a well-known filter that is frequently used in radar imaging applications [10]. Here, a 1D Hamming filter is applied to suppress the range side lobes, which would otherwise degrade the quality of the radar image.

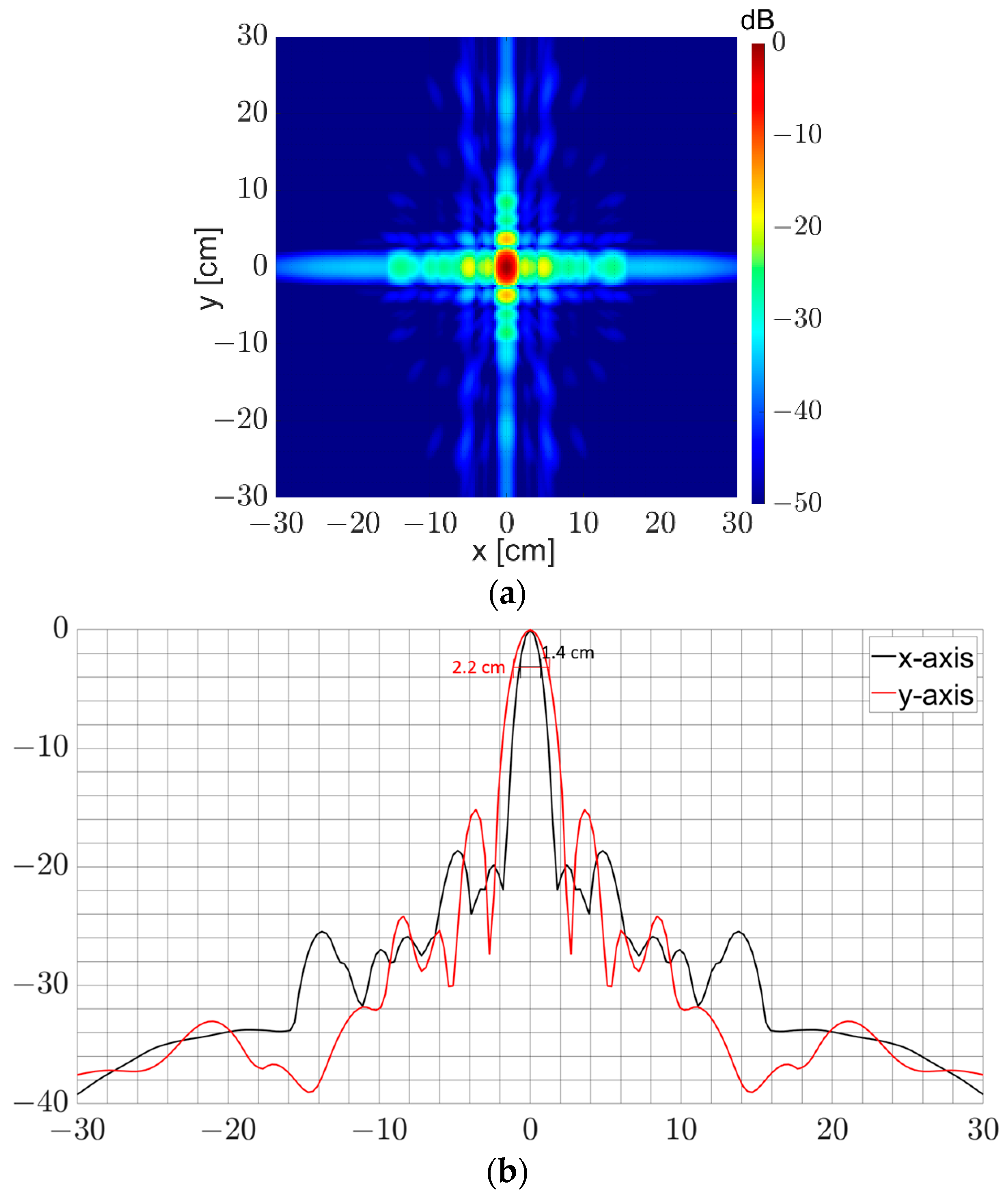

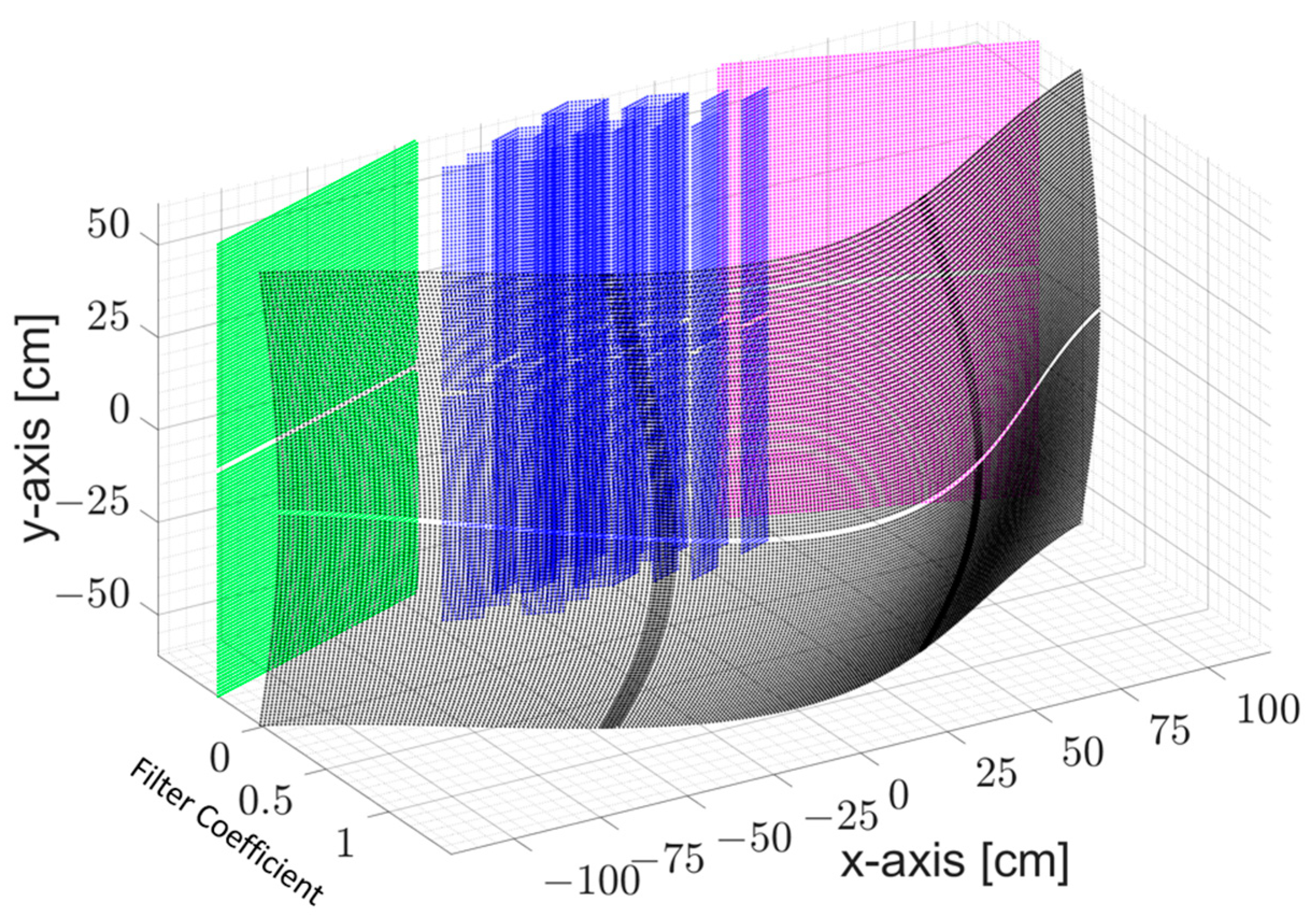

- Kaiser Filter: The virtual elements of the MIMO array populate its virtual aperture. Their abrupt termination at the edge of the virtual aperture can also degrade image quality, so additional smoothing may be required. The choice of window coefficient affects the lateral focusing quality of the array, namely the side-lobe level and the resolution of the image. A suitable coefficient for the Kaiser filter was selected by comparing the visibility of an object reconstructed with different coefficient values [11]. A coefficient of 4 was chosen (Figure 8).

- Virtual Element Redundancy Filter: The illumination of the virtual array aperture is generally not flat and can include abrupt changes, which must be smoothed to preserve image quality. The aperture is flattened by normalizing each scattering coefficient value by the number of antenna pairs whose virtual elements fall at the same spatial position within the virtual array aperture.

- Velocity Estimation and Compensation: The illuminated person is in motion during the acquisition. Although a single transmission lasts less than a millisecond, the total measurement time is 70 ms because the 176 transmitter antennas of each sub-system operate in time-division multiplexing. The movement over this interval results in a shift in the spatial domain, which is compensated using a velocity compensation filter.
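The effect of the two window filters described above can be illustrated with a short numerical sketch. It is not the authors' implementation: it only compares the peak side-lobe level of a rectangular (unweighted) window with a Hamming window over the 81 frequency points and with a Kaiser window over the aperture. The number of virtual elements (`N_VIRTUAL`) is a hypothetical value, and the "coefficient of 4" is assumed to correspond to the `beta` parameter of NumPy's `np.kaiser`, which the text does not confirm.

```python
import numpy as np


def peak_sidelobe_db(window, pad=64):
    """Peak side-lobe level of a window's spectrum, in dB relative to the main lobe."""
    n = len(window)
    spectrum = np.abs(np.fft.fft(window, n * pad))  # zero-padded for a fine grid
    spectrum /= spectrum[0]  # for a non-negative window the maximum is at bin 0
    half = spectrum[: len(spectrum) // 2]
    # Walk down the main lobe until the response starts rising again (first null).
    k = 1
    while k < len(half) - 1 and half[k + 1] < half[k]:
        k += 1
    # Everything beyond the first null is side lobe; report the strongest one.
    return 20 * np.log10(half[k:].max())


N_FREQ = 81        # frequency points per module (from the parameter table)
N_VIRTUAL = 101    # hypothetical number of virtual elements along one aperture axis
KAISER_BETA = 4.0  # assumption: the paper's "coefficient of 4" maps to NumPy's beta

psl = {
    "rectangular (range)": peak_sidelobe_db(np.ones(N_FREQ)),
    "hamming (range)": peak_sidelobe_db(np.hamming(N_FREQ)),
    "rectangular (aperture)": peak_sidelobe_db(np.ones(N_VIRTUAL)),
    "kaiser beta=4 (aperture)": peak_sidelobe_db(np.kaiser(N_VIRTUAL, KAISER_BETA)),
}

for name, level in psl.items():
    print(f"{name:26s} peak side lobe: {level:6.1f} dB")
```

Under these assumptions the unweighted windows show side lobes of roughly -13 dB, the Hamming window pushes them below -40 dB, and the Kaiser window with `beta = 4` lands in between. Lower side lobes come at the cost of a wider main lobe, which is the side-lobe/resolution trade-off mentioned for the Kaiser coefficient.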

2.4.2. Calibration Procedure

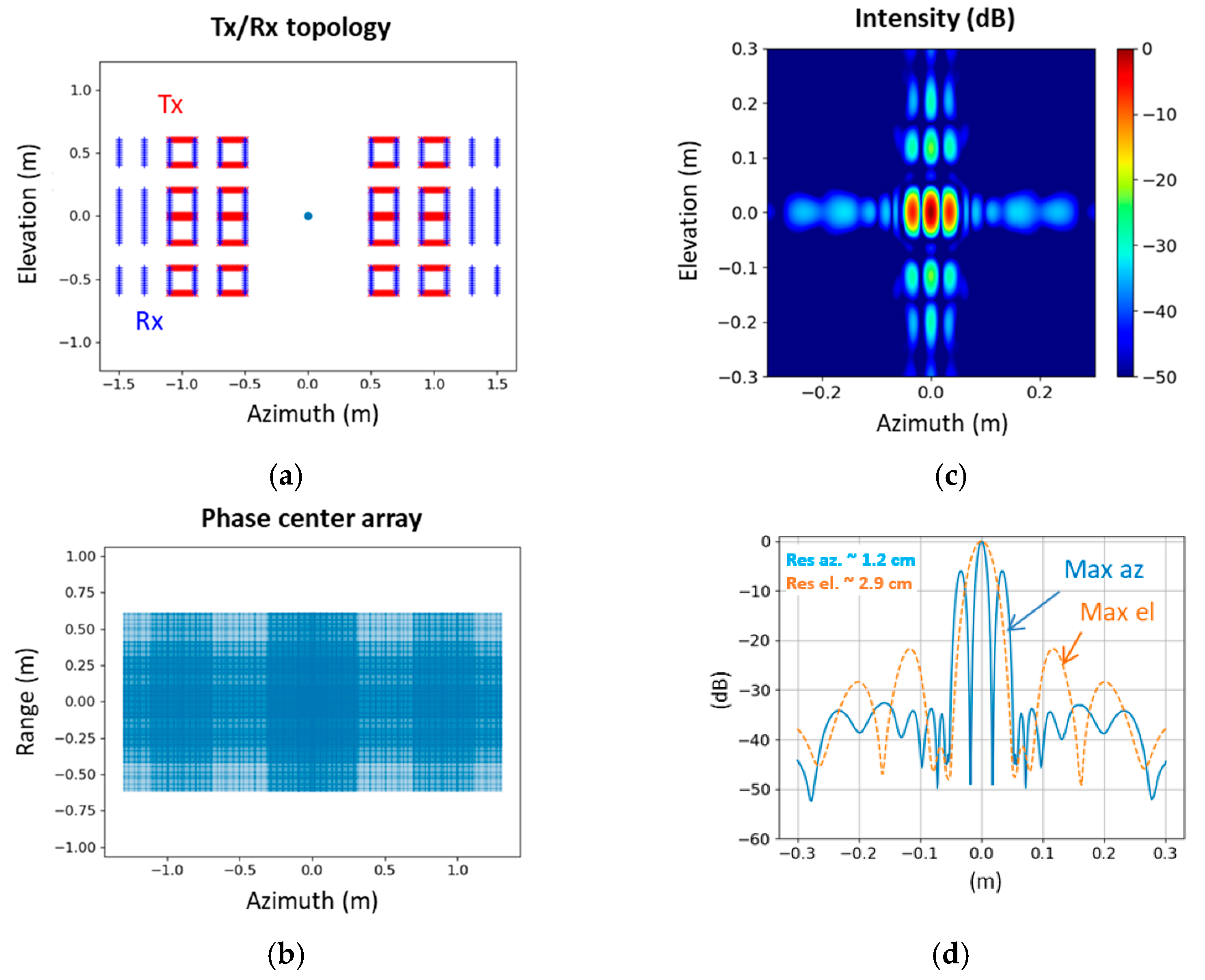

2.5. Imaging System Characterization

2.5.1. Point Spread Function Characterization

2.5.2. Signal-to-Noise Ratio Measurement

2.5.3. Radiation Power

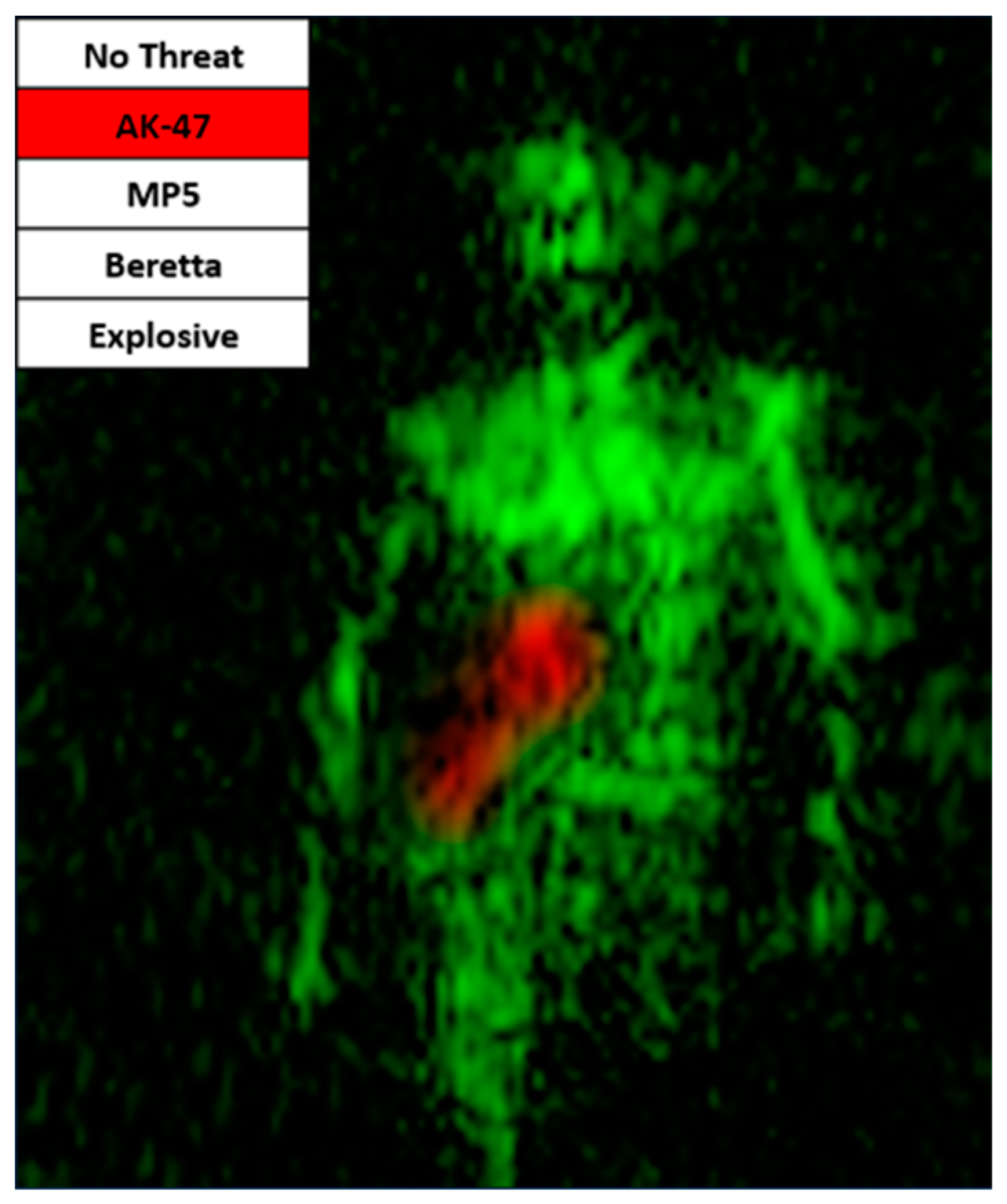

2.6. Artificial Intelligence Algorithm for Automatic Detection on Radar Imagery

2.6.1. MIC Convolutional Neural Network Classifier and Explainable Artificial Intelligence Description

- Convolutional Neural Network

- Explainable Artificial Intelligence module

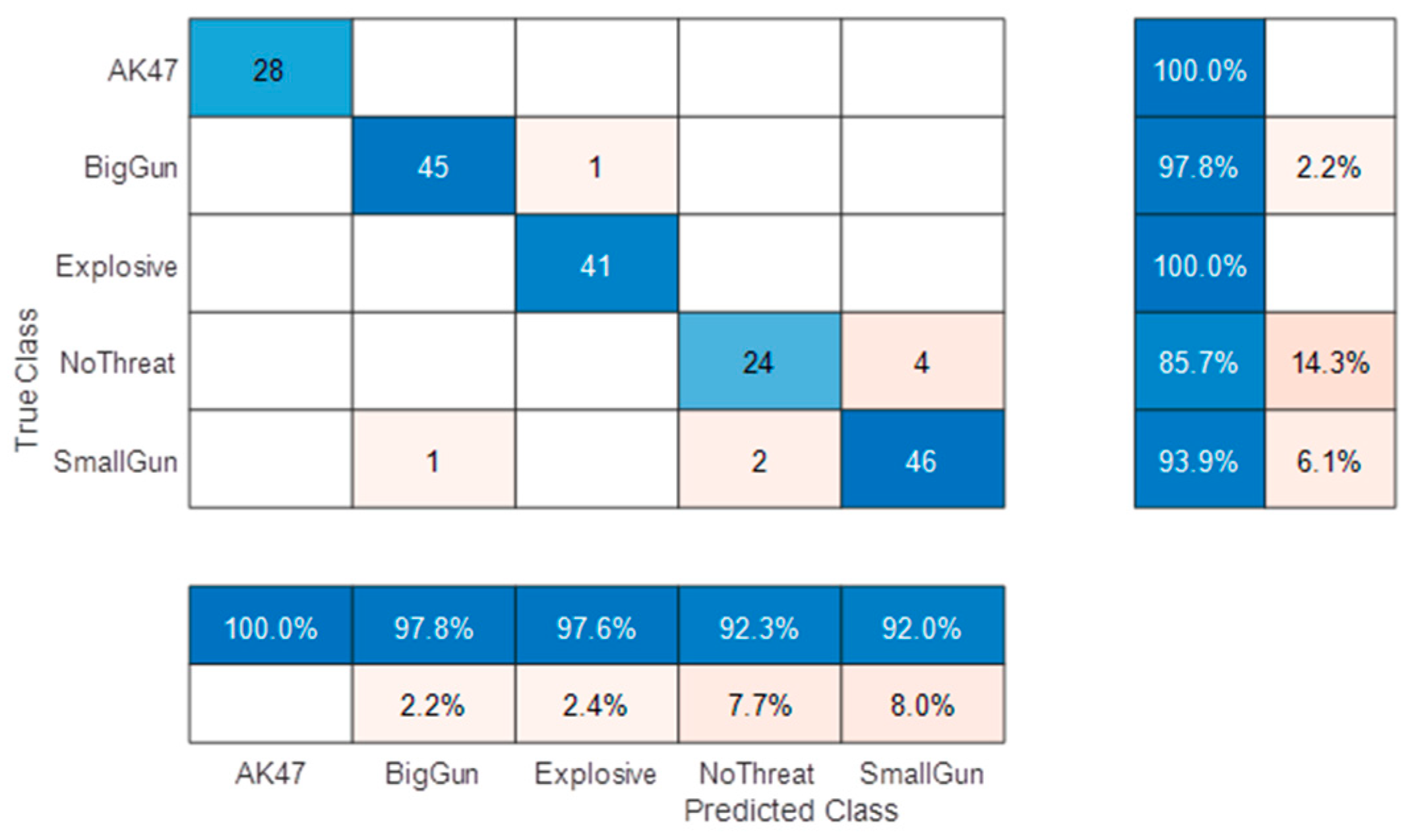

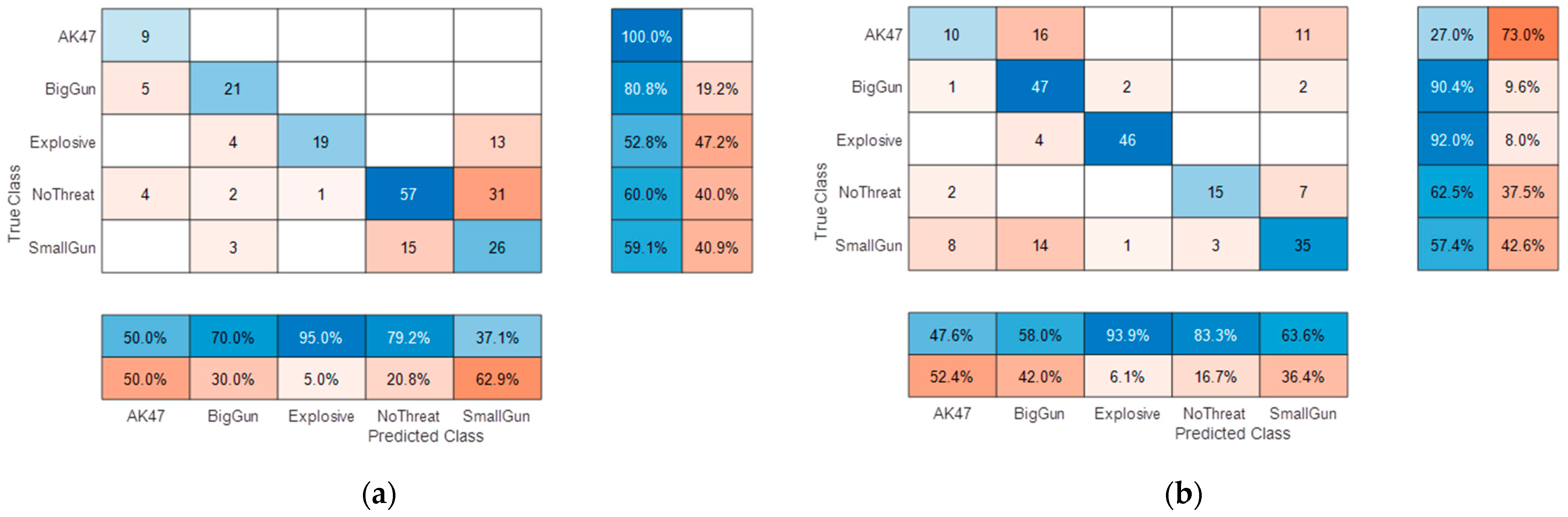

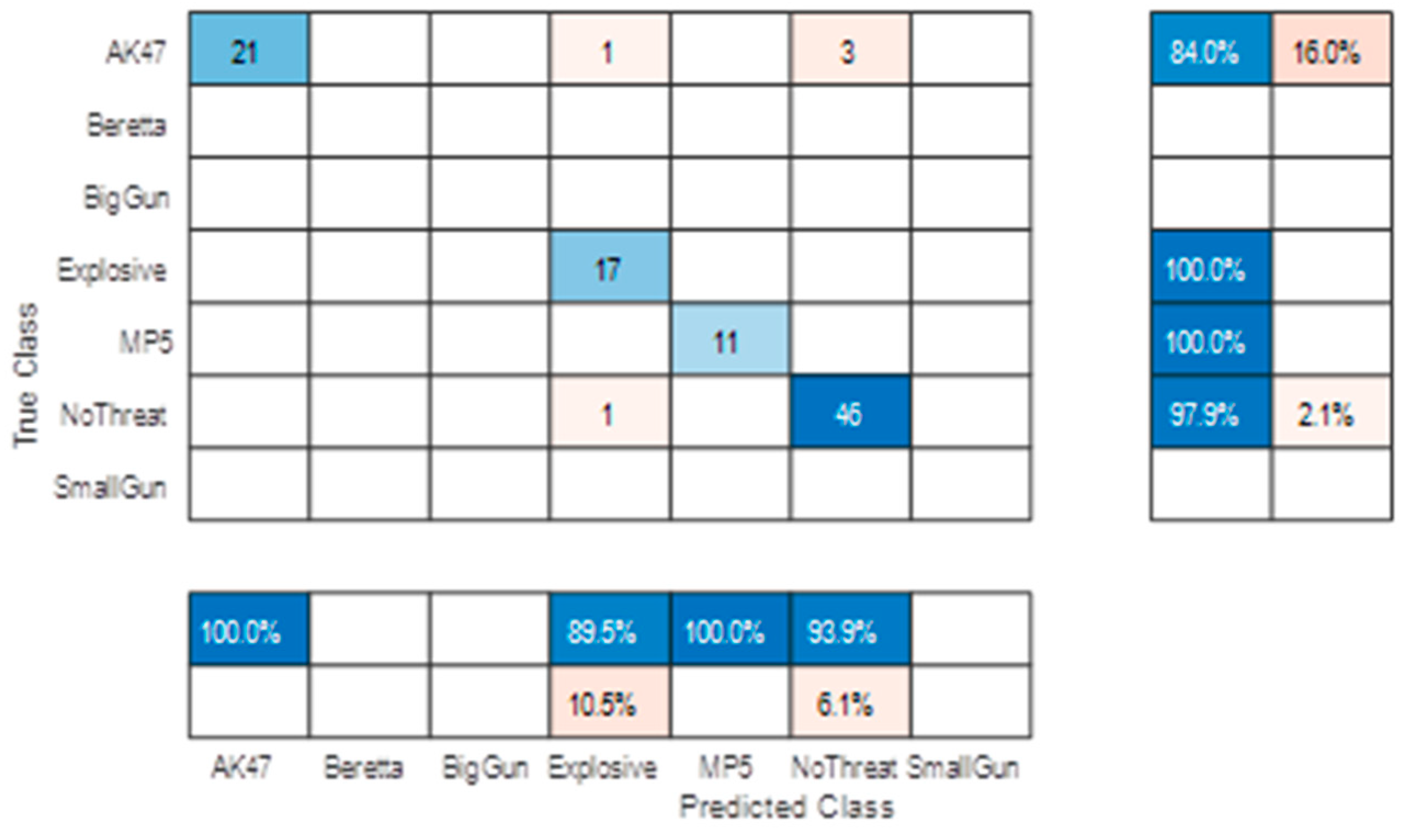

2.6.2. MIC Classification Performance Assessment on Static Targets

3. Performance Evaluation

3.1. Live Demonstration Setup

3.2. Field Trial Results

- 92.6% for threat detection and identification;

- 93% for threat detection;

- 94% for detection of objects concealed under clothes;

- 96% for detection of big concealed objects (excluding the small-gun cases, which were at the limit of the system in terms of target size).

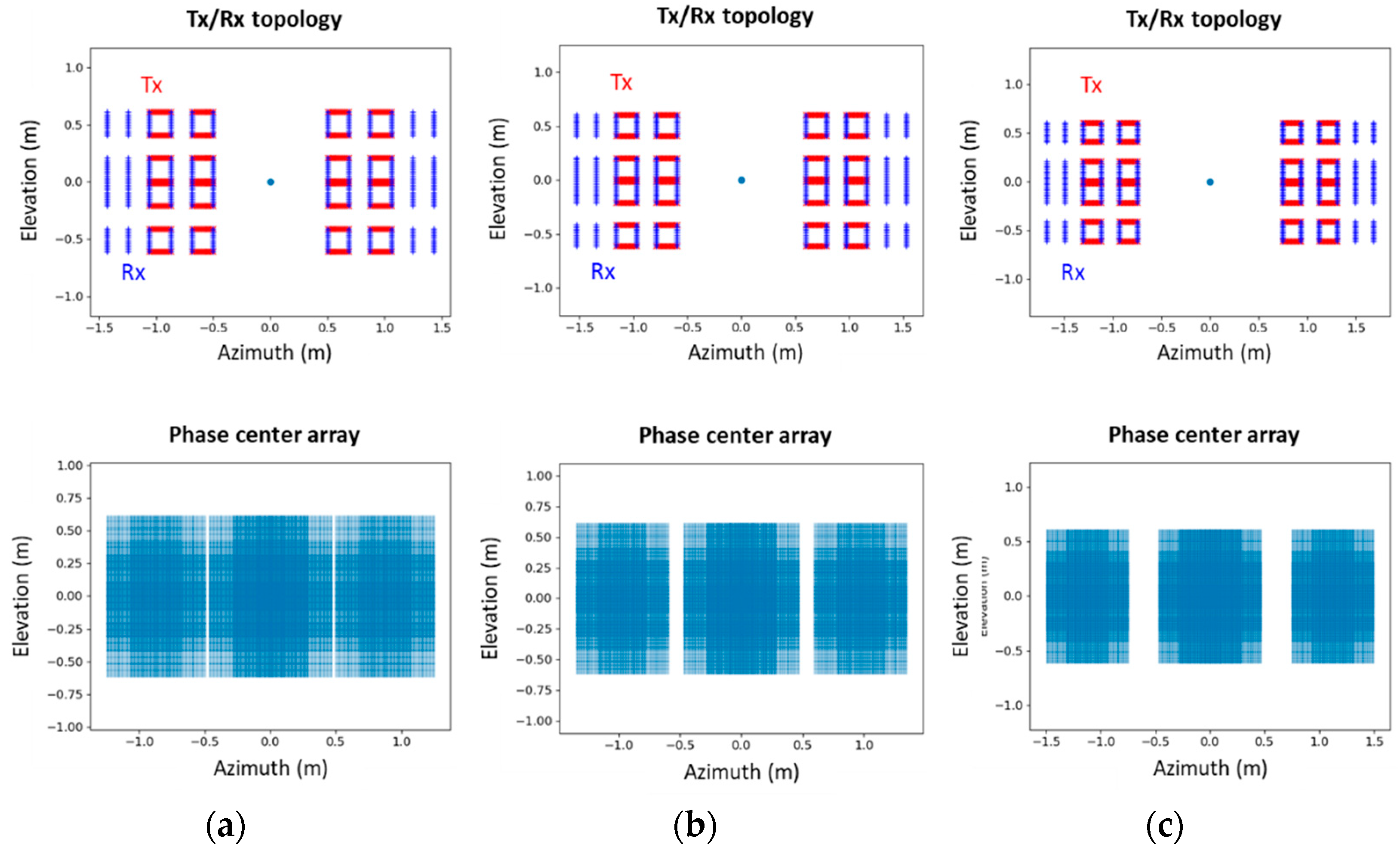

4. Perspectives for Operational Sensors: Wider Door and Wall Configuration

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Sheen, D.M.; Fernandes, J.L.; Tedeschi, J.R.; McMakin, D.L.; Jones, A.M.; Lechelt, W.M.; Severtsen, R.H. Wide-bandwidth, wide-beamwidth, high-resolution, millimeter-wave imaging for concealed weapon detection. In Passive and Active Millimeter-Wave Imaging XVI; International Society for Optics and Photonics: Bellingham, WA, USA, 2013; Volume 8715, pp. 65–75.

- Sheen, D.M.; McMakin, D.L.; Lechelt, W.M.; Griffin, J.W. Circularly polarized millimeter-wave imaging for personnel screening. In Passive Millimeter-Wave Imaging Technology VIII; International Society for Optics and Photonics: Bellingham, WA, USA, 2005; Volume 5789, pp. 117–126.

- Zhuge, X.; Yarovoy, A. A sparse aperture MIMO-SAR-based UWB imaging system for concealed weapon detection. IEEE Trans. Geosci. Remote Sens. 2011, 49, 509–518.

- Anadol, E.; Seker, I.; Camlica, S.; Topbas, T.O.; Koc, S.; Alatan, L.; Oktem, F.; Civi, O.A. UWB 3D near-field imaging with a sparse MIMO antenna array for concealed weapon detection. In Radar Sensor Technology XXII; Ranney, K.I., Doerry, A., Eds.; International Society for Optics and Photonics SPIE: Bellingham, WA, USA, 2018; Volume 10633, pp. 458–472.

- Ahmed, S.S.; Schiessl, A.; Schmidt, L.-P. A novel fully electronic active real-time imager based on a planar multistatic sparse array. IEEE Trans. Microw. Theory Tech. 2011, 59, 3567–3576.

- Sheen, D.M.; McMakin, D.L.; Collins, H.D.; Hall, T.E.; Severtsen, R.H. Concealed explosive detection on personnel using a wideband holographic millimeter-wave imaging system. In Signal Processing, Sensor Fusion, and Target Recognition V; Kadar, I., Libby, V., Eds.; International Society for Optics and Photonics SPIE: Bellingham, WA, USA, 1996; Volume 2755, pp. 503–513.

- Available online: https://vayyar.com/public-safety/ (accessed on 13 November 2023).

- Li, J.; Stoica, P. MIMO radar with colocated antennas. IEEE Signal Process. Mag. 2007, 24, 106–114.

- Genghammer, A.; Juenemann, R.; Ahmed, S.; Gumbmann, F. Calibration Device and Calibration Method for Calibrating Antenna Arrays. U.S. Patent US 10,527,714 B2, 7 January 2020.

- Wang, G.; Tang, T.; Ye, J.; Zhang, F. Research on sidelobe suppression method in LFM pulse compression technique. IOP Conf. Series Mater. Sci. Eng. 2019, 677, 052104.

- Ahmed, S. Electronic Microwave Imaging with Planar Multistatic Arrays. Ph.D. Thesis, University Erlangen, Erlangen, Germany, 2015.

- 1999/519/EC; Council Recommendation of 12 July 1999 on the Limitation of Exposure of the General Public to Electromagnetic Fields (0 Hz to 300 GHz). C.O.T.E. UNION: Barcelona, Spain, 1999.

- Ciresan, D.C.; Meier, U.; Masci, J.; Gambardella, L.M.; Schmidhuber, J. Flexible, High Performance Convolutional Neural Networks for Image Classification. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011; Volume 2, pp. 1237–1242.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Héder, M. Explainable AI: A Brief History of the Concept. ERCIM News 2023, 134, 9–10.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Parameter | Value |
|---|---|
| Module: Frequency Range | 6.5 GHz–10.5 GHz |
| Module: Number of Frequencies | 81 points |
| Total number of transmitter antennas | 352 |
| Total number of receiver antennas | 528 |
| Total number of modules | 24 |
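A few derived quantities follow from these parameters by simple arithmetic. The sketch below is illustrative only: it assumes the 81 frequency points are uniformly spaced and include both band edges, uses the textbook monostatic stepped-frequency relations c/(2B) and c/(2Δf), which only approximate a near-field MIMO geometry, and takes a walking speed of 1.4 m/s as a hypothetical value. The 70 ms measurement time and the 176 transmitters per sub-system are taken from Section 2.4.1.

```python
C = 299_792_458.0  # speed of light in m/s

# Values from the parameter table
f_start_hz = 6.5e9
f_stop_hz = 10.5e9
n_freq = 81

# Values from Section 2.4.1
n_tx_per_subsystem = 176
frame_time_s = 70e-3

# Assumption (not from the paper): typical walking speed
walking_speed_mps = 1.4

bandwidth_hz = f_stop_hz - f_start_hz
freq_step_hz = bandwidth_hz / (n_freq - 1)        # assumes uniform spacing, edges included
range_resolution_m = C / (2 * bandwidth_hz)       # monostatic approximation
unambiguous_range_m = C / (2 * freq_step_hz)      # monostatic approximation

slot_time_s = frame_time_s / n_tx_per_subsystem   # time per transmitter in TDM mode
displacement_m = walking_speed_mps * frame_time_s # motion during one full acquisition

print(f"bandwidth          : {bandwidth_hz / 1e9:.1f} GHz")
print(f"frequency step     : {freq_step_hz / 1e6:.1f} MHz")
print(f"range resolution   : {range_resolution_m * 100:.2f} cm")
print(f"unambiguous range  : {unambiguous_range_m:.2f} m")
print(f"time per Tx slot   : {slot_time_s * 1e3:.2f} ms")
print(f"motion in one frame: {displacement_m * 100:.1f} cm")
```

With these assumptions the band gives a range resolution of about 3.75 cm and an unambiguous range of about 3 m. Each transmitter slot lasts about 0.4 ms, consistent with a single transmission being below a millisecond, while a walking person moves by nearly 10 cm during one acquisition, which is more than the range resolution and indicates why velocity compensation is needed.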

| Confusions | False Positives | False Negatives |
|---|---|---|
| Small gun instead of big gun (2) | Small gun on woman (2) | Big gun on woman (2) |
| Explosive belt instead of a Backpack on the front (3) | Explosive belt on woman (1) | Small gun on woman (2) |
| AK47 instead of a big Metallic Bottle on the front (1) | AK47 on woman with arms crossed (1) | Small gun on man (3) |
| Small gun on “phone texting” (1) | Explosive belt on woman (1) | |
| Big gun on “phone texting” (1) | Explosive belt on man (2) | |
| Umbrella confused with small gun (1) | AK47 in wrong position | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Baqué, R.; Vignaud, L.; Wasik, V.; Castet, N.; Herschel, R.; Cetinkaya, H.; Brandes, T. MIC: Microwave Imaging Curtain for Dynamic and Automatic Detection of Weapons and Explosive Belts. Sensors 2023, 23, 9531. https://doi.org/10.3390/s23239531
