Smartphone LiDAR Data: A Case Study for Numerisation of Indoor Buildings in Railway Stations

Abstract

1. Introduction

2. State of the Art

2.1. BIM and Scan Technology

2.2. KPI: Indicators for Quality Process of Point Clouds

2.3. Mobile Device Experimentation

2.4. Synthesis

3. Material and Method

3.1. Material: LiDAR Smartphone: iPhone 12 Pro

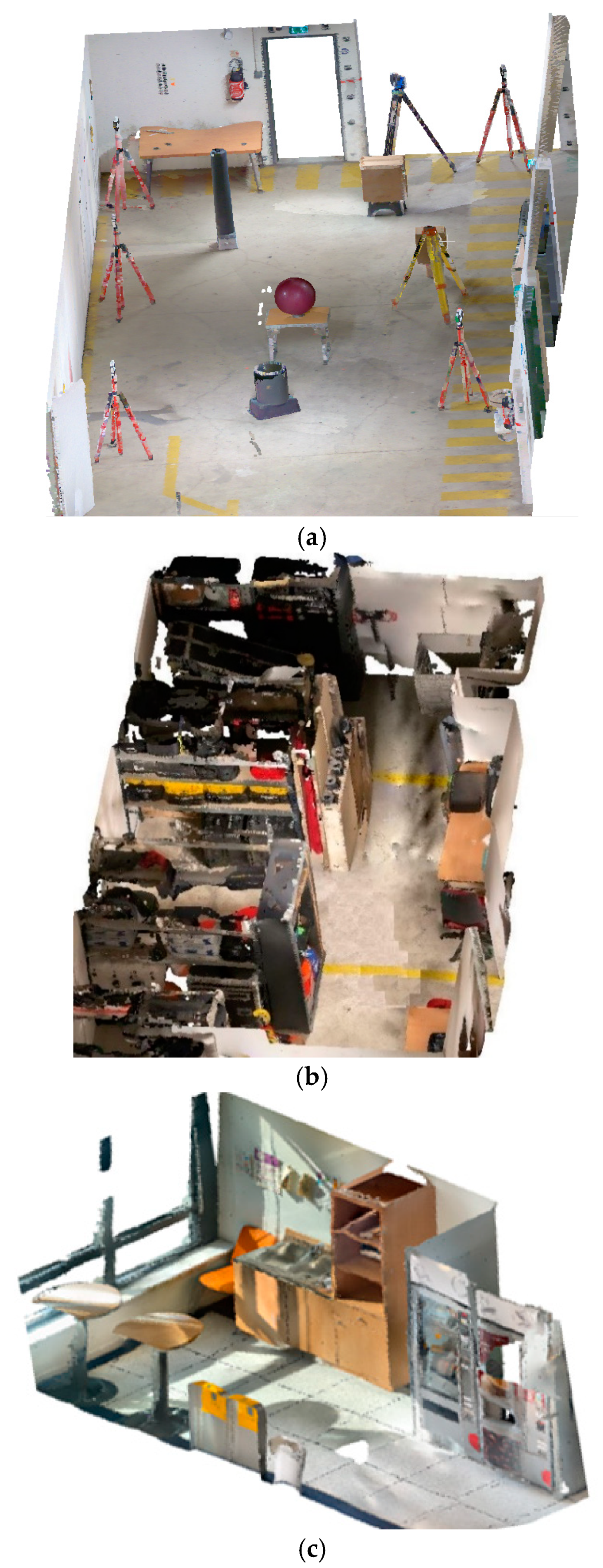

3.2. Railway Stations Use Case

3.3. Experimental Approach to Evaluate LiDAR Smartphone Point Clouds in an Indoor Building Context: Protocol

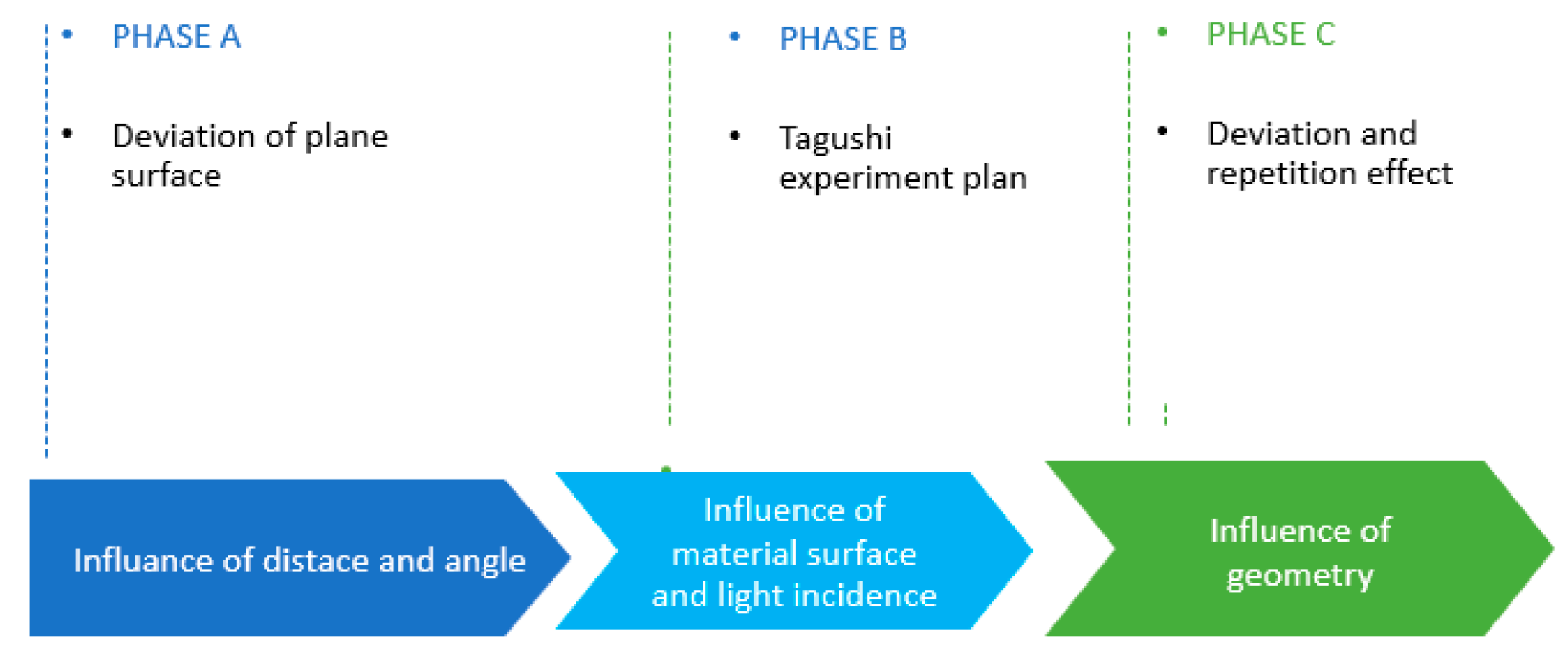

3.3.1. Experiments in Controlled Environment

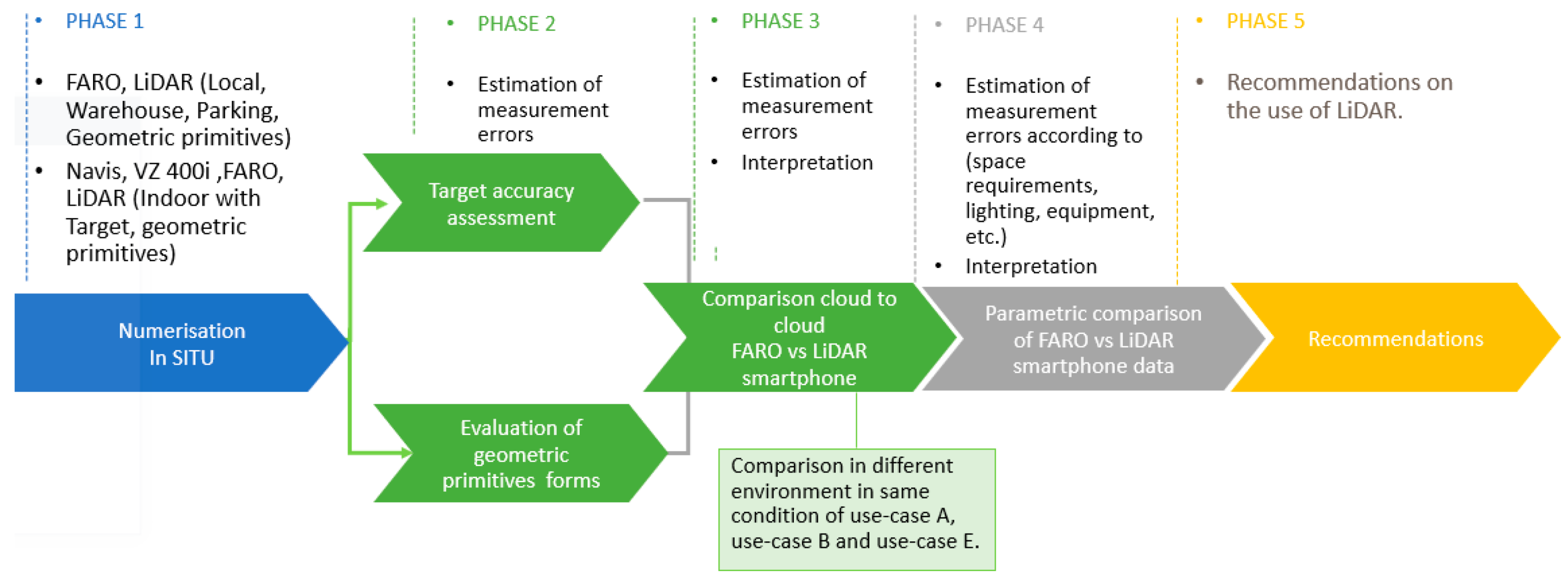

3.3.2. In Situ Experiments

4. Results

4.1. LiDAR Smartphone Performance Tests in a Controlled Environment

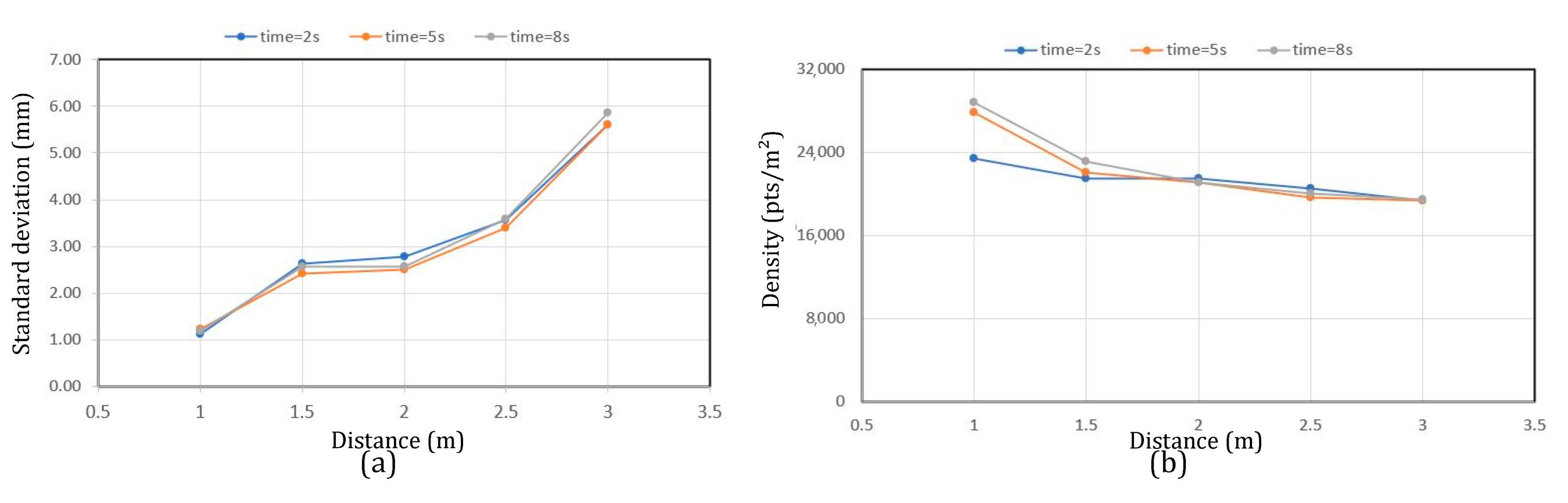

4.1.1. Influence of Distance and Time on Data (Phase A)

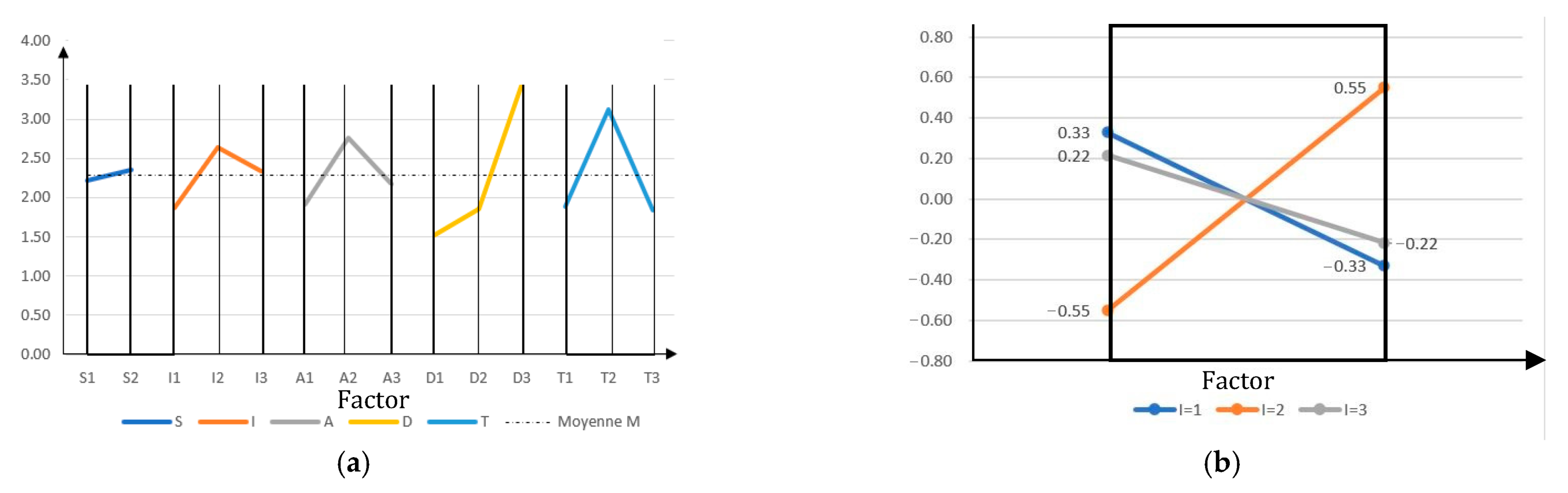

4.1.2. Influence of the Material Surface and Light Incidence (Phase B)

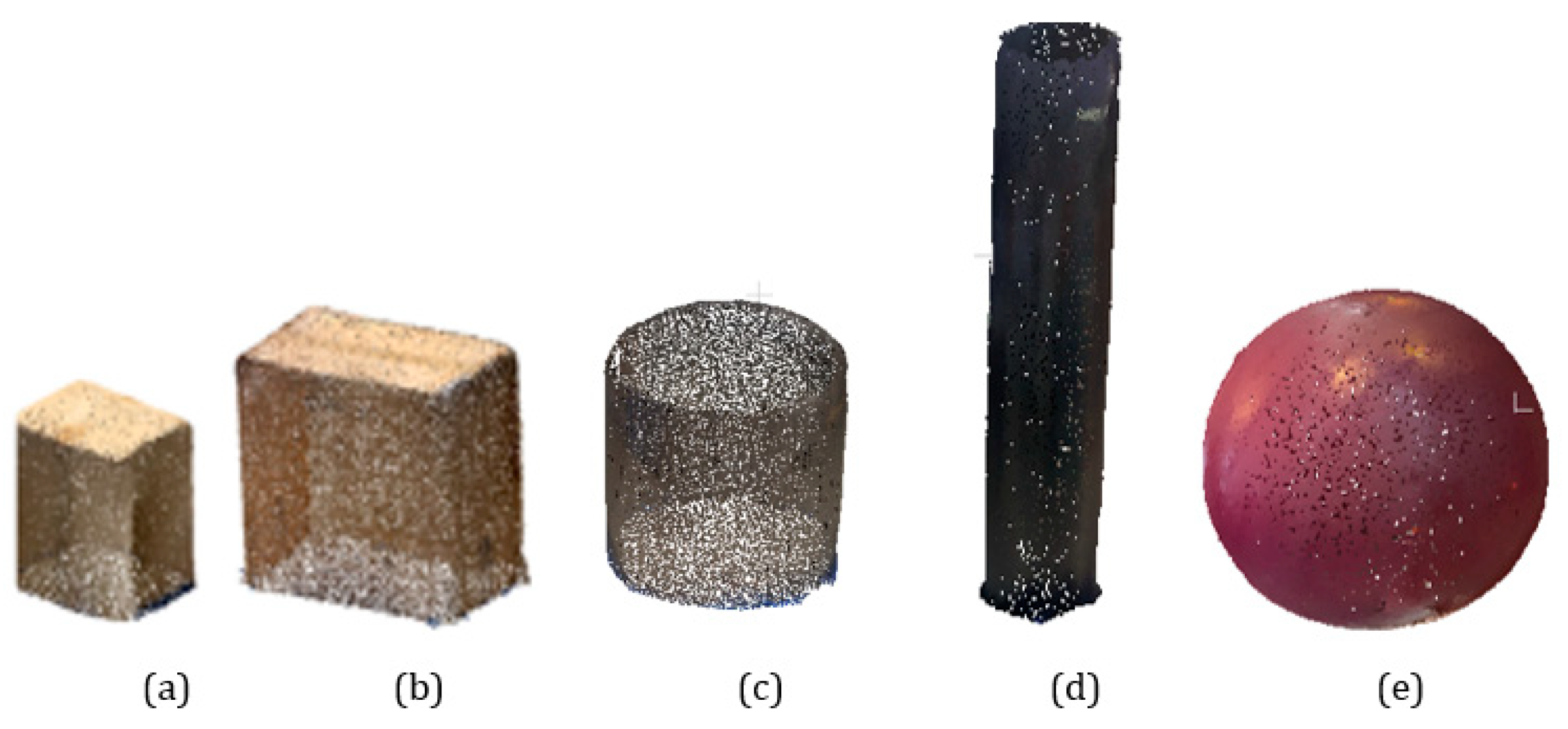

4.1.3. Influence of the Geometry (Phase C)

4.2. In Situ Experiments

4.2.1. Comparison of LiDAR Smartphone with Navis, RIEGL, and FARO Scanner: Target Accuracy Assessment (Phase 2.1)

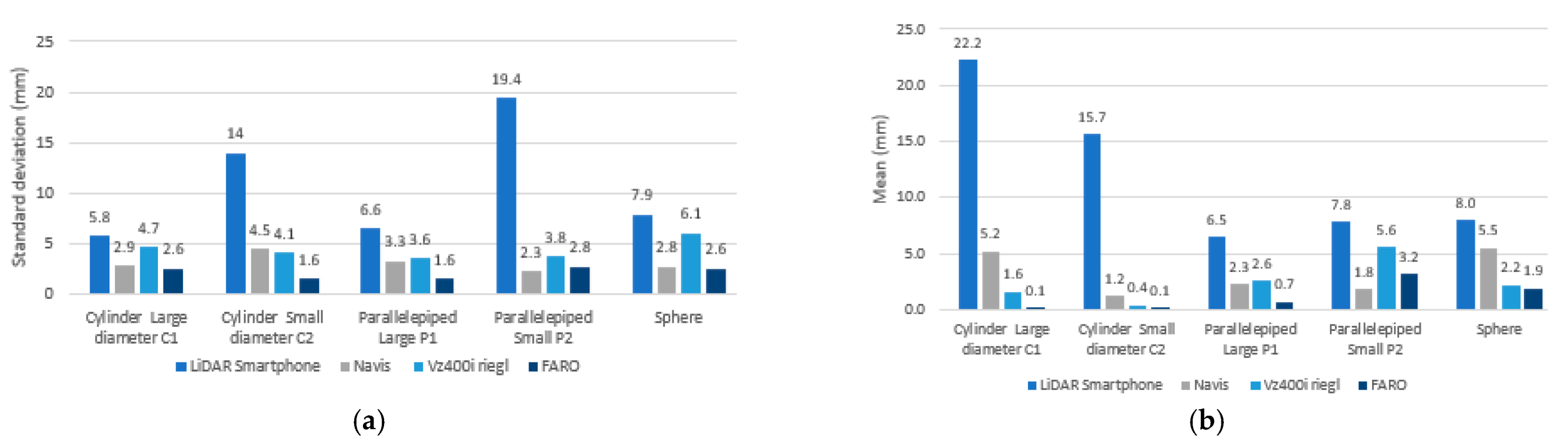

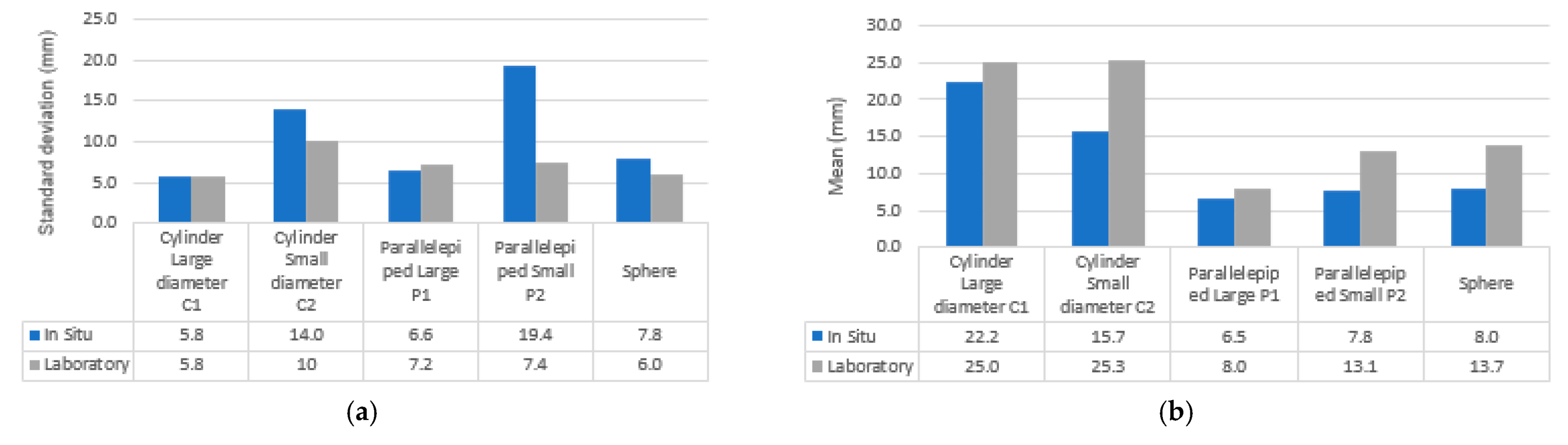

4.2.2. Comparison of LiDAR Smartphone with Navis, RIEGL, and FARO Scanner: Evaluation of Geometric Primitive’s Form (Phase 2.2)

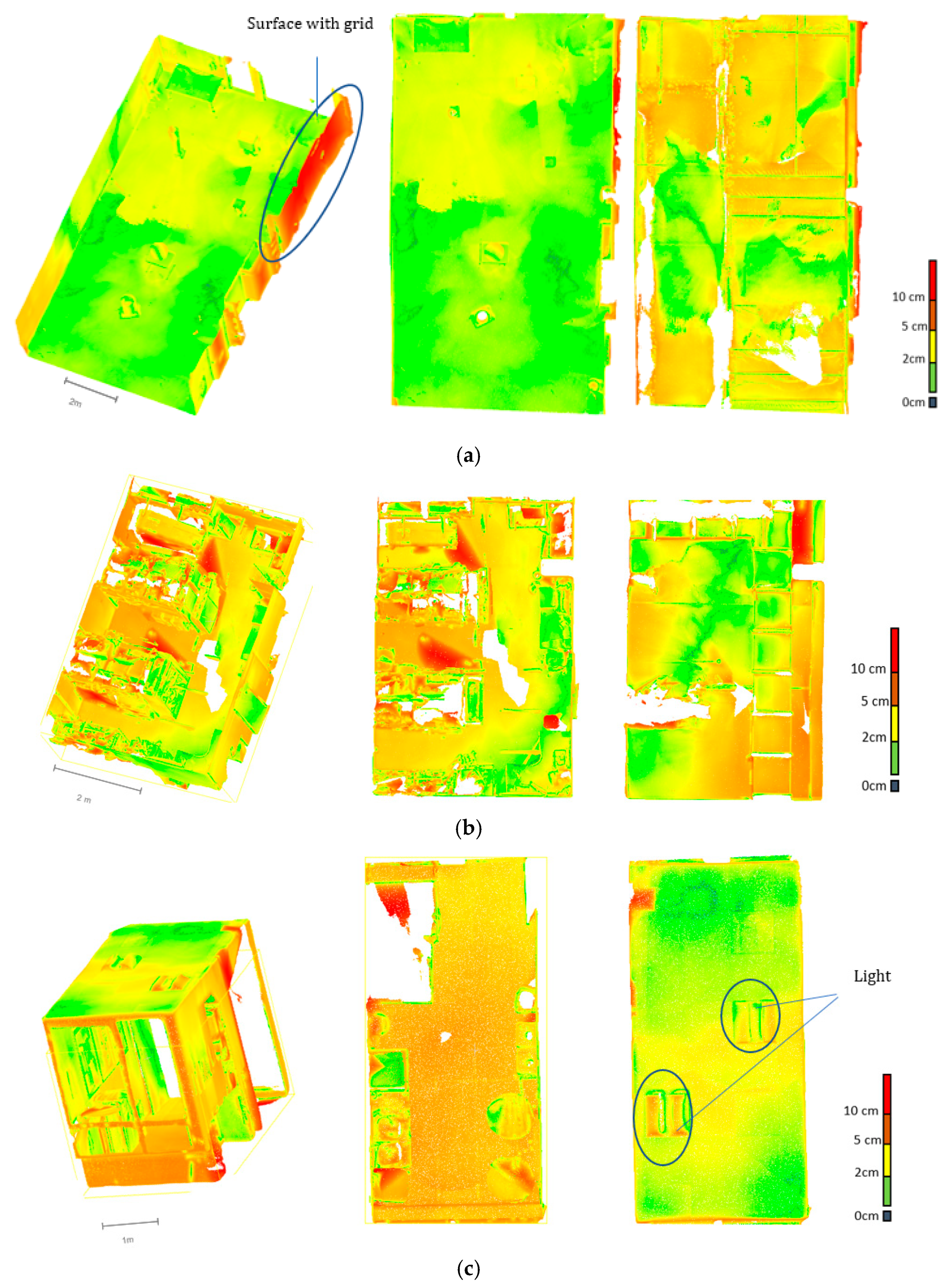

4.2.3. Comparison of Environment Quality of Reconstruction: LiDAR Smartphone vs. FARO (Phase 3)

4.2.4. Parametric Comparison of FARO vs. LiDAR Smartphone Data (Phase 4)

5. Discussions: Recommendation for the Use of LiDAR Technologies in Railway Context

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Tests | Surface | Incidence of light | Angle | Distance | Time |
|---|---|---|---|---|---|
| L1 | S1 = Wood | I1 = 0° | A1 = 0° | D1 = 1 m | T1 = 2 s |
| L2 | S1 = Wood | I1 = 0° | A2 = 25° | D2 = 2 m | T2 = 5 s |
| L3 | S1 = Wood | I1 = 0° | A3 = 45° | D3 = 3 m | T3 = 8 s |
| L4 | S1 = Wood | I2 = 40° | A1 = 0° | D1 = 1 m | T2 = 5 s |
| L5 | S1 = Wood | I2 = 40° | A2 = 25° | D2 = 2 m | T3 = 8 s |
| L6 | S1 = Wood | I2 = 40° | A3 = 45° | D3 = 3 m | T1 = 2 s |
| L7 | S1 = Wood | I3 = 80° | A1 = 0° | D2 = 2 m | T1 = 2 s |
| L8 | S1 = Wood | I3 = 80° | A2 = 25° | D3 = 3 m | T2 = 5 s |
| L9 | S1 = Wood | I3 = 80° | A3 = 45° | D1 = 1 m | T3 = 8 s |
| L10 | S2 = Aluminium | I1 = 0° | A1 = 0° | D3 = 3 m | T3 = 8 s |
| L11 | S2 = Aluminium | I1 = 0° | A2 = 25° | D1 = 1 m | T1 = 2 s |
| L12 | S2 = Aluminium | I1 = 0° | A3 = 45° | D2 = 2 m | T2 = 5 s |
| L13 | S2 = Aluminium | I2 = 40° | A1 = 0° | D2 = 2 m | T1 = 2 s |
| L14 | S2 = Aluminium | I2 = 40° | A2 = 25° | D3 = 3 m | T2 = 5 s |
| L15 | S2 = Aluminium | I2 = 40° | A3 = 45° | D1 = 1 m | T3 = 8 s |
| L16 | S2 = Aluminium | I3 = 80° | A1 = 0° | D3 = 3 m | T2 = 5 s |
| L17 | S2 = Aluminium | I3 = 80° | A2 = 25° | D1 = 1 m | T3 = 8 s |
| L18 | S2 = Aluminium | I3 = 80° | A3 = 45° | D2 = 2 m | T1 = 2 s |

| Characteristic | LiDAR Smartphone iPhone 12 Pro Max | Navis VLX | RIEGL VZ-400i | FARO Focus X130 |
|---|---|---|---|---|
| Range | 0.5–5 m | 0.5–60 m | 0.5–800 m | 0.6–130 m |
| Precision | 5–20 mm | 6 mm | 1 mm | 2 mm |
| Size | 14.6 cm × 7.1 cm × 0.7 cm | 108 cm × 33 cm × 56 cm | 30 cm × 22 cm × 26 cm | 24 cm × 20 cm × 10 cm |
| Type of scan | Dynamic | Dynamic | Static | Static |
| Sensor | TOF: Time Of Flight | TOF: Time Of Flight | TOF: Time Of Flight | TOF: Time Of Flight |

| Test | Y (mm) |
|---|---|
| L1 | 1.53 |
| L2 | 1.78 |
| L3 | 3.10 |
| L4 | 1.81 |
| L5 | 1.55 |
| L6 | 2.68 |
| L7 | 2.02 |
| L8 | 3.69 |
| L9 | 1.75 |
| L10 | 1.76 |
| L11 | 1.15 |
| L12 | 1.91 |
| L13 | 1.64 |
| L14 | 6.80 |
| L15 | 1.31 |
| L16 | 2.70 |
| L17 | 1.60 |
| L18 | 2.27 |

| Factors | S | I | A | D | T |
|---|---|---|---|---|---|
| Effect of level 1 | −0.07 | −0.41 | −0.37 | −0.76 | −0.40 |
| Effect of level 2 | 0.07 | 0.35 | 0.48 | −0.42 | 0.84 |
| Effect of level 3 | | 0.06 | −0.11 | 1.18 | −0.44 |
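The factor effects can be cross-checked against the design and response tables. The sketch below assumes that each effect is the mean response at a given factor level minus the grand mean over the 18 tests; this is a common main-effects definition in Taguchi analysis, but the source does not state its formula, so treat it as an assumption. The names `FACTORS`, `DESIGN`, `Y`, and `main_effects` are illustrative only, and the level indices are transcribed from the tables above.

```python
from collections import defaultdict

# Factor order: Surface, Incidence of light, Angle, Distance, Time.
FACTORS = ("S", "I", "A", "D", "T")

# Level index of each factor for tests L1..L18, transcribed from the design table.
DESIGN = [
    (1, 1, 1, 1, 1), (1, 1, 2, 2, 2), (1, 1, 3, 3, 3),
    (1, 2, 1, 1, 2), (1, 2, 2, 2, 3), (1, 2, 3, 3, 1),
    (1, 3, 1, 2, 1), (1, 3, 2, 3, 2), (1, 3, 3, 1, 3),
    (2, 1, 1, 3, 3), (2, 1, 2, 1, 1), (2, 1, 3, 2, 2),
    (2, 2, 1, 2, 1), (2, 2, 2, 3, 2), (2, 2, 3, 1, 3),
    (2, 3, 1, 3, 2), (2, 3, 2, 1, 3), (2, 3, 3, 2, 1),
]

# Response Y (mm) for tests L1..L18, transcribed from the response table.
Y = [1.53, 1.78, 3.10, 1.81, 1.55, 2.68, 2.02, 3.69, 1.75,
     1.76, 1.15, 1.91, 1.64, 6.80, 1.31, 2.70, 1.60, 2.27]


def main_effects(design, y):
    """Return {factor: {level: mean response at that level - grand mean}}."""
    grand_mean = sum(y) / len(y)
    effects = {}
    for col, name in enumerate(FACTORS):
        # Group the responses by the level this factor takes in each test.
        by_level = defaultdict(list)
        for row, value in zip(design, y):
            by_level[row[col]].append(value)
        effects[name] = {
            level: sum(vals) / len(vals) - grand_mean
            for level, vals in sorted(by_level.items())
        }
    return effects


EFFECTS = main_effects(DESIGN, Y)
for name in FACTORS:
    print(name, {level: round(e, 2) for level, e in EFFECTS[name].items()})
```

Under this assumption the computed effects agree with the tabulated ones to within 0.01 mm; the small differences are consistent with the published responses being rounded to two decimals. Surface (S) has only two levels, which is why its level 3 cell is empty.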

| MSE: Mean Square Error (m) | d12 | d23 | d34 | d41 |
|---|---|---|---|---|
| LiDAR Smartphone | 0.037 | 0.321 | 0.100 | 0.090 |
| Navis | 0.005 | 0.005 | 0.001 | 0.007 |
| VZ | 0.007 | 0.003 | 0.001 | 0.003 |
| FARO | 0.001 | 0.007 | 0.004 | 0.004 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Catharia, O.; Richard, F.; Vignoles, H.; Véron, P.; Aoussat, A.; Segonds, F. Smartphone LiDAR Data: A Case Study for Numerisation of Indoor Buildings in Railway Stations. Sensors 2023, 23, 1967. https://doi.org/10.3390/s23041967