1. Introduction

In conventional photography, only limited information from the light passing through the camera lens is captured. In general, each point in a captured image is the sum of the intensities of the light rays striking that point, rather than a record of the light traveling along the different directions that contribute to the image [1]. In contrast, light field imaging technology can capture rich visual information by representing the distribution of light in free space [2], which means capturing both the pixel intensity and the direction of the incident light. Light fields can be captured using either an array of cameras [3] or a plenoptic camera [4]. However, capturing a light field with both high spatial and high angular resolution is challenging, because plenoptic cameras have a spatial-angular resolution trade-off [4] and the set-up for a dense camera array is complex.
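As a rough, idealized illustration of this trade-off (assuming one sensor pixel per recorded ray and ignoring microlens packing and vignetting), a plenoptic camera shares a fixed pixel budget between spatial and angular samples. Here P is the number of sensor pixels, N_x × N_y the spatial resolution of each sub-aperture view, and N_u × N_v the angular resolution; the sensor size in the example is a hypothetical value chosen for round numbers:

```latex
N_x N_y \cdot N_u N_v \le P,
\qquad \text{e.g.} \quad
P = 3600 \times 3600,\; N_u = N_v = 9
\;\Rightarrow\;
N_x N_y \le \frac{3600^2}{81} = 400 \times 400 .
```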

The additional dimensions of data captured in light field images enable generating images with extended depth of field and images at different focal lengths using ray-tracing techniques. Light field images also allow researchers to explore depth estimation techniques such as depth from defocus and correspondence, stereo-based matching, and using epipolar images from a single light field image. Depth estimation is crucial in computer vision applications such as robot vision, self-driving cars, surveillance, and human–computer interactions [

5]. Stereo-based matching algorithms for light field images are mainly based on energy minimization and graph cut techniques. Kolmogorov and Zabih [

6] combine visibility and smoothness terms for energy minimization. On the other hand, Bleyer et al. [

7] consider the pixel appearance, global MDL (Minimum Description Length) constraint, smoothing, soft segmentation, surface curvature, and occlusion. However, the stereo-based depth estimation methods suffer from ambiguities while dealing with noisy and aliased regions. The narrow baseline makes it difficult for these algorithms to solve the occlusion problem [

8]. In their work, Schechner and Kiryati [

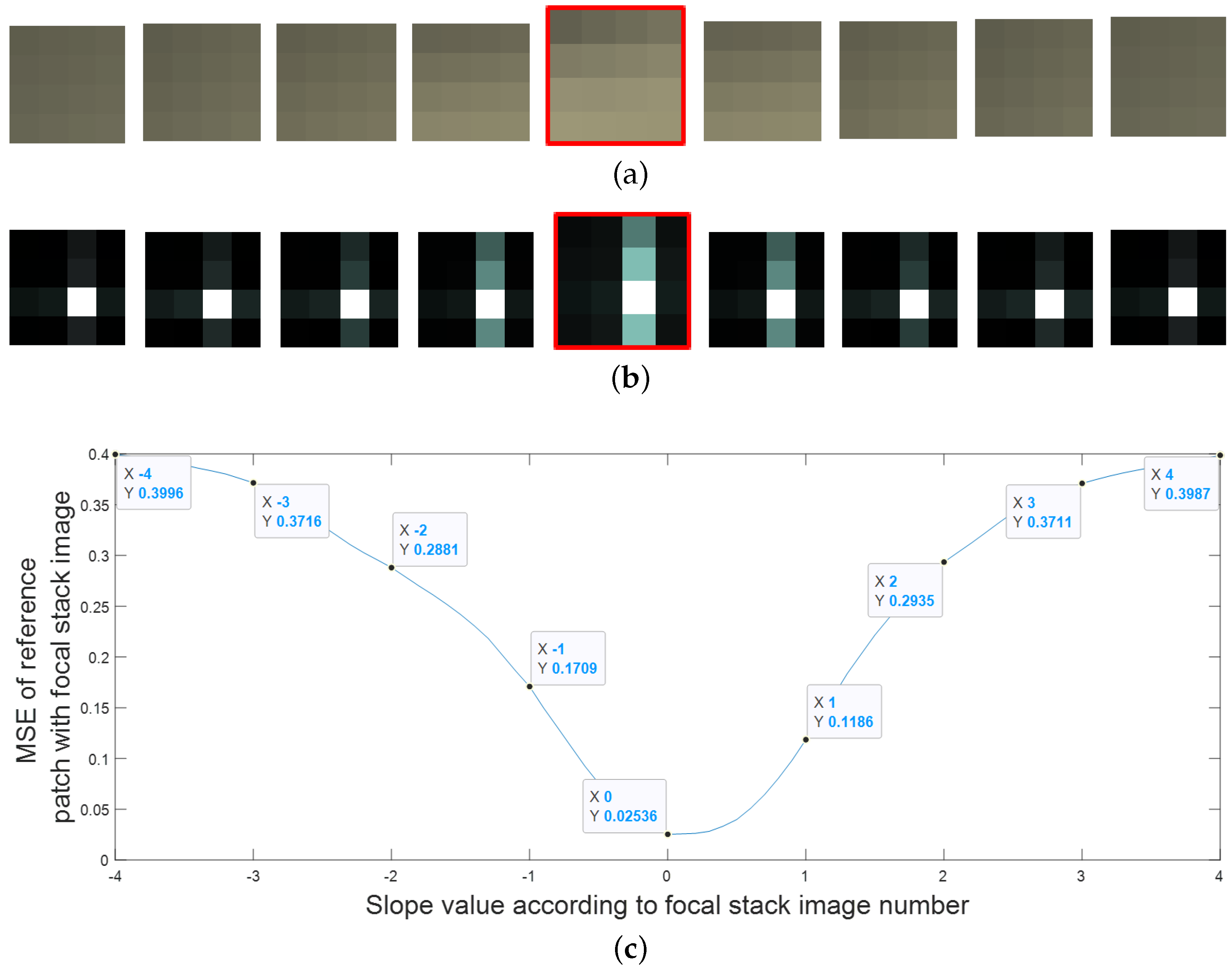

9] study the advantages and disadvantages of depth from defocus and correspondence techniques. While depth from stereo and depth from defocus and correspondence techniques can also be used for non-light field images, depth from epipolar images can only be used for light field images. Epipolar images (EPI) are formed by stacking the light field sub-aperture images in the horizontal and vertical directions, and a slice through this 4D representation reveals the depth of the pixels in terms of the slope of the line. However, due to the noise present in images, basic line fitting techniques do not produce robust results [

10]. Zhang et al. [

8] use a spinning parallelogram operator and estimate the local direction of a line in an EPI. They consider pixels on either side of the line separately to avoid the problem of the inconsistency of the pixel distribution. This also makes their depth estimation algorithm more robust to occlusions and noise compared to the EPI algorithm presented by Criminisi et al. [

11] and Wanner and Goldluecke [

12]. The techniques explored for depth estimation of light field images form the building blocks for light field image reconstruction and synthesis.
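To make the slope-depth relationship concrete, the sketch below builds a synthetic horizontal EPI from a single scanline and recovers its disparity with a brute-force slope search: each candidate shear is undone, and the candidate that makes all views agree column by column wins. This is a deliberately simple stand-in, not the spinning parallelogram operator of Zhang et al. [8]; the function names, circular boundaries, and integer test disparity are assumptions for illustration.

```python
import numpy as np


def make_epi(scanline, disparity, num_views=9):
    """Build a horizontal EPI: row u is the scanline seen from view u.

    A scene point at x0 in the central view appears at x0 + disparity * (u - c)
    in view u, so every point traces a line whose slope is the disparity.
    """
    n = scanline.size
    x = np.arange(n)
    c = num_views // 2
    return np.stack([
        np.interp(x - disparity * (u - c), x, scanline, period=n)  # circular shift
        for u in range(num_views)
    ])


def estimate_disparity(epi, candidates):
    """Exhaustive slope search: pick the shear that makes the EPI lines vertical."""
    num_views, n = epi.shape
    x = np.arange(n)
    c = num_views // 2
    best, best_cost = None, np.inf
    for d in candidates:
        # Undo a candidate shear; at the true slope all views agree per column.
        sheared = np.stack([
            np.interp(x + d * (u - c), x, epi[u], period=n)
            for u in range(num_views)
        ])
        cost = sheared.var(axis=0).mean()  # disagreement across views
        if cost < best_cost:
            best, best_cost = d, cost
    return best


rng = np.random.default_rng(0)
scanline = rng.random(64)
epi = make_epi(scanline, disparity=2.0)
print(estimate_disparity(epi, np.arange(-3.0, 3.5, 0.5)))  # 2.0
```

On real EPIs, noise and occlusions break the assumption that all views agree at the true slope, which is exactly why the more robust operators cited above are needed.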

Light field reconstruction and synthesis algorithms can address the low spatial resolution of hand-held plenoptic cameras, and the ability to convert 2D RGB images into 4D light field images will change how we perceive traditional photography. Many light field reconstruction algorithms use a sparse set of light field views to reconstruct novel views [13,14,15,16]. However, the input data for these algorithms are a set of sub-aperture images, which are not easy to capture: with a 2D camera, the camera must be physically moved to capture each sub-aperture view, which is time-consuming and introduces alignment issues. In contrast, capturing a focal stack and an all-in-focus image only requires changing the focus and aperture of the camera, without physically moving it. These algorithms therefore cannot be used for light field synthesis, but they can be used either to increase the spatial and angular resolution of light field images or for light field image compression. In this paper, the term ‘light field synthesis’ refers to creating an entire light field image from fewer input images; we are not trying to synthesize views with a large or small baseline. We focus only on mimicking the light field sub-aperture views using the characteristics of the EPIs of light field images, given the central all-in-focus image and a depth map.

Light field synthesis can also be classified into two main categories: non-learning-based and learning-based approaches. Non-learning-based light field synthesis algorithms use a deterministic approach, where the same rules are used to synthesize the views for every image. These algorithms can be further divided into two categories based on their input data: focal stack images or stereo image pairs. Stereo image pair-based algorithms use either a micro-baseline image pair or an image pair with a large baseline. Zhang et al. [17] propose a micro-baseline image pair-based view synthesis algorithm; since the disparity within the stereo pair is small, the images can be captured by vibrating a static camera. Chao et al. [18], on the other hand, use a large-baseline stereo pair, and synthesize the horizontal views by interpolating between the two images of the pair. In contrast, the main advantage of using focal stack images for light field synthesis is that they can provide information to fill the gaps created near occluded regions in the synthesized views; key recent algorithms of this type include [14,19,20].

Learning-based light field synthesis uses a probabilistic approach, where training images are used to fit a model that maps the input to the output. Learning-based approaches have two main drawbacks: first, a large amount of training data is required to train the network adequately; second, the algorithm’s accuracy depends directly on the quality of the training data. Some learning-based algorithms [18,21,22] synthesize the entire 9 × 9 set of sub-aperture light field images using two, four, and one input image(s), respectively. Although these algorithms use fewer input images, the Convolutional Neural Network (CNN) must be trained on a significant amount of data to ensure high accuracy.

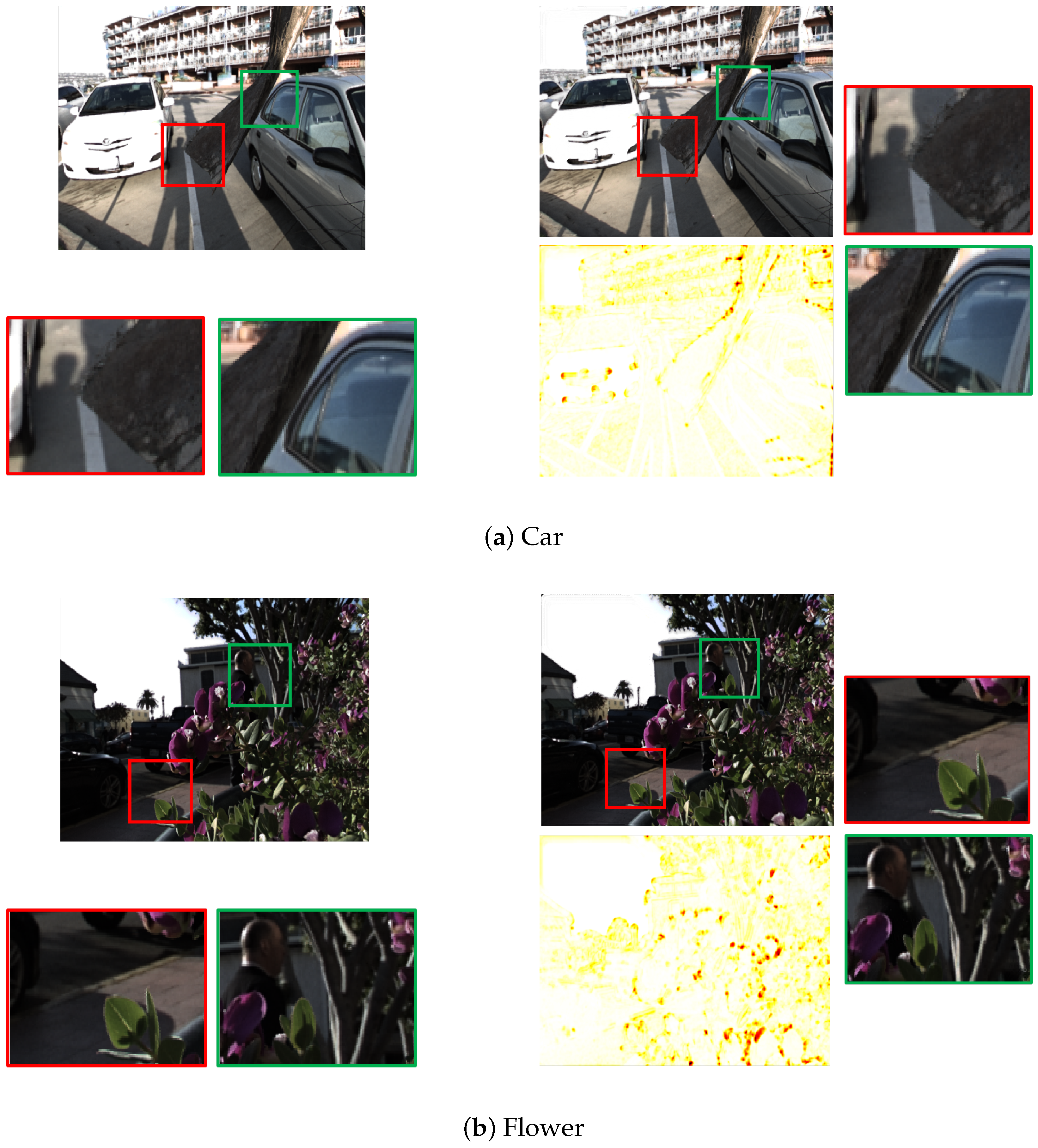

Our work therefore uses a non-learning-based light field synthesis approach that does not require training data, yet can use focal stacks of varying sizes to synthesize light field images with high accuracy. We use the focal stack images and the all-in-focus image to synthesize the light field image: first, we estimate a depth map using depth from defocus; then, we refine the depth map using maximum likelihood for pixels assigned incorrect depths. The depth map and the all-in-focus image are then used to synthesize the sub-aperture views and their corresponding depth maps by shifting the regions in the image. We show that, without using input images with a large baseline, we can still mimic the apparent disparity of objects at different depths in the sub-aperture views using only the depth map and the all-in-focus image. Information is missing from the synthesized views only in occluded regions, where foreground objects move more than the background. We fill these occluded regions in the synthesized sub-aperture views with information recovered from the focal stack images. We compare the accuracy of our algorithm with one non-learning-based and two learning-based (CNN) approaches, and show that our algorithm outperforms all three in terms of the PSNR and SSIM metrics.
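The depth estimation and refinement steps above can be approximated in a few lines. The sketch below is a simplification under stated assumptions: it swaps in depth from focus (choosing, per pixel, the focal slice with the largest local squared Laplacian) instead of a full depth-from-defocus model, and uses a neighbourhood majority vote as a hypothetical stand-in for the maximum-likelihood refinement. All function names and parameters are illustrative.

```python
import numpy as np


def focus_measure(img, radius=2):
    """Squared Laplacian summed over a (2*radius+1)^2 window; high where in focus."""
    img = np.asarray(img, dtype=float)
    lap = (np.roll(img, 1, 0) + np.roll(img, -1, 0)
           + np.roll(img, 1, 1) + np.roll(img, -1, 1) - 4.0 * img)
    energy = lap ** 2
    out = np.zeros_like(energy)
    for dy in range(-radius, radius + 1):  # box sum with circular boundaries
        for dx in range(-radius, radius + 1):
            out += np.roll(energy, (dy, dx), axis=(0, 1))
    return out


def depth_from_focus(stack):
    """stack: (K, H, W) focal stack -> (H, W) index of the sharpest slice."""
    return np.argmax(np.stack([focus_measure(s) for s in stack]), axis=0)


def mode_refine(labels, num_labels, radius=1):
    """Hypothetical refinement: each pixel takes the most frequent label nearby."""
    votes = np.zeros((num_labels,) + labels.shape)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(labels, (dy, dx), axis=(0, 1))
            for k in range(num_labels):
                votes[k] += shifted == k
    return np.argmax(votes, axis=0)
```

With a focal stack `stack` of shape (K, H, W), `mode_refine(depth_from_focus(stack), K)` yields a label map in which isolated mislabelled pixels take the dominant label of their neighbourhood. Focus measures are unreliable in textureless regions, which is one reason a refinement step is needed at all.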

Our Contributions

Our proposed non-learning-based light field synthesis approach improves synthesis accuracy by:

Synthesizing high-accuracy light field images with varying sizes of focal stacks as input, filling the occluded regions with the information recovered from the focal stack images;

Using the frequency domain to mimic the apparent movement of the regions at different depths in the sub-aperture views, ensuring sub-pixel accuracy for small depth values.
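Frequency-domain shifting rests on the Fourier shift theorem: multiplying an image spectrum by a linear phase ramp translates the image by an arbitrary, including fractional, number of pixels. The sketch below assumes a discrete depth-label map with a disparity proportional to the label, shifts each depth layer of the all-in-focus image to a view offset (u, v), composites the layers far to near, and reports the disoccluded pixels that would then need filling. It is a hypothetical illustration of the idea rather than the paper's implementation; the label convention and function names are assumptions.

```python
import numpy as np


def subpixel_shift(img, dy, dx):
    """Shift a 2D array by (dy, dx) pixels via the Fourier shift theorem.

    Multiplying the spectrum by exp(-2*pi*i*(ky*dy + kx*dx)) translates the
    image by a possibly fractional amount (with circular boundaries).
    """
    ky = np.fft.fftfreq(img.shape[0])[:, None]
    kx = np.fft.fftfreq(img.shape[1])[None, :]
    phase = np.exp(-2j * np.pi * (ky * dy + kx * dx))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * phase))


def synthesize_view(image, labels, disparity_per_label, u, v):
    """Shift each depth layer of an all-in-focus image to view offset (u, v).

    Assumes a larger label means a nearer layer with proportionally larger
    disparity (hypothetical convention). Layers are composited far to near;
    pixels that no layer covers are returned as holes (disocclusions).
    """
    out = np.zeros(image.shape)
    coverage = np.zeros(image.shape)
    for k in np.unique(labels):  # ascending order: farthest layer first
        mask = (labels == k).astype(float)
        d = k * disparity_per_label
        alpha = np.clip(subpixel_shift(mask, d * v, d * u), 0.0, 1.0)
        layer = subpixel_shift(image * mask, d * v, d * u)  # premultiplied colour
        out = layer + (1.0 - alpha) * out
        coverage = alpha + (1.0 - alpha) * coverage
    return out, coverage < 0.5
```

Sharp layer masks shifted by fractional amounts ring slightly (Gibbs oscillations), which is why the shifted alpha is clipped to [0, 1]. The returned hole mask marks exactly the occluded regions that, in the approach described above, are filled from the focal stack.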

2. Related Work

A well-known method for synthesizing intermediate views of a scene is image interpolation [23]. View interpolation is the process of estimating intermediate views given a set of images of the scene from different viewpoints. In an early key work on image synthesis [24], pixel correspondences between two consecutive images are established using the range data and the camera transformation parameters. A pre-computed morph map is then used to interpolate the intermediate views. The authors also discuss the holes generated in the estimated intermediate views: because foreground regions move more than background regions, gaps open up, and these holes are filled by interpolating the pixels adjacent to them. However, this makes the filled regions blurry.
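The blurring caused by filling holes from neighbouring pixels is easy to reproduce. The generic sketch below (not the morph-map method of [24]; the function name is hypothetical) fills each hole by linear interpolation between the nearest valid pixels in the same row, with constant extension at the image borders.

```python
import numpy as np


def fill_holes_rowwise(image, holes):
    """Fill hole pixels row by row by linearly interpolating the nearest valid pixels.

    image: (H, W) array; holes: (H, W) boolean mask, True where data is missing.
    """
    filled = image.astype(float).copy()
    cols = np.arange(image.shape[1])
    for r in range(image.shape[0]):
        valid = ~holes[r]
        if valid.any() and (~valid).any():
            # np.interp clamps to the end values outside the valid range.
            filled[r, ~valid] = np.interp(cols[~valid], cols[valid], filled[r, valid])
    return filled
```

For example, a row [0, hole, hole, 3] becomes [0, 1, 2, 3]: any sharp edge that belonged inside the hole is replaced by a smooth ramp, which is why such fills look blurry.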

Building on these early image synthesis methods, non-learning light field synthesis approaches either use a sparse set of perspective views to synthesize the views within the image baseline, or use a focal stack or the central view to extrapolate the perspective views. As mentioned previously, light field synthesis can be broadly classified into two main categories: non-learning-based and learning-based approaches. Whilst we use focal stack images in a non-learning approach, we review both learning and non-learning light field synthesis methods below, as our results are compared with both types of approaches.

2.1. Non-Learning Based Light Field Synthesis

Kubota et al. [19] use focal stacks captured from multiple viewpoints to synthesise intermediate views. They assume that the scene has only two focus regions: a background and a foreground. The inputs for their approach are four images: each of the two cameras captures one image focused on the background and one focused on the foreground. The drawbacks of the approach are that it requires images to be captured from two viewpoints, which is a complex setup, and that the resultant synthesized image has only two focal planes.

Mousnier et al. [20] propose partial light field reconstruction from a focal stack. They use focal stack images captured by a Nikon camera to estimate a disparity map and an all-in-focus image, and then use the camera parameters to estimate the depth map. They use the depth map and the all-in-focus image to synthesise only one set of nine horizontal and nine vertical perspective views. However, because the algorithm requires the camera parameters, it is difficult to implement it and evaluate its accuracy against light field sub-aperture images.

Levin et al. [14] also use focal stack images but, instead of using depth estimation for the synthesis, show that the 4D light field can be rendered from a focal stack in a linear and depth-invariant manner. They argue that each focal stack image is a slice of the 4D light field spectrum; thus, the focal stack directly provides the set of slices that comprise the 3D focal manifold, which can be used to construct the 4D light field spectrum. However, their dimensionality gap model is unreliable at depth boundaries, which results in background pixels leaking into foreground pixels.
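The slice relationship can be checked numerically in a reduced setting. The sketch below uses a 2D "flatland" light field L(u, x) with integer circular shears; these are assumptions made so that the discrete identity is exact, and it additionally requires as many views as pixels. Refocusing by shearing and summing over u yields an image whose 1D spectrum equals the 2D light field spectrum sampled along the line k_u = -s k_x. It illustrates the principle that Levin et al. [14] build on, not their algorithm; function names are hypothetical.

```python
import numpy as np


def refocus_flatland(lf, shear):
    """Refocus a flatland light field lf[u, x] by an integer circular shear.

    I(x) = sum_u L(u, (x + shear * u) mod N): integrating the light field
    along lines of one slope, i.e. focusing at one depth.
    """
    num_views = lf.shape[0]
    return sum(np.roll(lf[u], -shear * u) for u in range(num_views))


def spectrum_slice(lf, shear):
    """Sample the 2D spectrum of lf along the line k_u = -shear * k_x (mod N)."""
    n = lf.shape[1]
    if lf.shape[0] != n:
        raise ValueError("this exact discrete identity needs as many views as pixels")
    spectrum = np.fft.fft2(lf)  # indexed [k_u, k_x]
    kx = np.arange(n)
    return spectrum[(-shear * kx) % n, kx]


rng = np.random.default_rng(0)
lf = rng.random((16, 16))
for s in (-2, 0, 1, 3):
    # Each refocused image's spectrum is one slice of the light field spectrum.
    assert np.allclose(np.fft.fft(refocus_flatland(lf, s)), spectrum_slice(lf, s))
```

Each focal setting contributes one such slice, so a focal stack samples the light field spectrum along a family of lines, which is the starting point for the linear reconstruction described above.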

Pérez et al. [25] propose an algorithm that recovers a light field from its focal stack based on the inversion of the Discrete Focal Stack transform. They show that inverting the Discrete Focal Stack transform requires many images in the focal stack, and then present practical inversion procedures for general light fields with various types of regularizers, such as zeroth- and first-order L2 regularization and isotropic L1 total variation (TV) regularization. The two main drawbacks of the algorithm proposed by Pérez et al. [25] are therefore that inversion requires a large number of images in the focal stack, and that regularization is needed to stabilize the transform.
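A flatland sketch of focal stack inversion shows why many slices or a regularizer are needed. Assuming integer shears and circular boundaries (simplifications, not the Discrete Focal Stack transform of [25] itself), the focal stack is a linear map A applied to the flattened light field, and the zeroth-order L2 regularizer has the closed-form solution x = (AᵀA + λI)⁻¹Aᵀb. The function names and sizes below are hypothetical.

```python
import numpy as np


def focal_stack_matrix(num_views, n, shears):
    """Matrix A mapping a flattened flatland light field L[u, x] to its focal stack.

    Slice i is I_i(x) = sum_u L(u, (x + shears[i] * u) mod n): integer shears
    with circular boundaries, a simplification of the continuous transform.
    """
    A = np.zeros((len(shears) * n, num_views * n))
    for i, s in enumerate(shears):
        for x in range(n):
            for u in range(num_views):
                A[i * n + x, u * n + (x + s * u) % n] += 1.0
    return A


def tikhonov_invert(A, b, lam):
    """Zeroth-order L2 regularization: argmin_x ||A x - b||^2 + lam * ||x||^2."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ b)


rng = np.random.default_rng(0)
num_views, n = 5, 16
lf = rng.random((num_views, n))
A = focal_stack_matrix(num_views, n, shears=[-1, 0, 1])
b = A @ lf.ravel()  # three focal slices of the light field
print(A.shape)      # (48, 80): fewer measurements than unknowns
lf_hat = tikhonov_invert(A, b, lam=1e-3).reshape(num_views, n)
```

With three slices there are 48 equations for 80 unknowns, so the unregularized system has infinitely many solutions; the λ‖x‖² term selects a single stable one, and additional focal slices or stronger priors such as TV constrain the solution further.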

Zhang et al. [17] use one micro-baseline image pair, in which the disparity between the stereo images is less than 5 pixels, to synthesize the 4D light field image. They propose that such a small-baseline image pair can be captured using vibration in a static camera or by a slight movement of a hand-held camera. The approach has two limitations: first, the accuracy of its depth estimation algorithm decreases as the distance between the input views increases; second, since no information is available to fill in the gaps generated by the different movements of background and foreground regions in the sub-aperture images, the accuracy of the edge sub-aperture images is considerably lower than that of the sub-aperture images closer to the central view.

Shi et al. [13] use a sparse set of light field views to predict the views enclosed by the boundary light field views used as input. Since the approach requires a specific set of sub-aperture views from the light field image as input data, applying the algorithm to different types of data is non-trivial. The approach can be used for applications such as light field compression, but not for light field synthesis, as it requires a set of sub-aperture views as input data.

2.2. Learning-Based Light Field Synthesis

Kalantari et al. [21] propose a two-network learning-based light field synthesis approach that uses a sparse set of four corner sub-aperture images. The first network estimates the depth map, and the second network then estimates the missing RGB sub-aperture images. Gul et al. [26] propose a three-stage learning-based light field synthesis approach that also uses a sparse set of four corner sub-aperture images. The first stage is a stereo feature extraction network, the second stage is a depth estimation network, and the third stage uses the depth map to warp the input corner views so that they are registered with the target view to be synthesized. One drawback of both algorithms is that capturing the four corner sub-aperture images is not straightforward and would require either moving the camera manually or a special apparatus with multiple cameras. However, both approaches can be used for light field compression, as they use corner sub-aperture views as input data.

Srinivasan et al. [22] propose a CNN that estimates the geometry of the scene from a single image and renders the Lambertian light field using that geometry, with a second CNN stage that predicts occluded rays and non-Lambertian effects. The network is trained on a dataset of 3300 scenes of flowers and plants captured by a plenoptic camera. However, since the algorithm predicts the 4D light field image from a single image, filling the regions of the sub-aperture images at large depth discontinuities can only be an approximation, as that information is not available in a single image. They extend their network by training it on 4281 light fields of various types of toys, including cars, figurines, stuffed animals, and puzzles, but their results show that these images are perceptually less convincing than the images synthesized for the flower dataset [22].

Wang et al. [27] propose a two-stage position-guiding network that uses a left-right stereo pair to synthesize novel views. They first estimate the depth map for the middle (central) view and then check the consistency of the synthesized left and right views. The second CNN is a view rectifying network. They train their network on the FlyingThings3D dataset [28], which contains 22,390 pairs of left-right views and their disparity maps for training and 4370 pairs for testing. The main limitation of the approach is that, since their research focuses on dense view synthesis for light field displays, they only generate the central horizontal views and not the entire light field image.

Wu et al. [29] present a “blur restoration-deblur” framework for light field reconstruction using EPIs. They first extract the low-frequency components of the light field in the spatial dimensions by applying a blur kernel to each EPI slice. They then use a CNN to restore the angular details of the EPI, and apply a non-blind “deblur” operation to the blurred EPI to recover the high spatial frequencies. They also show the effectiveness of their approach on challenging cases such as microscope light field datasets [29]. The main drawback of their approach is that it needs at least three views in both angular dimensions for the initial interpolation, and the framework cannot handle extrapolation.

Yeung et al. [30] propose a learning-based algorithm that reconstructs a densely-sampled light field in one forward pass from a sparse set of sub-aperture views. Their approach first synthesises intermediate sub-aperture images with spatial-angular alternating convolutions using the characteristics of the sparse set of input views, and then uses guided residual learning and stride-2 4D convolutions to refine the intermediate sub-aperture views. They suggest that the proposed algorithm can be used not only for light field compression but also for applications such as spatial and angular super-resolution and depth estimation.

Zhou et al. [31] train a deep network that predicts a Multi-Plane Image (MPI) from an input stereo image pair. A multi-plane image is a set of planes at different depths, each encoding an RGB image and an alpha (transparency) map, estimated from the stereo image pair. The MPI can be considered a focal stack representation of the scene, predicted using only the stereo pair as input. If the stereo baseline is large enough, the parts of the image that become visible due to the lateral shift provide information that can be used to fill in the gaps generated by the difference in region depths in the perspective views (horizontal direction). Chao et al. [18] propose a lightweight CNN that takes a single stereo image pair and enforces a left-right consistency constraint on the light fields synthesized from the left and right stereo views. The light fields synthesized from the right and left stereo views are then merged using a distance-weighted alpha blending operation. However, since the input stereo pair is only in the horizontal direction, gaps in the vertical perspective views can only be filled using a prediction model, as no information is available in the vertical direction.
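Two operations mentioned above can be written down compactly: rendering an MPI by back-to-front "over" compositing of its RGBA planes, and merging two candidate renderings with weights that depend on the distance to each source view. The sketch below shows only the reference-view composite (rendering a novel view would first warp each plane, which is omitted), and the inverse-distance weights in `blend_views` are a hypothetical choice, since the exact weighting used by Chao et al. [18] is not specified here. Function names are illustrative.

```python
import numpy as np


def composite_mpi(colors, alphas):
    """Back-to-front 'over' compositing of an MPI in its reference view.

    colors: (D, H, W, 3), alphas: (D, H, W); plane 0 is assumed farthest.
    """
    out = np.zeros(colors.shape[1:])
    for color, alpha in zip(colors, alphas):
        a = alpha[..., None]  # broadcast alpha over the RGB channels
        out = color * a + out * (1.0 - a)
    return out


def blend_views(left_view, right_view, dist_left, dist_right):
    """Blend two renderings; the nearer source view gets the larger weight.

    Inverse-distance weighting, w_left = (1/dL) / (1/dL + 1/dR) = dR / (dL + dR),
    is a hypothetical choice for illustration.
    """
    w_left = dist_right / (dist_left + dist_right)
    return w_left * left_view + (1.0 - w_left) * right_view
```

For a target view a quarter of the way from the left to the right source view (dL = 1, dR = 3), `blend_views` weights the left rendering by 0.75 and the right by 0.25.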