A New Deep-Learning Method for Human Activity Recognition

Abstract

1. Introduction

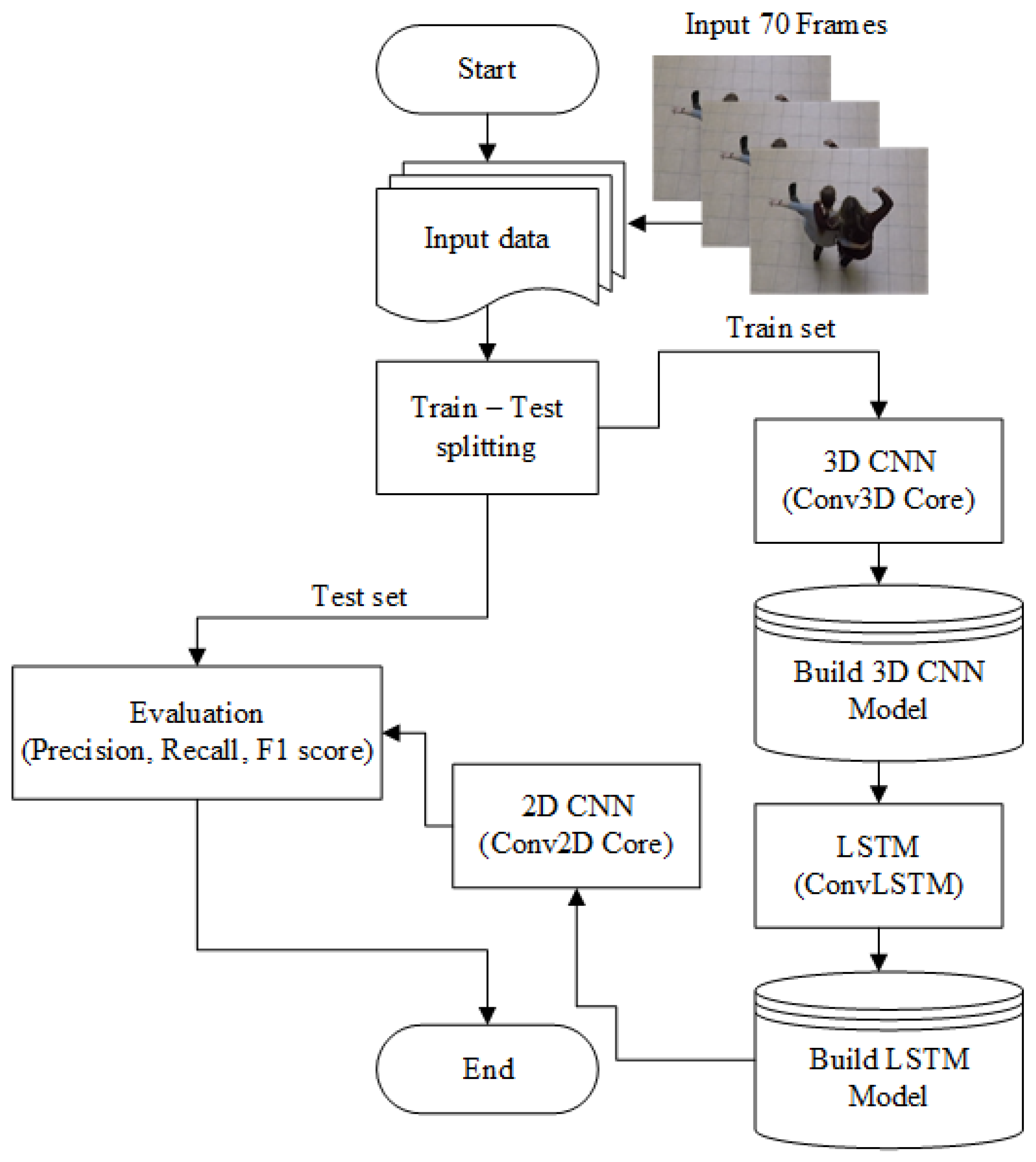

2. Materials and Methods

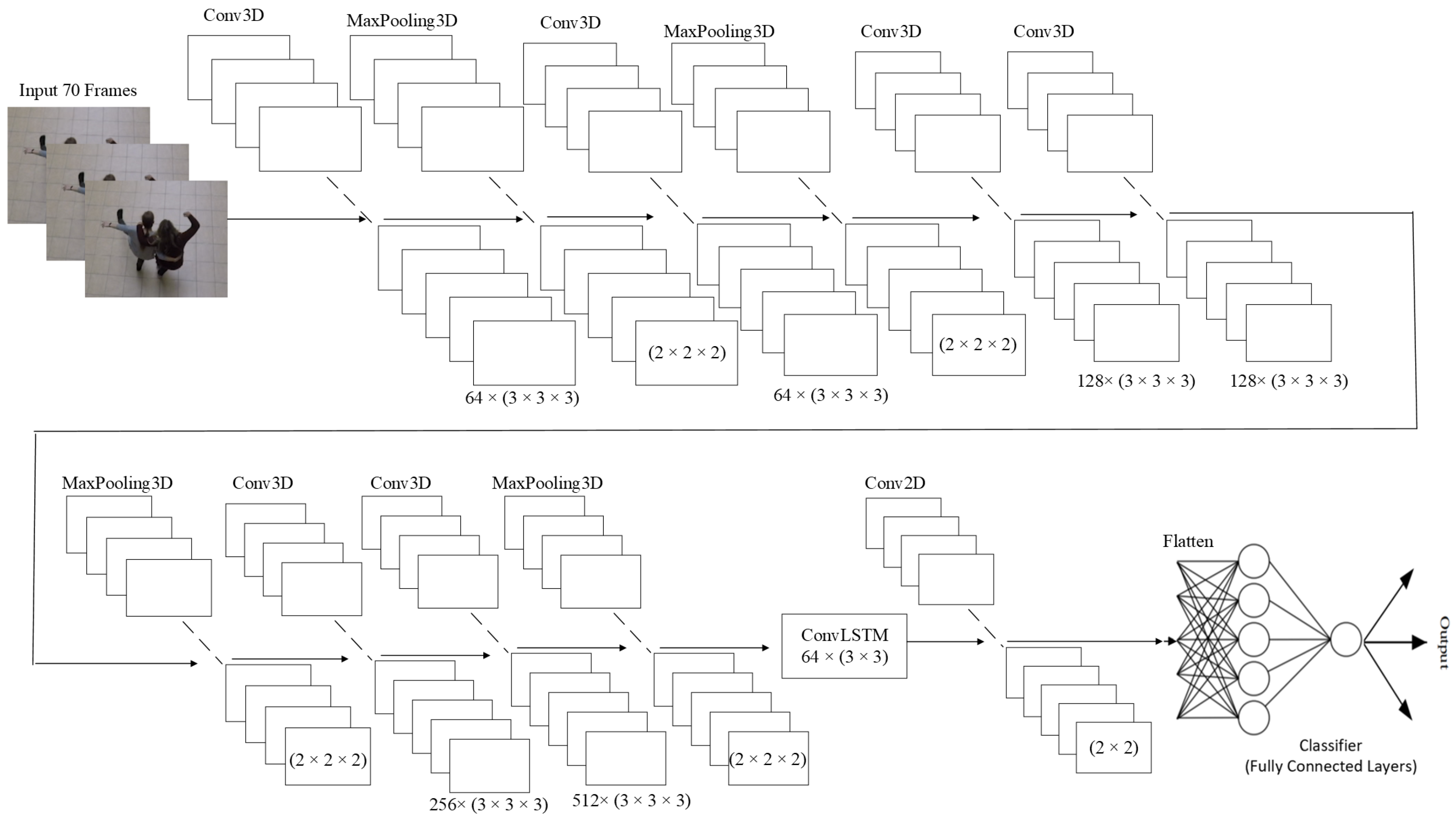

2.1. 3DCNN Architecture

2.2. ConvLSTM Architecture

2.3. Proposed 3DCNN + ConvLSTM Architecture

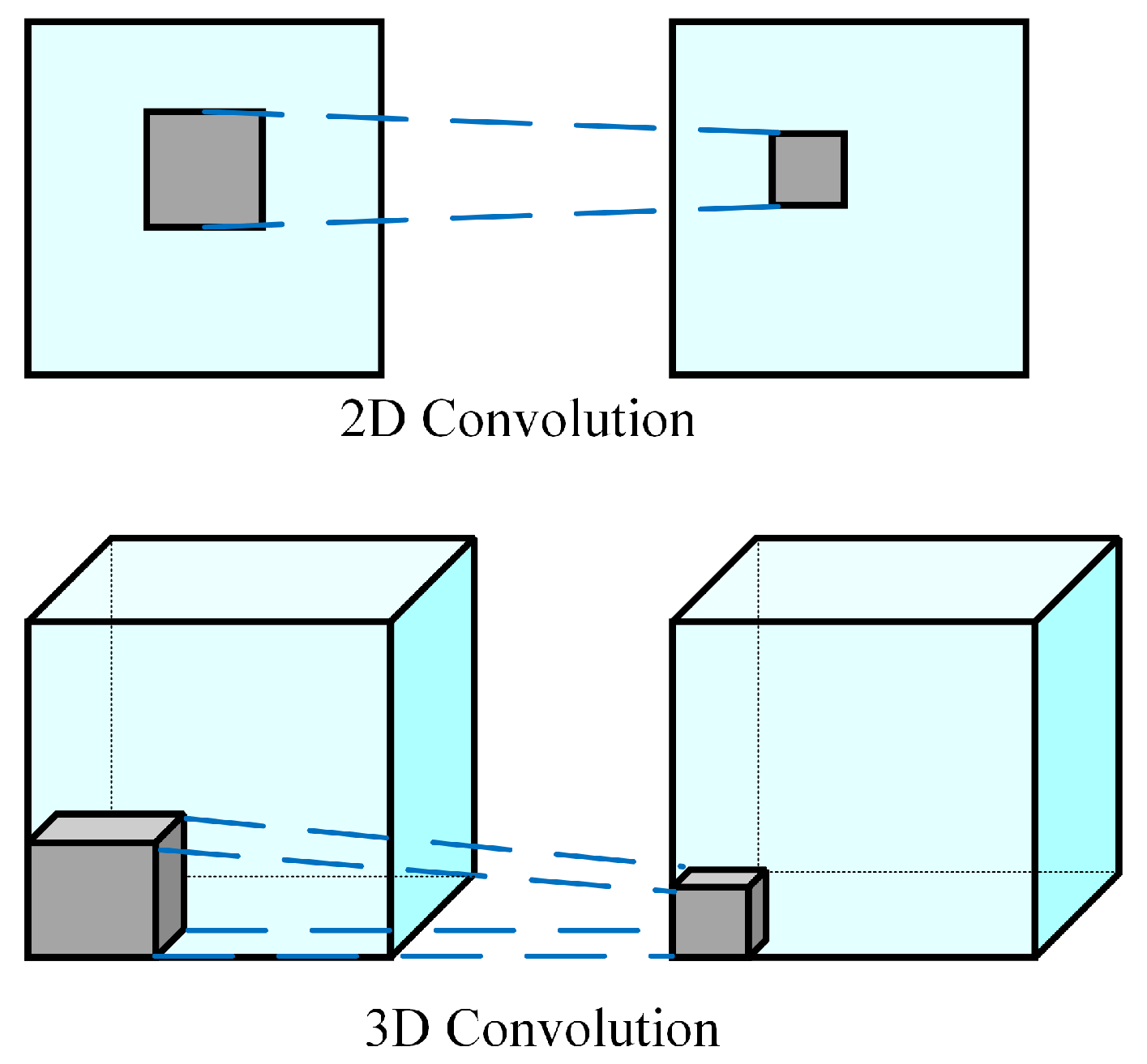

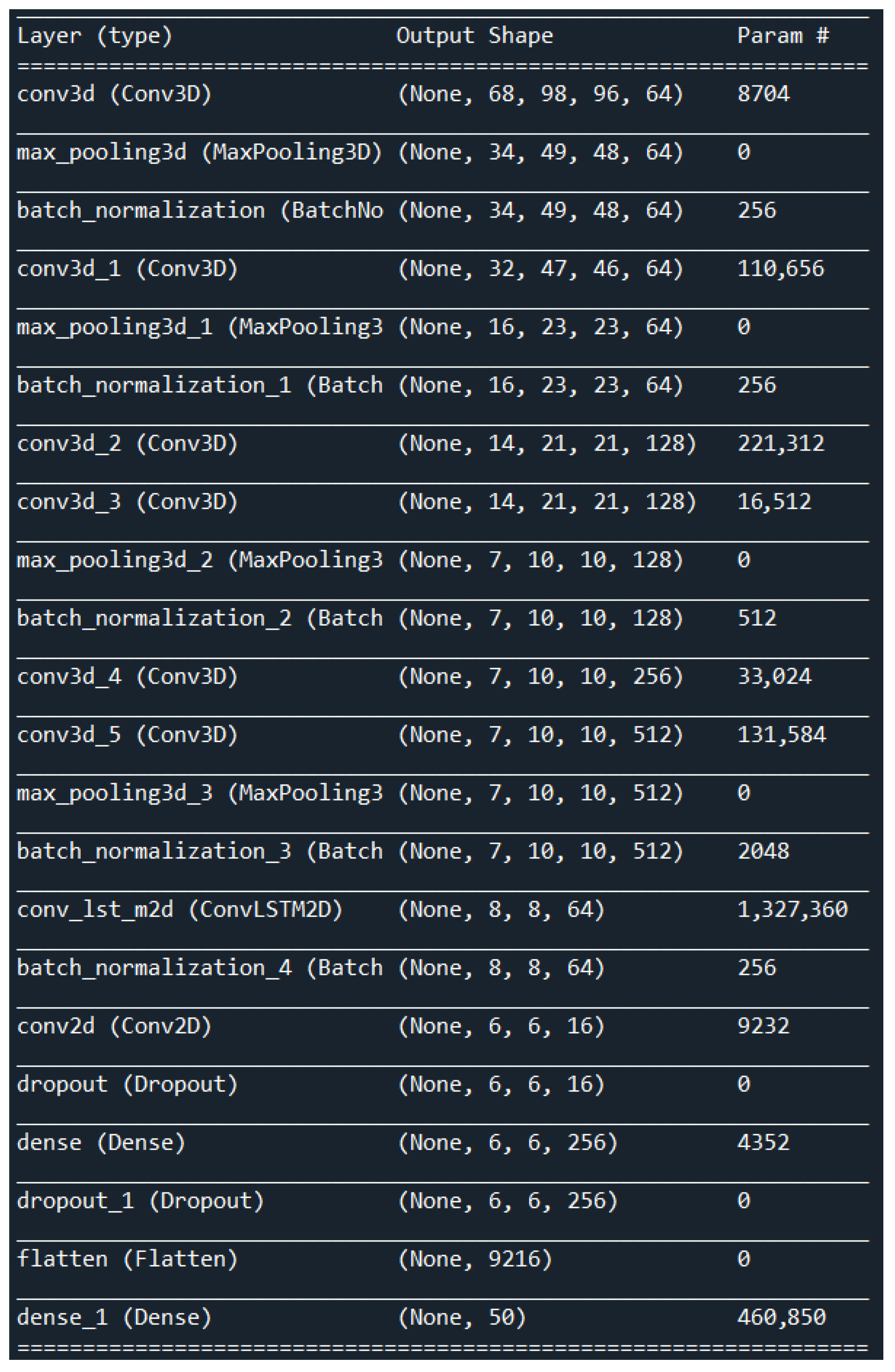

- Conv3D layers: These layers extract spatiotemporal features from the input video data. The number of Conv3D layers can be adjusted to the complexity of the task. Each layer uses a three-dimensional filter that performs convolution by sliding in three directions: along the two spatial axes (x, y) and along the temporal axis across frames.

- MaxPooling3D layer: This layer downsamples 3D feature maps by keeping the maximum value within each pooling window, which reduces their spatial and temporal size.

- ConvLSTM layer: This layer processes the extracted features from the Conv3D layers and captures the temporal dependencies between the frames.

- Conv2D layer: This layer applies convolution to 2D data and performs the final classification based on the output of the previous layers.

- Flatten layer: This layer converts the multidimensional output into a one-dimensional vector.
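
To make the Conv3D and MaxPooling3D steps concrete, here is a minimal NumPy sketch for a single-channel clip, assuming stride 1 and no padding for the convolution and non-overlapping 2 × 2 × 2 pooling windows. The function names `conv3d_valid` and `maxpool3d` are hypothetical, and this is not the authors' implementation; framework layers such as Keras `Conv3D` additionally sum over input channels and learn many filters at once.

```python
import numpy as np


def conv3d_valid(video, kernel, bias=0.0):
    """Single-channel 3D convolution with stride 1 and no padding ("valid").

    video:  array of shape (T, H, W) = frames x height x width
    kernel: array of shape (kt, kh, kw)
    Like deep-learning frameworks, this computes cross-correlation (no kernel flip).
    """
    T, H, W = video.shape
    kt, kh, kw = kernel.shape
    out = np.empty((T - kt + 1, H - kh + 1, W - kw + 1))
    # The filter slides along time (t), height (y) and width (x).
    for t in range(out.shape[0]):
        for y in range(out.shape[1]):
            for x in range(out.shape[2]):
                patch = video[t:t + kt, y:y + kh, x:x + kw]
                out[t, y, x] = np.sum(patch * kernel) + bias
    return out


def maxpool3d(volume, pool=(2, 2, 2)):
    """Non-overlapping 3D max pooling (stride = pool size; leftover edges dropped)."""
    pt, ph, pw = pool
    T, H, W = volume.shape
    nt, nh, nw = T // pt, H // ph, W // pw
    trimmed = volume[:nt * pt, :nh * ph, :nw * pw]
    # Split every axis into (blocks, block size) and keep the maximum of each block.
    blocks = trimmed.reshape(nt, pt, nh, ph, nw, pw)
    return blocks.max(axis=(1, 3, 5))


# Usage: a toy clip of 5 frames, each 6 x 6 pixels.
video = np.arange(5 * 6 * 6, dtype=float).reshape(5, 6, 6)
kernel = np.ones((3, 3, 3)) / 27.0          # 3 x 3 x 3 averaging filter
features = conv3d_valid(video, kernel)      # shape (3, 4, 4)
pooled = maxpool3d(features)                # shape (1, 2, 2)
print(features.shape, pooled.shape)
```

The explicit loops keep the three-direction sliding window visible; production frameworks use vectorized or GPU kernels for the same computation.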

3. Description of the Datasets

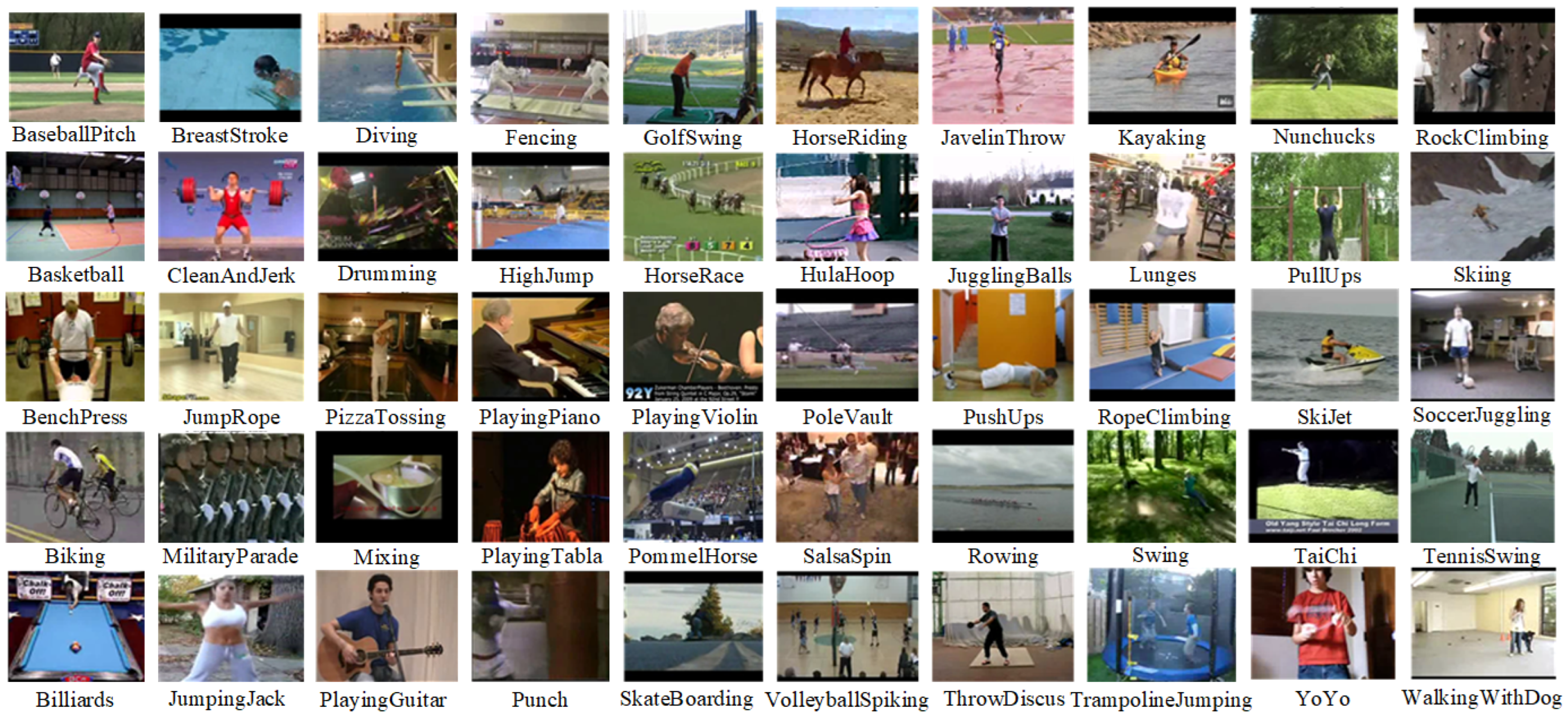

3.1. UCF50 Dataset

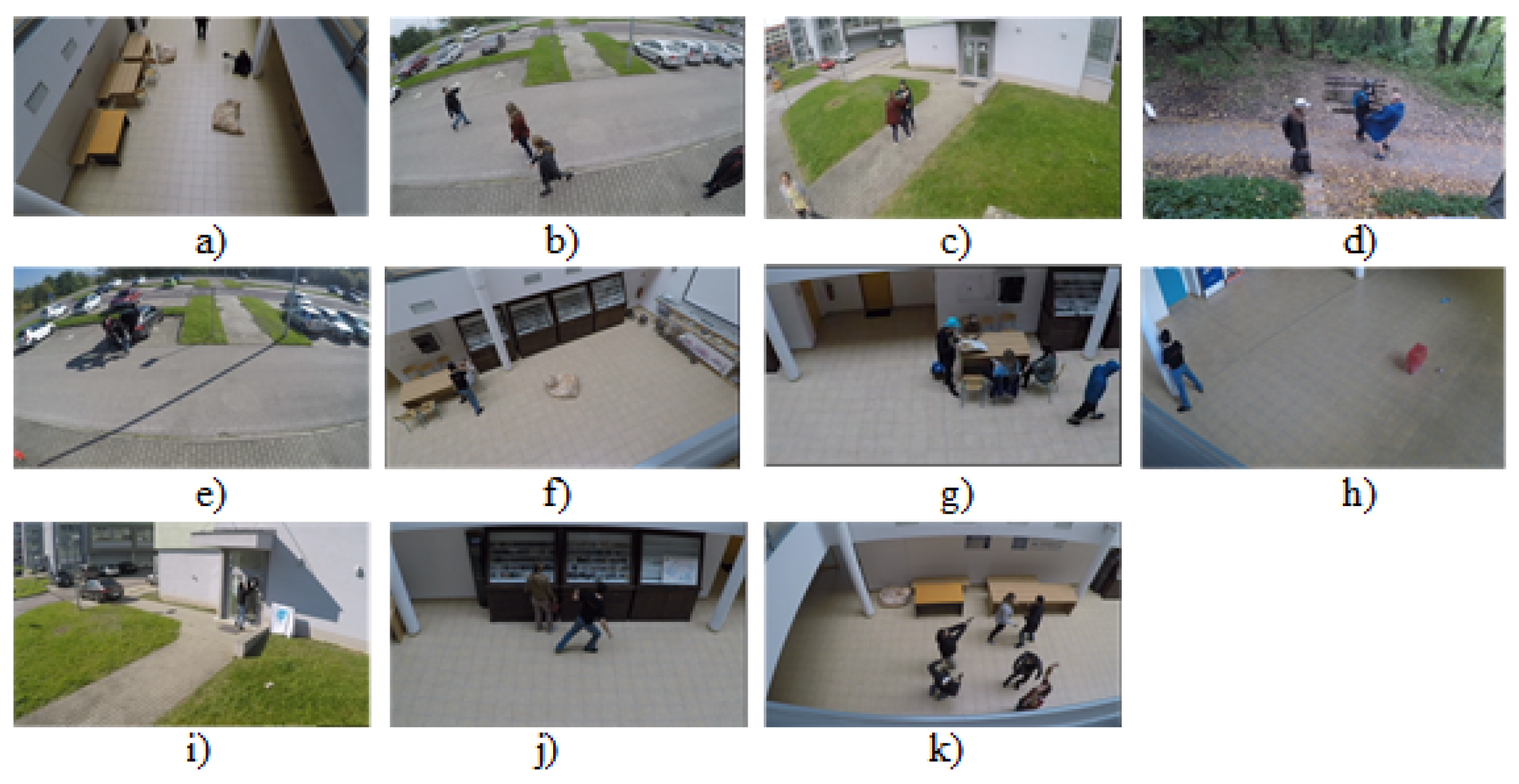

3.2. LoDVP Abnormal Activities Dataset

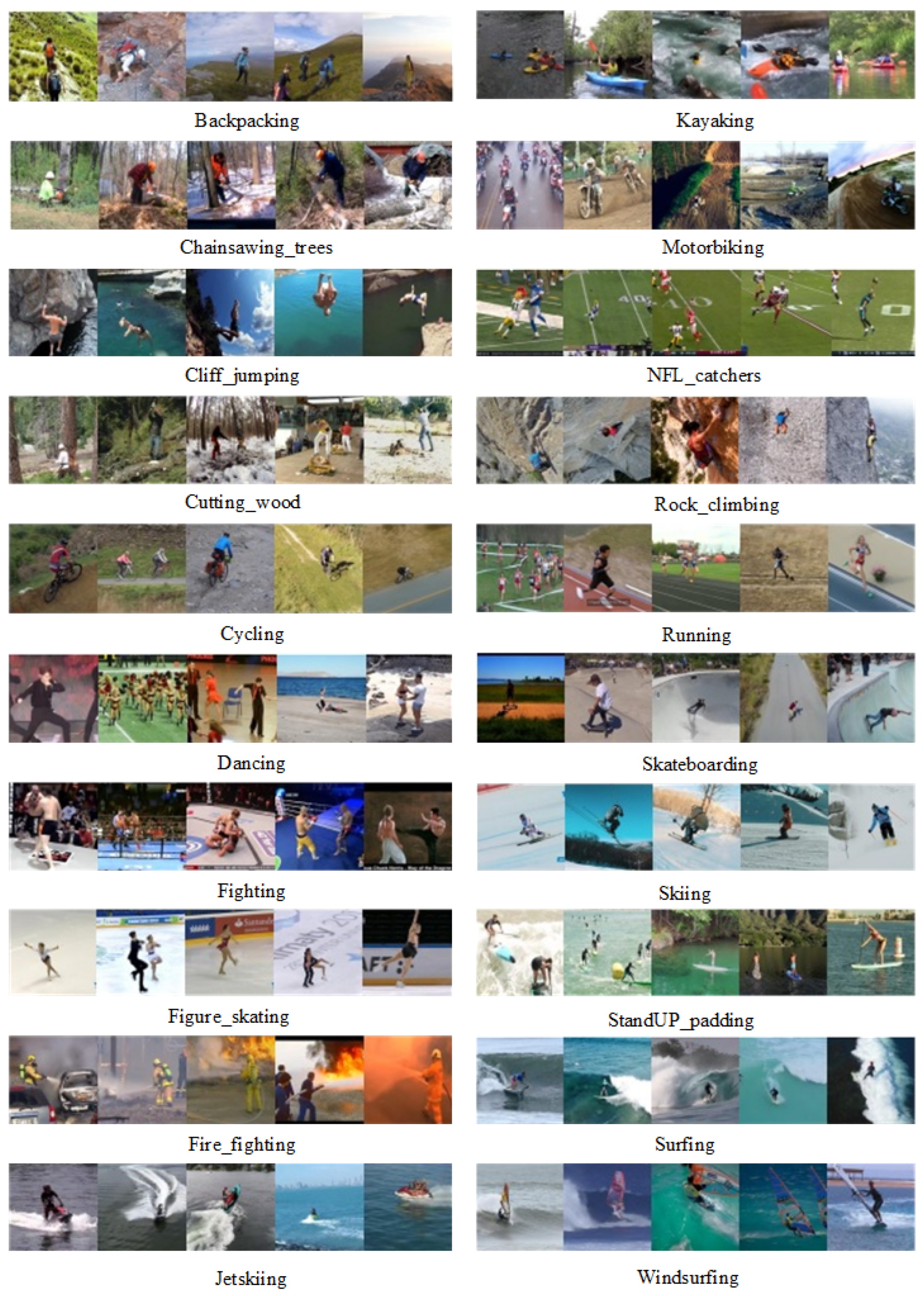

3.3. MOD20 Dataset

4. Experimental Results

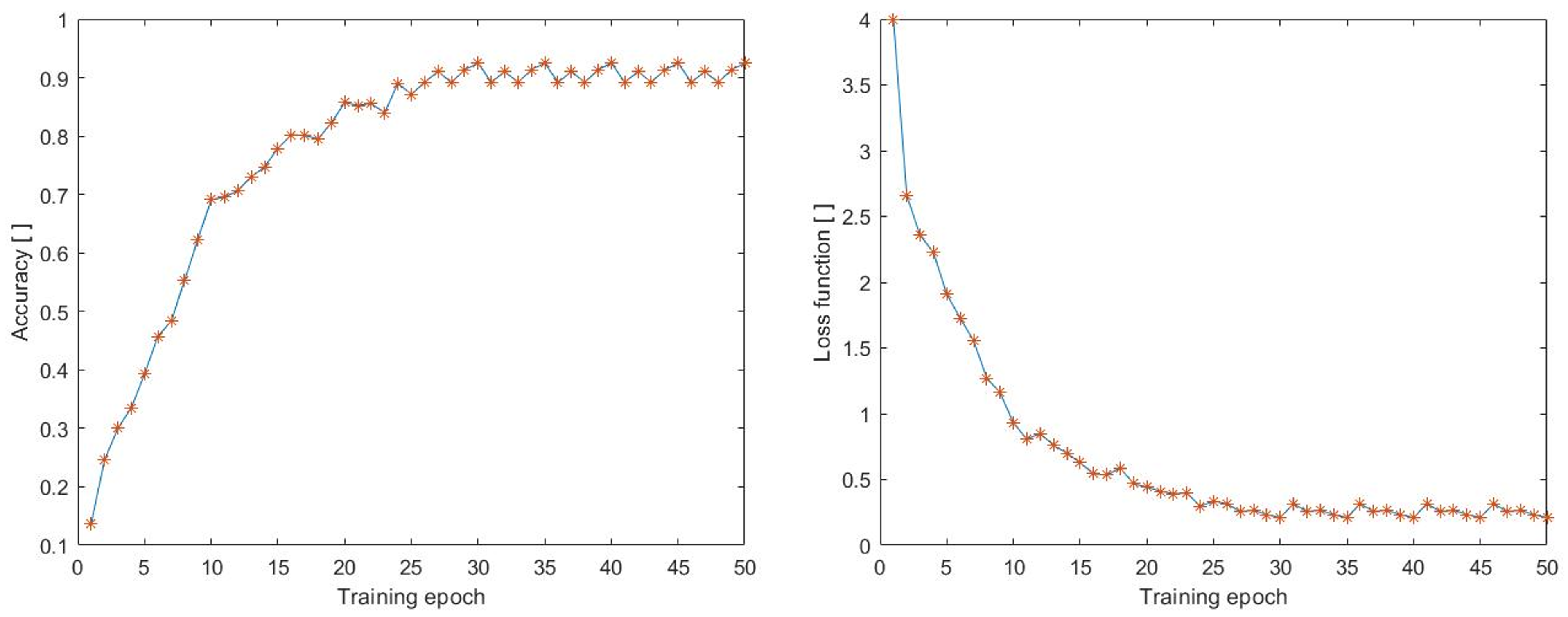

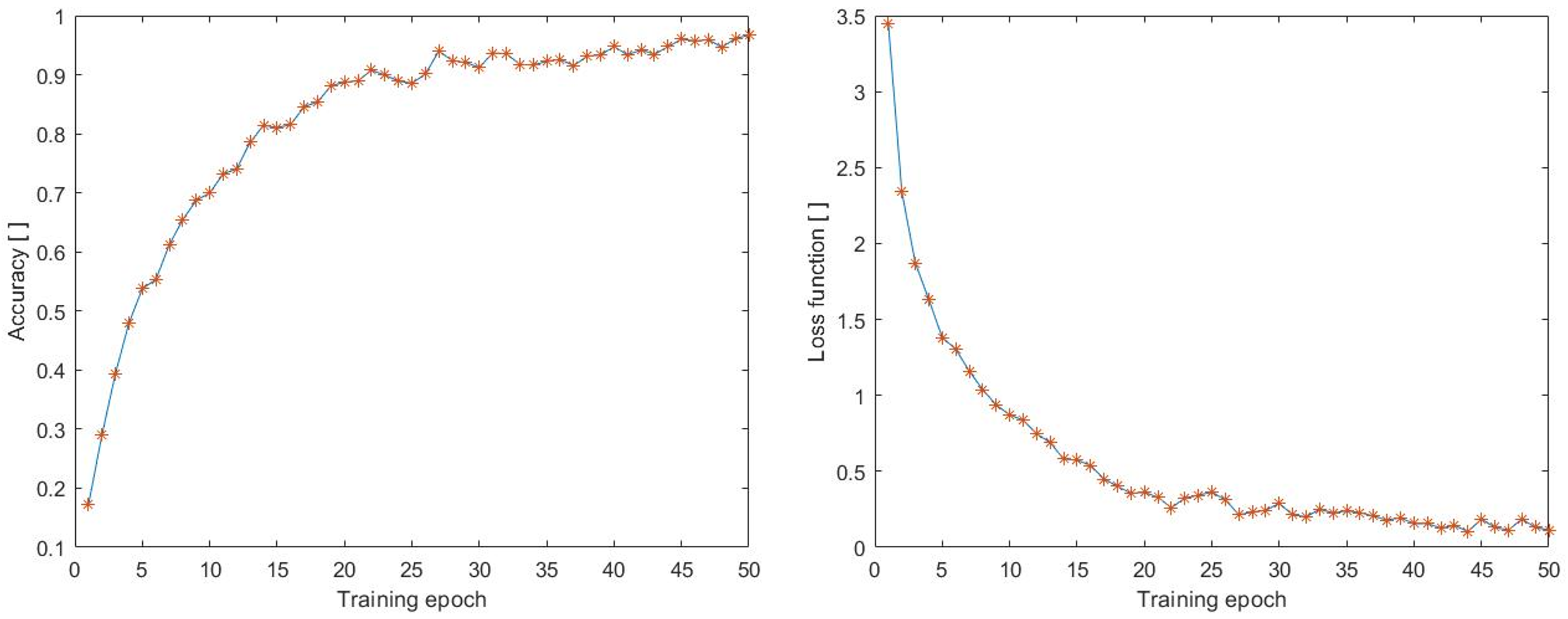

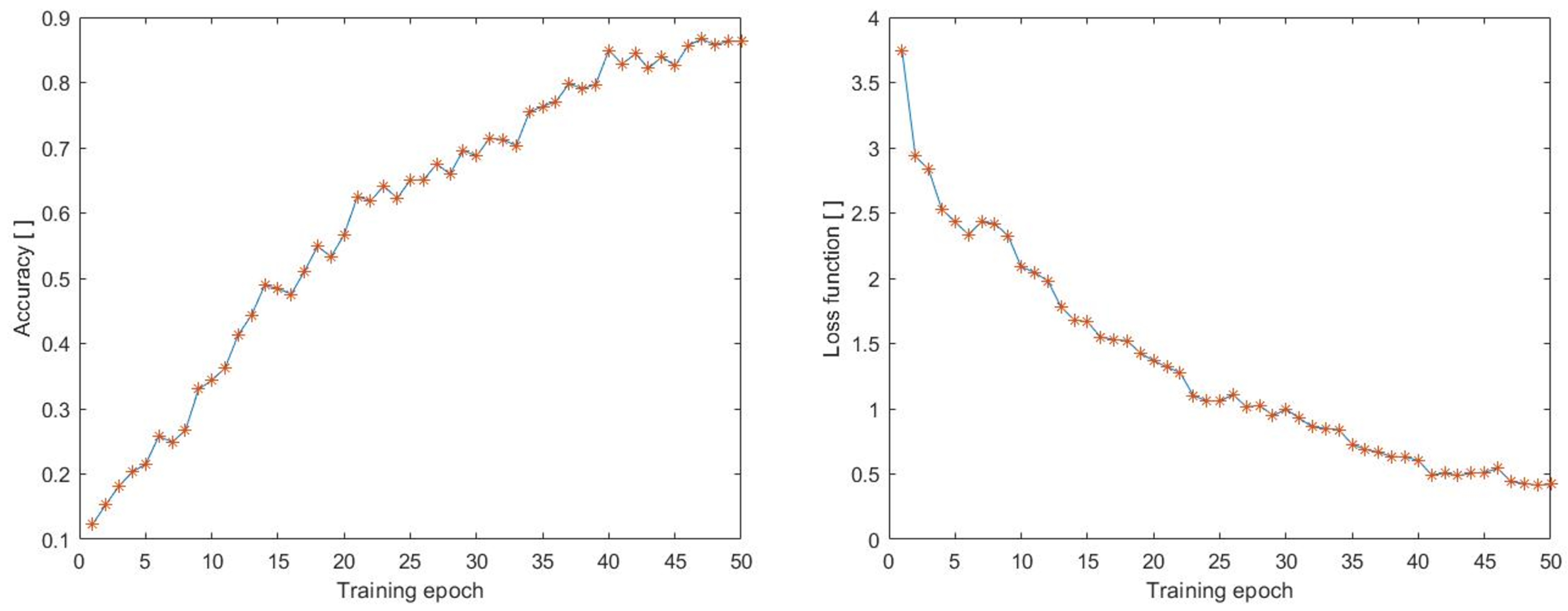

Results

5. Conclusions and Future Work

- Limited interpretability: 3DCNN with ConvLSTM is a deep-learning architecture and, like most deep-learning models, it does not reveal how it makes its predictions, so understanding how the model arrives at a particular decision can be challenging.

- Limited availability of training data: The training of 3DCNN with ConvLSTM requires a large amount of high-quality data to produce good results. This can be a significant limitation in many applications where such data are not readily available.

- Difficulty in tuning hyperparameters: 3DCNN with ConvLSTM involves several hyperparameters that need to be tuned correctly to achieve optimal performance. Tuning these hyperparameters can be time-consuming and requires a significant amount of expertise and experimentation.

- Sensitivity to noise and missing data: The combination of 3DCNN and ConvLSTM relies on the temporal coherence of data for accurate predictions. Therefore, the model can be sensitive to noise and missing data in the input, which can significantly affect the model’s performance.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ConvLSTM | Convolutional Long Short-Term Memory |
| 3DCNN | 3D Convolutional Neural Network |
| KRP-FS | Feature Subspace-Based Kernelized Rank Pooling |
| BKRP | Kernelized Rank-Based Pooling |
| BILSTM | Bidirectional Long Short-Term Memory |
| Conv2D | 2D Convolutional Neural Network |
| Conv3D | 3D Convolutional Neural Network |
| ROI | Region of Interest |
| MLP | Multilayer Perceptron |
| HOF | Histogram of Optical Flow |
| ASD | Autism Spectrum Disorder |

References

1. Wang, T.; Li, J.; Zhang, M.; Zhu, A.; Snoussi, H.; Choi, C. An enhanced 3DCNN-ConvLSTM for spatiotemporal multimedia data analysis. Concurr. Comput. Pract. Exp. 2021, 33, e5302.

2. Islam, M.S.; Gao, Y.; Ji, Z.; Lv, J.; Mohammed, A.A.Q.; Sang, Y. 3DCNN Backed Conv-LSTM Auto Encoder for Micro Facial Expression Video Recognition. Mach. Learn. Intell. Commun. 2021, 438, 90–105.

3. Zhu, G.; Zhang, L.; Shen, P.; Song, J.; Shah, S.A.A. Continuous Gesture Segmentation and Recognition using 3DCNN and Convolutional LSTM. IEEE Trans. Multimed. 2019, 21, 1011–1021.

4. Krishna, N.S.; Bhattu, S.N.; Somayajulu, D.V.L.N.; Kumar, N.V.N.; Reddy, K.J.S. GssMILP for anomaly classification in surveillance videos. Expert Syst. Appl. 2022, 203, 117451.

5. Pediaditis, M.; Farmaki, C.; Schiza, S.; Tzanakis, N.; Galanakis, E.; Sakkalis, V. Contactless respiratory rate estimation from video in a real-life clinical environment using eulerian magnification and 3D CNNs. In Proceedings of the IEEE International Conference on Imaging Systems and Techniques, Kaohsiung, Taiwan, 21–23 June 2022.

6. Negin, F.; Ozyer, B.; Agahian, S.; Kacdioglu, S.; Ozyer, G.T. Vision-assisted recognition of stereotype behaviors for early diagnosis of Autism Spectrum Disorders. Neurocomputing 2022, 446, 145–155.

7. Kaçdioglu, S.; Özyer, B.; Özyer, G.T. Recognizing Self-Stimulatory Behaviours for Autism Spectrum Disorders. In Proceedings of the Signal Processing and Communications Applications Conference, Gaziantep, Turkey, 5–7 October 2020; Volume 28, pp. 1–4.

8. Zhao, W.; Xu, J.; Li, X.; Chen, Z.; Chen, X. Recognition of Farmers’ Working Based on HC-LSTM Model. Neurocomputing 2022, 813, 77–86.

9. Zhang, L.; Zhu, G.; Shen, P.; Song, J.; Shah, S.A.; Bennamoun, M. Learning Spatiotemporal Features Using 3DCNN and Convolutional LSTM for Gesture Recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 3120–3128.

10. Xu, C.; Chai, D.; He, J.; Zhang, X.; Duan, S. InnoHAR: A Deep Neural Network for Complex Human Activity Recognition. IEEE Access 2019, 7, 9893–9902.

11. Almabdy, S.; Elrefaei, L. Deep Convolutional Neural Network-Based Approaches for Face Recognition. Appl. Sci. 2019, 9, 4397.

12. Zheng, W.; Yin, L.; Chen, X.; Ma, Z.; Liu, S.; Yang, B. Knowledge Base Graph Embedding Module Design for Visual Question Answering Model. Pattern Recognit. 2021, 120, 108153.

13. Mutegeki, R.; Han, D.S. A CNN-LSTM Approach to Human Activity Recognition. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 362–366.

14. Vrskova, R.; Hudec, R.; Kamencay, P.; Sykora, P. A New Approach for Abnormal Human Activities Recognition Based on ConvLSTM Architecture. Sensors 2022, 22, 2946.

15. Vrskova, R.; Hudec, R.; Kamencay, P.; Sykora, P. Human Activity Classification Using the 3DCNN Architecture. Appl. Sci. 2022, 12, 931.

16. Chengping, R.; Yang, L. 3D Convolutional Neural Networks for Human Action Recognition. Comput. Mater. Sci. 2013, 35, 221–231.

17. Partila, P.; Tovarek, J.; Ilk, H.G.; Rozhon, J.; Voznak, M. Deep learning serves voice cloning: How vulnerable are automatic speaker verification systems to spoofing trials? IEEE Commun. Mag. 2020, 58, 100–105.

18. Ji, S.; Xu, W.; Yang, M.; Yu, K. Three-dimensional convolutional neural network (3D-CNN) for heterogeneous material homogenization. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 184, 221–231.

19. Yuan, Z.; Zhou, X.; Yang, T. Hetero-ConvLSTM: A Deep Learning Approach to Traffic Accident Prediction on Heterogeneous Spatio-Temporal Data. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018.

20. Reddy, K.K.; Shah, M. Recognizing 50 Human Action Categories of Web Videos. Mach. Vis. Appl. J. (MVAP) 2013, 24, 971–981.

21. Perera, A.G.; Law, Y.W.; Ogunwa, T.T.; Chahl, J. A Multiviewpoint Outdoor Dataset for Human Action Recognition. IEEE Trans. Hum. Mach. Syst. 2020, 50, 405–413.

22. Ghodhbani, E.; Kaanich, M.; Benazza-Benyahia, A. An Effective 3D ResNet Architecture for Stereo Image Retrieval. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021), Virtual Event, 8–10 February 2021; pp. 160580–160595.

| Parameters of the Proposed Architecture | Number of Parameters |
|---|---|
| Total parameters | 2,326,914 |
| Trainable parameters | 2,325,250 |
| Non-trainable parameters | 1,664 |
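
The parameter counts above follow from per-layer formulas. The plain-Python sketch below shows Keras-style counting rules for the layer types of the proposed architecture and checks that the reported trainable and non-trainable counts add up to the total. The layer sizes in the example calls are hypothetical, because the per-layer configuration is not listed here; the `dense_params` and `batchnorm_params` helpers, and attributing the non-trainable parameters to batch-normalization statistics (moving mean and variance), are assumptions rather than facts stated in the table.

```python
def conv3d_params(kt, kh, kw, in_ch, filters, use_bias=True):
    # One weight per kernel element, input channel and filter, plus one bias per filter.
    return kt * kh * kw * in_ch * filters + (filters if use_bias else 0)


def conv2d_params(kh, kw, in_ch, filters, use_bias=True):
    return kh * kw * in_ch * filters + (filters if use_bias else 0)


def convlstm2d_params(kh, kw, in_ch, filters):
    # Four gates (input, forget, cell candidate, output), each with an input kernel,
    # a recurrent kernel over the previous hidden state, and a bias.
    return 4 * (kh * kw * (in_ch + filters) * filters + filters)


def dense_params(n_in, n_out):
    # Hypothetical classifier head (assumption): weights plus biases.
    return n_in * n_out + n_out


def batchnorm_params(channels):
    # Assumption: gamma and beta are trainable; moving mean and variance are not.
    return {"trainable": 2 * channels, "non_trainable": 2 * channels}


# Totals reported for the proposed architecture.
TOTAL, TRAINABLE, NON_TRAINABLE = 2_326_914, 2_325_250, 1_664
assert TRAINABLE + NON_TRAINABLE == TOTAL

# Hypothetical layer sizes, NOT the paper's configuration.
print(conv3d_params(3, 3, 3, 3, 16))      # 1312
print(convlstm2d_params(3, 3, 16, 32))    # 55424
print(batchnorm_params(32))

# If batch normalization were the only source of non-trainable parameters,
# 1,664 of them would correspond to 832 normalized channels in total.
print(NON_TRAINABLE // 2)                 # 832
```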

| Evaluation Metrics | MOD20 | UCF50mini | LoDVP Abnormal Activities |
|---|---|---|---|
| Train loss | 0.4223 | 0.2106 | 0.1042 |
| Train accuracy | 86.30% | 92.50% | 96.68% |
| Test loss | 0.5614 | 0.3568 | 0.3982 |
| Test accuracy | 78.21% | 87.78% | 83.12% |

| Target / Predicted | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 16 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 0 | 14 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 4 | 6 | 18 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | 0 | 0 | 0 | 16 | 0 | 0 | 4 | 0 | 0 | 0 | 0 |
| 5 | 0 | 0 | 0 | 0 | 20 | 0 | 0 | 0 | 0 | 0 | 0 |
| 6 | 0 | 2 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 0 | 0 |
| 7 | 0 | 0 | 0 | 2 | 0 | 0 | 18 | 2 | 0 | 0 | 0 |
| 8 | 0 | 0 | 0 | 6 | 0 | 0 | 2 | 12 | 0 | 0 | 0 |
| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 26 | 0 | 0 |
| 10 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 0 |
| 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 |
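
Overall accuracy and per-class recall can be read directly off a confusion matrix whose rows are target classes and whose columns are predicted classes, as in the 11-class table above. The plain-Python sketch below applies this to those counts; the helper names `accuracy` and `per_class_recall` are illustrative, not taken from the paper.

```python
# Rows = target class, columns = predicted class (11-class table above).
CM_11 = [
    [16, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 14, 2, 0, 0, 0, 0, 0, 0, 0, 0],
    [4, 6, 18, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 16, 0, 0, 4, 0, 0, 0, 0],
    [0, 0, 0, 0, 20, 0, 0, 0, 0, 0, 0],
    [0, 2, 0, 0, 0, 8, 0, 0, 0, 0, 0],
    [0, 0, 0, 2, 0, 0, 18, 2, 0, 0, 0],
    [0, 0, 0, 6, 0, 0, 2, 12, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 26, 0, 0],
    [2, 0, 0, 0, 0, 0, 0, 0, 0, 4, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 14],
]


def accuracy(cm):
    """Fraction of all samples that lie on the diagonal (correctly classified)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_recall(cm):
    """Recall of class i = correct predictions of i / all samples whose target is i."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


acc = accuracy(CM_11)
recalls = per_class_recall(CM_11)
print(f"accuracy = {acc:.4f}")  # 166 correct out of 198 samples
for cls, rec in enumerate(recalls, start=1):
    print(f"class {cls:2d}: recall = {rec:.2f}")
```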

| Target / Predicted | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 14 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 0 | 11 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |
| 4 | 1 | 1 | 0 | 13 | 0 | 0 | 0 | 1 | 0 | 1 |
| 5 | 0 | 0 | 0 | 0 | 15 | 0 | 0 | 0 | 0 | 0 |
| 6 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 0 |
| 7 | 0 | 0 | 1 | 2 | 0 | 0 | 9 | 0 | 0 | 0 |
| 8 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 12 | 0 | 0 |
| 9 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 17 | 0 |
| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 11 |
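
Precision divides by column sums (everything predicted as a class) rather than row sums. The sketch below computes per-class precision, recall and F1 from the 10-class table above and averages them with equal weight per class (macro averaging). The source does not state which averaging produced the reported P, R and F1 values, so this illustrates the definitions rather than reproducing the published numbers; `per_class_prf` and `macro` are hypothetical helper names.

```python
# Rows = target class, columns = predicted class (10-class table above).
CM_10 = [
    [14, 0, 0, 1, 0, 0, 0, 0, 0, 0],
    [0, 11, 0, 4, 0, 0, 0, 0, 0, 0],
    [0, 0, 5, 0, 0, 0, 0, 0, 1, 0],
    [1, 1, 0, 13, 0, 0, 0, 1, 0, 1],
    [0, 0, 0, 0, 15, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 8, 0, 0, 0, 0],
    [0, 0, 1, 2, 0, 0, 9, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0, 12, 0, 0],
    [0, 0, 1, 0, 0, 0, 0, 0, 17, 0],
    [0, 0, 0, 0, 0, 0, 1, 0, 1, 11],
]


def per_class_prf(cm):
    """Per-class precision, recall and F1 for a square confusion matrix."""
    n = len(cm)
    precision, recall, f1 = [], [], []
    for i in range(n):
        tp = cm[i][i]
        predicted_i = sum(cm[r][i] for r in range(n))  # column sum
        actual_i = sum(cm[i])                          # row sum
        p = tp / predicted_i if predicted_i else 0.0
        r = tp / actual_i if actual_i else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0    # harmonic mean of p and r
        precision.append(p)
        recall.append(r)
        f1.append(f)
    return precision, recall, f1


def macro(values):
    """Unweighted mean over classes."""
    return sum(values) / len(values)


prec, rec, f1s = per_class_prf(CM_10)
print(f"macro P = {macro(prec):.4f}, macro R = {macro(rec):.4f}, macro F1 = {macro(f1s):.4f}")
```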

| Target / Predicted | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 9 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 1 |
| 2 | 0 | 9 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 1 | 0 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |
| 4 | 0 | 0 | 0 | 7 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 2 | 0 | 0 | 0 | 6 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 1 |
| 6 | 1 | 0 | 0 | 2 | 0 | 6 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| 7 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 |
| 9 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 |
| 10 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 9 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 11 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 |
| 12 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 12 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 14 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 9 | 0 | 0 | 0 | 0 | 1 | 0 |
| 15 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 | 0 |
| 16 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 11 | 0 | 0 | 0 | 0 |
| 17 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 0 | 0 |
| 18 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 0 | 0 |
| 19 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 12 | 0 |
| 20 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 11 |

| Evaluation Metrics | MOD20 | UCF50mini | LoDVP Abnormal Activities |
|---|---|---|---|
| Precision (P) | 83.89% | 87.76% | 89.12% |
| Recall (R) | 81.09% | 88.63% | 87.69% |
| F1 score (F1) | 81.57% | 87.84% | 89.32% |
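
For single-label classification, pooling the counts over all classes before dividing (micro averaging) makes precision, recall and F1 all equal to accuracy, because every false positive for one class is a false negative for another. Precision and recall values that differ from each other, as in the table above, therefore indicate a per-class average (macro or class-frequency weighted); the source does not say which. The sketch below uses a hypothetical 3-class toy matrix, not data from the paper, to show the difference.

```python
# Hypothetical toy confusion matrix (NOT from the paper).
# Rows = target class, columns = predicted class.
TOY = [
    [5, 1, 0],
    [2, 3, 0],
    [0, 0, 1],
]


def micro_precision_recall(cm):
    """Pool TP, FP and FN over all classes, then divide once."""
    n = len(cm)
    tp = sum(cm[i][i] for i in range(n))
    fp = sum(sum(cm[r][i] for r in range(n)) - cm[i][i] for i in range(n))
    fn = sum(sum(cm[i]) - cm[i][i] for i in range(n))
    return tp / (tp + fp), tp / (tp + fn)


def macro_precision_recall(cm):
    """Average per-class ratios with equal weight per class.

    Assumes every class is predicted at least once and has at least one sample.
    """
    n = len(cm)
    precisions = [cm[i][i] / sum(cm[r][i] for r in range(n)) for i in range(n)]
    recalls = [cm[i][i] / sum(cm[i]) for i in range(n)]
    return sum(precisions) / n, sum(recalls) / n


micro_p, micro_r = micro_precision_recall(TOY)
macro_p, macro_r = macro_precision_recall(TOY)
accuracy = sum(TOY[i][i] for i in range(len(TOY))) / sum(map(sum, TOY))
print(micro_p, micro_r, accuracy)  # all three equal 0.75
print(macro_p, macro_r)            # differ from accuracy
```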

| Video Recognition Architectures | Accuracy [%] |
|---|---|
| Proposed architecture | 93.41 |
| ConvLSTM [21] | 92.38 |
| 3D ResNet50 [22] | 36.19 |
| 3D ResNet101 [22] | 61.90 |
| 3D ResNet152 [22] | 90.48 |

| Video Recognition Architectures | Accuracy [%] |
|---|---|
| Proposed architecture | 87.78 |
| ConvLSTM [21] | 80.38 |
| 3D ResNet50 [22] | 71.53 |
| 3D ResNet101 [22] | 75.91 |
| 3D ResNet152 [22] | 83.39 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vrskova, R.; Kamencay, P.; Hudec, R.; Sykora, P. A New Deep-Learning Method for Human Activity Recognition. Sensors 2023, 23, 2816. https://doi.org/10.3390/s23052816
