Multi-UAV Path Planning in GPS and Communication Denial Environment

Abstract

1. Introduction

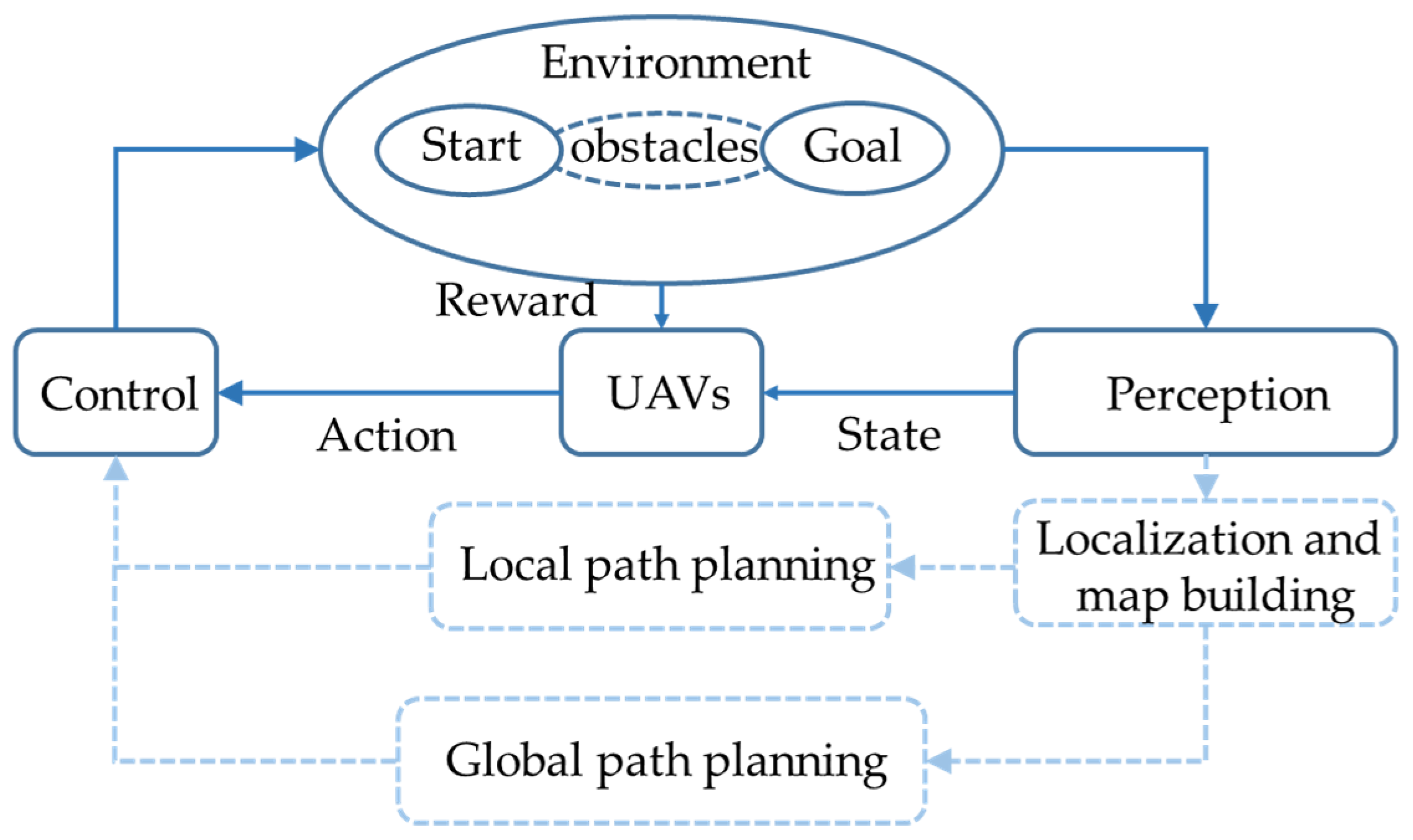

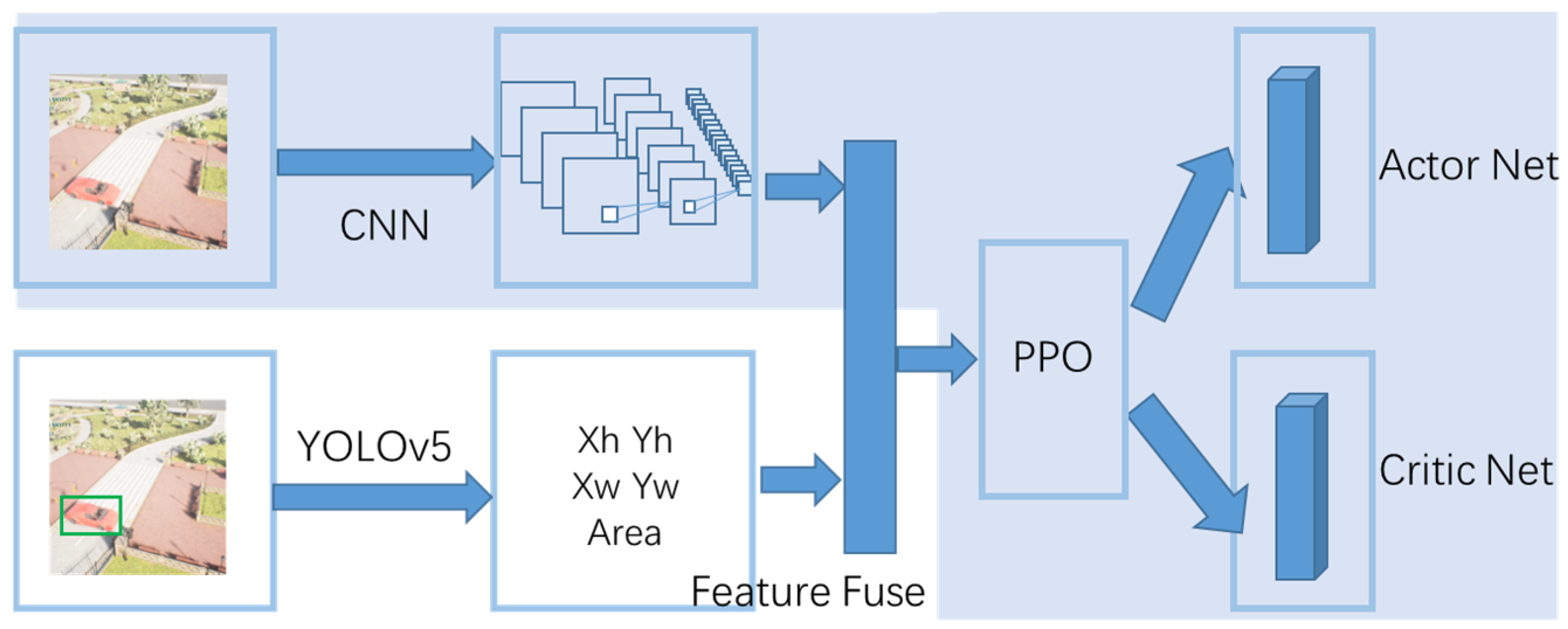

- To solve the problem of UAV path planning in a GPS-denied environment, we introduce a target recognition algorithm into the reinforcement learning method to realize multi-UAV path planning. Rather than using end-to-end reinforcement learning directly, we fuse the image recognition results with the original image to form the observation. The reinforcement learning algorithm selects the UAV's action from this observation, and the resulting sequence of actions forms the UAV's trajectory, so path planning for multiple UAVs is achieved without precise target locations.

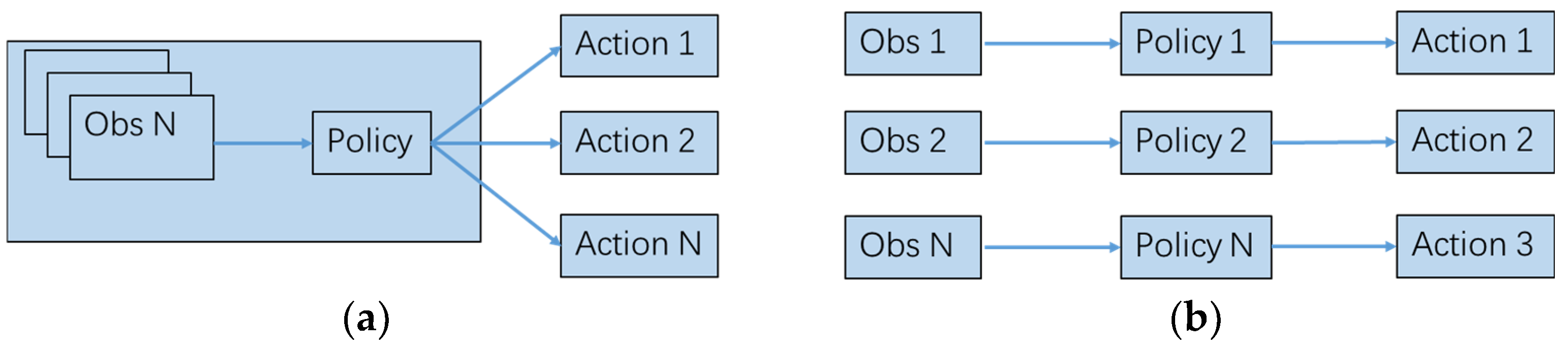

- To address path planning for multiple UAVs in a communication-denied environment, we propose a distributed control method based on independent policies. The method does not require the UAVs to communicate with each other; each UAV makes flight decisions using only its own observations.

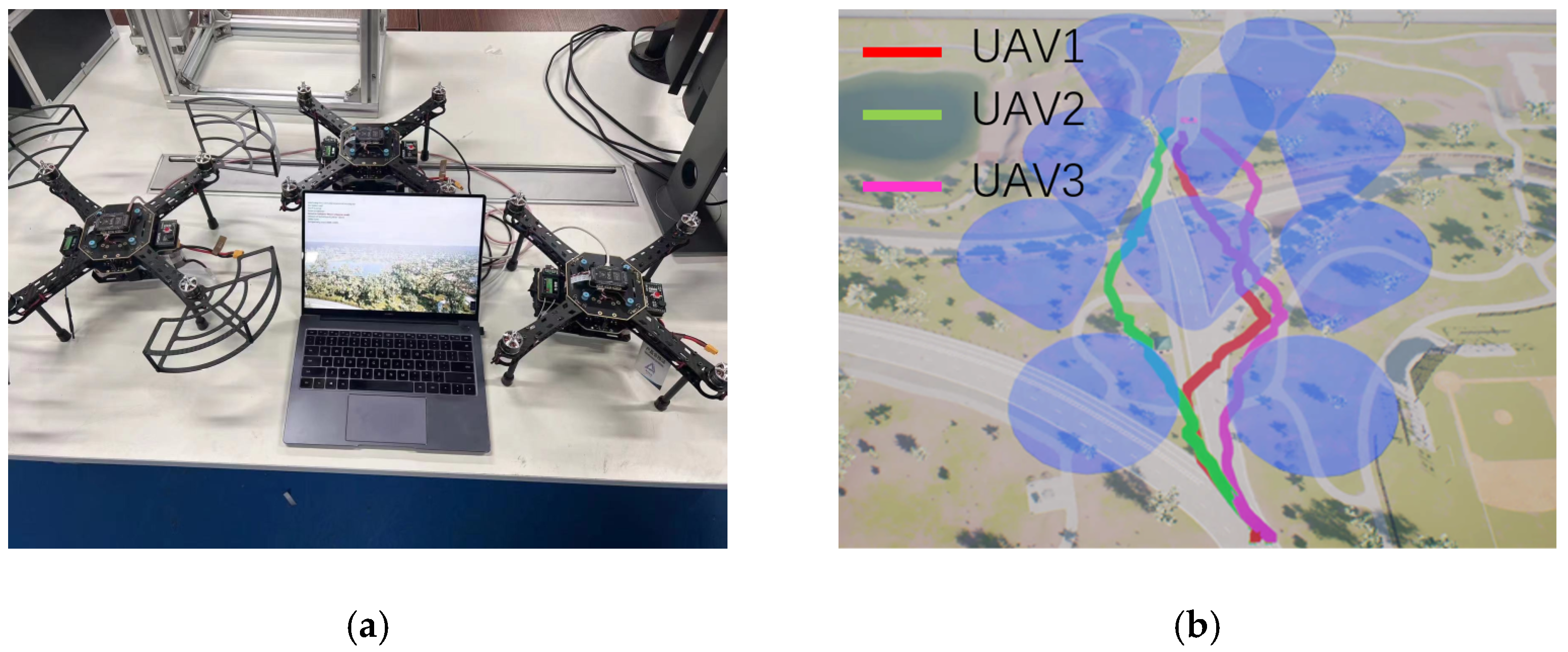

- A simulation platform is built, and hardware-in-the-loop experiments are carried out to verify the feasibility of the algorithm. Experimental results show that the proposed algorithm realizes cooperative path planning of multiple UAVs without precise target locations, with a success rate close to that obtained when the precise target location is known.

2. Related Works

3. Proposed Method

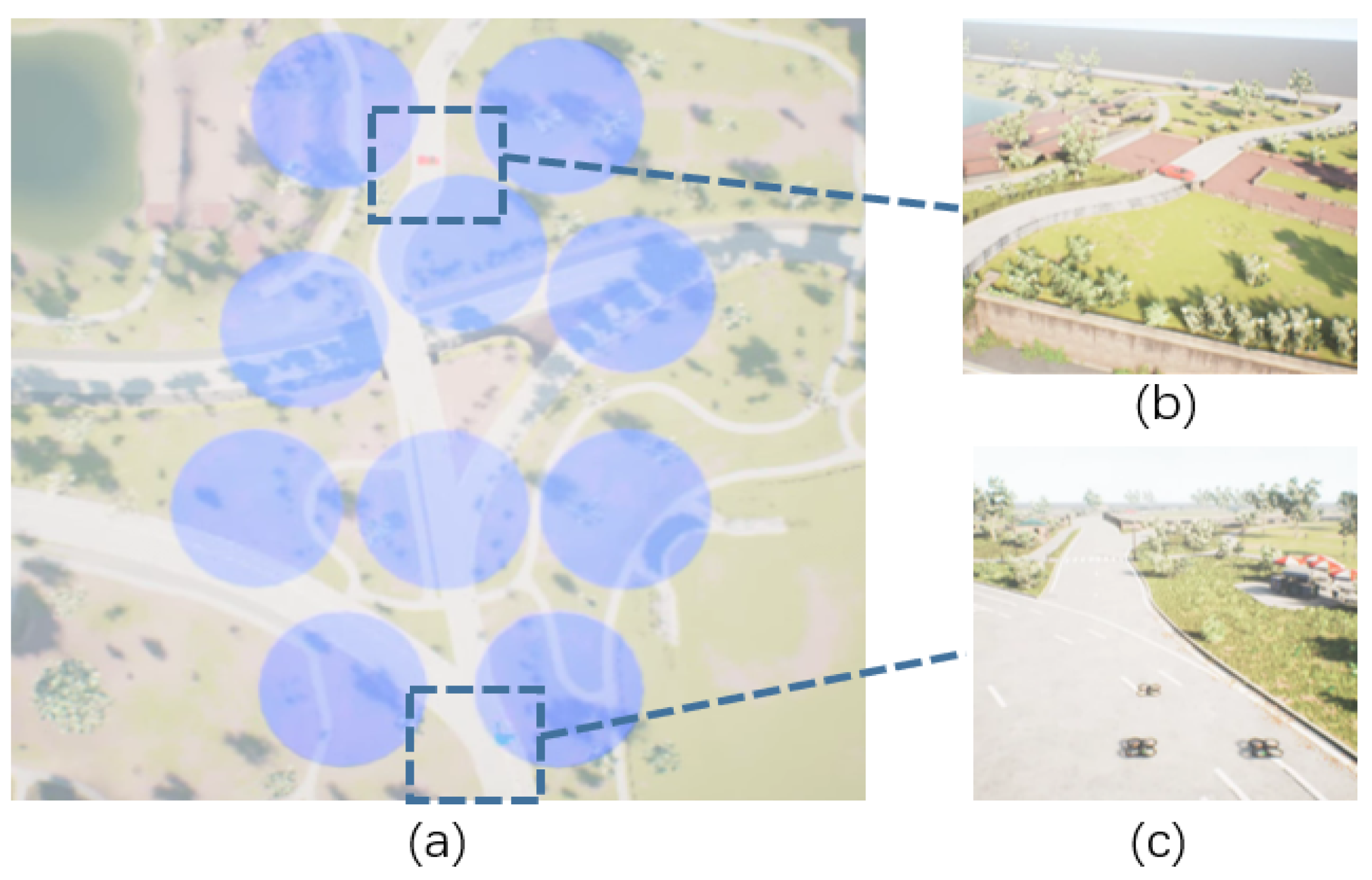

3.1. Problem Definition

- The UAVs should avoid the defense zone during flight. A UAV that enters the defense zone is destroyed or captured.

- The defense zone is generated electromagnetically and cannot be observed visually.

- Due to GPS denial, each UAV knows only the area in which the target lies, not its precise location.

- Due to communication denial, the UAVs cannot share information and can only perceive the position and status of other UAVs via the onboard camera.

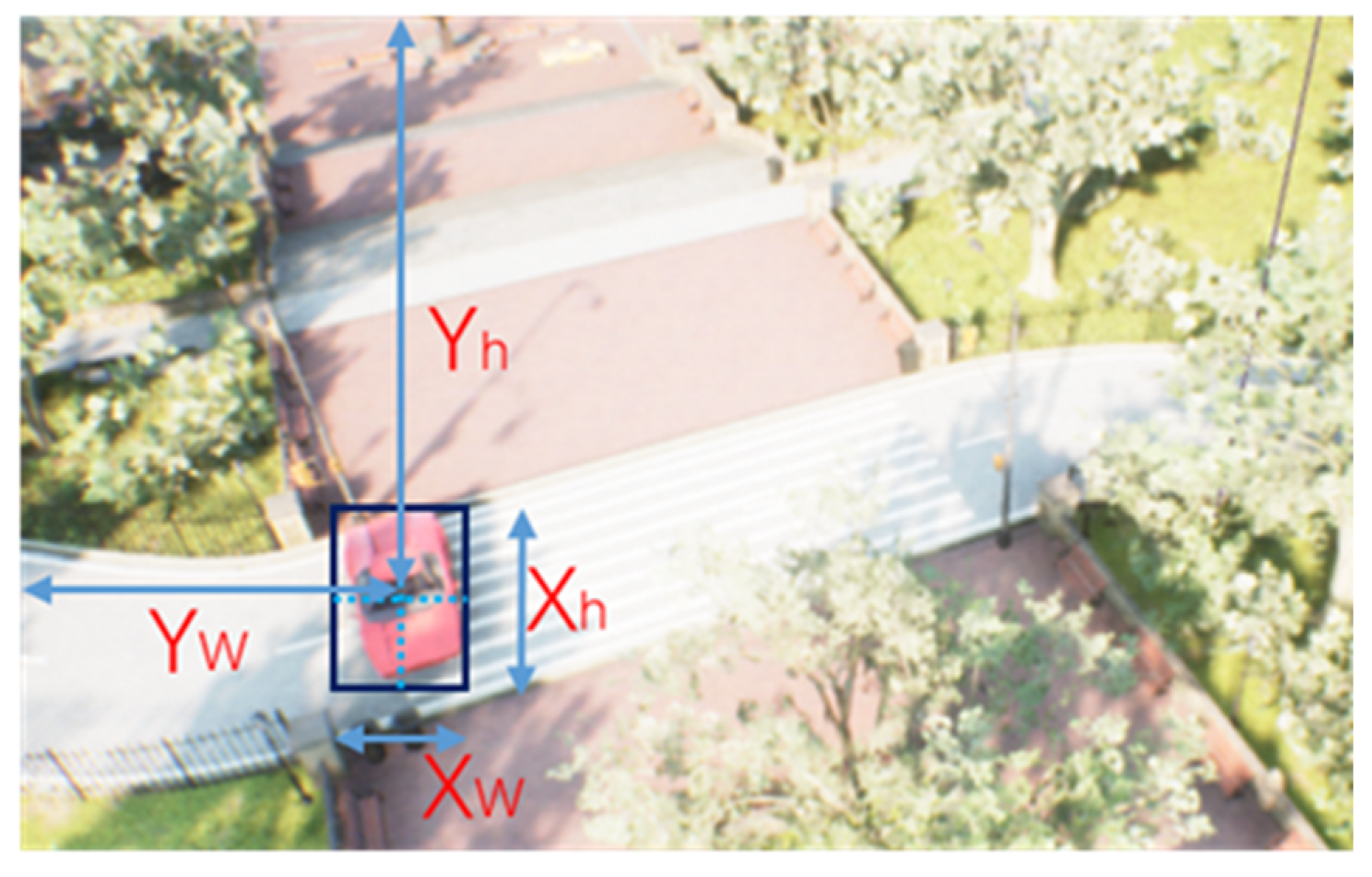

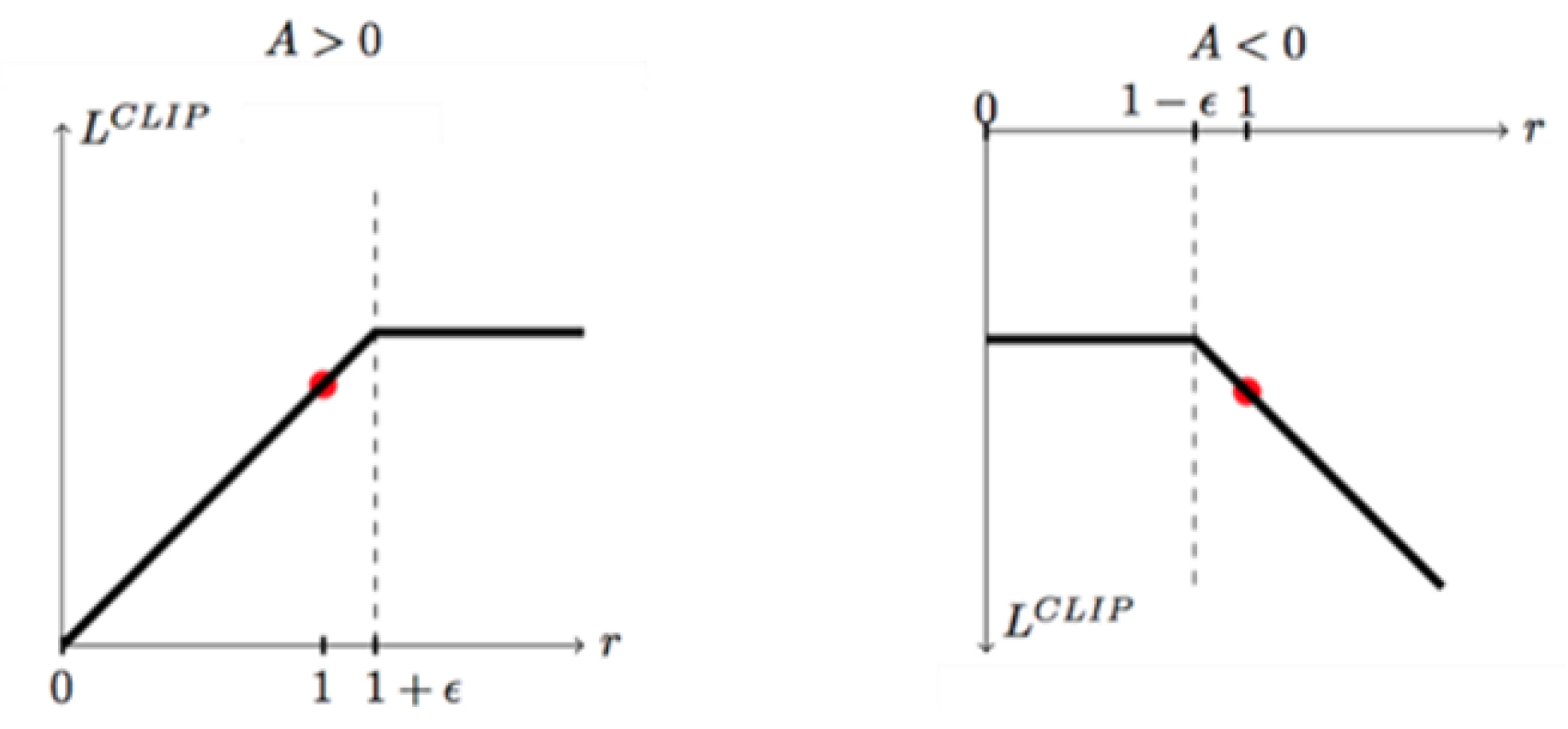

3.2. Feature Fusion PPO

| Algorithm 1. Feature Fusion PPO Algorithm |
|---|
| Initialize policy net |
| Initialize critic net |
| Initialize hyper-parameters |
| for each training iteration do |
| Get the target position and area by YOLOv5 |
| Then join with the image as a tuple |
| Run the policy for a fixed number of timesteps, collecting transitions |
| Estimate advantages |
| for each policy update epoch do |
| Update the policy net by a gradient method |
| end for |
| for each critic update epoch do |
| Update the critic net by a gradient method |
| end for |
| end for |
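The two detection steps in Algorithm 1 (get the target position and area by YOLOv5, then join them with the image as a tuple) can be illustrated with a minimal sketch. It assumes an 84 × 84 × 1 grayscale frame plus a 5-element feature vector, which is consistent with the "84 × 84 × 1 + 5" input size listed among the hyper-parameters. The layout of the five values (normalized box centre, width, height, and area), the `Detection` type, and the function names are hypothetical, and the detector output is passed in directly instead of calling YOLOv5.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

import numpy as np

IMAGE_SHAPE = (84, 84, 1)  # frame size taken from the hyper-parameter table
FEATURE_DIM = 5            # the "+ 5" detection features (layout assumed below)


@dataclass
class Detection:
    """Hypothetical detector output: a pixel-space box (x1, y1, x2, y2)."""
    x1: float
    y1: float
    x2: float
    y2: float


def detection_features(det: Optional[Detection], width: int, height: int) -> np.ndarray:
    """Encode a box as [cx, cy, w, h, area], each normalized to [0, 1].

    This layout is an assumption; zeros are returned when nothing is detected.
    """
    if det is None:
        return np.zeros(FEATURE_DIM, dtype=np.float32)
    w = (det.x2 - det.x1) / width
    h = (det.y2 - det.y1) / height
    cx = (det.x1 + det.x2) / (2.0 * width)
    cy = (det.y1 + det.y2) / (2.0 * height)
    return np.array([cx, cy, w, h, w * h], dtype=np.float32)


def fuse_observation(
    frame: np.ndarray, det: Optional[Detection]
) -> Tuple[np.ndarray, np.ndarray]:
    """Join the scaled camera frame and the detection features as a tuple."""
    assert frame.shape == IMAGE_SHAPE, "expected an 84 x 84 x 1 frame"
    image = frame.astype(np.float32) / 255.0
    features = detection_features(det, frame.shape[1], frame.shape[0])
    return image, features
```

For example, `fuse_observation(np.zeros(IMAGE_SHAPE, dtype=np.uint8), Detection(21, 21, 63, 63))` returns the scaled frame together with the feature vector `[0.5, 0.5, 0.5, 0.5, 0.25]`. Keeping the detection as a separate tuple element, rather than drawing it onto the image, lets a policy network process the two inputs with different layers before merging them.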

3.3. Independent Policy PPO
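The independent-policy idea stated in the contributions (each UAV decides from its own observation, with no communication between UAVs) can be sketched as a decentralized execution step. This is only an illustration under stated assumptions: the linear `argmax` policy is a toy stand-in for a trained policy net, and the names `make_linear_policy` and `decentralized_step` are hypothetical.

```python
from typing import Callable, Dict

import numpy as np

Policy = Callable[[np.ndarray], int]


def make_linear_policy(weights: np.ndarray) -> Policy:
    """Toy stand-in for a trained policy net: argmax over linear action scores."""
    def policy(observation: np.ndarray) -> int:
        return int(np.argmax(weights @ observation))
    return policy


def decentralized_step(
    policies: Dict[str, Policy], observations: Dict[str, np.ndarray]
) -> Dict[str, int]:
    """Each UAV selects an action from its own observation only.

    No observation or action is shared between UAVs, which mirrors the
    communication-denial assumption.
    """
    return {uav_id: policies[uav_id](observations[uav_id]) for uav_id in policies}
```

Because every action depends on a single UAV's observation, changing one UAV's input cannot alter another UAV's decision in the same step.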

4. Experiment

4.1. Environmental Model

4.2. Settings for Observations, Action Spaces, and Rewards

4.3. Hyper-Parameter

4.4. Results, Validation, and Analysis

- (1) The precise location of the target is unknown (Ours)

- (2) The precise location of the target is known (Precise Position)

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Puente-Castro, A.; Rivero, D.; Pazos, A.; Fernandez-Blanco, E. A review of artificial intelligence applied to path planning in UAV swarms. Neural Comput. Appl. 2022, 34, 153–170.

2. Poudel, S.; Moh, S. Task assignment algorithms for unmanned aerial vehicle networks: A comprehensive survey. Veh. Commun. 2022, 35, 100469.

3. Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. Int. J. Robot. Res. 1986, 5, 90–98.

4. Pan, Z.; Zhang, C.; Xia, Y.; Xiong, H.; Shao, X. An improved artificial potential field method for path planning and formation control of the multi-UAV systems. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 1129–1133.

5. Wei, R.; Xu, Z.; Wang, S.; Lv, M. Self-optimization A-star algorithm for UAV path planning based on Laguerre diagram. Syst. Eng. Electron. 2015, 37, 577–582.

6. Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1975.

7. Zhenhua, P.; Hongbin, D.; Li, D. A multilayer graph for multi-agent formation and trajectory tracking control based on MPC algorithm. IEEE Trans. Cybern. 2021, 50, 12.

8. Xie, R.; Meng, Z.; Zhou, Y.; Ma, Y.; Wu, Z. Heuristic Q-learning based on experience replay for three-dimensional path planning of the unmanned aerial vehicle. Sci. Prog. 2020, 103, 0036850419879024.

9. Cui, Z.Y.; Wang, Y. UAV Path Planning Based on Multi-Layer Reinforcement Learning Technique. IEEE Access 2021, 9, 59486–59497.

10. Liu, Y.; Liu, H.; Tian, Y.L. Reinforcement learning based two-level control framework of UAV swarm for cooperative persistent surveillance in an unknown urban area. Aerosp. Sci. Technol. 2020, 98, 105671.

11. Fevgas, G.; Lagkas, T.; Argyriou, V.; Sarigiannidis, P. Coverage Path Planning Methods Focusing on Energy Efficient and Cooperative Strategies for Unmanned Aerial Vehicles. Sensors 2022, 22, 1235.

12. Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, P.; Mordatch, I. Multi-agent actor-critic for mixed cooperative-competitive environments. Adv. Neural Inf. Process. Syst. 2017, 30.

13. Krichen, M.; Adoni, W.Y.H.; Mihoub, A.; Alzahrani, M.Y.; Nahhal, T. Security Challenges for Drone Communications: Possible Threats, Attacks and Countermeasures. In Proceedings of the 2022 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, 9–11 May 2022.

14. Bunse, C.; Plotz, S. Security analysis of drone communication protocols. In Proceedings of the Engineering Secure Software and Systems: 10th International Symposium (ESSoS 2018), Paris, France, 26–27 June 2018; pp. 96–107.

15. YOLOv5. 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 3 December 2022).

16. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018.

17. Michels, J.; Saxena, A.; Ng, A.Y. High speed obstacle avoidance using monocular vision and reinforcement learning. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005.

18. Xie, L.; Wang, S.; Markham, A.; Trigoni, N. Towards monocular vision based obstacle avoidance through deep reinforcement learning. arXiv 2017, arXiv:1706.09829.

19. Vamvoudakis, K.G.; Vrabie, D.; Lewis, F.L. Online adaptive algorithm for optimal control with integral reinforcement learning. Int. J. Robust Nonlinear Control 2014, 24, 2686–2710.

20. Kulkarni, T.D.; Narasimhan, K.R.; Saeedi, A.; Tenenbaum, J.B. Hierarchical deep reinforcement learning: Integrating temporal abstraction and intrinsic motivation. Adv. Neural Inf. Process. Syst. 2016, 29.

21. Zhu, Y.; Mottaghi, R.; Kolve, E.; Lim, J.J.; Gupta, A.; Fei-Fei, L.; Farhadi, A. Target-driven visual navigation in indoor scenes using deep reinforcement learning. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–30 June 2017.

22. Chen, L.; Moorthy, M.; Sharma, P.; Kawthekar, P. Imitating shortest paths for visual navigation with trajectory-aware deep reinforcement learning. Comput. Sci. 2017.

23. Siriwardhana, S.; Weerasekera, R.; Matthies, D.J.C.; Nanayakkara, S. VUSFA: Variational universal successor features approximator to improve transfer DRL for target driven visual navigation. arXiv 2019, arXiv:1908.06376.

24. Siriwardhana, S.; Weerasekera, R.; Nanayakkara, R. Target driven visual navigation with hybrid asynchronous universal successor representations. arXiv 2018, arXiv:1811.11312.

25. Qie, H.; Shi, D.; Shen, T.; Xu, X.; Li, Y.; Wang, L. Joint Optimization of Multi-UAV Target Assignment and Path Planning Based on Multi-Agent Reinforcement Learning. IEEE Access 2019, 7, 146264–146272.

26. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

27. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

28. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 2–8.

29. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

30. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

31. Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles. In Field and Service Robotics: Results of the 11th International Conference; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 621–635.

32. Brockman, G.; Cheung, V.; Pettersson, L.; Schneider, J.; Schulman, J.; Tang, J.; Zaremba, W. OpenAI Gym. arXiv 2016, arXiv:1606.01540.

33. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32 (NIPS 2019), Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Association for Computing Machinery: New York, NY, USA, 2019.

34. Moritz, P.; Nishihara, R.; Wang, S.; Tumanov, A.; Liaw, R.; Liang, E.; Elibol, M.; Yang, Z.; Paul, W.; Jordan, M.I. Ray: A Distributed Framework for Emerging AI Applications. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), Carlsbad, CA, USA, 8–10 October 2018.

| Publication | RL Algorithm | Year | Environment |
|---|---|---|---|
| Xie, R., et al. [8] | DQN | 2020 | 3D-grid |
| Cui, Z.Y. [9] | DQN | 2021 | 2D-grid |
| Liu, Y., et al. [10] | PPO | 2020 | 3D-visual |
| Lowe, R., et al. [12] | MADDPG | 2017 | 2D-grid |
| Xie, L., et al. [18] | DQN | 2017 | 3D-visual |
| Kulkarni, T.D., et al. [20] | DQN | 2016 | 3D-visual |
| Zhu, Y., et al. [21] | A3C | 2017 | 3D-visual |
| Chen, L., et al. [22] | SAC | 2017 | 3D-visual |
| Siriwardhana, S., et al. [23] | A3C | 2019 | 3D-visual |

| Parameter | Value |
|---|---|
| Gamma | 0.995 |
| Lambda | 0.9 |
| Learning rate | 0.00025 |
| SGD minibatch size | 256 |
| Train batch size | 1024 |
| Clip param | 0.3 |
| Input size | 84 × 84 × 1 + 5 |
| Neural network structure | Conv1 [84,[4,4],4], Conv2 [42,[4,4],4], Conv3 [21,[5,5],2], Fcnet [512,256,64] |
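To make the roles of Gamma, Lambda, and Clip Param concrete, the following minimal sketch plugs the listed values into the standard PPO building blocks: generalized advantage estimation for the "Estimate advantages" step of Algorithm 1, and the clipped surrogate objective for the policy update. Reading Lambda as the GAE parameter is an assumption, and the function names are hypothetical; this is not the authors' training code.

```python
import numpy as np

GAMMA = 0.995      # discount factor from the table
LAMBDA = 0.9       # assumed to be the GAE lambda
CLIP_PARAM = 0.3   # PPO clipping range from the table


def gae_advantages(rewards, values, last_value, gamma=GAMMA, lam=LAMBDA):
    """Generalized advantage estimation for one rollout segment.

    `values` holds V(s_t) for each step and `last_value` bootstraps the
    state that follows the final step (use 0.0 at episode end).
    """
    rewards = np.asarray(rewards, dtype=np.float64)
    values = np.append(np.asarray(values, dtype=np.float64), last_value)
    advantages = np.zeros_like(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # One-step temporal-difference error.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of future TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


def clipped_surrogate(ratio, advantages, clip=CLIP_PARAM):
    """PPO clipped objective (to be maximized), averaged over the batch.

    `ratio` is pi_new(a|s) / pi_old(a|s) for each sampled action.
    """
    ratio = np.asarray(ratio, dtype=np.float64)
    advantages = np.asarray(advantages, dtype=np.float64)
    clipped = np.clip(ratio, 1.0 - clip, 1.0 + clip)
    return float(np.mean(np.minimum(ratio * advantages, clipped * advantages)))
```

With a clip range of 0.3, a probability ratio of 1.5 on a positive advantage is capped at 1.3, so a single minibatch update cannot push the policy arbitrarily far from the one that collected the data.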


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Y.; Wei, Y.; Wang, D.; Jiang, K.; Deng, H. Multi-UAV Path Planning in GPS and Communication Denial Environment. Sensors 2023, 23, 2997. https://doi.org/10.3390/s23062997
