Double-Branch Multi-Scale Contextual Network: A Model for Multi-Scale Street Tree Segmentation in High-Resolution Remote Sensing Images

Abstract

1. Introduction

- We propose DB-MSC Net to improve street tree segmentation. Compared with typical networks, it raises the overall accuracy (OA) by at least 0.16 percentage points and the mean intersection over union (mIoU) by at least 1.13 percentage points.

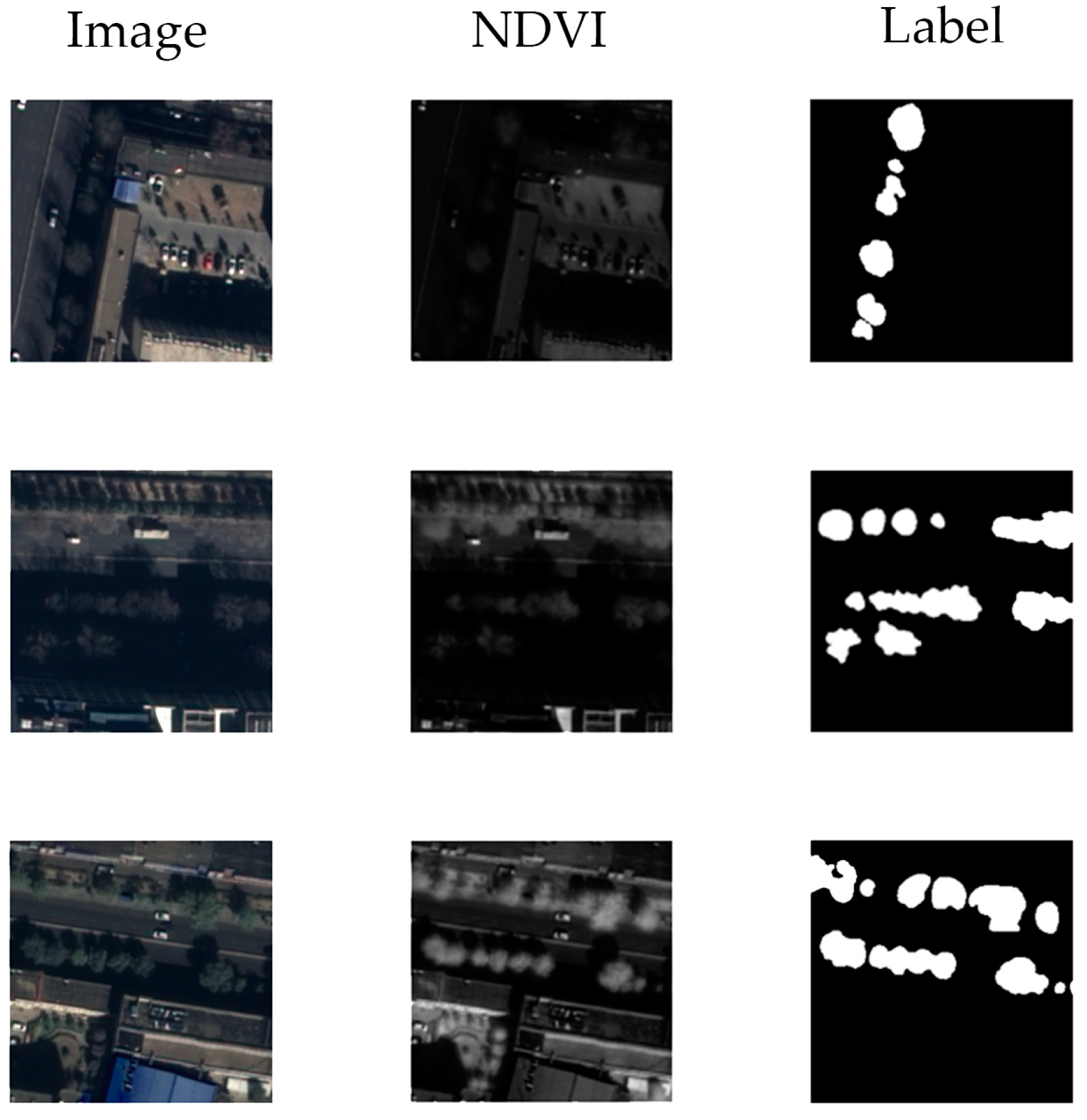

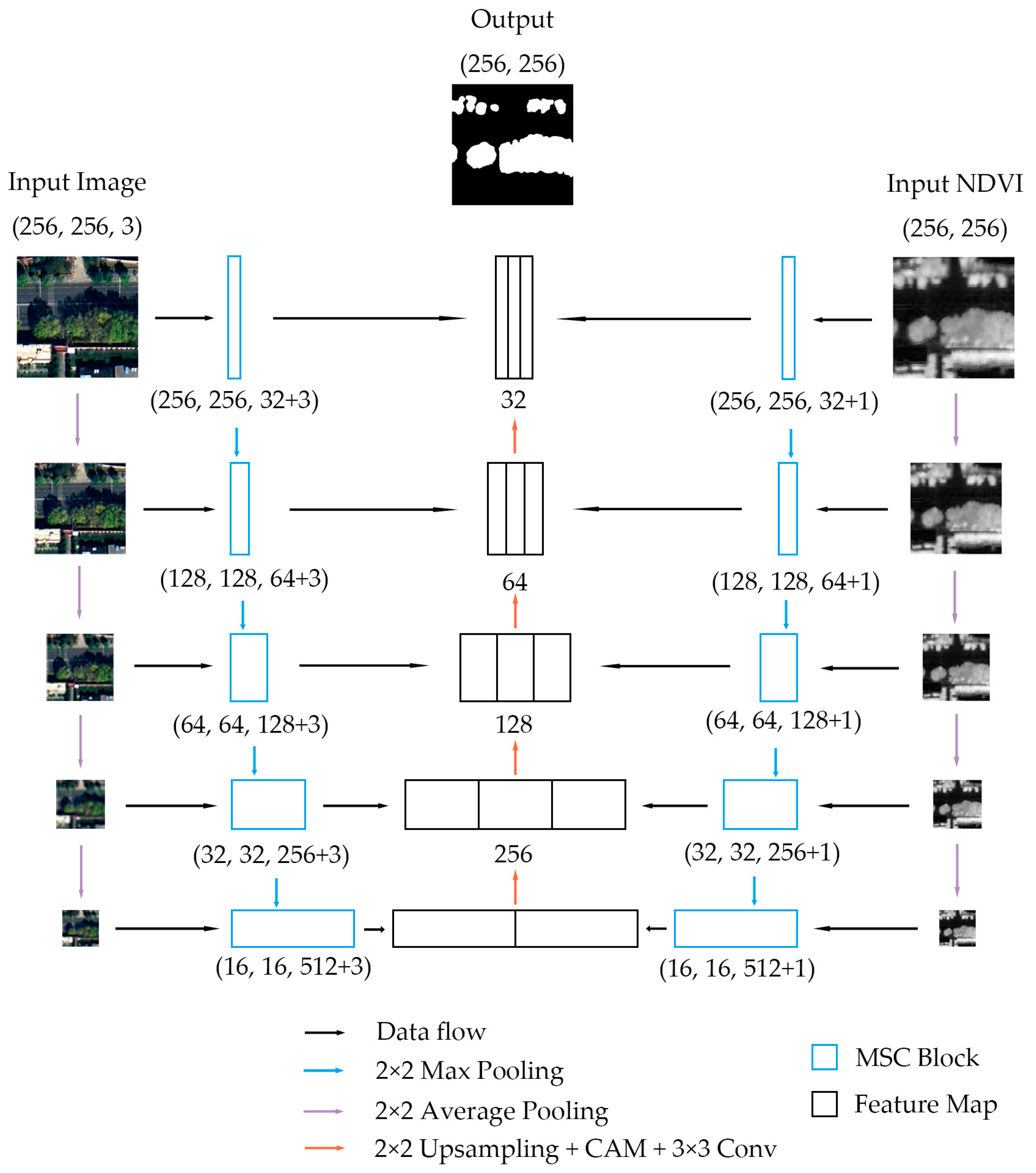

- We design a double-branch structure so that the network accepts both RGB images and the normalized difference vegetation index (NDVI) as input.

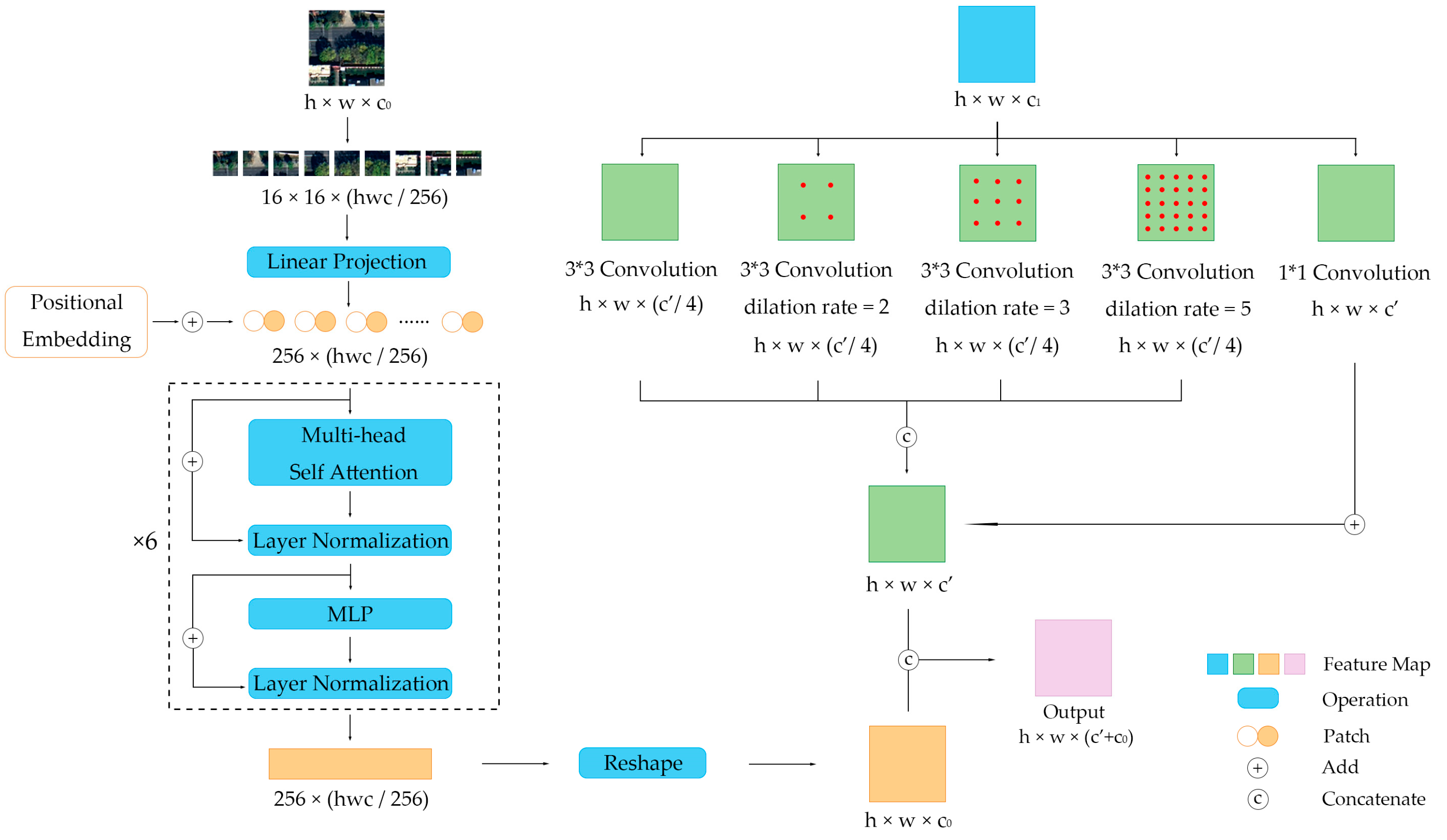

- We propose the multi-scale contextual (MSC) block to improve multi-scale feature extraction. It uses a CNN–Transformer hybrid structure to extract both local and global features.
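To make the NDVI input of the double-branch design concrete, the sketch below computes the index with its standard definition, (NIR - Red) / (NIR + Red), and shapes it as a single-channel input next to an RGB tile. The band values, the array shapes, the `eps` guard, and the names `ndvi`, `rgb`, and `ndvi_input` are illustrative assumptions; this section does not describe the authors' actual preprocessing.

```python
import numpy as np

def ndvi(nir, red, eps=1e-6):
    """Standard NDVI: (NIR - Red) / (NIR + Red), computed per pixel."""
    nir = np.asarray(nir, dtype=np.float32)
    red = np.asarray(red, dtype=np.float32)
    # eps is an assumed guard against division by zero where both bands are 0.
    return (nir - red) / (nir + red + eps)

# Hypothetical 2 x 2 tile; the band values are made up for illustration.
nir = np.array([[200, 50], [120, 0]])
red = np.array([[50, 50], [40, 0]])
index = ndvi(nir, red)

# Hypothetical inputs for the two branches (channel-last layout assumed).
rgb = np.zeros((2, 2, 3), dtype=np.float32)  # branch 1: H x W x 3
ndvi_input = index[..., np.newaxis]          # branch 2: H x W x 1
```

Vegetated pixels reflect strongly in the near-infrared band and absorb red light, so they tend toward high NDVI values, which is a plausible reason to feed the index to a dedicated branch.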

2. Related Work

3. Materials and Methods

3.1. Dataset and Data Preprocessing

3.2. The Overall Architecture of DB-MSC Net

3.3. The Structure of the MSC Block

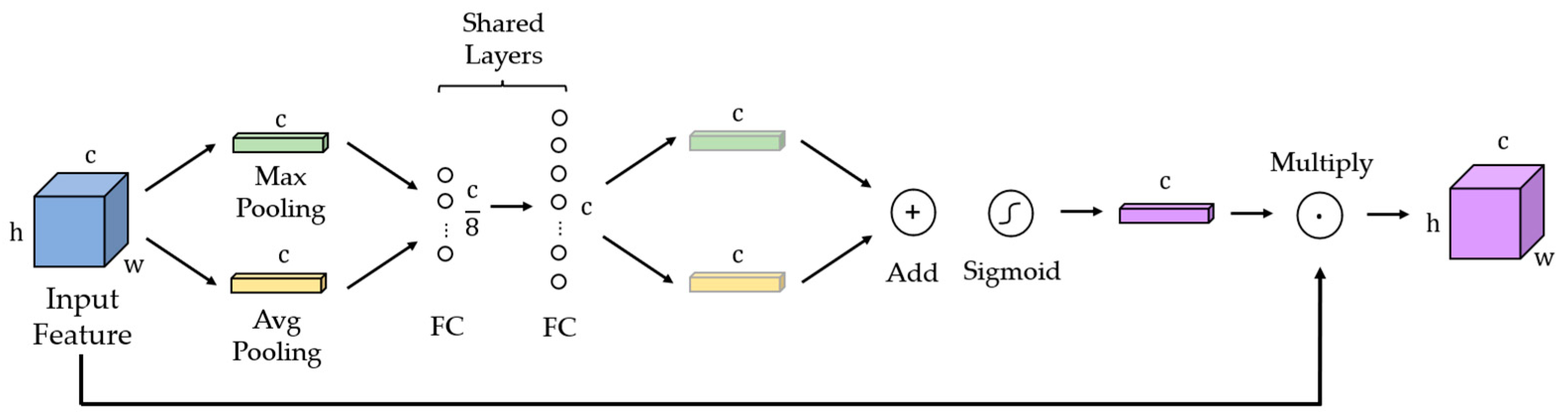

3.4. Channel Attention Mechanism (CAM)

3.5. Experimental Design and Criteria

4. Results

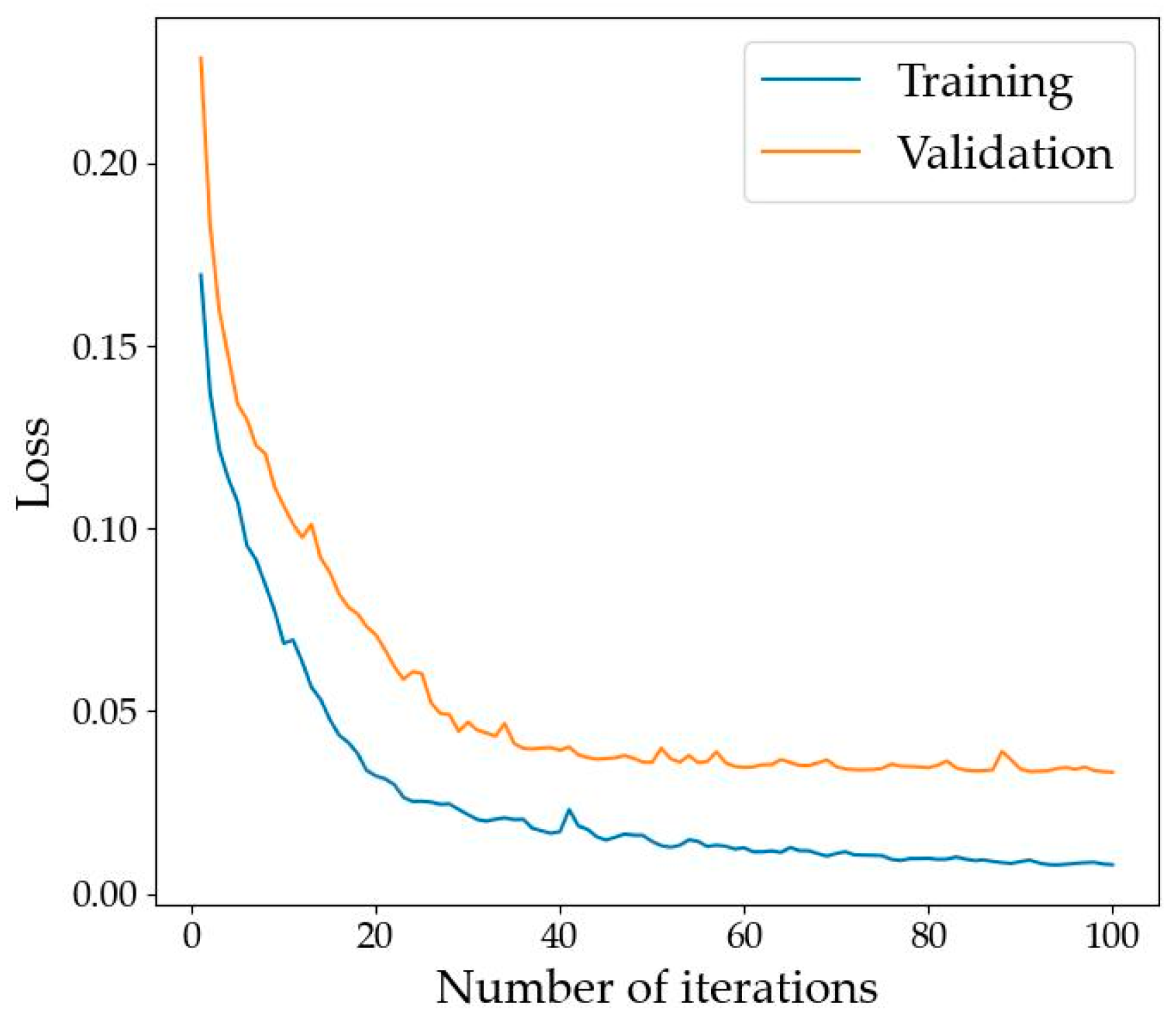

4.1. Network Training

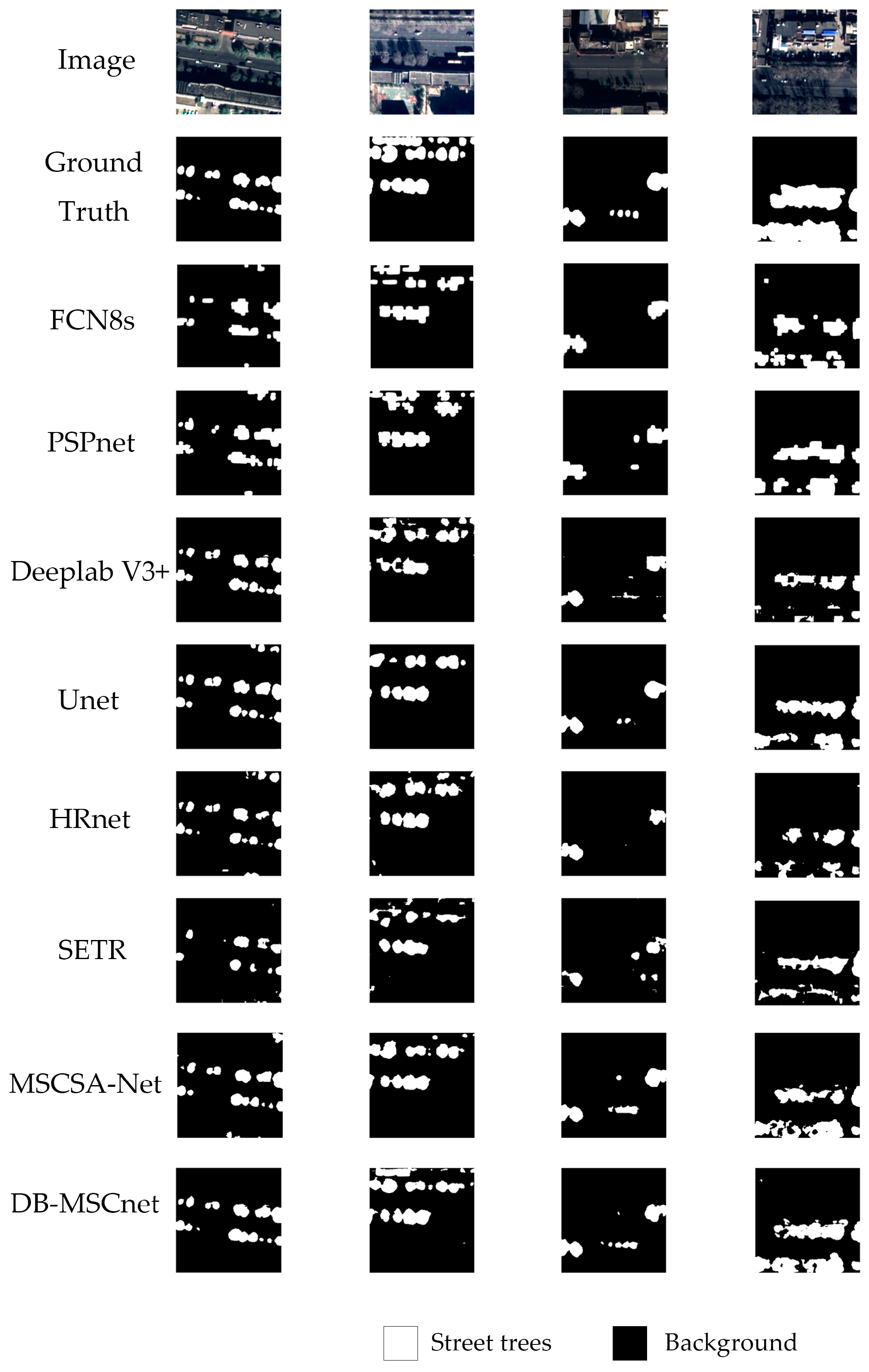

4.2. Street Tree Segmentation

4.3. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, Y.; Wu, Y.; Sun, Q.; Hu, C.; Liu, H.; Chen, C.; Xiao, P. Tree failure assessment of London plane (Platanus × acerifolia (Aiton) Willd.) street trees in Nanjing City. Forests 2023, 14, 1696.

2. Yadav, M.; Khan, P.; Singh, A.K.; Lohani, B. Generating GIS database of street trees using mobile lidar data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, IV-5, 233–237.

3. Shahtahmassebi, A.R.; Li, C.; Fan, Y.; Wu, Y.; Lin, Y.; Gan, M.; Wang, K.; Malik, A.; Blackburn, G.A. Remote sensing of urban green spaces: A review. Urban For. Urban Green. 2021, 57, 126946.

4. Branson, S.; Wegner, J.D.; Hall, D.; Lang, N.; Schindler, K.; Perona, P. From Google Maps to a fine-grained catalog of street trees. ISPRS J. Photogramm. Remote Sens. 2018, 135, 13–30.

5. Breuste, J.H. Investigations of the urban street tree forest of Mendoza, Argentina. Urban Ecosyst. 2013, 16, 801–818.

6. Zhang, X.; Boutat, D.; Liu, D. Applications of fractional operator in image processing and stability of control systems. Fractal Fract. 2023, 7, 359.

7. Hong, Z.H.; Xu, S.; Wang, J.; Xiao, P.F. Extraction of urban street trees from high resolution remote sensing image. In Proceedings of the 2009 Joint Urban Remote Sensing Event, Shanghai, China, 20–22 May 2009; Volume 1–3, pp. 1510–1514.

8. Zhao, H.H.; Xiao, P.F.; Feng, X.Z. Edge detection of street trees in high-resolution remote sensing images using spectrum features. In Proceedings of the MIPPR 2013: Automatic Target Recognition and Navigation, Wuhan, China, 26 October 2013.

9. Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

10. Zhang, J.X.; Yang, T.; Chai, T. Neural network control of underactuated surface vehicles with prescribed trajectory tracking performance. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14.

11. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. arXiv 2017, arXiv:1411.4038.

12. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin, Germany, 2015; pp. 234–241.

13. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

14. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5686–5696.

15. Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on convolutional neural networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

16. Zhang, S.; Zhang, C. Modified U-Net for plant diseased leaf image segmentation. Comput. Electron. Agric. 2023, 204, 107511.

17. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

18. Yan, H.; Zhang, J.X.; Zhang, X. Injected infrared and visible image fusion via L_1 decomposition model and guided filtering. IEEE Trans. Comput. Imaging 2022, 8, 162–173.

19. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected CRFs. arXiv 2014, arXiv:1412.7062.

20. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

21. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Computer Vision – ECCV 2018, Proceedings of the 15th European Conference, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 833–851.

22. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

23. Qin, Y.; Kamnitsas, K.; Ancha, S.; Nanavati, J.; Cottrell, G.; Criminisi, A.; Nori, A. Autofocus layer for semantic segmentation. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2018, Proceedings of the 21st International Conference, Granada, Spain, 16–20 September 2018; Springer: Cham, Switzerland, 2018; pp. 603–611.

24. Gu, F.; Burlutskiy, N.; Andersson, M.; Wilén, L.K. Multi-resolution networks for semantic segmentation in whole slide images. In Computational Pathology and Ophthalmic Medical Image Analysis, Proceedings of the First International Workshop, COMPAY 2018, and 5th International Workshop, OMIA 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 16–20 September 2018; Springer: Cham, Switzerland, 2018; pp. 11–18.

25. Tokunaga, H.; Teramoto, Y.; Yoshizawa, A.; Bise, R. Adaptive Weighting Multi-Field-Of-View CNN for Semantic Segmentation in Pathology. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12589–12598.

26. Xiao, H.; Li, L.; Liu, Q.; Zhu, X.; Zhang, Q. Transformers in medical image segmentation: A review. Biomed. Signal Process. Control 2023, 84, 104791.

27. Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.S.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6877–6886.

28. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

29. Ye, Z.; Wei, J.; Lin, Y.; Guo, Q.; Zhang, J.; Zhang, H.; Deng, H.; Yang, K. Extraction of olive crown based on UAV visible images and the U2-Net deep learning model. Remote Sens. 2022, 14, 1523.

30. Zhang, C.; Zhou, J.; Wang, H.; Tan, T.; Cui, M.; Huang, Z.; Wang, P.; Zhang, L. Multi-species individual tree segmentation and identification based on improved mask R-CNN and UAV imagery in mixed forests. Remote Sens. 2022, 14, 874.

31. Schürholz, D.; Castellanos-Galindo, G.A.; Casella, E.; Mejía-Rentería, J.C.; Chennu, A. Seeing the forest for the trees: Mapping cover and counting trees from aerial images of a mangrove forest using artificial intelligence. Remote Sens. 2023, 15, 3334.

32. Lv, L.; Li, X.; Mao, F.; Zhou, L.; Xuan, J.; Zhao, Y.; Yu, J.; Song, M.; Huang, L.; Du, H. A deep learning network for individual tree segmentation in UAV images with a coupled CSPNet and attention mechanism. Remote Sens. 2023, 15, 4420.

33. Zheng, Z.; Yu, S.; Jiang, S. A domain adaptation method for land use classification based on improved HR-Net. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4400911.

34. Liu, K.-H.; Lin, B.-Y. MSCSA-Net: Multi-scale channel spatial attention network for semantic segmentation of remote sensing images. Appl. Sci. 2023, 13, 9491.

35. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Computer Vision – ECCV 2018, Proceedings of the 15th European Conference, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

| Category | Training Set | Validation Set | Test Set | Total |
|---|---|---|---|---|
| Contain street trees | 7110 | 885 | 890 | 8885 |
| No street trees | 890 | 115 | 110 | 1115 |
| Total | 8000 | 1000 | 1000 | 10,000 |

| Model | OA (%) | mIoU (%) | F1 (%) | Kappa (%) |
|---|---|---|---|---|
| FCN-8s [11] | 95.05 | 63.81 | 71.13 | 42.69 |
| PSPNet [22] | 95.07 | 64.62 | 72.05 | 44.48 |
| Deeplab V3+ [21] | 95.81 | 69.21 | 75.98 | 52.28 |
| UNet [12] | 95.98 | 69.78 | 76.05 | 52.43 |
| HRNet [14] | 95.44 | 66.67 | 73.51 | 47.50 |
| SETR [27] | 94.19 | 65.84 | 73.09 | 46.78 |
| MSCSA-Net [34] | 95.87 | 69.82 | 76.24 | 53.16 |
| DB-MSC Net (Ours) | 96.14 | 70.95 | 77.66 | 55.63 |
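For readers unfamiliar with the four column headers in the comparison table above, the sketch below derives OA, mIoU, F1, and Cohen's kappa from a pixel-level confusion matrix using their standard definitions. The function name `segmentation_metrics` and the example counts are hypothetical, and averaging F1 over both classes is an assumption: this section does not state whether the reported F1 is macro-averaged or computed for the street tree class only.

```python
import numpy as np

def segmentation_metrics(conf):
    """Metrics from a confusion matrix where conf[i, j] counts pixels
    of true class i that were predicted as class j.
    Assumes every class occurs at least once (no zero denominators)."""
    conf = np.asarray(conf, dtype=np.float64)
    total = conf.sum()
    tp = np.diag(conf)               # correctly labelled pixels per class
    fp = conf.sum(axis=0) - tp       # predicted as the class, but wrong
    fn = conf.sum(axis=1) - tp       # belonging to the class, but missed
    oa = tp.sum() / total            # overall accuracy
    iou = tp / (tp + fp + fn)        # per-class intersection over union
    f1 = 2 * tp / (2 * tp + fp + fn) # per-class F1 score
    # Agreement expected by chance, from the row and column marginals.
    pe = (conf.sum(axis=0) * conf.sum(axis=1)).sum() / total**2
    kappa = (oa - pe) / (1 - pe)
    return {"OA": oa, "mIoU": iou.mean(), "F1": f1.mean(), "Kappa": kappa}

# Hypothetical counts for 100 pixels; class 0 = background, class 1 = street tree.
example = [[90, 5],
           [2, 3]]
m = segmentation_metrics(example)
```

In this made-up example the background class dominates, so OA stays high while kappa is much lower. The same pattern appears in the table, where OA values near 95% coexist with kappa values near 50%.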

| Model | OA (%) | mIoU (%) | F1 (%) | Kappa (%) |
|---|---|---|---|---|
| DB-UNet | 95.65 | 68.62 | 73.13 | 51.68 |
| DB-CAM-UNet | 95.87 | 68.81 | 74.05 | 52.47 |
| DB-MSC Net (Ours) | 96.14 | 70.95 | 77.66 | 55.63 |
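The step-by-step gains implied by the ablation table above can be worked out with simple arithmetic, as in the sketch below. The values are copied from the table. Reading the three rows as successive additions (a double-branch UNet, then CAM, then the MSC block) is an assumption based on the model names, and the helper `gain` is hypothetical.

```python
# Values copied from the ablation table: (OA, mIoU, F1, Kappa), all in %.
ablation = {
    "DB-UNet": (95.65, 68.62, 73.13, 51.68),
    "DB-CAM-UNet": (95.87, 68.81, 74.05, 52.47),
    "DB-MSC Net": (96.14, 70.95, 77.66, 55.63),
}
metrics = ("OA", "mIoU", "F1", "Kappa")

def gain(later, earlier):
    """Difference in percentage points between two rows, rounded to 2 decimals."""
    return {
        name: round(a - b, 2)
        for name, a, b in zip(metrics, ablation[later], ablation[earlier])
    }

cam_gain = gain("DB-CAM-UNet", "DB-UNet")      # step from row 1 to row 2
msc_gain = gain("DB-MSC Net", "DB-CAM-UNet")   # step from row 2 to row 3

for step, values in (("CAM step", cam_gain), ("MSC step", msc_gain)):
    print(step, values)
```

Under that reading, the second step accounts for the larger share of the improvement in mIoU, F1, and kappa.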

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, H.; Liu, S. Double-Branch Multi-Scale Contextual Network: A Model for Multi-Scale Street Tree Segmentation in High-Resolution Remote Sensing Images. Sensors 2024, 24, 1110. https://doi.org/10.3390/s24041110
