Use of Phase-Angle Model for Full-Field 3D Reconstruction under Efficient Local Calibration

Abstract

1. Introduction

2. Principle

2.1. Camera Imaging Model

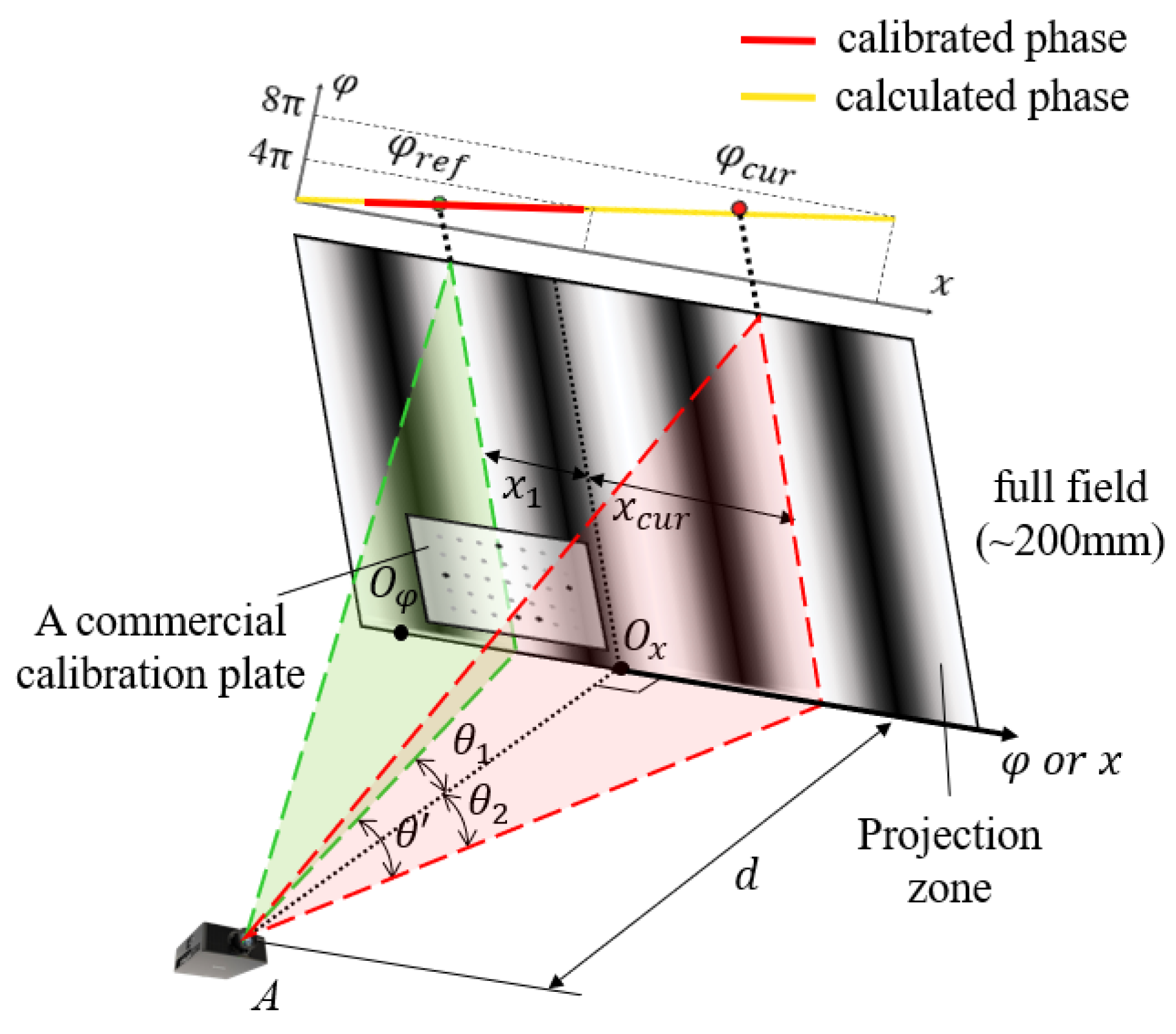

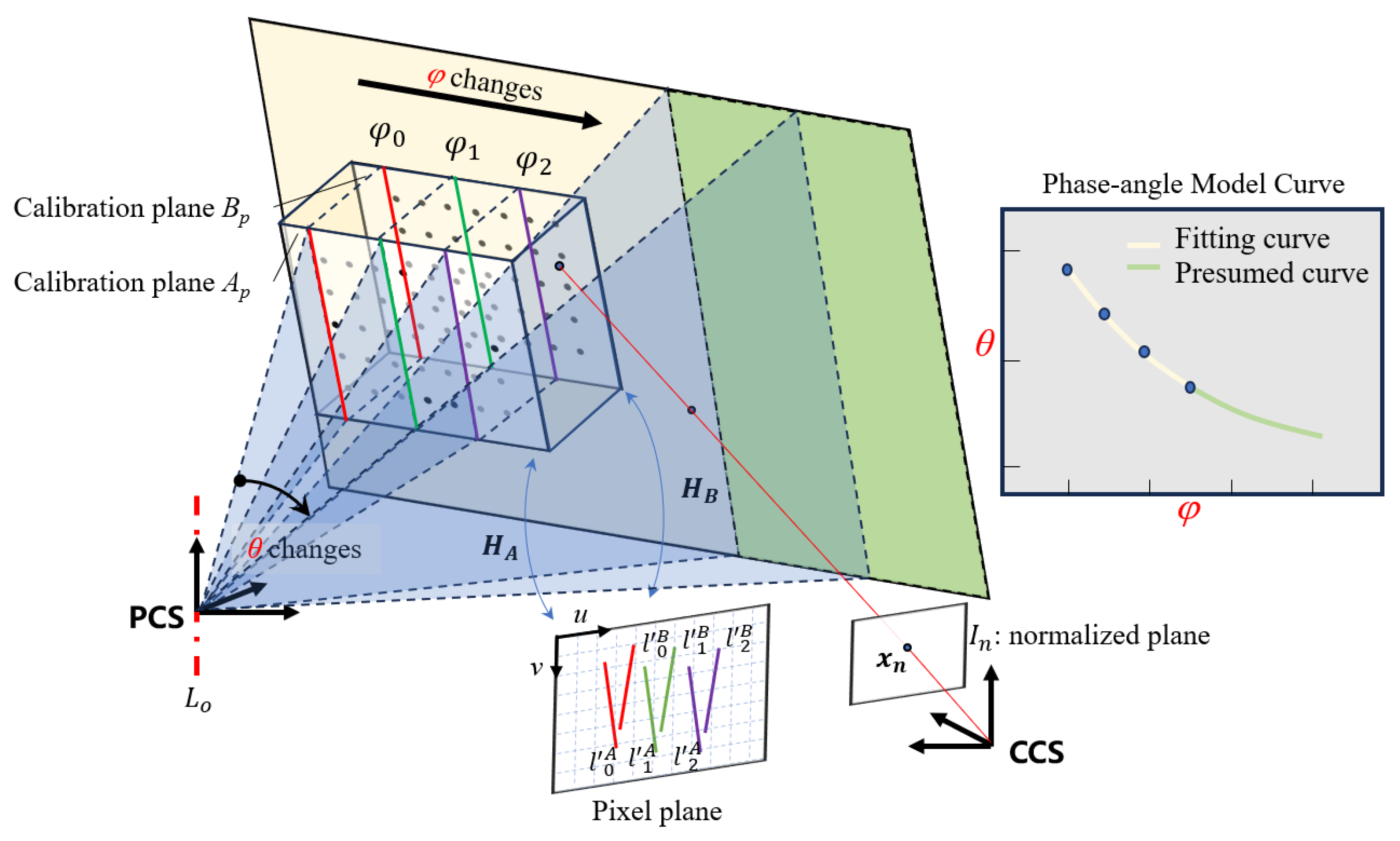

2.2. Basis of the Phase-Angle Model

3. System Calibration and 3D Reconstruction

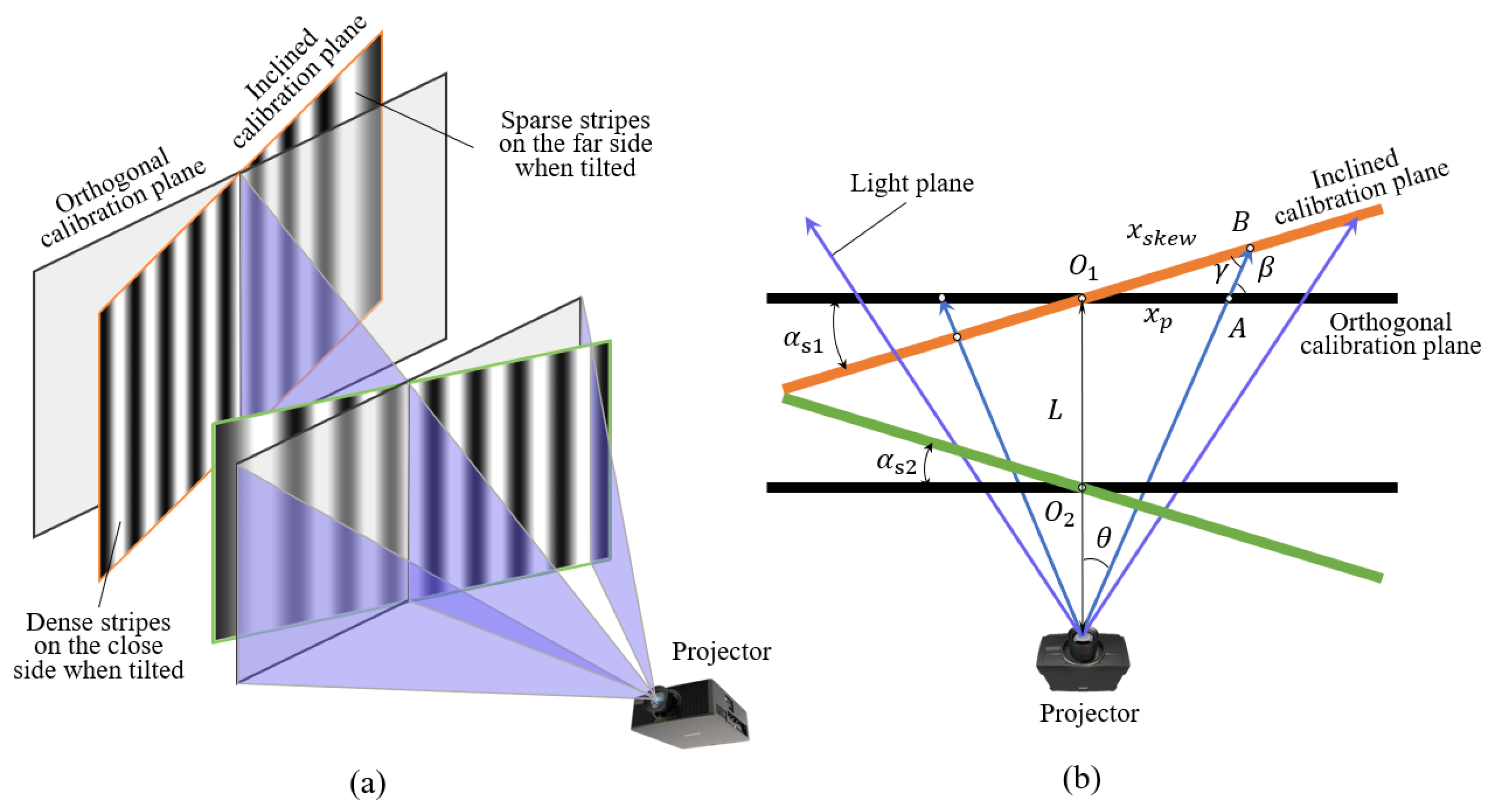

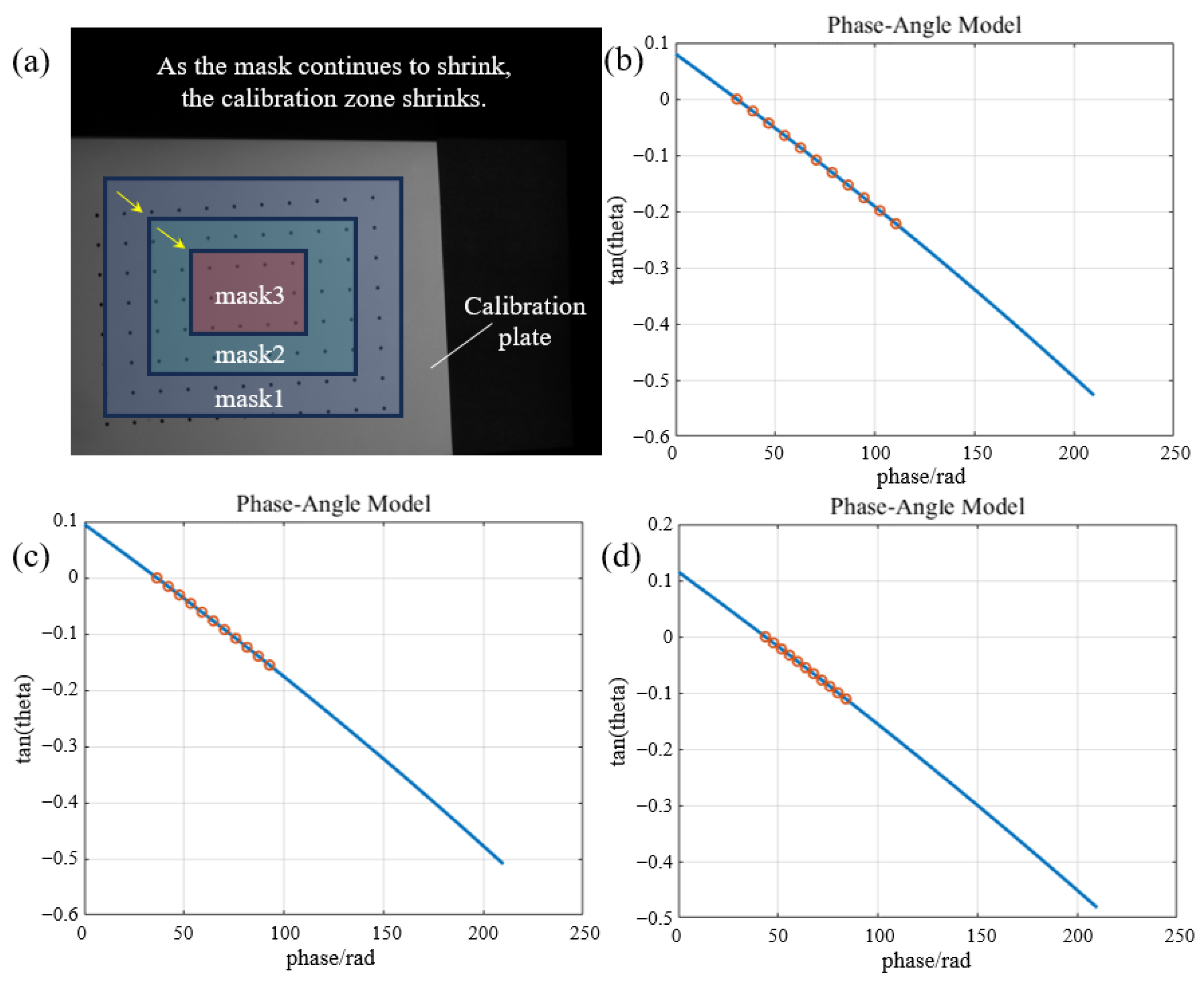

3.1. Local Calibration

3.2. Reconstruction for Global Scope

4. Experiment and Analysis

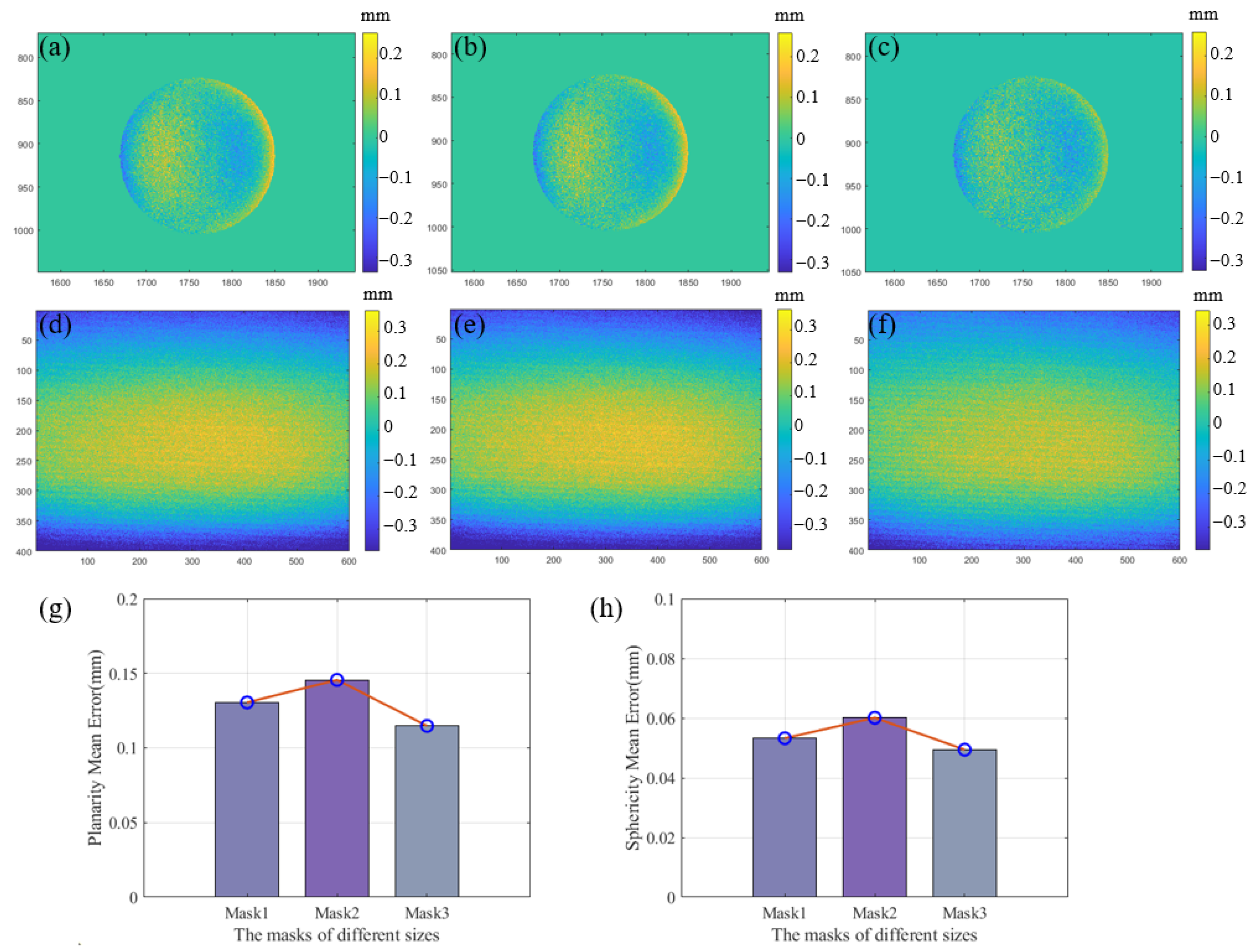

4.1. Calibration Results

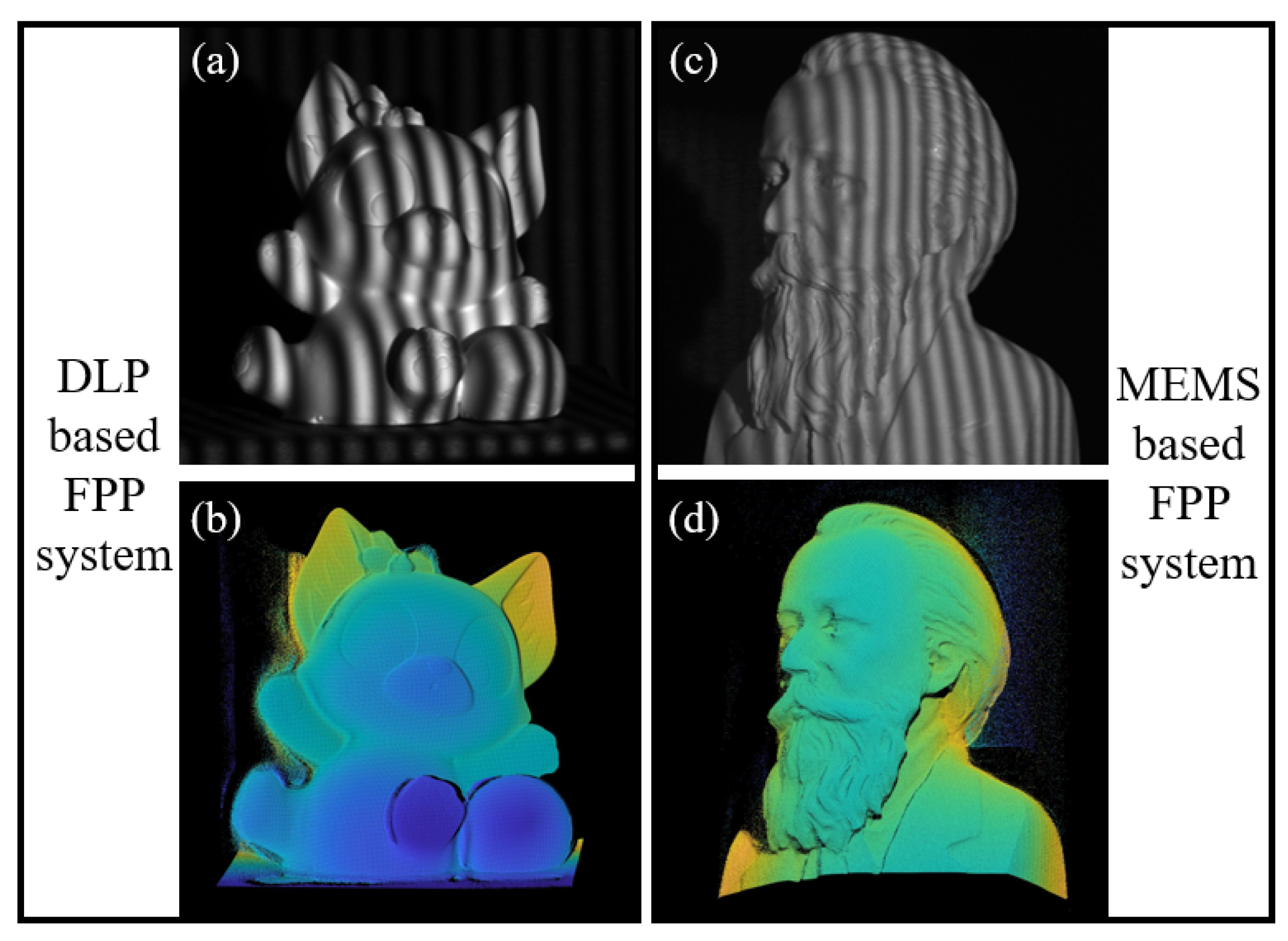

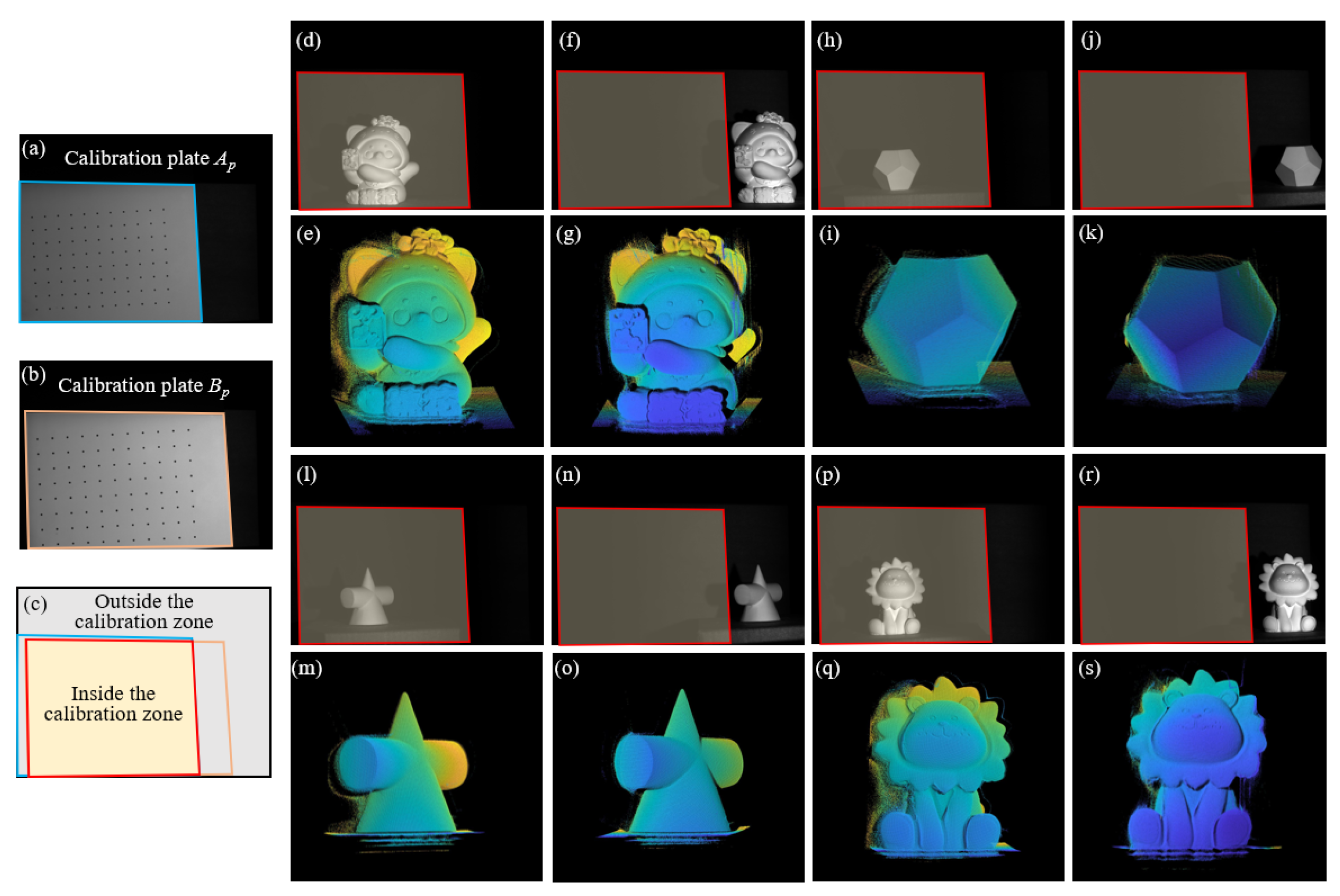

4.2. Reconstruction Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| System Calibration Parameters | Symbol | Value |
|---|---|---|
| Reference phase | | 30.6732 |
| Reference isophase plane | | 0.9093 |
| | | −0.0134 |
| | | 0.4160 |
| | | −139.7620 |
| Phase-angle model | a1 | 0.2022 |
| | a2 | −382.4269 |
| Rotational centerline | v1, v2, v3 | (0.0268, 0.9993, −0.0259) |
| | p1, p2, p3 | (97.6335, −184.3396, 116.7032) |
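
The calibration values above can be cross-checked against each other. The following is a minimal sketch, not the authors' procedure. It assumes that the four reference isophase plane values are the coefficients (A, B, C, D) of a plane Ax + By + Cz + D = 0, that (v1, v2, v3) is the direction of the rotational centerline and (p1, p2, p3) a point on it, and that all coordinates share one frame and length unit. The variable names are hypothetical.

```python
import math

# Values copied from the calibration table; their interpretation is an assumption.
plane = (0.9093, -0.0134, 0.4160, -139.7620)   # assumed (A, B, C, D) with Ax + By + Cz + D = 0
direction = (0.0268, 0.9993, -0.0259)          # (v1, v2, v3), assumed centerline direction
point = (97.6335, -184.3396, 116.7032)         # (p1, p2, p3), assumed point on the centerline

normal = plane[:3]

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

# Both the plane normal and the centerline direction should be close to unit length.
normal_norm = math.sqrt(dot(normal, normal))
direction_norm = math.sqrt(dot(direction, direction))

# If the centerline lies in the reference plane, its direction is perpendicular
# to the normal and the point has a near-zero signed distance to the plane.
cos_normal_direction = dot(normal, direction) / (normal_norm * direction_norm)
signed_distance = (dot(normal, point) + plane[3]) / normal_norm

print(f"|normal| = {normal_norm:.5f}, |direction| = {direction_norm:.5f}")
print(f"cos(normal, direction) = {cos_normal_direction:.5f}")
print(f"signed distance of point to plane = {signed_distance:.4f}")
```

Under these assumptions, both vectors come out close to unit length and the centerline lies nearly in the reference plane, which is what one would expect if the isophase planes rotate about that line.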

| Planarity or Sphericity Errors | Plane, Inside Calibration Zone | Plane, Outside Calibration Zone | Sphere, Inside Calibration Zone | Sphere, Outside Calibration Zone |
|---|---|---|---|---|
| Position 1 | 0.0484 mm | 0.1298 mm | 0.0432 mm | 0.0509 mm |
| Position 2 | 0.0512 mm | 0.1291 mm | 0.0403 mm | 0.0535 mm |
| Position 3 | 0.0411 mm | 0.1325 mm | 0.0388 mm | 0.0553 mm |
| Mean | 0.0469 mm | 0.1305 mm | 0.0408 mm | 0.0532 mm |
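
The Mean row can be reproduced from the three position rows. The snippet below is a small arithmetic check using only the tabulated values. The dictionary keys are hypothetical labels, and the outside-to-inside ratio is an illustrative summary added here, not a figure reported in the table.

```python
from statistics import mean

# Planarity and sphericity errors in mm, copied from the three position rows.
errors_mm = {
    "plane_inside": [0.0484, 0.0512, 0.0411],
    "plane_outside": [0.1298, 0.1291, 0.1325],
    "sphere_inside": [0.0432, 0.0403, 0.0388],
    "sphere_outside": [0.0509, 0.0535, 0.0553],
}

# Round to four decimals to match the precision used in the table.
means_mm = {name: round(mean(values), 4) for name, values in errors_mm.items()}
for name, value in means_mm.items():
    print(f"{name}: {value:.4f} mm")

# Ratio of mean error outside the calibration zone to mean error inside it.
ratio_plane = means_mm["plane_outside"] / means_mm["plane_inside"]
ratio_sphere = means_mm["sphere_outside"] / means_mm["sphere_inside"]
print(f"plane outside/inside: {ratio_plane:.2f}")
print(f"sphere outside/inside: {ratio_sphere:.2f}")
```

The computed means agree with the Mean row. The ratios suggest that the plane error grows by a factor of roughly 2.8 outside the calibration zone, while the sphere error grows by roughly 1.3.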

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, F.; Ma, R.; Li, X. Use of Phase-Angle Model for Full-Field 3D Reconstruction under Efficient Local Calibration. Sensors 2024, 24, 2581. https://doi.org/10.3390/s24082581
