Use of a Time-of-Flight Camera With an Omek Beckon™ Framework to Analyze, Evaluate and Correct in Real Time the Verticality of Multiple Sclerosis Patients during Exercise

Abstract

1. Introduction

- Primary symptoms are a direct result of demyelination, which hinders the transmission of electrical signals to the muscles (preventing them from moving properly) and to body organs (preventing them from performing their normal functions). Many of these symptoms can be managed effectively with medication, rehabilitation and other medical treatments [13]. The most important symptoms include spasticity, weakness, tremor, imbalance, numbness and pain. Spasticity refers to stiff or rigid muscles, also described as unusual “tightness” or increased muscle tone, together with stronger or exaggerated reflexes (for example, the knee-jerk reflex); it can interfere with walking, movement or speech.

- Secondary symptoms result from the primary symptoms. For example, paralysis (a primary symptom) can lead to pressure sores, and urinary incontinence can cause recurrent urinary tract infections.

- Tertiary symptoms are the social, vocational and psychological complications of the primary and secondary symptoms, most importantly social, professional, marital and psychological problems. Depression, for example, is a common problem among people with MS.

- Institution-Based Rehabilitation (IBR). In an institution-based approach, all or almost all rehabilitation services are provided by the institution. These services are organized according to what the institution has available, even when this no longer corresponds to real needs.

- Institutional rehabilitation based on community outreach. With outreach, control remains institution-based. More people can be “reached”, but there are limits imposed by the distance from the institution and by whether the needs of disabled people match what the institution offers.

- Community-Based Rehabilitation (CBR). This approach covers all situations in which rehabilitation resources are available within the community. CBR originates in the community itself. It is based on the needs of the person and seeks to solve problems rather than to apply techniques or exercises from the health professions.

- To re-educate and maintain all available voluntary control.

- To maintain the full range of motion of joints and soft tissues, and to teach the patient and/or relatives appropriate stretching procedures to prevent contractures.

- To integrate treatment techniques into everyday life by relating them to appropriate daily activities, so that all improvements obtained in this way are maintained.

- To analyze and evaluate, in real time, the position of patients during the workout session in order to avoid incorrect practices that may cause more severe muscle imbalances and worsen their health.

2. Related Work

- The Home Care Activity Desk (HCAD) [26] project was sponsored by the EC from 2003 to 2005. It developed a tele-rehabilitation system that enables patients affected by MS, stroke (S) or traumatic brain injury (TBI) to perform upper-limb rehabilitation treatment at home. An activity desk was designed specifically to let patients perform exercises at home, to monitor their performance and to transmit the monitored data to a hospital. Patients could also interact with the therapist through a teleconference system.

- eRehab (ubiquitous multidevice personalised telerehabilitation platform) [27]. This project aims to develop and validate the eRehab platform, a tele-rehabilitation platform based on an architecture for the massive deployment of personalized health services. The platform is intended to support therapy in different environments, such as hospitals, homes and on the go, using the device, user interface and content that best suit the needs and preferences of each user.

- AXARM (Extensible Remote Assistance and Monitoring Tool for Tele-Rehabilitation) [28]. This project is an enhanced, videoconference-oriented system that allows specialized professionals at a rehabilitation center, such as psychologists, neurologists and rehabilitators, to carry out remote rehabilitation sessions while patients remain at home, using broadband communications and Internet services.

- HELLODOC (Healthcare sErvice Linking teLerehabilitatiOn to Disabled people and Clinicians) [29]. The main objective of the HELLODOC project was to evaluate the EU market for home care services for MS, TBI and stroke patients through a home-based rehabilitation platform [30]. Remote monitoring and control are possible with two webcams and a teleconferencing service. The main parameters of the exercises (i.e., duration, success, number of attempts) are sent to the hospital electronically and on video tape.

3. Methods

3.1. Participants

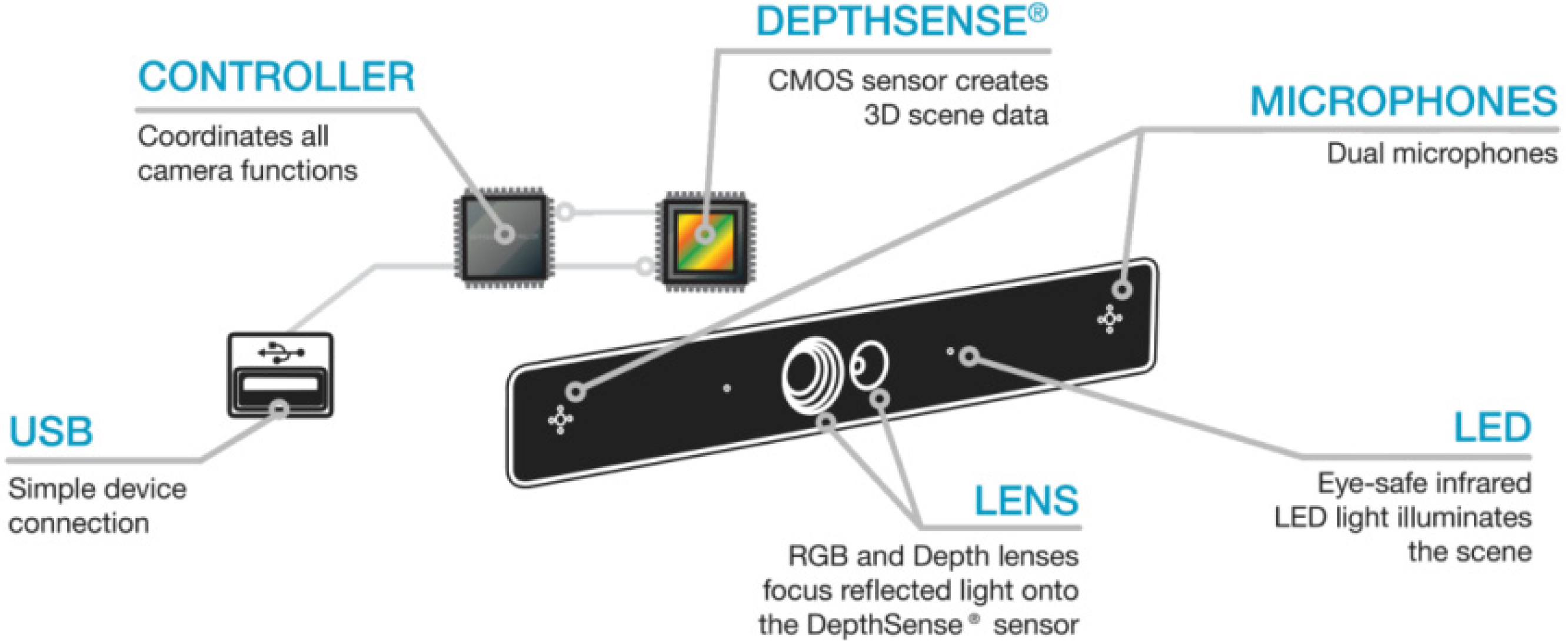

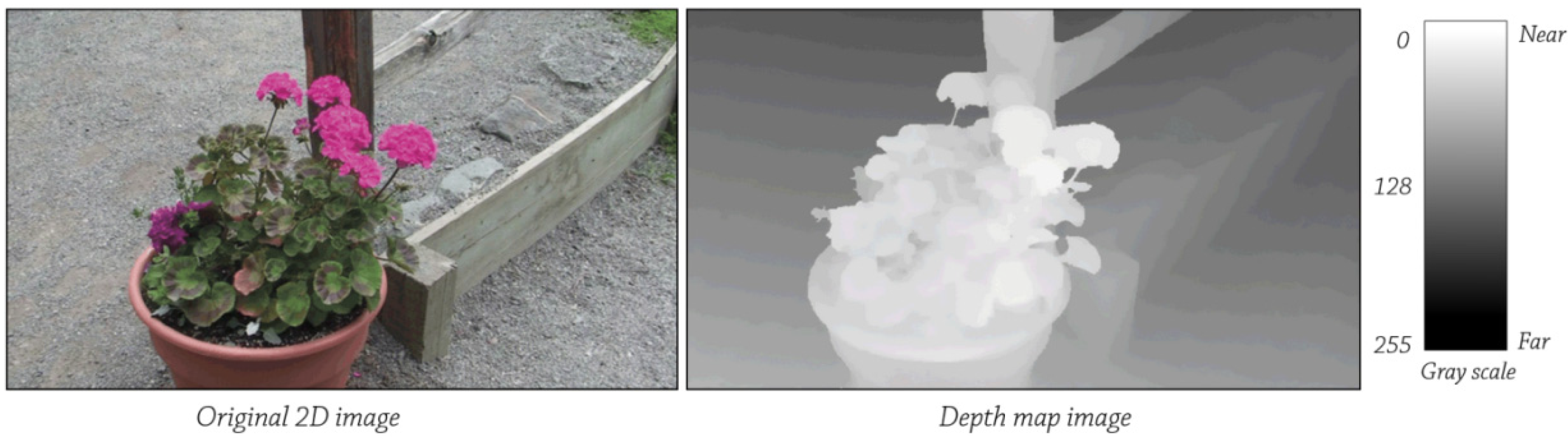

3.2. Materials

| Item | Value |
|---|---|
| Depth field of view | 57.3° × 42° × 73.8° (H × V × D) |
| Depth resolution | QQVGA |
| Frame rate | 25–60 fps |
| Nominal operating range | 15 cm–1 m & 1.5 m–4.5 m |
| Depth noise | <3 cm @ 3 m |
| Illumination type | LED |
| RGB resolution | VGA |
| RGB field of view | 50° × 40° × 60° (H × V × D) |
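To give a feel for what the depth resolution in this table means for joint localization, the back-of-the-envelope sketch below estimates the width covered by one depth pixel at a given distance. It assumes a simple pinhole model without lens distortion and assumes that QQVGA corresponds to 160 × 120 pixels; the function name `pixel_footprint_m` is hypothetical.

```python
import math


def pixel_footprint_m(distance_m: float, fov_deg: float, pixels: int) -> float:
    """Approximate width (in metres) covered by one pixel at distance_m.

    Pinhole model: the full field of view spans 2 * d * tan(FOV / 2) at
    distance d, and that span is divided evenly among `pixels` pixels.
    Lens distortion is ignored.
    """
    if distance_m <= 0 or not 0 < fov_deg < 180 or pixels <= 0:
        raise ValueError("invalid geometry")
    span = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return span / pixels


# Horizontal depth FOV from the table (57.3 degrees), assuming 160 pixels across.
for d in (1.5, 3.0, 4.5):
    print(f"{d:.1f} m -> {100 * pixel_footprint_m(d, 57.3, 160):.1f} cm per pixel")
```

Under these assumptions a single depth pixel covers roughly 2 cm at 3 m, the same order of magnitude as the depth noise listed in the table.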

| SDK | Company | Price | Works with DS311 camera? |
|---|---|---|---|
| Kinect SDK 1.7 | Microsoft | Free | No |
| OpenNI SDK 2.2.0.30 | PrimeSense | Free | No |
| NiTE 2.2.0.10 | PrimeSense | Free | No |
| IISU Pro 3.6 | Softkinetic | 1,200 € | Yes |
| Beckon SDK 3.0 | Omek | Free (Not available *) | Yes |
| Intel Perceptual Computing R5 | Intel | Free | No |

- Tracking seated people. The Kinect™ sensor offers two tracking modes, default and seated [37]. Seated mode tracks only ten upper-body joints (head, shoulder center, shoulders, elbows, wrists and hands). Our system needs a spine joint to obtain the trunk’s verticality angle. In version 2.0 of the OpenNI® framework, the upper-body tracking functionality was removed because of errors and limitations [38]. In contrast, the Beckon™ SDK lets the user select individual joints to track, i.e., the upper body plus the spine/hip joints.

3.3. Procedure

| # | Question | TD | | | TA |
|---|---|---|---|---|---|
| 1 | Before using the tool, I think that my health problems were worse than other people in the same situation | 1 | 2 | 3 | 4 |
| 2 | I think that my health has improved by using this system in comparison to other systems | 1 | 2 | 3 | 4 |
| 3 | When I use the system for the time set by the professional, I think that my health improves | 1 | 2 | 3 | 4 |
| 4 | I agree with the frequency of use of the system established by the professional | 1 | 2 | 3 | 4 |
| 5 | I think that I would like to use the system frequently, because it helps me to improve my quality of life | 1 | 2 | 3 | 4 |
| 6 | I believe that my health has improved compared to people who have not used the system | 1 | 2 | 3 | 4 |
| 7 | After using the system, I think that I am more independent (dressing, toileting, etc.) | 1 | 2 | 3 | 4 |
| 8 | Have you felt fatigued after each session using the system? | 1 | 2 | 3 | 4 |
| 9 | I had cramps and/or muscle stiffness after each session using the system | 1 | 2 | 3 | 4 |
| 10 | I felt pain or discomfort after each session using the system | 1 | 2 | 3 | 4 |

| # | Question | TD | | | | TA |
|---|---|---|---|---|---|---|
| 1 | The system includes demonstrations that allowed me to observe and practice complex processes new to me | 1 | 2 | 3 | 4 | 5 |
| 2 | I think that the system interface clearly displays information, is easy to understand and consistent | 1 | 2 | 3 | 4 | 5 |
| 3 | I felt comfortable and confident using the system | 1 | 2 | 3 | 4 | 5 |
| 4 | I think that the system modules were consistent and do their job properly | 1 | 2 | 3 | 4 | 5 |
| 5 | I knew what I was doing at all times | 1 | 2 | 3 | 4 | 5 |
| 6 | I was able to perform all actions of the system | 1 | 2 | 3 | 4 | 5 |
| 7 | I was able to read every option of the system | 1 | 2 | 3 | 4 | 5 |
| 8 | I knew why I was doing the processes at all times | 1 | 2 | 3 | 4 | 5 |
| 9 | I found the various functions in this system to be well integrated | 1 | 2 | 3 | 4 | 5 |
| 10 | I needed to learn a lot of things before I could get going with this system | 1 | 2 | 3 | 4 | 5 |

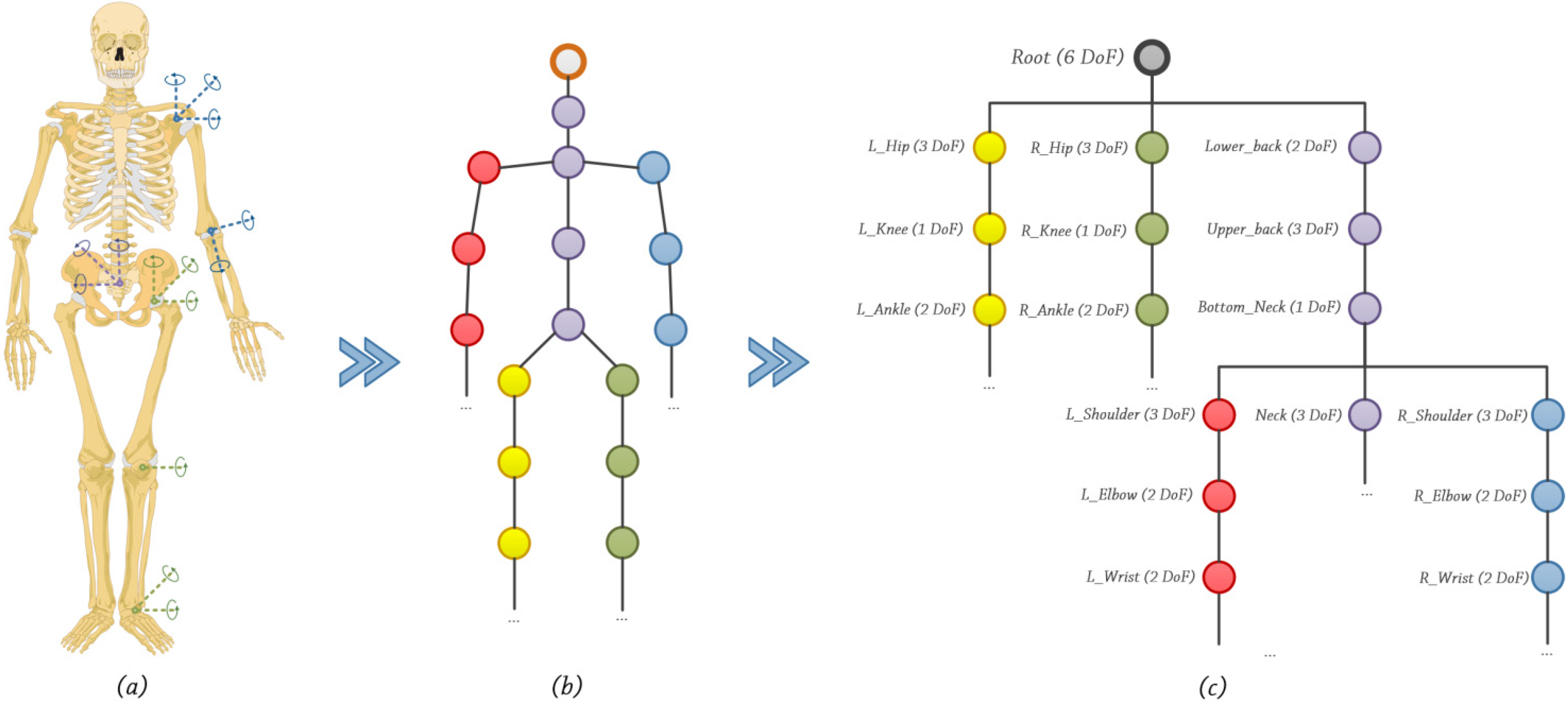

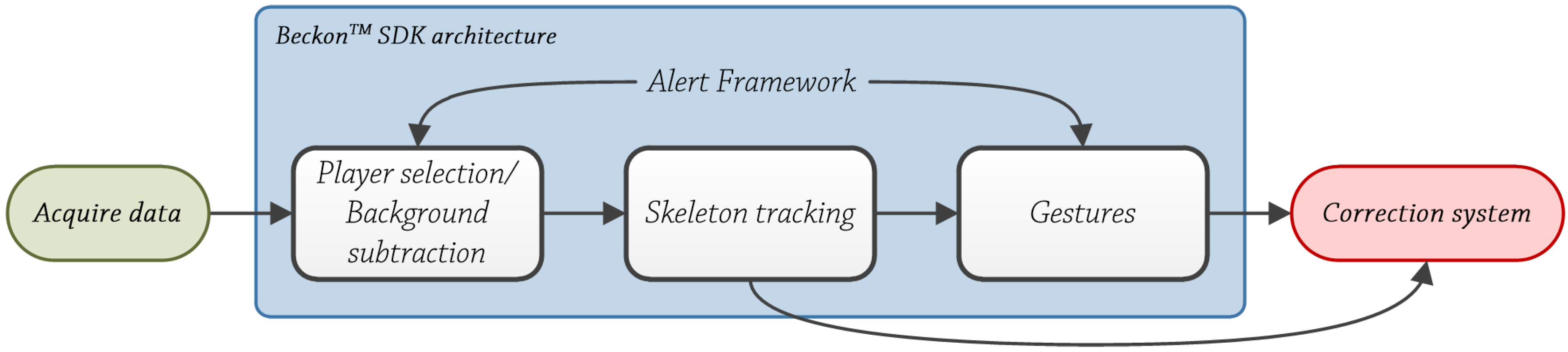

4. Beckon™ SDK

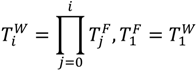

One coordinate system is defined relative to the previous bone in the skeletal structure, and another is defined in the World Coordinate System (WCS). Under these conditions, the following equation, Equation (1), is valid:

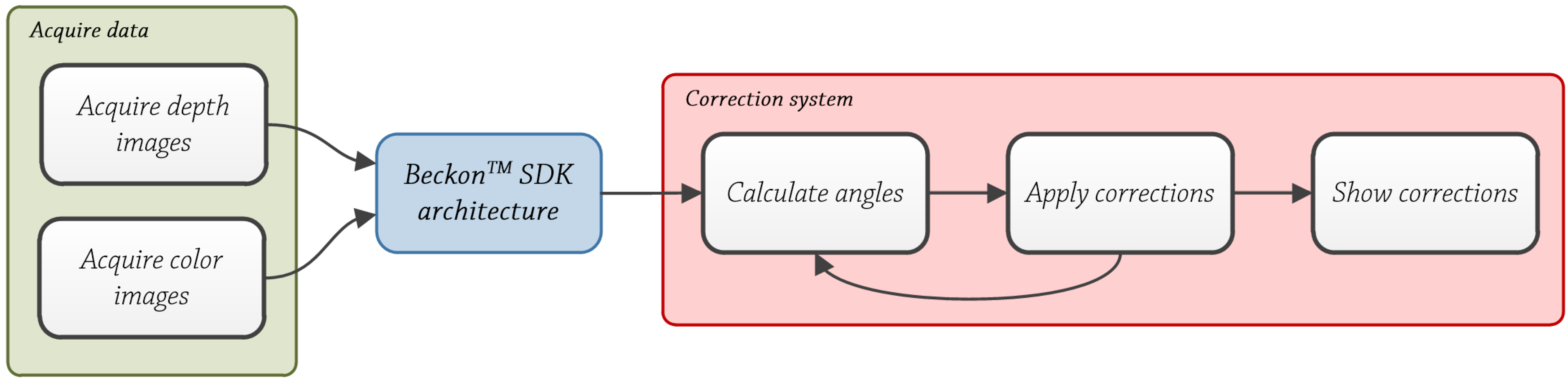

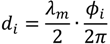

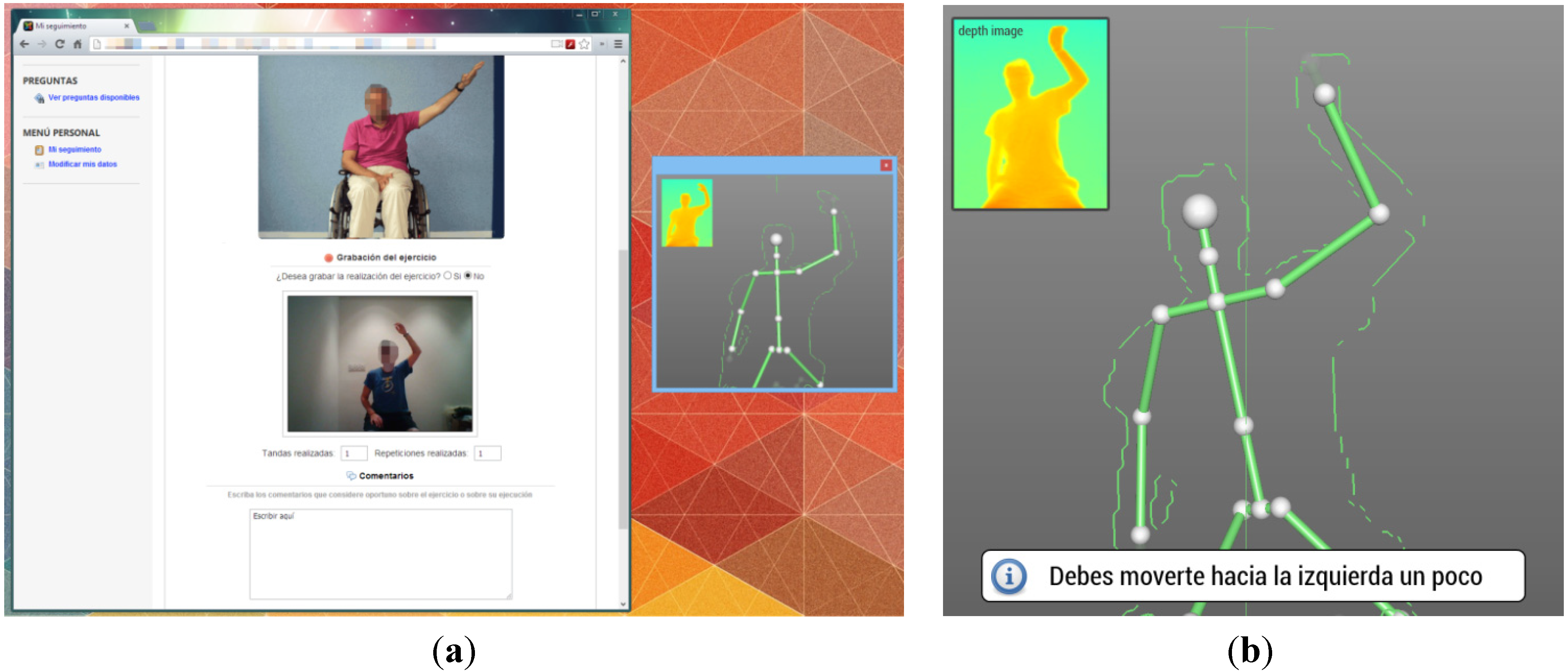
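Equation (1) is not reproduced in this text. As a hedged illustration of the general idea behind parent-relative bone frames, the sketch below assumes a common forward-kinematics convention: a bone's orientation in the WCS is its parent's WCS orientation multiplied by the bone's own local (parent-relative) rotation. The bone names and the helpers `rot_z`, `matmul` and `world_rotations` are hypothetical and are not part of the Beckon™ SDK.

```python
import math
from typing import Dict, List, Optional

Matrix = List[List[float]]  # 3x3 rotation matrix, row-major


def rot_z(theta: float) -> Matrix:
    """Rotation by theta radians about the Z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product a @ b of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def world_rotations(
    local: Dict[str, Matrix], parent: Dict[str, Optional[str]], order: List[str]
) -> Dict[str, Matrix]:
    """Compose parent-relative rotations into WCS rotations.

    Assumed convention: world[b] = world[parent[b]] @ local[b].
    `order` must list every bone after its parent, starting with the root
    (whose parent is None).
    """
    world: Dict[str, Matrix] = {}
    for bone in order:
        p = parent[bone]
        world[bone] = local[bone] if p is None else matmul(world[p], local[bone])
    return world


# Hypothetical two-bone chain: the neck is rotated 0.2 rad relative to the torso,
# which is itself rotated 0.1 rad in the WCS, so the neck's WCS rotation is 0.3 rad.
w = world_rotations(
    local={"torso": rot_z(0.1), "neck": rot_z(0.2)},
    parent={"torso": None, "neck": "torso"},
    order=["torso", "neck"],
)
print(round(math.degrees(math.atan2(w["neck"][1][0], w["neck"][0][0])), 6))
```

Rotations about a single axis commute, so this toy chain simply adds angles; with rotations about different axes the multiplication order matters, which is why each bone is composed after its parent.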

5. System Design

Correction System

Acquire Data

Beckon™ SDK Architecture

- Discriminative or model-free. These methods do not use an explicit body model. In [59], Wren et al. use a bottom-up approach to track body parts, and in [60], Brand converts 2D sequences into 3D poses. There are two main groups: example-based models [61], which store a set of samples along with their corresponding pose descriptors; and learning-based models [62], which learn to infer the pose from image observations using training samples.

- Generative or model-based. These methods employ a known model. In [63], Sigal et al. track people by representing the body as a collection of loosely connected parts in an undirected graphical model. Merad et al. [64] use skeleton graphs to count people. There are two main groups [58]: indirect models, which use a model as a reference to analyze the data; and direct models, which use a 3D model of the human body along with kinematic data (direct or inverse) to analyze the obtained data.
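As a toy illustration of the example-based (discriminative) family described above, the sketch below stores pairs of image descriptors and poses, then estimates a new pose by averaging the poses of the nearest stored descriptors. It is not how the Beckon™ SDK works internally, which this text does not describe; the descriptor and pose vectors, the `Example` type and `estimate_pose` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Example:
    descriptor: Tuple[float, ...]  # hypothetical image feature vector
    pose: Tuple[float, ...]        # hypothetical pose parameters (e.g. joint angles)


def estimate_pose(query: Sequence[float], database: List[Example], k: int = 1) -> Tuple[float, ...]:
    """Example-based estimate: average the poses of the k stored examples whose
    descriptors are closest (Euclidean distance) to the query descriptor."""
    if not database or k < 1:
        raise ValueError("need a non-empty database and k >= 1")
    nearest = sorted(database, key=lambda ex: math.dist(query, ex.descriptor))[:k]
    dims = len(nearest[0].pose)
    return tuple(sum(ex.pose[i] for ex in nearest) / len(nearest) for i in range(dims))


db = [
    Example(descriptor=(0.0, 0.0), pose=(10.0,)),
    Example(descriptor=(1.0, 1.0), pose=(20.0,)),
    Example(descriptor=(5.0, 5.0), pose=(90.0,)),
]
print(estimate_pose((0.9, 0.9), db))       # nearest single example
print(estimate_pose((0.5, 0.5), db, k=2))  # average of the two nearest
```

A learning-based model would instead fit a mapping from descriptors to poses during training, so it would not need to keep the whole sample set at run time.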

Correction System

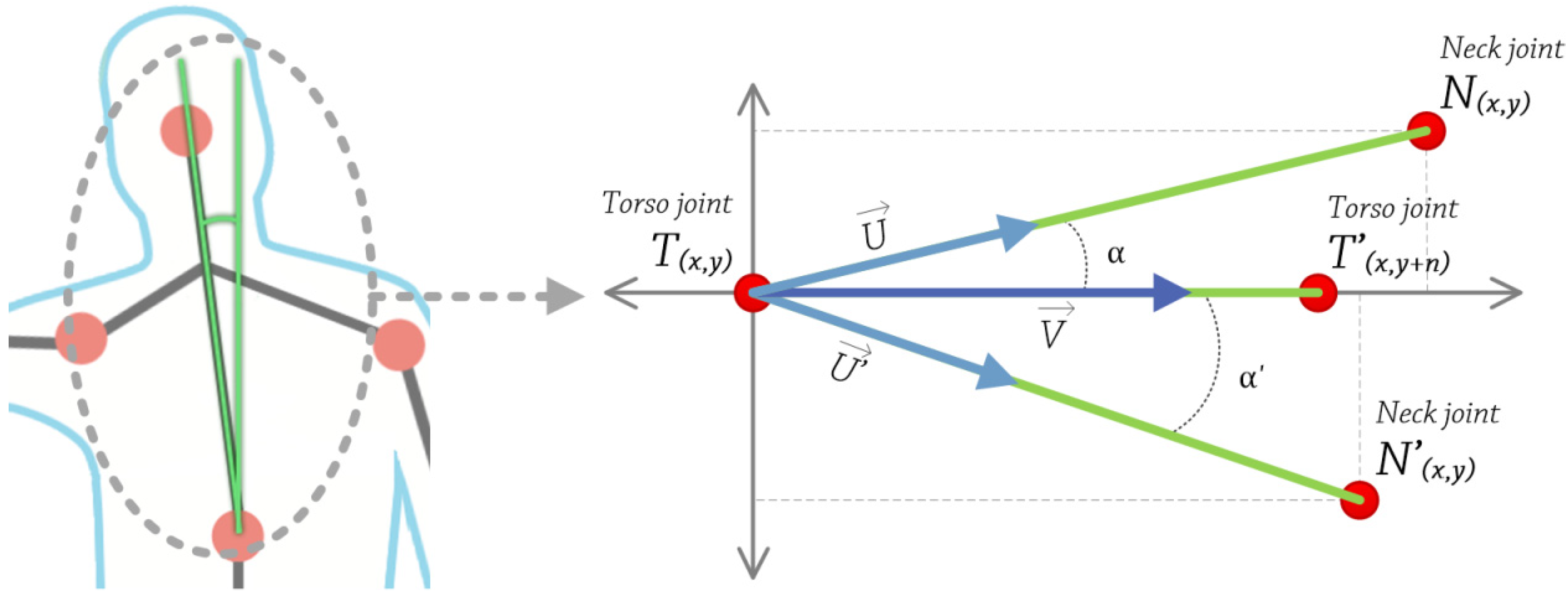

- The sagittal plane is a vertical plane which passes from the front of the body to the back, dividing it into two halves, left and right.

- The frontal plane is a vertical plane that passes from one side of the body to the other, dividing the body into anterior and posterior halves.

- The transverse plane is a horizontal plane that divides the body into upper and lower halves.

- Frontal axis (X-axis). It runs from left to right and it is perpendicular to the vertical axis.

- Vertical axis (Y-axis). In standing posture, it is positioned perpendicular to the supporting surface.

- Sagittal axis (Z-axis). It runs from the rear surface of the body to the front surface, and it is perpendicular to X and Y axes.
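Under the axis conventions above (X frontal, Y vertical, Z sagittal), a trunk lean can be split into a frontal-plane component (lateral lean, in the XY plane) and a sagittal-plane component (forward or backward lean, in the YZ plane). The sketch below does this for a torso-to-neck segment given 3D joint positions. It assumes the coordinates are already expressed in these body axes (a raw camera frame would first need aligning), it is an illustration rather than the authors' implementation, and the function `plane_tilts_deg` is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) along the frontal, vertical and sagittal axes


def plane_tilts_deg(torso: Vec3, neck: Vec3) -> Tuple[float, float]:
    """Signed tilt of the torso->neck segment away from the vertical (Y) axis.

    Returns (frontal, sagittal) in degrees:
      frontal  - lean toward +X, measured in the frontal (XY) plane
      sagittal - lean toward +Z (forward), measured in the sagittal (YZ) plane
    """
    dx, dy, dz = (n - t for n, t in zip(neck, torso))
    frontal = math.degrees(math.atan2(dx, dy))
    sagittal = math.degrees(math.atan2(dz, dy))
    return frontal, sagittal


print(plane_tilts_deg((0.0, 1.0, 2.5), (0.0, 1.5, 2.5)))  # upright: no lean in either plane
print(plane_tilts_deg((0.0, 1.0, 2.5), (0.1, 1.5, 2.6)))  # leaning toward +X and +Z
```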

- Obtain the 2D coordinates, in pixels, of the torso joint and of a point some pixels above the torso. Construct a vector V that passes through these two points. This vector is calculated at the very beginning and then used as the reference.

- Obtain the 2D coordinates, in pixels, of the neck and torso joints. Construct a vector U that passes through these two points.

- Calculate the angle between vectors U and V using the following equation, Equation (3):
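Equation (3) itself is not reproduced in this text. A minimal sketch, assuming it is the standard angle between two vectors, $\theta = \arccos\left(\frac{U \cdot V}{\lVert U \rVert \, \lVert V \rVert}\right)$, is shown below. It also assumes that the reference point lies directly above the torso joint (same x coordinate) and, as is usual for image coordinates, that y grows downward, so "above" means a smaller y. The 50-pixel offset and all function names are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # (x, y) in pixels; image y grows downward


def vector(a: Vec2, b: Vec2) -> Vec2:
    """Vector from point a to point b."""
    return (b[0] - a[0], b[1] - a[1])


def angle_between_deg(u: Vec2, v: Vec2) -> float:
    """Unsigned angle between u and v in degrees: arccos(u.v / (|u| |v|))."""
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        raise ValueError("zero-length vector")
    cos_theta = (u[0] * v[0] + u[1] * v[1]) / norm
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_theta))


def reference_vector(torso: Vec2, offset_px: float = 50.0) -> Vec2:
    """V: from the torso joint to a point offset_px pixels directly above it (computed once at start)."""
    return vector(torso, (torso[0], torso[1] - offset_px))


def trunk_angle_deg(torso: Vec2, neck: Vec2, v_ref: Vec2) -> float:
    """Angle between U (torso -> neck) and the stored reference V."""
    return angle_between_deg(vector(torso, neck), v_ref)


v = reference_vector((320.0, 240.0))
print(trunk_angle_deg((320.0, 240.0), (320.0, 180.0), v))  # upright
print(trunk_angle_deg((320.0, 240.0), (380.0, 180.0), v))  # leaning to one side
```

Because arccos returns an unsigned angle in [0°, 180°], this sketch reports how far the trunk deviates from the reference but not to which side; applying `math.atan2` to the cross and dot products of the two vectors would be one way to obtain a signed angle.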

6. Results

6.1. Experiment Example

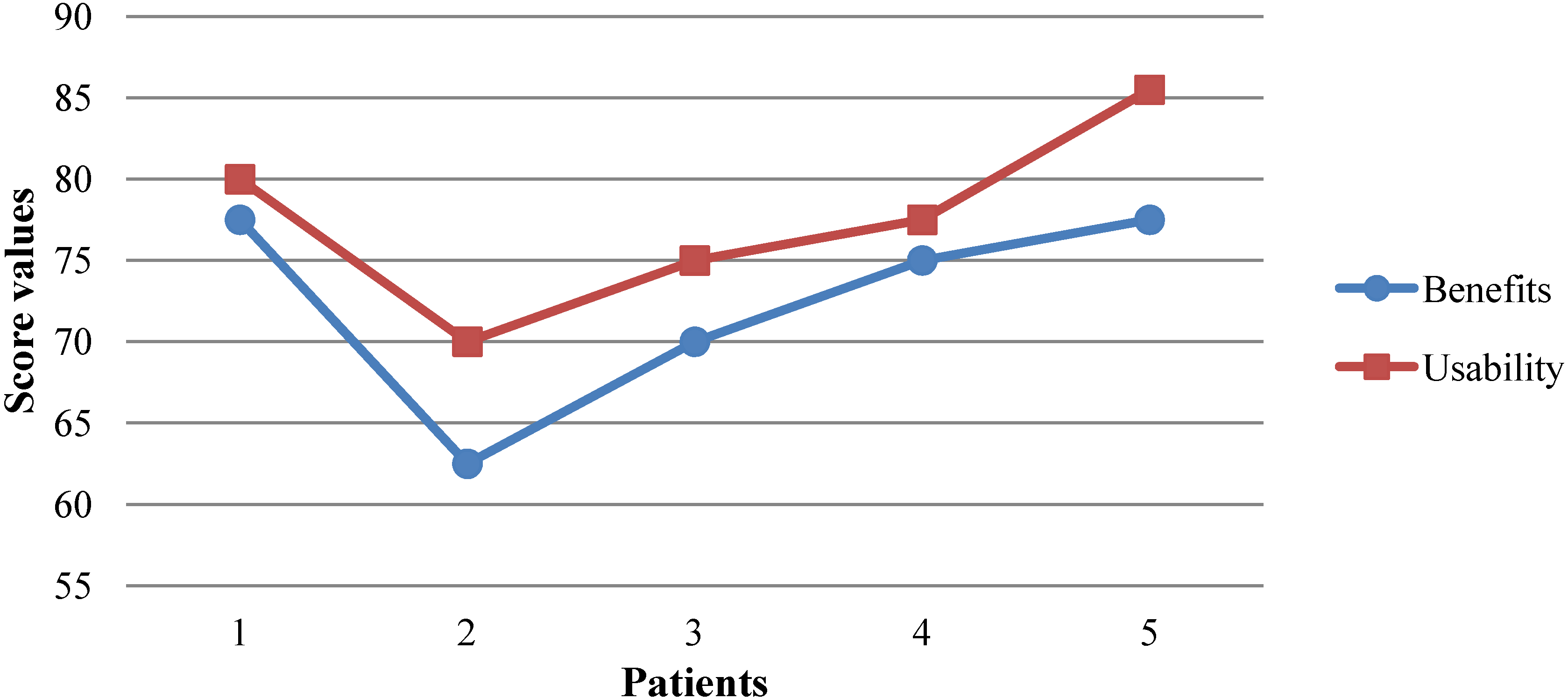

6.2. Assessment Analysis

7. Discussion

8. Conclusions

Conflicts of Interest

References

- Hu, W.; Lucchinetti, C.F. The pathological spectrum of CNS inflammatory demyelinating diseases. Semin. Immunopathol. 2009, 31, 439–453.

- Poser, C.M.; Paty, D.W.; Scheinberg, L.; McDonald, W.I.; Davis, F.A.; Ebers, G.C.; Johnson, K.P.; Sibley, W.A.; Silberberg, D.H.; Tourtellotte, W.W. New diagnostic criteria for multiple sclerosis: Guidelines for research protocols. Ann. Neurol. 1983, 13, 227–231.

- Amato, M.P.; Ponziani, G.; Rossi, F.; Liedl, C.L.; Stefanile, C.; Rossi, L. Quality of life in multiple sclerosis: The impact of depression, fatigue and disability. Mult. Scler. J. 2001, 7, 340–344.

- Ghezzi, A.; Deplano, V.; Faroni, J.; Grasso, M.G.; Liguori, M.; Marrosu, G.; Pozzilli, C.; Simone, L.I.; Zaffaroni, M. Multiple sclerosis in childhood: Clinical features of 149 cases. Mult. Scler. J. 1997, 3, 43–46.

- Bakshi, R. Fatigue associated with multiple sclerosis: Diagnosis, impact and management. Mult. Scler. J. 2003, 9, 219–227.

- Weinshenker, B.G.; Bass, B.; Rice, G.P.A.; Noseworthy, J.; Carriere, W.; Baskerville, J.; Ebers, G.C. The natural history of multiple sclerosis: A geographically based study. I. Clinical course and disability. Brain 1989, 112, 133–146.

- Columbus, F.H. Treatment and Management of Multiple Sclerosis; Nova Science Publishers: Hauppauge, NY, USA, 2005.

- Fiske, J.; Griffiths, J.; Thompson, S. Multiple sclerosis and oral care. Dent. Update 2002, 29, 273–283.

- Stroud, N.; Minahan, C.; Sabapathy, S. The perceived benefits and barriers to exercise participation in persons with multiple sclerosis. Disabil. Rehabil. 2009, 31, 2216–2222.

- Bakshi, R.; Shaikh, Z.A.; Miletich, R.S.; Czarnecki, D.; Dmochowski, J.; Henschel, K.; Janardhan, V.; Dubey, N.; Kinkel, P.R. Fatigue in multiple sclerosis and its relationship to depression and neurologic disability. Mult. Scler. J. 2000, 6, 181–185.

- Schapiro, R.T. Symptom management in multiple sclerosis. Ann. Neurol. 1994, 36, S123–S129.

- Polman, C.H.; Reingold, S.C.; Banwell, B.; Clanet, M.; Cohen, J.A.; Filippi, M.; Fujihara, K.; Havrdova, E.; Hutchinson, M.; Kappos, L.; et al. Diagnostic criteria for multiple sclerosis: 2010 revisions to the McDonald criteria. Ann. Neurol. 2011, 69, 292–302.

- Brodkey, M.B.; Ben-Zacharia, A.B.; Reardon, J.D. Living Well with Multiple Sclerosis. Am. J. Nurs. 2011, 111, 40–48.

- Grandisson, M.; Hébert, M.; Thibeault, R. A systematic review on how to conduct evaluations in community-based rehabilitation. Disabil. Rehabil. 2013, 2013, 1–11.

- Olaogun, M.O.B.; Nyante, G.G.G.; Ajediran, A.I. Overcoming the Barriers for Participation by the Disabled: An appraisal and global view of community-based rehabilitation in community development. Afr. J. Physiother. Rehabil. Sci. 2009, 1, 24–29.

- Chan, W.M.; Hjelm, N.M. The role of telenursing in the provision of geriatric outreach services to residential homes in Hong Kong. J. Telemed. Telecare 2001, 7, 38–46.

- Azpiroz, J.; Barrios, F.A.; Carrillo, M.A.; Carrillo, R.; Cerrato, A.; Hernandez, J.; Leder, R.S.; Rodriguez, A.O.; Salgado, P. Game Motivated and Constraint Induced Therapy in Late Stroke with fMRI Studies Pre and Post Therapy. In Proceedings of the IEEE-EMBS 2005, 27th Annual International Conference of the Engineering in Medicine and Biology Society, Shanghai, China, 1–4 September 2005.

- Camurri, A.; Mazzarino, B.; Volpe, G.; Morasso, P.; Priano, F.; Re, C. Application of multimedia techniques in the physical rehabilitation of Parkinson’s patients. J. Vis. Comput. Animat. 2003, 14, 269–278.

- Weiss, S.M.; Indurkhya, N.; Zhang, T.; Damerau, F.J. Text Mining: Predictive Methods for Analyzing Unstructured Information; Springer: Berlin, Germany, 2005.

- Yellowlees, P.M.; Holloway, K.M.; Parish, M.B. Therapy in virtual environments: Clinical and ethical issues. Telemed. e-Health 2012, 18, 558–564.

- Bart, O.; Agam, T.; Weiss, P.L.; Kizony, R. Using video-capture virtual reality for children with acquired brain injury. Disabil. Rehabil. 2011, 33, 1579–1586.

- Betker, A.L.; Szturm, T.; Moussavi, Z.K.; Nett, C. Video game–based exercises for balance rehabilitation: A single-subject design. Arch. Phys. Med. Rehabil. 2006, 87, 1141–1149.

- Hailey, D.; Roine, R.; Ohinmaa, A.; Dennett, L. Evidence of benefit from telerehabilitation in routine care: A systematic review. J. Telemed. Telecare 2011, 17, 281–287.

- Hailey, D.; Ohinmaa, A.; Roine, R. Study quality and evidence of benefit in recent assessments of telemedicine. J. Telemed. Telecare 2004, 10, 318–324.

- Whitworth, E.; Lewis, J.A.; Boian, R.; Tremaine, M.; Burdea, G.; Deutsch, J.E. Formative Evaluation of a Virtual Reality Telerehabilitation System for the Lower Extremity. In Proceedings of the 2nd International Workshop on Virtual Rehabilitation (IWVR2003), Piscataway, NJ, USA, 21–22 September 2003.

- Zampolini, M.; Baratta, S.; Schifini, F.; Spitali, C.; Todeschini, E.; Bernabeu, M.; Tormos, J.M.; Opisso, E.; Magni, R.; Magnino, F.; et al. Upper Limb Telerehabilitation with Home Care and Activity Desk (HCAD) System. In Proceedings of the Virtual Rehabilitation, Venice, Italy, 27–29 September 2007.

- Epelde, G.; Carrasco, E.; Gomez-Fraga, I.; Vivanco, K.; Jimenez, J.M.; Rueda, O.; Bizkarguenaga, A.; Sevilla, D.; Sanchez, P. ERehab: Ubiquitous Multidevice Personalised Telerehabilitation Platform. In Proceedings of the AAL Forum 2012, Eindhoven, The Netherlands, 24–27 September 2012.

- Bueno, A.; Marzo, J.L.; Vallejo, X. AXARM: An Extensible Remote Assistance and Monitoring Tool for ND Telerehabilitation. In Electronic Healthcare; Springer: Berlin, Germany, 2009; Volume 1, pp. 106–113.

- Mikołajewska, E.; Mikołajewski, D. Neurological telerehabilitation: Current and potential future applications. J. Health Sci. 2011, 1, 7–14.

- Rogante, M.; Bernabeu, M.; Giacomozzi, C.; Hermens, H.; Huijgen, B.; Ilsbroukx, S.; Macellari, V. ICT for Home-Based Service to Maintain the Upper Limb Function in Ageing. In Proceedings of the 6th International Conference of the International Society for Gerontechnology (ISG’08), Pisa, Italy, 4–6 June 2008.

- Kolb, A.; Barth, E.; Koch, R.; Larsen, R. Time-of-flight sensors in computer graphics. Proc. Eurographics (State Art Rep.) 2009, 2009, 119–134.

- Gokturk, S.B.; Yalcin, H.; Bamji, C. A Time-of-Flight Depth Sensor: System Description, Issues and Solutions. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshop, CVPRW’04, Washington, DC, USA, 27 May–2 June 2004.

- Omek Interactive. Available online: http://www.linkedin.com/company/omek-interactive (accessed on 28 October 2013).

- SoftKinetic, Inc. Available online: http://www.linkedin.com/company/softkinetic-inc (accessed on 28 October 2013).

- 3D Time of Flight Analog Output DepthSense® Image Sensors (OPT8130 and OPT8140). Available online: http://www.planar.ru/project//documents/44001_45000/44005/slab063.pdf (accessed on 29 October 2013).

- Intel Buys Israeli Startup Omek Interactive for Close to $50 Million. Available online: http://www.haaretz.com/business/.premium-1.536056 (accessed on 28 October 2013).

- Tracking Modes (Seated and Default). Available online: http://msdn.microsoft.com/en-us/library/hh973077.aspx (accessed on 28 October 2013).

- Does OpenNI2/NiTE2 Support “Upper Body Only” Skeleton Tracking? Available online: http://community.openni.org/openni/topics/does_openni2_nite2_support_upper_body_only_skeleton_tracking (accessed on 28 October 2013).

- Brooke, J. SUS: A quick and dirty usability scale. In Usability Evaluation in Industry; Taylor & Francis: London, UK, 1996; pp. 189–194.

- Uçan, O.N.; Öğüşlü, C.E. A non-linear technique for the enhancement of extremely non-uniform lighting images. J. Aeronaut. Sp. Technol. 2007, 3, 37–47.

- Danielsson, P.-E. Euclidean distance mapping. Comput. Graph. Image Process. 1980, 14, 227–248.

- A summary of image segmentation techniques. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.138.6560&rep=rep1&type=pdf (accessed on 28 October 2013).

- Joshi, S.V.; Shire, A.N. A review of enhanced algorithm for color image segmentation. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2013, 3, 435–441.

- Plagemann, C.; Ganapathi, V.; Koller, D.; Thrun, S. Real-time Identification and Localization of Body Parts from Depth Images. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010.

- Schwarz, L.A.; Mkhitaryan, A.; Mateus, D.; Navab, N. Estimating Human 3D Pose from Time-of-Flight Images Based on Geodesic Distances and Optical Flow. In Proceedings of the IEEE International Conference on Automatic Face & Gesture Recognition and Workshops (FG 2011), Santa Barbara, CA, USA, 21–25 March 2011.

- Zhang, J.; Siddiqi, K.; Macrini, D.; Shokoufandeh, A.; Dickinson, S. Retrieving Articulated 3-D Models Using Medial Surfaces and Their Graph Spectra. In Energy Minimization Methods in Computer Vision and Pattern Recognition; Springer: Berlin, Germany, 2005.

- Thome, N.; Merad, D.; Miguet, S. Human Body Part Labeling and Tracking Using Graph Matching Theory. In Proceedings of the IEEE International Conference on Video and Signal Based Surveillance, AVSS’06, Sydney, Australia, 22–24 November 2006.

- Witkin, A. Interpolation between Model Poses Using Inverse Kinematics. U.S. Patent 8,358,311, 22 January 2013.

- Schwarz, L.A.; Mkhitaryan, A.; Mateus, D.; Navab, N. Human skeleton tracking from depth data using geodesic distances and optical flow. Image Vis. Comput. 2012, 30, 217–226.

- El Gamal, A.; Eltoukhy, H. CMOS image sensors. IEEE Circuits Devices Mag. 2005, 21, 6–20.

- Carlson, B.S. Comparison of Modern CCD and CMOS Image Sensor Technologies and Systems for Low Resolution Imaging. In Proceedings of the 1st International Conference on Sensors, IEEE, Orlando, FL, USA, 12–14 June 2002.

- Lee, S.B.; Choi, O.; Horaud, R. Time of Flight Cameras: Principles, Methods, and Applications; Springer: Berlin, Germany, 2013.

- Omek Beckon™ Development Suite. Available online: http://www.omekinteractive.com/content/Datasheet-Omek-BeckonDevelopmentSuite.pdf (accessed on 28 October 2013).

- Crabb, R.; Tracey, C.; Puranik, A.; Davis, J. Real-Time Foreground Segmentation via Range and Color Imaging. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, CVPRW’08, Anchorage, AK, USA, 23–28 June 2008.

- Schiller, I.; Koch, R. Improved Video Segmentation by Adaptive Combination of Depth Keying and Mixture-of-Gaussians. In Image Analysis; Springer: Berlin/Heidelberg, Germany, 2011.

- Teichman, A.; Thrun, S. Learning to Segment and Track in RGBD. In Algorithmic Foundations of Robotics X; Springer: Berlin/Heidelberg, Germany, 2013.

- Salas, J.; Tomasi, C. People Detection Using Color and Depth Images. In Pattern Recognition; Springer: Berlin, Germany, 2011.

- Moeslund, T.B.; Hilton, A.; Krüger, V. A survey of advances in vision-based human motion capture and analysis. Comput. Vis. Image Underst. 2006, 104, 90–126.

- Wren, C.R.; Azarbayejani, A.; Darrell, T.; Pentland, A.P. Pfinder: Real-time tracking of the human body. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 780–785.

- Brand, M. Shadow Puppetry. In Proceedings of the 7th IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999.

- Grauman, K.; Shakhnarovich, G.; Darrell, T. Inferring 3D Structure with a Statistical Image-Based Shape Model. In Proceedings of the 9th IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003.

- Sminchisescu, C.; Kanaujia, A.; Li, Z.; Metaxas, D. Discriminative Density Propagation for 3D Human Motion Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR, San Diego, CA, USA, 20–26 June 2005.

- Sigal, L.; Isard, M.; Haussecker, H.; Black, M.J. Loose-limbed people: Estimating 3D human pose and motion using non-parametric belief propagation. Int. J. Comput. Vis. 2012, 98, 15–48.

- Merad, D.; Aziz, K.E.; Thome, N. Fast People Counting Using Head Detection from Skeleton Graph. In Proceedings of the 7th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Boston, MA, USA, 29 August–1 September 2010.

- Miranda, L.; Vieira, T.; Martinez, D.; Lewiner, T.; Vieira, A.W.; Campos, M.F.M. Real-Time Gesture Recognition from Depth Data through Key Poses Learning and Decision Forests. In Proceedings of the 25th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Ouro Preto, Brazil, 22–25 August 2012.

- Boulic, R.; Varona, J.; Unzueta, L.; Peinado, M.; Suescun, A.; Perales, F. Evaluation of on-line analytic and numeric inverse kinematics approaches driven by partial vision input. Virtual Real. 2006, 10, 48–61.

- Mitra, S.; Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2007, 37, 311–324.

- Bodack, M.P.; Tunkel, R.S.; Marini, S.G.; Nagler, W. Spinal accessory nerve palsy as a cause of pain after whiplash injury: Case report. J. Pain Symptom Manag. 1998, 15, 321–328.

- Talvitie, U.; Reunanen, M. Interaction between physiotherapists and patients in stroke treatment. Physiother. 2002, 88, 77–88.

- Kauppi, M.; Leppänen, L.; Heikkilä, S.; Lahtinen, T.; Kautiainen, H. Active conservative treatment of atlantoaxial subluxation in rheumatoid arthritis. Rheumatol. 1998, 37, 417–420.

- Nilsson, B.-M.; Söderlund, A. Head posture in patients with whiplash-associated disorders and the measurement method’s reliability: A comparison to healthy subjects. Adv. Physiother. 2005, 7, 13–19.

- de Haart, M.; Geurts, A.C.; Huidekoper, S.C.; Fasotti, L.; van Limbeek, J. Recovery of standing balance in postacute stroke patients: A rehabilitation cohort study. Arch. Phys. Med. Rehabil. 2004, 85, 886–895.

- Zhang, L.; Hsieh, J.-C.; Wang, J. A Kinect-Based Golf Swing Classification System Using HMM and Neuro-Fuzzy. In Proceedings of the 2012 International Conference on Computer Science and Information Processing (CSIP), Xi’an, China, 24–26 August 2012.

- French, B.J.; Ferguson, K.R. Testing and Training System for Assessing Movement and Agility Skills without a Confining Field. U.S. Patent 6,098,458, 8 August 2000.

- Tsuji, T.; Sumida, Y.; Kaneko, M.; Kawamura, S. A virtual sports system for skill training. J. Robotics Mechatron. 2000, 13, 168–175.

- Roston, G.P.; Peurach, T. A Whole Body Kinesthetic Display Device for Virtual Reality Applications. In Proceedings of the 1997 IEEE International Conference on Robotics and Automation, Albuquerque, NM, USA, 20–25 April 1997.

- Barra, J.; Marquer, A.; Joassin, R.; Reymond, C.; Metge, L.; Chauvineau, V.; Pérennou, D. Humans use internal models to construct and update a sense of verticality. Brain 2010, 133, 3552–3563.

- Verheyden, G.; Nieuwboer, A.; van de Winckel, A.; De Weerdt, W. Clinical tools to measure trunk performance after stroke: A systematic review of the literature. Clin. Rehabil. 2007, 21, 387–394.

- Mouchnino, L.; Aurenty, R.; Massion, J.; Pedotti, A. Coordination between equilibrium and head-trunk orientation during leg movement: A new strategy build up by training. J. Neurophysiol. 1992, 67, 1587–1598.

- Fortin, C.; Feldman, D.E.; Cheriet, F.; Labelle, H. Clinical methods for quantifying body segment posture: A literature review. Disabil. Rehabil. 2011, 33, 367–383.

- Hall, S.J. Basic Biomechanics, 5th ed.; Lavoisier: Cachan, France, 2007.

- Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 2009, 4, 114–123.

- Fragoso, Y.D.; Santana, D.L.B.; Pinto, R.C. The positive effects of a physical activity program for multiple sclerosis patients with fatigue. NeuroRehabilitation 2008, 23, 153–157.

- Beckerman, H.; de Groot, V.; Scholten, M.A.; Kempen, J.C.E.; Lankhorst, G.J. Physical activity behavior of people with multiple sclerosis: Understanding how they can become more physically active. Phys. Ther. 2010, 90, 1001–1013.

- Gulick, E.E. Symptom and activities of daily living trajectory in multiple sclerosis: A 10-year study. Nurs. Res. 1998, 47, 137–146.

- Terré-Boliart, R.; Orient-López, F. Tratamiento rehabilitador en la esclerosis múltiple. Rev. Neurol. 2007, 44, 426–431.

- LaRocca, N.G.; Kalb, R.C. Efficacy of rehabilitation in multiple sclerosis. Neurorehabilitation Neural Repair 1992, 6, 147–155.

- Occupational therapy: Performance, participation, and well-being. Available online: http://media.matthewsbooks.com.s3.amazonaws.com/documents/tocwork/155/9781556425301.pdf (accessed on 28 October 2013).

- Lacorte, S.; Fernández-Alba, A.R. Time of flight mass spectrometry applied to the liquid chromatographic analysis of pesticides in water and food. Mass Spectrom. Rev. 2006, 25, 866–880.

- Pfeifer, T.; Schmitt, R.; Pavim, A.; Stemmer, M.; Roloff, M.; Schneider, C.; Doro, M. Cognitive Production Metrology: A new Concept for Flexibly Attending the Inspection Requirements of Small Series Production. In Proceedings of the 36th International MATADOR Conference, Manchester, UK, 14–16 July 2010; Springer.

- Rodríguez, A.; Rey, B.; Alcañiz, M.; Baños, R.; Guixeres, J.; Wrzesien, M.; Gomez, M.; Perez, D.; Rasal, P.; Parra, E. Annual Review of Cybertherapy and Telemedicine 2012: Advanced Technologies in the Behavioral, Social and Neurosciences; IOS Press BV: Amsterdam, The Netherlands, 2012.

- Santos, C.; Paterson, R.R.M.; Venâncio, A.; Lima, N. Filamentous fungal characterizations by matrix-assisted laser desorption/ionization time-of-flight mass spectrometry. J. Appl. Microbiol. 2010, 108, 375–385.

- Stevenson, L.G.; Drake, S.K.; Murray, P.R. Rapid identification of bacteria in positive blood culture broths by matrix-assisted laser desorption ionization-time of flight mass spectrometry. J. Clin. Microbiol. 2010, 48, 444–447.

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Eguíluz, G.; García Zapirain, B. Use of a Time-of-Flight Camera With an Omek Beckon™ Framework to Analyze, Evaluate and Correct in Real Time the Verticality of Multiple Sclerosis Patients during Exercise. Int. J. Environ. Res. Public Health 2013, 10, 5807-5829. https://doi.org/10.3390/ijerph10115807


Chicago/Turabian Style: Eguíluz, Gonzalo, and Begoña García Zapirain. 2013. "Use of a Time-of-Flight Camera With an Omek Beckon™ Framework to Analyze, Evaluate and Correct in Real Time the Verticality of Multiple Sclerosis Patients during Exercise" International Journal of Environmental Research and Public Health 10, no. 11: 5807-5829. https://doi.org/10.3390/ijerph10115807