Automated Ecological Assessment of Physical Activity: Advancing Direct Observation

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants and Procedures

2.2. Activity Session Protocol

2.3. Measures

2.3.1. Video

2.3.2. Accelerometers

2.4. Computer Vision Algorithm Development

2.5. Analyses

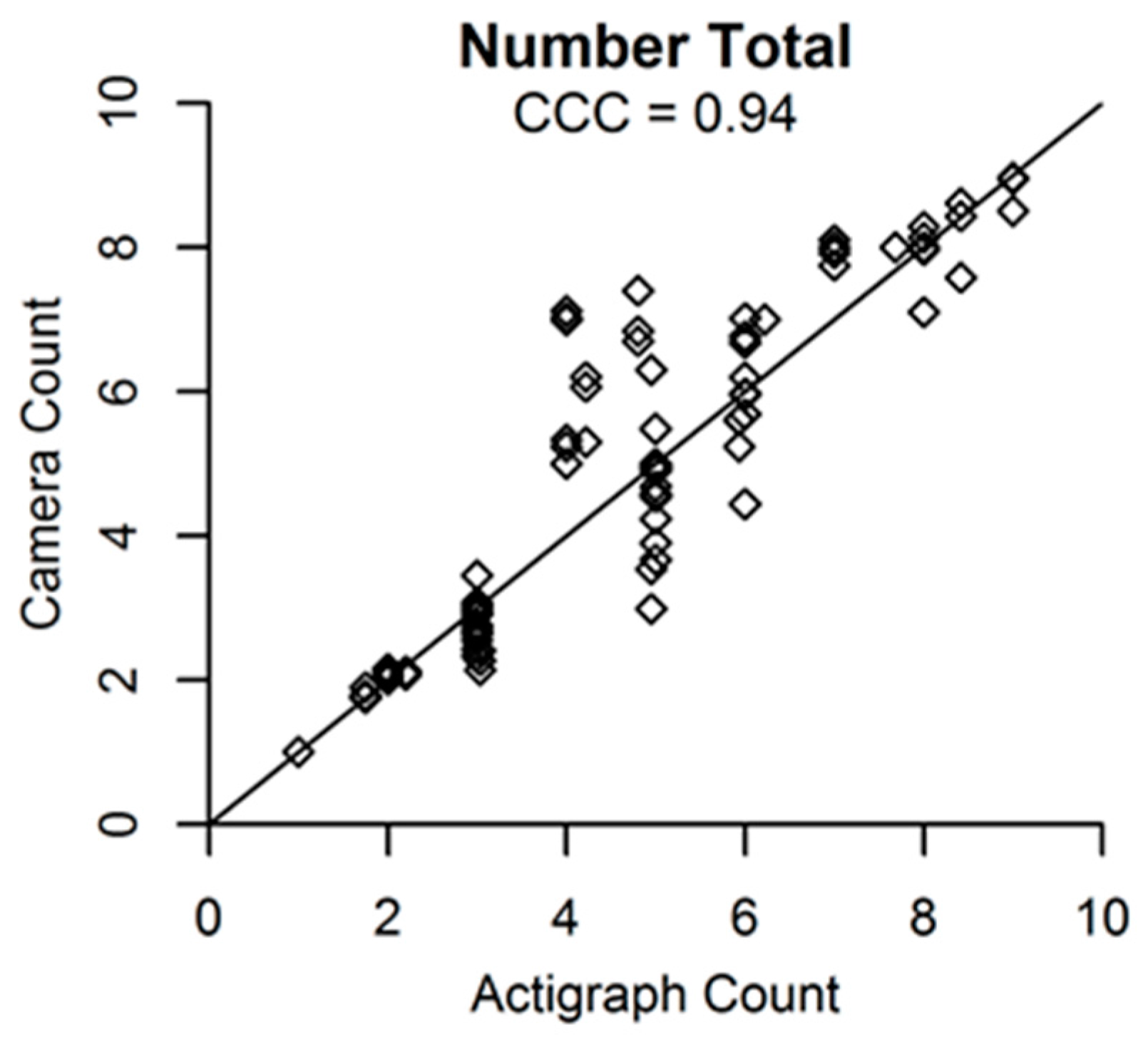

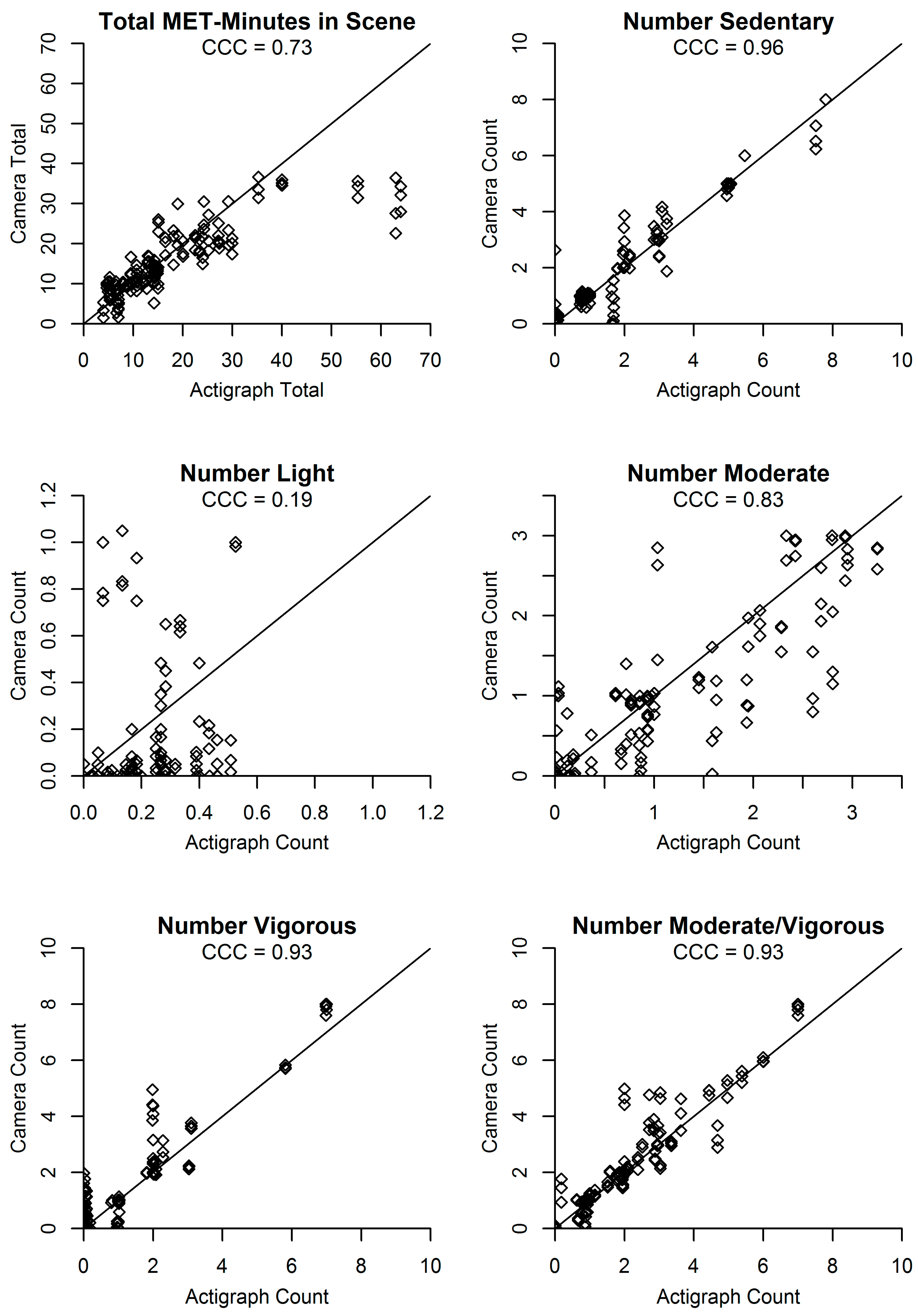

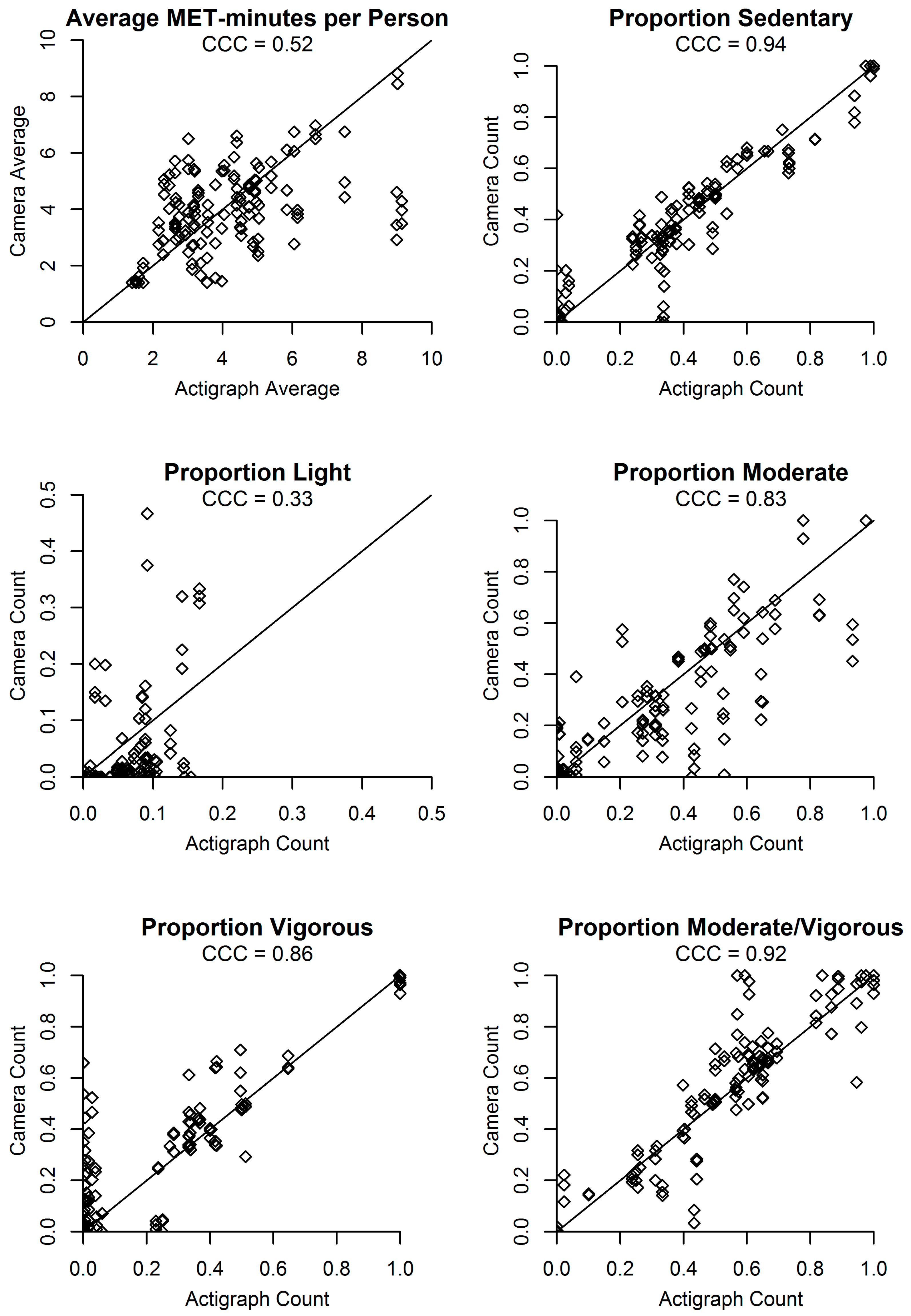

3. Results

3.1. Minute-Level Validity

3.2. Second-Level Validity

4. Discussion

Strengths, Limitations, and Future Directions

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

| Minute | Combination Number a | Total Number of People | Blocks (Group A, Group B, Group C) | | |
|---|---|---|---|---|---|
| 0 | 1 | 3 | Three people sitting individually | | |
| 1 | 1 | 3 | | | |
| 2 | 1 | 3 | | | |
| 3 | 1 | 3 | | | |
| 4 | 1 | 3 | | | |
| 5 | 1 | 3 | | | |
| 6 | 1 | 3 | | | |
| 7 | 2 | 2 | Two people standing together | | |
| 8 | 2 | 2 | | | |
| 9 | 2 | 2 | | | |
| 10 | 3 | 1 | One person walking | | |
| 11 | 3 | 1 | | | |
| 12 | 3 | 1 | | | |
| 13 | 10 | 5 | Two people jogging individually | Two people walking together | |
| 14 | 10 | 5 | | | |
| 15 | 10 | 5 | | | |
| 16 | 14 | 5 | Two people standing individually | | |
| 17 | 9 | 6 | Two people standing individually | | |
| 18 | 9 | 6 | | | |
| 19 | 9 | 6 | | | |
| 20 | 5 | 8 | Four people sitting individually | | |
| 21 | 5 | 8 | | | |
| 22 | 5 | 8 | | | |
| 23 | 5 | 8 | Two people sitting individually | | |
| 24 | 7 | 8 | Two people jogging together | | |
| 25 | 7 | 8 | | | |
| 26 | 9 | 3 | One person standing | | |
| 27 | 9 | 3 | | | |
| 28 | 9 | 3 | | | |
| 29 | 8 | 3 | Two people walking together | | |
| 30 | 8 | 3 | | | |
| 31 | 8 | 3 | | | |
| 32 | 8 | 3 | | | |
| 33 | 8 | 3 | | | |
| 34 | 8 | 7 | Three people sitting individually | Two people standing together | |
| 35 | 5 | 5 | | | |
| 36 | 7 | 4 | One person jogging | | |
| 37 | 7 | 4 | | | |
| 38 | 6 | 4 | One person walking | | |
| 39 | 6 | 4 | | | |

| Variables | Criterion: Mean (SD) | Camera 1: Bias (SD) | Camera 1: %Bias (SD) | Camera 1: ICC | Camera 2: Bias (SD) | Camera 2: %Bias (SD) | Camera 2: ICC | Camera 3: Bias (SD) | Camera 3: %Bias (SD) | Camera 3: ICC |
|---|---|---|---|---|---|---|---|---|---|---|
| Activity volume variables | | | | | | | | | | |
| Number of people in scene | 4.47 (2.16) | 0.18 (0.94) | 4.0% (21.0) | 0.91 | 0.13 (1.16) | 2.9% (26.0) | 0.87 | 0.21 (1.00) | 4.7% (22.4) | 0.90 |
| Number of people sedentary | 1.95 (1.97) | 0.01 (0.65) | 0.5% (33.3) | 0.95 | 0.04 (1.06) | 2.1% (54.4) | 0.86 | 0.13 (0.86) | 6.7% (44.1) | 0.91 |
| Number of people light | 0.20 (0.45) | −0.05 (0.55) | −25.0% (275.0) | 0.10 | −0.07 (0.54) | −35.0% (270.0) | 0.07 | −0.06 (0.54) | −30.0% (270.0) | 0.12 |
| Number of people moderate | 1.01 (1.11) | −0.11 (0.93) | −10.9% (92.1) | 0.64 | −0.12 (0.95) | −11.9% (94.1) | 0.63 | −0.07 (0.95) | −6.9% (94.1) | 0.63 |
| Number of people vigorous | 1.30 (1.84) | 0.33 (1.08) | 25.4% (83.1) | 0.85 | 0.28 (1.09) | 21.5% (83.8) | 0.84 | 0.22 (1.06) | 16.9% (81.5) | 0.86 |
| Number of people in MVPA | 2.32 (1.87) | 0.22 (1.03) | 9.5% (44.4) | 0.86 | 0.16 (1.04) | 6.9% (44.8) | 0.86 | 0.15 (0.98) | 6.5% (42.2) | 0.88 |
| Total MET-minutes in scene | 19.1 (15.1) | −3.1 (10.2) | −16.0% (53.4) | 0.67 | −4.2 (11.1) | −21.7% (58.1) | 0.56 | −2.88 (11.0) | −15.1% (57.7) | 0.60 |
| Activity distribution variables | | | | | | | | | | |
| Proportion of people sedentary | 0.40 (0.30) | −0.01 (0.14) | −2.5% (35.0) | 0.89 | −0.01 (0.20) | −2.5% (50.0) | 0.79 | 0 (0.16) | 0% (40.0) | 0.87 |
| Proportion of people light | 0.05 (0.13) | −0.01 (0.17) | −20.0% (340.0) | 0.11 | −0.02 (0.15) | −40.0% (300.0) | 0.13 | −0.01 (0.16) | −20.0% (320.0) | 0.13 |
| Proportion of people moderate | 0.29 (0.31) | −0.03 (0.25) | −10.3% (86.2) | 0.66 | −0.03 (0.27) | −10.3% (93.1) | 0.64 | −0.03 (0.26) | −10.3% (89.7) | 0.64 |
| Proportion of people vigorous | 0.26 (0.29) | 0.05 (0.21) | 19.2% (80.8) | 0.76 | 0.06 (0.22) | 23.1% (84.6) | 0.72 | 0.04 (0.22) | 15.4% (84.6) | 0.75 |
| Proportion of people MVPA | 0.54 (0.31) | 0.03 (0.19) | 5.6% (35.2) | 0.81 | 0.03 (0.22) | 5.6% (40.7) | 0.76 | 0.01 (0.20) | 1.9% (37.0) | 0.80 |
| Average MET-minutes per person | 4.20 (2.05) | −0.30 (2.12) | −7.1% (50.5) | 0.46 | −0.35 (2.52) | −8.3% (60.0) | 0.31 | −0.09 (2.31) | −2.1% (55.0) | 0.40 |
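
The %Bias columns in the table above appear to express each bias, and its SD, as a percentage of the criterion mean. For example, in the people-in-scene row for Camera 1, 0.18 / 4.47 ≈ 4.0% and 0.94 / 4.47 ≈ 21.0%. The Python sketch below illustrates that arithmetic. The function names, the sign convention (camera estimate minus criterion), and the use of the sample SD are assumptions made for illustration; the table itself does not confirm them.

```python
from statistics import mean, stdev


def pct_of_criterion_mean(value, criterion_mean):
    """Express a bias (or its SD) as a percentage of the criterion mean."""
    return 100 * value / criterion_mean


def bias_summary(criterion, estimate):
    """Summarize agreement between paired criterion and camera values.

    Assumption: bias is defined as estimate minus criterion, and the
    SD is the sample SD of those paired differences.
    """
    diffs = [e - c for c, e in zip(criterion, estimate)]
    crit_mean = mean(criterion)
    bias = mean(diffs)
    bias_sd = stdev(diffs)
    return {
        "bias": bias,
        "bias_sd": bias_sd,
        "pct_bias": pct_of_criterion_mean(bias, crit_mean),
        "pct_bias_sd": pct_of_criterion_mean(bias_sd, crit_mean),
    }


# Check against the people-in-scene row, Camera 1:
# criterion mean 4.47, bias 0.18 (SD 0.94), reported %bias 4.0% (21.0).
print(round(pct_of_criterion_mean(0.18, 4.47), 1))  # 4.0
print(round(pct_of_criterion_mean(0.94, 4.47), 1))  # 21.0
```

Because the percentage is scaled by the criterion mean, rows with a small criterion mean (such as the light-activity rows) show very large %Bias SDs even when the absolute bias is small.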

| Activity Block Combinations | Number of Simultaneous Activities | Number of Seconds of Data |
|---|---|---|
| Sitting only | 1 | 420 |
| Standing only | 1 | 540 |
| Walking only | 1 | 840 |
| Jogging only | 1 | 720 |
| Sitting and standing | 2 | 720 |
| Sitting and walking | 2 | 720 |
| Sitting and jogging | 2 | 780 |
| Standing and walking | 2 | 660 |
| Standing and jogging | 2 | 480 |
| Walking and jogging | 2 | 420 |
| Sitting, standing, and walking | 3 | 240 |
| Sitting, standing, and jogging | 3 | 240 |
| Sitting, walking, and jogging | 3 | 240 |
| Standing, walking, and jogging | 3 | 180 |
| Total | - | 7200 a |

| Characteristics | Number (%) |
|---|---|
| Seconds with 1–4 people in scene | 1322 (47.2%) |
| Seconds with 5–9 people in scene | 1478 (52.8%) |
| Seconds with ≥1 person sedentary | 2082 (74.4%) |
| Seconds with ≥1 person in light activity | 488 (17.4%) |
| Seconds with ≥1 person in moderate activity | 1594 (56.9%) |
| Seconds with ≥1 person in vigorous activity | 1509 (53.9%) |
| Seconds with ≥1 person in MVPA | 2454 (87.6%) |
| Seconds with <3 MET-seconds per person | 1358 (48.5%) |
| Seconds with ≥3 MET-seconds per person | 1442 (51.5%) |

| Variables | Criterion: Mean (SD) | Camera 1: Bias (SD) | Camera 1: %Bias (SD) | Camera 1: ICC | Camera 2: Bias (SD) | Camera 2: %Bias (SD) | Camera 2: ICC | Camera 3: Bias (SD) | Camera 3: %Bias (SD) | Camera 3: ICC |
|---|---|---|---|---|---|---|---|---|---|---|
| Activity volume variables | | | | | | | | | | |
| Number of people in scene | 4.34 (2.09) | 0.15 (0.74) | 3.5% (17.1) | 0.94 | 0.08 (0.79) | 1.8% (18.2) | 0.93 | 0.18 (0.77) | 4.1% (17.7) | 0.94 |
| Number of people sedentary | 1.90 (1.89) | 0 (0.37) | 0% (19.5) | 0.98 | 0.01 (0.65) | 0.5% (34.2) | 0.94 | 0.11 (0.51) | 5.8% (26.8) | 0.96 |
| Number of people light | 0.20 (0.16) | −0.06 (0.27) | −30.0% (135.0) | 0.24 | −0.08 (0.29) | −40.0% (145.0) | 0.14 | −0.07 (0.27) | −35.0% (135.0) | 0.21 |
| Number of people moderate | 1.08 (1.02) | −0.12 (0.58) | −11.1% (53.7) | 0.83 | −0.12 (0.52) | −11.1% (48.1) | 0.85 | −0.09 (0.59) | −8.3% (54.6) | 0.83 |
| Number of people vigorous | 1.16 (1.70) | 0.32 (0.77) | 27.6% (66.4) | 0.90 | 0.28 (0.59) | 24.1% (50.9) | 0.93 | 0.22 (0.52) | 19.0% (44.8) | 0.95 |
| Number of people in MVPA | 2.24 (1.73) | 0.20 (0.74) | 8.9% (33.0) | 0.91 | 0.15 (0.57) | 6.7% (25.4) | 0.95 | 0.13 (0.61) | 5.8% (27.2) | 0.94 |
| Total MET-minutes in scene | 18.0 (14.1) | −1.8 (7.7) | −10.0% (42.8) | 0.79 | −3.1 (8.9) | −17.1% (49.4) | 0.68 | −1.7 (8.5) | −9.3% (47.2) | 0.72 |
| Activity distribution variables | | | | | | | | | | |
| Proportion of people sedentary | 0.40 (0.28) | −0.01 (0.08) | −2.5% (20.0) | 0.96 | 0 (0.12) | 0% (30.0) | 0.91 | 0.01 (0.08) | 2.5% (20.0) | 0.96 |
| Proportion of people light | 0.06 (0.05) | −0.02 (0.08) | −33.3% (133.3) | 0.33 | −0.02 (0.07) | −33.3% (116.7) | 0.32 | −0.02 (0.08) | −33.3% (133.3) | 0.36 |
| Proportion of people moderate | 0.31 (0.28) | −0.03 (0.15) | −9.7% (48.4) | 0.85 | −0.04 (0.16) | −12.9% (51.6) | 0.82 | −0.03 (0.15) | −9.7% (48.4) | 0.84 |
| Proportion of people vigorous | 0.23 (0.28) | 0.06 (0.14) | 26.1% (60.9) | 0.86 | 0.06 (0.14) | 26.1% (60.9) | 0.85 | 0.04 (0.13) | 17.4% (56.5) | 0.88 |
| Proportion of people MVPA | 0.54 (0.28) | 0.02 (0.11) | 3.7% (20.4) | 0.93 | 0.03 (0.13) | 5.6% (24.1) | 0.90 | 0.01 (0.11) | 1.9% (20.4) | 0.93 |
| Average MET-minutes per person | 4.08 (1.92) | −0.19 (1.58) | −4.7% (38.7) | 0.59 | −0.34 (1.85) | −8.3% (45.3) | 0.43 | 0.04 (1.69) | 1.0% (41.4) | 0.56 |
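
The ICC columns above summarize agreement between each camera and the criterion. Which ICC form was used is not stated in this section, so the sketch below assumes ICC(2,1) as defined by Shrout and Fleiss (two-way random effects, absolute agreement, single measurement). The function name and the toy data are hypothetical, and this should be read as one plausible way to obtain such a coefficient rather than as the authors' procedure.

```python
def icc_2_1(ratings):
    """ICC(2,1) from a list of rows; each row holds k measurements of one target.

    Assumption: here a "target" would be one time unit (a second or a
    minute) and the k = 2 measurements would be the criterion value
    and one camera's estimate.
    """
    n = len(ratings)          # number of targets
    k = len(ratings[0])       # number of measurement methods
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    bms = ss_rows / (n - 1)                  # between-targets mean square
    jms = ss_cols / (k - 1)                  # between-methods mean square
    ems = ss_error / ((n - 1) * (k - 1))     # residual mean square
    return (bms - ems) / (bms + (k - 1) * ems + k * (jms - ems) / n)


# Hypothetical paired counts: [criterion, camera] for five time units.
pairs = [[3, 3], [5, 4], [2, 2], [6, 7], [4, 4]]
print(round(icc_2_1(pairs), 2))
```

Because this form penalizes systematic differences between methods as well as random disagreement, a camera that consistently over- or under-counts would score lower than under a consistency-only ICC.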

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Carlson, J.A.; Liu, B.; Sallis, J.F.; Kerr, J.; Hipp, J.A.; Staggs, V.S.; Papa, A.; Dean, K.; Vasconcelos, N.M. Automated Ecological Assessment of Physical Activity: Advancing Direct Observation. Int. J. Environ. Res. Public Health 2017, 14, 1487. https://doi.org/10.3390/ijerph14121487