Human Factor Analysis (HFA) Based on a Complex Network and Its Application in Gas Explosion Accidents

Abstract

1. Introduction

- (1) Quadrant 1 is future-oriented quantitative research, mainly comprising safety prediction research and exploratory empirical research. The literature here focuses on empirical studies of the factors that influence human safety behavior, such as job burnout [7] and the influence of safety climate on safety behavior [8,9].

- (2) Quadrant 2 comprises quantitative studies based on existing data, which analyze the frequency, main influencing factors, and paths of target human errors through the study of accident cases. Most existing studies focus on the occurrence frequency of human errors and their causative factors, such as [10,11]. A few studies conduct a more in-depth, comprehensive analysis of the paths of human errors, such as [12].

- (3) Quadrant 3 is qualitative analysis based on past events, focusing on the modes of human error analysis. A representative example is the human factors analysis and classification system (HFACS) proposed in [13], which describes the causes of human errors at four levels: ➀ organizational influence; ➁ unsafe supervision; ➂ the preconditions of unsafe behavior; and ➃ the unsafe behavior of actors. This method has been applied to civil aviation [14], maritime [15], coal mining [16], railway [17], and other systems to facilitate the investigation and analysis of accidents and their human errors. As a result, improved or more domain-specific human error analysis models were developed, such as HFACS-MA [18], HFACS-OGI [19], and HFACS-CM [16]. In addition, there is another HFA model from the perspective of information and cognition [2,20,21]. In recent years, with the development of safety information cognition, this perspective has also expanded from the micro-level to the macro-level. HFAs from different perspectives have different emphases; to make human error prevention more systematic and targeted, they should be treated as complementary.

- (4) Quadrant 4 comprises future-oriented qualitative analyses, which systematically analyze the possible influencing factors in the system on the basis of an existing human error causation model, so as to reduce the influence of the uncertainty of complex systems on safety behavior. The final results are often presented as an HFA list, e.g., in [2,22].

2. HFA Network

2.1. HFA Network Based on Signal-Noise Dependence

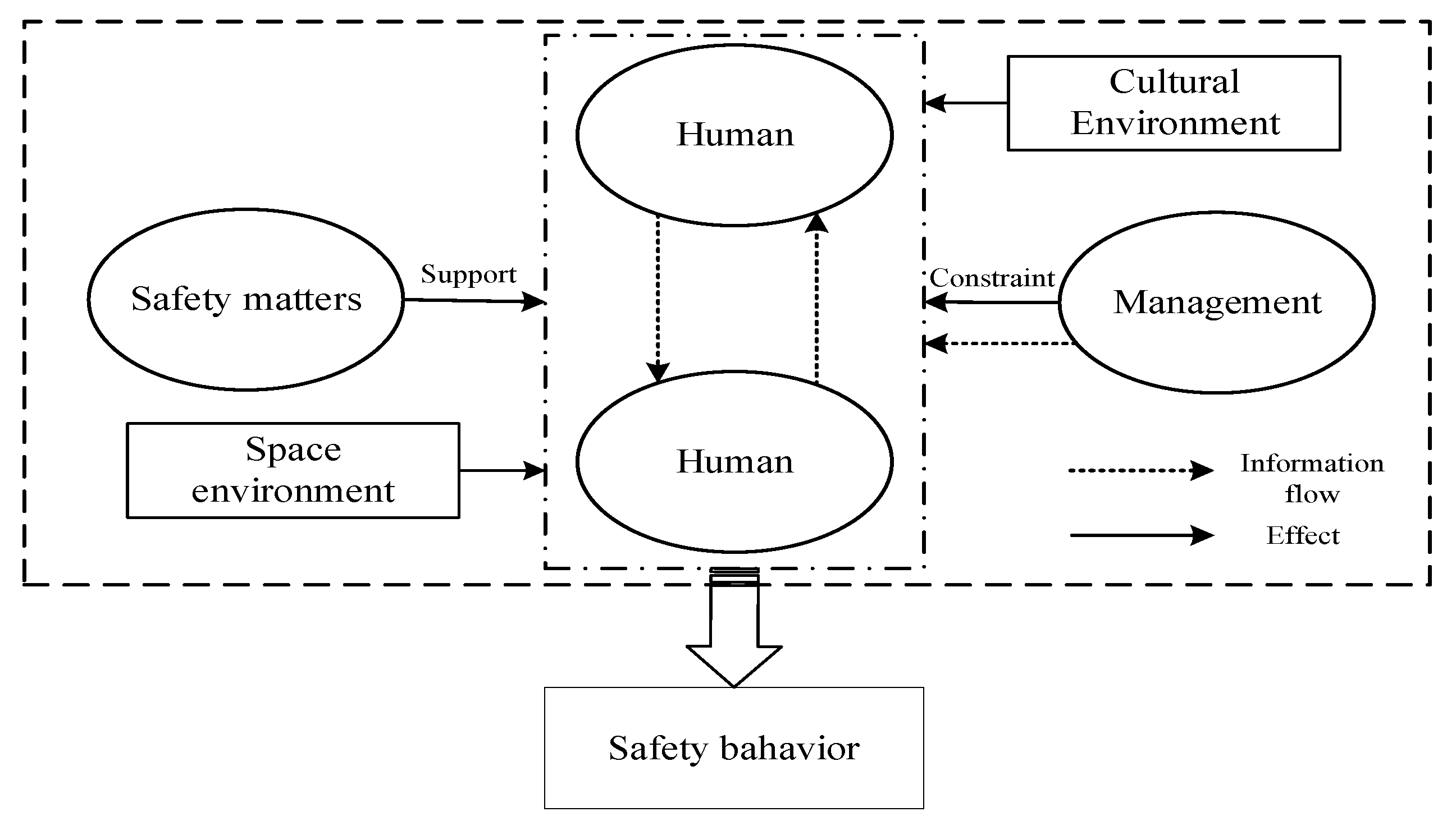

- (1) In a safety system, humans mainly comprise safety managers and operators. Their behaviors include the basic safety behaviors of life and production, summarized as safety interaction behaviors (human–matter interaction and human–environment interaction), which are the direct or driving factors of accidents. Maintaining the state and function of the environment and matters depends on safety behavior [29].

- (2) The environment is the medium and state in which humans and matters exist in the system. It mainly refers to the spatial environment of the system, including temperature, humidity, noise, lighting, cleanliness, and so on. For example, high temperatures may cause device faults, and overly dim lighting can make potential hazards hard to find.

- (3) Matters relate not only to production but also to safety; examples include safety facilities and safety equipment, which are important supports for safety perception, cognition, and execution. Matters and the environment are mutually coupled: matters can impact, change, or control the environment of the system, and the environment then affects humans and organizations in the system. In addition, because physical structures are correlated, cascading effects may occur between them, damaging safety matters [30].

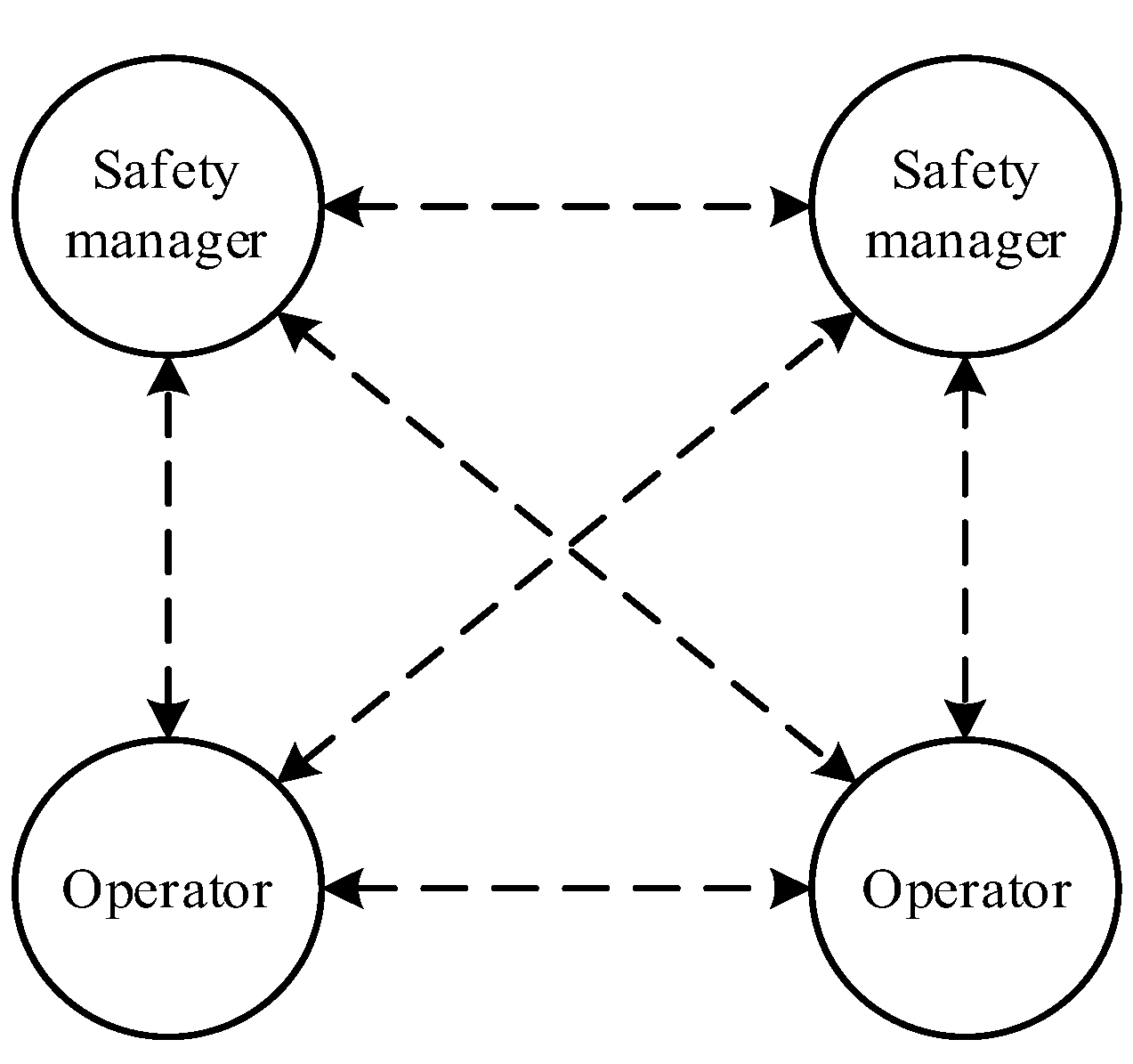

2.2. HFA Network Based on Information Dependence

- (1) SIS. The lack of safety information caused by SIS failure is a common cause of human-related accidents [32]. SIS can be understood as the timely transmission of useful information from managers to subordinates. Throughout the safety production process, SIS from safety managers to operators is one of the most important links in preventing human errors, as the information mainly involves safety publicity, safety education, and safety training. Open and frequent SIS is significant because it helps workers make sense of conflicting priorities, reduces ambiguity, and provides a basis for consensus about appropriate ways of working [31].

- (2) SIF. SIF refers to the renewed regulation of the safety state of a system made up of humans and man-made subsystems, delivered in the form of safety information that adjusts the safety structure, safety function, and safety behavior in the system [33]. SIF has both positive incentive and reverse error-correction effects (i.e., positive feedback and negative feedback) on human behavior. For negative feedback in particular, timely SIF promotes timely improvement and optimization of safety operation behavior and safety management behavior. Conversely, untimely, inadequate, or incorrect SIF leaves subjects unable to find their own behavioral defects.

- (3) SIE. SIE is an exchange of views between subjects on a specific risk issue. This process includes three links: raising questions, transferring safety information to the target subject, and outputting relevant knowledge, opinions, and other information to the target subject. It therefore covers the above two safety communication modes (i.e., the information output link is SIS, SIF, or a combination of both). The difference is that this mode is led by active safety communication, whereas the first two are led by passive safety communication.

2.3. HFA Network Based on Constraint Dependence

- (1) A safety rule is a safe state of the system or a safe manner of acting in response to a forecast, established before an event, and imposed by the operators and managers of the micro-system as a means of improving safety or achieving the required safety level [34]. As a subset of safety rules, safety regulations are rules with legal effect implemented by administrative or independent agencies, which influence safety behaviors by clarifying which behaviors are illegal and how violations are punished [35]. These rules take the form of laws and regulations, departmental rules, industry regulations, policies, safety standards, and enterprise regulations, and they encourage individuals and organizations to avoid violations.

- (2) The essence of regulations is that they are imposed by an authority on an organization or individual that must comply and are backed by some form of institutionalized sanction for noncompliance [36]. Additionally, management is an important part of the system, in which responsibilities and codes of conduct are assigned to safety-related individuals and organizations. Therefore, the management-based SC builds on the regulation-based SC and restricts safety behavior through supervision, inspection, auditing, and safety management. It clarifies specifically what should be done, what cannot be done, and how to implement SCs that involve humans, substances, machines, facilities, craft processes, environment, management, etc.

2.4. HFA Network from a Comprehensive Perspective

3. System Safety Method of HFA Based on Complex Network and Its Application

3.1. Proposal of the Method

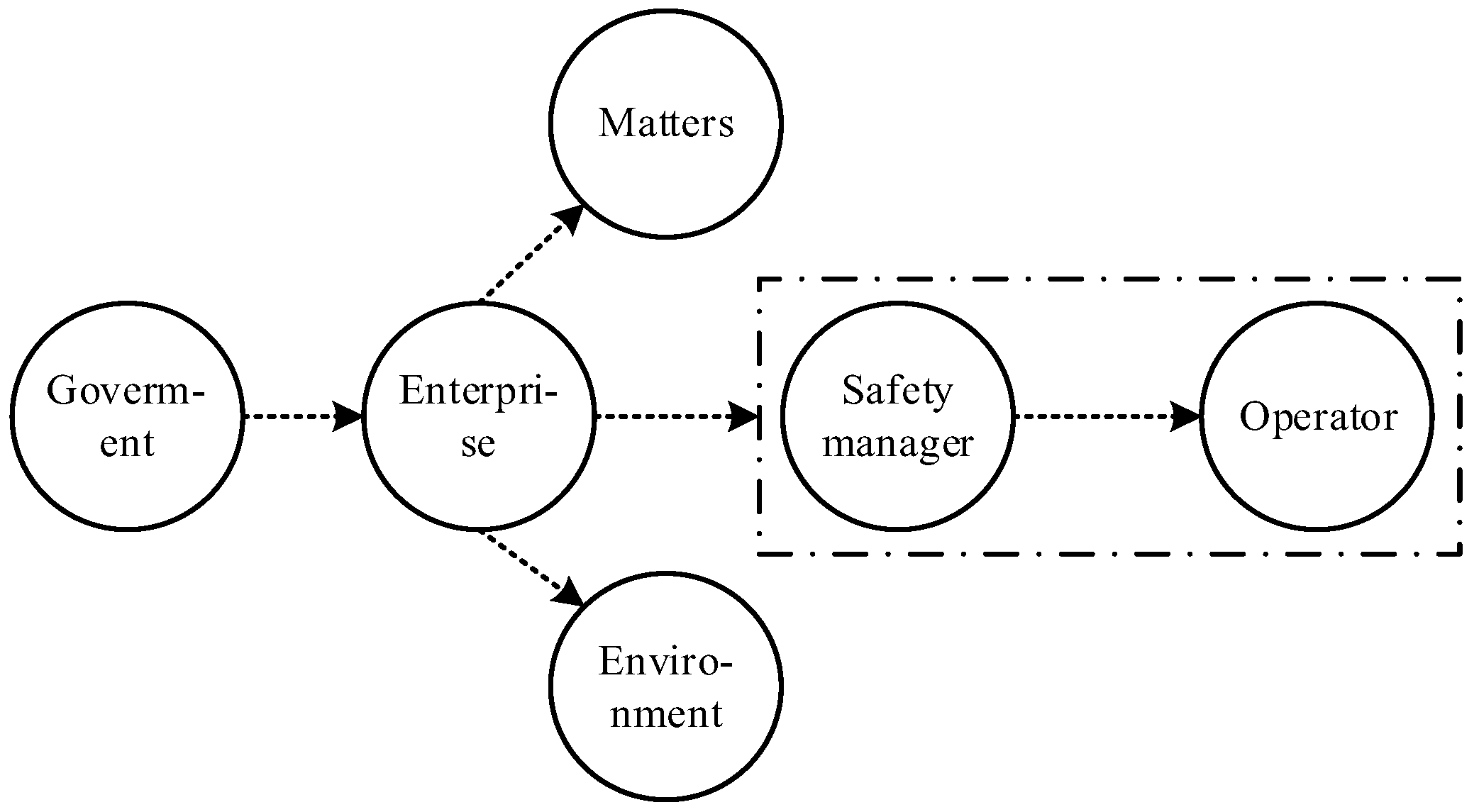

- (1) Identify the system to be analyzed, then investigate and collect data. A system is an organic whole with specific functions, composed of several components that are interdependent and interact with each other [25]. System structures differ significantly from one system to another. Therefore, the boundary of the micro-system to be analyzed should be delineated, and on this basis, all the relevant social and physical structures and their dependence relationships in the system should be clarified. Finally, the relationship between enterprises and relevant government departments is clarified from a macro-perspective.

- (2) Determine the type of accident to be studied and summarize the human errors that can lead to the accident. When determining the influencing factors of a specific accident, a causal analysis technique, such as fault tree analysis (FTA) or a fishbone diagram, should be used for inference and deduction to summarize all the human errors that may lead to the accident.

- (3) Based on the “directed edges” of the complex network, conduct a deductive analysis starting from a specific human error, and summarize all the causative factors and causative paths. In the HFA of the target human errors, the analysis proceeds in stages from bottom to top based on the HFA network shown in Figure 5. Cascading effects exist among the influencing factors linked by adjacent edges, thus forming a specific human error causation path.

- (4) Draw up an HFA network and carry out risk assessment and control. Based on the influencing factors and causative paths of human error, an HFA network is drawn, which facilitates assessment of the current state of human error. Risk assessment takes two perspectives: the vulnerability of each node, and the degree of influence of each node in the network on human error. On this basis, measures are taken to control the risk.

- (5) Develop an HFA network manual. To reduce the probability of human error, a targeted HFA network manual is developed for specific subjects, in which all related factors and paths that may lead to human error are clarified and specific countermeasures are put forward. To adapt to the dynamics of the system, the HFA network manual should be updated accordingly. When a causation factor or type of accident is found to have been left out, it should be added.
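The bottom-to-top deduction in step (3) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the network is stored as a directed adjacency mapping from each cause to the factors it influences, the edges are hypothetical (node codes merely imitate the paper's labels, and `ERR` stands for the target human error), and `causation_paths` is an illustrative helper name, not part of the original method.

```python
from typing import Dict, List

# Hypothetical directed edges: cause -> factors it influences.
# The codes imitate the paper's labels; the edges are illustrative only.
HFA_EDGES: Dict[str, List[str]] = {
    "K1": ["E1", "H1"],  # weak government supervision
    "E1": ["B2", "A6"],  # weak enterprise supervision
    "H1": ["D1"],        # under-investment in safety
    "B2": ["A3"],        # failure of SIS
    "D1": ["ERR"],       # lack of safety matters
    "A3": ["ERR"],       # defects of knowledge
    "A6": ["ERR"],       # bad habit
}


def causation_paths(edges: Dict[str, List[str]], target: str) -> List[List[str]]:
    """Trace every causation path that ends at `target`.

    The search climbs from the target back to root causes (nodes without
    any incoming edge) and returns each path in cause -> error order.
    """
    # Reverse the adjacency so that each node knows its direct causes.
    parents: Dict[str, List[str]] = {}
    for cause, effects in edges.items():
        for effect in effects:
            parents.setdefault(effect, []).append(cause)

    paths: List[List[str]] = []

    def climb(node: str, trail: List[str]) -> None:
        trail = [node] + trail
        # Skip causes already on the trail so that cycles cannot loop forever.
        causes = [c for c in parents.get(node, []) if c not in trail]
        if not causes:  # reached a root cause
            paths.append(trail)
            return
        for cause in causes:
            climb(cause, trail)

    climb(target, [])
    return paths


if __name__ == "__main__":
    for path in causation_paths(HFA_EDGES, "ERR"):
        print(" -> ".join(path))
```

Each returned path is a candidate entry for the HFA network manual in step (5). In practice the edge list would come from the deductive analysis itself rather than from a hand-written dictionary.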

3.2. Application of the Method

- (1)

- Deductive analysis of human error causes and paths: taking gas inspectors as an example

- (2)

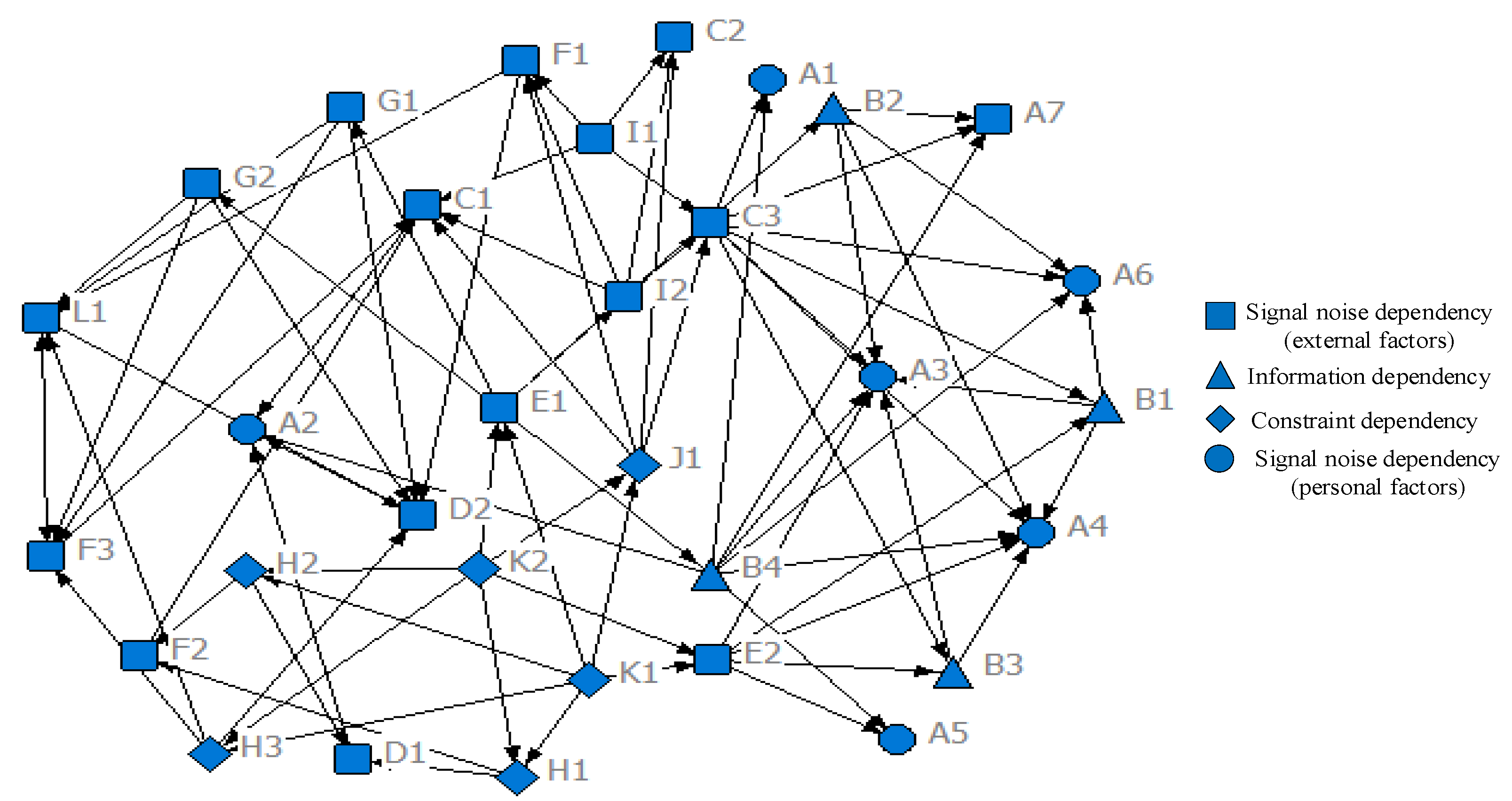

- HFA network construction and risk management

4. Network Analysis Method for Human Errors and Its Application

4.1. Proposal of the Method

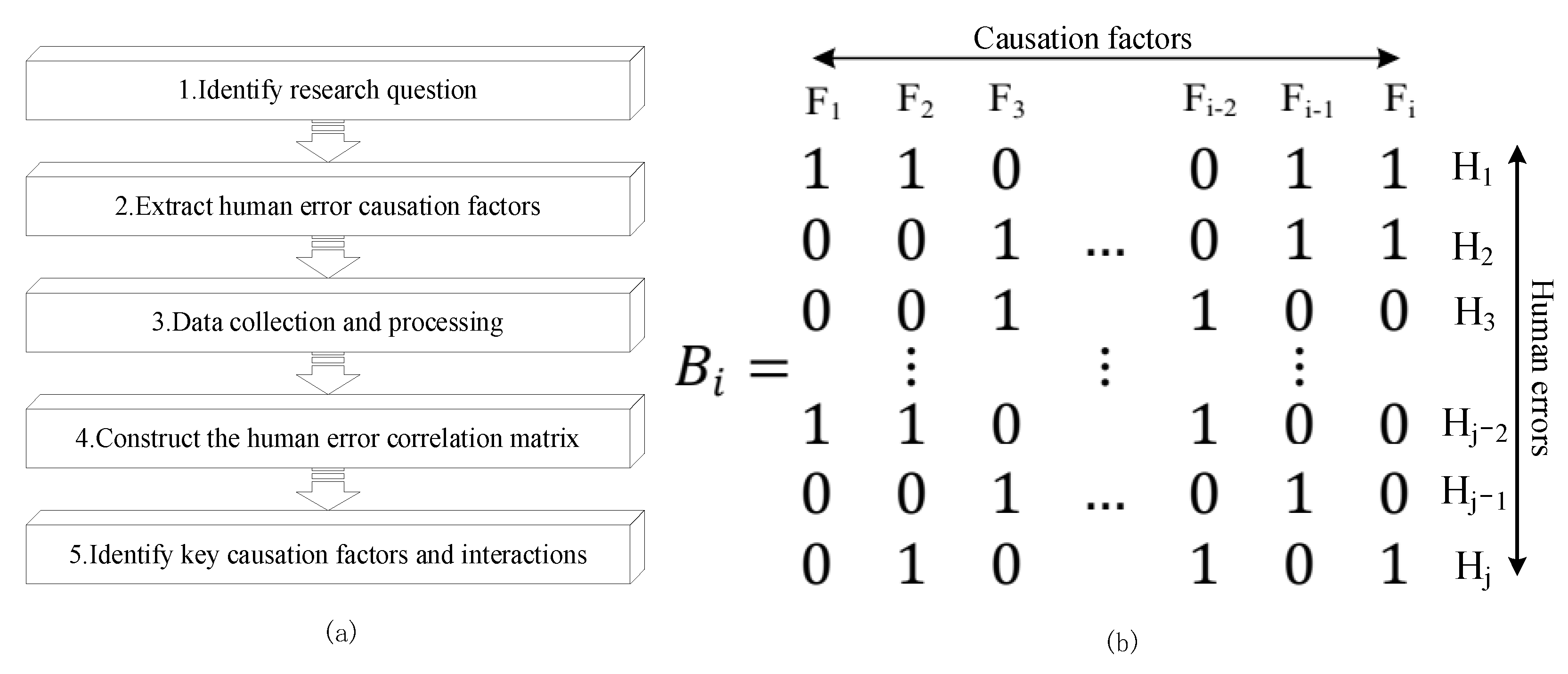

- (1) Identify research questions. Determining the research question sets the application context of this method; the question should be refined to specific accidents within a specific region, industry, and system. The identification of research questions is the basis for identifying human error causes and collecting data.

- (2) Extract human error causation factors. The application of the method in Section 3 systematically summarizes the causation factors of human error. On this basis, the existing causation factors should be adjusted to the specific situation in which the method is applied.

- (3) Collect and process data. Accident cases are collected to match the research question, and the scope or boundary usually involves the region, time period, type of accident, severity of accident, etc. Each dimension matters: different countries and provinces have different safety systems and policies, which may lead to different characteristics of human error; the time period of the selected cases should be relevant enough to guide current practice; and different accident severities may correspond to different characteristics of human error. When a large number of accident cases are analyzed, the work is usually carried out by more than one person. To improve the validity of the results, all participants should be professionals with sufficient domain knowledge. In addition, they should be trained until the consistency of their analysis reaches at least 95% (“consistency ≥ 95%”). The final results need to be reviewed and approved by relevant professors before subsequent analysis.

- (4) Construct the human error correlation matrix. The purpose of this step is to study the co-occurrence of human error causation factors. To keep the research targeted, the matrix is constructed separately for safety managers and operators, yielding the corresponding data sets of human error causation (if an influencing factor is present, the value is “1”; otherwise, the value is “0”), as shown in Figure 9b. On this basis, relevant software (e.g., UCINET 6, NetMiner, MultiNet, etc.) is used to transform the data set, and the human error causation association matrix is finally obtained.

- (5) Identify key causative factors and interactions. For the whole network, the density, average shortest path, and clustering coefficient of the human error causation association network describe the closeness, propagation, and cohesion of causation factors in the network [39]. For a single node, degree centrality measures the direct influence of a causation factor on other factors, whereas betweenness centrality measures how strongly the factor controls transmission through the human error network [39]. Specifically, the greater the betweenness centrality, the more pronounced the “bridge” effect of the factor.
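Steps (4) and (5) can be illustrated with a small, self-contained sketch. It assumes that the 0/1 data set has one row per accident case and one column per causation factor, that two factors are associated whenever they appear in the same case, and that density and degree centrality are computed on the resulting undirected, unweighted network. The cases below are invented for illustration, the function names are hypothetical, and plain Python stands in for the dedicated software used in the paper, whose exact transformation and normalization may differ.

```python
from itertools import combinations
from typing import List

FACTORS = ["K1", "E1", "B2", "A3", "A4"]

# Hypothetical coded cases: 1 = factor identified in the accident report.
CASES = [
    [1, 1, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [1, 0, 0, 0, 1],
    [0, 1, 1, 1, 0],
]


def cooccurrence_matrix(cases: List[List[int]], n_factors: int) -> List[List[int]]:
    """Count, for each pair of factors, the cases in which both are present."""
    matrix = [[0] * n_factors for _ in range(n_factors)]
    for row in cases:
        present = [i for i, flag in enumerate(row) if flag == 1]
        for i, j in combinations(present, 2):
            matrix[i][j] += 1
            matrix[j][i] += 1  # keep the matrix symmetric
    return matrix


def density(matrix: List[List[int]]) -> float:
    """Share of possible undirected edges that actually occur."""
    n = len(matrix)
    if n < 2:
        return 0.0
    edges = sum(1 for i in range(n) for j in range(i + 1, n) if matrix[i][j] > 0)
    return 2 * edges / (n * (n - 1))


def degree_centrality(matrix: List[List[int]]) -> List[float]:
    """Number of neighbours of each node, normalised by n - 1."""
    n = len(matrix)
    if n < 2:
        return [0.0] * n
    return [
        sum(1 for j in range(n) if j != i and matrix[i][j] > 0) / (n - 1)
        for i in range(n)
    ]


if __name__ == "__main__":
    association = cooccurrence_matrix(CASES, len(FACTORS))
    print("density:", density(association))
    for name, score in zip(FACTORS, degree_centrality(association)):
        print(name, score)
```

Keeping the counts in the association matrix, rather than collapsing them to 0/1 immediately, leaves room for a weighted analysis later; the binarisation here happens only inside `density` and `degree_centrality`.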

4.2. Application of the Method

4.2.1. Data Collection

4.2.2. Results

- (1) A holistic analysis of human factor networks

- (2) Human factor network analysis of different individuals

- ➀ For blasting workers, the main causes and paths of human error include: inadequate supervision at the government level (K1); insufficient enterprise supervision (E1), insufficient safety education and training (B2), and insufficiently strict implementation of the access policy for blasting workers (E2) at the enterprise level; and skill defects at the individual level (A4). Among them, K1, A4, and E1 also have high centrality, indicating that these causative factors have a direct impact on other causative factors and play an important role in controlling transmission in the human error causative network (Figure 10a).

- ➁ For ventilating workers, insufficient supervision at the government level (K1); insufficient supervision (E1), insufficient investment in ventilation equipment (H1), and lack of safety education (B1) at the enterprise level; and deficiency of knowledge at the individual level (A3) are the main factors and pathways leading to human error. Weak government supervision further loosens internal safety and ventilation work in enterprises, mainly involving delayed safety information feedback, a lack of knowledge and skills training, a lack of enterprise supervision, and lowered ventilation access standards (Figure 10b).

- ➂ For tunneling workers, the lack of supervision at the government level (K1); the lack of supervision (E1) and the lack of a safety climate (C3) at the enterprise level; and unsafe psychology (A1) and defects of knowledge (A3) at the individual level are the main causes and paths leading to human error. SIS at the enterprise level (B2) is also an important intermediary link. Where government regulations are violated, the failure of SIS and the lack of safety knowledge and skills are more serious (Figure 10c).

- ➃ For gas inspectors, the lack of supervision at the government level (K1); the lack of strict implementation of access standards at the enterprise level (E2); and unsafe psychology (A1), defects of knowledge (A3), and defects of skills (A4) at the individual level are the main factors and paths leading to human error. In addition, the lack of corporate constraints on the cultural environment is the key cause of the unsafe psychology of gas inspectors. When government supervision or the enterprise safety climate is insufficient, the enterprise’s investment in gas measurement equipment also suffers (Figure 10d).

- ➄ For managers, the lack of supervision at the government level (K1); the lack of safety supervision (E1) and the lack of a cultural environment (C3) at the enterprise level; and unsafe psychology (A1), the lack of knowledge (A3), and the lack of skills (A4) at the individual level are the main factors and pathways leading to human error. In addition, the failure of managers to protect the cultural environment further stimulates unsafe psychology; the quality of the cultural environment is also directly related to whether managers damage it. In the absence of government supervision, the bad habits of managers and the defects of SIS in enterprises become more serious (Figure 10e).
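The "bridge" role attributed above to high-centrality factors can be made concrete with a betweenness centrality computation. The sketch below implements Brandes' algorithm for an unweighted, undirected network in plain Python. The toy network is hypothetical and does not reproduce any panel of Figure 10, and the raw (unnormalised) scores it returns may be scaled differently from the values reported by the software used in the paper.

```python
from collections import deque
from typing import Dict, List

# Hypothetical undirected association network (symmetric adjacency lists).
TOY_NETWORK: Dict[str, List[str]] = {
    "K1": ["E1"],
    "E1": ["K1", "B2", "A1"],
    "B2": ["E1", "A3"],
    "A1": ["E1"],
    "A3": ["B2"],
}


def betweenness_centrality(adjacency: Dict[str, List[str]]) -> Dict[str, float]:
    """Raw betweenness scores via Brandes' algorithm.

    A node's score is the number of shortest paths between other node pairs
    that pass through it, with each unordered pair counted once and ties
    split in proportion to the number of shortest paths.
    """
    score = {v: 0.0 for v in adjacency}
    for source in adjacency:
        # Breadth-first search that counts shortest paths from `source`.
        order: List[str] = []
        predecessors: Dict[str, List[str]] = {v: [] for v in adjacency}
        n_paths = {v: 0 for v in adjacency}
        distance = {v: -1 for v in adjacency}
        n_paths[source] = 1
        distance[source] = 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adjacency[v]:
                if distance[w] < 0:  # first visit
                    distance[w] = distance[v] + 1
                    queue.append(w)
                if distance[w] == distance[v] + 1:  # v lies on a shortest path to w
                    n_paths[w] += n_paths[v]
                    predecessors[w].append(v)
        # Accumulate dependencies from the farthest nodes back to the source.
        dependency = {v: 0.0 for v in adjacency}
        while order:
            w = order.pop()
            for v in predecessors[w]:
                dependency[v] += n_paths[v] / n_paths[w] * (1 + dependency[w])
            if w != source:
                score[w] += dependency[w]
    # In an undirected network every pair was visited from both ends.
    return {v: s / 2 for v, s in score.items()}


if __name__ == "__main__":
    for node, value in betweenness_centrality(TOY_NETWORK).items():
        print(node, value)
```

In this toy network `E1` sits between the government-level node and every individual-level node, so it receives the highest score, which is the pattern the text describes as a transmission-controlling factor.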

5. Discussion

- (1) The human error causation model proposed in this paper is an integrated model that involves signal-noise dependence, information dependence, and constraint dependence, and it comprehensively summarizes the factors that may affect safety behavior. These factors originate from humans, matters, environments, management, and their interactions. The human error causation models proposed in the past (such as the HFACS [13] and SICHFA [2]) each have their own focus (the former focuses on humans and management, whereas the latter focuses on information and cognition), but they are not applicable to the current complex network system. The model proposed here is comprehensive and universal, but it also has limitations: it is a preliminary research result that needs to be further improved.

- (2) The system safety method of HFA also has its own characteristics: it not only clarifies the direct coupling effects of the various elements in the system, but also promotes the visualization of human factors through the network graph so that all subjects can effectively participate in the prevention of human error. On the one hand, vulnerable points can be identified from the complex network graph; on the other hand, network analysis tools can be used to evaluate the key nodes in the system and provide an important basis for prevention work. This method therefore combines qualitative and quantitative attributes and can promote proactive, systematic, and dynamic prevention of human error. In the future, an intelligent platform could be introduced to automate parts of human error prevention. However, the difficulty of applying this method (operability, workload, etc.) will be a major obstacle in practice.

- (3) Compared with previous, purely descriptive literature research, the network analysis method of human error can identify not only the nodes that most frequently contribute to the errors of particular groups of people, but also the nodes and edges that spread risk and play an important intermediary role in the network. Therefore, this method should be more effective in preventing human error. Its limitation is that it does not yet exploit the full capability of network tools, which will be needed to solve more problems and further promote the prevention of human error. Subsequently, network tools can be used to mine more information about human error, such as: How does risk spread in the network? Are there other values that can characterize the key features of human error? How does the system network become an optimal network?

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, L.; Wang, Y. Human Reliability Analysis—Human Error Risk Assessment and Control; Aviation Industry Press: Beijing, China, 2015.

- Chen, Y.; Feng, W.; Jiang, Z.; Duan, L.; Cheng, S. An accident causation model based on safety information cognition and its application. Reliab. Eng. Syst. Saf. 2021, 207, 107363.

- Kou, L. Discussion on Development of Safety Awareness from Characteristics of Accident. China Saf. Sci. J. 2003, 12, 17–21.

- Yood, Y.S.; Ham, D.-H.; Yoon, W.C. Application of activity theory to analysis of human-related accidents: Method and case studies. Reliab. Eng. Syst. Saf. 2016, 150, 22–34.

- Woods, D.D.; Dekker, S.; Cook, R.; Johannsen, L. Behind Human Error; CRC Press: Boca Raton, FL, USA, 2010.

- Kim, D.S.; Baek, D.H.; Yoon, W.C. Development and evaluation of a computer-aided system for analyzing human error in railway operations. Reliab. Eng. Syst. Saf. 2010, 95, 87–98.

- Wang, B.; Wang, Y. Job Burnout among Safety Professionals: A Chinese Survey. Int. J. Environ. Res. Public Health 2021, 18, 8343.

- Yu, M.; Qin, W.; Li, J. The influence of psychosocial safety climate on miners’ safety behavior: A cross-level research. Saf. Sci. 2022, 150, 105719.

- Man, S.S.; Yu, R.; Zhang, T.; Chan, A.H.S. How Optimism Bias and Safety Climate Influence the Risk-Taking Behavior of Construction Workers. Int. J. Environ. Res. Public Health 2022, 19, 1243.

- Kelly, D.; Efthymiou, M. An analysis of human factors in fifty controlled flight into terrain aviation accidents from 2007 to 2017. J. Saf. Res. 2019, 69, 155–165.

- Yin, W.; Fu, G.; Yang, C.; Jiang, Z.; Zhu, K.; Gao, Y. Fatal gas explosion accidents on Chinese coal mines and the characteristics of unsafe behaviors: 2000–2014. Saf. Sci. 2017, 92, 173–179.

- Zhang, J.; Xu, K.; You, G.; Wang, B.; Zhao, L. Causation Analysis of Risk Coupling of Gas Explosion Accident in Chinese Underground Coal Mines. Risk Anal. 2019, 39, 1634–1646.

- Wiegmann, D.A.; Shappell, S.A. Human error analysis of commercial aviation accidents: Application of the human factors analysis and classification system (HFACS). Aviat. Space Environ. Med. 2001, 72, 1006–1016.

- Li, W.-C.; Harris, D.; Yu, C.-S. Routes to failure: Analysis of 41 civil aviation accidents from the Republic of China using the human factors analysis and classification system. Accid. Anal. Prev. 2008, 40, 426–434.

- Chauvin, C.; Lardjane, S.; Morel, G.; Clostermann, J.-P.; Langard, B. Human and organisational factors in maritime accidents: Analysis of collisions at sea using the HFACS. Accid. Anal. Prev. 2013, 59, 26–37.

- Liu, R.; Cheng, W.; Yu, Y.; Xu, Q. Human factors analysis of major coal mine accidents in China based on the HFACS-CM model and AHP method. Int. J. Ind. Ergon. 2018, 68, 270–279.

- Madigan, R.; Golightly, D.; Madders, R. Application of Human Factors Analysis and Classification System (HFACS) to UK rail safety of the line incidents. Accid. Anal. Prev. 2016, 97, 122–131.

- Chen, S.-T.; Wall, A.; Davies, P.; Yang, Z.; Wang, J.; Chou, Y.-H. A Human and Organisational Factors (HOFs) analysis method for marine casualties using HFACS-Maritime Accidents (HFACS-MA). Saf. Sci. 2013, 60, 105–114.

- Theophilus, S.C.; Esenowo, V.N.; Arewa, A.O.; Ifelebuegu, A.O.; Nnadi, E.O.; Mbanaso, F.U. Human factors analysis and classification system for the oil and gas industry (HFACS-OGI). Reliab. Eng. Syst. Saf. 2017, 167, 168–176.

- Wu, C.; Huang, L. A new accident causation model based on information flow and its application in Tianjin Port fire and explosion accident. Reliab. Eng. Syst. Saf. 2019, 182, 73–85.

- Luo, T.; Wu, C. Safety information cognition: A new methodology of safety science in urgent need to be established. J. Clean. Prod. 2019, 209, 1182–1194.

- Tong, R.; Yang, Y.; Ma, X.; Zhang, Y.; Li, S.; Yang, H. Risk Assessment of Miners’ Unsafe Behaviors: A Case Study of Gas Explosion Accidents in Coal Mine, China. Int. J. Environ. Res. Public Health 2019, 16, 1765.

- Palla, G.; Derenyi, I.; Farkas, I.; Vicsek, T. Uncovering the overlapping community structure of complex networks in nature and society. Nature 2005, 435, 814–818.

- Barrat, A.; Barthelemy, M.; Pastor-Satorras, R.; Vespignani, A. The architecture of complex weighted networks. Proc. Natl. Acad. Sci. USA 2004, 101, 3747–3752.

- Xu, Z.; Jiang, X. Safety System Engineering; China Machine Press: Beijing, China, 2012.

- Milo, R.; Shen-Orr, S.; Itzkovitz, S.; Kashtan, N.; Chklovskii, D.; Alon, U. Network motifs: Simple building blocks of complex networks. Science 2002, 298, 824–827.

- Zhonghua, G.; Hui, J.; Wn, Y. The Influencing Mechanism and Effectiveness Evaluation of Safety Supervision Mode on Accidents. J. Public Manag. 2021, 18, 63–77.

- Leveson, N. A new accident model for engineering safer systems. Saf. Sci. 2004, 42, 237–270.

- Zhang, G.; Feng, W. The Multi-level Asymmetric Model and Its Optimization of Safety Information Processing from the Perspective of the Social-technical System. J. Intell. 2021, 40, 122–127+178.

- Li, H.-M.; Wang, X.-C.; Zhao, X.-F.; Qi, Y. Understanding systemic risk induced by climate change. Adv. Clim. Change Res. 2021, 12, 384–394.

- Lingard, H.; Pirzadeh, P.; Oswald, D. Talking Safety: Health and Safety Communication and Safety Climate in Subcontracted Construction Workgroups. J. Constr. Eng. Manag. 2019, 145, 1–11.

- Wang, B.; Wu, C. Safety-Related Information Provision: The Key to Reducing the Lack of Safety-Related Information. J. Intell. 2018, 37, 146–153.

- Chen, Y.; Cheng, S.; Feng, W.; Duan, L.; Jiang, Z. Research on safety information feedback and its failure mechanism. China Saf. Sci. J. 2020, 30, 30–36.

- Hale, A.R.; Swuste, P. Safety rules: Procedural freedom or action constraint? Saf. Sci. 1998, 29, 163–177.

- Hale, A.; Borys, D.; Adams, M. Safety regulation: The lessons of workplace safety rule management for managing the regulatory burden. Saf. Sci. 2015, 71, 112–122.

- Laurence, D. Safety rules and regulations on mine sites—The problem and a solution. J. Saf. Res. 2005, 36, 39–50.

- Chen, H.; Qi, H.; Feng, Q. Characteristics of direct causes and human factors in major gas explosion accidents in Chinese coal mines: Case study spanning the years 1980–2010. J. Loss Prev. Process Ind. 2013, 26, 38–44.

- Meng, X.; Liu, Q.; Luo, X.; Zhou, X. Risk assessment of the unsafe behaviours of humans in fatal gas explosion accidents in China’s underground coal mines. J. Clean. Prod. 2019, 210, 970–976.

- Mika, P. Social Network Analysis. In Social Networks and the Semantic Web; IEEE: Piscataway, NJ, USA, 2007.

- Li, S.; Wu, C.; Wang, B. Study on model for accident resulting from multilevel safety information asymmetry. China Saf. Sci. J. 2017, 27, 18–23.

- Wang, B.; Wu, C.; Shi, B.; Huang, L. Evidence-based safety (EBS) management: A new approach to teaching the practice of safety management (SM). J. Saf. Res. 2017, 63, 21–28.

- Ross, W.; Gorod, A.; Ulieru, M. A Socio-Physical Approach to Systemic Risk Reduction in Emergency Response and Preparedness. IEEE Trans. Syst. Man Cybern. Syst. 2015, 45, 1125–1137.

- State Council of the People’s Republic of China. Report on Production Safety Accident and Regulations of Investigation and Treatment. In Proceedings of the 172nd Executive Meeting of The State Council, Beijing, China, 28 March 2007.

| Causation Link | Causative Analysis of Operator’s Behavior Error | Causative Analysis of Safety Manager’s Behavior Error |
|---|---|---|
| Human | A1: bad psychology; A2: adverse physiology; A3: defects of knowledge; A4: defects of skill; A5: physical/mental defects; A6: bad habit; A7: inadequate personal preparation. | a1: bad psychology; a2: adverse physiology; a3: defects of knowledge; a4: defects of skill; a5: physical/mental defects; a6: bad habit; a7: inadequate personal preparation. |
| Human ⟷ Human | B1: failure of SIF; B2: failure of SIS; B3: failure of SIE; B4: weak supervision. | b1: failure of SIF; b2: failure of SIS; b3: failure of SIE. |
| Environment → Human | C1: unsafe physical environment; C2: unsafe technical environment; C3: unsafe cultural environment. | c1: unsafe physical environment; c2: unsafe technical environment; c3: unsafe cultural environment. |
| Matters → Human | D1: lack of safety matters; D2: failure of safety matters. | d1: lack of safety matters; d2: failure of safety matters. |
| Enterprise → Human | E1: weak supervision; E2: unreasonable access and arrangement. | e1: weak supervision; e2: improper plan; e3: failure to correct problems in time. |
| Environment ⟷ Matters | F1: unsafe physical environment; F2: lack of safety matters; F3: failure of safety matters. | f1: unsafe physical environment; f2: lack of safety matters; f3: failure of safety matters. |
| Human → Matters | G1: the action of damaging safety matters; G2: poor protection of safety matters. | g1: the action of damaging safety matters; g2: poor protection of safety matters. |
| Enterprise → Matters | H1: under-investment in safety; H2: safety resources are improperly allocated; H3: inapplicable safety matters. | h1: under-investment in safety; h2: safety resources are improperly allocated; h3: inapplicable safety matters. |
| Human → Environment | I1: the action of damaging safety environment; I2: poor protection of safety environment. | i1: the action of damaging safety environment; i2: poor protection of safety environment. |
| Enterprise → Environment | J1: insufficient constraints on the safety environment. | j1: insufficient constraints on the safety environment. |
| Government → Enterprise | K1: weak supervision; K2: regulatory violations. | k1: weak supervision; k2: regulatory violations. |
| Matters → Matters | L1: cascading effect between matters. | l1: cascading effect between matters. |

| Node | Causation Factor | Node | Causation Factor |
|---|---|---|---|
| A1 | Fluke psychology, experience psychology, neglect psychology, lack of motivation, and so on. | E1 | Coal mine enterprises have insufficient supervision of dispatching personnel. |
| A2 | Acute physical conditions, such as physical discomfort. | E2 | Mining companies employ unqualified gas inspectors. |
| A3 | Lack of professional knowledge and system cognition about disaster prevention and mitigation of gas explosion. | F1 | High temperature, humidity, and dust cause device failure. |
| A4 | Usage error of test instrument; screening error of potential hazard; system execution capacity is insufficient. | F2 | Lack of equipment to regulate the working environment, such as air conditioning. |
| A5 | Permanent physical or mental disability, such as organ defects, intelligence, etc. | F3 | The equipment regulating the working environment is damaged or aging. |
| A6 | Spontaneous unsafe behavior, such as poor testing habits. | G1 | Human behavior causes damage to air conditioners, gas ventilation, and monitoring equipment. |
| A7 | Inadequate mental and physical preparation, such as rest, alcohol restriction, etc. | G2 | Insufficient maintenance and protection of safety facilities by managers. |
| B1 | Other personnel did not give timely notice of behavioral defects in gas monitoring. | H1 | Enterprises have not invested enough in gas drainage, monitoring equipment, and other equipment to regulate the environment, resulting in insufficient quantity and poor efficiency. |
| B2 | Safety education and training are inadequate. | H2 | Equipment is unevenly distributed among coal mines. |
| B3 | The communication of potential hazard identification, equipment status, and other fuzzy information is delayed or invalid. | H3 | Safety facilities are not fully applicable, such as fire extinguishers. |
| B4 | Lack of adequate supervision and restraint on their behavior. | I1 | Individual neglect of safety affects the safety climate. |
| C1 | Physical effects of temperature, noise, etc. | I2 | Insufficient maintenance and protection of safety environment by managers. |
| C2 | The design and display of instruments, equipment and facilities are not conducive to the smooth development of behaviors. | J1 | The overall organizational climate of culture, policy, and strategic direction is lacking. |
| C3 | Lack of safety climate. | K1 | Inappropriate oversight and supervision of personnel and resources. |
| D1 | Gas drainage, monitoring, and other equipment is insufficient. | K2 | Management willfully flouted procedures, regulations, and policies. |
| D2 | Gas drainage and monitoring equipment are damaged or outdated. | L1 | Damage to the physical structure leads to the failure of other physical structure functions, such as a power outage that leads to the failure of equipment throughout the mine. |

| Individuals | Density | Average Shortest Path | Clustering Coefficient |
|---|---|---|---|
| Blasting workers | 0.1411 | 1.333 | 0.867 |
| Ventilating workers | 0.0907 | 1.394 | 0.956 |
| Tunneling workers | 0.1885 | 1.451 | 0.819 |
| Gas inspectors | 0.1179 | 1.346 | 0.973 |
| Managers | 0.1623 | 1.456 | 0.834 |
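
The three whole-network indicators in the table above (density, average shortest path, and clustering coefficient) can be illustrated with a minimal pure-Python sketch. It assumes an undirected, unweighted graph and uses one common definition of each indicator; the rows above do not show which software or conventions the authors used. The node labels are borrowed from the causation-factor table, but the edges in `EDGES` are hypothetical and are not the paper's accident data.

```python
from collections import deque
from itertools import combinations

# Hypothetical toy edges; labels come from the causation-factor table,
# but these links are NOT taken from the paper's accident data.
EDGES = [("K1", "E1"), ("E1", "A1"), ("E1", "A3"), ("A1", "A3"), ("E1", "D1")]


def build_adjacency(edges):
    """Return an undirected adjacency map {node: set(neighbours)}."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def density(adj):
    """Fraction of all possible undirected edges that are present."""
    n = len(adj)
    m = sum(len(nbrs) for nbrs in adj.values()) / 2
    return 2 * m / (n * (n - 1)) if n > 1 else 0.0


def bfs_distances(adj, source):
    """Hop counts from source to every node reachable from it."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def average_shortest_path(adj):
    """Mean hop count over all ordered pairs of distinct, connected nodes."""
    total, pairs = 0, 0
    for s in adj:
        for t, d in bfs_distances(adj, s).items():
            if t != s:
                total += d
                pairs += 1
    return total / pairs if pairs else 0.0


def clustering_coefficient(adj):
    """Average, over nodes, of the share of neighbour pairs that are linked."""
    scores = []
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            scores.append(0.0)  # nodes with fewer than two neighbours score 0
            continue
        links = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
        scores.append(2 * links / (k * (k - 1)))
    return sum(scores) / len(scores)
```

On this toy graph, `density(build_adjacency(EDGES))` evaluates to 0.5 and `average_shortest_path` to 1.5; these numbers only illustrate the definitions and are not comparable to the values reported for the accident networks.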

| Individuals | Degree Centrality | Betweenness Centrality | Edge Betweenness Centrality |
|---|---|---|---|
| Blasting workers | K1, A4, E1, B2, E2 | E1, K1, A4, J1, A1 | E1-D1 (5), E1-A1 (4.833), E1-A3 (4.833), B4-A4 (3) |
| Ventilating workers | K1, E1, A3, D1, H1 | K1, E1, D1, A3, E2 | K1-B1 (4.2), E1-B2 (4.033), K1-E2 (3.95), K1-B2 (3.833) |
| Tunneling workers | K1, E1, C3, A1, A3 | B2, K1, C3, A1, E1 | K2-B2 (8.588), K2-A3 (4.263), K2-A4 (4.149), J1-D1 (3.533) |
| Gas inspectors | K1, A3, E2, A4, A1 | K1, E2, J1, A3, A1 | K1-D1 (4.024), K1-H1 (4.024), J1-D1 (3.524), J1-H1 (3.524) |
| Managers | k1, a1, a3, c3, e1 | a1, k1, c3, a3, a4 | i2-a1 (10.617), i1-c3 (8.267), k1-a6 (6.242), k1-b2 (5.875) |
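
The node and edge rankings in the table above rest on degree centrality, betweenness centrality, and edge betweenness centrality. The sketch below shows one way such scores could be computed for a directed, unweighted network, using Brandes' accumulation scheme for betweenness. It is an illustration under stated assumptions, not the authors' procedure: the edges in `EDGES` are hypothetical, the function names are invented for this example, and the betweenness values are raw (unnormalized) counts because the rows above do not state a normalization.

```python
from collections import deque

# Hypothetical directed toy edges (cause -> effect); NOT the paper's data.
EDGES = [("K1", "E1"), ("E1", "A1"), ("E1", "A3"), ("A1", "A4"), ("A3", "A4")]


def build_digraph(edges):
    """Return {node: set(successors)}, with every node present as a key."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set())
    return adj


def degree_centrality(adj):
    """(in-degree + out-degree) / (n - 1), one common convention."""
    n = len(adj)
    degree = {v: len(succ) for v, succ in adj.items()}  # out-degree
    for succ in adj.values():
        for w in succ:
            degree[w] += 1  # add in-degree
    return {v: (d / (n - 1) if n > 1 else 0.0) for v, d in degree.items()}


def brandes_betweenness(adj):
    """Raw node and edge betweenness over all ordered source-target pairs."""
    node_bc = {v: 0.0 for v in adj}
    edge_bc = {}
    for s in adj:
        sigma = {v: 0 for v in adj}  # number of shortest paths from s
        sigma[s] = 1
        dist = {s: 0}
        preds = {v: [] for v in adj}  # predecessors on shortest paths
        order = []  # nodes in order of non-decreasing distance from s
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Back-propagate dependencies from the farthest nodes toward s.
        delta = {v: 0.0 for v in adj}
        for w in reversed(order):
            for v in preds[w]:
                share = sigma[v] / sigma[w] * (1 + delta[w])
                edge_bc[(v, w)] = edge_bc.get((v, w), 0.0) + share
                delta[v] += share
            if w != s:
                node_bc[w] += delta[w]
    return node_bc, edge_bc
```

Sorting the returned dictionaries by value in descending order yields ranked lists of nodes and edges in the same spirit as the table. On the toy graph, node `E1` has a raw betweenness of 3 and the edge `K1 → E1` has an edge betweenness of 4, since every shortest path leaving `K1` passes through that edge.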

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, G.; Feng, W.; Lei, Y. Human Factor Analysis (HFA) Based on a Complex Network and Its Application in Gas Explosion Accidents. Int. J. Environ. Res. Public Health 2022, 19, 8400. https://doi.org/10.3390/ijerph19148400
