1. Introduction

Because electricity is difficult to store, accurate load forecasting plays an important role in the effective management of a power utility's operations, such as unit commitment, short-term maintenance, network power flow dispatch optimization, and security strategies. Inaccurate load forecasting, on the other hand, increases operating costs: over-forecasted loads lead to unnecessary reserve costs and an excess supply in the international energy networks, while under-forecasted loads result in high expenditures on peaking units. It is therefore essential that every utility forecast its demand accurately.

Many approaches, methodologies, and models have recently been proposed in the literature to improve forecasting accuracy. For example, Li et al. [1] propose a computationally efficient approach to forecast the quantiles of electricity load in the National Electricity Market of Australia. Arora and Taylor [2] present a case study on short-term load forecasting in France, incorporating a rule-based methodology to generate forecasts for normal and special days, and a seasonal autoregressive moving average (SARMA) model to deal with the intraday, intraweek, and intrayear seasonality in load. Takeda et al. [3] propose a novel framework for electricity load forecasting by combining the Kalman filter technique with multiple regression methods; Zhao and Guo [4] propose a hybrid optimized grey model (Rolling-ALO-GM (1,1)) to improve the accuracy of annual load forecasting. Regarding applications of neural networks in load forecasting, the authors of references [5,6,7,8,9] have proposed several useful short-term load forecasting models. Regarding the hybridization of popular methods with evolutionary algorithms, the authors of references [10,11,12,13,14] have demonstrated that forecasting performance can be improved successfully. These methods can achieve clear improvements in forecasting accuracy in some cases; however, the interpretability of the model should also be taken into account, as mentioned in [15]. Furthermore, most of these models depend strongly on experts' experience, and they often cannot guarantee satisfactory forecasting accuracy. Therefore, it is essential to propose a combined model, which hybridizes popular methods with advanced evolutionary algorithms and also combines expert systems and other techniques, to achieve high forecasting accuracy and interpretability simultaneously.

Due to advanced higher dimensional mapping ability of kernel functions, support vector regression (SVR) is drastically applied to deal with the forecasting problem, which is with small but high dimensional data size. SVR has been an empirically popular model to provide satisfied performances in many forecasting problems [

16,

17,

18]. As it is known that the biggest disadvantage of a SVR model is the premature problem, i.e., suffering from local minimum when evolutionary algorithms are used to determine its parameters. In addition, its robustness also could not receive a satisfied stable level. To look for effective novel algorithms or hybrid algorithms to avoid trapping into local minimum, and to simultaneously receive satisfied robustness is still the very hot point in the SVR modeling research fields [

19]. In the meanwhile, to improve the forecasting accurate level, it is essential to extract the original data set with nonlinear or nonstationary components [

20] and transfer them into single and conspicuous ones. The empirical mode decomposition (EMD) is dedicated to provide extracted components to demonstrate high accurate clustering performances, and it has also received lots of attention in relevant applications fields, such as communication, economics, engineering, and so on [

21,

22,

23]. As aforementioned, the EMD could be applied to decompose the data set into some high frequency detailed parts and the low frequent approximate part. Therefore, it is easy to reduce the interactions among those singular points, thereby increasing the efficiency of the kernel function. It becomes a useful technique to help the kernel function to deal well with the tendencies of the data set, including the medium trends and the long term trends. For determining the values of the parameters in a SVR model well, it attracts lots of relevant researches during two past decades. The most effective approach is to employ evolutionary algorithms, such as GA [

24,

25], PSO [

26,

27], and so on [

28,

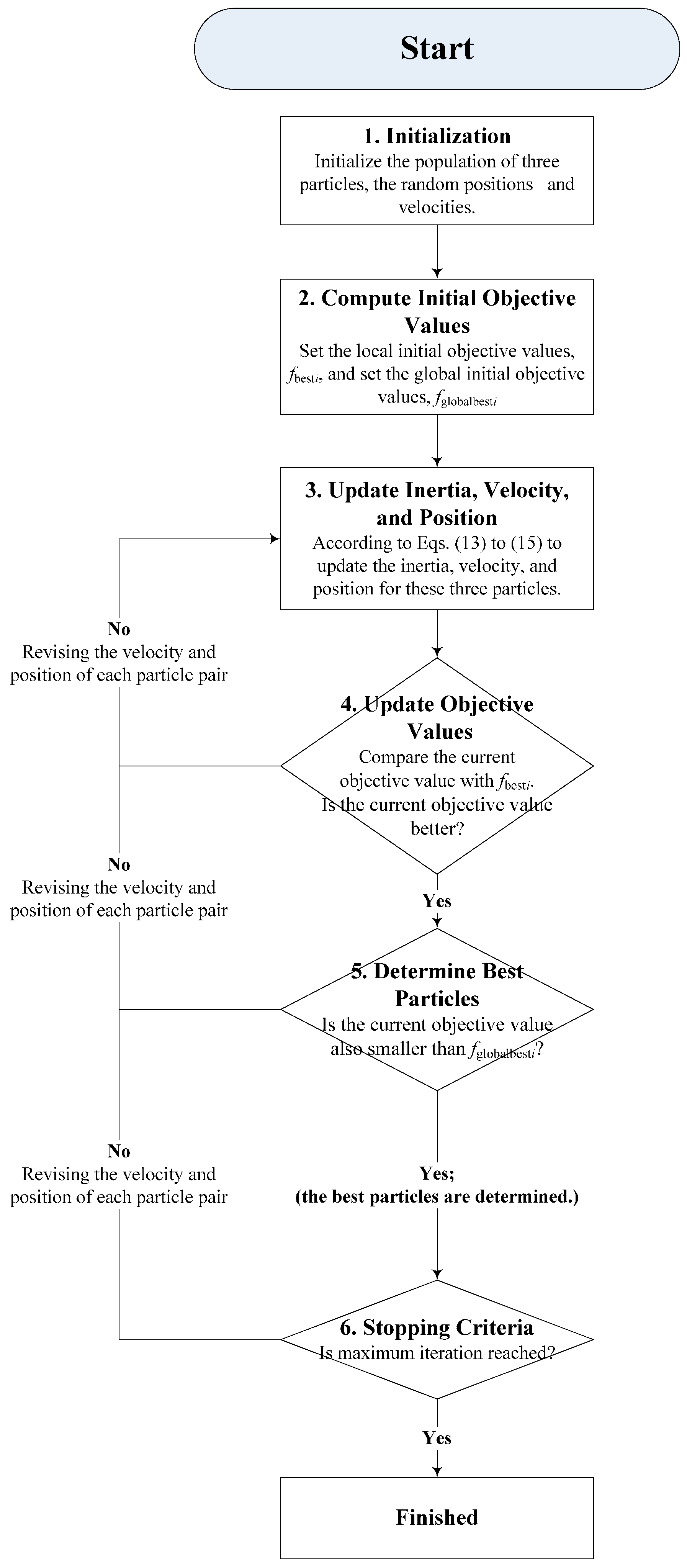

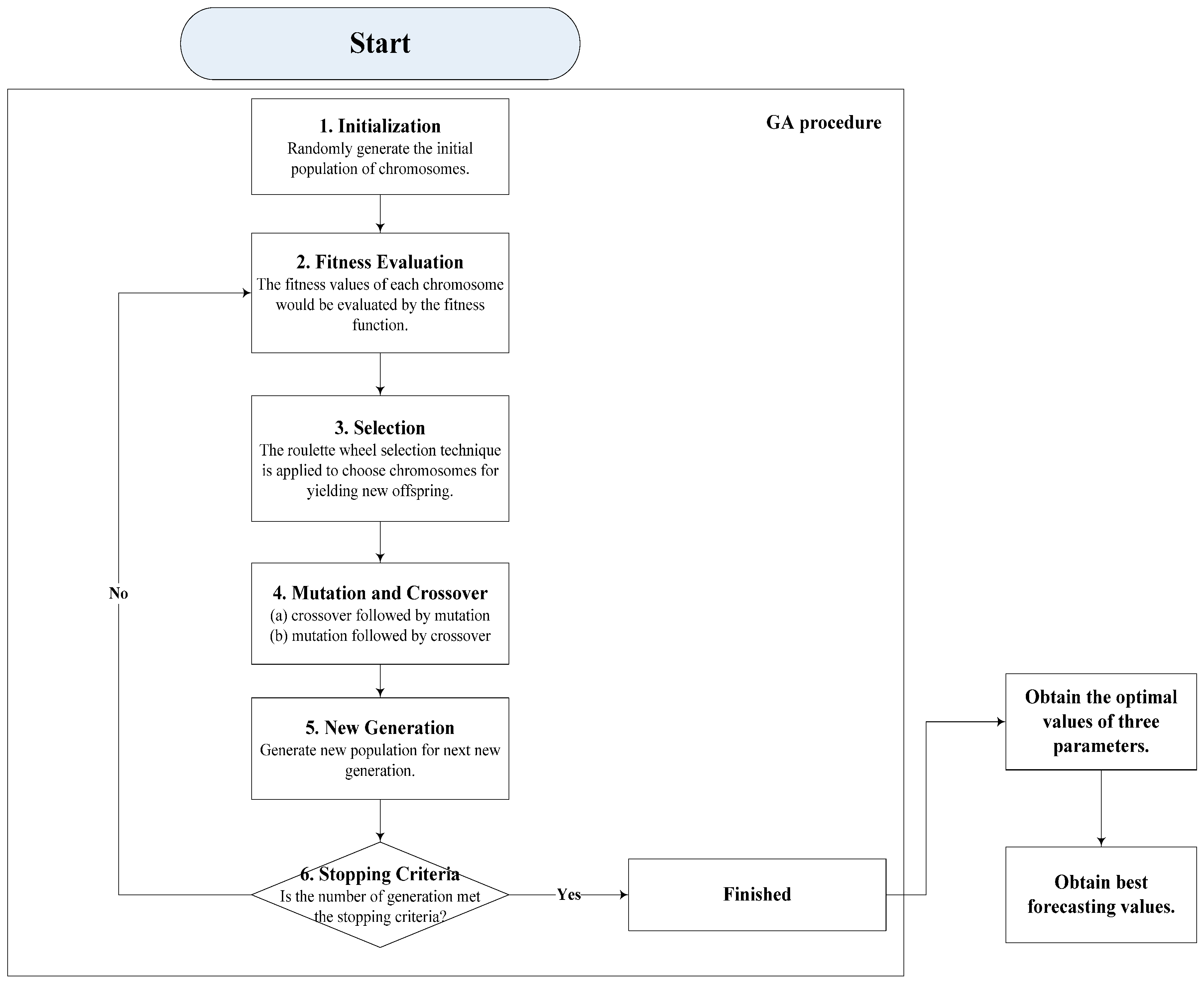

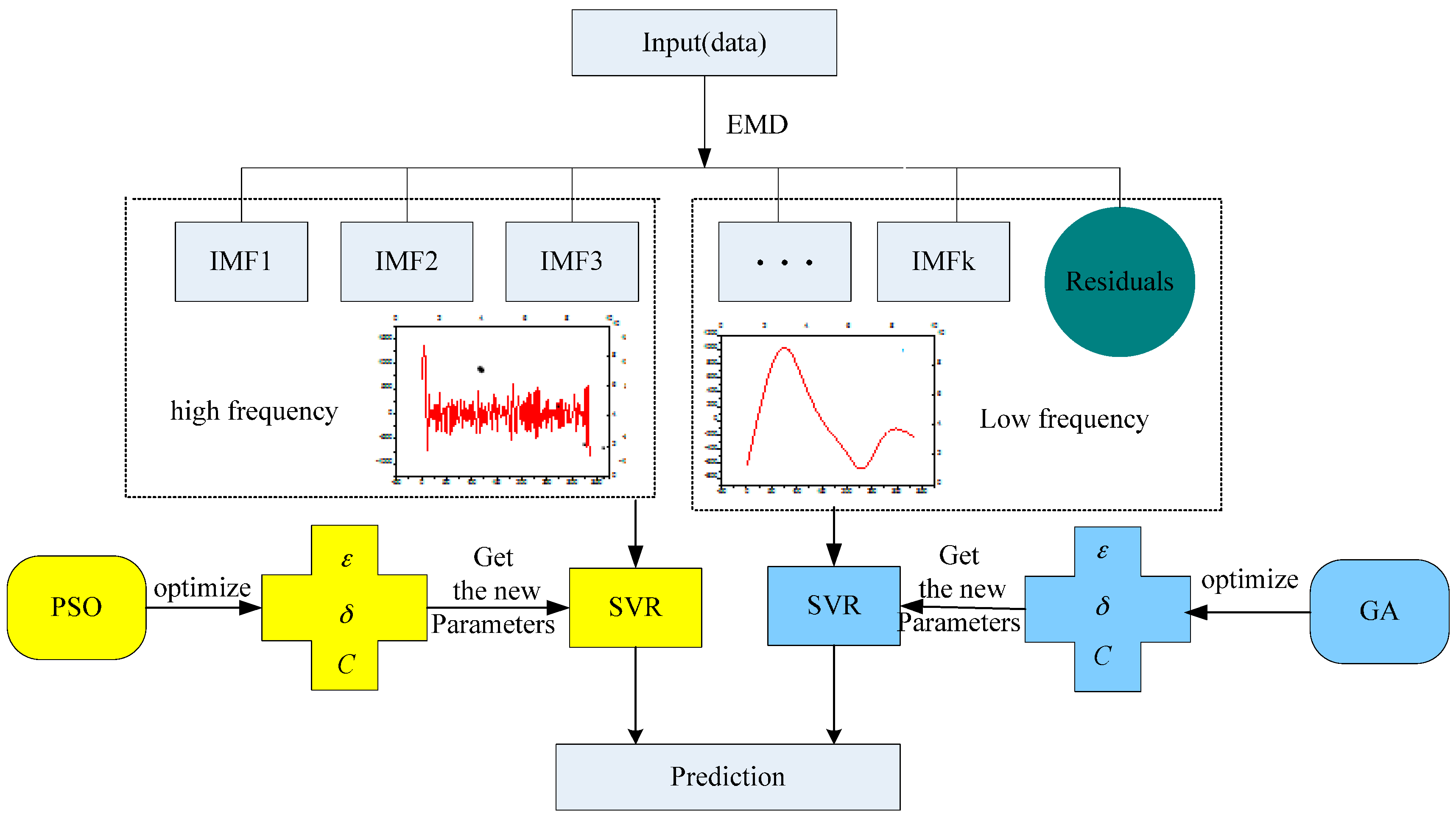

29]. Based on the authors’ empirical experiences in applying the evolutionary algorithms, PSO is more suitable for solving real problems (with more details of data set), it is simple to be implemented, its shortcoming is trapped in the local optimum. For GA, it is more suitable for solving discrete problems (data set reveals stability), however one of its drawbacks is Hamming cliffs. In this paper, the data set will be divided into two parts by EMD (i.e., higher frequent detail parts and the lower frequent part), the higher frequency part is the so-called shock data which demonstrates the details of the data set, thus, SVR’s parameters for this part are suitable to be determined by PSO due to its suitable for solving real problems. The lower frequency part is the so-called contour trend data which reveals its stability, thus, SVR’s parameters for this part could be selected by GA due to its suitable for solving discrete problems.
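The Hamming cliff drawback mentioned for GA can be made concrete with a small sketch. It assumes a plain binary encoding of integers, which the text does not specify; the helper names are hypothetical, and the Gray code is shown only for contrast and is not part of the proposed model.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which the binary codes of a and b differ."""
    return bin(a ^ b).count("1")


def gray_code(n: int) -> int:
    """Reflected binary Gray code of n (for contrast only)."""
    return n ^ (n >> 1)


# Under plain binary encoding, the adjacent integers 7 (0111) and 8 (1000)
# differ in all four bits, so a GA would need four simultaneous bit flips
# to move from one to the other: this is a Hamming cliff.
cliff = hamming_distance(7, 8)

# Under Gray coding, adjacent integers differ in exactly one bit.
gray_step = hamming_distance(gray_code(7), gray_code(8))
```

Here `cliff` evaluates to 4 and `gray_step` to 1, which illustrates why a small change in a parameter value can be hard for a binary-coded GA to reach by mutation alone.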

Therefore, in this paper, the two parts produced by EMD are modeled by SVR-PSO and SVR-GA, respectively, and the improved forecasting performance of the proposed model (namely, the EMD-PSO-GA-SVR model) is demonstrated. The overall framework is as follows: (1) the data set is divided by EMD into a high frequency part with more detailed information and a low frequency part with more tendency information; (2) for the high frequency part, PSO is used to determine the SVR parameters, i.e., SVR-PSO is implemented to achieve higher forecasting accuracy; (3) for the low frequency part, owing to the stationary characteristics of the tendency information, GA is employed to select suitable parameters for the SVR model, i.e., SVR-GA is implemented to forecast; and (4) the final forecasting results are obtained from steps (2) and (3). The proposed EMD-PSO-GA-SVR model also has several advantages: (1) it is able to smooth and reduce noise effects, an ability inherited from the EMD technique; (2) it is capable of filtering the data set with detailed information and improving microscopic forecasting accuracy by applying PSO with the SVR model; and (3) it is also capable of capturing the macroscopic outline and providing accurate forecasts of future tendencies, an ability inherited from GA. The forecasting processes and results are demonstrated in the following sections.

To demonstrate the advantages and suitability of the proposed model, 30-min electricity loads (i.e., 48 data points collected daily) from New South Wales are selected to construct the model and to compare its forecasting accuracy with that of other competitive models, namely, the original SVR model, the SVR-PSO model (SVR parameters determined by PSO), the SVR-GA model (SVR parameters selected by GA), and the AFCM model (an adaptive fuzzy model based on a self-organizing map). The second example is from the New York Independent System Operator (NYISO, New York, NY, USA); similarly, 1-h electricity loads (i.e., only 24 data points collected daily) are collected for modeling and for comparing forecasting accuracy. The results demonstrate that the proposed EMD-PSO-GA-SVR model achieves higher forecasting accuracy and more comprehensive interpretability. In addition, by employing the EMD technique, the proposed model can consider more information during the modeling process; thus, it generalizes better.

The remainder of this paper is organized as follows. Section 2 provides the modeling details of the proposed EMD-PSO-GA-SVR model. Section 3 describes the data sets and the relevant modeling design. Section 4 investigates the forecasting results, compares them with those of other competitive models, and provides some insightful discussion. Section 5 concludes the study.

3. Experimental Examples

Two experimental examples are used to demonstrate the advantages of the proposed model in terms of applicability, superiority, and generality. The data of the first example (Example 1, in Section 3.1) are obtained from the Australian New South Wales (NSW) electricity market; the data of the second example (Example 2, in Section 3.2) are from the American New York Independent System Operator (NYISO). Furthermore, to demonstrate the overtraining effect for different data sizes, two data sizes, i.e., a small data size and a large data size, are employed for modeling and analysis.

To ensure the feasibility of employing EMD to decompose the target data sets (both Examples 1 and 2) into higher and lower frequency parts, it is necessary to verify whether they are nonlinear and non-stationary. This paper applies recurrence plot (RP) theory [36,37] to analyze these two characteristics. An RP reveals all of the times at which the phase space trajectory of a dynamical system visits roughly the same area of the phase space; therefore, it is suitable for analyzing the nonlinear and non-stationary characteristics of a data set. The RP analysis for Examples 1 and 2, shown in Figure 4a,b, indicates the following: (1) parallel diagonal lines are clearly visible in both figures, i.e., both data sets are periodic and deterministic, which is the reason that these data sets can be used for forecasting; (2) checkerboard structures are also clearly visible in both figures, i.e., after a transient, both data sets go into slow oscillations that are superimposed on the chaotic motion; and (3) vertical and horizontal lines that cross each other are clearly visible, i.e., both data sets reveal laminar states, which indicate non-stationary characteristics. The recurrence rate (a lower rate implies the nonlinear characteristic) and the laminarity (a smaller value represents the non-stationary characteristic) for these two data sets also support the RP analysis results above. Based on the RP analysis, both electricity load data sets demonstrate a macroscopic periodic tendency (i.e., the lower frequency part) and a microscopic chaotic oscillation tendency (i.e., the higher frequency part); therefore, it is useful to employ EMD to decompose these two data sets into higher and lower frequency parts.
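To illustrate how a recurrence plot and its recurrence rate are computed, the following is a minimal sketch under stated assumptions: a time-delay embedding with the maximum norm, an embedding dimension of 2, and a threshold `eps` chosen arbitrarily. The toy sine signal and all function names are hypothetical; only the delay of 4 is taken from the text, and laminarity is not computed here.

```python
import math


def embed(series, dim, delay):
    """Time-delay embedding: state i is (x[i], x[i+delay], ..., x[i+(dim-1)*delay])."""
    n = len(series) - (dim - 1) * delay
    return [[series[i + k * delay] for k in range(dim)] for i in range(n)]


def recurrence_matrix(series, dim=2, delay=4, eps=0.1):
    """R[i][j] = 1 when embedded states i and j lie within eps (maximum norm)."""
    states = embed(series, dim, delay)
    n = len(states)
    return [
        [1 if max(abs(a - b) for a, b in zip(states[i], states[j])) <= eps else 0
         for j in range(n)]
        for i in range(n)
    ]


def recurrence_rate(matrix):
    """Fraction of recurrent points in the recurrence matrix."""
    n = len(matrix)
    return sum(map(sum, matrix)) / (n * n)


# Hypothetical toy signal with a 48-sample period (not the NSW or NYISO data)
load = [math.sin(2 * math.pi * t / 48) for t in range(192)]
rp = recurrence_matrix(load, dim=2, delay=4, eps=0.1)
rr = recurrence_rate(rp)
```

For a periodic signal such as this one, the ones in `rp` line up along diagonals offset by multiples of the period, which corresponds to the parallel diagonal lines described above.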

3.1. The Forecasting Results of Example 1

3.1.1. Data Sets for Small and Large Sizes

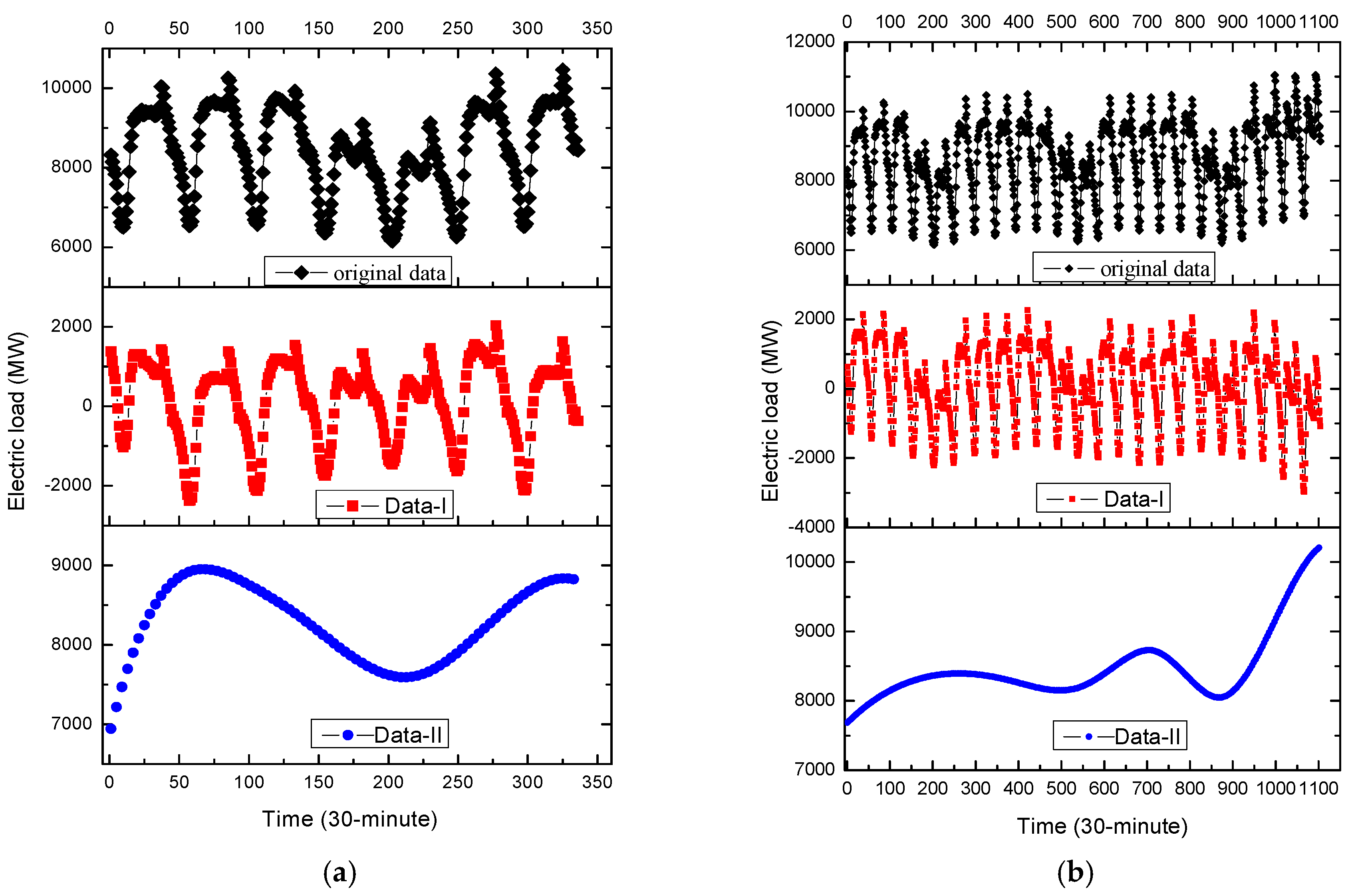

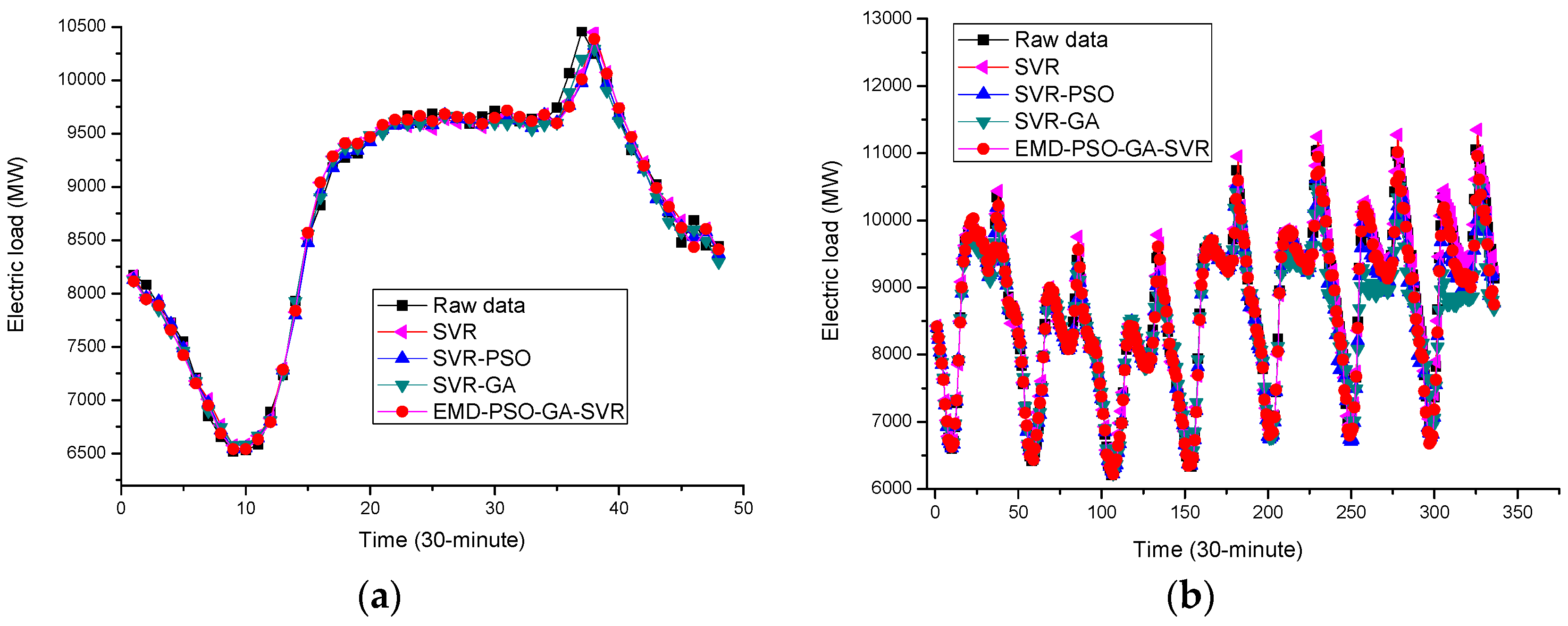

In Example 1, for the small data size, there are 336 electricity load data points in total, recorded every 30 min over seven days, i.e., from 2 to 8 May 2007. Of these, the first 288 load data are used as the training data set, and the last 48 load data are used as the testing data set. The original data set is shown in Figure 5a.

For the large data size, there are 1104 electricity load data points in total, from 2 to 24 May 2007, on a 30-min scale. Of these, the first 768 load data (i.e., from 2 to 17 May 2007) are used as the training data set, and the remaining 336 load data are used as the testing data set. The original data set is shown in Figure 5b.
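The sample counts above follow from 48 half-hourly readings per day, and the split is chronological. A minimal sketch of that arithmetic is given below; the function name is hypothetical and placeholder indices stand in for the actual load values.

```python
def chronological_split(series, n_train):
    """Split a time series into a leading training part and a trailing testing part."""
    return series[:n_train], series[n_train:]


SAMPLES_PER_DAY = 48  # one reading every 30 min

# Placeholder indices stand in for the actual load values
small = list(range(7 * SAMPLES_PER_DAY))    # 2 to 8 May 2007 (7 days)
large = list(range(23 * SAMPLES_PER_DAY))   # 2 to 24 May 2007 (23 days)

# Small size: 6 days for training, 1 day for testing
small_train, small_test = chronological_split(small, 6 * SAMPLES_PER_DAY)
# Large size: 16 days (2 to 17 May) for training, 7 days for testing
large_train, large_test = chronological_split(large, 16 * SAMPLES_PER_DAY)
```

The resulting lengths are 288 and 48 for the small data size and 768 and 336 for the large data size, matching the figures stated in the text.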

3.1.2. Decomposition Results by EMD

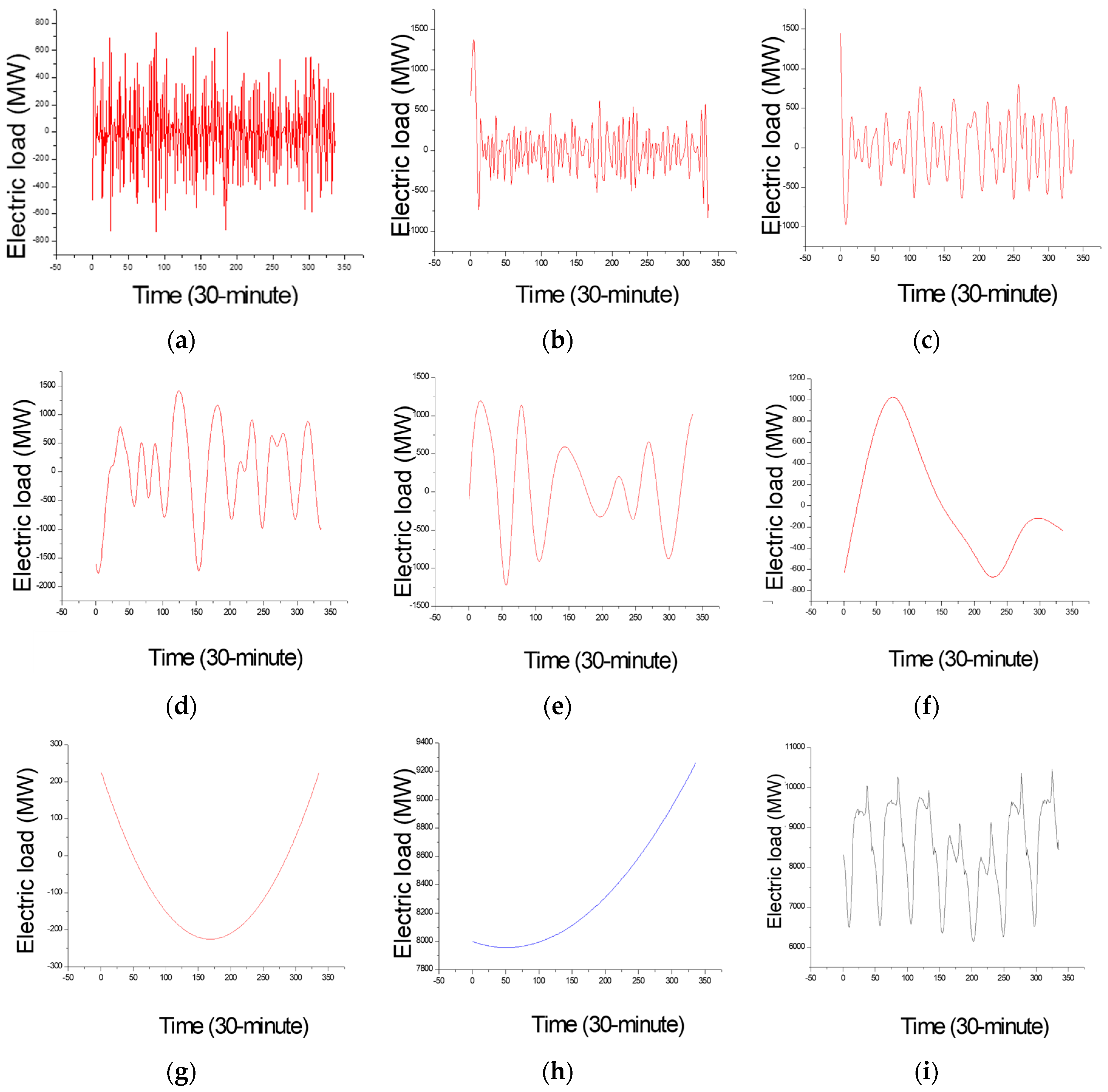

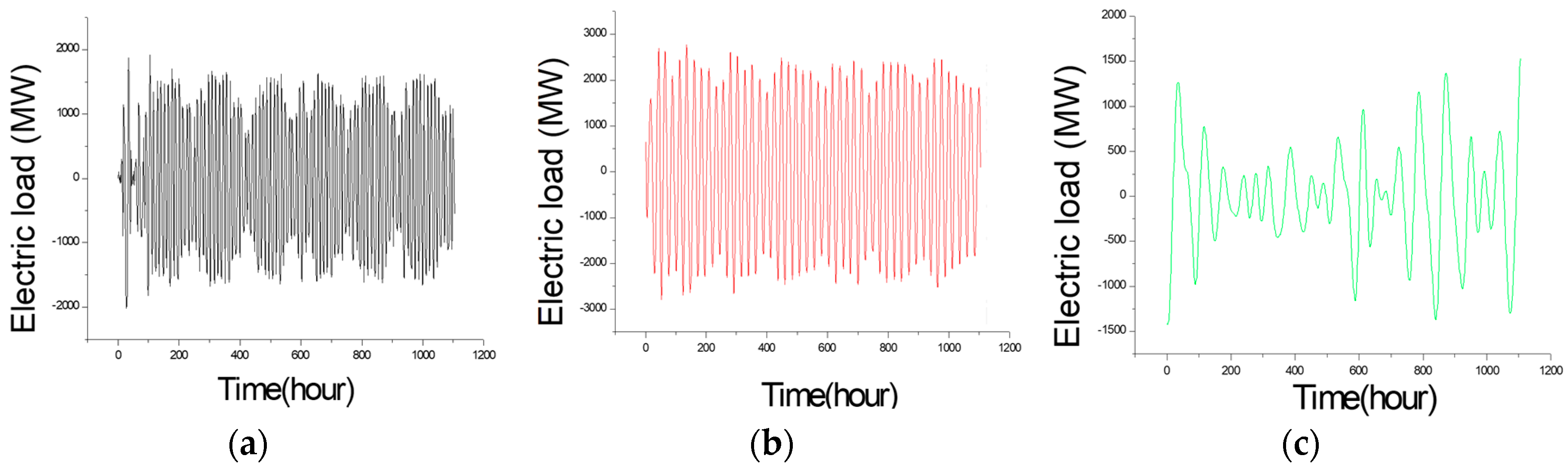

EMD is employed to decompose the original data set into higher and lower frequency items. For the small data size, the data set is divided into eight groups, as shown in Figure 6a–h. Meanwhile, the time delay of the data set in the RP analysis (whose value is 4) is also considered when selecting the higher and lower frequency parts. Consequently, the first four groups, which have higher frequencies, are classified as higher frequency items; the following four groups, which have lower frequencies, are classified as lower frequency items. The last figure represents the original data, and Figure 6h represents the trend term (i.e., residuals). As also shown in Figure 5a,b, the fluctuation characteristics of the higher frequency item (namely, Data-I) are almost the same as those of the original data; in contrast, the macrostructure of the lower frequency item (namely, Data-II) is more stable. Data-I and Data-II are further analyzed by the SVR-PSO and SVR-GA models, respectively, to obtain satisfactory regression results. The details are given in the following sub-sections.
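The grouping step can be sketched as follows, assuming that Data-I and Data-II are formed by summing the components in each group; the text does not state how the groups are aggregated, so the summation is an assumption. The eight components here are synthetic stand-ins (seven sine modes ordered from high to low frequency plus a linear trend), not the output of an actual EMD, and `group_components` is a hypothetical helper.

```python
import math


def group_components(components, n_high):
    """Sum the first n_high components into Data-I and the rest into Data-II."""
    length = len(components[0])
    data_i = [sum(c[t] for c in components[:n_high]) for t in range(length)]
    data_ii = [sum(c[t] for c in components[n_high:]) for t in range(length)]
    return data_i, data_ii


n = 336  # length of the small data set
# Synthetic stand-ins for the eight decomposition outputs
modes = [[math.sin(2 * math.pi * t / (6 * 2 ** k)) for t in range(n)]
         for k in range(7)]
trend = [0.01 * t for t in range(n)]
components = modes + [trend]
original = [sum(c[t] for c in components) for t in range(n)]

# First four groups form Data-I, remaining four (including the trend) form Data-II
data_i, data_ii = group_components(components, n_high=4)
```

Under this assumption, Data-I and Data-II add back up to the original series, so forecasts produced separately for the two parts can be combined by summation.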

3.1.3. SVR-PSO for Data-I

As shown in Figure 5, Data-I exhibits an almost stable periodicity of 24 h, which is consistent with the patterns of people's production and daily life. It also reflects the details of continuous changes. In this sub-section, the SVR model is employed to forecast Data-I; as is well known, SVR requires evolutionary algorithms to determine its parameters appropriately in order to improve its forecasting accuracy. Because PSO is capable of solving the parameter determination problem for data sets with such continuous change details, PSO is hybridized with the SVR model to forecast Data-I.

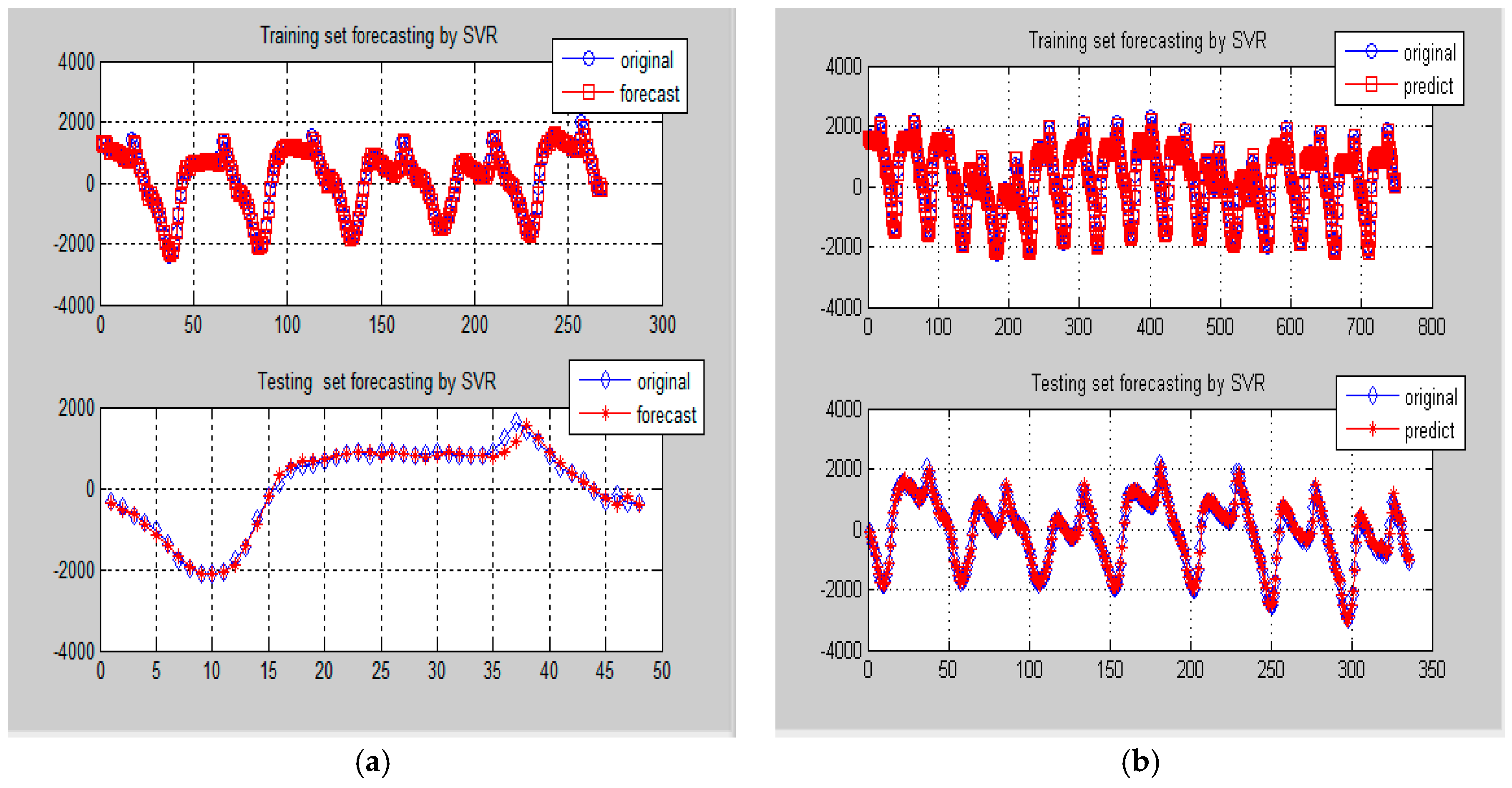

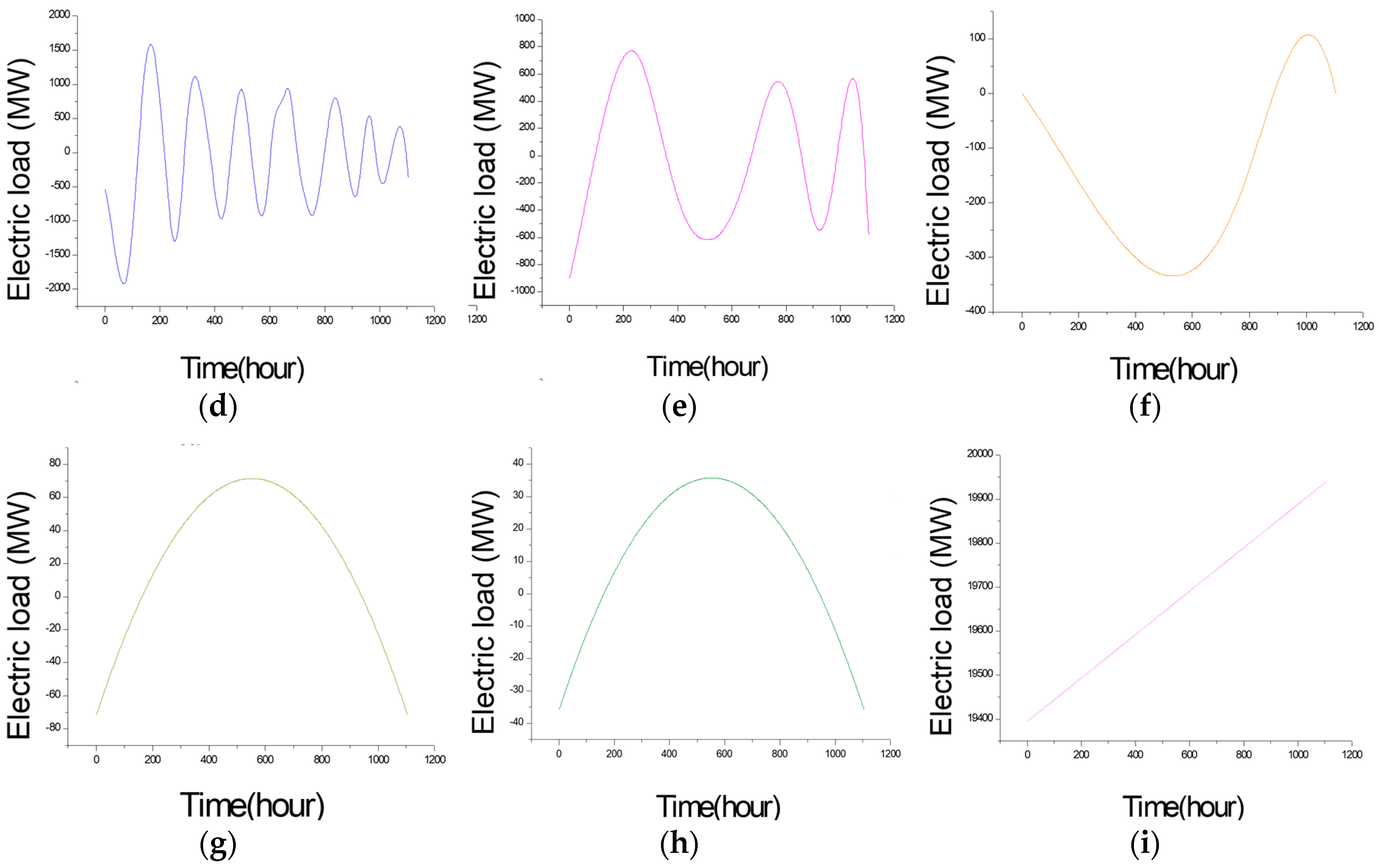

First, the higher frequency items (i.e., Data-I) from the small and large data sizes are both used for SVR-PSO modeling. The best modeled results in the training and testing stages are then obtained, as shown in Figure 7a,b, respectively. Figure 7 clearly shows the forecasting accuracy improvements obtained from the hybridization with PSO.

The parameter settings of the SVR model for the small and large data sizes are shown in Table 1. The determined parameters of the SVR-PSO model are shown in Table 2.
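The way PSO searches for a parameter combination can be sketched as below. This is a generic global-best PSO, not the authors' implementation: the inertia and acceleration coefficients, the search bounds, the parameter names (`c`, `epsilon`, `sigma`), and the toy objective that stands in for an SVR validation error are all assumptions made for illustration.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iter=200,
                 inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    """Global-best PSO over a box-constrained, real-valued search space."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]

    for _ in range(n_iter):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity blends momentum, pull to personal best, pull to global best
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (g_pos[d] - pos[i][d]))
                # Keep the particle inside the search box
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def toy_validation_error(params):
    """Hypothetical stand-in for the validation error of an SVR model."""
    c, epsilon, sigma = params
    return (c - 10.0) ** 2 / 100.0 + (epsilon - 0.3) ** 2 + (sigma - 2.0) ** 2


# Assumed search ranges for the three parameters
bounds = [(0.1, 100.0), (0.0, 1.0), (0.1, 10.0)]
best_params, best_error = pso_minimize(toy_validation_error, bounds)
```

In an actual SVR-PSO model, `toy_validation_error` would be replaced by a function that trains an SVR with the candidate parameters and returns its forecasting error on held-out data.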

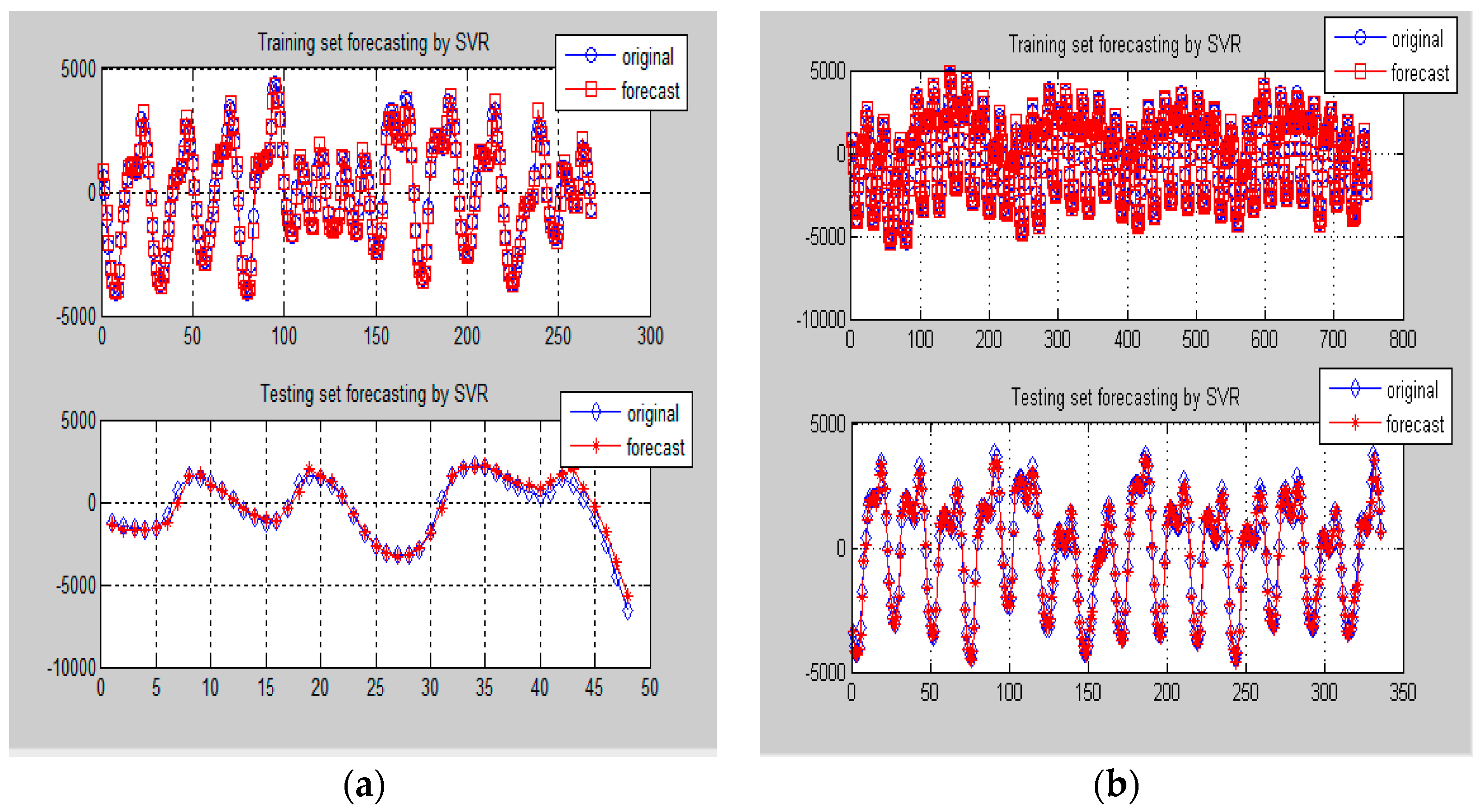

3.1.4. SVR-GA for Data-II

As shown in Figure 6e–h, the lower frequency item not only has a lower frequency but also demonstrates more stability, particularly for the residuals (Figure 6h). In addition, in the long term, it is subject to nonlinear mechanical changes, which are relatively discrete. In this sub-section, the SVR model is also used to forecast Data-II, and it likewise requires an appropriate algorithm to determine its three parameters well. Therefore, it is more suitable to apply GA in the SVR modeling. As mentioned above, GA is well suited to solving discrete problems; the parameter settings of the SVR model for the small and large data sizes are the same, as shown in Table 3.

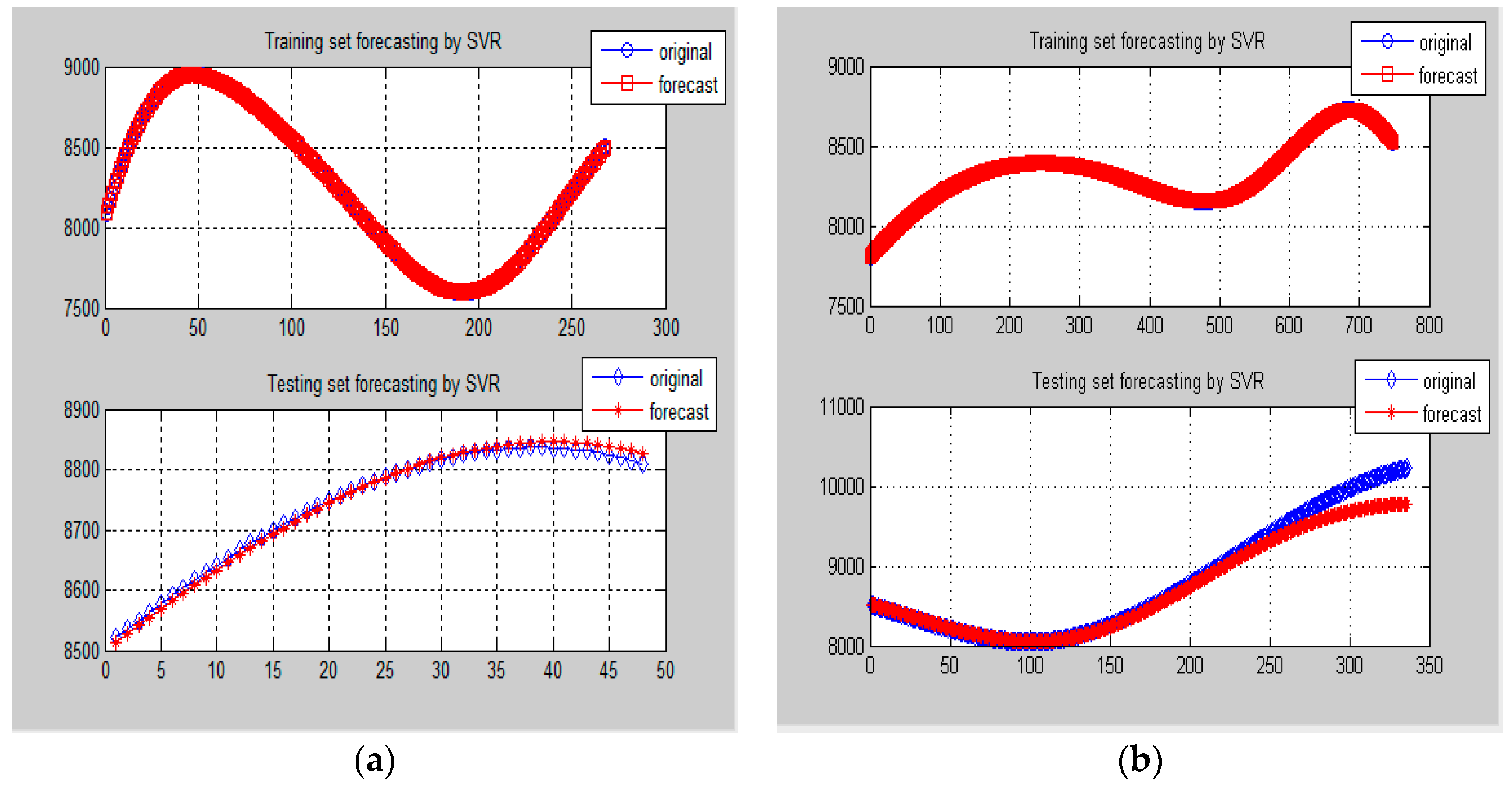

Similarly, the lower frequency items (i.e., Data-II) from the small and large data sizes are used for SVR-GA modeling. The best modeled results in the training and testing stages are then obtained, as shown in Figure 8a,b, respectively, which demonstrate the superiority gained from the hybridization with GA. The determined parameters of the SVR-GA model are shown in Table 4.
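A GA-based parameter search can be sketched in the same spirit. The version below assumes a binary-coded chromosome with elitism, binary tournament selection, one-point crossover, and bit-flip mutation; none of these operator choices, rates, or bounds are given in the text, and the toy objective is again a hypothetical stand-in for an SVR validation error.

```python
import random


def decode(bits, bounds, n_bits):
    """Map each n_bits-long slice of the chromosome to a value inside its bounds."""
    values = []
    for k, (lo, hi) in enumerate(bounds):
        chunk = bits[k * n_bits:(k + 1) * n_bits]
        as_int = int("".join(map(str, chunk)), 2)
        values.append(lo + (hi - lo) * as_int / (2 ** n_bits - 1))
    return values


def ga_minimize(objective, bounds, n_bits=12, pop_size=30, n_gen=60,
                p_cross=0.8, p_mut=0.02, seed=0):
    """Binary-coded GA; returns the best parameters and the best cost per generation."""
    rng = random.Random(seed)
    length = n_bits * len(bounds)

    def cost(bits):
        return objective(decode(bits, bounds, n_bits))

    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []
    for _ in range(n_gen):
        pop.sort(key=cost)
        history.append(cost(pop[0]))
        children = [pop[0][:]]  # elitism: the best chromosome survives unchanged
        while len(children) < pop_size:
            # Binary tournament selection for each parent
            p1 = min(rng.sample(pop, 2), key=cost)
            p2 = min(rng.sample(pop, 2), key=cost)
            child = p1[:]
            if rng.random() < p_cross:
                cut = rng.randrange(1, length)  # one-point crossover
                child = p1[:cut] + p2[cut:]
            # Bit-flip mutation
            child = [b ^ 1 if rng.random() < p_mut else b for b in child]
            children.append(child)
        pop = children
    best = min(pop, key=cost)
    history.append(cost(best))
    return decode(best, bounds, n_bits), history


def toy_validation_error(params):
    """Hypothetical stand-in for the validation error of an SVR model."""
    c, epsilon, sigma = params
    return (c - 10.0) ** 2 / 100.0 + (epsilon - 0.3) ** 2 + (sigma - 2.0) ** 2


# Assumed search ranges for the three parameters
bounds = [(0.1, 100.0), (0.0, 1.0), (0.1, 10.0)]
best_params, history = ga_minimize(toy_validation_error, bounds)
```

Because the best chromosome is always carried over, the recorded best cost never increases from one generation to the next. The binary encoding also makes the search space discrete, which is the setting in which the text considers GA most suitable.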

3.2. The Forecasting Results of Example 2

3.2.1. Data Sets for Small and Large Sizes

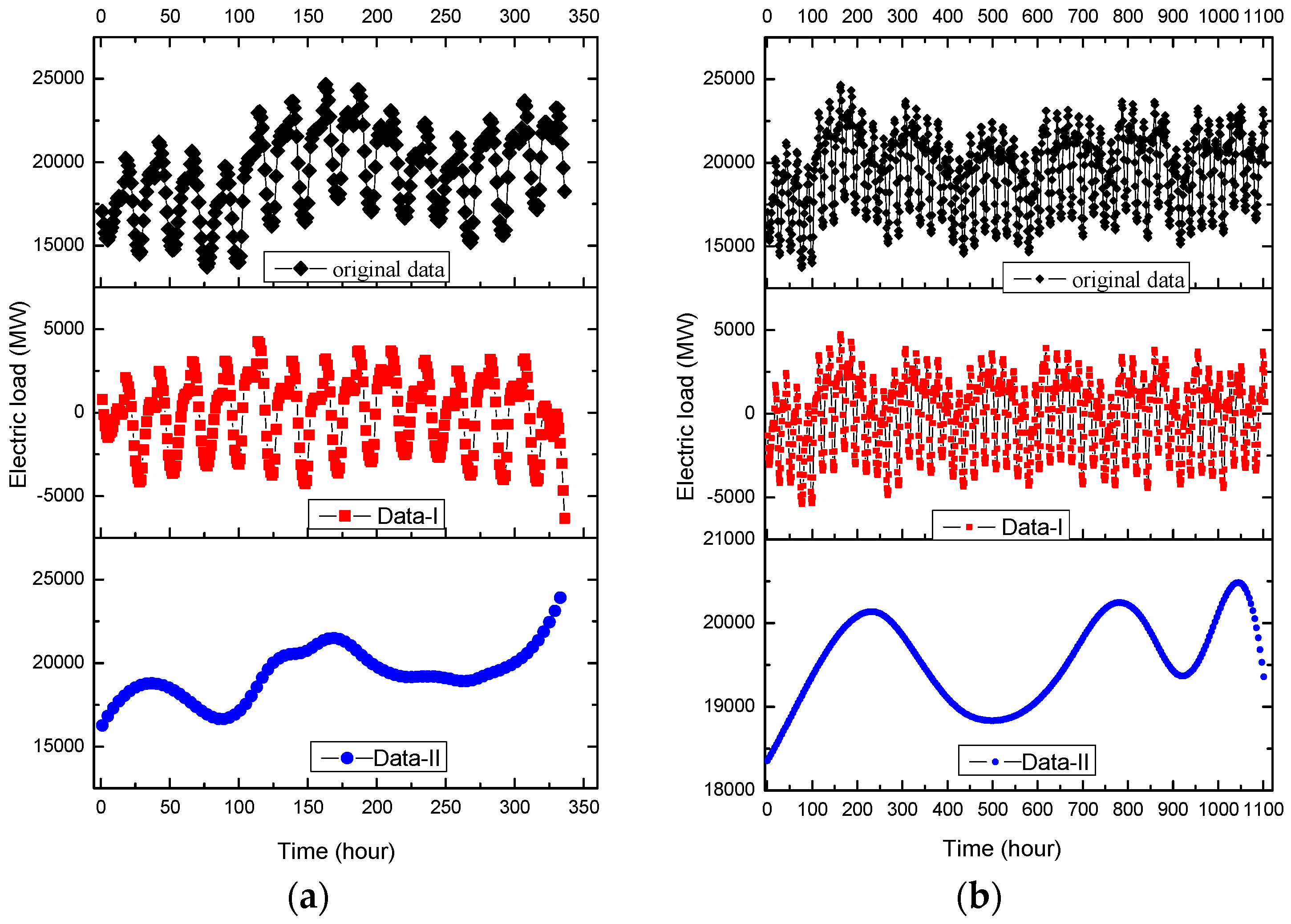

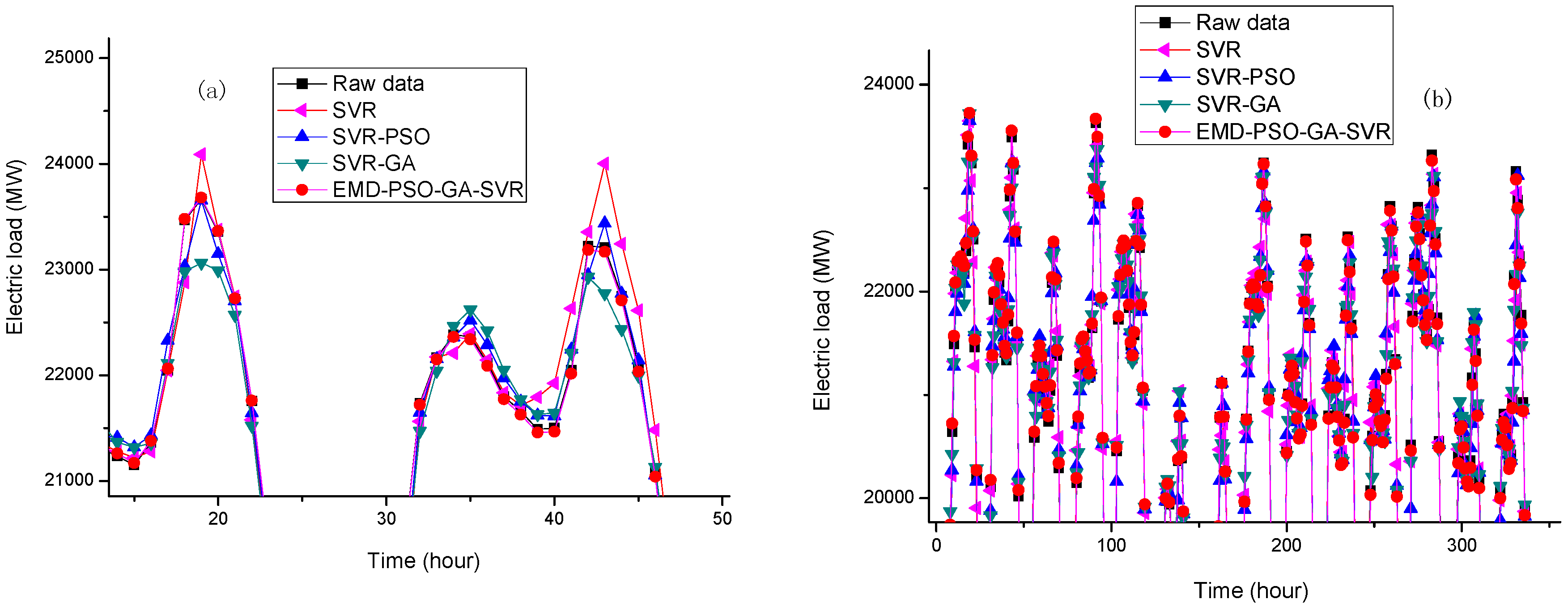

In Example 2, for the small data size, 336 hourly load data points covering 14 days (from 1 to 14 January 2015) are collected. Of these, the first 288 load data are used as the training data set, and the last 48 load data are used as the testing data set. The original data set is shown in Figure 9a.

For the large data size, 1104 hourly load data points covering 46 days (from 1 January to 15 February 2015) are collected for modeling. The first 768 load data are employed as the training data set, and the remaining 336 load data are used as the testing data set. The original data set is illustrated in Figure 9b.

3.2.2. Decomposition Results by EMD

As in Example 1, EMD is used to decompose the original data set into higher and lower frequency items. For the small data size, the data set is divided into nine groups, as shown in Figure 10a–i. Meanwhile, the time delay of the data set in the RP analysis (whose value is 4) is also considered when selecting the higher and lower frequency parts. Consequently, the first five groups, which have higher frequencies, are classified as higher frequency items; the following four groups, which have lower frequencies, are classified as lower frequency items. Figure 10i also represents the trend term (i.e., residuals). The fluctuation characteristics of Data-I are clearly the same as those of the original data, as shown in Figure 9a,b. In contrast, the macrostructure of Data-II is more stable. Data-I and Data-II are also further analyzed by the SVR-PSO and SVR-GA models, respectively, to obtain satisfactory regression results.

3.2.3. SVR-PSO for Data-I

As shown in Figure 9, Data-I also exhibits a stable periodicity of 24 h, the same as the original data. Therefore, as in Example 1, PSO is hybridized with the SVR model to forecast Data-I.

First, the higher frequency items (i.e., Data-I) from the small and large data sizes are both used for SVR-PSO modeling. The best modeled results in the training and testing stages are obtained, as shown in Figure 11a,b, respectively, in which the forecasting accuracy improvements from the hybridization with PSO are clearly visible. The parameter settings of the SVR model for the small and large data sizes are the same as in Example 1, i.e., as shown in Table 1; the determined parameters of the SVR-PSO model are given in Table 5.

3.2.4. SVR-GA for Data-II

As shown in Figure 10f–i, the lower frequency item (i.e., Data-II) has a lower frequency and more stability. Therefore, as in Example 1, GA is hybridized with the SVR model to forecast Data-II. The parameter settings of the SVR model for the small and large data sizes are also the same as those shown in Table 3. The best modeled results in the training and testing stages are obtained, as shown in Figure 12a,b, respectively, which demonstrate the superiority gained from the hybridization with GA. The determined parameters of the SVR-GA model are shown in Table 6.

5. Conclusions

This paper proposes a novel SVR-based electricity load forecasting model that uses EMD to decompose the time series data set into higher and lower frequency parts, and hybridizes the PSO and GA algorithms to determine the three parameters of the SVR models for these two parts, respectively. In two experimental examples using open data sets from the Australian and American electricity markets, the proposed EMD-PSO-GA-SVR model achieves significantly better forecasting performance than other competitive forecasting models in published papers, such as the original SVR, SVR-PSO, SVR-GA, and AFCM models.

The most significant contribution of this paper is to overcome a practical drawback of an SVR model: it provides only poor forecasts for other data patterns if it is overtrained on one data pattern of overwhelming size. First, the authors apply EMD to decompose the data set into two sub-sets with different data patterns, the higher frequency part and the lower frequency part, to take into account both the accuracy and the interpretability of the forecast results. Second, the authors employ two suitable evolutionary algorithms to reduce the performance volatility of an SVR model under different parameters: PSO and GA are implemented to determine the parameter combination during the SVR modeling process. The results indicate that the proposed EMD-PSO-GA-SVR model demonstrates better generalization capability than the other competitive models in terms of forecasting capability. The results also show that the extracted microscopic detail expresses the periodic characteristics well, while the macroscopic structure lies in the lower frequency range. The discretization characteristic is expressed by GA, especially at sharp points, i.e., GA can effectively capture the exact sharp characteristics even when the embedded effects of noise and other factors are intertwined. As a result, the proposed model achieves more satisfactory forecasting performance than the other competitive models.