Performance and Reliability of Wind Turbines: A Review

Abstract

1. Introduction

2. Definitions

2.1. Capacity Factor
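
The capacity factor is commonly defined as the ratio of the average power output to the rated power over the period considered. Both quantities appear in the abbreviation list. The following is a minimal Python sketch that assumes equally spaced power samples in kW. The function name and the sample values are illustrative and are not taken from any of the reviewed initiatives.

```python
from statistics import fmean


def capacity_factor(power_kw: list[float], rated_power_kw: float) -> float:
    """Return CF = average power output / rated power.

    Assumes `power_kw` holds equally spaced samples (e.g., 10-min SCADA means),
    so their arithmetic mean equals the time-averaged power output.
    """
    if rated_power_kw <= 0:
        raise ValueError("rated power must be positive")
    if not power_kw:
        raise ValueError("need at least one power sample")
    return fmean(power_kw) / rated_power_kw


# Hypothetical 2000 kW turbine observed over four intervals
print(capacity_factor([0.0, 500.0, 1500.0, 2000.0], 2000.0))  # 0.5
```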

2.2. Time-Based Availability
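
A widely used form of time-based availability relates the available time to the sum of available and unavailable time: A_t = t_avail / (t_avail + t_unavail). The sketch below assumes a hypothetical log of (state, duration in hours) records. Initiatives and standards such as IEC 61400-26-1 differ mainly in how periods like missing data are classified, so the state labels used here are placeholders.

```python
def time_based_availability(log: list[tuple[str, float]]) -> float:
    """Return A_t = t_avail / (t_avail + t_unavail).

    `log` is a hypothetical list of (state, hours) records whose state is
    "available" or "unavailable". Any other state (e.g., "missing") is left
    out of both sums, which is only one of several possible conventions.
    """
    t_avail = sum(h for state, h in log if state == "available")
    t_unavail = sum(h for state, h in log if state == "unavailable")
    total = t_avail + t_unavail
    if total == 0:
        raise ValueError("no classified time in log")
    return t_avail / total


log = [("available", 8500.0), ("unavailable", 200.0), ("missing", 60.0)]
print(round(time_based_availability(log), 4))  # 0.977
```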

2.3. Technical Availability

2.4. Energetic Availability
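
Energetic availability can be expressed with the average actual and average potential power output from the abbreviation list: A_E = P_actual / P_potential. The following is a minimal sketch that assumes aligned, equally spaced samples. How the potential power is estimated (e.g., from measured wind speed and a reference power curve) is deliberately left out.

```python
def energetic_availability(actual_kw: list[float], potential_kw: list[float]) -> float:
    """Return A_E = average actual power output / average potential power output.

    Both series are assumed to be aligned and equally spaced, so the ratio of
    their sums equals the ratio of their means.
    """
    if len(actual_kw) != len(potential_kw) or not actual_kw:
        raise ValueError("need two non-empty series of equal length")
    potential = sum(potential_kw)
    if potential == 0:
        raise ValueError("potential power output is zero")
    return sum(actual_kw) / potential


# Hypothetical: the turbine is stopped during the first (low-wind) interval
print(energetic_availability([0.0, 900.0, 1800.0], [300.0, 900.0, 1800.0]))  # 0.9
```

In this example the turbine is unavailable for one of three intervals, so a time-based view would give roughly 67%. However, that interval has the lowest potential output, so only 10% of the potential energy is lost. This is why time-based and energetic availability can differ for the same downtime.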

2.5. Failure Rate
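
The failure-rate tables report values in failures per turbine and year [1/a]. This corresponds to the number of counted failures divided by the operational turbine years. The sketch below simplifies the denominator to turbines × years, which assumes every turbine was observed for the whole period. The converse 1/λ is a mean time between failures only if a constant failure rate is additionally assumed.

```python
def failure_rate(n_failures: int, n_turbines: int, years: float) -> float:
    """Return the failure rate in failures per turbine and year [1/a].

    Simplification: operational turbine years = n_turbines * years, i.e.
    all turbines are assumed to be observed for the full period.
    """
    turbine_years = n_turbines * years
    if turbine_years <= 0:
        raise ValueError("operational turbine years must be positive")
    return n_failures / turbine_years


lam = failure_rate(n_failures=350, n_turbines=100, years=2.0)
print(lam)  # 1.75
# Only under a constant-failure-rate assumption: MTBF = 1 / lambda (in years)
print(round(1.0 / lam, 3))  # 0.571
```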

2.6. Mean Down Time
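
The mean-down-time table gives values in days per failure. These are the summed down times of all counted failures divided by the number of those failures. The following is a minimal sketch, assuming the down time of each failure is available in hours.

```python
def mean_down_time_days(down_times_h: list[float]) -> float:
    """Return the mean down time per failure in days.

    `down_times_h` holds the down time of each counted failure in hours.
    """
    if not down_times_h:
        raise ValueError("no failure events")
    return sum(down_times_h) / len(down_times_h) / 24.0


print(mean_down_time_days([2.0, 22.0, 120.0]))  # 2.0
```

A single long event dominates this mean (120 of 144 hours). For this reason, the choice of which events count as failures strongly affects reported MDT values.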

3. Data Collections on WT Performance and Reliability

3.1. Overview on Initiatives and Publications

- Initiative: Short name of the initiative, in some cases assigned by the authors of the present paper

- Country: Observation area of the initiative and, in most cases, location of the responsible institution

- Number of WT: Number of individual WT included in the initiative

- Onshore: Checked if the initiative includes data on onshore WT

- Offshore: Checked if the initiative includes data on offshore WT

- Operational turbine years: Sum of the operational years of all included turbines

- Start-Up of survey: Start of work on the initiative; the data may also cover earlier years

- End of survey: End of work on the initiative, i.e., the latest possible data

- Source: Sources considered in the present paper to describe each initiative
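
The operational turbine years described above sum, over all turbines, the time each turbine was in operation within the survey period. The sketch below shows one way to compute this from per-turbine operating periods. The input format, the function name and the 365.25-day year are assumptions for illustration only.

```python
from datetime import date

DAYS_PER_YEAR = 365.25  # assumption: mean year length including leap years


def operational_turbine_years(
    periods: list[tuple[date, date]], survey_start: date, survey_end: date
) -> float:
    """Sum the operating time of all turbines inside the survey window, in years.

    `periods` holds one hypothetical (commissioning, decommissioning) pair per
    turbine; pass survey_end as the end date for turbines still in operation.
    """
    total_days = 0
    for start, end in periods:
        overlap_start = max(start, survey_start)
        overlap_end = min(end, survey_end)
        if overlap_end > overlap_start:
            total_days += (overlap_end - overlap_start).days
    return total_days / DAYS_PER_YEAR


periods = [(date(2009, 6, 1), date(2012, 1, 1)), (date(2011, 1, 1), date(2012, 1, 1))]
print(round(operational_turbine_years(periods, date(2010, 1, 1), date(2012, 1, 1)), 2))  # 3.0
```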

3.2. Description of Considered Sources

3.2.1. CIRCE-Universidad de Zaragoza (Spain)

3.2.2. CREW-Database (USA)

3.2.3. CWEA-Database (China)

3.2.4. Elforsk/Vindstat (Sweden)

3.2.5. EPRI-Database (USA)

3.2.6. EUROWIN, EUSEFIA (Europe)

3.2.7. Garrad Hassan (Worldwide)

3.2.8. Huadian New Energy Company (China)

3.2.9. LWK (Germany)

3.2.10. Lynette (USA)

3.2.11. MECAL (Netherlands)

3.2.12. Muppandal Wind Farm (India)

3.2.13. NEDO-Database (Japan)

3.2.14. ReliaWind (Europe)

3.2.15. Robert Gordon University - RGU (UK)

3.2.16. Round 1 Wind Farms (UK)

3.2.17. Southeast University Nanjing (China)

3.2.18. SPARTA (UK)

3.2.19. Strathclyde (UK)

3.2.20. VTT (Finland)

3.2.21. WindStats (Germany/Denmark)

3.2.22. WInD-Pool (Germany/Europe)

3.2.23. WMEP (Germany)

4. Performance of Wind Turbines

4.1. Capacity Factor

4.2. Availability

5. Reliability of Wind Turbines and Subsystems

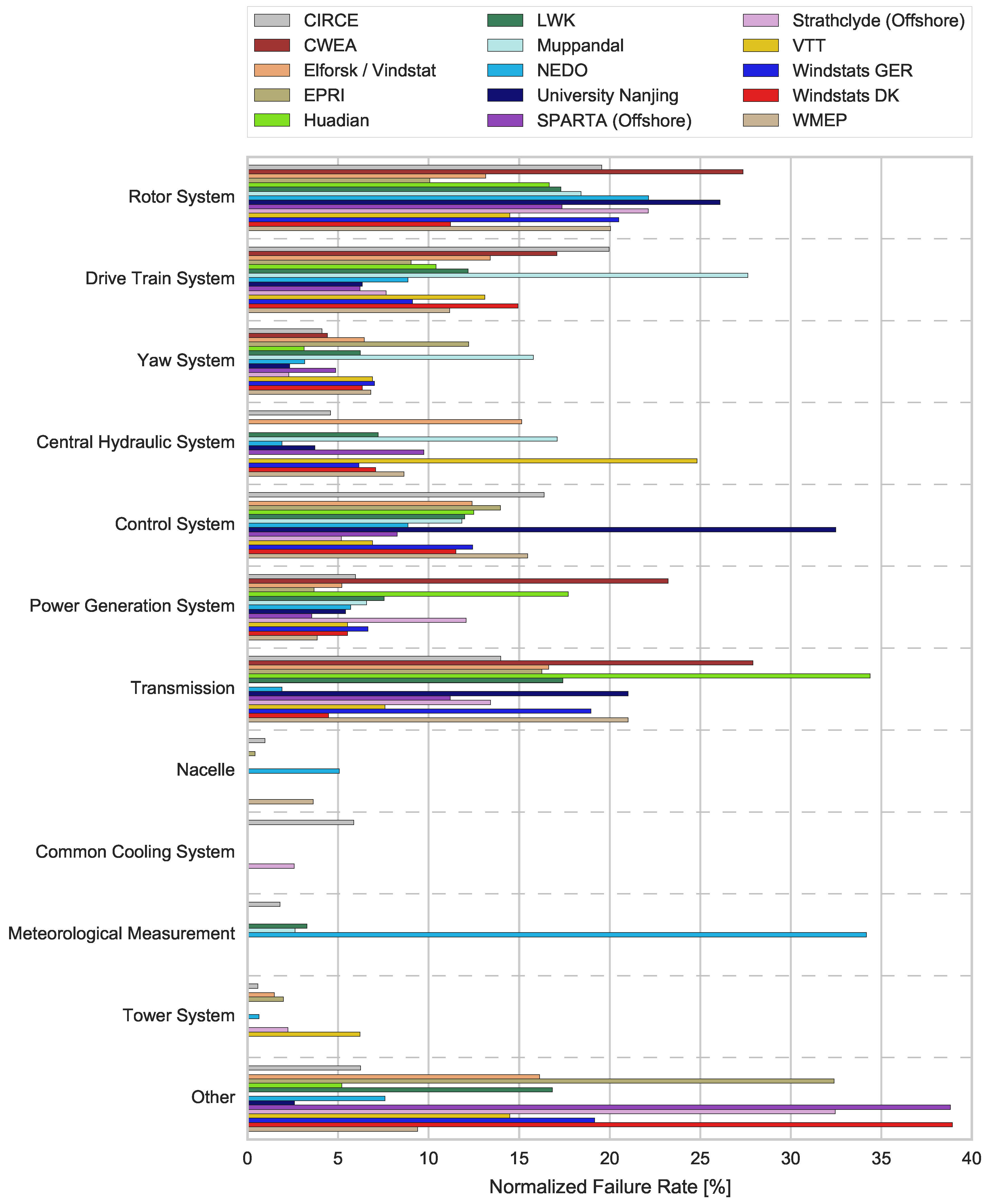

- The initiatives use a variety of designation systems to differentiate between functions/components of WT; in most cases these are initiative-specific and poorly documented. To enable a comparison of results, the authors of this paper mapped the applied categories to RDS-PP® to the best of their knowledge. In many cases a proper mapping was not possible, which is why the category “Other” has a high share in the results.

- The definition of an event considered a “failure” differs widely between the initiatives. Some consider only events with a down time of at least three days (NEDO), while others (Southeast University Nanjing) also count remote resets, which leads to high failure frequencies and low average down times. In many cases no sufficient description of a “failure” is provided.

- In most cases it remains unclear whether repairs, replacements or both are considered in the results. The same applies to different failure causes (external vs. internal) and to the differentiation between preventive and corrective maintenance. Whenever possible, regular maintenance is excluded from the comparison (e.g., EPRI).
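
The effect of differing failure definitions on failure counts and mean down times can be illustrated with a simple down-time threshold. The threshold is a hypothetical stand-in for an initiative's definition, e.g., counting every remote reset versus only events lasting at least three days (72 h). The event list is invented for illustration.

```python
def failure_stats(down_times_h: list[float], min_down_time_h: float) -> tuple[int, float]:
    """Return (number of failures, mean down time in hours) for one definition.

    Only events with down time >= min_down_time_h are counted as failures.
    If no event qualifies, (0, 0.0) is returned.
    """
    failures = [d for d in down_times_h if d >= min_down_time_h]
    if not failures:
        return 0, 0.0
    return len(failures), sum(failures) / len(failures)


# Hypothetical events: three remote resets and two longer repairs
events_h = [0.5, 0.5, 1.0, 96.0, 240.0]
print(failure_stats(events_h, 0.0))   # (5, 67.6)  -> resets counted
print(failure_stats(events_h, 72.0))  # (2, 168.0) -> only events of >= 3 days
```

Counting the short resets more than doubles the number of failures and cuts the mean down time by more than half, even though the underlying events are identical.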

5.1. Industry Standards on Data Collection

5.2. Overview on Failure Rate and Mean Down Time

5.3. Failure Rate/Event Rate

5.4. Mean Down Time

6. Discussion

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| | Failure Rate |
| | Time-Based Availability |
| $A_\mathrm{tech}$ | Technical Availability |
| | Energetic Availability |
| BOP | Balance-Of-Plant |
| CF | Capacity Factor |
| CREW | Continuous Reliability Enhancement for Wind |
| CWEA | Chinese Wind Energy Association |
| EPRI | Electric Power Research Institute |
| FGW e.V. | Fördergesellschaft Windenergie und andere Dezentrale Energien (Association for the Promotion of Wind Energy and Other Decentralized Energies) |
| GW | Gigawatt |
| IEA | International Energy Agency |
| IEC | International Electrotechnical Commission |
| ISO | International Organization for Standardization |
| KPI | Key Performance Indicators |
| kW | Kilowatt |
| LCOE | Levelized Cost of Energy |
| LWK | Chamber of Agriculture (Landwirtschaftskammer) |
| MDT | Mean Down Time |
| MOTBF | Mean Operating Time Between Failures |
| MTBF | Mean Time Between Failures |
| MTTF | Mean Time To Failure |
| MTTR | Mean Time To Repair |
| MUT | Mean Up Time |
| MW | Megawatt |
| NEDO | New Energy and Industrial Technology Development Organization |
| O&M | Operation and Maintenance |
| | Average Power Output |
| | Rated Power |
| RDS-PP® | Reference Designation System for Power Plants |
| SPARTA | System Performance, Availability and Reliability Trend Analysis |
| SCADA | Supervisory Control and Data Acquisition |
| | Available Time |
| | Unavailable Time |
| | Average Actual Power Output |
| | Average Potential Power Output |
| WT | Wind Turbine |
| WF | Wind Farm |
| WMEP | Wissenschaftliches Mess- und Evaluierungsprogramm (Scientific Measurement and Evaluation Programme) |
| ZEUS | State-Event-Cause-System (Zustands-Ereignis-Ursachen-Schlüssel) |

References

1. World Wind Energy Association (WWEA). World Wind Market Has Reached 486 GW from Where 54 GW Has Been Installed Last Year; World Wind Energy Association: Bonn, Germany, 2017.

2. Lüers, S.; Wallasch, A.K.; Rehfeldt, K. Kostensituation der Windenergie an Land in Deutschland: Update. 2015. Available online: http://publikationen.windindustrie-in-deutschland.de/kostensituation-der-windenergie-an-land-in-deutschland-update/54882668 (accessed on 26 June 2017).

3. Hobohm, J.; Krampe, L.; Peter, F.; Gerken, A.; Heinrich, P.; Richter, M. Kostensenkungspotenziale der Offshore-Windenergie in Deutschland: Kurzfassung; Fichtner: Stuttgart, Germany, 2015.

4. Arwas, P.; Charlesworth, D.; Clark, D.; Clay, R.; Craft, G.; Donaldson, I.; Dunlop, A.; Fox, A.; Howard, R.; Lloyd, C.; et al. Offshore Wind Cost Reduction: Pathways Study; The Crown Estate: London, UK, 2012.

5. Astolfi, D.; Castellani, F.; Garinei, A.; Terzi, L. Data mining techniques for performance analysis of onshore wind farms. Appl. Energy 2015, 148, 220–233.

6. Jia, X.; Jin, C.; Buzza, M.; Wang, W.; Lee, J. Wind turbine performance degradation assessment based on a novel similarity metric for machine performance curves. Renew. Energy 2016, 99, 1191–1201.

7. Dienst, S.; Beseler, J. Automatic anomaly detection in offshore wind SCADA data. In Proceedings of the WindEurope Summit 2016, Hamburg, Germany, 27–29 September 2016.

8. International Electrotechnical Commission. Production Based Availability for Wind Turbines; International Electrotechnical Commission: Geneva, Switzerland, 2013.

9. Burton, T.; Jenkins, N.; Sharpe, D.; Bossanyi, E. Wind Energy Handbook, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2011.

10. International Electrotechnical Commission. Time Based Availability for Wind Turbines (IEC 61400-26-1); International Electrotechnical Commission: Geneva, Switzerland, 2010.

11. International Organization for Standardization. Petroleum, Petrochemical and Natural Gas Industries—Collection and Exchange of Reliability and Maintenance Data for Equipment (ISO 14224:2016); International Organization for Standardization: Geneva, Switzerland, 2016.

12. International Organization for Standardization. Petroleum, Petrochemical and Natural Gas Industries—Reliability Modelling and Calculation of Safety Systems (ISO/TR 12489); International Organization for Standardization: Geneva, Switzerland, 2013.

13. Deutsche Elektrotechnische Kommission. Internationales Elektrotechnisches Wörterbuch: Deutsch-Englisch-Französisch-Russisch = International Electrotechnical Vocabulary, 1st ed.; Beuth: Berlin, Germany, 2000.

14. DIN Deutsches Institut für Normung e.V. Instandhaltung—Begriffe der Instandhaltung (DIN EN 13306); DIN Deutsches Institut für Normung: Berlin, Germany, 2010.

15. Hahn, B. Wind Farm Data Collection and Reliability Assessment for O&M Optimization: Expert Group Report on Recommended Practices, 1st ed.; Fraunhofer Institute for Wind Energy and Energy System Technology—IWES: Kassel, Germany, 2017.

16. IEA WIND TASK 33. Reliability Data Standardization of Data Collection for Wind Turbine Reliability and Operation & Maintenance Analyses: Initiatives Concerning Reliability Data (2nd Release); Unpublished report, 2013.

17. Sheng, S. Report on Wind Turbine Subsystem Reliability—A Survey of Various Databases; National Renewable Energy Laboratory: Golden, CO, USA, 2013.

18. Pettersson, L.; Andersson, J.-O.; Orbert, C.; Skagerman, S. RAMS-Database for Wind Turbines: Pre-Study. Elforsk Report 10:67. 2010. Available online: http://www.elforsk.se/Programomraden/El--Varme/Rapporter/?download=reportrid=10_67_ (accessed on 8 February 2016).

19. Branner, K.; Ghadirian, A. Database about Blade Faults: DTU Wind Energy Report E-0067; Technical University of Denmark: Lyngby, Denmark, 2014.

20. Pinar Pérez, J.M.; García Márquez, F.P.; Tobias, A.; Papaelias, M. Wind turbine reliability analysis. Renew. Sustain. Energy Rev. 2013, 23, 463–472.

21. Ribrant, J. Reliability Performance and Maintenance—A Survey of Failures in Wind Power Systems; KTH School of Electrical Engineering: Stockholm, Sweden, 2006.

22. Greensolver, SASU. Greensolver Index: An Innovative Benchmark Solution to Improve Your Wind and Solar Assets Performance. Available online: http://greensolver.net/en/ (accessed on 10 June 2017).

23. Wind Energy Benchmarking Services Limited. WEBS: Wind Energy Benchmarking Services. Available online: https://www.webs-ltd.com (accessed on 11 June 2017).

24. Sheng, S. Wind Turbine Gearbox Reliability Database: Condition Monitoring, and Operation and Maintenance Research Update; National Renewable Energy Laboratory: Golden, CO, USA, 2016.

25. Blade Reliability Collaborative: Reliability, Operations & Maintenance, and Standards; Sandia National Laboratories: Albuquerque, NM, USA, 2017.

26. Reder, M.D.; Gonzalez, E.; Melero, J.J. Wind turbine failures—Tackling current problems in failure data analysis. J. Phys. Conf. Ser. 2016, 753, 072027.

27. Gonzalez, E.; Reder, M.; Melero, J.J. SCADA alarms processing for wind turbine component failure detection. J. Phys. Conf. Ser. 2016, 753, 072019.

28. Peters, V.; McKenney, B.; Ogilvie, A.; Bond, C. Continuous Reliability Enhancement for Wind (CREW) Database: Wind Turbine Reliability Benchmark U.S. Fleet; Public Report October 2011; Sandia National Laboratories: Albuquerque, NM, USA, 2011.

29. Hines, V.; Ogilvie, A.; Bond, C. Continuous Reliability Enhancement for Wind (CREW) Database: Wind Plant Reliability Benchmark; Sandia National Laboratories: Albuquerque, NM, USA, 2013.

30. Carter, C.; Karlson, B.; Martin, S.; Westergaard, C. Continuous Reliability Enhancement for Wind (CREW): Program Update: SAND2016-3844; Sandia National Laboratories: Albuquerque, NM, USA, 2016.

31. Lin, Y.; Le, T.; Liu, H.; Li, W. Fault analysis of wind turbines in China. Renew. Sustain. Energy Rev. 2016, 55, 482–490.

32. Carlstedt, N.E. Driftuppföljning av Vindkraftverk: Årsrapport 2012: >50 kW. 2013. Available online: http://www.vindstat.nu/stat/Reports/arsrapp2012.pdf (accessed on 27 August 2017).

33. Ribrant, J.; Bertling, L. Survey of failures in wind power systems with focus on Swedish wind power plants during 1997–2005. In Proceedings of the 2007 IEEE Power Engineering Society General Meeting, Tampa, FL, USA, 24–28 June 2007; pp. 1–8.

34. Carlsson, F.; Eriksson, E.; Dahlberg, M. Damage Preventing Measures for Wind Turbines: Phase 1—Reliability Data. Elforsk Report 10:68. 2010. Available online: http://www.elforsk.se/Programomraden/El--Varme/Rapporter/?download=reportrid=10_68_ (accessed on 8 February 2016).

35. Estimation of Turbine Reliability Figures within the DOWEC Project. 2002. Available online: https://www.ecn.nl/fileadmin/ecn/units/wind/docs/dowec/10048_004.pdf (accessed on 9 February 2016).

36. Schmid, J.; Klein, H.P. Performance of European Wind Turbines: A Statistical Evaluation from the European Wind Turbine Database EUROWIN; Elsevier Applied Science: London, UK; New York, NY, USA, 1991.

37. Schmid, J.; Klein, H. EUROWIN. The European Windturbine Database. Annual Reports. A Statistical Summary of European WEC Performance Data for 1992 and 1993; Fraunhofer Institute for Solar Energy Systems: Freiburg, Germany, 1994.

38. Harman, K.; Walker, R.; Wilkinson, M. Availability trends observed at operational wind farms. In Proceedings of the European Wind Energy Conference, Brussels, Belgium, 31 March–3 April 2008.

39. Chai, J.; An, G.; Ma, Z.; Sun, X. A study of fault statistical analysis and maintenance policy of wind turbine system. In International Conference on Renewable Power Generation (RPG 2015); Institution of Engineering and Technology: Stevenage, UK, 2015; p. 4.

40. Tavner, P.; Spinato, F. Reliability of different wind turbine concepts with relevance to offshore application. In Proceedings of the European Wind Energy Conference, Brussels, Belgium, 31 March–3 April 2008.

41. Lynette, R. Status of the U.S. wind power industry. J. Wind Eng. Ind. Aerodyn. 1988, 27, 327–336.

42. Koutoulakos, E. Wind Turbine Reliability Characteristics and Offshore Availability Assessment. Master’s Thesis, TU Delft, Delft, The Netherlands, 2010.

43. Uzunoglu, B.; Amoiralis, F.; Kaidis, C. Wind turbine reliability estimation for different assemblies and failure severity categories. IET Renew. Power Gener. 2015, 9, 892–899.

44. Herbert, G.J.; Iniyan, S.; Goic, R. Performance, reliability and failure analysis of wind farm in a developing country. Renew. Energy 2010, 35, 2739–2751.

45. Committee for Increase in Availability/Capacity Factor of Wind Turbine Generator System and Failure/Breakdown Investigation of Wind Turbine Generator Systems Subcommittee; Summary Report; New Energy Industrial Technology Development Organization: Kanagawa, Japan, 2004.

46. Gayo, J.B. Final Publishable Summary of Results of Project ReliaWind; Gamesa Innovation and Technology: Egues, Spain, 2011.

47. Wilkinson, M. Measuring Wind Turbine Reliability—Results of the Reliawind Project; WindEurope: Brussels, Belgium, 2011.

48. Andrawus, J.A. Maintenance Optimisation for Wind Turbines; Robert Gordon University: Aberdeen, UK, 2008.

49. Feng, Y.; Tavner, P.J.; Long, H. Early experiences with UK round 1 offshore wind farms. Proc. Inst. Civ. Eng. Energy 2010, 163, 167–181.

50. Su, C.; Yang, Y.; Wang, X.; Hu, Z. Failures analysis of wind turbines: Case study of a Chinese wind farm. In Proceedings of the 2016 Prognostics and System Health Management Conference (PHM-Chengdu), Chengdu, China, 19–21 October 2016; pp. 1–6.

51. Portfolio Review 2016; System Performance, Availability and Reliability Trend Analysis (SPARTA): Northumberland, UK, 2016.

52. Carroll, J.; McDonald, A.; McMillan, D. Failure rate, repair time and unscheduled O&M cost analysis of offshore wind turbines. Wind Energy 2015, 19, 1107–1119.

53. Carroll, J.; McDonald, A.; Dinwoodie, I.; McMillan, D.; Revie, M.; Lazakis, I. Availability, operation and maintenance costs of offshore wind turbines with different drive train configurations. Wind Energy 2017, 20, 361–378.

54. Carroll, J.; McDonald, A.; McMillan, D. Reliability comparison of wind turbines with DFIG and PMG drive trains. IEEE Trans. Energy Convers. 2015, 30, 663–670.

55. Stenberg, A. Analys av Vindkraftsstatistik i Finland. 2010. Available online: http://www.vtt.fi/files/projects/windenergystatistics/diplomarbete.pdf (accessed on 22 February 2016).

56. Turkia, V.; Holttinen, H. Tuulivoiman Tuotantotilastot: Vuosiraportti 2011; VTT Technical Research Centre of Finland: Espoo, Finland, 2013.

57. Tavner, P.J.; Xiang, J.; Spinato, F. Reliability analysis for wind turbines. Wind Energy 2007, 10, 1–18.

58. Fraunhofer IWES. The WInD-Pool: Complex Systems Require New Strategies and Methods; Fraunhofer IWES: Munich, Germany, 2017.

59. Faulstich, S.; Pfaffel, S.; Hahn, B. Performance and reliability benchmarking using the cross-company initiative WInD-Pool. In Proceedings of the RAVE Offshore Wind R&D Conference, Bremerhaven, Germany, 14 October 2015.

60. Pfaffel, S.; Faulstich, S.; Hahn, B.; Hirsch, J.; Berkhout, V.; Jung, H. Monitoring and Evaluation Program for Offshore Wind Energy Use—1. Implementation Phase; Fraunhofer-Institut für Windenergie und Energiesystemtechnik: Kassel, Germany, 2016.

61. Jung, H.; Pfaffel, S.; Faulstich, S.; Bübl, F.; Jensen, J.; Jugelt, R. Abschlussbericht: Erhöhung der Verfügbarkeit von Windenergieanlagen EVW-Phase 2; FGW eV Wind Energy and Other Decentralized Energy Organizations: Berlin, Germany, 2015.

62. Faulstich, S.; Durstewitz, M.; Hahn, B.; Knorr, K.; Rohrig, K. Windenergy Report Germany 2008: Written within the Research Project Deutscher Windmonitor; German Federal Ministry for the Environment, Nature Conservation and Nuclear Safety: Bonn, Germany, 2009.

63. Echavarria, E.; Hahn, B.; van Bussel, G.J.W.; Tomiyama, T. Reliability of wind turbine technology through time. J. Sol. Energy Eng. 2008, 130, 031005.

64. Faulstich, S.; Lyding, P.; Hahn, B. Component reliability ranking with respect to WT concept and external environmental conditions: Deliverable WP7.3.3, WP7 Condition monitoring: Project UpWind “Integrated Wind Turbine Design”. 2010. Available online: https://www.researchgate.net/publication/321148748_Integrated_Wind_Turbine_Design_Component_reliability_ranking_with_respect_to_WT_concept_and_external_environmental_conditions_Deliverable_WP733_WP7_Condition_monitoring (accessed on 15 June 2017).

65. Berkhout, V.; Bergmann, D.; Cernusko, R.; Durstewitz, M.; Faulstich, S.; Gerhard, N.; Großmann, J.; Hahn, B.; Hartung, M.; Härtel, P.; et al. Windenergie Report Deutschland 2016; Fraunhofer: Munich, Germany, 2017.

66. VGB PowerTech e.V. VGB-Standard RDS-PP: Application Guideline Part 32: Wind Power Plants: VGB-S823-32-2014-03-EN-DE; Verlag Technisch-Wissenschaftlicher Schriften: Essen, Germany, 2014.

67. GADS Wind Turbine Generation: Data Reporting Instructions: Effective January 2010; NERC: Atlanta, GA, USA, 2010.

68. FGW. Technische Richtlinie für Energieanlagen Teil 7: Betrieb und Instandhaltung von Kraftwerken für Erneuerbare Energien Rubrik D2: Zustands-Ereignis-Ursachen-Schlüssel für Erzeugungseinheiten (ZEUS); FGW eV Wind Energy and Other Decentralized Energy Organizations: Berlin, Germany, 2014.

69. Faulstich, S.; Hahn, B.; Tavner, P.J. Wind turbine downtime and its importance for offshore deployment. Wind Energy 2011, 14, 327–337.

70. Giebhardt, J. Wind turbine condition monitoring systems and techniques. In Wind Energy Systems; Elsevier: Amsterdam, The Netherlands, 2011; pp. 329–349.

71. Sheng, S. Wind Turbine Gearbox Condition Monitoring Round Robin Study—Vibration Analysis: Technical Report NREL/TP-5000-54530; National Renewable Energy Laboratory: Golden, CO, USA, 2012.

72. Yang, W.; Tavner, P.J.; Crabtree, C.J.; Feng, Y.; Qiu, Y. Wind turbine condition monitoring: Technical and commercial challenges. Wind Energy 2014, 17, 673–693.

73. Kusiak, A.; Zhang, Z.; Verma, A. Prediction, operations, and condition monitoring in wind energy. Energy 2013, 60, 1–12.

74. Puglia, G.; Bangalore, P.; Tjernberg, L.B. Cost efficient maintenance strategies for wind power systems using LCC. In Proceedings of the 2014 International Conference on Probabilistic Methods Applied to Power Systems (PMAPS), Durham, UK, 7–10 July 2014; pp. 1–6.

75. Xie, K.; Jiang, Z.; Li, W. Effect of wind speed on wind turbine power converter reliability. IEEE Trans. Energy Convers. 2012, 27, 96–104.

76. Van Bussel, G.J.W. Offshore wind energy, the reliability dilemma. In Proceedings of the First World Wind Energy Conference, Berlin, Germany, 2–6 July 2002.

| Initiative | Country | Number of WT | Onshore | Offshore | Operational Turbine Years | Start-Up of Survey | End of Survey | Source |
|---|---|---|---|---|---|---|---|---|
| CIRCE | Spain | 4300 | ✓ | | ~13,000 | ~3 years (about 2013) | | [26,27] |
| CREW-Database | USA | ~900 | ✓ | | ~1800 | 2011 | ongoing | [16,28,29,30] |
| CWEA-Database | China | ? (640 WF) | ✓ | | ? | 2010 | 2012 | [31] |
| Elforsk/Vindstat | Sweden | 786 | ✓ | | ~3100 | 1989 | 2005 | [32,33,34] |
| EPRI | USA | 290 | ✓ | | ~580 | 1986 | 1987 | [35] |
| EUROWIN | Europe | ~3500 | ✓ | | ? | 1986 | ~1995 | [36,37] |
| Garrad Hassan | Worldwide | ? (14,000 MW) | ✓ | | ? | ~1992 | ~2007 | [38] |
| Huadian | China | 1313 | ✓ | | 547 | 01/2012 | 05/2012 | [39] |
| LWK | Germany | 643 | ✓ | | >6000 | 1993 | 2006 | [16,40] |
| Lynette | USA | ? | ✓ | | ? | 1981 | 1986 | [41,42] |
| MECAL | Netherlands | 63 | ✓ | | 122 | ~2 years (about 2010) | | [43] |
| Muppandal | India | 15 | ✓ | | 75 | 2000 | 2004 | [44] |
| NEDO | Japan | 924 | ✓ | | 924 | 2004 | 2005 | [45] |
| ReliaWind | Europe | 350 | ✓ | | ? | 2008 | 2010 | [46,47] |
| Robert Gordon University | UK | 77 | ✓ | | ~460 | 1997 | 2006 | [48] |
| Round 1 offshore WF | UK | 120 | | ✓ | 270 | 2004 | 2007 | [49] |
| University Nanjing | China | 108 | ✓ | | ~330 | 2009 | 2013 | [50] |
| SPARTA | UK | 1045 | | ✓ | 1045 | 2013 | ongoing | [51] |
| Strathclyde | UK | 350 | | ✓ | 1768 | 5 years (about 2010) | | [52,53,54] |
| VTT | Finland | 96 | ✓ | | 356 | 1991 | ongoing | [21,55,56] |
| Windstats Newsletter/Report | Germany | 4500 | ✓ | | ~30,000 | 1994 | 2004 | [17,40,57] |
| Windstats Newsletter/Report | Denmark | 2500 | ✓ | | >20,000 | 1994 | 2004 | [17,40,57] |
| WInD-Pool | Germany/Europe | 456 | ✓ | ✓ | 2086 | 2013 | ongoing | [58,59,60,61] |
| WMEP | Germany | 1593 | ✓ | | 15,357 | 1989 | 2008 | [62,63] |

| Initiative | Onshore Capacity Factor [%] | Offshore Capacity Factor [%] |
|---|---|---|
| CREW-Database | 35.2 | |
| EUROWIN | 19 | |
| Lynette | 20 | |
| Muppandal | 24.9 | |
| Round 1 offshore WF | | 29.5 |
| SPARTA | | 39.9 |
| VTT | 21.5 | |
| WInD-Pool | 18.4 | 39 |
| WMEP | 18.5 | |

| Initiative | Onshore Availability [%] | Offshore Availability [%] | ||||

|---|---|---|---|---|---|---|

| Time-Based | Technical | Energetic | Time-Based | Technical | Energetic | |

| CREW-Database | 96.5 | |||||

| CWEA-Database | 97 | |||||

| Elforsk/Vindstat | 96 | |||||

| Garrad Hassan | 96.4 | |||||

| Lynette | 80 | |||||

| Muppandal | 82.9 | 94 | ||||

| Round 1 offshore WF | 80.2 | |||||

| SPARTA | 92.5 | |||||

| VTT | 89 | |||||

| WInD-Pool | 94.1 | 92.0 | 92.2 | 88.1 | ||

| WMEP | 98.3 | |||||

| RDS-PP® | System/Subsystem (Failure Rate [1/a]) | CIRCE | CWEA-Database | Elforsk/Vindstat | EPRI | Huadian | LWK | Muppandal | NEDO |
|---|---|---|---|---|---|---|---|---|---|
| =MDA | Rotor System | 0.094 | 1.961 | 0.053 | 1.026 | 0.141 | 0.321 | 0.187 | 0.038 |
| =MDA10 … =MDA13 | Rotor Blades | 0.037 | 0.403 | 0.052 | 0.357 | 0.026 | 0.194 | 0.187 | 0.011 |
| =MDA20 | Rotor Hub Unit | 0.006 | / | 0.001 | 0.136 | / | / | / | 0.013 |
| =MDA30 | Rotor Brake System | 0.02 | / | / | 0.195 | / | 0.04 | / | 0.001 |
| - | Pitch System | 0.029 | 1.558 | / | 0.338 | 0.115 | 0.088 | / | 0.013 |
| =MDK | Drive Train System | 0.096 | 1.225 | 0.054 | 0.921 | 0.088 | 0.226 | 0.28 | 0.015 |
| =MDK20 | Speed Conversion System | 0.083 | 1.138 | 0.045 | 0.264 | 0.062 | 0.142 | 0.173 | 0.005 |
| =MDK30 | Brake System Drive Train | 0.002 | 0.087 | 0.005 | 0.452 | 0.018 | 0.053 | 0.107 | 0.003 |
| =MDL | Yaw System | 0.02 | 0.317 | 0.026 | 1.245 | 0.026 | 0.115 | 0.16 | 0.005 |
| =MDX | Central Hydraulic System | 0.022 | / | 0.061 | / | / | 0.134 | 0.173 | 0.003 |
| =MDY | Control System | 0.079 | / | 0.05 | 1.424 | 0.106 | 0.222 | 0.12 | 0.015 |
| =MKA | Power Generation System | 0.029 | 1.665 | 0.021 | 0.374 | 0.15 | 0.14 | 0.067 | 0.01 |
| =MS | Transmission | 0.067 | 2 | 0.067 | 1.657 | 0.291 | 0.323 | / | 0.003 |
| =MSE | Converter System | 0.005 | 2 | / | / | 0.229 | 0.005 | / | / |
| =MST | Generator Transformer System | 0.005 | / | / | / | 0.018 | / | / | / |
| =MUD | Nacelle | 0.005 | / | / | 0.043 | / | / | / | 0.009 |
| =MUR | Common Cooling System | 0.028 | / | / | / | / | / | / | / |
| =CKJ10 | Meteorological Measurement | 0.009 | / | / | / | / | 0.061 | 0.027 | 0.058 |
| =UMD | Tower System | 0.003 | / | 0.006 | 0.203 | / | / | / | 0.001 |
| =UMD10 … =UMD40 | Tower System | 0.002 | / | 0.006 | 0.203 | / | / | / | / |
| =UMD80 | Foundation System | 0.001 | / | / | / | / | / | / | 0.001 |
| - | Other | 0.03 | / | 0.065 | 3.302 | 0.044 | 0.312 | / | 0.013 |
| =G | Wind Turbine (total) | 0.481 | 7.167 | 0.403 | 10.195 | 0.846 | 1.855 | 1.013 | 0.171 |
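
The failure-rate tables are hierarchical: subsystem rows nest inside their parent system, and the top-level systems plus “Other” add up to the wind-turbine total (=G). The sketch below checks this for the CIRCE column, using values copied from the table above. It assumes, as the values suggest, that the pitch system is counted within =MDA. The remaining difference of 0.001 1/a is presumably due to rounding.

```python
# CIRCE failure rates [1/a] of the top-level systems (copied from the table)
circe_top_level = {
    "=MDA Rotor System": 0.094,
    "=MDK Drive Train System": 0.096,
    "=MDL Yaw System": 0.02,
    "=MDX Central Hydraulic System": 0.022,
    "=MDY Control System": 0.079,
    "=MKA Power Generation System": 0.029,
    "=MS Transmission": 0.067,
    "=MUD Nacelle": 0.005,
    "=MUR Common Cooling System": 0.028,
    "=CKJ10 Meteorological Measurement": 0.009,
    "=UMD Tower System": 0.003,
    "- Other": 0.03,
}

total = sum(circe_top_level.values())
print(round(total, 3))  # 0.482, vs. 0.481 reported for =G

# Three largest contributors as a share of the summed failure rate
ranked = sorted(circe_top_level.items(), key=lambda kv: kv[1], reverse=True)
for name, rate in ranked[:3]:
    print(f"{name}: {rate / total:.1%}")
```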

| RDS-PP® | System/Subsystem (Failure Rate [1/a]) | University Nanjing | SPARTA (Offshore) | Strathclyde (Offshore) | VTT | Windstats GER | Windstats DK | WMEP |
|---|---|---|---|---|---|---|---|---|
| =MDA | Rotor System | 12.229 | 2.75 | 1.831 | 0.21 | 0.368 | 0.049 | 0.522 |
| =MDA10 … =MDA13 | Rotor Blades | / | 1.353 | 0.52 | 0.2 | 0.223 | 0.035 | 0.113 |
| =MDA20 | Rotor Hub Unit | 0.027 | / | 0.235 | 0.01 | / | / | 0.171 |
| =MDA30 | Rotor Brake System | / | / | / | / | 0.049 | 0.007 | / |
| - | Pitch System | / | 1.397 | 1.076 | / | 0.097 | 0.007 | 0.238 |
| =MDK | Drive Train System | 2.967 | 0.985 | 0.633 | 0.19 | 0.164 | 0.065 | 0.291 |
| =MDK20 | Speed Conversion System | 2.084 | / | 0.633 | 0.15 | 0.1 | 0.04 | 0.106 |
| =MDK30 | Brake System Drive Train | 0.533 | / | / | 0.04 | 0.039 | 0.014 | 0.13 |
| =MDL | Yaw System | 1.089 | 0.77 | 0.189 | 0.1 | 0.126 | 0.027 | 0.177 |
| =MDX | Central Hydraulic System | 1.747 | 1.543 | / | 0.36 | 0.11 | 0.031 | 0.225 |
| =MDY | Control System | 15.223 | 1.31 | 0.428 | 0.1 | 0.223 | 0.05 | 0.403 |
| =MKA | Power Generation System | 2.537 | 0.561 | 0.999 | 0.08 | 0.12 | 0.024 | 0.1 |
| =MS | Transmission | 9.845 | 1.774 | 1.11 | 0.11 | 0.341 | 0.019 | 0.548 |
| =MSE | Converter System | / | 1.318 | 0.18 | / | / | / | / |
| =MST | Generator Transformer System | / | 0.456 | 0.065 | / | / | / | / |
| =MUD | Nacelle | / | / | / | / | / | / | 0.094 |
| =MUR | Common Cooling System | / | / | 0.213 | / | / | / | / |
| =CKJ10 | Meteorological Measurement | / | / | / | / | / | / | / |
| =UMD | Tower System | / | / | 0.185 | 0.09 | / | / | / |
| =UMD10 … =UMD40 | Tower System | / | / | / | / | / | / | / |
| =UMD80 | Foundation System | / | / | / | / | / | / | / |
| - | Other | 1.218 | 6.147 | 2.685 | 0.21 | 0.344 | 0.169 | 0.245 |
| =G | Wind Turbine (total) | 46.856 | 15.84 | 8.273 | 1.45 | 1.796 | 0.434 | 2.606 |

| RDS-PP® | System/Subsystem (Mean Down Time per Failure [days]) | CIRCE | Elforsk/Vindstat | Huadian | LWK | University Nanjing | VTT | WMEP |
|---|---|---|---|---|---|---|---|---|
| =MDA | Rotor System | 6.4 | 3.75 | 4.27 | 1.62 | 0.17 | 10.2 | 3.07 |
| =MDA10 … =MDA13 | Rotor Blades | 8.3 | 3.82 | 7.58 | 1.76 | / | 10.67 | 3.42 |
| =MDA20 | Rotor Hub Unit | 6.76 | 0.52 | / | / | 0.14 | 0.83 | 4.13 |
| =MDA30 | Rotor Brake System | 5.54 | / | / | 2.25 | / | / | / |
| - | Pitch System | 4.17 | / | 3.5 | 1.05 | / | / | 2.14 |
| =MDK | Drive Train System | 8.24 | 10.3 | 6.82 | 4.15 | 0.25 | 21.08 | 4.63 |
| =MDK20 | Speed Conversion System | 8.26 | 10.7 | 6.5 | 5.27 | 0.3 | 25.08 | 6.69 |
| =MDK30 | Brake System Drive Train | 4.29 | 5.23 | 8.53 | 0.74 | 0.06 | 6.08 | 2.71 |
| =MDL | Yaw System | 6.35 | 10.81 | 9.48 | 1.31 | 0.21 | 6.38 | 2.56 |
| =MDX | Central Hydraulic System | 2.05 | 1.8 | / | 1.04 | 0.16 | 3.58 | 1.15 |
| =MDY | Control System | 1.81 | 7.69 | 4.74 | 0.99 | 0.16 | 1.75 | 1.88 |
| =MKA | Power Generation System | 13.65 | 8.78 | 7.02 | 3.1 | 0.24 | 5.13 | 7.45 |
| =MS | Transmission | 3.17 | 4.44 | 6.03 | 1.44 | 0.18 | 5.96 | 1.51 |
| =MSE | Converter System | 3.2 | / | 6.34 | 1.24 | / | / | / |
| =MST | Generator Transformer System | 10.68 | / | 11.37 | / | / | / | / |
| =MUD | Nacelle | 13.98 | / | / | / | / | / | 3.31 |
| =MUR | Common Cooling System | 1.55 | / | / | / | / | / | / |
| =CKJ10 | Meteorological Measurement | 0.83 | / | / | 0.74 | / | / | / |
| =UMD | Tower System | 1.88 | 4.34 | / | / | / | 7.42 | / |
| =UMD10 … =UMD40 | Tower System | 0.45 | 4.34 | / | / | / | / | / |
| =UMD80 | Foundation System | 4.69 | / | / | / | / | / | / |
| - | Other | 2.02 | 2.27 | 2.27 | 0.92 | 0.14 | 2.8 | 1.57 |
| =G | Wind Turbine (total) | 5.18 | 5.42 | 5.75 | 1.72 | 0.18 | 7.29 | 2.57 |
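
Combining the failure-rate and mean-down-time tables gives a rough estimate of the failure-related down time per turbine and year (failure rate × MDT). This is an approximation with several assumptions:

- both figures use the same failure definition and period;
- downtime not attributed to failures (e.g., scheduled maintenance) is ignored;
- a year has 365 days.

The function names are illustrative.

```python
DAYS_PER_YEAR = 365.0  # assumption: calendar year without leap days


def annual_down_time_days(failure_rate_per_a: float, mdt_days: float) -> float:
    """Approximate failure-related down time per turbine and year [days/a]."""
    return failure_rate_per_a * mdt_days


def approx_time_based_availability(failure_rate_per_a: float, mdt_days: float) -> float:
    """Rough availability, assuming all unavailability stems from counted failures."""
    return 1.0 - annual_down_time_days(failure_rate_per_a, mdt_days) / DAYS_PER_YEAR


# Totals (=G) taken from the failure-rate and mean-down-time tables
for name, lam, mdt in [("CIRCE", 0.481, 5.18), ("WMEP", 2.606, 2.57)]:
    down = annual_down_time_days(lam, mdt)
    avail = approx_time_based_availability(lam, mdt)
    print(f"{name}: {down:.2f} days/a, availability approx. {avail:.1%}")
```

For WMEP this gives about 6.7 days per year, or roughly 98.2%. This is close to the 98.3% listed in the availability table. Such agreement should not be expected in general, because initiatives count failures and downtime differently.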

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pfaffel, S.; Faulstich, S.; Rohrig, K. Performance and Reliability of Wind Turbines: A Review. Energies 2017, 10, 1904. https://doi.org/10.3390/en10111904
