Prediction Method for Power Transformer Running State Based on LSTM_DBN Network

Abstract

1. Introduction

2. Prediction of Dissolved Gas Concentrations in Transformer Oil Based on an LSTM Model

2.1. Prediction of Dissolved Gas Concentrations

2.2. Principles of Prediction
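The LSTM quantities listed in the Nomenclature (forget gate f(t), input gate i(t), output gate o(t), weight matrices Wf, Wi, Wc, Wo and biases bf, bi, bc, bo) combine in the standard LSTM cell update. The following is a minimal pure-Python sketch of that textbook formulation, assuming sigmoid gates, tanh for the cell input and output, and gates acting on the concatenation [h(t-1), x(t)]. The function names and the parameter dictionary are hypothetical and are not the authors' implementation.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W, b, v):
    """Return W·v + b for a weight matrix W (list of rows), bias b and vector v."""
    return [sum(w * x for w, x in zip(row, v)) + bk for row, bk in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step (hypothetical helper).

    p maps "Wf", "Wi", "Wc", "Wo" to matrices acting on [h(t-1), x(t)]
    and "bf", "bi", "bc", "bo" to bias vectors.
    """
    z = list(h_prev) + list(x_t)                                   # [h(t-1), x(t)]
    f = [sigmoid(s) for s in affine(p["Wf"], p["bf"], z)]          # forget gate f(t)
    i = [sigmoid(s) for s in affine(p["Wi"], p["bi"], z)]          # input gate i(t)
    c_in = [math.tanh(s) for s in affine(p["Wc"], p["bc"], z)]     # cell state input at time t
    # New cell state, element-wise: f(t)*C(t-1) + i(t)*(cell state input)
    c = [fk * ck + ik * nk for fk, ck, ik, nk in zip(f, c_prev, i, c_in)]
    o = [sigmoid(s) for s in affine(p["Wo"], p["bo"], z)]          # output gate o(t)
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]               # new hidden state h(t)
    return h, c


if __name__ == "__main__":
    # Hidden size 1, input size 1: each matrix is one row of length 2.
    params = {k: [[0.5, 0.5]] for k in ("Wf", "Wi", "Wc", "Wo")}
    params.update({k: [0.0] for k in ("bf", "bi", "bc", "bo")})
    h, c = [0.0], [0.0]
    for x in ([1.0], [0.8], [1.2]):  # toy normalized concentration series
        h, c = lstm_step(x, h, c, params)
    print(h, c)
```

The forget gate decides how much of C(t-1) survives each step, which is what lets the cell carry information across a long concentration history.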

3. Analysis of Transformer Running State Based on Deep Belief Network

3.1. Transformer Running Status Analysis

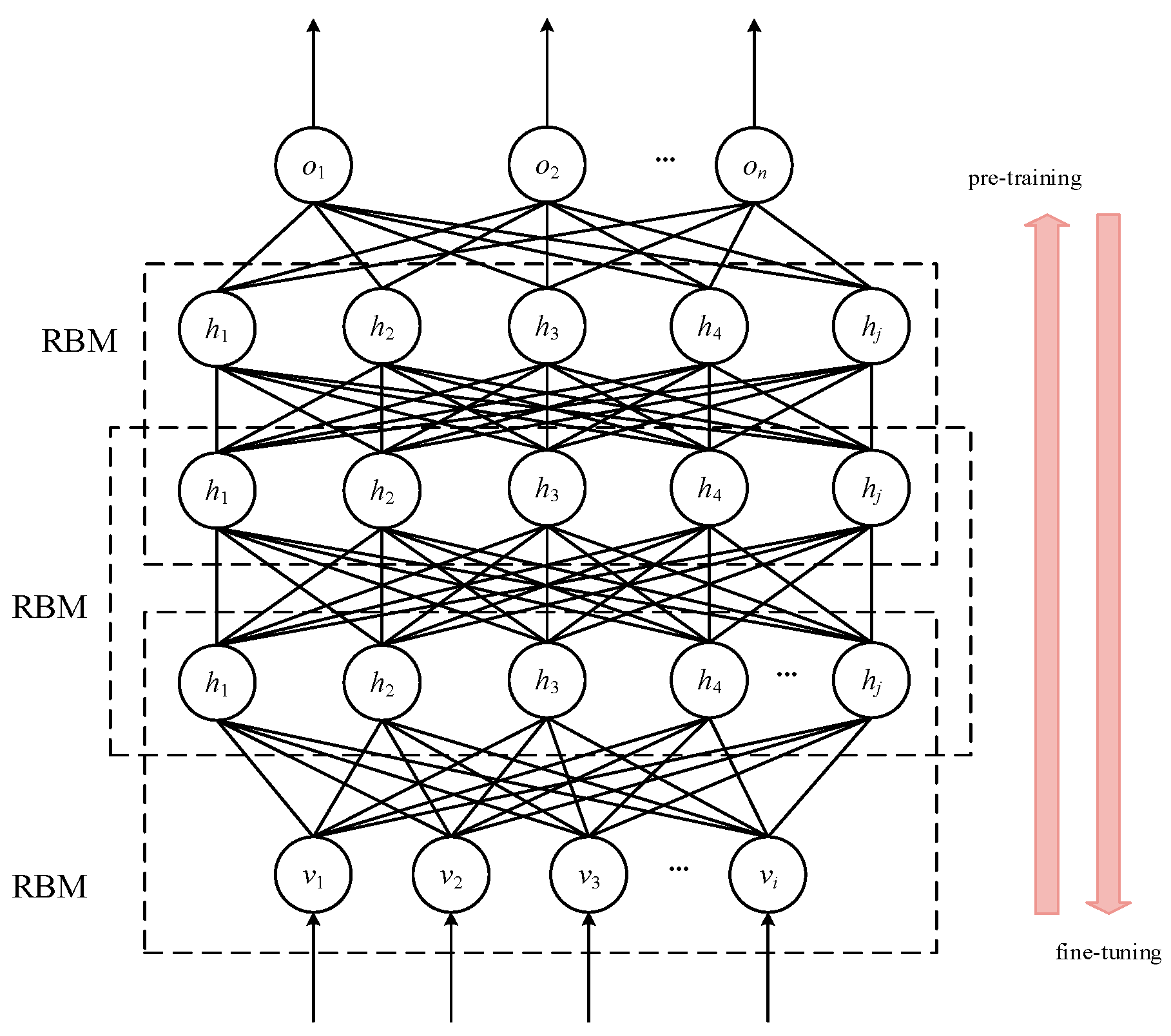

3.2. Deep Belief Network

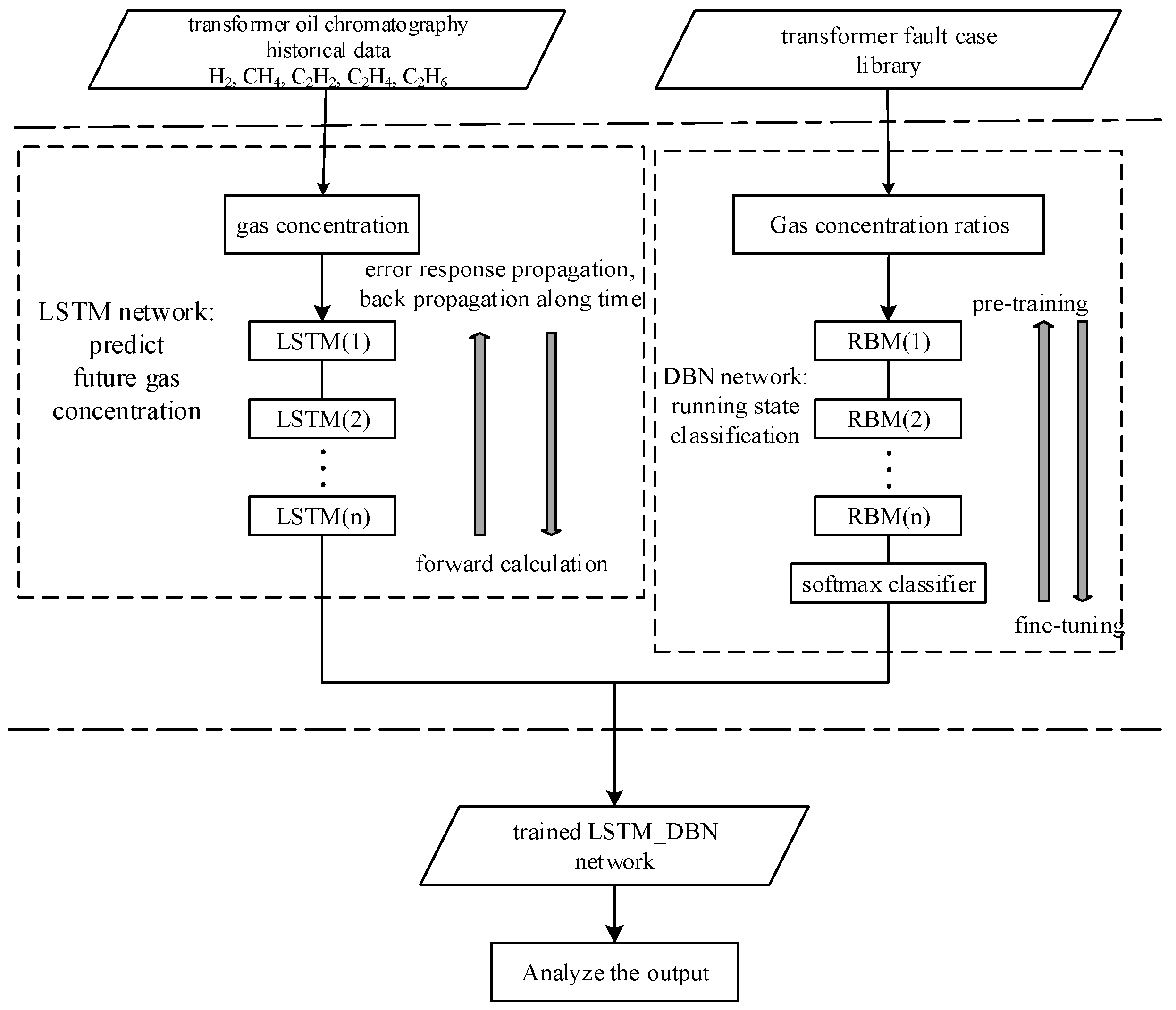
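A DBN is commonly pre-trained greedily as a stack of restricted Boltzmann machines (RBMs), each trained on the hidden activations of the layer below, and then fine-tuned as a classifier (here, over the seven running states). The sketch below shows one binary RBM in the Nomenclature's terms (visible units vi, hidden units hj, weights w between them, biases ai and bj): the energy function, the two conditional probabilities, and a single contrastive-divergence (CD-1) update. It assumes Bernoulli units and CD-1; the unit type and training schedule the authors used are not stated in this section, and all function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def energy(v, h, w, a, b):
    """E(v, h) = -sum_i ai*vi - sum_j bj*hj - sum_ij vi*wij*hj."""
    return (-sum(ai * vi for ai, vi in zip(a, v))
            - sum(bj * hj for bj, hj in zip(b, h))
            - sum(v[i] * w[i][j] * h[j] for i in range(len(v)) for j in range(len(h))))


def p_h_given_v(v, w, b):
    """P(hj = 1 | v) = sigmoid(bj + sum_i vi*wij)."""
    return [sigmoid(b[j] + sum(v[i] * w[i][j] for i in range(len(v)))) for j in range(len(b))]


def p_v_given_h(h, w, a):
    """P(vi = 1 | h) = sigmoid(ai + sum_j wij*hj)."""
    return [sigmoid(a[i] + sum(w[i][j] * h[j] for j in range(len(h)))) for i in range(len(a))]


def cd1_update(v0, w, a, b, lr=0.1, rng=random):
    """One CD-1 step on a single sample; updates w, a, b in place, returns the reconstruction."""
    ph0 = p_h_given_v(v0, w, b)
    h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]  # sample binary hidden states
    v1 = p_v_given_h(h0, w, a)                            # mean-field reconstruction of v
    ph1 = p_h_given_v(v1, w, b)
    for i in range(len(a)):
        for j in range(len(b)):
            w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        a[i] += lr * (v0[i] - v1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])
    return v1


if __name__ == "__main__":
    rng = random.Random(0)
    n_vis, n_hid = 4, 2
    w = [[rng.gauss(0.0, 0.01) for _ in range(n_hid)] for _ in range(n_vis)]
    a, b = [0.0] * n_vis, [0.0] * n_hid
    data = [[1, 1, 0, 0], [0, 0, 1, 1]]  # toy binary patterns
    for _ in range(200):
        for v in data:
            cd1_update(v, w, a, b, lr=0.1, rng=rng)
    print([round(p, 2) for p in p_h_given_v(data[0], w, b)])
```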

4. Transformer State Prediction Process

- (1) Collect the transformer oil chromatographic data and select the characteristic gases H2, CH4, C2H2, C2H4 and C2H6 as the model inputs;

- (2) Train the LSTM model. Using the historical oil chromatography data, take the concentration history of each characteristic gas as the input and the corresponding later concentration as the output, so that the trained LSTM model yields future gas concentration values;

- (3) Train the DBN model. Using the samples in the transformer fault case library, take the gas concentration ratios as the input of the DBN and the seven transformer running states as the output;

- (4) Test the trained LSTM_DBN network on the test-set samples. Feed the concentrations of the five characteristic gases into the LSTM model to predict their future values, calculate the gas concentration ratios from these predictions, and input the ratios to the DBN to obtain the future running state of the transformer;

- (5) If the prediction indicates a fault, issue an early warning promptly, together with the predicted fault type.
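The five steps can be sketched as the plumbing below. The trained LSTM and DBN are replaced by hypothetical callables (`forecast`, `to_ratios`, `classify`); the function names, the window length `lag`, and the use of the label "H" for the fault-free state are assumptions for illustration only, and the toy rule in the demo is not a diagnostic criterion.

```python
from typing import Callable, Dict, List, Sequence, Tuple

GASES = ("H2", "CH4", "C2H2", "C2H4", "C2H6")


def make_windows(series: Sequence[float], lag: int) -> List[Tuple[List[float], float]]:
    """Step (2): turn one gas history into (previous `lag` values, next value) training pairs."""
    return [(list(series[k:k + lag]), series[k + lag]) for k in range(len(series) - lag)]


def predict_state(history: Dict[str, List[float]],
                  forecast: Callable[[str, List[float]], float],
                  to_ratios: Callable[[Dict[str, float]], List[float]],
                  classify: Callable[[List[float]], str]) -> Tuple[str, bool]:
    """Steps (4)-(5): forecast each gas, convert to ratios, classify, flag a warning."""
    future = {g: forecast(g, history[g]) for g in GASES}  # trained LSTM would go here
    state = classify(to_ratios(future))                   # trained DBN would go here
    warn = state != "H"                                   # assumption: "H" = fault-free state
    return state, warn


if __name__ == "__main__":
    hist = {g: [10.0, 11.0, 12.5] for g in GASES}
    hist["CH4"] = [20.0, 24.0, 30.0]
    state, warn = predict_state(
        hist,
        forecast=lambda g, s: s[-1] + (s[-1] - s[-2]),   # naive trend stand-in for the LSTM
        to_ratios=lambda c: [c["CH4"] / c["H2"]],         # single-ratio stand-in
        classify=lambda r: "MT" if r[0] > 1.0 else "H",   # toy rule stand-in for the DBN
    )
    print(make_windows(hist["CH4"], lag=2), state, warn)
```

Keeping the forecaster and classifier as injected functions mirrors the two-stage structure of LSTM_DBN: either stage can be retrained or swapped without touching the other.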

5. Case Analysis

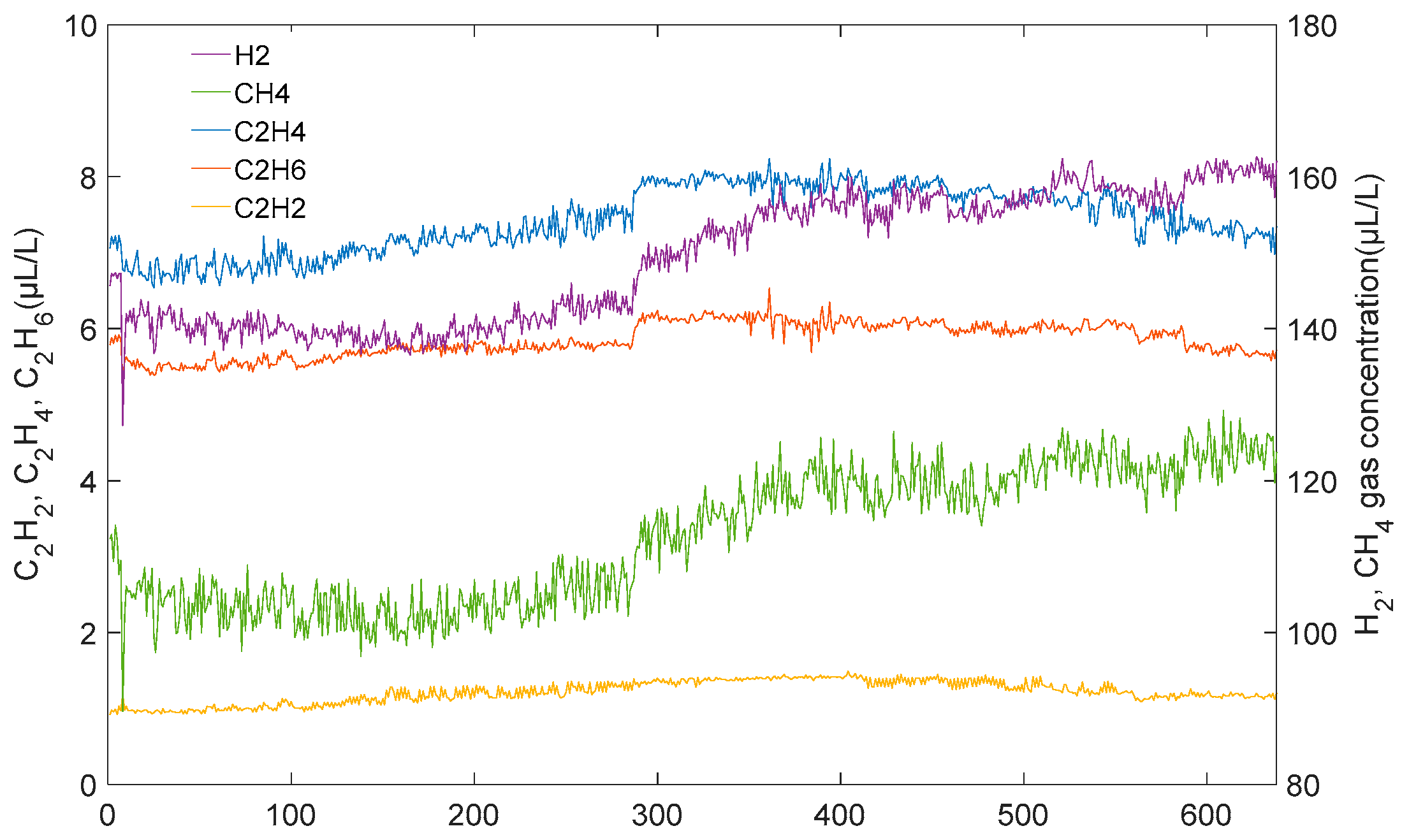

5.1. Gas Concentration Prediction

5.2. Gas Concentration Prediction

5.3. Running State Prediction

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Nomenclature

| Variables | Description |
|---|---|
| x | the input vector |
| y | the output vector |
| h | the state of the hidden layer |
| Wxh | the input-to-hidden weight matrix of the RNN |
| Why | the hidden-to-output weight matrix of the RNN |
| Whh | the hidden-to-hidden weight matrix of the RNN, applied to the hidden state as input at the next time step |
| f(t) | the output of the forget gate |
| Wf | the forget gate weight matrix |
| bf | the forget gate bias |
| i(t) | the output of the input gate |
| | the cell state input at time t |
| Wi | the input gate weight matrix |
| Wc | the cell state input weight matrix |
| bi | the input gate bias |
| bc | the cell state input bias |
| o(t) | the output of the output gate |
| Wo | the output gate weight matrix |
| bo | the output gate bias |
| v | the visible layer |
| w | the weights between the visible and hidden layers |
| | the parameters of the RBM |
| wij | the connection weight between visible node vi and hidden node hj |
| ai | the bias of vi |
| bj | the bias of hj |

| Symbol | Description |
|---|---|
| | the activation function |
| | element-wise multiplication |
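The RNN rows of the Nomenclature (Wxh, Whh, Why) describe the plain recurrent update that the LSTM gates refine. A minimal sketch, assuming a tanh hidden activation and no bias terms (the Nomenclature lists none for the RNN); the function names are hypothetical.

```python
import math


def matvec(W, v):
    """Matrix (list of rows) times vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_forward(xs, Wxh, Whh, Why, h0):
    """Plain RNN: h(t) = tanh(Wxh x(t) + Whh h(t-1)), y(t) = Why h(t)."""
    h = list(h0)
    ys = []
    for x in xs:
        h = [math.tanh(a + r) for a, r in zip(matvec(Wxh, x), matvec(Whh, h))]
        ys.append(matvec(Why, h))
    return ys, h


if __name__ == "__main__":
    ys, h = rnn_forward([[0.2], [0.4], [0.6]], Wxh=[[1.0]], Whh=[[0.5]], Why=[[2.0]], h0=[0.0])
    print(ys, h)
```

Because the same Whh multiplies the hidden state at every step, gradients over long sequences tend to vanish or explode; the gated cell state of the LSTM (the f(t), i(t) and o(t) rows above) is the standard remedy.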


| Method | Gas ratios |
|---|---|
| IEC ratios | CH4/H2, C2H2/C2H4, C2H4/C2H6 |
| Rogers ratios | CH4/H2, C2H2/C2H4, C2H4/C2H6, C2H6/CH4 |
| Dornenburg ratios | CH4/H2, C2H2/C2H4, C2H2/CH4, C2H6/C2H2 |
| Duval ratios | CH4/C, C2H2/C, C2H4/C, where C = CH4 + C2H2 + C2H4 |
| Gas concentration ratios | CH4/H2, C2H2/C2H4, C2H4/C2H6, C2H6/CH4, C2H2/CH4, C2H6/C2H2, CH4/C1, C2H2/C1, C2H4/C1, H2/C2, CH4/C2, C2H2/C2, C2H4/C2, C2H6/C2, where C1 = CH4 + C2H2 + C2H4 and C2 = H2 + CH4 + C2H2 + C2H4 + C2H6 |
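The last row of the ratio table is the union of the IEC, Rogers, Dornenburg and Duval ratios (with Duval's C written as C1) plus five ratios normalized by the five-gas total C2, 14 ratios in all. A minimal sketch of computing them from predicted concentrations, as in step (4) of the prediction process. The function name and dictionary keys are hypothetical, and returning NaN for a zero denominator (e.g. C2H6/C2H2 when no C2H2 is detected) is an assumption, since the source does not say how that case is handled.

```python
def gas_ratios(conc):
    """Return the 14 gas concentration ratios.

    conc maps "H2", "CH4", "C2H2", "C2H4", "C2H6" to concentrations in one common unit.
    A zero denominator yields NaN here (assumed handling).
    """
    h2, ch4, c2h2, c2h4, c2h6 = (conc[g] for g in ("H2", "CH4", "C2H2", "C2H4", "C2H6"))
    c1 = ch4 + c2h2 + c2h4                 # C1, as in the Duval ratios
    c2 = h2 + ch4 + c2h2 + c2h4 + c2h6     # C2, total of all five gases

    def div(num, den):
        return num / den if den else float("nan")

    return {
        "CH4/H2": div(ch4, h2),
        "C2H2/C2H4": div(c2h2, c2h4),
        "C2H4/C2H6": div(c2h4, c2h6),
        "C2H6/CH4": div(c2h6, ch4),
        "C2H2/CH4": div(c2h2, ch4),
        "C2H6/C2H2": div(c2h6, c2h2),
        "CH4/C1": div(ch4, c1),
        "C2H2/C1": div(c2h2, c1),
        "C2H4/C1": div(c2h4, c1),
        "H2/C2": div(h2, c2),
        "CH4/C2": div(ch4, c2),
        "C2H2/C2": div(c2h2, c2),
        "C2H4/C2": div(c2h4, c2),
        "C2H6/C2": div(c2h6, c2),
    }


IEC_KEYS = ("CH4/H2", "C2H2/C2H4", "C2H4/C2H6")

if __name__ == "__main__":
    sample = {"H2": 100.0, "CH4": 50.0, "C2H2": 0.0, "C2H4": 25.0, "C2H6": 25.0}  # made-up values
    r = gas_ratios(sample)
    print({k: r[k] for k in IEC_KEYS})
```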

| Type of Gas | LSTM avg. error (%) | GRNN avg. error (%) | DBN avg. error (%) | SVM avg. error (%) |
|---|---|---|---|---|
| H2 | 1.89 | 5.01 | 2.48 | 6.77 |
| CH4 | 0.26 | 3.93 | 1.78 | 4.01 |
| C2H2 | 2.45 | 4.67 | 1.93 | 6.32 |
| C2H4 | 1.45 | 2.98 | 2.05 | 5.94 |
| C2H6 | 2.1 | 4.24 | 1.64 | 8.46 |
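The table does not define "average error"; one common definition is the mean absolute percentage error, used in the sketch below as an assumption. The sketch also transcribes the tabulated values to compare the models per gas and on an unweighted mean across the five gases.

```python
def average_relative_error(actual, predicted):
    """Mean absolute percentage error (%), one common definition of 'average error' (assumed)."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    return 100.0 * sum(abs(p - a) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


# Average errors (%) transcribed from the table above.
TABLE = {
    "H2":   {"LSTM": 1.89, "GRNN": 5.01, "DBN": 2.48, "SVM": 6.77},
    "CH4":  {"LSTM": 0.26, "GRNN": 3.93, "DBN": 1.78, "SVM": 4.01},
    "C2H2": {"LSTM": 2.45, "GRNN": 4.67, "DBN": 1.93, "SVM": 6.32},
    "C2H4": {"LSTM": 1.45, "GRNN": 2.98, "DBN": 2.05, "SVM": 5.94},
    "C2H6": {"LSTM": 2.10, "GRNN": 4.24, "DBN": 1.64, "SVM": 8.46},
}

MODELS = ("LSTM", "GRNN", "DBN", "SVM")
best_per_gas = {gas: min(errs, key=errs.get) for gas, errs in TABLE.items()}
mean_per_model = {m: sum(TABLE[g][m] for g in TABLE) / len(TABLE) for m in MODELS}

if __name__ == "__main__":
    print(best_per_gas)
    print({m: round(e, 3) for m, e in mean_per_model.items()})
```

With these values LSTM has the lowest error for H2, CH4 and C2H4 and DBN for C2H2 and C2H6; averaged over the five gases the errors are about 1.63% (LSTM), 1.98% (DBN), 4.17% (GRNN) and 6.30% (SVM).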

| Month | H | LT | MT | HT | PD | LD | HD | Fault Case Rate |
|---|---|---|---|---|---|---|---|---|
| May | 57 | 1 | 3 | 0 | 1 | 0 | 0 | 8.1% |
| June | 53 | 0 | 5 | 1 | 1 | 0 | 0 | 11.7% |
| July | 49 | 0 | 10 | 2 | 0 | 0 | 1 | 20.9% |
| August | 30 | 2 | 28 | 1 | 0 | 1 | 0 | 51.6% |
| September | 21 | 4 | 34 | 1 | 0 | 0 | 0 | 65.0% |
| October | 16 | 3 | 37 | 4 | 0 | 2 | 0 | 74.2% |
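Reading Fault Case Rate as the percentage of a month's cases that fall outside state H reproduces the rate column: five of the six months match to one decimal place, while July evaluates to 20.97% against the listed 20.9%. The sketch below makes that reading explicit; treating H as the fault-free state and this definition of the rate are inferences from the table, not definitions given in the source.

```python
# Predicted case counts per month, transcribed from the table above.
COUNTS = {
    "May":       {"H": 57, "LT": 1, "MT": 3,  "HT": 0, "PD": 1, "LD": 0, "HD": 0},
    "June":      {"H": 53, "LT": 0, "MT": 5,  "HT": 1, "PD": 1, "LD": 0, "HD": 0},
    "July":      {"H": 49, "LT": 0, "MT": 10, "HT": 2, "PD": 0, "LD": 0, "HD": 1},
    "August":    {"H": 30, "LT": 2, "MT": 28, "HT": 1, "PD": 0, "LD": 1, "HD": 0},
    "September": {"H": 21, "LT": 4, "MT": 34, "HT": 1, "PD": 0, "LD": 0, "HD": 0},
    "October":   {"H": 16, "LT": 3, "MT": 37, "HT": 4, "PD": 0, "LD": 2, "HD": 0},
}


def fault_case_rate(row, healthy="H"):
    """Percentage of cases not in the `healthy` state (assumed meaning of the rate column)."""
    total = sum(row.values())
    return 100.0 * (total - row[healthy]) / total


if __name__ == "__main__":
    for month, row in COUNTS.items():
        print(f"{month:<10} {fault_case_rate(row):5.1f}%")
```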

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, J.; Su, L.; Yan, Y.; Sheng, G.; Xie, D.; Jiang, X. Prediction Method for Power Transformer Running State Based on LSTM_DBN Network. Energies 2018, 11, 1880. https://doi.org/10.3390/en11071880
