1. Introduction

In recent years, renewable energy sources (RESs), particularly photovoltaic (PV) sources, have substantially penetrated modern power systems [1]. A number of solar PV sources are expected to integrate into the residential power sector due to their abundant and environmentally friendly nature, as well as their proximity to end-users. The adoption of solar PV sources can reduce system losses; improve reliability, security, and stability; and minimize transmission congestion [2]. However, the solar source is intermittent, and it is difficult to accurately predict its power generation. This issue can directly affect power generation planning and may degrade power quality and security. Several state-of-the-art deep learning techniques have been proposed in the literature to accurately forecast solar PV power generation in modern power networks.

Deep learning techniques are a subset of machine learning that model the neurons in the human brain. They evolved from the artificial neural network (ANN), which was pioneered in 1943 by Warren McCulloch and Walter Pitts [3]. The ANN was later developed into the recurrent neural network (RNN) by David Everett [4] to address the problem of so-called very deep learning, which arises with more than 1000 neurons [5]. Subsequently, the RNN evolved into the long short-term memory (LSTM) model in 1997 with the addition of a cell state [6]. The gated recurrent unit (GRU) model was developed from the LSTM model in 2014 and is conceptually similar but requires fewer parameters [7]. In 1997, the bidirectional RNN was developed to allow forward and backward processing, which later evolved into BiRNN, BiLSTM, and BiGRU [8]. Another branch of machine learning is the convolutional neural network (CNN), which emerged in the 1980s and is designed to process pixel data [9].
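The statement that the GRU requires fewer parameters than the LSTM can be checked with a quick count. The sketch below assumes the textbook formulations (four weight blocks for the LSTM, three for the GRU) and hypothetical sizes of 4 input features and 64 hidden units; neither value is taken from this paper, and some implementations add extra bias terms, so exact counts may differ.

```python
def recurrent_params(input_dim, hidden_units, n_blocks):
    """Count trainable parameters of a gated recurrent layer.

    Each block (gate or candidate state) has an input weight matrix,
    a recurrent weight matrix, and one bias vector.
    """
    per_block = hidden_units * (input_dim + hidden_units) + hidden_units
    return n_blocks * per_block


# Hypothetical sizes chosen only for illustration.
INPUT_DIM = 4
HIDDEN_UNITS = 64

# LSTM: input, forget, and output gates plus the candidate cell state.
lstm_params = recurrent_params(INPUT_DIM, HIDDEN_UNITS, n_blocks=4)
# GRU: update and reset gates plus the candidate hidden state.
gru_params = recurrent_params(INPUT_DIM, HIDDEN_UNITS, n_blocks=3)

print(f"LSTM parameters: {lstm_params}")
print(f"GRU parameters:  {gru_params}")
print(f"GRU / LSTM ratio: {gru_params / lstm_params:.2f}")
```

Under these assumptions, the GRU layer carries three quarters of the parameters of an LSTM layer of the same width, regardless of the sizes chosen.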

Deep learning techniques have been applied in several research projects. The detection of physical damage on insulators has been studied using a deep learning technique [10]. Additionally, this technique has been applied to calculate reactive power in an IEEE 14-Bus test system and optimize power flow [11,12,13]. In [14], several recent deep learning approaches were applied for short-term Net-PV forecasting, while the authors in [15] proposed frequency-domain analysis and deep learning for ultra-short-term PV power generation forecasting.

Different deep learning models have recently been combined into hybrid models to improve model efficiency. CNN-GRU is a hybrid predictive model used for short-term wind speed forecasting, in which numerical weather prediction (NWP) and actual wind speeds are used as the inputs. The CNN-GRU model is used to resolve size differences between data types [16]. The CNN-BiGRU (bidirectional GRU) hybrid model can fully extract text features, capture long-distance sequence dependencies, and improve training reliability [17].

Among the applications of deep learning techniques, forecasting is particularly popular. Deep learning techniques have been applied in short-term forecasting and mid-term peak load forecasting [18,19,20]. Moreover, the RNN model has been used in forecasting due to its high efficiency [21,22]. The LSTM model, which adds a cell state (memory) to the RNN model, was subsequently applied to forecasting and was found to yield high accuracy [19,20,23]. Later, the LSTM model was developed into the GRU model by removing the cell state, leaving only the update gate and reset gate; the GRU model has been used for short-term forecasts [16,24,25,26]. Typically, CNN has been used for image processing, but some studies have applied it to electrical forecasting [27]. Both CNN-GRU and CNN-BiGRU models were used to forecast short-term residential loads [17,28]. This literature survey shows that deep learning techniques have been widely applied in forecasting. In [29], short-term air conditioning loads were forecasted for effective demand response using a Levenberg–Marquardt algorithm-based ANN, which yielded better performance than the scaled conjugate gradient (SCG) and statistical regression approaches.

To promote net-zero emissions, many countries worldwide have integrated PV power generation systems into distribution networks and microgrids [30,31]. The authors in [32] studied the effects of dust on PV panels: the panel voltage decreased when the dust level was high, and thus the efficiency decreased. The dust also caused a partial shading fault that, if not carefully monitored, could lead to a hot spot on the PV panels [33]. To prevent this situation and detect the hot spot, global maximum power point tracking (GMPPT) is employed [34]. Due to the uncertainty and variability of the PV source, it is necessary to use highly accurate techniques to predict the output power generation from PV power plants. So far, many researchers have applied deep learning techniques in energy forecasting to maximize the utilization of PV power plants and for effective energy planning [35].

Motivated by the above discussion, this paper examines the performances of different well-known deep learning techniques for forecasting short-term PV power generation. The selected deep learning techniques include RNN, CNN, GRU, LSTM, BiLSTM, BiGRU, CNN-LSTM, CNN-BiLSTM, CNN-GRU, and CNN-BiGRU. A real-site floating PV power plant with a capacity of 1.5 MWp at Suranaree University of Technology Hospital in Thailand was selected as the study area. Several input data variables with a resolution of five minutes were utilized for training and testing deep learning models under different weather conditions and time horizons. Furthermore, the root mean square error (RMSE), mean absolute percentage error (MAPE), and mean absolute error (MAE) were used to quantify error values between actual and forecasted values from different deep learning methods.
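For reference, the three error measures can be computed as in the minimal sketch below. It uses the standard textbook definitions; the function names and the sample values are illustrative assumptions, and skipping zero actual values in MAPE (for example, night-time PV output) is one possible convention, not necessarily the one used in this study.

```python
import math


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root mean square error."""
    squared = sum((a - f) ** 2 for a, f in zip(actual, forecast))
    return math.sqrt(squared / len(actual))


def mape(actual, forecast):
    """Mean absolute percentage error, in percent.

    Assumption: samples whose actual value is zero are skipped,
    because the percentage error is undefined for them.
    """
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    return 100.0 * sum(abs((a - f) / a) for a, f in pairs) / len(pairs)


# Made-up PV power values in kW, for illustration only.
actual = [100.0, 200.0, 400.0]
forecast = [110.0, 190.0, 380.0]

print(f"MAE  = {mae(actual, forecast):.3f} kW")
print(f"RMSE = {rmse(actual, forecast):.3f} kW")
print(f"MAPE = {mape(actual, forecast):.3f} %")
```

MAE and RMSE are expressed in the units of the forecasted quantity, whereas MAPE is scale-free; RMSE penalizes large individual errors more heavily than MAE does.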

Table 1 illustrates the differences between our work and existing studies. The main objective of our work was to investigate the performances of widely used deep learning techniques for short-term forecasting of PV power generation on a real site of a floating PV power plant using real datasets.

The main contributions of this paper are as follows:

We have investigated and examined the effectiveness of several well-known deep learning techniques for forecasting the PV power generation of a real-site floating PV power plant located at the Suranaree University of Technology Hospital in Thailand. The selected deep learning techniques were classified into single models (RNN, CNN, LSTM, GRU, BiLSTM, and BiGRU) and hybrid models (CNN-LSTM, CNN-BiLSTM, CNN-GRU, and CNN-BiGRU).

We considered short-term forecasting of the PV power generation. The input variables (PV power generation, solar irradiance, PV module temperature, and wind speed), recorded at five-minute resolution at the floating PV power plant (1.5 MWp in capacity), were utilized to verify the performances of the proposed deep learning techniques. Three scenarios, namely one-day PV power generation forecasting under regular weather, one-day forecasting under cloudy weather, and one-week PV power generation forecasting, were extensively examined and discussed.

Widely used error measurements (RMSE, MAPE, and MAE) were applied to quantify the errors between actual and forecasted values from the different deep learning models. The accuracy of the deep learning models was determined based on these measurements. The proposed deep learning techniques were implemented using Keras based on TensorFlow in Python via the Jupyter notebook.

The rest of the paper is organized as follows:

Section 2 presents the details of the real-site floating PV power plant used as a case study in this paper.

Section 3 introduces the application of the selected deep learning techniques for short-term PV power generation forecasting, including implementation setup and model accuracy analysis.

Section 4 presents the verification results obtained by different deep learning models.

Finally, Section 5 concludes the paper. Note that the theoretical backgrounds of the deep learning models are provided in Appendices A through J.

2. A 1.5 MWp Floating PV Power Plant

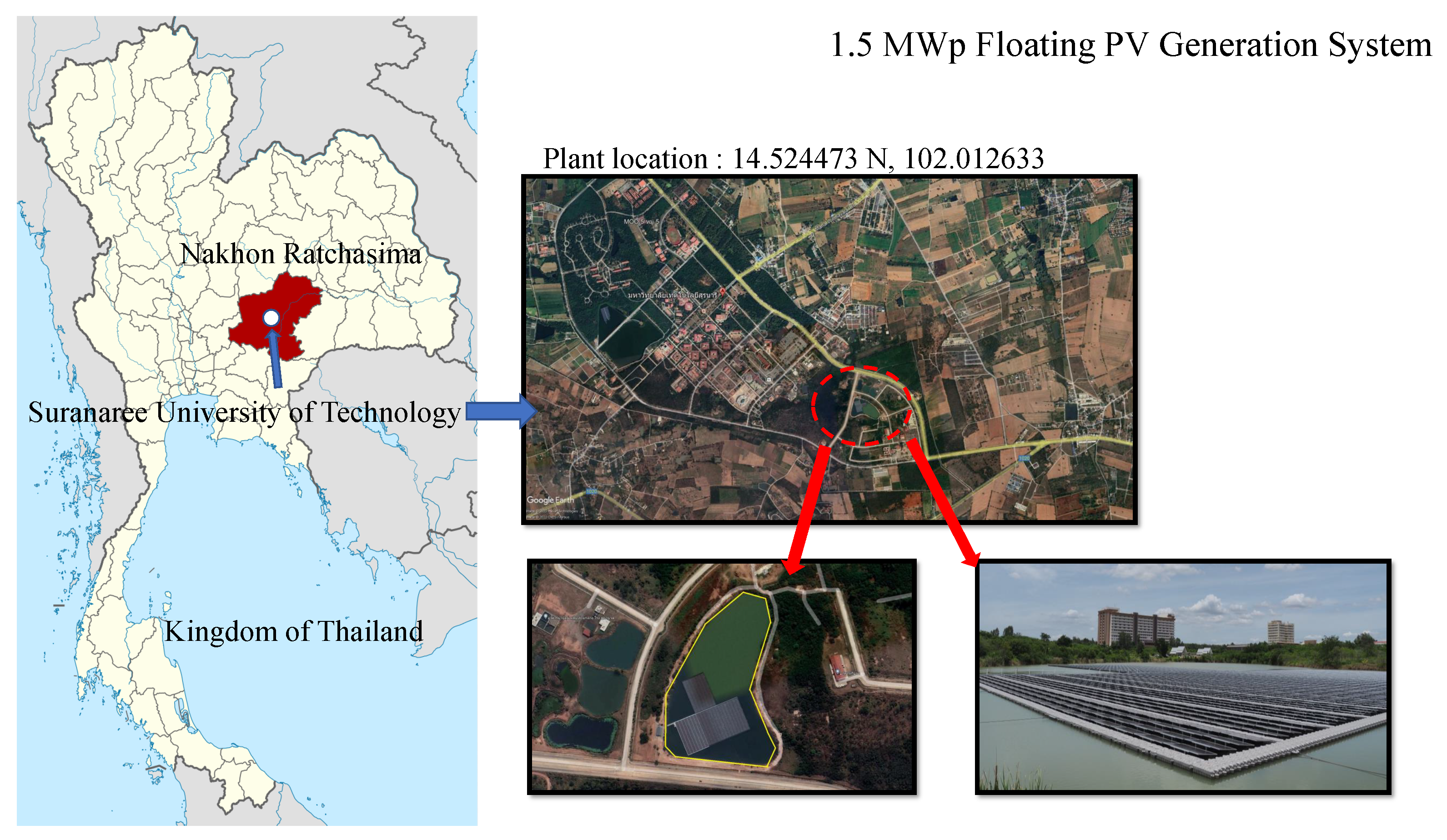

In this study, a floating PV power plant, located at 111 University Road, Suranaree Sub-district, Mueang Nakhon Ratchasima District, Nakhon Ratchasima Province, Thailand, with a population of 30,000, was selected as the study area to investigate the performances of the proposed deep learning models. The installed capacity of the floating PV plant was 1.5 MWp. The floating PV plant cooperated with Global Power Synergy Public Co., Ltd., Bangkok, Thailand 10120. In the floating PV plant, bi-facial cells and mono-facial panels were installed with a total of 8 inverters; each inverter contains 14 strings with 25 panels. Details of the PV modules are given in Table 2; the installed floating PV site is illustrated in Figure 1.

Specifically, we focus on short-term PV power forecasting, using five-minute resolution data for training and testing deep learning models. The forecasts include one-day and one-week periods, with the one-day forecast further divided into two events: (i) regular weather conditions and (ii) cloudy weather conditions. Input data for the deep learning models include PV power generation, solar irradiance, wind speed, and PV module temperature. The data used for forecasting were selected from a three-month period, between 1 February and 23 April 2022, and divided into training and testing sets. For the one-day forecast under regular weather conditions, the training set includes data from 1 February to 15 April (74 days), with the testing set consisting of data from 16 April (1 day). For the one-day forecast under cloudy weather conditions, the training set also includes data from 1 February to 15 April (74 days), with the testing set consisting of data from 18 April (1 day). Finally, for the one-week forecast, the training set includes data from 1 February to 15 April (74 days), with the testing set consisting of data from 16 April to 23 April (7 days). The training and testing data are shown in Figure 2.
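The date-based split described above can be sketched as follows. This is an illustration only, not the authors' preprocessing code: the timestamps are synthetic, the helper names are hypothetical, and the end of the one-week test window is treated as exclusive (up to, but not including, 23 April) so that it spans the 7 days stated in the text.

```python
from datetime import datetime, timedelta

STEP = timedelta(minutes=5)          # five-minute resolution
SAMPLES_PER_DAY = 24 * 60 // 5       # 288 samples per day


def make_timestamps(start, end):
    """Return timestamps from start (inclusive) to end (exclusive)."""
    stamps = []
    current = start
    while current < end:
        stamps.append(current)
        current += STEP
    return stamps


def select(stamps, start, end):
    """Keep the timestamps that fall in the half-open window [start, end)."""
    return [t for t in stamps if start <= t < end]


# Synthetic timeline standing in for the measured dataset.
timeline = make_timestamps(datetime(2022, 2, 1), datetime(2022, 4, 23))

# Training set shared by all three scenarios: 1 February to 15 April.
train = select(timeline, datetime(2022, 2, 1), datetime(2022, 4, 16))

# Test sets for the three scenarios.
test_regular = select(timeline, datetime(2022, 4, 16), datetime(2022, 4, 17))
test_cloudy = select(timeline, datetime(2022, 4, 18), datetime(2022, 4, 19))
test_week = select(timeline, datetime(2022, 4, 16), datetime(2022, 4, 23))

print(f"train:        {len(train) // SAMPLES_PER_DAY} days")
print(f"test regular: {len(test_regular) // SAMPLES_PER_DAY} day")
print(f"test cloudy:  {len(test_cloudy) // SAMPLES_PER_DAY} day")
print(f"test week:    {len(test_week) // SAMPLES_PER_DAY} days")
```

Because the split is chronological, no test sample precedes a training sample, which avoids leaking future information into training.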

5. Conclusions

Due to the advancement of computing technologies, various novel deep learning techniques have been proposed. One area where these techniques have been extensively applied is power generation forecasting from renewable energy sources, which enables effective system planning and enhances energy security. In this paper, we investigated the performances of several deep learning techniques for short-term forecasting of PV power generation at a real-site floating PV power plant located at the Suranaree University of Technology Hospital in Thailand. The selected deep learning techniques include RNN, CNN, LSTM, GRU, BiLSTM, BiGRU, CNN-LSTM, CNN-BiLSTM, CNN-GRU, and CNN-BiGRU. Several input data variables with a five-minute resolution from the real floating PV power plant were used to train and test the deep learning models. The performance of the models was quantified using MAE, MAPE, and RMSE errors. Three case studies, based on different weather conditions and time durations, were provided for verification. All three case studies were trained using the same dataset. The effectiveness of the deep learning models varied under different weather conditions and time durations. The CNN-BiGRU model yielded the best performance for one-day PV forecasting, while the BiLSTM model was the most suitable for one-week PV forecasting.