Activity-Aware Energy-Efficient Automation of Smart Buildings

Abstract

1. Introduction

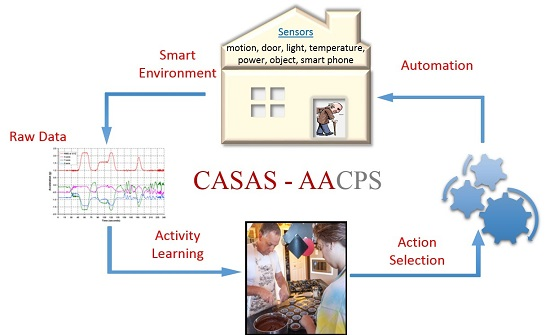

2. Energy-Efficient Smart Buildings

3. Smart Environments

4. Activity Awareness

5. Activity-Aware Home Automation

6. Experimental Results

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Activity | # Sensor Events |
|---|---|
| Bathe | 22,761 |
| Bed toilet transition | 6817 |
| Cook | 26,032 |
| Drink | 11,522 |
| Eat | 16,961 |
| Enter home | 1376 |
| Leave home | 2570 |
| Other activity | 791,938 |
| Relax | 8753 |
| Sleep | 793,531 |
| Toilet | 56,969 |
| Wash dishes | 2900 |
| Watch TV | 450,628 |
| Water plants | 2408 |
| Work on computer | 283,509 |

| Activity | Bathe | Bed Toilet | Cook | Drink | Eat | Enter Home | Leave Home | Other Activity | Relax | Sleep | Toilet | Wash Dishes | Watch TV | Water Plants | Work |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bathe | 1201 | 0 | 0 | 0 | 0 | 0 | 3 | 144 | 0 | 0 | 1059 | 0 | 0 | 0 | 11 |
| Bed toilet | 47 | 998 | 0 | 0 | 0 | 0 | 0 | 175 | 0 | 55 | 104 | 0 | 0 | 0 | 4 |
| Cook | 0 | 0 | 12 | 63 | 114 | 0 | 25 | 6358 | 0 | 0 | 0 | 0 | 76 | 0 | 9 |
| Drink | 0 | 0 | 35 | 80 | 0 | 0 | 0 | 2482 | 0 | 41 | 11 | 21 | 406 | 0 | 9 |
| Eat | 0 | 3 | 5 | 43 | 379 | 0 | 0 | 2873 | 0 | 9 | 29 | 0 | 41 | 0 | 0 |
| Enter home | 0 | 0 | 0 | 0 | 2 | 357 | 2 | 113 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| Leave home | 0 | 0 | 3 | 1 | 11 | 21 | 207 | 336 | 0 | 2 | 0 | 0 | 23 | 0 | 2 |
| Other activity | 172 | 287 | 1865 | 2181 | 1574 | 93 | 486 | 88,216 | 48 | 863 | 852 | 58 | 5102 | 0 | 2686 |
| Relax | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 243 | 0 | 58 | 1 | 0 | 6 | 0 | 2 |
| Sleep | 0 | 61 | 0 | 1 | 9 | 0 | 0 | 874 | 4 | 8400 | 6 | 1 | 4 | 0 | 2 |
| Toilet | 1159 | 219 | 0 | 17 | 3 | 0 | 0 | 688 | 0 | 1 | 5667 | 0 | 148 | 0 | 22 |
| Wash dishes | 0 | 0 | 5 | 40 | 0 | 0 | 0 | 406 | 0 | 0 | 0 | 0 | 106 | 0 | 0 |
| Watch TV | 0 | 0 | 74 | 68 | 32 | 0 | 4 | 5347 | 51 | 1930 | 70 | 27 | 14,644 | 0 | 8 |
| Water plants | 0 | 0 | 1 | 84 | 0 | 0 | 0 | 428 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Work | 2 | 12 | 0 | 12 | 17 | 2 | 1 | 3206 | 0 | 2544 | 13 | 0 | 35 | 0 | 23,216 |
| Accuracy | 0.98 | 0.99 | 0.96 | 0.97 | 0.98 | 1.00 | 1.00 | 0.79 | 1.00 | 0.97 | 0.98 | 1.00 | 0.93 | 1.00 | 0.96 |

| Overall Metric | Value |
|---|---|
| Overall Accuracy | 0.74 |
| G Mean | 0.88 |
| Precision | 0.89 |
| Recall | 0.80 |
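The overall accuracy of a confusion matrix is the sum of its diagonal (correctly classified events) divided by the sum of all cells. The minimal Python sketch below recomputes it from the counts in the confusion matrix above; the names `CONFUSION` and `overall_accuracy` are illustrative only. The sketch makes no assumption about whether rows or columns hold the ground-truth labels, because the diagonal and the grand total are the same under either orientation.

```python
# Confusion-matrix counts copied from the table above, one list per row,
# in the same activity order as the column headers.
CONFUSION = [
    [1201, 0, 0, 0, 0, 0, 3, 144, 0, 0, 1059, 0, 0, 0, 11],        # Bathe
    [47, 998, 0, 0, 0, 0, 0, 175, 0, 55, 104, 0, 0, 0, 4],          # Bed toilet
    [0, 0, 12, 63, 114, 0, 25, 6358, 0, 0, 0, 0, 76, 0, 9],         # Cook
    [0, 0, 35, 80, 0, 0, 0, 2482, 0, 41, 11, 21, 406, 0, 9],        # Drink
    [0, 3, 5, 43, 379, 0, 0, 2873, 0, 9, 29, 0, 41, 0, 0],          # Eat
    [0, 0, 0, 0, 2, 357, 2, 113, 0, 0, 0, 0, 1, 0, 0],              # Enter home
    [0, 0, 3, 1, 11, 21, 207, 336, 0, 2, 0, 0, 23, 0, 2],           # Leave home
    [172, 287, 1865, 2181, 1574, 93, 486, 88216, 48, 863, 852, 58, 5102, 0, 2686],  # Other
    [0, 0, 0, 0, 0, 0, 0, 243, 0, 58, 1, 0, 6, 0, 2],               # Relax
    [0, 61, 0, 1, 9, 0, 0, 874, 4, 8400, 6, 1, 4, 0, 2],            # Sleep
    [1159, 219, 0, 17, 3, 0, 0, 688, 0, 1, 5667, 0, 148, 0, 22],    # Toilet
    [0, 0, 5, 40, 0, 0, 0, 406, 0, 0, 0, 0, 106, 0, 0],             # Wash dishes
    [0, 0, 74, 68, 32, 0, 4, 5347, 51, 1930, 70, 27, 14644, 0, 8],  # Watch TV
    [0, 0, 1, 84, 0, 0, 0, 428, 0, 0, 0, 0, 0, 0, 0],               # Water plants
    [2, 12, 0, 12, 17, 2, 1, 3206, 0, 2544, 13, 0, 35, 0, 23216],   # Work
]


def overall_accuracy(matrix):
    """Return (correct, total, accuracy) for a square confusion matrix."""
    assert all(len(row) == len(matrix) for row in matrix), "matrix must be square"
    correct = sum(matrix[i][i] for i in range(len(matrix)))  # diagonal cells
    total = sum(sum(row) for row in matrix)                   # every classified event
    return correct, total, correct / total


correct, total, acc = overall_accuracy(CONFUSION)
print(f"{correct} / {total} = {acc:.2f}")
```

Running it prints `143377 / 192470 = 0.74`, which agrees with the Overall Accuracy reported in the summary table.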

| Performance Metric | Bathe | Bed Toilet | Cook | Drink | Eat | Enter Home | Leave Home | Other Activity | Relax | Sleep | Toilet | Wash Dishes | Watch TV | Water Plants | Work |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.93 | 0.89 | 0.99 | 0.89 | 0.98 | 0.94 | 0.96 | 0.71 | 1.00 | 0.89 | 0.75 | 0.99 | 0.87 | 1.00 | 0.78 |
| G Mean | 0.77 | 0.54 | 0.73 | 0.58 | 0.68 | 0.51 | 0.34 | 0.71 | 0.87 | 0.91 | 0.53 | 0.36 | 0.86 | 0.00 | 0.78 |
| Precision (False) | 0.99 | 0.99 | 1.00 | 0.98 | 1.00 | 0.99 | 0.99 | 0.82 | 1.00 | 0.99 | 0.90 | 1.00 | 0.94 | 1.00 | 0.95 |
| Precision (True) | 0.11 | 0.04 | 0.35 | 0.09 | 0.27 | 0.05 | 0.04 | 0.65 | 0.65 | 0.79 | 0.20 | 0.02 | 0.71 | 0.00 | 0.39 |
| Recall (False) | 0.93 | 0.90 | 0.99 | 0.90 | 0.99 | 0.95 | 0.97 | 0.59 | 1.00 | 0.83 | 0.80 | 0.99 | 0.87 | 1.00 | 0.78 |
| Recall (True) | 0.63 | 0.32 | 0.54 | 0.37 | 0.47 | 0.28 | 0.12 | 0.85 | 0.77 | 0.99 | 0.35 | 0.13 | 0.86 | 0.00 | 0.79 |
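In the per-activity table above, the G Mean row agrees, to within 0.01 of rounding, with the geometric mean of Recall (True) and Recall (False). That relationship is inferred from the reported numbers rather than stated in this excerpt. The Python sketch below assumes that definition; the function name `g_mean` and the `ROWS` dictionary are illustrative. Water Plants shows why the metric is useful on imbalanced data: an accuracy of 1.00 coexists with a G Mean of 0.00 because the true class is never recalled.

```python
import math


def g_mean(recall_true: float, recall_false: float) -> float:
    """Geometric mean of the recall on the true class and on the false class.

    Assumption: this is how the table's G Mean row is computed; it matches
    the reported values up to rounding but is not confirmed by the text.
    """
    return math.sqrt(recall_true * recall_false)


# (Recall (True), Recall (False), reported G Mean) for a few table columns
ROWS = {
    "Bathe": (0.63, 0.93, 0.77),
    "Bed Toilet": (0.32, 0.90, 0.54),
    "Sleep": (0.99, 0.83, 0.91),
    "Water Plants": (0.00, 1.00, 0.00),
}

for activity, (r_true, r_false, reported) in ROWS.items():
    computed = g_mean(r_true, r_false)
    print(f"{activity}: computed {computed:.2f}, reported {reported:.2f}")
```

Because the geometric mean collapses to zero whenever either recall is zero, a classifier cannot score well on this metric by always predicting the majority (false) class.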

| Device | Automated Turn Off | Double Tap On | Manual Off | TPR | FNR |
|---|---|---|---|---|---|
| F001 | 12 | 2 | 3 | 0.83 | 0.80 |
| LL001 | 0 | 0 | 0 | 1.00 | 1.00 |
| LL002 | 6 | 0 | 13 | 1.00 | 0.32 |
| LL003 | 0 | 0 | 0 | 1.00 | 1.00 |
| LL004 | 18 | 3 | 5 | 0.83 | 0.78 |
| LL005 | 0 | 0 | 6 | 1.00 | 1.00 |
| LL006 | 28 | 17 | 4 | 0.39 | 0.88 |
| LL007 | 4 | 1 | 0 | 0.75 | 1.00 |
| LL008 | 29 | 10 | 9 | 0.66 | 0.76 |
| LL009 | 9 | 6 | 5 | 0.33 | 0.64 |
| LL011 | 0 | 0 | 1 | 1.00 | 0.00 |
| LL013 | 0 | 0 | 0 | 1.00 | 1.00 |
| LL014 | 41 | 31 | 12 | 0.24 | 0.77 |
| LL015 | 16 | 3 | 1 | 0.81 | 0.94 |
| LL016 | 0 | 0 | 0 | 1.00 | 1.00 |
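The TPR and FNR columns of the device table appear to be derived from the three count columns. A minimal sketch, assuming TPR = (Automated Turn Off - Double Tap On) / Automated Turn Off (a double-tap-on, i.e. the user immediately switching the device back on, is treated as a false positive) and that the FNR column is Automated Turn Off / (Automated Turn Off + Manual Off), with 1.00 reported when a denominator is zero. These formulas are inferred from the table, not stated in this excerpt; they reproduce every row except LL005, whose FNR entry of 1.00 would be 0.00 under this reading. The function names are hypothetical.

```python
def automation_tpr(auto_off: int, double_tap_on: int) -> float:
    """Share of automated turn-offs the user did not immediately reverse.

    Assumption: double-tap-on events count as false positives, and a device
    with no automated turn-offs is reported as 1.0 (as in the table).
    """
    if auto_off == 0:
        return 1.0
    return (auto_off - double_tap_on) / auto_off


def automated_share(auto_off: int, manual_off: int) -> float:
    """Fraction of all turn-offs performed by the automation (1.0 if none).

    Assumption: this is the quantity reported in the table's FNR column.
    """
    total = auto_off + manual_off
    if total == 0:
        return 1.0
    return auto_off / total


# (Automated Turn Off, Double Tap On, Manual Off, reported TPR, reported FNR)
DEVICES = {
    "F001": (12, 2, 3, 0.83, 0.80),
    "LL002": (6, 0, 13, 1.00, 0.32),
    "LL006": (28, 17, 4, 0.39, 0.88),
    "LL014": (41, 31, 12, 0.24, 0.77),
}

for device, (auto_off, tap_on, manual_off, tpr, fnr) in DEVICES.items():
    print(f"{device}: TPR {automation_tpr(auto_off, tap_on):.2f} (reported {tpr:.2f}), "
          f"FNR column {automated_share(auto_off, manual_off):.2f} (reported {fnr:.2f})")
```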

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Thomas, B.L.; Cook, D.J. Activity-Aware Energy-Efficient Automation of Smart Buildings. Energies 2016, 9, 624. https://doi.org/10.3390/en9080624
