A Hierarchical Fully Convolutional Network Integrated with Sparse and Low-Rank Subspace Representations for PolSAR Imagery Classification

Abstract

1. Introduction

1.1. Background

1.2. Problems and Motivation

1.3. Contributions and Structure

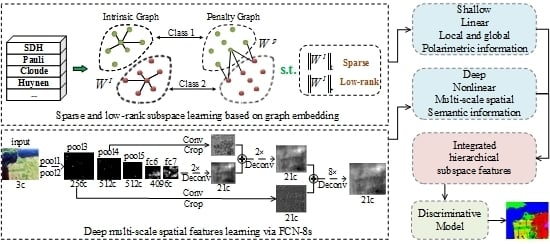

- An effective linear subspace learning method based on sparse and low-rank graph embedding discriminant analysis is introduced for the first time to perform dimensionality reduction (DR) on the stacked multiple polarimetric features. It not only removes redundant features that can degrade classification performance, but also captures the local and global structures of PolSAR data simultaneously.

- An FCN-8s model pre-trained on optical images is transferred for the first time to learn the nonlinear deep multi-scale spatial features of a PolSAR image, where the Pauli-decomposed pseudo-color image serves as the input “RGB” image so that it matches the model parameters. The high-level semantic information learned adaptively by FCN-8s can significantly facilitate classification.

- The shallow sparse and low-rank representations of PolSAR data in a reduced-dimensional subspace are integrated with the deep spatial features by using a weighted strategy, so that their advantages complement each other. In the integrated hierarchical subspace features, multiple types of information, ranging from shallow to deep, linear to nonlinear, local to global, and polarimetric to spatial, are incorporated to boost the discrimination of features for subsequent classification.
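
The Pauli-decomposed pseudo-color input mentioned in the second contribution can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' preprocessing: the function name `pauli_rgb`, the percentile-based display scaling, and the random test data are all hypothetical. Only the channel assignment (red from the HH − VV term, green from the HV term, blue from the HH + VV term of the Pauli basis) is the standard convention.

```python
import numpy as np


def pauli_rgb(s_hh, s_hv, s_vv, clip_percentile=98.0):
    """Build an 8-bit Pauli pseudo-color image from complex scattering channels.

    Red   = |S_HH - S_VV| / sqrt(2)   (double-bounce term)
    Green = sqrt(2) * |S_HV|          (cross-polarized term)
    Blue  = |S_HH + S_VV| / sqrt(2)   (odd-bounce term)
    """
    red = np.abs(s_hh - s_vv) / np.sqrt(2.0)
    green = np.sqrt(2.0) * np.abs(s_hv)
    blue = np.abs(s_hh + s_vv) / np.sqrt(2.0)
    rgb = np.stack([red, green, blue], axis=-1)

    # Assumed display scaling: clip each channel at a high percentile,
    # then map the result to the 0..255 range expected by an RGB network.
    out = np.empty_like(rgb)
    for c in range(3):
        top = np.percentile(rgb[..., c], clip_percentile)
        top = top if top > 0 else 1.0  # avoid division by zero on empty channels
        out[..., c] = np.clip(rgb[..., c] / top, 0.0, 1.0)
    return (out * 255.0).round().astype(np.uint8)


# Tiny synthetic example (random data, not a real PolSAR scene).
rng = np.random.default_rng(0)
shape = (4, 5)
s_hh = rng.normal(size=shape) + 1j * rng.normal(size=shape)
s_hv = rng.normal(size=shape) + 1j * rng.normal(size=shape)
s_vv = rng.normal(size=shape) + 1j * rng.normal(size=shape)
image = pauli_rgb(s_hh, s_hv, s_vv)
print(image.shape, image.dtype)
```

The resulting three-channel array has the same layout as an optical RGB image, which is what allows a network pre-trained on optical images to accept it without changing the first layer.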

2. Preliminaries

2.1. Multidimensional PolSAR Data

2.2. Fully Convolutional Networks

2.3. Subspace Learning Based on Graph Embedding

3. Integrating Hierarchical Subspace Features for PolSAR Image Classification

3.1. Sparse and Low-Rank Subspace Representations of PolSAR Data

3.2. Deep Multi-Scale Spatial Features Learning via FCN-8s

3.3. Integrating FCN with Sparse and Low-Rank Subspace Representations for PolSAR Imagery Classification

4. Experiment

4.1. Experimental Data Sets

- Flevoland data set: The PolSAR data set of Flevoland is a subset of an L-band four-look image acquired by the NASA/Jet Propulsion Laboratory Airborne SAR (AIRSAR) platform in 1989. The data set measures 750 × 1024 pixels with a resolution of 12 m × 6 m and is divided into 11 classes following [12]: rapeseed, grass, forest, peas, lucerne, wheat, beet, bare soil, stem beans, water, and potato. For illustrative purposes, the Pauli-decomposed pseudo-color image and its corresponding ground truth are shown in Figure 9.

- San Francisco data set: The PolSAR data of the San Francisco Bay area, acquired by the NASA/Jet Propulsion Laboratory AIRSAR at L-band, contain five terrain types: water, mountain, and three types of urban areas. The image has a size of 900 × 1024 pixels, and its spatial resolution is about 10 m × 10 m. The Pauli color-coded image and its ground truth are given in Figure 10.

- Flevoland Benchmark data set: The third PolSAR data set used for validation is the benchmark L-band AIRSAR data acquired over Flevoland in 1991. The selected image covers 1020 × 1024 pixels. Figure 11 shows the Pauli-decomposed pseudo-color image and its corresponding ground truth, which comes from [21] and contains 14 classes.

4.2. Experiment Settings and Parameters Analysis

4.2.1. Experiment Settings

- BSLGDA: employ only BSLGDA to learn the low-dimensional sparse and low-rank representations of the high-dimensional polarimetric features. From a DL point of view these features are linear and shallow, and spatial features are not considered.

- FCN: transfer only the pre-trained FCN-8s to extract deep multi-scale spatial structural information from the Pauli-decomposed pseudo-color image, which yields nonlinear and high-level abstract spatial representations.

- PCA + FCN: use traditional principal component analysis (PCA) to perform DR on the high-dimensional polarimetric features, then integrate the PCA features with the FCN features by using a weighted strategy.

- BSLGDA + FCN: integrate the shallow sparse and low-rank subspace representations of the high-dimensional polarimetric features with the deep multi-scale spatial features by using a weighted strategy.

- BLGDA + FCN: use block low-rank graph embedding discriminative analysis (BLGDA) to perform DR. The only difference from BSLGDA + FCN is that sparsity is not considered.

- BSGDA + FCN: use block sparse graph embedding discriminative analysis (BSGDA) to perform DR. The only difference from BSLGDA + FCN is that low-rankness is not considered.
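
To make the "PCA + FCN" baseline above more concrete, the following sketch reduces per-pixel features with PCA and then fuses them with a second feature set. The exact weighted strategy is not spelled out in this section, so the fusion rule used here (L2-normalize each feature set, scale by `w` and `1 - w`, then concatenate) is an assumption for illustration only. The function names, feature dimensions, and random data are likewise hypothetical.

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project (n_pixels, n_features) data onto its top principal components."""
    centered = features - features.mean(axis=0, keepdims=True)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def l2_normalize(features, eps=1e-12):
    """Scale every row to unit Euclidean length."""
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    return features / np.maximum(norms, eps)


def weighted_fusion(shallow, deep, weight):
    """Assumed fusion rule: [w * shallow, (1 - w) * deep] for every pixel."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return np.hstack([weight * l2_normalize(shallow),
                      (1.0 - weight) * l2_normalize(deep)])


# Placeholder data: 500 pixels, 48 stacked polarimetric features,
# and 21 deep features per pixel (both sizes are arbitrary).
rng = np.random.default_rng(0)
polarimetric = rng.normal(size=(500, 48))
fcn_features = rng.normal(size=(500, 21))

reduced = pca_reduce(polarimetric, n_components=30)
fused = weighted_fusion(reduced, fcn_features, weight=0.4)
print(reduced.shape, fused.shape)
```

Under this assumed rule, swapping `pca_reduce` for another DR method changes only the shallow branch, which mirrors how the compared settings differ from each other.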

4.2.2. Parameters Tuning

4.3. Classification Performance

5. Discussion

- The influence of the reduced dimension. Figure 12 shows how the classification accuracy varies with the reduced dimension when the introduced BSLGDA is used with 1% training samples. The overall accuracies for the Flevoland and Flevoland Benchmark data tend to be stable once the reduced dimension is larger than 33, while for the San Francisco data a reduced dimension of 30 is the breaking point.

- The sensitivity to different training rates. Figure 13 illustrates how the number of training samples affects the classification accuracy of the proposed BSLGDA + FCN. For the Flevoland and Flevoland Benchmark data sets, the proposed method still offers a high OA of more than 90% when the training sample ratio is as low as 1%. When the ratio exceeds 5%, the OA is satisfactory and becomes stable. For the San Francisco data, an increase in the training rate leads to a higher accuracy until the rate reaches 3%. It is worth noting that a very high accuracy can be achieved at a small training ratio, which indicates that the proposed method performs well when the available training samples are limited. In other words, the proposed algorithm can tackle the small-sample-size problem effectively.

- The role of features learned via FCN-8s. The experimental results obtained with FCN features alone demonstrate their great contribution to classification, which can be explained by the nonlinear, deep-level, and multi-scale structural properties that FCN learns adaptively from data. Moreover, in the experiments with integrated features, FCN features play a critical role in improving accuracy. However, FCN features are weak at handling boundaries and other details, because the FCN-8s model has relatively large receptive fields that prevent it from capturing finer detail.

- The role of sparse and low-rank representations. The sparse and low-rank constraints in BSLGDA make it possible to learn both local and global features. When combined with FCN features, the sparse and low-rank subspace features can effectively alleviate the boundary and other detail problems occurring in FCN. However, these low-level learned features alone cannot offer a very high accuracy, because BSLGDA is a linear subspace learning method while PolSAR image classification is a nonlinear mapping problem. Therefore, nonlinear subspace learning based on graph embedding (GE) should be studied further to enhance the performance.

- The synergy effect of FCN and BSLGDA. To achieve further improvement, this work resorts to a synergy of a pre-trained FCN-8s model with sparse and low-rank representations. The proposed BSLGDA + FCN exploits the complementary advantages of FCN and BSLGDA to boost the discrimination of the integrated hierarchical subspace features, which contain multiple types of information, including linear and nonlinear, shallow and deep, local and global, and polarimetric and spatial properties. Thus, the synergy of FCN and BSLGDA significantly improves the classification accuracy.

- The main idea of the proposed method is not limited to the integration of FCN and BSLGDA. Its success suggests a general framework for integrating other existing methods that are consistent with our integration theory, as well as for developing new algorithms. However, the proposed method is supervised, and it requires several parameters to be tuned manually. Therefore, one direction of our future research is to embed the sparse and low-rank subspace features into FCN so that all parameters are learned automatically. The current results lay a solid foundation for studying an automatic classification network.
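
The training rates discussed above (for example 1%, 3%, or 5% of the labeled pixels) can be reproduced with a simple per-class random draw. The sketch below is one plausible way to do this and is not taken from the paper: whether the authors sample per class, the label `0` for unlabeled pixels, and the function name `sample_training_pixels` are all assumptions.

```python
import numpy as np


def sample_training_pixels(labels, ratio, rng, ignore_label=0):
    """Select about `ratio` of the labeled pixels of every class for training.

    labels: 2-D integer ground-truth map; `ignore_label` marks unlabeled
    pixels (an assumed convention). Returns a boolean mask that is True at
    the selected training pixels.
    """
    flat = labels.ravel()
    train = np.zeros(flat.shape, dtype=bool)
    for cls in np.unique(flat):
        if cls == ignore_label:
            continue
        idx = np.flatnonzero(flat == cls)
        # Keep at least one sample so that no class is left without training data.
        n_train = max(1, int(round(ratio * idx.size)))
        train[rng.choice(idx, size=n_train, replace=False)] = True
    return train.reshape(labels.shape)


# Synthetic ground truth with three classes plus unlabeled pixels (label 0).
rng = np.random.default_rng(0)
labels = rng.integers(0, 4, size=(100, 100))
mask = sample_training_pixels(labels, ratio=0.01, rng=rng)
print(int(mask.sum()), "training pixels out of", int((labels > 0).sum()))
```

Drawing per class rather than over the whole image keeps rare classes represented at very low ratios, which matters when the small-sample behavior of a classifier is being assessed.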

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Ma, X.; Shen, H.; Yang, J.; Zhang, L.; Li, P. Polarimetric-spatial classification of SAR images based on the fusion of multiple classifiers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 961–971.

2. Lee, J.S.; Grunes, M.R.; Kwok, R. Classification of multi-look polarimetric SAR imagery based on complex Wishart distribution. Int. J. Remote Sens. 1994, 15, 2299–2311.

3. Kong, J.A.; Swartz, A.A.; Yueh, H.A.; Novak, L.M.; Shin, R.T. Identification of Terrain Cover Using the Optimum Polarimetric Classifier. J. Electromagn. Waves Appl. 1988, 2, 171–194.

4. Van Zyl, J.J. Unsupervised classification of scattering behavior using radar polarimetry data. IEEE Trans. Geosci. Remote Sens. 1989, 27, 36–45.

5. Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

6. Lee, J.S.; Grunes, M.R.; Ainsworth, T.L.; Du, L.J.; Schuler, D.L.; Cloude, S.R. Unsupervised classification using polarimetric decomposition and the complex Wishart classifier. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2249–2258.

7. Ferro-Famil, L.; Pottier, E.; Lee, J.S. Unsupervised classification of multifrequency and fully polarimetric SAR images based on the H/A/Alpha-Wishart classifier. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2332–2342.

8. Lee, J.S.; Grunes, M.R.; Pottier, E.; Ferro-Famil, L. Unsupervised terrain classification preserving polarimetric scattering characteristics. IEEE Trans. Geosci. Remote Sens. 2004, 42, 722–731.

9. Dong, Y.; Milne, A.K.; Forster, B.C. Segmentation and classification of vegetated areas using polarimetric SAR image data. IEEE Trans. Geosci. Remote Sens. 2001, 39, 321–329.

10. Wu, Y.; Ji, K.; Yu, W.; Su, Y. Region-based classification of polarimetric SAR images using Wishart MRF. IEEE Geosci. Remote Sens. Lett. 2008, 5, 668–672.

11. He, C.; Liu, X.; Feng, D.; Shi, B.; Luo, B.; Liao, M. Hierarchical Terrain Classification Based on Multilayer Bayesian Network and Conditional Random Field. Remote Sens. 2017, 9, 96.

12. Qin, F.; Guo, J.; Sun, W. Object-oriented ensemble classification for polarimetric SAR Imagery using restricted Boltzmann machines. Remote Sens. Lett. 2017, 8, 204–213.

13. Zhang, L.; Ma, W.; Zhang, D. Stacked sparse autoencoder in PolSAR data classification using local spatial information. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1359–1363.

14. Hou, B.; Kou, H.; Jiao, L. Classification of polarimetric SAR images using multilayer autoencoders and superpixels. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3072–3081.

15. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A review. arXiv, 2017; arXiv:1710.03959.

16. Xie, H.; Wang, S.; Liu, K.; Lin, S.; Hou, B. Multilayer feature learning for polarimetric synthetic radar data classification. In Proceedings of the 2014 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Bryan, TX, USA, 13–18 July 2014; pp. 2818–2821.

17. Geng, J.; Fan, J.; Wang, H.; Ma, X.; Li, B.; Chen, F. High-resolution SAR image classification via deep convolutional autoencoders. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2351–2355.

18. Geng, J.; Wang, H.; Fan, J.; Ma, X. Deep Supervised and Contractive Neural Network for SAR Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2442–2459.

19. Lv, Q.; Dou, Y.; Niu, X.; Xu, J.; Xu, J.; Xia, F. Urban land use and land cover classification using remotely sensed SAR data through deep belief networks. J. Sens. 2015, 2015, 10.

20. Zhou, Y.; Wang, H.; Xu, F.; Jin, Y.Q. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1935–1939.

21. Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

22. Wang, Y.; Wang, C.; Zhang, H. Integrating H-A-α with fully convolutional networks for fully PolSAR classification. In Proceedings of the International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 19–21 May 2017; pp. 1–4.

23. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

24. Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

25. Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 210–227.

26. Knee, P.; Thiagarajan, J.J.; Ramamurthy, K.N.; Spanias, A. SAR target classification using sparse representations and spatial pyramids. In Proceedings of the 2011 IEEE Radar Conference (RADAR), Kansas City, MO, USA, 23–27 May 2011; pp. 294–298.

27. Zhang, L.; Sun, L.; Zou, B.; Moon, W.M. Fully polarimetric SAR image classification via sparse representation and polarimetric features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3923–3932.

28. Fang, L.; Wei, X.; Yao, W.; Xu, Y.; Stilla, U. Discriminative Features Based on Two Layers Sparse Learning for Glacier Area Classification Using SAR Intensity Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3200–3212.

29. Liu, G.; Lin, Z.; Yu, Y. Robust subspace segmentation by low-rank representation. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 663–670.

30. Ren, B.; Hou, B.; Zhao, J.; Jiao, L. Unsupervised Classification of Polarimetirc SAR Image Via Improved Manifold Regularized Low-Rank Representation With Multiple Features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 580–595.

31. Li, W.; Liu, J.; Du, Q. Sparse and low-rank graph for discriminant analysis of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4094–4105.

32. Ma, X.; Hao, S.; Cheng, Y. Terrain classification of aerial image based on low-rank recovery and sparse representation. In Proceedings of the 2017 20th International Conference on Information Fusion (Fusion), Xi’an, China, 10–13 July 2017; pp. 1–6.

33. Fu, G.; Liu, C.; Zhou, R.; Sun, T.; Zhang, Q. Classification for High Resolution Remote Sensing Imagery Using a Fully Convolutional Network. Remote Sens. 2017, 9, 498.

34. Jiao, L.; Liang, M.; Chen, H.; Yang, S.; Liu, H.; Cao, X. Deep fully convolutional network-based spatial distribution prediction for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5585–5599.

35. Lee, J.; Grunes, M.R.; De Grandi, G. Polarimetric SAR speckle filtering and its implication for classification. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2363–2373.

36. Cloude, S. Group theory and polarisation algebra. Optik 1986, 75, 26–36.

37. Huynen, J.R. Phenomenological theory of radar targets. Electromagn. Scatt. 1970, 653–712.

38. Krogager, E. New decomposition of the radar target scattering matrix. Electron. Lett. 1990, 26, 1525–1527.

39. Freeman, A.; Durden, S.L. A three-component scattering model for polarimetric SAR data. IEEE Trans. Geosci. Remote Sens. 1998, 36, 963–973.

40. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

41. Yan, S.; Xu, D.; Zhang, B.; Zhang, H.J.; Yang, Q.; Lin, S. Graph embedding and extensions: A general framework for dimensionality reduction. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 40–51.

42. Lin, Z.; Liu, R.; Su, Z. Linearized alternating direction method with adaptive penalty for low-rank representation. In Proceedings of the Advances in Neural Information Processing Systems, Granada, Spain, 12–17 December 2011; pp. 612–620.

43. Cai, J.F.; Candès, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

44. Zhuang, L.; Gao, H.; Lin, Z.; Ma, Y.; Zhang, X.; Yu, N. Non-negative low rank and sparse graph for semi-supervised learning. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 2328–2335.

45. Maaten, L.V.D.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

46. Kim, S.J.; Koh, K.; Lustig, M.; Boyd, S.; Gorinevsky, D. An Interior-Point Method for Large-Scale ℓ1-Regularized Least Squares. IEEE J. Sel. Top. Signal Process. 2007, 1, 606–617.

| Class | BSLGDA | FCN | PCA + FCN | BLGDA + FCN | BSGDA + FCN | BSLGDA + FCN |
|---|---|---|---|---|---|---|
| 1 | 70.86 | 89.67 | 87.17 | 96.02 | 95.89 | 95.74 |
| 2 | 74.20 | 94.68 | 90.48 | 97.20 | 97.36 | 96.71 |
| 3 | 81.03 | 97.40 | 95.65 | 98.93 | 98.60 | 98.50 |
| 4 | 59.41 | 91.87 | 86.12 | 95.58 | 94.52 | 95.09 |
| 5 | 64.94 | 92.98 | 85.96 | 97.24 | 97.60 | 96.93 |
| 6 | 84.51 | 95.03 | 93.74 | 97.36 | 97.24 | 97.75 |
| 7 | 53.93 | 77.57 | 79.51 | 89.96 | 89.92 | 88.82 |
| 8 | 55.09 | 95.99 | 92.32 | 97.82 | 98.33 | 98.03 |
| 9 | 71.70 | 71.13 | 72.17 | 89.51 | 91.60 | 88.89 |
| 10 | 96.68 | 99.95 | 99.82 | 99.89 | 99.98 | 99.92 |
| 11 | 81.47 | 90.81 | 93.50 | 94.75 | 96.42 | 95.54 |
| OA | 73.77 | 90.99 | 81.97 | 95.78 | 96.12 | 95.67 |
| kappa | 0.7037 | 0.8982 | 0.7966 | 0.9524 | 0.9562 | 0.9512 |

| Class | BSLGDA | FCN | PCA + FCN | BLGDA + FCN | BSGDA + FCN | BSLGDA + FCN |
|---|---|---|---|---|---|---|
| 1 | 72.73 | 97.31 | 93.66 | 98.03 | 97.79 | 97.86 |
| 2 | 87.86 | 95.41 | 89.96 | 96.35 | 96.45 | 96.37 |
| 3 | 96.42 | 99.44 | 98.45 | 99.70 | 99.74 | 99.69 |
| 4 | 77.25 | 97.40 | 95.68 | 98.15 | 98.40 | 98.15 |
| 5 | 64.42 | 97.79 | 66.82 | 97.75 | 97.33 | 97.72 |
| OA | 86.51 | 97.82 | 94.70 | 98.38 | 98.04 | 98.34 |
| kappa | 0.8108 | 0.9693 | 0.9252 | 0.9772 | 0.9725 | 0.9767 |

| Class | BSLGDA | FCN | PCA + FCN | BLGDA + FCN | BSGDA + FCN | BSLGDA + FCN |
|---|---|---|---|---|---|---|
| 1 | 90.14 | 99.53 | 93.42 | 99.68 | 99.80 | 99.53 |
| 2 | 95.26 | 99.85 | 99.76 | 100 | 100 | 99.95 |
| 3 | 98.64 | 100 | 99.92 | 100 | 100 | 100 |
| 4 | 96.39 | 98.31 | 93.51 | 99.46 | 99.74 | 99.59 |
| 5 | 95.57 | 99.90 | 99.72 | 99.95 | 99.97 | 99.81 |
| 6 | 44.56 | 99.80 | 93.57 | 98.52 | 99.82 | 99.60 |
| 7 | 96.46 | 99.78 | 99.63 | 99.98 | 99.88 | 99.80 |
| 8 | 51.12 | 90.54 | 40.59 | 95.70 | 99.51 | 96.68 |
| 9 | 89.96 | 99.27 | 60.96 | 99.41 | 99.22 | 99.46 |
| 10 | 90.86 | 99.27 | 73.96 | 99.59 | 99.84 | 99.35 |
| 11 | 99.46 | 100 | 96.70 | 99.98 | 100 | 100 |
| 12 | 95.81 | 99.52 | 99.49 | 99.66 | 99.71 | 99.83 |
| 13 | 75.37 | 98.65 | 95.46 | 98.77 | 99.57 | 99.35 |
| 14 | 76.42 | 100 | 99.61 | 100 | 99.93 | 100 |
| OA | 92.80 | 97.45 | 96.49 | 99.71 | 99.79 | 99.72 |
| kappa | 0.9153 | 0.9653 | 0.9587 | 0.9966 | 0.9975 | 0.9967 |
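
The OA and kappa rows in the accuracy tables above are standard summary statistics of a confusion matrix. The sketch below shows how they are commonly computed, assuming that kappa denotes Cohen's kappa and that each per-class value is the fraction of correctly labeled pixels of that true class. Both assumptions, the function names, and the toy labels are illustrative and are not taken from the paper.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Count pixels by (true class, predicted class)."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def overall_accuracy(cm):
    """Fraction of all pixels that lie on the diagonal."""
    return np.trace(cm) / cm.sum()


def per_class_accuracy(cm):
    """Fraction of each true class that is labeled correctly."""
    return np.diag(cm) / cm.sum(axis=1)


def cohen_kappa(cm):
    """Agreement corrected for the agreement expected by chance."""
    total = cm.sum()
    p_observed = np.trace(cm) / total
    p_expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / total ** 2
    return (p_observed - p_expected) / (1.0 - p_expected)


# Toy example with three classes and six pixels.
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 0, 1, 2, 2, 2])
cm = confusion_matrix(y_true, y_pred, n_classes=3)

print(overall_accuracy(cm))    # 5 of 6 pixels are correct
print(per_class_accuracy(cm))  # class 1 is correct for 1 of 2 pixels
print(cohen_kappa(cm))         # (5/6 - 1/3) / (1 - 1/3) = 0.75
```

Because kappa subtracts the chance agreement, it is lower than OA whenever the class distribution lets a classifier score well by favoring large classes, which is why the two are usually reported together.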

| Dataset | Flevoland | San Francisco | Flevoland Benchmark |
|---|---|---|---|
| Pixels | 750 × 1024 | 900 × 1024 | 1020 × 1024 |
| Time | 60.44 | 70.14 | 79.98 |

| Dataset | BSLGDA | FCN | PCA + FCN | BLGDA + FCN | BSGDA + FCN | BSLGDA + FCN |
|---|---|---|---|---|---|---|
| Flevoland | 183.96 | 14.37 | 15.78 | 196.04 | 3060.93 | 169.71 |
| San Francisco | 1390.89 | 13.99 | 16.23 | 1270.10 | 4980.51 | 1849.22 |
| Flevoland Benchmark | 27.69 | 11.83 | 12.39 | 28.60 | 506.56 | 29.22 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Y.; He, C.; Liu, X.; Liao, M. A Hierarchical Fully Convolutional Network Integrated with Sparse and Low-Rank Subspace Representations for PolSAR Imagery Classification. Remote Sens. 2018, 10, 342. https://doi.org/10.3390/rs10020342
