Improving Spatial-Temporal Data Fusion by Choosing Optimal Input Image Pairs

Abstract

1. Introduction

2. Study Area and Data

2.1. Study Area

2.2. Satellite Data and Preprocessing

2.2.1. MODIS Data Products

2.2.2. Landsat Data

2.2.3. NASS-CDL Data

2.3. Simulated Coarse Resolution Data from Landsat Images

3. Methods

3.1. STARFM Data Fusion Algorithm

3.2. Pair Selection Strategies

3.3. Quality Assessment

3.4. Data Sources and Data Fusion Assessment

4. Results and Analysis

4.1. Consistency between MODIS and Landsat

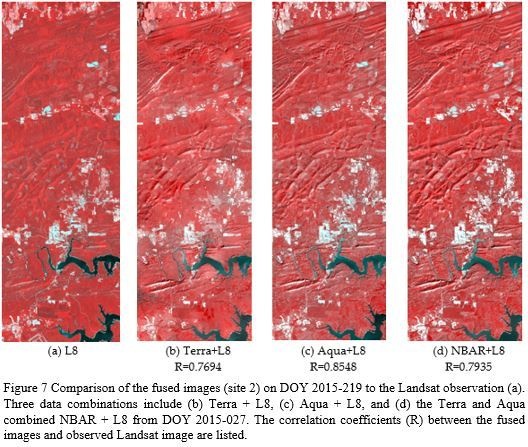

4.2. Effect of Data Sources

4.3. Effect of Pair Date

4.4. Effect of Land Cover Type

4.5. Pair Selection Strategies

5. Discussion

5.1. Selection of Data Sources

5.2. The Impact of Temporal and Spatial Variations on Prediction Accuracy

5.3. Pair Selection Strategies

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

| MODIS Product | Spatial Resolution (m) | Temporal Resolution | Description |
|---|---|---|---|
| MOD09GQ | 250 | Daily | Directional reflectance product acquired by Terra (red and NIR bands only) |
| MOD09GA | 500 | Daily | Directional reflectance product acquired by Terra (all land bands) |
| MYD09GQ | 250 | Daily | Directional reflectance product acquired by Aqua (red and NIR bands only) |
| MYD09GA | 500 | Daily | Directional reflectance product acquired by Aqua (all land bands) |
| MCD43A1 | 500 | 16-day | Terra and Aqua combined BRDF/Albedo Model Parameters product |
| MCD43A4 | 500 | Daily | Terra and Aqua combined NBAR product |
| MCD43A2 | 500 | Daily | Quality information product for the corresponding MCD43A4 product |
| MCD12Q1 | 500 | Annual | Land cover |

| Product Name | Data Source | Spatial Resolution | Coverage | Solar Zenith Angle |
|---|---|---|---|---|
| Terra NBAR | In-house BRDF correction using MCD43A1, MOD09GQ, MOD09GA, MCD12Q1 | 250 m for red and NIR bands | Clear pixels from Terra overpass time | At Terra overpass time |
| Aqua NBAR | In-house BRDF correction using MCD43A1, MYD09GQ, MYD09GA, MCD12Q1 | 250 m for red and NIR bands | Clear pixels from Aqua overpass time | At Aqua overpass time |
| Terra/Aqua combined NBAR | Standard Collection 6 MODIS NBAR products (MCD43A2 and MCD43A1) | 500 m for all land bands | Clear pixels from 16-day period (at least two clear observations during the period) | Mean solar zenith angle during 16-day period |
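
The table states that the Terra and Aqua NBAR layers were produced by an in-house BRDF correction of daily MOD09/MYD09 reflectance using MCD43A1 parameters, but the correction itself is not spelled out here. The following is a minimal sketch, assuming the commonly used c-factor approach with the RossThick and LiSparse-Reciprocal kernels on which the MCD43 products are built. The observed reflectance is scaled by the ratio of the modeled nadir reflectance to the modeled reflectance at the observed geometry. The nadir geometry keeps the observed solar zenith angle, matching the "at overpass time" column. All function names are hypothetical. The BRDF parameters (`f_iso`, `f_vol`, `f_geo`) are assumed to be already scaled to reflectance units, and a relative azimuth of 0 is taken as the backscatter (hotspot) direction. This is an illustration, not the authors' code.

```python
import math


def ross_thick(sza: float, vza: float, raa: float) -> float:
    """RossThick volumetric kernel. Angles in radians.

    raa is the relative azimuth; raa = 0 is treated as the backscatter
    (hotspot) direction, the convention of the phase-angle formula below.
    """
    cos_xi = (math.cos(sza) * math.cos(vza)
              + math.sin(sza) * math.sin(vza) * math.cos(raa))
    cos_xi = max(-1.0, min(1.0, cos_xi))  # guard against rounding error
    xi = math.acos(cos_xi)  # phase angle between sun and view directions
    return (((math.pi / 2 - xi) * cos_xi + math.sin(xi))
            / (math.cos(sza) + math.cos(vza)) - math.pi / 4)


def li_sparse_r(sza: float, vza: float, raa: float,
                h_b: float = 2.0, b_r: float = 1.0) -> float:
    """LiSparse-Reciprocal geometric kernel with the MODIS crown shape
    (h/b = 2, b/r = 1). Angles in radians."""
    # Equivalent zenith angles for non-spherical crowns (identity when b/r = 1).
    sza_p = math.atan(b_r * math.tan(sza))
    vza_p = math.atan(b_r * math.tan(vza))
    tan_s, tan_v = math.tan(sza_p), math.tan(vza_p)
    sec_s, sec_v = 1.0 / math.cos(sza_p), 1.0 / math.cos(vza_p)
    cos_xi = (math.cos(sza_p) * math.cos(vza_p)
              + math.sin(sza_p) * math.sin(vza_p) * math.cos(raa))
    # D**2 term of the kernel; clamp tiny negative values from rounding.
    d2 = max(0.0, tan_s ** 2 + tan_v ** 2 - 2.0 * tan_s * tan_v * math.cos(raa))
    cos_t = (h_b * math.sqrt(d2 + (tan_s * tan_v * math.sin(raa)) ** 2)
             / (sec_s + sec_v))
    cos_t = max(-1.0, min(1.0, cos_t))
    t = math.acos(cos_t)
    # Overlap between the illuminated and viewed crown shadows.
    overlap = (t - math.sin(t) * cos_t) * (sec_s + sec_v) / math.pi
    return overlap - sec_s - sec_v + 0.5 * (1.0 + cos_xi) * sec_s * sec_v


def brdf(f_iso, f_vol, f_geo, sza, vza, raa):
    """Kernel-driven reflectance: f_iso + f_vol * K_vol + f_geo * K_geo."""
    return (f_iso + f_vol * ross_thick(sza, vza, raa)
            + f_geo * li_sparse_r(sza, vza, raa))


def to_nbar(reflectance, f_iso, f_vol, f_geo, sza_deg, vza_deg, raa_deg):
    """c-factor adjustment of one observed reflectance to nadir view,
    keeping the observed solar zenith angle. Angles in degrees."""
    sza, vza, raa = (math.radians(a) for a in (sza_deg, vza_deg, raa_deg))
    modeled_obs = brdf(f_iso, f_vol, f_geo, sza, vza, raa)
    if modeled_obs <= 0.0:
        raise ValueError("BRDF model gives non-positive reflectance")
    modeled_nadir = brdf(f_iso, f_vol, f_geo, sza, 0.0, 0.0)
    return reflectance * modeled_nadir / modeled_obs


# Hypothetical red-band example: observed 0.08 at 35 deg SZA, 20 deg VZA, 60 deg RAA.
red_nbar = to_nbar(0.08, 0.07, 0.02, 0.01, 35.0, 20.0, 60.0)
```

Two properties make a quick sanity check. An observation that is already at nadir is returned unchanged. A purely isotropic model (`f_vol = f_geo = 0`) also leaves the reflectance unchanged. How the 500 m MCD43A1 parameters are matched to 250 m MOD09GQ pixels is not shown in this sketch.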

| Study Area | Path/Row | Terra + L8 | Terra + L7 | Aqua + L8 | Aqua + L7 |
|---|---|---|---|---|---|
| Site 1 | 028031 | 57.77 | 7.25 | 27.62 | 44.64 |
| Site 1 | 029031 | 60.05 | 4.06 | 38.30 | 40.11 |
| Site 2 | 025036 | 63.66 | 0.66 | 12.39 | 59.15 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xie, D.; Gao, F.; Sun, L.; Anderson, M. Improving Spatial-Temporal Data Fusion by Choosing Optimal Input Image Pairs. Remote Sens. 2018, 10, 1142. https://doi.org/10.3390/rs10071142