Generating High Spatio-Temporal Resolution Fractional Vegetation Cover by Fusing GF-1 WFV and MODIS Data

Abstract

1. Introduction

2. Materials and Methods

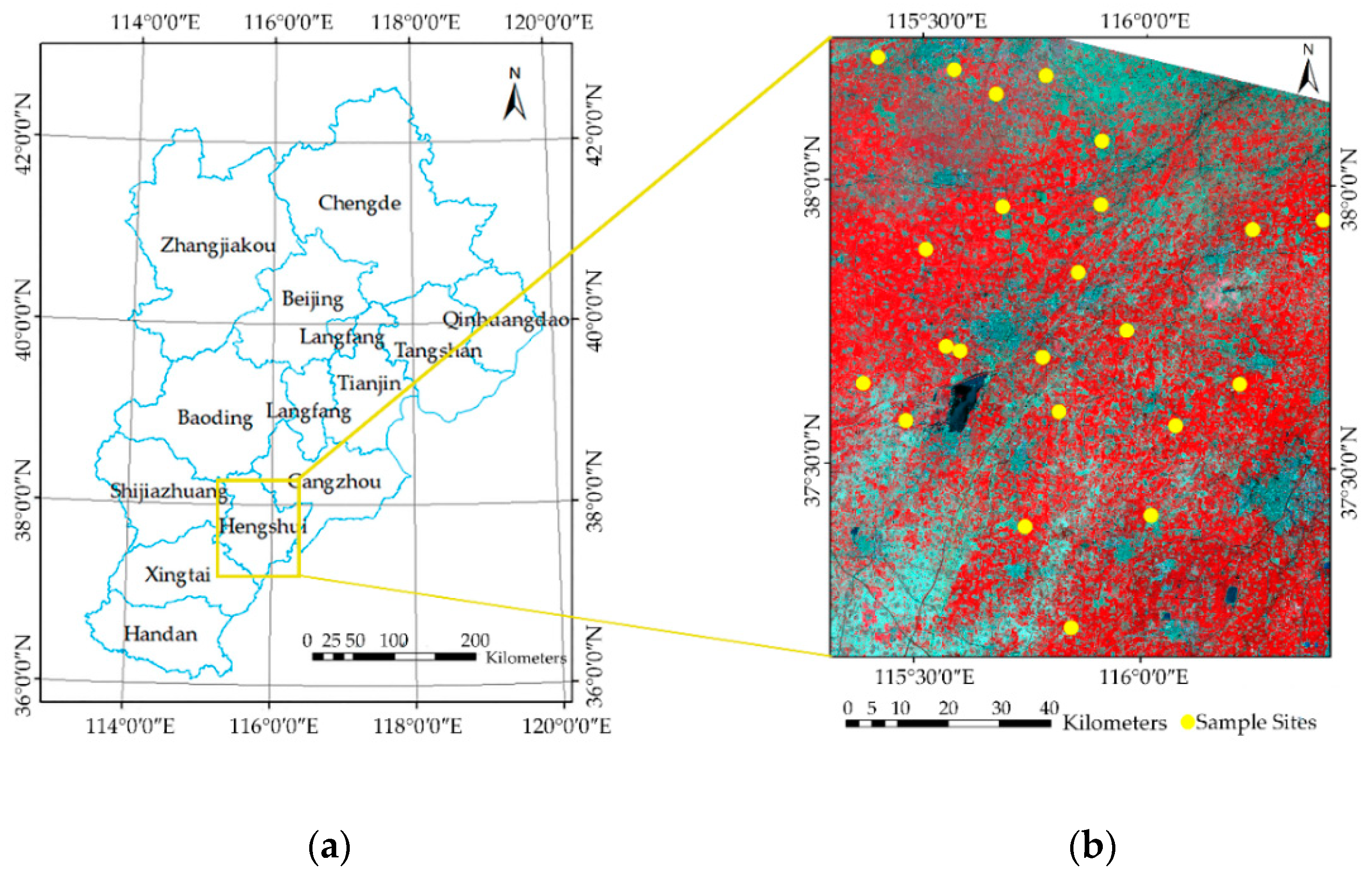

2.1. Study Area and Field Survey Data

2.2. Remote Sensing Data and Pre-Processing

2.2.1. GF-1 WFV Data

2.2.2. MOD09A1 Reflectance and GLASS FVC Data

2.3. Overall Workflow

2.4. FVC Estimation Model for GF-1 WFV Data

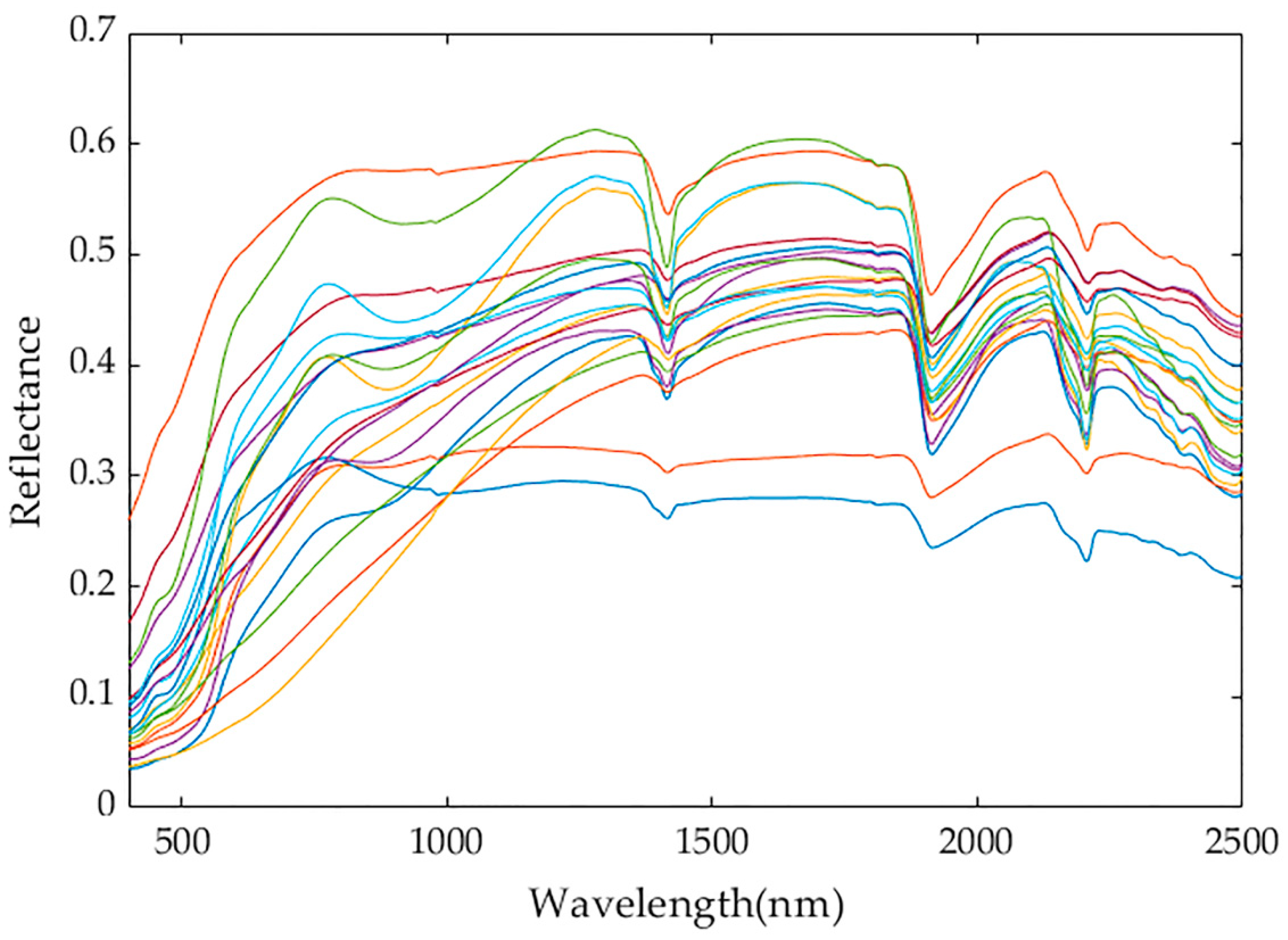

2.4.1. Generating Simulated Datasets Based on the PROSAIL Model

2.4.2. Random Forest Regression

2.5. Spatio-Temporal Fusion Method for GF-1 WFV and MODIS Data

2.6. Validation Method

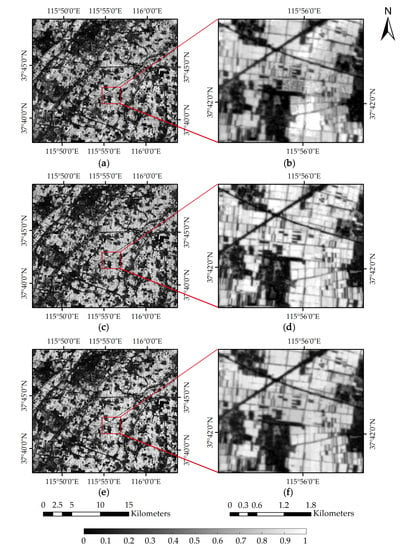

3. Results

3.1. Assessment of the GF-1 WFV FVC Estimation Model

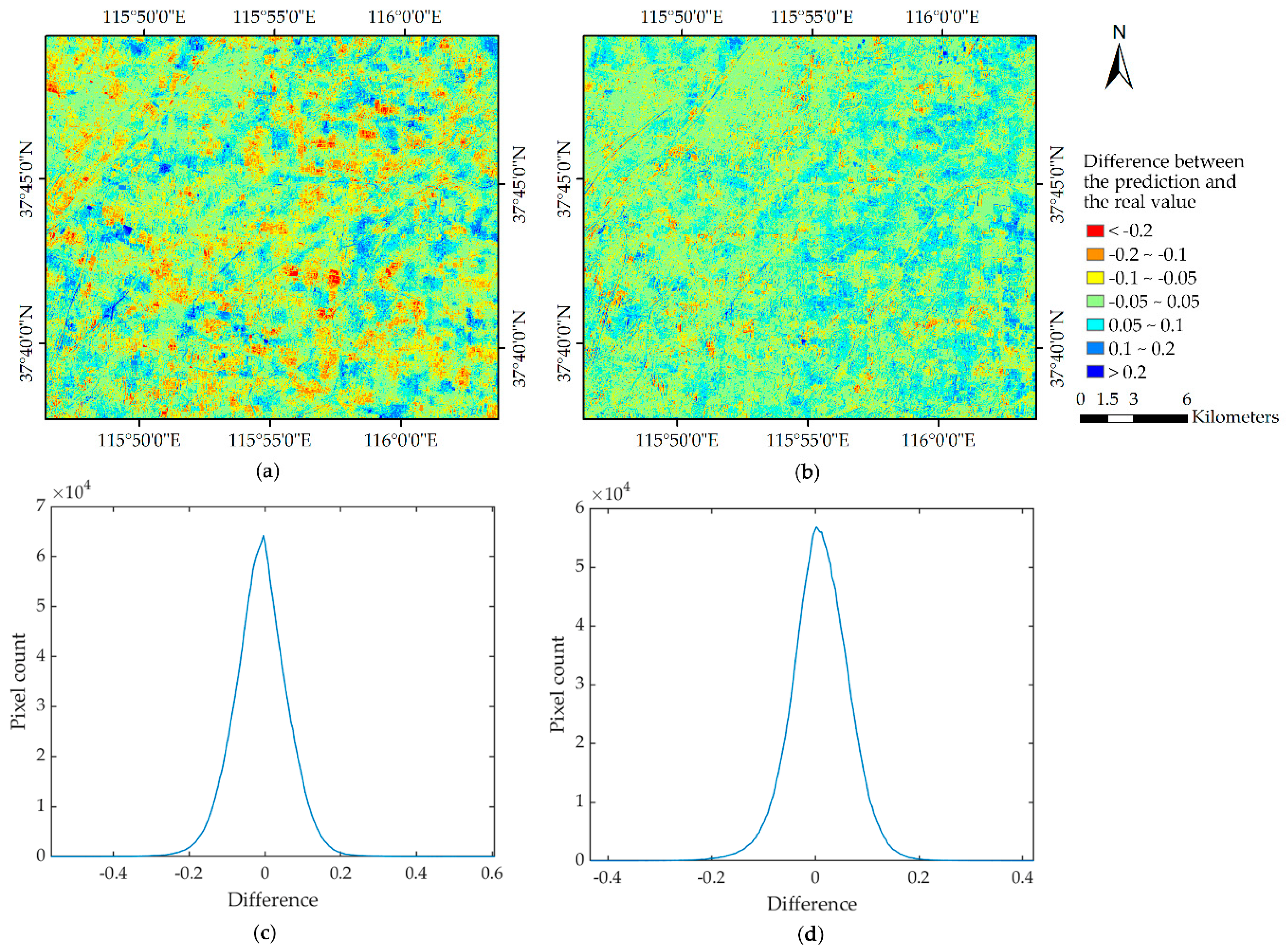

3.2. Assessment and Comparison of Strategies FC and CF

3.2.1. Assessment Based on FVC Estimated from the Actual GF-1 WFV Data

3.2.2. Assessment Based on Field Survey FVC

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALA | Average Leaf Angle inclination |
| BRDF | Bidirectional Reflectance Distribution Function |
| Cab | Leaf chlorophyll a+b content |
| Cant | Anthocyanin content |
| Car | Carotenoid content |
| Cbrown | Brown pigment content |
| Cm | Dry matter content |
| Cw | Moisture content |
| CCD | Charge-Coupled Device |
| CF | Fusion strategy “FVC_then_Fusion”: directly fusing the FVC products |
| CRESDA | China Centre for Resources Satellite Data and Application |
| CYCLOPES | Carbon Cycle and Change in Land Observational Products from an Ensemble of Satellites |
| DOY | Day of Year |
| ESTARFM | Enhanced Spatial and Temporal Adaptive Reflectance Fusion Model |
| EVI | Enhanced Vegetation Index |
| FC | Fusion strategy “Fusion_then_FVC”: fusing the reflectance data first and then estimating FVC from the fused reflectance |
| FVC | Fractional Vegetation Cover |
| GF-1 | GaoFen-1 satellite |
| GLASS | Global LAnd Surface Satellite |
| GTPs | Ground Truth Points |
| HJ-1 | HuanJing-1 |
| HSI | Hue Saturation Intensity |
| LAI | Leaf Area Index |
| MERIS | Medium Resolution Imaging Spectrometer |
| MODIS | Moderate-resolution Imaging Spectroradiometer |
| MRT | MODIS Reprojection Tool |
| NDVI | Normalized Difference Vegetation Index |
| NNs | Neural Networks |
| PMS | Panchromatic/Multi-spectral cameras |
| POLDER | POLarization and Directionality of the Earth’s Reflectances |
| RAZ | Relative AZimuth angle |
| RFR | Random Forest Regression |
| RMSE | Root Mean Square Error |
| RPC | Rational Polynomial Coefficient |
| SAM | Spectral Angle Mapper |
| SHAR-LABFVC | Shadow-resistant LABFVC |
| STARFM | Spatial and Temporal Adaptive Reflectance Fusion Model |
| SVR | Support Vector Regression |
| SZA | Solar Zenith Angle |
| UTM | Universal Transverse Mercator |
| VIIRS | Visible Infrared Imaging Radiometer Suite |
| VZA | Viewing Zenith Angle |
| WFV | Wide Field View cameras |
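The abbreviations FC and CF name two orderings of the same two steps. The minimal Python sketch below illustrates why the order can matter, under clearly stated assumptions: the fusion step is only the temporal-difference term that STARFM-type methods build on, not ESTARFM itself; `toy_fvc` is a hypothetical NDVI-based dimidiate pixel model standing in for the random forest estimator, with assumed soil and vegetation NDVI endpoints; and the reflectance values are invented. For simplicity the coarse FVC in CF is computed with the same toy estimator rather than taken from the GLASS product.

```python
def temporal_difference_fusion(fine_t1, coarse_t1, coarse_t2):
    """Simplified fusion: predict fine_t2 = fine_t1 + (coarse_t2 - coarse_t1).

    Only the temporal-change term that STARFM/ESTARFM build on; the real
    methods also weight spectrally similar neighbouring pixels (and ESTARFM
    uses a conversion coefficient), which this sketch omits.
    """
    return fine_t1 + (coarse_t2 - coarse_t1)


def ndvi(red, nir):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def toy_fvc(red, nir, ndvi_soil=0.05, ndvi_veg=0.90):
    """Hypothetical stand-in for the RFR estimator: dimidiate pixel model.

    ndvi_soil and ndvi_veg are assumed bare-soil and full-cover NDVI values.
    The result is clipped to the physical FVC range [0, 1].
    """
    f = (ndvi(red, nir) - ndvi_soil) / (ndvi_veg - ndvi_soil)
    return min(max(f, 0.0), 1.0)


def strategy_fc(fine_t1, coarse_t1, coarse_t2):
    """FC ("Fusion_then_FVC"): fuse each band, then estimate FVC."""
    red = temporal_difference_fusion(fine_t1[0], coarse_t1[0], coarse_t2[0])
    nir = temporal_difference_fusion(fine_t1[1], coarse_t1[1], coarse_t2[1])
    return toy_fvc(red, nir)


def strategy_cf(fine_t1, coarse_t1, coarse_t2):
    """CF ("FVC_then_Fusion"): estimate FVC for each input, then fuse FVC."""
    fused = temporal_difference_fusion(
        toy_fvc(*fine_t1), toy_fvc(*coarse_t1), toy_fvc(*coarse_t2))
    return min(max(fused, 0.0), 1.0)


# Invented (red, NIR) reflectances for one pixel: fine at t1, coarse at t1 and t2.
fine_t1, coarse_t1, coarse_t2 = (0.08, 0.30), (0.07, 0.28), (0.05, 0.40)
print("FC:", round(strategy_fc(fine_t1, coarse_t1, coarse_t2), 4))
print("CF:", round(strategy_cf(fine_t1, coarse_t1, coarse_t2), 4))
```

Because NDVI, and hence the toy FVC, is a nonlinear function of reflectance, the two orderings give slightly different values even for this single pixel; which ordering is more accurate in practice is the empirical question that Section 3.2 examines.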


| Crop Type | Start and End Dates of Field Observation |
|---|---|
| Winter Wheat | 29 March 2017 to 1 April 2017 |
| Winter Wheat | 4 May 2017 to 6 May 2017 |
| Maize | 5 July 2017 to 8 July 2017 |
| Maize | 29 July 2017 to 31 July 2017 |

| Acquisition Date | Sensor Type | Tile Number |
|---|---|---|
| 8 March 2017 | WFV4 | 3411993 |
| 12 March 2017 | WFV4 | 3420332 |
| 1 April 2017 | WFV3 | 3502340, 3502339 |
| 18 April 2017 | WFV3 | 3566875 |
| 26 April 2017 | WFV4 | 3595448 |
| 12 May 2017 | WFV3 | 3650209 |
| 26 June 2017 | WFV1 | 3810592 |
| 8 July 2017 | WFV2 | 3856572 |
| 10 August 2017 | WFV3 | 3977545 |

| Tile | Day of Year (DOY) |
|---|---|
| H27V05 | DOY65, DOY89, DOY105, DOY113, DOY121, DOY129, DOY177, DOY185, DOY209, DOY217 |
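The GF-1 WFV and MOD09A1 tables pair each fine-resolution acquisition with an 8-day MODIS composite identified by its starting DOY. Assuming the standard MODIS 8-day grid (composite periods starting on DOY 1, 9, 17, ... of each year), that pairing can be checked with a short standard-library Python sketch; the helper names are hypothetical.

```python
from datetime import date


def day_of_year(d: date) -> int:
    """1-based day of year (DOY) of a calendar date."""
    return d.timetuple().tm_yday


def mod09a1_composite_start(doy: int) -> int:
    """Start DOY of the 8-day composite that contains `doy`.

    Assumes composites begin on DOY 1, 9, 17, ... (8-day steps from 1 January).
    """
    return ((doy - 1) // 8) * 8 + 1


# GF-1 WFV acquisition dates listed in the table above (all in 2017).
gf1_dates = [date(2017, 3, 8), date(2017, 3, 12), date(2017, 4, 1),
             date(2017, 4, 18), date(2017, 4, 26), date(2017, 5, 12),
             date(2017, 6, 26), date(2017, 7, 8), date(2017, 8, 10)]

for d in gf1_dates:
    doy = day_of_year(d)
    print(f"{d:%d %B %Y}: DOY{doy}, MOD09A1 composite DOY{mod09a1_composite_start(doy)}")
```

For example, 8 March 2017 is DOY67 and falls in the composite that starts on DOY65, which is the MODIS date paired with it in the fusion experiments.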

| Model | Parameter | Unit | Range | Distribution | Mean | Std. |
|---|---|---|---|---|---|---|
| PROSPECT | Cab | μg/cm² | 20~90 | Gauss | 45 | 30 |
| | Cm | g/cm² | 0.003~0.011 | Gauss | 0.005 | 0.005 |
| | | μg/cm² | 4.4 | - | - | - |
| | Cw | cm | 0.005~0.015 | Uniform | - | - |
| | Cbrown | - | 0~2 | Gauss | 0 | 3 |
| | | μg/cm² | 0 | - | - | - |
| | N | - | 1.2~2.2 | Gauss | 1.5 | 0.3 |
| SAIL | LAI | - | 0~7 | Gauss | 2 | 3 |
| | ALA | ° | 30~80 | Gauss | 60 | 30 |
| | SZA | ° | 35 | - | - | - |
| | VZA | ° | 0 | - | - | - |
| | RAZ | ° | 0 | - | - | - |
| | Hot | - | 0.1~0.5 | Gauss | 0.2 | 0.5 |
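The parameter table gives, for each free PROSAIL input, a range and a sampling distribution. One way to draw such inputs, sketched here in standard-library Python as an assumption rather than the authors' code, is rejection sampling from a Gaussian truncated to the listed range. The PROSPECT symbols follow common PROSAIL usage, and the two fixed μg/cm² inputs whose symbols are not recoverable from the table are omitted. Each sample's FVC is computed with the gap-fraction relation FVC = 1 - exp(-G·Ω·LAI/cos θ), assuming a spherical leaf angle distribution (G = 0.5, which ignores the sampled ALA), no clumping (Ω = 1) and θ equal to the VZA. Running PROSAIL itself to obtain the matching GF-1 WFV band reflectances, which would form the random forest training inputs, is outside this sketch.

```python
import math
import random


def truncated_gauss(rng, mean, std, low, high):
    """Draw from N(mean, std) restricted to [low, high] by rejection sampling."""
    while True:
        x = rng.gauss(mean, std)
        if low <= x <= high:
            return x


# (low, high, mean, std) of the Gaussian-distributed inputs in the table.
# Symbol names for the PROSPECT rows are assumed (common PROSAIL usage).
GAUSS_PARAMS = {
    "Cab": (20.0, 90.0, 45.0, 30.0),     # ug/cm2
    "Cm": (0.003, 0.011, 0.005, 0.005),  # g/cm2
    "Cbrown": (0.0, 2.0, 0.0, 3.0),      # unitless
    "N": (1.2, 2.2, 1.5, 0.3),           # leaf structure parameter
    "LAI": (0.0, 7.0, 2.0, 3.0),
    "ALA": (30.0, 80.0, 60.0, 30.0),     # degrees
    "hot": (0.1, 0.5, 0.2, 0.5),         # hot spot parameter
}
FIXED_PARAMS = {"SZA": 35.0, "VZA": 0.0, "RAZ": 0.0}  # degrees


def fvc_from_lai(lai, vza_deg, g=0.5, omega=1.0):
    """Gap-fraction FVC = 1 - exp(-G * Omega * LAI / cos(VZA)); G and Omega are assumed."""
    return 1.0 - math.exp(-g * omega * lai / math.cos(math.radians(vza_deg)))


def sample_parameter_sets(n, seed=0):
    """Return n dicts of sampled inputs plus the FVC implied by each LAI."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        p = {k: truncated_gauss(rng, m, s, lo, hi)
             for k, (lo, hi, m, s) in GAUSS_PARAMS.items()}
        p["Cw"] = rng.uniform(0.005, 0.015)  # cm, uniform in the table
        p.update(FIXED_PARAMS)
        p["FVC"] = fvc_from_lai(p["LAI"], p["VZA"])
        samples.append(p)
    return samples


for p in sample_parameter_sets(3, seed=42):
    print({k: round(v, 4) for k, v in p.items()})
```

Rejection sampling keeps the shape of the Gaussian inside the range instead of piling clipped values at the bounds; it stays cheap here because even the widest-spread row (Cbrown, mean 0 and std 3 over 0~2) accepts roughly a quarter of the draws.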

| Dates of GF-1 WFV to Fuse | Dates of MODIS to Fuse | Predicted Date | Date of the Actual GF-1 WFV |
|---|---|---|---|
| 18 April 2017 | DOY105 | DOY113 | 26 April 2017 |
| 12 May 2017 | DOY129 | | |
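Comparing fused FVC with FVC estimated from the actual 26 April 2017 GF-1 WFV image, and later with field survey FVC, reduces to a few pixel-wise agreement statistics. The sketch below computes RMSE, mean bias and R², taking R² as the squared Pearson correlation coefficient; that definition is an assumption, since the validation formulas are not reproduced in this section.

```python
import math


def validation_metrics(predicted, reference):
    """Return (R2, RMSE, bias) of predicted vs. reference FVC values.

    R2 is the squared Pearson correlation (an assumed definition; the
    coefficient of determination 1 - SSE/SST is another common choice).
    Bias is the mean of (predicted - reference).
    """
    if len(predicted) != len(reference) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    n = len(predicted)
    diffs = [p - r for p, r in zip(predicted, reference)]
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    bias = sum(diffs) / n
    mean_p = sum(predicted) / n
    mean_r = sum(reference) / n
    cov = sum((p - mean_p) * (r - mean_r) for p, r in zip(predicted, reference))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    # R2 is undefined when either series is constant.
    r2 = cov * cov / (var_p * var_r) if var_p > 0 and var_r > 0 else float("nan")
    return r2, rmse, bias
```

R² alone is insensitive to systematic offsets and scaling, which is why RMSE and bias are usually reported alongside it.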

| Experiment No. | Date of GF-1 WFV | Date of MODIS | Predicted Date | Date of Field Survey Data |
|---|---|---|---|---|
| 1 | 8 March 2017 and 12 March 2017 | DOY65 | DOY89 | 29 March 2017 to 1 April 2017 |
| | 26 April 2017 | DOY113 | | |
| 2 | 18 April 2017 | DOY105 | DOY121 | 4 May 2017 to 6 May 2017 |
| | 12 May 2017 | DOY129 | | |
| 3 | 8 July 2017 | DOY185 | DOY209 | 29 July 2017 to 31 July 2017 |
| | 10 August 2017 | DOY217 | | |
| 4 | 26 June 2017 | DOY177 | DOY185 | 5 July 2017 to 8 July 2017 |
| | DOY209 (result of Experiment 3) | DOY209 | | |
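Each experiment validates a predicted MOD09A1 date against a multi-day field campaign. Assuming each 8-day composite period starts on the listed DOY, the sketch below counts how many campaign days fall inside the predicted composite; the dates come from the experiment table, with the Experiment 3 campaign taken as 29 to 31 July 2017, as in the field observation table. Helper names are hypothetical.

```python
from datetime import date, timedelta


def composite_window(year, start_doy):
    """First and last calendar day of the 8-day composite starting at start_doy."""
    first = date(year, 1, 1) + timedelta(days=start_doy - 1)
    # The final composite of a year is shorter; clip it to 31 December.
    last = min(first + timedelta(days=7), date(year, 12, 31))
    return first, last


def overlap_days(a_start, a_end, b_start, b_end):
    """Number of days shared by two inclusive date ranges (0 if disjoint)."""
    start, end = max(a_start, b_start), min(a_end, b_end)
    return max((end - start).days + 1, 0)


# (experiment, predicted DOY, field survey start, field survey end)
experiments = [
    (1, 89, date(2017, 3, 29), date(2017, 4, 1)),
    (2, 121, date(2017, 5, 4), date(2017, 5, 6)),
    (3, 209, date(2017, 7, 29), date(2017, 7, 31)),
    (4, 185, date(2017, 7, 5), date(2017, 7, 8)),
]

for exp, doy, f_start, f_end in experiments:
    w_start, w_end = composite_window(2017, doy)
    n = overlap_days(w_start, w_end, f_start, f_end)
    print(f"Experiment {exp}: DOY{doy} = {w_start}..{w_end}; "
          f"field {f_start}..{f_end}; overlap {n} day(s)")
```

Every campaign overlaps its predicted composite, although the Experiment 1 campaign begins one day before the DOY89 period starts.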

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tao, G.; Jia, K.; Zhao, X.; Wei, X.; Xie, X.; Zhang, X.; Wang, B.; Yao, Y.; Zhang, X. Generating High Spatio-Temporal Resolution Fractional Vegetation Cover by Fusing GF-1 WFV and MODIS Data. Remote Sens. 2019, 11, 2324. https://doi.org/10.3390/rs11192324
