1. Introduction

Polarimetric synthetic aperture radar (PolSAR) images have received a lot of attention because they provide more comprehensive and abundant information than SAR images [1]. In recent decades, the application of PolSAR images has seen sustained development in the remote sensing field. In particular, land use land cover (LULC) classification is one of the most typical and important applications in automatic image analysis and interpretation [2]. Numerous traditional schemes have been put forward for PolSAR image classification, such as Wishart classifiers [3,4,5], target decompositions (TDs) [6,7,8,9] and random fields (RFs) [10,11,12]. These traditional methods often use low-level or mid-level features, which are usually obtained by feature extraction techniques. Generally, the extracted features include statistical features [5,10], backscattering elements [13], TD-based features [8,9], as well as some popular features used for other images [14,15,16,17,18]. However, the extracted features are mostly class-specific and hand-crafted, and they involve a considerable amount of manual trial and error [2]. Moreover, some extracted features, such as TD features, rely heavily on the complex analysis of PolSAR data. Meanwhile, the selection of descriptive feature sets often increases computation time.

With the rapid development of learning algorithms, several machine learning tools do perform feature learning (or at least feature optimization), such as support vector machines (SVMs) [15,19] and random forest (RF) [2]. However, they are still shallow models that focus on many input features and may not be robust to nonlinear data [20]. Recently, deep learning (DL) methods represented by deep convolutional neural networks (CNNs) have demonstrated remarkable learning ability in many image analysis fields [13,21,22,23]. With the rapid development of DL, many deep networks have been developed for semantic segmentation, such as the sparse autoencoder (SAE), deep fully convolutional neural network (FCN), deep belief network (DBN), generative adversarial network (GAN), and graph convolutional network (GCN). Compared with the aforementioned conventional methods, DL techniques can automatically learn discriminative features and perform advanced tasks through multiple neural layers in an end-to-end manner, thereby reducing manual error and achieving promising results [23,24]. In recent years, DL-based algorithms have significantly improved the performance of PolSAR image classification. De et al. [25] proposed a classification scheme of urban areas in PolSAR data based on polarization orientation angle (POA) shifts and the SAE. Liu et al. [26] proposed the Wishart DBN (W-DBN) for PolSAR image classification. They defined the Wishart-Bernoulli restricted Boltzmann machine (WBRBM), and stacked WBRBM and binary RBM to form the W-DBN. Zhou et al. [27] introduced CNN for PolSAR image classification to automatically learn hierarchical polarimetric spatial features. Bin et al. [28] improved a CNN model and presented a graph-based semisupervised CNN for PolSAR image classification. Inspired by the success of FCN, Wang et al. [29] presented an effective PolSAR image classification scheme to integrate deep spatial patterns learned automatically by FCN. Li et al. [30] proposed the sliding window FCN and sparse coding (SFCN-SC) for PolSAR image classification. They employed a sliding window operation to apply the FCN architecture directly to PolSAR images. Mohammadimanesh et al. [31] specially designed a new FCN architecture for the classification of wetland complexes using PolSAR imagery. Furthermore, Pham et al. [32] employed the SegNet model for the classification of very high resolution (VHR) PolSAR data. However, most studies on DL methods for PolSAR classification tasks predominantly focus on the case of real-valued neural networks (RV-NNs).

Notably, in RV-NNs, inputs, weights, and outputs are all modeled as real-valued (RV) numbers. This means that projections are required to convert the PolSAR complex-valued (CV) data to RV features as RV-NN input. Although RV-NNs have demonstrated excellent performance in PolSAR image classification tasks, a couple of problems arise from RV features. First, it is unclear which projection yields the best performance for a particular PolSAR image. Although the descriptive feature set generated by multi-projection has achieved remarkable results, a larger feature set means larger computation time and memory consumption, and may even cause data redundancy problems [2]. Secondly, projection sometimes means a loss of valuable information, especially the phase information, which may lead to unsatisfactory results. In fact, the phase of multi-channel SAR data can provide useful information for the interpretation of SAR images. Especially for PolSAR systems, the phase differences between polarizations have received significant attention for a couple of decades [33,34,35].

In view of the aforementioned problems, some researchers have begun to investigate networks that are tailored to the CV data of PolSAR images rather than requiring any projection to classify PolSAR images. Hansch et al. [36] first proposed the complex-valued MLPs (CV-MLPs) for land use classification in PolSAR images. Shang et al. [37] suggested a complex-valued feedforward neural network in the Poincare sphere parameter space. Moreover, an improved quaternion neural network [38] and a quaternion autoencoder [39] have been proposed for PolSAR land classification. Recently, a complex-valued CNN (CV-CNN) specifically designed for PolSAR image classification has been proposed by Zhang et al. [40], where the authors derived a complex backpropagation algorithm based on stochastic gradient descent for CV-CNN training.

Although CV-NNs have achieved remarkable breakthroughs for PolSAR image classification, they still suffer some challenges. First, we find that relatively deep network architectures have not received considerable attention in the complex domain. The structures of the above CV-NNs are relatively simple with limited feature extraction layers. This results in limited learning characteristics, which may yield the risk of sub-optimal classification results. Secondly, these networks fail to sufficiently take spatial information into account to effectively reduce the impact of speckles on classification results. Due to the inherent existence of speckle in PolSAR images, the pixel-based classification is easily affected and even leads to incorrect results. In this case, these CV-NNs would be ineffective to explicitly distinguish complex classes, since only local contexts caused by small image patches are considered. Thirdly, it is necessary to construct a CV-NN for direct pixel-wise labeling to predict fast and effectively. Actually, the image classification is a dense (pixel-level) problem that aims at assigning a label to each pixel in the input image. However, existing CV-NNs usually assign an entire input image patch to a category. This results in a large amount of redundant processing and leads to serious repetitive computation.

In response to the above challenges, this paper explores a complex-valued deep FCN architecture, which is an extension of FCN to the complex domain. The FCN was first proposed in [41] and is an excellent pixel-level classifier for semantic labeling. Typically, FCN outputs a 2-dimensional (2D) spatial image and can preserve certain spatial context information for accurate labeling results. Recently, FCNs have demonstrated remarkable classification ability in the remote sensing community [42,43]. However, to use FCN in the complex domain (i.e., CV-FCN) for PolSAR image classification, some tricky problems need to be tackled. First, the CV-FCN tailored to PolSAR data requires a proper scheme for the initialization of complex-valued weights. Generally, FCNs are often pre-trained on VGG-16 [44], whose parameters are first trained using optical images and are all real-valued numbers. However, those parameters are not appropriate to initialize the CV weights of CV-FCN and are ineffective for PolSAR images since they cannot preserve polarimetric phase information. Therefore, a proper complex-valued weight initialization scheme can not only effectively initialize CV weights but also has the potential to reduce the risks of vanishing or exploding gradients, thereby speeding up the training process and improving the performance of networks. Secondly, layers in the upsampling scheme of CV-FCN should be constructed in the complex domain. Although some works have extended some layers to the complex domain [36,40,45], upsampling layers have not yet been thoroughly examined in this domain. Finally, it is necessary to select a loss function for predicted CV labeling in the training process of CV-FCN. The aim is to achieve faster convergence during CV-FCN optimization and obtain higher classification accuracy. Thus, how to design a reasonable loss function in the complex domain that is suitable for PolSAR image classification needs to be solved.

In view of the above-involved limitations, we present a novel complex-valued deep fully convolutional network (CV-FCN) for the classification of PolSAR imagery. The proposed deep CV-FCN adopts the complex downsampling-then-upsampling scheme to achieve pixel-wise classification results. To this end, this paper focuses on four works: (1) complex-valued weight initialization for faster PolSAR feature learning; (2) multi-level CV feature extraction for enriching discriminative information; (3) more spatial information recovery for stronger speckle noise immunity; (4) average cross-entropy loss function for more precise labeling results. Specifically, CV weights of CV-FCN are first initialized by a new complex-valued weight initialization scheme. The scheme explicitly focuses on the statistical characteristics of PolSAR data. Thus, it is very effective for faster training. Then, different-level CV features that retain more polarization information are extracted via the complex downsampling section. Those CV features have a powerful discriminative capacity for various classes. Subsequently, the complex upsampling section upsamples low-resolution CV feature maps and generates dense labeling. Notably, for more spatial information retaining, the complex max-unpooling layers are used in the upsampling section. Those layers recover more spatial information by the max locations maps to reduce the effect of speckles on coherent labeling results as well as improve boundary delineation. In addition, to promote CV-FCN training more effectively, an average cross-entropy loss function is employed in the process of updating CV-FCN parameters. The loss function performs the cross-entropy operation on the real and imaginary parts of CV predicted labeling, respectively. In this way, the phase information is also taken into account during parameter updating, which makes the classification of PolSAR images more precise. 
Extensive experimental results reflect the effectiveness of CV-FCN for the classification of PolSAR imagery. In summary, the major contributions of this paper can be highlighted as follows:

The CV-FCN structure is proposed for PolSAR image classification, whose weights, biases, inputs, and outputs are all modeled as complex values. The CV-FCN directly uses raw PolSAR CV data as input without any data projection. In this case, it can extract multi-level and more robust CV features that retain more polarization information and have a powerful discriminative capacity for various categories.

A new complex-valued weight initialization scheme is employed to initialize CV-FCN parameters. It allows CV-FCN to mine polarimetric features with relatively few adjustments. Thus, it can speed up CV-FCN training and save computation time.

A complex upsampling scheme for CV-FCN is proposed to capture more spatial information through the complex max-unpooling layers. This scheme not only simplifies the upsampling learning optimization but also recovers more spatial information by max locations maps to reduce the impact of speckles. Thus, smoother and more coherent classification results can be achieved.

A new average cross-entropy loss function in the complex domain is employed for CV-FCN optimization. It takes the phase information into account during parameter updating through the average cross-entropy operation on predicted CV labels. Therefore, the new loss function makes CV-FCN optimization more precise while boosting the labeling accuracy.

The remainder of this paper is organized as follows. Section 2 formulates a detailed theory for the classification method of CV-FCN. In Section 3, the experiment results on real benchmark PolSAR images are reported. In addition, some related discussions are presented in Section 4. Finally, the conclusion and future works are given in Section 5.

2. Proposed Method

In this work, a deep CV-FCN is proposed to conduct PolSAR image classification. The CV-FCN method integrates the feature extraction module and the classification module in a unified framework. Thus, features extracted through CV-FCN trained by PolSAR data are better able to distinguish various categories for PolSAR classification tasks. In the following, we first give the framework of the deep CV-FCN classification method in Section 2.1. Then, to learn more discriminative features for classification faster and more accurately, it is critical to train a CV-FCN suitable for PolSAR images. Thus, we highlight and introduce four critical works for CV-FCN training in Section 2.2, Section 2.3 and Section 2.4. They include CV weight initialization, deep and multi-level CV feature extraction, more spatial information recovery, and a loss function for more precise optimization. Finally, the CV-FCN classification algorithm is summarized in Section 2.5.

2.1. Framework of the Deep CV-FCN Classification Method

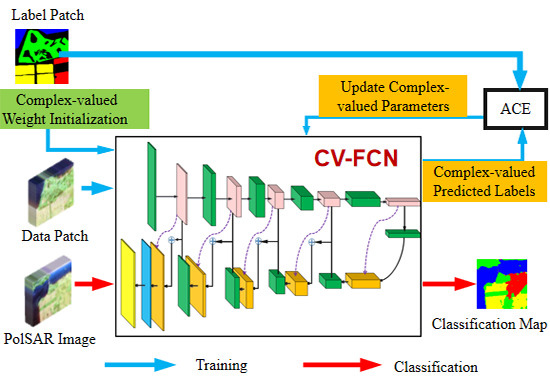

The framework of the CV-FCN classification method is shown in Figure 1, which consists of two separate modules: the feature extraction module and the classification module. In the feature extraction module, CV-FCN is trained to exploit the discriminative information. Then, the trained CV-FCN is used to classify PolSAR images in the classification module.

The data patches set and the corresponding label patches set are first prepared as input to CV-FCN before training. The two sets are generated from the PolSAR dataset and the corresponding ground-truth mask, respectively. Let the CV PolSAR dataset be a complex-valued array of size h × w × B, where h and w are the height and width of the spatial dimensions, respectively, and B is the number of complex bands. The corresponding ground-truth mask is a label map of size h × w. All data patches cropped from the given dataset form the data patches set, and the corresponding label patches form the label patches set; each data patch has size H × W × B and each label patch has size H × W. Here n is the total number of patches, and H and W are the patch sizes in the spatial dimensions.
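The patch preparation described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `crop_patches`, the regular-grid cropping, and the `stride` parameter are hypothetical choices, since the text does not specify how patches are sampled from the image.

```python
import numpy as np

def crop_patches(data, mask, patch_h, patch_w, stride):
    """Crop aligned data/label patches from an (h, w, B) complex image and its (h, w) mask.

    The regular grid with a fixed stride is an assumption made for illustration.
    """
    h, w, _ = data.shape
    data_patches, label_patches = [], []
    for top in range(0, h - patch_h + 1, stride):
        for left in range(0, w - patch_w + 1, stride):
            data_patches.append(data[top:top + patch_h, left:left + patch_w, :])
            label_patches.append(mask[top:top + patch_h, left:left + patch_w])
    return np.stack(data_patches), np.stack(label_patches)

# Toy example: an 8 x 10 image with B = 6 complex bands and 3 classes.
rng = np.random.default_rng(0)
image = rng.normal(size=(8, 10, 6)) + 1j * rng.normal(size=(8, 10, 6))
labels = rng.integers(0, 3, size=(8, 10))
X, Y = crop_patches(image, labels, patch_h=4, patch_w=4, stride=2)
print(X.shape, Y.shape)
```

Each data patch keeps all B complex bands, and each label patch covers exactly the same pixels, which is what pixel-to-pixel training requires.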

In the feature extraction module, a novel complex-valued weight initialization scheme is first adopted to initialize CV-FCN. Then, a certain percentage of patches from the data patches set are randomly chosen as the training data patches for the network. These data patches are forward-propagated through the complex downsampling section of CV-FCN (marked by red dotted boxes in Figure 1) to extract multi-level CV feature maps. Then those low-resolution feature maps are upsampled by the complex upsampling section (marked by blue dotted boxes in Figure 1) to generate the predicted label patches. Subsequently, the error between the predicted label patches and the corresponding label patches is calculated according to a novel loss function, and the CV parameters in CV-FCN are then updated iteratively. The updating iteration terminates when the error value no longer changes substantially.

In the classification module, we feed the entire PolSAR dataset to the trained network. The label of every pixel in this PolSAR image is predicted based on the output of the last complex SoftMax layer. Notably, compared with a CNN model that predicts a single label for the center of each image patch, the CV-FCN model can predict all pixels in the entire image at one time. Thus, this enables pixel-level labeling and can decrease the computation time during the prediction.

2.2. New Complex-Valued Weight Initialization Scheme Using Polarization Data Distribution for Faster Feature Learning

Training deep networks generally relies on proper weight initialization to accelerate learning [46] and reduce the risks of vanishing or exploding gradients [45]. To train CV-FCN, we first consider the problem of complex-valued (CV) weight initialization. Generally, deep ConvNets can update from pre-trained weights generated by the transfer learning technique. However, those weights are all real-valued (RV) and are not appropriate to initialize CV-FCN. Additionally, deep ConvNets pre-trained with RV weights can only consider the backscattering intensities, which means a loss of the polarimetric phase [47]. Here, based on the distributions of polarization data, a new CV weight initialization scheme is employed for faster network learning.

For RV networks that process PolSAR images, learned weights can well characterize scattering patterns, particularly in high-level layers [27]. In [40], the initialization scheme simply initializes the real and imaginary parts of a CV weight separately with a uniform distribution. Fortunately, for a reciprocal medium, a complex scattering vector can be modeled by a multivariate complex Gaussian distribution, where each individual complex scattering coefficient of the vector is assumed to have the complex Gaussian distribution [1]. Thus, we use this distribution to initialize the complex-valued weights in CV-FCN.

Suppose that a CV weight is denoted as $w = \Re(w) + i\,\Im(w)$, where the real component $\Re(w)$ and the imaginary component $\Im(w)$ are identically Gaussian distributed with 0 mean and variance $\sigma^2$. Here, the initialization criterion proposed by He et al. [46] is used to calculate the variance of $w$, i.e., $\mathrm{Var}(w) = 2/n_{in}$, where $n_{in}$ is the number of input units. Notably, this criterion provides the current best practice when the activation function is ReLU [45]. Moreover, the CV weight $w$ can also be denoted as

$$w = |w|\,e^{i\theta} \tag{1}$$

where $|w|$ and $\theta$ are the magnitude and the phase of $w$, respectively, and $i$ is the imaginary unit. Since $w$ is assumed to have a complex Gaussian distribution, the magnitude $|w|$ follows the Rayleigh distribution [1]. The expectation and the variance are given by

$$E[|w|] = \sigma\sqrt{\frac{\pi}{2}} \tag{2}$$

$$\mathrm{Var}(|w|) = \frac{4-\pi}{2}\,\sigma^2 \tag{3}$$

where $\sigma$ is the single parameter in the Rayleigh distribution. In addition, the variance $\mathrm{Var}(|w|)$ and the variance $\mathrm{Var}(w)$ can be defined as

$$\mathrm{Var}(|w|) = E\big[|w|^2\big] - \big(E[|w|]\big)^2 \tag{4}$$

$$\mathrm{Var}(w) = E\big[|w|^2\big] - \big(E[w]\big)^2 \tag{5}$$

According to the initialization rules of [45], in the case of $w$ symmetrically distributed around 0, $E[w] = 0$. Thus, from Equation (4) and Equation (5), $\mathrm{Var}(w)$ can be formulated as

$$\mathrm{Var}(w) = \mathrm{Var}(|w|) + \big(E[|w|]\big)^2 \tag{6}$$

By substituting Equation (2) and Equation (3) into Equation (6), $\mathrm{Var}(w)$ is calculated as

$$\mathrm{Var}(w) = \frac{4-\pi}{2}\,\sigma^2 + \frac{\pi}{2}\,\sigma^2 = 2\sigma^2 \tag{7}$$

According to He's initialization criterion and Equation (7), the single parameter in the Rayleigh distribution can be computed as $\sigma = 1/\sqrt{n_{in}}$. At this point, the Rayleigh distribution can be used to initialize the amplitude $|w|$. In addition, the phase $\theta$ is initialized by using the uniform distribution between $-\pi$ and $\pi$. Then, a complex-valued weight can be initialized using a random number that is generated according to Equation (1) by the multiplication of the amplitude $|w|$ by the phasor $e^{i\theta}$. In this way, each complex-valued weight in the CV-FCN model can be initialized.

It is worth noting that our initialization scheme is quite different from the random initialization of both the real and imaginary parts of a CV weight [40]. The most notable advantage of the new initialization scheme lies in explicitly focusing on the statistical characteristics of the training data, which makes it possible for the network to learn properties suitable for PolSAR images after a small amount of fine-tuning. In other words, the network exhibits some of the same properties as the data to be learned from the very beginning, which acts as a prior rather than purely random initial information. Thus, it increases the opportunity to learn some special properties of PolSAR datasets and is very effective for faster training.
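As a minimal sketch of this scheme, the snippet below draws the magnitude from a Rayleigh distribution and the phase from a uniform distribution, then combines them as a phasor. The function name `init_complex_weights` and the choice of `fan_in` as kernel height × kernel width × input channels are assumptions for illustration, not details given in the text.

```python
import numpy as np

def init_complex_weights(shape, fan_in, rng):
    """Draw complex weights w = |w| * exp(i * theta).

    |w| ~ Rayleigh(sigma) with sigma = 1 / sqrt(fan_in), so that
    Var(w) = 2 * sigma**2 = 2 / fan_in (He's criterion).
    theta ~ Uniform(-pi, pi).
    """
    sigma = 1.0 / np.sqrt(fan_in)
    magnitude = rng.rayleigh(scale=sigma, size=shape)
    phase = rng.uniform(-np.pi, np.pi, size=shape)
    return magnitude * np.exp(1j * phase)

# Example: 3 x 3 kernels, 3 input maps, 2000 output maps (sizes are arbitrary).
rng = np.random.default_rng(0)
fan_in = 3 * 3 * 3  # assumed definition of the number of input units
w = init_complex_weights((3, 3, 3, 2000), fan_in, rng)
print(np.mean(np.abs(w) ** 2), 2.0 / fan_in)  # empirical vs. target variance
```

Because the weights have zero mean, the empirical mean of $|w|^2$ estimates $\mathrm{Var}(w)$, so the two printed numbers should be close.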

2.3. Deep CV-FCN for Dense Feature Extraction

In the forward propagation of the CV-FCN training procedure, dense features are extracted through the complex downsampling-then-upsampling scheme. The detailed configuration of CV-FCN is shown in Table 1. The complex downsampling section first extracts effective multi-level CV features through downsampling blocks (i.e., B1–B5 in Figure 1). Then, the complex upsampling section recovers more spatial information in a simple manner and produces dense labeling through a series of upsampling blocks (i.e., B7–B11 in Figure 1). In particular, full skip connections between the complex downsampling section and the complex upsampling section fuse shallow, fine features and deep, coarse features to preserve sufficient detailed information for the distinction of complex classes.

2.3.1. Multi-Level Complex-Valued Feature Extraction via the Complex Downsampling Section

The complex downsampling section consisting of downsampling blocks extracts 2-D CV features of different levels. In CV-FCN, five downsampling blocks are employed to extract more abstract and extensive features. Each of them contains four layers, including a complex convolution layer, a complex batch normalization layer, a complex activation layer, and a complex max-pooling layer. Among these layers, the main feature extraction work is performed in the complex convolution layer. Compared with the real convolution layer, the complex convolution layer extracts CV features that retain more polarization information and discriminative information through the complex convolution operation.

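In the $l$th complex convolution layer, the complex-valued weight parameters and the complex-valued bias are denoted by $\mathbf{W}^{(l)} \in \mathbb{C}^{H \times H \times M \times N}$ and $\mathbf{b}^{(l)} \in \mathbb{C}^{N}$, respectively, where $H$ denotes the complex convolutional kernel size, $M$ is the number of input complex feature maps and $N$ is the number of output complex feature maps. The output complex feature maps $\mathbf{O}^{(l)}$ of the complex convolution layer are computed by

$$\mathbf{O}^{(l)}_{n} = \sum_{m=1}^{M} \mathbf{W}^{(l)}_{mn} \otimes \mathbf{O}^{(l-1)}_{m} + b^{(l)}_{n}, \qquad n = 1, \dots, N$$

where $\mathbf{O}^{(l-1)} \in \mathbb{C}^{S \times S \times M}$ denotes the given input complex feature maps, $S \times S$ is the input feature map size, and ⊗ denotes the convolution operation in the complex domain. The matrix notation of the $n$th output complex feature map $\mathbf{O}^{(l)}_{n}$ [40] is given by

$$\begin{bmatrix} \Re\big(\mathbf{O}^{(l)}_{n}\big) \\ \Im\big(\mathbf{O}^{(l)}_{n}\big) \end{bmatrix} = \sum_{m=1}^{M} \begin{bmatrix} \mathbf{A}_{mn} & -\mathbf{B}_{mn} \\ \mathbf{B}_{mn} & \mathbf{A}_{mn} \end{bmatrix} \odot \begin{bmatrix} \mathbf{x}_{m} \\ \mathbf{y}_{m} \end{bmatrix} + \begin{bmatrix} \Re\big(b^{(l)}_{n}\big) \\ \Im\big(b^{(l)}_{n}\big) \end{bmatrix}$$

where $\mathbf{W}^{(l)}_{mn} = \mathbf{A}_{mn} + i\,\mathbf{B}_{mn}$ with $i$ the imaginary unit, and $\mathbf{x}_{m}$ and $\mathbf{y}_{m}$ are respectively the real part and the imaginary part of $\mathbf{O}^{(l-1)}_{m}$. ⊙ denotes the convolution operation in the real domain. Thus, the $n$th output complex feature map can be represented as

$$\mathbf{O}^{(l)}_{n} = \sum_{m=1}^{M} \Big[ \big(\mathbf{A}_{mn} \odot \mathbf{x}_{m} - \mathbf{B}_{mn} \odot \mathbf{y}_{m}\big) + i\,\big(\mathbf{B}_{mn} \odot \mathbf{x}_{m} + \mathbf{A}_{mn} \odot \mathbf{y}_{m}\big) \Big] + b^{(l)}_{n}$$

The complex batch normalization (BN) layer [45] is applied for normalization after complex convolution, which holds great potential to relieve networks from overfitting. For the nonlinear transformation of CV features, we find that the complex-valued ReLU ($\mathbb{C}$ReLU) [45] as the complex activation provides good results. The $\mathbb{C}$ReLU is defined as

$$\mathbb{C}\mathrm{ReLU}(z) = \mathrm{ReLU}\big(\Re(z)\big) + i\,\mathrm{ReLU}\big(\Im(z)\big)$$

where $z \in \mathbb{C}$. The output of the complex nonlinear layer is then obtained by applying $\mathbb{C}$ReLU element-wise to the normalized CV feature maps.

Furthermore, the complex max-pooling layer [40] is adopted to generalize features to a higher level. In this way, features are more robust and CV-FCN can converge well. After the five downsampling blocks, block 6 (B6 in Figure 1), which includes a complex convolution layer with 1 × 1 kernels and a complex batch normalization layer, densifies its sparse input and extracts complex convolution features.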
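To make the decomposition of the complex convolution into real-domain convolutions concrete, here is a small NumPy sketch for a single input map and a single kernel. It is an illustration under assumptions: the helper names are hypothetical, the multi-map summation is omitted, and the real-domain operation is implemented without kernel flipping, as is common in deep learning frameworks.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def real_conv2d(x, k):
    """'Valid' 2-D sliding-window product of a real map x with a real kernel k."""
    windows = sliding_window_view(x, k.shape)  # (out_h, out_w, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, k)

def complex_conv2d(z, w, b):
    """Complex convolution built from four real convolutions."""
    x, y = z.real, z.imag  # real / imaginary parts of the input map
    a, c = w.real, w.imag  # real / imaginary parts of the kernel
    real = real_conv2d(x, a) - real_conv2d(y, c)
    imag = real_conv2d(x, c) + real_conv2d(y, a)
    return real + 1j * imag + b

def crelu(z):
    """Complex ReLU: ReLU applied separately to the real and imaginary parts."""
    return np.maximum(z.real, 0.0) + 1j * np.maximum(z.imag, 0.0)

rng = np.random.default_rng(0)
z = rng.normal(size=(6, 6)) + 1j * rng.normal(size=(6, 6))
w = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
features = crelu(complex_conv2d(z, w, b=0.1 - 0.2j))
print(features.shape)
```

The four real convolutions reproduce exactly what a direct complex multiply-accumulate would give, which is why the real and imaginary parts can be stored and processed as separate real arrays.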

2.3.2. Using Complex Upsampling Section for More Spatial Information Recovery to Stronger Speckle Noise Immunity

After the complex downsampling section for multi-level CV feature extraction, a complex upsampling section is implemented to upsample those CV feature maps. In particular, the new complex max-unpooling layers are employed in the complex upsampling section. The reason is two-fold. On the one hand, compared with the complex deconvolution layer, which is another upsampling operation, the complex max-unpooling layer reduces the number of trainable parameters and mitigates information loss due to complex pooling operations. On the other hand, obtaining smooth labeling results is not easy owing to the inherent existence of speckle in PolSAR images. This issue can be addressed by the complex max-unpooling layer, which recovers more spatial information by the max locations maps (represented by purple dotted arrows in Figure 1). The spatial information is a critical indicator for classifying confusing categories, as it captures wider visual cues for stronger speckle noise immunity.

To be more intuitive, Figure 2 illustrates an example of the complex max-unpooling operation. The green and black boxes are simple structures of the complex max-pooling and complex max-unpooling, respectively. As shown in the green box, the amplitude feature map is formed from the real and imaginary feature maps, where the red dotted box represents a 2 × 2 pooling window with a stride of 2. In the amplitude feature map, four maximum amplitude values are chosen by the corresponding pooling windows, which are marked in orange, blue, green, and yellow, respectively. They construct the pooled map. At the same time, the locations of those maxima are recorded in a set of switch variables, which are visualized by the so-called max locations map. On the other hand, in the black box, the real and imaginary input maps are each upsampled using this max locations map. Then the real and imaginary unpooled maps are produced. Here, those unpooled maps are sparse, wherein white regions have the value 0. This ensures that the resolution of the output is higher than the resolution of its input.
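The pooling and unpooling steps can be sketched as follows for a single complex map. This is a simplified illustration: the function names are hypothetical, and non-overlapping windows (stride equal to the window size) with map dimensions divisible by the window size are assumed.

```python
import numpy as np

def complex_max_pool(z, size=2):
    """Pool by maximum amplitude; return the pooled map and the max locations map."""
    h, w = z.shape
    # Rearrange into non-overlapping size x size windows, flattened on the last axis.
    windows = z.reshape(h // size, size, w // size, size).transpose(0, 2, 1, 3)
    flat = windows.reshape(h // size, w // size, size * size)
    switches = np.argmax(np.abs(flat), axis=-1)  # position of the max amplitude
    pooled = np.take_along_axis(flat, switches[..., None], axis=-1)[..., 0]
    return pooled, switches

def complex_max_unpool(pooled, switches, size=2):
    """Place each value back at its recorded location; all other entries are 0."""
    ph, pw = pooled.shape
    flat = np.zeros((ph, pw, size * size), dtype=complex)
    np.put_along_axis(flat, switches[..., None], pooled[..., None], axis=-1)
    windows = flat.reshape(ph, pw, size, size).transpose(0, 2, 1, 3)
    return windows.reshape(ph * size, pw * size)

rng = np.random.default_rng(0)
z = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))
pooled, switches = complex_max_pool(z)
unpooled = complex_max_unpool(pooled, switches)
print(pooled.shape, unpooled.shape, np.count_nonzero(unpooled))
```

Unpooling the complex map with one shared set of switches is equivalent to upsampling the real and imaginary maps separately with the same max locations map, and it needs no trainable parameters.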

In particular, we use full skip connections that fuse multi-level features to preserve sufficient discriminative information for the classification of complex classes. Finally, the complex output layer with the complex SoftMax function [40] is used to calculate the prediction probability map. Thus, the output of CV-FCN can be formulated as

$$\mathbf{O} = \mathrm{SoftMax}\big(\Re(\mathbf{z})\big) + i\,\mathrm{SoftMax}\big(\Im(\mathbf{z})\big)$$

where $\mathbf{z}$ indicates the inputs of the complex output layer, $i$ is the imaginary unit, and $\mathrm{SoftMax}(\cdot)$ is the SoftMax function in the real domain. In this layer, the output feature maps are the same size as the data patch fed into CV-FCN. This enables pixel-to-pixel training. After the complex downsampling section and the complex upsampling section, the complex forward propagation process of the training phase is completed.
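A minimal sketch of such a complex output layer applies a real-domain SoftMax independently to the real and imaginary parts along the class axis. The function names and the layout (classes on the last axis) are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable real-domain SoftMax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def complex_softmax(z, axis=-1):
    """SoftMax applied separately to the real and imaginary parts."""
    return softmax(z.real, axis) + 1j * softmax(z.imag, axis)

# Toy input: a 2 x 2 patch with K = 3 class scores per pixel (classes on the last axis).
rng = np.random.default_rng(0)
scores = rng.normal(size=(2, 2, 3)) + 1j * rng.normal(size=(2, 2, 3))
probs = complex_softmax(scores)
print(probs.real.sum(axis=-1))  # each pixel's real parts sum to 1
print(probs.imag.sum(axis=-1))  # each pixel's imaginary parts sum to 1
```

The output keeps the spatial size of its input, so every pixel receives one complex-valued probability vector.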

2.4. Average Cross-Entropy Loss Function for Precise CV-FCN Optimization

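To promote CV-FCN training more effectively and achieve more precise results, a novel loss function is used as the learning objective to iteratively update the CV-FCN parameters $\boldsymbol{\Theta}$ during backpropagation. $\boldsymbol{\Theta}$ includes the CV weights $\mathbf{W}$ and the CV biases $\mathbf{b}$. Usually, for multi-class classification tasks, the cross-entropy loss function performs well for updating parameters. Compared with the quadratic cost function, it can lead to faster convergence and better classification results [48]. Thus, a novel average cross-entropy loss function, based on the definition of the popular cross-entropy loss function, is employed for predicted CV labels in PolSAR classification tasks. Formally, the average cross-entropy (ACE) loss function is defined, for each pixel and accumulated over all pixels of the patch, as

$$J = -\frac{1}{2} \sum_{k=1}^{K} \Big[ \Re(\mathbf{T}_{k}) \ln \Re(\mathbf{O}_{k}) + \Im(\mathbf{T}_{k}) \ln \Im(\mathbf{O}_{k}) \Big]$$

where $\mathbf{O}$ indicates the output data patch in the last complex SoftMax layer and $K$ is the total number of classes. $\mathbf{T}$ denotes the sparse representation of the true label patch, which is converted by one-hot encoding. Notably, the non-zero positions within $\mathbf{T}$ are $1 + 1i$ instead of $1$. This means that we also take the phase information into account during parameter updating. As a result, the updated CV-FCN can work effectively to provide more precise classification results for PolSAR images.

$\boldsymbol{\Theta}$ can be updated iteratively by $J$ and the learning rate $\eta$ according to

$$\mathbf{W}^{(t+1)} = \mathbf{W}^{(t)} - \eta\,\frac{\partial J}{\partial \mathbf{W}^{(t)}} \tag{15}$$

$$\mathbf{b}^{(t+1)} = \mathbf{b}^{(t)} - \eta\,\frac{\partial J}{\partial \mathbf{b}^{(t)}} \tag{16}$$

To calculate Equation (15) and Equation (16), the key point is to calculate the partial derivatives. Since $J$ is a real-valued loss function, it can be back-propagated through CV-FCN according to the generalized complex chain rule in [36]. Thus, the partial derivatives can be calculated as follows:

$$\frac{\partial J}{\partial \mathbf{W}} = \frac{\partial J}{\partial \Re(\mathbf{W})} + i\,\frac{\partial J}{\partial \Im(\mathbf{W})}, \qquad \frac{\partial J}{\partial \mathbf{b}} = \frac{\partial J}{\partial \Re(\mathbf{b})} + i\,\frac{\partial J}{\partial \Im(\mathbf{b})}$$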

When the value of loss function no longer decreases, the parameter update is suspended, and the training phase is completed. The trained network will then be used to predict the entire PolSAR image in the classification module.
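The idea behind the average cross-entropy loss can be sketched as below. This is a hedged illustration rather than the exact formulation: the function names are hypothetical, and both the averaging over pixels and the small constant `eps` inside the logarithm are assumptions added for a self-contained, numerically safe example.

```python
import numpy as np

def one_hot_complex(labels, num_classes):
    """One-hot encode integer labels, with non-zero entries equal to 1 + 1j."""
    return np.eye(num_classes)[labels] * (1 + 1j)

def average_cross_entropy(pred, target, eps=1e-12):
    """Average of the cross-entropies on the real and imaginary parts.

    pred, target: complex arrays of shape (..., K) with classes on the last axis.
    Averaging over pixels is an assumed normalization.
    """
    ce_real = -np.sum(target.real * np.log(pred.real + eps), axis=-1)
    ce_imag = -np.sum(target.imag * np.log(pred.imag + eps), axis=-1)
    return 0.5 * np.mean(ce_real + ce_imag)

# Toy example: a 2 x 2 label patch with K = 4 classes.
labels = np.array([[0, 1], [2, 3]])
target = one_hot_complex(labels, num_classes=4)
uniform_pred = np.full((2, 2, 4), 0.25 + 0.25j)  # an uninformative prediction
print(average_cross_entropy(uniform_pred, target))  # close to ln(4)
```

Because both the real and imaginary parts of the prediction contribute to the loss, an error in either part produces a gradient during parameter updating.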

2.5. CV-FCN PolSAR Classification Algorithm

For clarity, the proposed CV-FCN PolSAR classification algorithm is summarized in Algorithm 1. Specifically, we first construct the entire training set for CV-FCN and employ the new complex-valued weight initialization scheme to initialize the network. Then, we train CV-FCN by iteratively updating its parameters using the average cross-entropy loss function. Finally, the entire PolSAR image is classified using the trained CV-FCN.

Algorithm 1 CV-FCN classification algorithm for PolSAR imagery

Input: PolSAR dataset, ground-truth image; learning rate, batch size, momentum parameter.

- 1: Construct the data patches set and the label patches set using the PolSAR dataset and the ground-truth image, respectively;
- 2: Initialize the CV-FCN parameters by the complex-valued weight initialization scheme in Section 2.2;
- 3: Choose the entire training set from the data patches set and the label patches set;
- 4: Repeat:
- 5: Forward pass the complex downsampling section to obtain multi-level feature maps by Section 2.3.1;
- 6: Call the complex upsampling section to recover more spatial information by Section 2.3.2;
- 7: Calculate the average cross-entropy loss J by Section 2.4;
- 8: Update the CV-FCN parameters by J and the learning rate.
- 9: Until: meet the termination criterion.
- 10: Classify the entire PolSAR image by forward passing the trained network to obtain the pixel-wise class labels.
- 11: End

Output: Pixel-wise class labels.