1. Introduction

The synthetic aperture radar (SAR) system performs well in both military and civilian applications, such as military reconnaissance, geographical mapping, and disaster warning, owing to its all-weather, day-and-night capability for two-dimensional high-resolution imaging [1,2,3,4,5]. Its high azimuth resolution results from the relative movement between the antenna and the target. However, this platform movement also makes accurate imaging difficult [6], and hence motion compensation (MOCO) [7,8,9] is an essential procedure for SAR imaging. Furthermore, unmanned aerial vehicle (UAV) SAR systems [10,11,12] operating at low altitude usually have their flight trajectories disturbed by severe atmospheric turbulence because of the small size and light weight of the UAV platform [13], which inevitably causes serious blurring and geometric distortion in SAR images. Normally, a high-precision inertial navigation system (INS), consisting of an inertial measurement unit (IMU) and a global positioning system (GPS) receiver, must be mounted on the UAV platform to record real-time velocity and position information. However, to save cost and payload weight, most UAV SAR platforms are equipped only with a medium- or low-accuracy INS, which reduces the measurement accuracy. Therefore, autofocus techniques [14] combined with different imaging models are commonly applied to compensate the residual phase error after INS-based MOCO [15,16].
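To make the sensitivity to residual motion concrete, the sketch below converts an uncompensated line-of-sight (LOS) displacement into the two-way phase error 4πΔr/λ. The 1 mm residual error and the 10 GHz and 35 GHz carriers are illustrative assumptions, not parameters of the systems used in this paper; the point is only that the same residual trajectory error produces a proportionally larger phase error at higher carrier frequencies.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)


def residual_phase(delta_r_los, wavelength):
    """Two-way phase error (rad) left by an uncompensated line-of-sight
    displacement delta_r_los (m) at the given wavelength (m)."""
    return 4.0 * np.pi * np.asarray(delta_r_los) / wavelength


# Illustrative carriers (assumed, not taken from the paper's parameter tables).
lam_x = C / 10e9   # X-band, about 3.0 cm
lam_ka = C / 35e9  # Ka-band, about 8.6 mm

residual_error = 1e-3  # assumed 1 mm residual LOS error after INS-based MOCO
phi_x = residual_phase(residual_error, lam_x)
phi_ka = residual_phase(residual_error, lam_ka)
print(f"X-band: {phi_x:.3f} rad, Ka-band: {phi_ka:.3f} rad")
```

With these assumed numbers the X-band error is about 0.42 rad, while the Ka-band error is 3.5 times larger (about 1.47 rad), which is one reason why autofocus becomes more critical for high-band UAV SAR.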

In practical applications, widely used autofocus algorithms include classical phase gradient autofocus (PGA) [17], the map-drift algorithm (MDA) [18,19], and image metric-based autofocus algorithms, such as contrast or entropy optimization [20,21,22,23,24]. PGA retrieves the high-order terms of the phase error by exploiting the phase gradient of prominent scatterers, so its performance depends on the number of prominent scatterers and is generally degraded by noise and clutter. MDA estimates the quadratic phase error (QPE) of the raw data from the displacement between different subaperture images. Hence, MDA is usually more robust than PGA when the scene lacks prominent point targets. In Samczynski et al. [19], a coherent MDA was investigated, which estimates flight parameters more precisely for low-contrast scenes. Metric-based autofocus methods usually produce better restorations than the conventional ones [20]. They estimate the phase error parameters by maximizing a sharpness metric (or minimizing an entropy or sparsity metric) of the image [21,22,23,24]. All of the above algorithms are designed to compensate spatially invariant phase errors in SAR imagery under the narrow-beam hypothesis [25], which neglects the spatially variant components. Hence, these autofocus algorithms are of limited use for high-precision UAV SAR imaging.
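The map-drift principle can be illustrated on a toy azimuth phase history. Assume deramped data in which each scatterer is a Doppler tone and the residual QPE is φ(t) = πΔγt², with Δγ an assumed residual Doppler-rate error in Hz/s. Each half-aperture image is then shifted in Doppler by Δγ times its center time, so the drift between the two images is Δf = Δγ·T/2 and Δγ = 2Δf/T. The sketch below is a minimal, incoherent two-subaperture MDA under these assumptions, not the implementation of [18,19]; the PRF, aperture length, and scatterer frequencies are hypothetical.

```python
import numpy as np


def mda_qpe(s, prf, upsample=8):
    """Estimate the residual Doppler-rate error (Hz/s) of an azimuth signal
    with the classical two-subaperture map-drift principle."""
    n_half = s.size // 2
    n_fft = upsample * n_half
    # Magnitude "images" of the two half apertures (zero-padded FFT).
    img1 = np.abs(np.fft.fft(s[:n_half], n_fft))
    img2 = np.abs(np.fft.fft(s[n_half:2 * n_half], n_fft))
    img1 -= img1.mean()
    img2 -= img2.mean()
    # Circular cross-correlation: the peak lag is the drift of img2 relative to img1.
    xcorr = np.fft.ifft(np.fft.fft(img2) * np.conj(np.fft.fft(img1))).real
    lag = int(np.argmax(xcorr))
    if lag > n_fft // 2:
        lag -= n_fft  # map to a signed lag
    drift_hz = lag * prf / n_fft
    t_half = n_half / prf  # time between the two sub-aperture centers (T/2)
    return drift_hz / t_half


# Toy scene: three Doppler tones (hypothetical values) corrupted by a QPE.
prf, n = 1000.0, 2048
t = (np.arange(n) - n / 2) / prf
true_rate = 20.0  # assumed residual Doppler-rate error (Hz/s)
tones = [(-200.0, 1.0), (50.0, 0.6), (300.0, 0.8)]
s = sum(a * np.exp(2j * np.pi * f * t) for f, a in tones)
s = s * np.exp(1j * np.pi * true_rate * t**2)

est = mda_qpe(s, prf)
print(f"estimated rate error: {est:.2f} Hz/s")
```

Because every scatterer drifts by the same amount, the estimate relies on the correlation of the whole scene rather than on isolated bright points, which is the robustness advantage over PGA noted above.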

For low-altitude UAV SAR imaging, the residual phase error after INS-based MOCO is both range- and azimuth-variant [26]. To achieve range-variant autofocus, several extended PGA and MDA kernels have been developed [27,28,29,30,31,32]. Early work by Thompson et al. [27] investigated the dependence of the phase error on the radar incidence angle and developed the broadside PGA. In Bezvesilniy et al. [28], conventional MDA was used to estimate the QPE factor of each range sub-block, and the range-variant phase error was then represented by a double integration of the estimated quadratic phase coefficients. In Zhang et al. [10] and Fan et al. [29], the local maximum-likelihood weighted PGA (LML-WPGA) was proposed, which also approximates the trajectory deviations by range-variant phase error estimation. LML-WPGA was further extended to highly squinted SAR imaging in [30], where it is integrated with the Omega-k algorithm for UAV SAR imagery. Without a range blocking strategy, the range-dependent map-drift algorithm (RDMDA) was proposed in Zhang et al. [32]; it models the phase error as a linear function of the range coordinate and estimates the constant and linear coefficients of the QPE. It should be emphasized that, in the above approaches, the range dependence of the phase error in UAV SAR imagery has been well investigated, whereas the azimuth dependence is usually neglected and is difficult to represent accurately.
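The double-integration step attributed to [28] can be sketched as follows. Suppose local quadratic-phase estimates are available as samples of the phase-error curvature d²φ/dt² (rad/s²) at a few sub-aperture centers. Integrating the interpolated curvature twice recovers φ(t) up to a constant and a linear term, which the curvature cannot observe (and which only shift the image rather than defocus it). The test profile φ(t) = 3t² + 2t³, the nine sub-aperture centers, and the use of linear interpolation are assumptions chosen for illustration; this is not the processing chain of [28].

```python
import numpy as np


def cumtrapz0(y, x):
    """Cumulative trapezoidal integral of y(x), starting from 0 at x[0]."""
    out = np.zeros_like(y, dtype=float)
    out[1:] = np.cumsum(0.5 * (y[1:] + y[:-1]) * np.diff(x))
    return out


def phase_from_curvature(t_centers, curvature, t):
    """Rebuild a phase-error history (rad) on the grid t from curvature
    estimates (rad/s^2) given at sub-aperture centers t_centers."""
    c = np.interp(t, t_centers, curvature)  # piecewise-linear curvature model
    return cumtrapz0(cumtrapz0(c, t), t)


t = np.linspace(-1.0, 1.0, 2001)          # slow time (s)
true_phase = 3 * t**2 + 2 * t**3          # assumed phase error (rad)
t_centers = np.linspace(-1.0, 1.0, 9)     # hypothetical sub-aperture centers
curv_est = 6 + 12 * t_centers             # exact curvature of true_phase

rebuilt = phase_from_curvature(t_centers, curv_est, t)
# Remove the unobservable constant + linear terms before comparing.
diff = rebuilt - true_phase
diff -= np.polyval(np.polyfit(t, diff, 1), t)
print(f"max residual after detrending: {np.abs(diff).max():.2e} rad")
```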

On the other hand, given precise motion records from a high-precision INS, numerous azimuth-variant MOCO algorithms, such as the subaperture topography- and aperture-dependent (SATA) algorithm [33], the precise topography- and aperture-dependent (PTA) algorithm [34,35], and algorithms derived from PTA [36,37], have been developed based on an accurate calculation of the residual azimuth-variant phase error from INS records. However, such precise motion records are not available for UAV SAR imaging. As a result, the residual azimuth-variant phase error must also be compensated by the autofocus process. A few azimuth-variant autofocus algorithms have been proposed to deal with this problem. A feasible way is to divide the data into azimuth sub-blocks [38]. The azimuth-variance factor is then determined from the estimated local phase errors, and PGA or MDA kernels are modified to increase the phase error estimation precision of each subaperture. A multiscale autofocus algorithm was proposed by Cantalloube and Nahum [39] to derive the spatially variant phase error with local MDA, which requires the synthesis of many small SAR images. An azimuth-dependent PGA (APGA) algorithm was proposed by Zhou et al. [40], in which a weighted squint phase gradient autofocus (WSPGA) kernel is used to derive the local phase error function in each subaperture.
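The last step of such blocking schemes, turning per-block estimates into an azimuth-variance factor, amounts to fitting a parametric model. A minimal sketch, assuming a linear model γ(x) = γ0 + k·x for the QPE coefficient versus azimuth block center x and an ordinary least-squares fit; the block positions, true coefficients, and noise level below are hypothetical.

```python
import numpy as np


def fit_linear_azimuth_model(block_centers, block_qpe):
    """Least-squares fit of gamma(x) = gamma0 + k * x to per-block QPE
    estimates; returns (gamma0, k)."""
    k, gamma0 = np.polyfit(block_centers, block_qpe, 1)  # highest degree first
    return gamma0, k


rng = np.random.default_rng(0)
centers = np.linspace(-400.0, 400.0, 8)   # azimuth block centers (m), assumed
gamma0_true, k_true = 5.0, 0.01           # hypothetical model coefficients
noisy = gamma0_true + k_true * centers + rng.normal(0.0, 0.2, centers.size)

gamma0_hat, k_hat = fit_linear_azimuth_model(centers, noisy)
print(f"gamma0 = {gamma0_hat:.3f}, k = {k_hat:.5f}")
```

Shorter blocks sample the azimuth variation more finely but raise the noise of each per-block estimate (less integration gain), which is the block-size dilemma noted in the following paragraph.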

The principles of the above algorithms are very similar. Azimuth blocking strategies assume the spatial variation within each azimuth block to be negligible and apply conventional autofocus approaches, such as MDA and PGA, within each small azimuth block. A set of phase errors is then estimated from the azimuth blocks, and the azimuth dependence is represented by a parametric model [41]. Generally, the azimuth blocking strategy has two fundamental problems in real UAV SAR imagery. First, the phase functions of all subapertures must be combined carefully, which is not an easy task. Second, applying MDA or PGA to a small block leads to a large estimation bias because of the insufficient integration gain of the small block of data. At the same time, the underlying assumption requires each sub-block to be small enough to ensure that the motion error is spatially invariant within it. Consequently, there is usually a dilemma between selecting an optimal block size and effectively recovering the continuous phase error function. Moreover, the azimuth blocking strategy decreases the robustness and efficiency of autofocus approaches for real measured UAV SAR data. To replace the azimuth blocking strategy, an azimuth-variant autofocus scheme was proposed by Pu et al. [42], which estimates the azimuth-variant Doppler coefficients by applying a modified Wigner-Ville distribution (M-WVD). However, strongly dominant scatterers are required to prevent the estimation from diverging. A modified spatial-variant phase error matching MDA was proposed by Tang et al. [43], which retrieves the systematic azimuth-variant phase error by a parametric strategy. However, it is incapable of handling azimuth-variant phase errors. Semiparametric motion error models, such as the Fourier coefficient model proposed by Marston and Plotnick [44], rely on calculating image statistical metrics, but they incur a huge computational cost. In addition, it is worth noting that Torgrimsson et al. [45] proposed an efficient geometrical autofocus approach integrated into fast factorized back-projection (FFBP) [46]. Six independent trajectory parameters are adopted to refocus an image affected by spatially variant motion errors by means of a two-stage optimization approach. It has been demonstrated that this FFBP-based autofocus technique performs robustly in SAR data processing and is an efficient spatially variant autofocus algorithm. Based on the above research, we believe there is an urgent need for a robust spatially variant autofocus algorithm suitable for high-band UAV SAR imaging, which motivates the work in this paper.

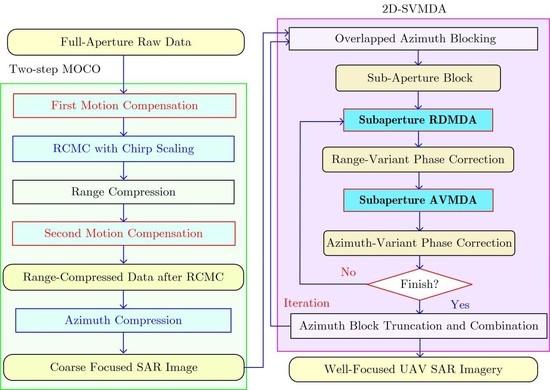

Aiming to solve the spatially variant phase error problem, we first develop a novel azimuth-variant map-drift algorithm (AVMDA) for autofocusing UAV SAR imagery. AVMDA is based on a parametric model that represents the azimuth dependence of the residual phase error. Unlike the standard MDA, AVMDA models the azimuth phase error as a linear function and proceeds in three main steps. First, the SAR data are divided into two subapertures. Second, based on the linear azimuth-variant phase model, the chirp-z transform (CZT) [47] is employed instead of the fast Fourier transform (FFT) to generate the frequency spectrum of each subaperture. Third, according to the criterion of maximum cross-correlation, a modified quadratic Newton solver is designed to estimate the coefficient of the azimuth-variant phase error efficiently. Because it inherits the image correlation process of MDA, AVMDA provides enhanced robustness for UAV SAR data with severe phase errors. To compensate both range- and azimuth-variant phase errors in strip-map UAV SAR imagery, RDMDA is integrated to estimate the range-variant component of the phase error. By sequentially combining RDMDA and AVMDA into a finite iterative flow, we achieve two-dimensional spatially variant autofocusing and obtain well-focused UAV SAR imagery. We term the whole algorithm the two-dimensional spatial-variant map-drift algorithm (2D-SVMDA). Extensive experiments with simulated and real measured data are conducted to demonstrate its performance.
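For readers unfamiliar with the CZT, the sketch below evaluates its definition directly: X[k] = Σ x[n] A^(-n) W^(nk) with A = exp(j2πf0/fs) and W = exp(-j2πΔf/fs), i.e., the spectrum on an arbitrary uniform frequency grid f0 + kΔf instead of the FFT's fixed grid k·fs/N. This freedom to choose the start frequency and the spacing is what makes the CZT useful when the frequency axis must be adapted to a phase model, as in AVMDA; how the paper maps its linear azimuth-variant model to CZT parameters is not reproduced here. The O(NM) direct evaluation, the function name `czt_direct`, and the test tone are assumptions for illustration (fast implementations use Bluestein's algorithm).

```python
import numpy as np


def czt_direct(x, m, f0, df, fs):
    """Direct O(N*M) chirp-z transform of x on the frequency grid f0 + k*df,
    k = 0..m-1 (Hz), for a sampling rate fs (Hz).

    Equivalent to X[k] = sum_n x[n] * A**(-n) * W**(n*k) with
    A = exp(2j*pi*f0/fs) and W = exp(-2j*pi*df/fs).
    """
    n = np.arange(x.size)
    freqs = f0 + df * np.arange(m)
    kernel = np.exp(-2j * np.pi * np.outer(freqs, n) / fs)
    return kernel @ x, freqs


fs, n_samp = 1000.0, 256
t = np.arange(n_samp) / fs
x = np.exp(2j * np.pi * 123.4 * t)  # test tone (hypothetical)

# With f0 = 0 and df = fs/N the CZT reduces to the ordinary FFT.
full, _ = czt_direct(x, n_samp, 0.0, fs / n_samp, fs)
# A zoomed, finer grid: 100-150 Hz in 0.1 Hz steps.
zoom, zoom_freqs = czt_direct(x, 501, 100.0, 0.1, fs)
peak_hz = zoom_freqs[np.argmax(np.abs(zoom))]
print(np.allclose(full, np.fft.fft(x)), f"{peak_hz:.1f} Hz")
```

The first call checks that the CZT contains the FFT as a special case; the second shows a grid that the FFT of the same 256 samples cannot provide (its bin spacing is about 3.9 Hz).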

The rest of this paper is organized as follows. Section 2 introduces the proposed AVMDA in detail, as the first part of 2D-SVMDA. Section 3 extends the algorithm to compensate both range- and azimuth-variant phase errors for strip-map UAV SAR imaging and introduces the proposed 2D-SVMDA. In Section 4, we present experimental results with simulated and real measured data sets in the X-band and Ka-band. Section 5 concludes the paper with the major findings.

4. Simulated and Real Measured Data Experiments

To validate the effectiveness of the proposed AVMDA and 2D-SVMDA autofocus strategies, four sets of experiments are performed in this section with simulated and real measured X-band and Ka-band SAR data. In the simulation experiments, we artificially introduce azimuth-variant and range-variant QPE into the simulated data. The introduced phase errors vary linearly in the azimuth and range directions, respectively; the linear coefficient of the azimuth-variant QPE is denoted by k, and that of the range-variant QPE is denoted by b.
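One way to picture the injected errors is as a QPE coefficient that varies linearly over the scene. The sketch below assumes the form φ(t; x, r) = [γ0 + k·x + b·(r - r_ref)]·t², where x is the target's azimuth position, r its slant range, t the slow time relative to the target's aperture center, and γ0 a constant QPE coefficient. The exact parameterization, units, and reference range used in the paper's simulations are not given in this section, so every name and value here is hypothetical.

```python
import numpy as np


def space_variant_qpe(x_az, r, t, gamma0, k, b, r_ref):
    """Hypothetical space-variant QPE model.

    Returns the phase error (rad) with shape (len(x_az), len(r), len(t)):
    phi = (gamma0 + k * x + b * (r - r_ref)) * t**2
    """
    gamma = gamma0 + k * x_az[:, None] + b * (r[None, :] - r_ref)  # rad/s^2
    return gamma[:, :, None] * t[None, None, :] ** 2


x_az = np.array([-100.0, 0.0, 100.0])   # azimuth positions (m)
r = np.array([1000.0, 1200.0])          # slant ranges (m)
t = np.array([-0.5, 0.0, 0.5])          # slow time (s)
phi = space_variant_qpe(x_az, r, t, gamma0=2.0, k=0.01, b=0.005, r_ref=1000.0)
# Each target's echo would be multiplied by np.exp(1j * phi[i, j]) before imaging.
print(phi[:, :, -1])  # phase error at the aperture edge for each target
```

With k = b = 0 the model collapses to the spatially invariant QPE that conventional MDA assumes.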

The measured data are provided by the National Lab of Radar Signal Processing and the Collaborative Innovation Center of Information Sensing and Understanding of Xidian University. No additional phase error is artificially added to the measured data; the defocusing in the images is mainly caused by the residual range- and azimuth-variant phase errors after conventional “two-step” MOCO. The imaging method used in the experiments is based on the range-Doppler algorithm (RDA). The computing platform is a Windows 7 (Microsoft Corporation, Redmond, Washington, USA) 64-bit operating system with a … GHz CPU, 32 GB of memory, and MATLAB (MathWorks Corporation, Natick, Massachusetts, USA) Version R2014a.

4.1. X-Band Simulation Experiments

The main simulation parameters of the X-band experiments are shown in Table 2. A two-dimensional dot matrix is designed to simulate a scene with strong scatterers. The size of the simulated data is 8192 × 8192 (azimuth × range). In this subsection, the purpose of the first simulation is to verify the performance of AVMDA. We presuppose an azimuth-variant QPE linear coefficient k = …, and the data are processed with conventional MDA and AVMDA for comparison. The dot matrix imaging results and their magnified sub-scenes are shown in Figure 6, in which (a) to (d) are the ideal point image, the image corrupted by the azimuth-variant error, the conventional MDA processed image, and the AVMDA processed image, respectively. Three points named “A”, “B”, and “C” are highlighted by yellow circles, and their azimuth impulse response functions are shown in Figure 7. To quantitatively evaluate the focusing improvement of the proposed AVMDA over the other algorithms, three metrics of the point impulse response functions are used: peak side-lobe ratio (PSLR), integrated side-lobe ratio (ISLR), and impulse response width (IRW). The results are shown in Table 3.
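PSLR, ISLR, and IRW are computed from an oversampled azimuth impulse response. Definitions vary slightly between papers; the sketch below assumes that the main lobe extends to the first nulls on either side of the peak, that ISLR is side-lobe energy over main-lobe energy, and that IRW is the -3 dB width obtained by linear interpolation, expressed in samples of the original (non-oversampled) grid. The function name `irf_metrics` and the ideal-sinc test are illustrative, not the evaluation code used for Table 3.

```python
import numpy as np


def irf_metrics(irf, oversample):
    """Return (PSLR dB, ISLR dB, IRW in original samples) of a 1-D impulse response."""
    p = np.abs(irf) ** 2
    p = p / p.max()
    peak = int(np.argmax(p))
    # Walk downhill from the peak to the first null on each side.
    left = peak
    while left > 0 and p[left - 1] < p[left]:
        left -= 1
    right = peak
    while right < p.size - 1 and p[right + 1] < p[right]:
        right += 1
    main = p[left:right + 1]
    side = np.concatenate([p[:left], p[right + 1:]])
    pslr = 10 * np.log10(side.max())  # peak power is 1 after normalization
    islr = 10 * np.log10(side.sum() / main.sum())
    # -3 dB (half-power) crossings, linearly interpolated between samples.
    above = np.nonzero(main >= 0.5)[0] + left
    i1, i2 = above[0], above[-1]
    x_left = i1 - (p[i1] - 0.5) / (p[i1] - p[i1 - 1])
    x_right = i2 + (p[i2] - 0.5) / (p[i2] - p[i2 + 1])
    irw = (x_right - x_left) / oversample
    return pslr, islr, irw


osf = 16
u = np.arange(-32, 32, 1 / osf)  # resolution cells, oversampled 16x
pslr, islr, irw = irf_metrics(np.sinc(u), osf)
print(f"PSLR {pslr:.2f} dB, ISLR {islr:.2f} dB, IRW {irw:.3f} cells")
```

For the unweighted sinc this gives values close to the textbook -13.3 dB PSLR and 0.886-cell IRW; the ISLR depends on how far the side-lobe window extends.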

It can be seen that the azimuth-variant phase error severely defocuses the points. Because conventional MDA cannot handle the azimuth-variant phase error, it is unable to refocus all of the defocused points. In contrast, by precisely estimating the linear azimuth-variant QPE factor, AVMDA refocuses the points to a quality very close to the ideally focused ones.

To verify the performance of 2D-SVMDA, a second experiment is designed by simultaneously introducing range- and azimuth-variant QPE with the linear coefficients … and …. Because of the combined impact of the range- and azimuth-variant phase errors, it is difficult to remove all the phase errors with conventional MDA or RDMDA. To compare the compensation performance of the different autofocus methods, point target simulation results and magnified sub-scenes are shown in Figure 8, in which (a) to (d) are the image corrupted by range- and azimuth-variant errors, the conventional MDA processed image, the RDMDA processed image, and the 2D-SVMDA processed image, respectively. The azimuth impulse response functions and the corresponding quantitative results of Points A, B, and C (highlighted in Figure 8) are shown in Figure 9 and Table 4.

It can be seen that the image is blurred by the range- and azimuth-variant phase errors. Serious defocusing remains in the results processed by conventional MDA and RDMDA; only the proposed 2D-SVMDA can refocus the whole scene in this situation. With the iterative application of RDMDA and AVMDA, the induced range- and azimuth-variant phase errors decrease gradually, so the autofocus result of 2D-SVMDA is very close to the ideal one.
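Why alternating two partial estimators helps can be seen in a deliberately simplified toy. Here the "range" and "azimuth" steps are plain one-dimensional least-squares fits of QPE coefficients measured at hypothetical target positions, not RDMDA or AVMDA: each step fits its own linear coefficient (plus an offset) after removing the current estimate of the other component. Because the target positions are assumed to be correlated in azimuth and range, a single pass leaves cross-talk between k and b, and the residual shrinks over successive iterations. All positions and coefficients are assumptions.

```python
import numpy as np


def alternate_fit(x, r, c, n_iter=40):
    """Toy alternating estimation of c ~= c0 + k*x + b*r.

    'Range' step: with k fixed, fit (c - k*x) against r -> b, c0.
    'Azimuth' step: with b fixed, fit (c - b*r) against x -> k, c0.
    Returns the final (c0, k, b) and the RMS residual after each iteration.
    """
    k = b = 0.0
    history = []
    for _ in range(n_iter):
        b, c0 = np.polyfit(r, c - k * x, 1)
        k, c0 = np.polyfit(x, c - b * r, 1)
        resid = c - (c0 + k * x + b * r)
        history.append(float(np.sqrt(np.mean(resid**2))))
    return c0, k, b, history


rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 200)                   # normalized azimuth positions
r = 0.6 * x + 0.4 * rng.uniform(-1.0, 1.0, 200)   # correlated normalized ranges
c = 0.5 + 2.0 * x - 1.0 * r                       # hypothetical QPE coefficients

c0, k, b, hist = alternate_fit(x, r, c)
print(f"k = {k:.4f}, b = {b:.4f}, RMS after 1 and 40 iterations: {hist[0]:.3e}, {hist[-1]:.3e}")
```

Each step is an exact minimization over its own parameters, so the residual never increases; the convergence rate depends on how strongly the two coordinates are correlated.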

4.2. Ka-Band Simulation Experiments

Similar to the X-band simulation experiments, Ka-band simulation experiments are designed to verify the performance of the proposed AVMDA and 2D-SVMDA at high frequencies, such as the millimeter-wave band. The main simulation parameters of the Ka-band experiments are shown in Table 5. The size of the simulated data is 8192 × 8192 (azimuth × range). In the first experiment, an azimuth-variant QPE linear coefficient k = … is introduced into the simulated data. The dot matrix imaging results and their magnified sub-scenes are shown in Figure 10, in which (a) to (d) are the ideal point image, the image corrupted by the azimuth-variant error, the conventional MDA processed image, and the AVMDA processed image, respectively. The azimuth impulse response functions and the corresponding quantitative results of Points A, B, and C (highlighted in Figure 10) are shown in Figure 11 and Table 6. These results demonstrate that AVMDA performs well in dealing with azimuth-variant QPE for high-frequency-band, high-resolution SAR imaging.

The second experiment is designed to verify the performance of the proposed 2D-SVMDA in the Ka-band. The presupposed range- and azimuth-variant QPE linear coefficients are b = … and k = …, respectively. The point target simulation results and magnified sub-scenes are shown in Figure 12, in which (a) to (d) are the image corrupted by range- and azimuth-variant errors, the conventional MDA processed image, the RDMDA processed image, and the 2D-SVMDA processed image, respectively. The azimuth impulse response functions and the corresponding quantitative results of Points A, B, and C (highlighted in Figure 12) are shown in Figure 13 and Table 7. As can be seen, 2D-SVMDA performs best in dealing with range- and azimuth-variant QPE, because it compensates both components of the two-dimensional spatially variant phase error.

4.3. X-Band Real Measured Data Experiments

In this subsection, an X-band real measured UAV SAR experiment is presented. The raw data were collected by an experimental UAV SAR system. The main SAR system parameters are listed in Table 8, with 16,384 azimuth samples. The radar platform is equipped with a medium-accuracy IMU and a GPS receiver with an output rate of 5 Hz. The altitude is controlled to an accuracy of 3 m, and the IMU provides trajectory information with a velocity accuracy of 0.1 m/s in all three dimensions. Because of the limited INS accuracy, residual range- and azimuth-variant phase errors remain after a standard “two-step” MOCO with the INS data, so an autofocus algorithm is needed to generate high-quality images.

An image block of size 900 × 1800 (azimuth × range) is selected and processed by the different autofocus strategies for comparison. This image block contains three groups of calibration points on the right side of the scene. The refocused results are shown in Figure 14, in which (a) to (d) are processed with INS-only “two-step” MOCO, conventional MDA, RDMDA, and the proposed 2D-SVMDA, respectively. The image generated by INS-only MOCO is clearly blurred and geometrically distorted owing to the lack of accurate motion measurements. To compare the performance of the different algorithms more clearly, two sub-scenes with distinct features are magnified in Figure 15 and Figure 16, respectively. The sub-scene in Figure 15 contains a man-made structure. It is seriously defocused with INS-only MOCO and partially defocused with conventional MDA and RDMDA, while with 2D-SVMDA the surface features are focused and distinguishable.

Figure 16 shows three groups of calibration points, which make it easier to compare the different autofocus algorithms; all the calibration points can be distinguished only in the 2D-SVMDA processed image. For a further comparison, the azimuth impulse response functions of two calibration points in Figure 16 are shown in Figure 17, and the quantitative results for Point A and Point B are listed in Table 9. By precisely compensating the range- and azimuth-variant phase errors, 2D-SVMDA focuses the observed points with high precision. It can therefore be concluded that the proposed 2D-SVMDA autofocus approach performs robustly on X-band UAV SAR imagery.

4.4. Ka-Band Real Measured Data Experiments

In this subsection, we also present a Ka-band measured SAR experiment, in which the raw data were collected by a trial airborne SAR system. The main SAR system parameters are shown in Table 10, with 16,384 azimuth samples. Because the weather during the flight test was poor, the measured data are corrupted by heavy motion errors. After conventional “two-step” MOCO, the residual azimuth-variant motion error is still so large that it must be removed for accurate imaging. Since the main purpose here is to remove the residual range- and azimuth-variant motion errors with the proposed 2D-SVMDA autofocus approach, we compare two-step INS-only MOCO, conventional MDA, RDMDA, and the proposed 2D-SVMDA.

An image block of size 2048 × 4096 (azimuth × range), which contains man-made constructions, is selected. The comparison results of the different autofocus strategies are shown in Figure 18. Two sub-scenes containing a single construction and point-like targets are highlighted by yellow rectangles in Figure 19, and they are magnified in Figure 20 to compare the different autofocus algorithms more clearly. Because of the severe motion deviations, the image processed by “two-step” INS-only MOCO is seriously blurred by the residual range- and azimuth-variant phase errors, while conventional MDA and RDMDA only partly refocus the image. In contrast, by modeling the QPE coefficients of the residual phase errors as linear functions of range and azimuth (slow time), the proposed 2D-SVMDA yields a well-focused image. Furthermore, Figure 21 compares the azimuth impulse response functions of the two point-like targets marked in Figure 20. Statistical indicators, including PSLR, ISLR, and IRW, are listed in Table 11 to measure the impulse response performance numerically, and they quantitatively confirm the improvement achieved by the proposed method.