Simulation and Analysis of Photogrammetric UAV Image Blocks—Influence of Camera Calibration Error

Abstract

1. Introduction

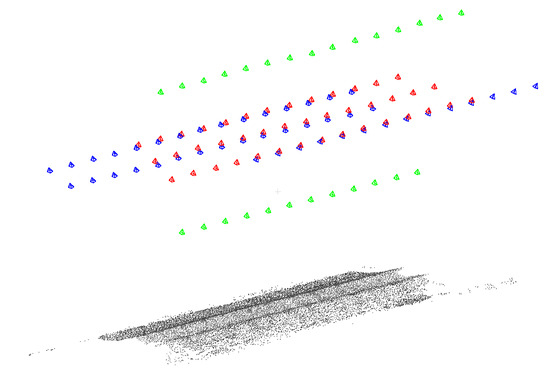

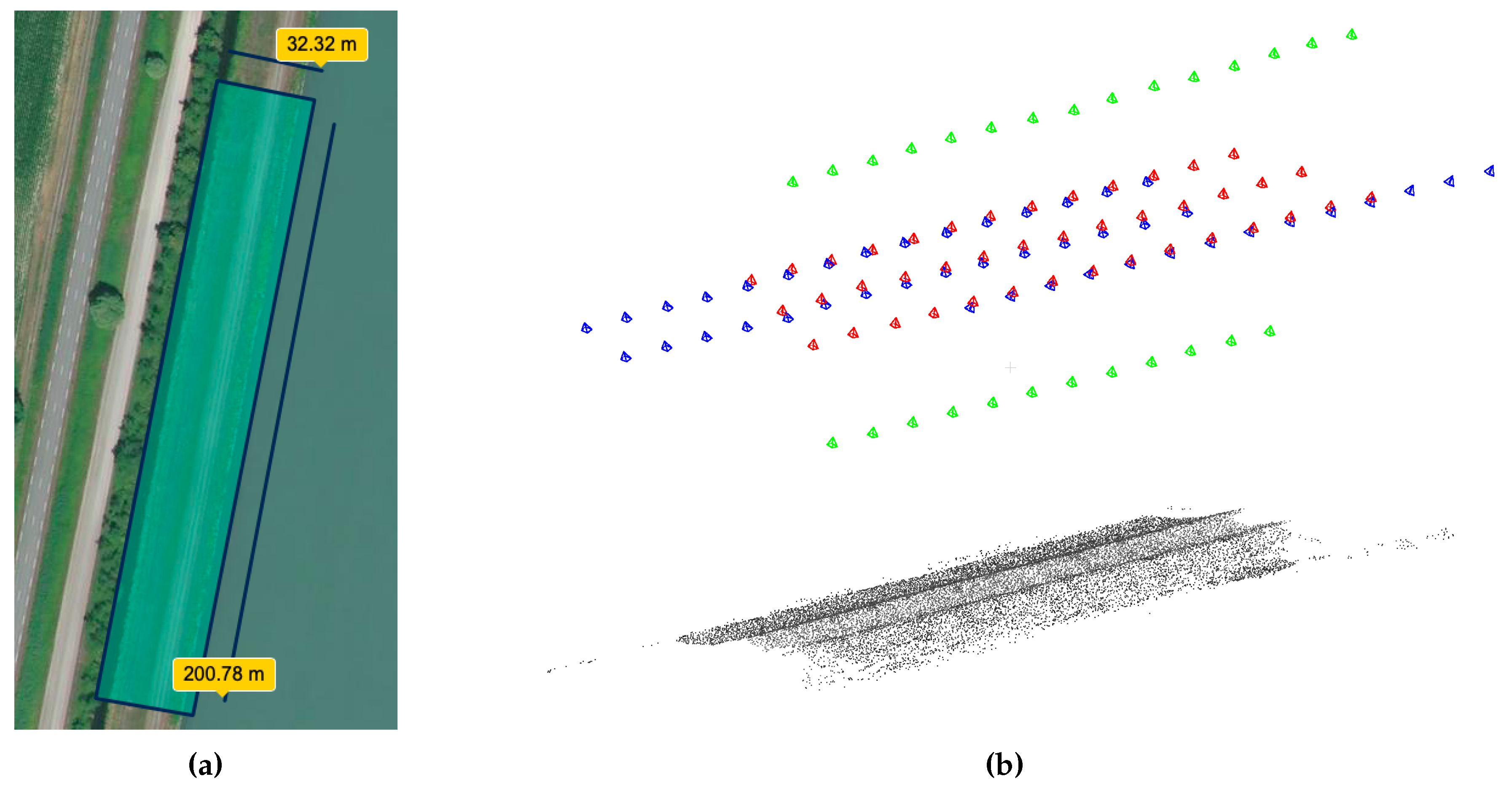

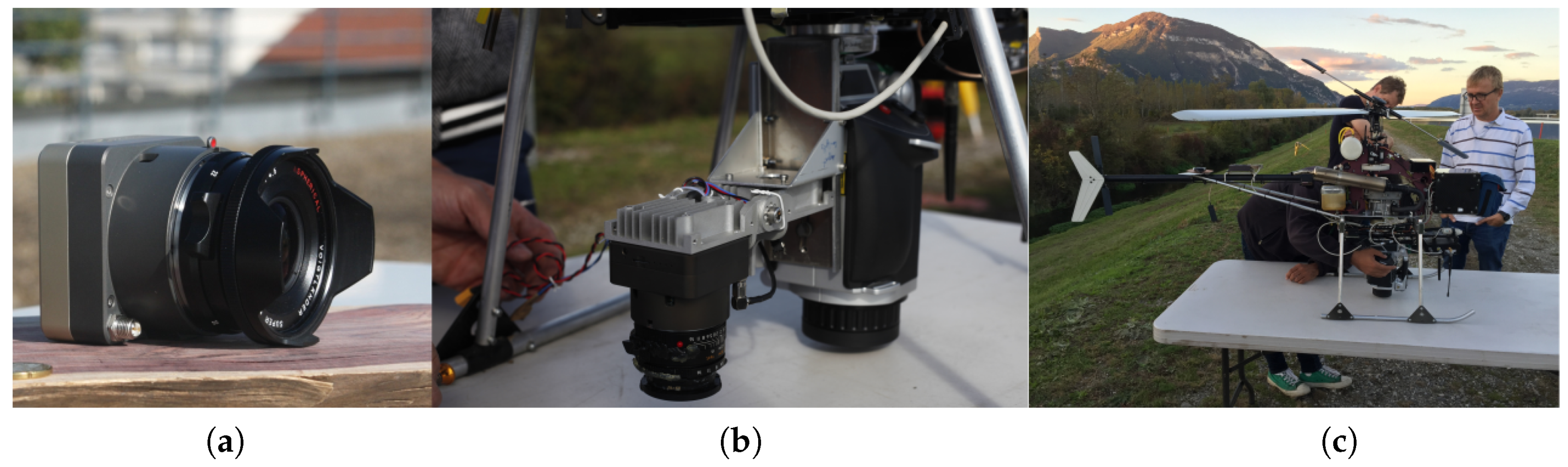

2. Data Generation and Research Design

2.1. Generation of a Synthetic Dataset

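- $f$: the focal length;
- $(x_0, y_0)$: the principal point;
- $(K_1, K_2, K_3)$: three radial distortion coefficients, with the radial distortion expressed as:

$$\Delta r = K_1 r^3 + K_2 r^5 + K_3 r^7, \qquad r^2 = \bar{x}^2 + \bar{y}^2,$$

where $\bar{x} = x - x_0$ and $\bar{y} = y - y_0$ are image coordinates relative to the principal point;

- $(P_1, P_2)$: tangential distortion coefficients, with the tangential distortion expressed as:

$$\Delta x_t = P_1\left(r^2 + 2\bar{x}^2\right) + 2P_2\,\bar{x}\bar{y}, \qquad \Delta y_t = 2P_1\,\bar{x}\bar{y} + P_2\left(r^2 + 2\bar{y}^2\right);$$

- $(b_1, b_2)$: affine distortion coefficients, with the affine distortion expressed as:

$$\Delta x_a = b_1\bar{x} + b_2\bar{y}, \qquad \Delta y_a = 0.$$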
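To make the distortion terms concrete, here is a minimal Python sketch that evaluates the total image-plane displacement of a Fraser-type camera model at a given pixel. It assumes the widely used form with radial terms in $r^3$, $r^5$, $r^7$, two tangential (decentring) terms and two affine terms; the function name `fraser_displacement` and the parameter names `K`, `P`, `b`, `x0`, `y0` are hypothetical. Whether the displacement is added to or subtracted from the ideal coordinates depends on the software convention, so the sketch returns only the displacement.

```python
import numpy as np

def fraser_displacement(x, y, x0, y0, K=(0.0, 0.0, 0.0), P=(0.0, 0.0), b=(0.0, 0.0)):
    """Return (dx, dy), the image-plane displacement at (x, y), in the units of x and y.

    Assumed Fraser-type model (hypothetical parameter names):
      radial      dr = K1 r^3 + K2 r^5 + K3 r^7, along the radial direction
      tangential  dx = P1 (r^2 + 2 xb^2) + 2 P2 xb yb,  dy = 2 P1 xb yb + P2 (r^2 + 2 yb^2)
      affine      dx = b1 xb + b2 yb,                   dy = 0
    where (xb, yb) are coordinates relative to the principal point (x0, y0).
    """
    xb = np.asarray(x, dtype=float) - x0
    yb = np.asarray(y, dtype=float) - y0
    r2 = xb**2 + yb**2
    K1, K2, K3 = K
    P1, P2 = P
    b1, b2 = b
    # dr / r = K1 r^2 + K2 r^4 + K3 r^6, so the radial term scales xb and yb directly
    radial = K1 * r2 + K2 * r2**2 + K3 * r2**3
    dx = xb * radial + P1 * (r2 + 2 * xb**2) + 2 * P2 * xb * yb + b1 * xb + b2 * yb
    dy = yb * radial + 2 * P1 * xb * yb + P2 * (r2 + 2 * yb**2)
    return dx, dy

# A point 1000 px from the principal point with only K1 = 1e-9 set
dx, dy = fraser_displacement(1000.0, 0.0, 0.0, 0.0, K=(1e-9, 0.0, 0.0))  # dx ≈ 1.0 px, dy = 0
```

Working with coordinates relative to the principal point keeps all three groups of terms in one consistent frame, which is also how a synthetic dataset can perturb one group of parameters while leaving the others untouched.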

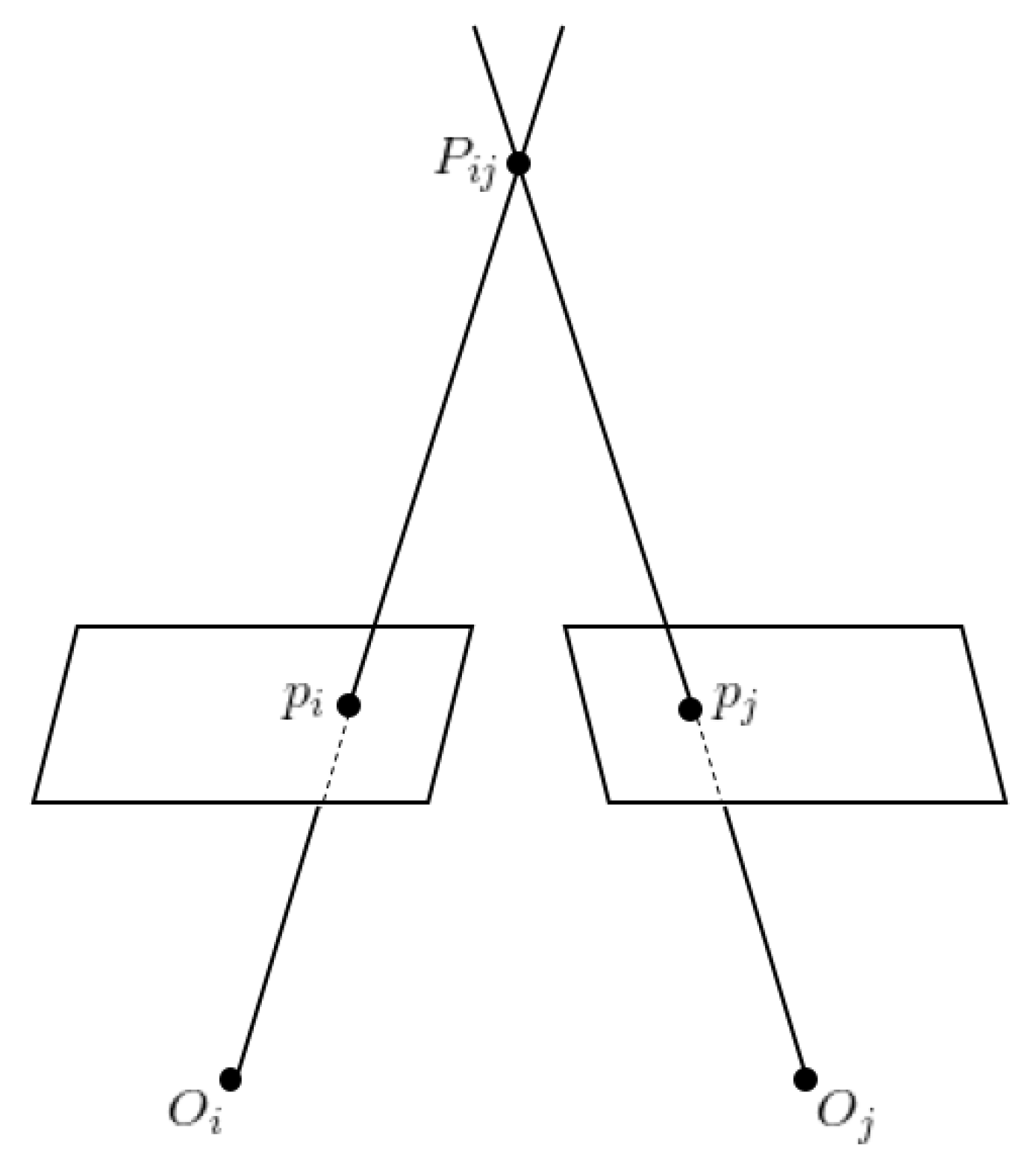

2.2. Problem Simulation and Result Evaluation

3. Experiments and Results

3.1. Erroneous Focal Length

3.2. Gradually Varied Focal Length

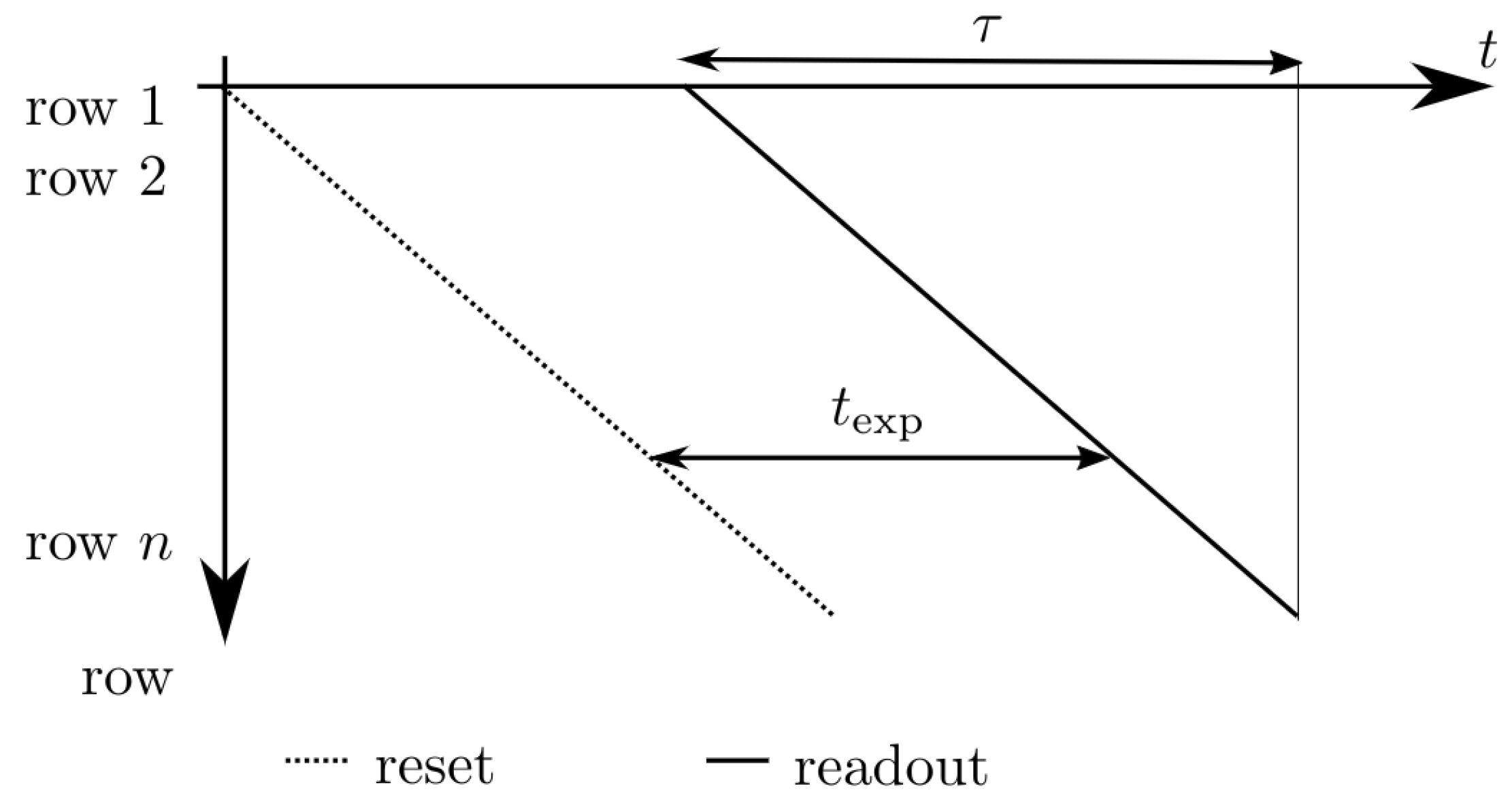

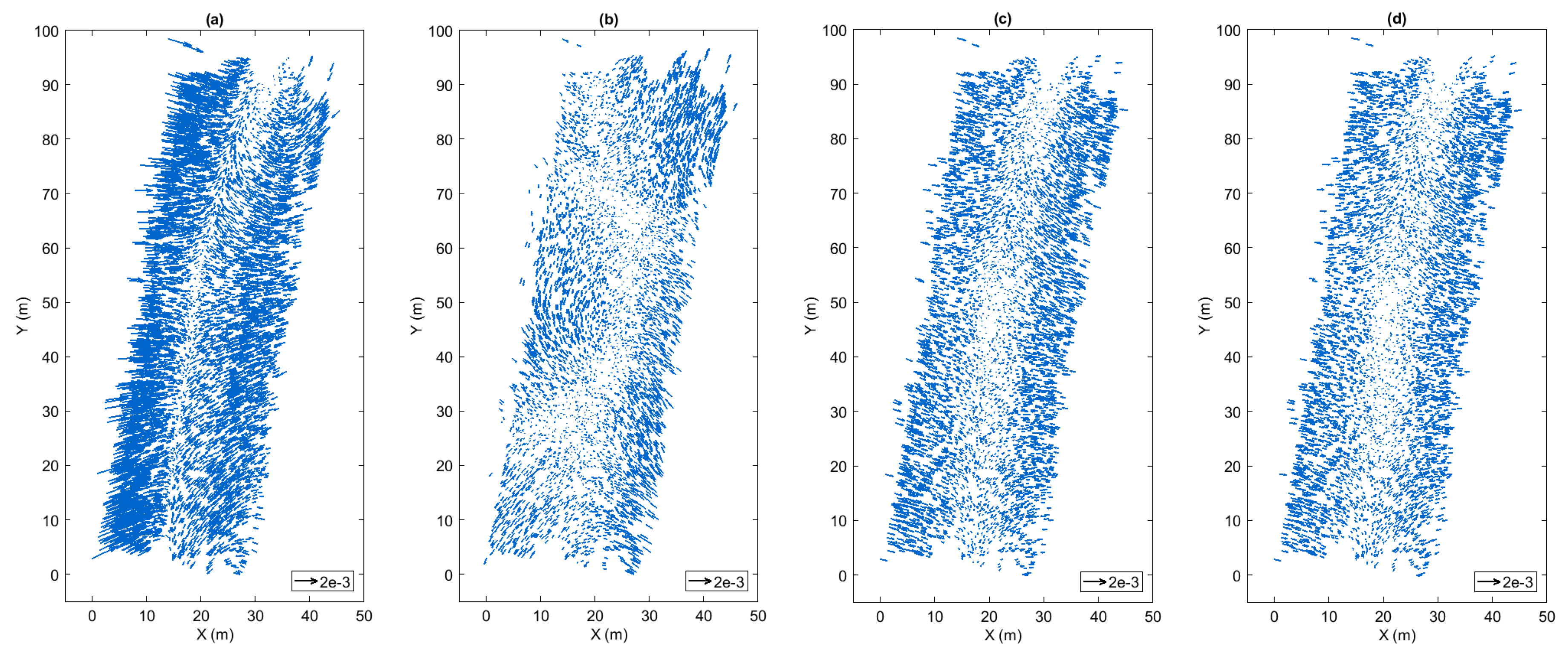

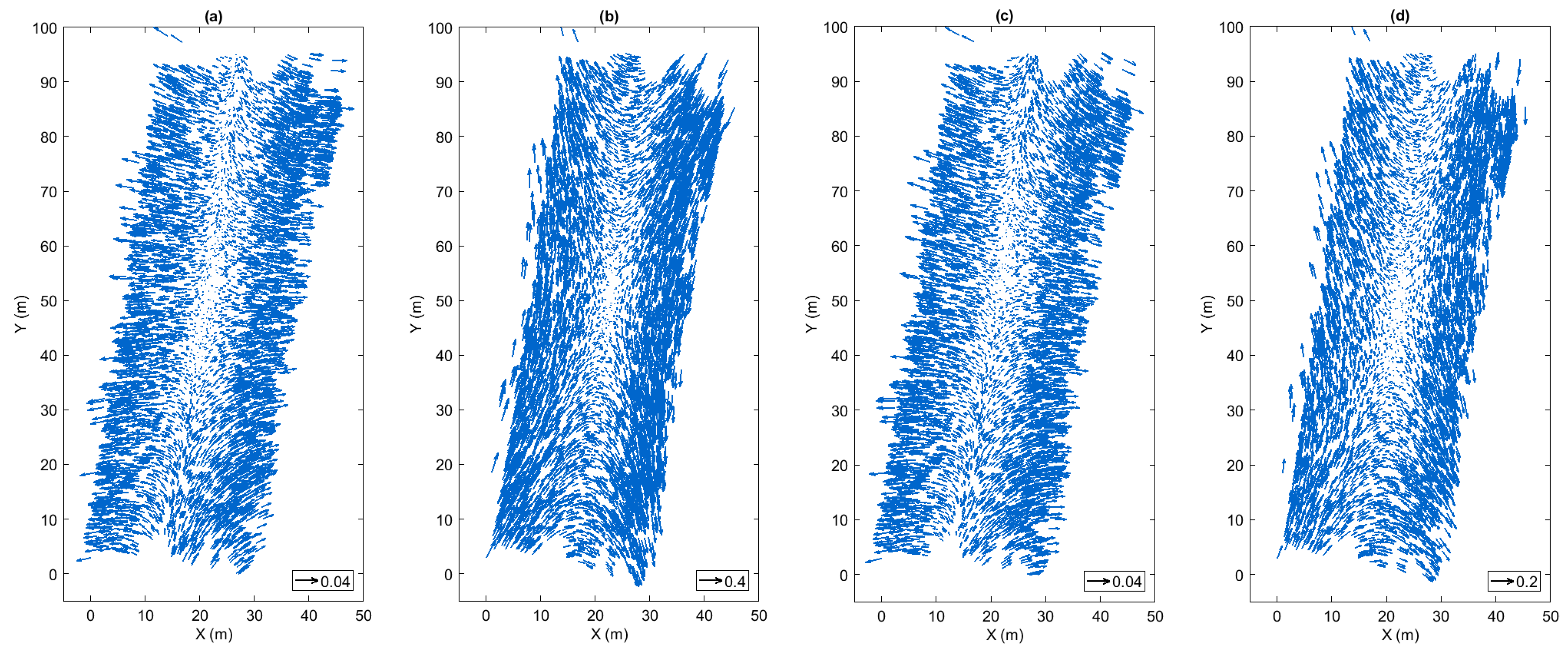

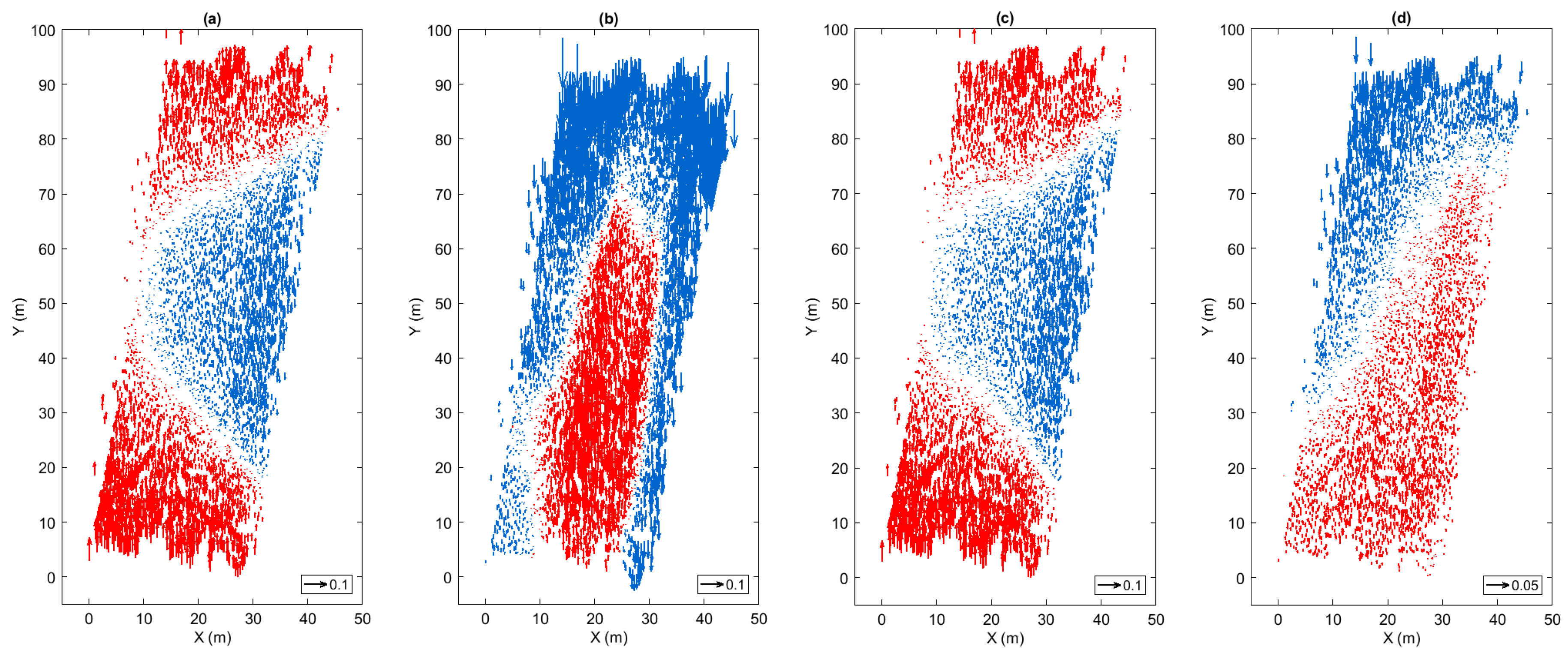

3.3. Error Coming from Rolling Shutter Effect

- $T$: time interval between two consecutive images, 2.5 s, which matches the real acquisition conditions of the presented dataset;
- readout time of the rolling shutter camera: 50 ms, a mid-range value among widely used rolling shutter cameras;
- camera translational velocity: about 3 m/s, which matches the real acquisition conditions of the presented dataset; for each image $i$, the instantaneous velocity is calculated as the displacement between image $i$ and its adjacent image divided by the time interval $T$;
- camera rotational velocity: the rotation axis is generated randomly, and the amplitude of the rotational velocity follows a Gaussian distribution with mean 0.02°/s and standard deviation 0.016°/s; these parameter values come from IMU data of previous lab acquisitions. In practice, the rotation axis depends on the type of UAV, the installation and specification of the motor, and the resulting vibration. It is randomized here so that a general conclusion can be drawn without being limited to specific conditions.
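The parameters listed above can be combined into a per-row pose model for a rolling shutter image. The following minimal Python sketch assumes constant translational and rotational velocities during readout, that row 0 is exposed at the image timestamp and the last row one readout time later, a forward difference (image $i$ to image $i+1$) for the velocity, a rotation axis drawn uniformly on the unit sphere, and the composition order $R = \Delta R \, R_0$. All function names, the sensor height `n_rows` and these conventions are hypothetical illustrations, not the authors' implementation.

```python
import numpy as np

def rotation_about_axis(axis, angle_rad):
    """Rodrigues' formula: rotation matrix for angle_rad about the unit vector axis."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    K = np.array([[0.0, -a[2], a[1]],
                  [a[2], 0.0, -a[0]],
                  [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(angle_rad) * K + (1.0 - np.cos(angle_rad)) * (K @ K)

def translational_velocities(centers, T=2.5):
    """Velocity of image i: displacement to image i+1 divided by T (assumed forward difference)."""
    c = np.asarray(centers, dtype=float)
    return (c[1:] - c[:-1]) / T

def sample_rotational_velocity(rng, mean_deg_s=0.02, std_deg_s=0.016):
    """Random rotation axis (uniform on the sphere) and Gaussian angular-rate amplitude in deg/s."""
    axis = rng.normal(size=3)
    axis /= np.linalg.norm(axis)
    return axis, rng.normal(mean_deg_s, std_deg_s)

def row_pose(C0, R0, v, axis, omega_deg_s, row, n_rows, readout=0.05):
    """Camera centre and rotation for one image row under constant velocities during readout."""
    t = readout * row / (n_rows - 1)          # exposure delay of this row, in seconds
    C = np.asarray(C0, dtype=float) + np.asarray(v, dtype=float) * t
    dR = rotation_about_axis(axis, np.deg2rad(omega_deg_s) * t)
    return C, dR @ np.asarray(R0, dtype=float)

rng = np.random.default_rng(0)
v = translational_velocities([[0.0, 0.0, 50.0], [7.5, 0.0, 50.0]])[0]   # 3 m/s along x
axis, omega = sample_rotational_velocity(rng)
C_last, R_last = row_pose([0.0, 0.0, 50.0], np.eye(3), v, axis, omega, row=3999, n_rows=4000)
print(C_last - np.array([0.0, 0.0, 50.0]))   # roughly 0.15 m along x for the last row
```

The sketch also shows why translation dominates under these settings: over one 50 ms readout the camera centre moves about 3 m/s × 0.05 s = 0.15 m, whereas a 0.02°/s rotation turns the camera by only 0.001° (about 1.7 × 10⁻⁵ rad), which at a 50 m flying height corresponds to roughly 50 m × 1.7 × 10⁻⁵ ≈ 0.9 mm on the ground.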

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Flight | 50-na | 3070-na | 50-ob |
|---|---|---|---|
| Number of images | 42 | 27 | 44 |
| Height (m) | 50 | 30, 70 | 50 |
| Orientation | nadir | nadir | oblique |
| Number of strips | 3 | 2 | 3 |
| Forward overlap (%) | 80 | 80 | 80 |
| Side overlap (%) | 70 | 70 | 70 |
| GCP horizontal accuracy (mm) | 1.3 | 1.3 | 1.3 |
| GCP vertical accuracy (mm) | 1 | 1 | 1 |
| Camera focal length (mm) | 35 | 35 | 35 |
| GSD (mm) | 10 | 6, 14 | 10 |
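The GSD values in the flight table can be checked against the nadir pinhole relation $\mathrm{GSD} = H \cdot p / f$, where $H$ is the flying height, $f$ the focal length and $p$ the pixel pitch. The pixel pitch is not stated here; the sketch below backs it out from the 50 m / 10 mm case (about 0.007 mm, i.e. 7 µm), so that value is an inference, and the helper names are hypothetical.

```python
def gsd_mm(height_m, focal_mm, pixel_mm):
    """Ground sampling distance in mm for nadir imagery: GSD = H * p / f."""
    return height_m * 1000.0 * pixel_mm / focal_mm

def pixel_size_from_gsd(gsd, height_m, focal_mm):
    """Invert the same relation to recover the pixel pitch in mm."""
    return gsd * focal_mm / (height_m * 1000.0)

p = pixel_size_from_gsd(10.0, 50.0, 35.0)   # inferred pixel pitch, about 0.007 mm
for h in (30.0, 50.0, 70.0):
    print(h, round(gsd_mm(h, 35.0, p), 1))
```

With the inferred pitch, the 30 m and 70 m heights give 6 mm and 14 mm, consistent with the GSD row of the 3070-na flight; the linear dependence on $H$ is also why that flight mixes two GSDs in one block.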

Flight orders: Order 1 = 50-na, 50-ob, 3070-na; Order 2 = 50-ob, 50-na, 3070-na; Order 3 = 50-na, 3070-na, 50-ob. Combinations not evaluated under a given order are omitted.

| Flights | Combination | Focal length variation (pixel) | Order | RMSE xy (cm) | RMSE z (cm) | RMSE xyz (cm) | STD xy (cm) | STD z (cm) | STD xyz (cm) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 50-na | 1.11 | 1 | 0.02 | 1.01 | 1.01 | 0.01 | 0.13 | 0.13 |
| 1 | 50-ob | 1.21 | 2 | 0.05 | 0.05 | 0.07 | 0.02 | 0.04 | 0.03 |
| 2 | 50-na+50-ob | 2.36 | 1 | 0.02 | 0.09 | 0.09 | 0.01 | 0.04 | 0.04 |
| 2 | 50-na+50-ob | 2.36 | 2 | 0.01 | 0.01 | 0.02 | 0.01 | 0.01 | 0.01 |
| 2 | 50-na+3070-na | 1.89 | 3 | 0.05 | 1.08 | 1.08 | 0.00 | 0.14 | 0.14 |
| 3 | 50-na+50-ob+3070-na | 3.10 | 1 | 0.02 | 0.11 | 0.11 | 0.00 | 0.06 | 0.06 |
| 3 | 50-na+50-ob+3070-na | 3.10 | 2 | 0.01 | 0.03 | 0.04 | 0.00 | 0.03 | 0.02 |
| 3 | 50-na+50-ob+3070-na | 3.10 | 3 | 0.03 | 0.17 | 0.17 | 0.01 | 0.05 | 0.05 |
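How the RMSE and STD columns of the focal-length table are computed is not spelled out in this excerpt. The following minimal Python sketch uses one common set of assumed definitions: RMSE from squared check-point differences, and STD as the population standard deviation of the planimetric error magnitude, the signed height error and the 3D error magnitude. The function name `accuracy_stats` and these definitions are assumptions, not the authors' stated procedure.

```python
import numpy as np

def accuracy_stats(est, ref):
    """RMSE and STD of check-point differences (same units as the input), split into xy, z, xyz.

    Assumed definitions:
      RMSE_xy  = sqrt(mean(dx^2 + dy^2)),  RMSE_z = sqrt(mean(dz^2)),
      RMSE_xyz = sqrt(mean(dx^2 + dy^2 + dz^2));
      STD = population standard deviation of |d_xy|, dz and |d_xyz| respectively.
    """
    d = np.asarray(est, dtype=float) - np.asarray(ref, dtype=float)   # shape (n_points, 3)
    errors = {
        "xy": np.hypot(d[:, 0], d[:, 1]),    # planimetric error magnitude
        "z": d[:, 2],                        # signed height error
        "xyz": np.linalg.norm(d, axis=1),    # 3D error magnitude
    }
    rmse = {k: float(np.sqrt(np.mean(e**2))) for k, e in errors.items()}
    std = {k: float(np.std(e)) for k, e in errors.items()}
    return rmse, std

# Two hypothetical check points, coordinates in cm
rmse, std = accuracy_stats([[1.0, 0.0, 0.0], [0.0, 0.0, 2.0]], [[0.0, 0.0, 0.0]] * 2)
```

Passing coordinates in centimetres yields statistics in centimetres, matching the units of the table. Separating a bias-sensitive measure (RMSE) from a spread measure (STD) helps distinguish a systematic shift, such as a height offset caused by an erroneous focal length, from random noise.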

| Case | Planimetry (cm) | Altimetry (cm) | 3D (cm) |
|---|---|---|---|
| Rotation, case (a) | 0.07 ± 0.03 | 0.19 ± 0.19 | 0.20 ± 0.12 |
| Rotation, case (b) | 0.02 ± 0.01 | 0.09 ± 0.08 | 0.09 ± 0.05 |
| Rotation, case (c) | 0.03 ± 0.01 | 0.01 ± 0.01 | 0.03 ± 0.01 |
| Rotation, case (d) | 0.02 ± 0.01 | 0.01 ± 0.01 | 0.03 ± 0.01 |
| Translation, case (a) | 1.44 ± 0.62 | 2.61 ± 2.50 | 2.98 ± 1.51 |
| Translation, case (b) | 18.23 ± 7.93 | 5.36 ± 5.14 | 19.00 ± 7.88 |
| Translation, case (c) | 1.44 ± 0.61 | 2.52 ± 2.43 | 2.90 ± 1.46 |
| Translation, case (d) | 6.88 ± 2.97 | 0.71 ± 0.77 | 6.93 ± 2.97 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, Y.; Rupnik, E.; Meynard, C.; Thom, C.; Pierrot-Deseilligny, M. Simulation and Analysis of Photogrammetric UAV Image Blocks—Influence of Camera Calibration Error. Remote Sens. 2020, 12, 22. https://doi.org/10.3390/rs12010022