Reliable and Efficient UAV Image Matching via Geometric Constraints Structured by Delaunay Triangulation

Abstract

1. Introduction

2. Principle for the Design of the Proposed Algorithm

- For the first issue, selecting match pairs before feature matching is an efficient way to decrease the number of image pairs. In our previous work [36], the rough POS of images and the mean elevation of test sites were used to compute image footprints and determine overlapping match pairs. Compared with an exhaustive matching strategy, match pair selection dramatically decreases the number of match pairs and reduces the total time cost of feature matching. However, as demonstrated in the tests [36], the number of selected match pairs is still very large when only the overlap criterion is used; for example, 18,283 match pairs were retained for the UAV dataset of 750 images. Thus, the efficiency of feature matching for a single image pair should also be addressed, which relates to the second and third issues of image matching.

- For the second issue, an efficient pre-filter step can be designed to remove obvious outliers and increase the inlier ratio of initial matches. In our previous work [33], a two-stage geometric verification algorithm was designed for outlier removal of UAV images. In the filtering stage, obvious outliers are removed by a local consistency analysis of their projected motions, which increases the inlier ratio of initial matches; in the verification stage, retained matches are refined based on the global geometric constraint obtained by fundamental matrix estimation. This method can address the second issue; however, it depends on extra auxiliary information, i.e., the rough POS of images and the mean elevation.

- For the third issue, Jiang et al. [31] designed an image matching algorithm for both rigid and non-rigid images, in which a photometric constraint based on the virtual line descriptor (VLD) and a geometric constraint based on the spatial angular order (SAO) are implemented using neighboring structures derived from the Delaunay triangulation. Initial matches are sorted according to their dissimilarity scores computed using these two constraints, and outlier removal is conducted in a hierarchical manner in which matches with high dissimilarity scores are removed first. However, due to the high resolution and large dimensions of UAV images, this method incurs very high time costs to achieve a scale-invariant photometric constraint.
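To put the first issue in numbers, the short calculation below compares the 18,283 retained pairs quoted above with the number of pairs an exhaustive strategy would produce for 750 images. The variable names are illustrative only; the two input figures come from the text.

```python
from math import comb

n_images = 750            # size of the UAV dataset quoted above
selected_pairs = 18_283   # pairs retained by the overlap criterion, as reported in [36]

exhaustive_pairs = comb(n_images, 2)              # every unordered image pair
retained_ratio = selected_pairs / exhaustive_pairs

print(exhaustive_pairs)           # 280875
print(f"{retained_ratio:.1%}")    # 6.5%
```

Even at roughly 6.5% of the exhaustive count, more than eighteen thousand pairs remain, which is why the per-pair matching cost still matters.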

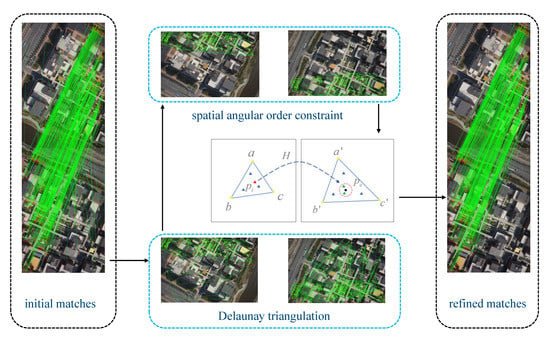

3. UAV Image Matching Based on DTSAO-RANSAC

3.1. Delaunay Triangulation from Initial Matches

3.2. Geometric Constraints Structured by Delaunay Triangulation

- Affine transformation estimation. Using the three non-collinear point pairs of the two corresponding triangles, the affine transformation matrix H from the left triangle to the right triangle is estimated.

- Candidate match searching. For one feature point on the left image (shown as a red triangle), its predicted location on the right image (shown as a black circle) is computed by applying H. The feature points of Q that fall inside the dashed red circle, centered at the predicted location with radius r, are taken as the candidate matches of this feature point.

- Expanded match determination. The feature point is compared with each candidate feature by computing the SIFT descriptor distance, which is defined as the cosine angle of the two feature vectors. Finally, the feature pair with the smallest distance is labeled as an expanded match when its distance is not greater than a specified distance threshold.
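The three steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the "cosine angle" distance is interpreted as the angle between the two descriptor vectors, and a brute-force scan stands in for an indexed neighbor search.

```python
import math

def affine_from_triangles(src, dst):
    """Solve the 2x3 affine matrix that maps three source points onto three destination points."""
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        raise ValueError("triangle vertices are collinear")
    rows = []
    for k in range(2):  # k = 0 solves the x' row, k = 1 the y' row (Cramer's rule)
        u1, u2, u3 = dst[0][k], dst[1][k], dst[2][k]
        a = (u1 * (y2 - y3) - y1 * (u2 - u3) + (u2 * y3 - u3 * y2)) / det
        b = (x1 * (u2 - u3) - u1 * (x2 - x3) + (x2 * u3 - x3 * u2)) / det
        c = (x1 * (y2 * u3 - y3 * u2) - y1 * (x2 * u3 - x3 * u2) + u1 * (x2 * y3 - x3 * y2)) / det
        rows.append((a, b, c))
    return rows

def apply_affine(h, point):
    """Predict the location of a left-image point on the right image."""
    x, y = point
    return (h[0][0] * x + h[0][1] * y + h[0][2],
            h[1][0] * x + h[1][1] * y + h[1][2])

def descriptor_angle(d1, d2):
    """Angle (radians) between two descriptor vectors; assumed reading of the 'cosine angle'."""
    dot = sum(a * b for a, b in zip(d1, d2))
    norm = math.sqrt(sum(a * a for a in d1)) * math.sqrt(sum(b * b for b in d2))
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def expand_match(point, desc, h, right_points, right_descs, radius, max_angle):
    """Return the index of the best candidate within `radius`, or None if none passes `max_angle`."""
    px, py = apply_affine(h, point)
    best_index, best_angle = None, max_angle
    for i, (qx, qy) in enumerate(right_points):
        if math.hypot(qx - px, qy - py) > radius:
            continue  # outside the search circle around the predicted location
        angle = descriptor_angle(desc, right_descs[i])
        if angle <= best_angle:
            best_index, best_angle = i, angle
    return best_index

# Toy data: the right triangle is the left one scaled by (2, 3) and shifted by (10, 20).
H = affine_from_triangles([(0, 0), (1, 0), (0, 1)], [(10, 20), (12, 20), (10, 23)])
right_points = [(11.2, 21.4), (11.1, 21.6), (30.0, 30.0)]
right_descs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [1.0, 0.0, 0.0]]
match = expand_match((0.5, 0.5), [1.0, 0.0, 0.0], H, right_points, right_descs,
                     radius=1.0, max_angle=0.3)
print(match)  # 0
```

The third right-image point has an identical descriptor but lies far from the predicted location, so the radius test excludes it before any descriptor comparison takes place.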

3.3. Implementation of the DTSAO-RANSAC Method

- Initial match generation. For the input images, SIFT features are first detected for each image and described using 128-dimensional descriptors, and initial matches are then obtained by searching for the nearest neighbors with the smallest Euclidean distance of SIFT descriptors. Due to the high resolution of UAV images, the SIFTGPU algorithm [39] with hardware acceleration is used for the fast computation of initial matches. Default parameters of the SIFTGPU library are used.

- Delaunay triangulation construction. According to Section 3.1, the Delaunay triangulation and its corresponding graph are constructed from the initial matches. In this study, the two-dimensional triangulation of the Computational Geometry Algorithms Library (CGAL) [40] is used to implement the Delaunay triangulation of the initial matches.

- Outlier removal based on the SAO constraint. For each target vertex in the graph of one image, a dissimilarity score is calculated according to Equation (1) after determining its two corresponding angular orders; a list of dissimilarity scores is thus obtained from the n vertices of the graph. The list is sorted in descending order of dissimilarity score, so that its first item corresponds to the vertex with the highest score. Outlier removal is then conducted iteratively using a hierarchical elimination strategy until the dissimilarity score of this top-ranked vertex is less than a specified threshold: (a) remove the top-ranked vertex from the graph and set its dissimilarity score to zero; (b) update the dissimilarity scores of all incident neighbors of the removed vertex and re-sort the score list. Iterating these two steps yields an outlier list. To remove remaining outliers, a left-right checking strategy, implemented by exchanging the roles of the two graphs, is adopted, which yields a second outlier list. Finally, an initial match is classified as an outlier if it belongs to either of the two outlier lists.

- Match expansion based on the triangulation constraint. According to Section 3.2, match expansion is conducted to recover missed true matches. To achieve high efficiency in neighboring point searching, the feature points of each image are indexed using the K-nearest-neighbors algorithm [37]. Similar to Step 3, the left-right checking strategy is also used in match expansion; in other words, a point pair is labeled as a true match if and only if its two points are the nearest neighbors of each other.

- Match refinement based on RANSAC. Retained matches are finally refined based on a rigorous geometric constraint. In this study, the RANSAC method with the estimation of a fundamental matrix using the seven-point algorithm [41] is used to refine the final matches.
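The hierarchical elimination loop of the outlier removal step can be sketched as below. This is only a sketch under assumptions: Equation (1) is not reproduced here, so the dissimilarity score is passed in as a hypothetical callable `score_fn`; the graph is a plain adjacency dictionary; and the highest score is picked with `max` on each pass instead of re-sorting a list, which gives the same removal order.

```python
def hierarchical_elimination(neighbors, score_fn, threshold):
    """Iteratively remove the vertex with the highest dissimilarity score.

    neighbors: dict mapping each vertex to the set of its adjacent vertices.
    score_fn(vertex, adjacent): hypothetical stand-in for Equation (1).
    Returns the removed vertices (the outlier list) in removal order.
    """
    graph = {v: set(adj) for v, adj in neighbors.items()}  # work on a copy
    scores = {v: score_fn(v, graph[v]) for v in graph}
    outliers = []
    while scores:
        worst = max(scores, key=scores.get)
        if scores[worst] < threshold:
            break                       # top-ranked score is below the threshold: stop
        outliers.append(worst)
        affected = graph.pop(worst)     # (a) remove the vertex from the graph
        del scores[worst]               #     (same effect as zeroing its score)
        for v in affected:              # (b) update the scores of its neighbors
            graph[v].discard(worst)
            scores[v] = score_fn(v, graph[v])
    return outliers

def left_right_outliers(left_graph, right_graph, left_score, right_score, threshold):
    """Union of the two outlier lists obtained by exchanging the roles of the graphs."""
    first = hierarchical_elimination(left_graph, left_score, threshold)
    second = hierarchical_elimination(right_graph, right_score, threshold)
    return set(first) | set(second)

# Toy score (NOT Equation (1)): count neighbors whose left/right x-ordering disagrees.
left_x = {0: 0.0, 1: 1.0, 2: 2.0, 3: 3.0}
right_x = {0: 0.0, 1: 1.0, 2: 2.0, 3: -5.0}   # match 3 lands on the wrong side

def toy_score(v, adjacent):
    return sum((left_x[n] > left_x[v]) != (right_x[n] > right_x[v]) for n in adjacent)

complete_graph = {v: {u for u in left_x if u != v} for v in left_x}
removed = hierarchical_elimination(complete_graph, toy_score, threshold=2)
print(removed)  # [3]
```

After vertex 3 is removed, the scores of its three neighbors drop to zero, so the loop stops on the next pass. This illustrates why neighbor scores must be updated after every removal.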

| Algorithm 1 DTSAO-RANSAC |
|---|
| Input: n initial candidate matches C |
| Output: final matches |
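The left-right check used in the match expansion step of Section 3.3 can be pictured as a mutual nearest-neighbor test. The sketch below is illustrative only: the function names are hypothetical, plain Euclidean distance is assumed as the comparison metric, and a brute-force scan replaces the indexed search used for efficiency.

```python
import math

def nearest_index(query, candidates):
    """Index of the candidate closest to `query` (brute force, Euclidean distance)."""
    return min(range(len(candidates)), key=lambda i: math.dist(query, candidates[i]))

def mutual_matches(left, right):
    """Keep pair (i, j) only if j is nearest to i AND i is nearest to j."""
    pairs = []
    for i, vec in enumerate(left):
        j = nearest_index(vec, right)               # left -> right
        if nearest_index(right[j], left) == i:      # right -> left must agree
            pairs.append((i, j))
    return pairs

left = [(0.0, 0.0), (0.2, 0.0), (5.0, 5.0)]
right = [(0.3, 0.0), (5.0, 5.0)]
pairs = mutual_matches(left, right)
print(pairs)  # [(1, 0), (2, 1)]
```

Left item 0 is rejected because its nearest right item prefers left item 1, which is the asymmetric case the check is meant to catch.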

4. Experimental Results

4.1. Datasets

4.2. Analysis of the Influence of the Score Threshold

4.3. Analysis of the Robustness to Outliers of DTSAO

4.4. Outlier Elimination Based on the DTSAO Algorithm

4.5. Comparison with Other Outlier Elimination Methods

4.5.1. Comparison Using Outlier Removal Tests

4.5.2. Comparison Using Image Orientation Tests

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jiang, S.; Jiang, W. UAV-Based Oblique Photogrammetry for 3D Reconstruction of Transmission Line: Practices and Applications. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4213, 401–406.

- Jiang, S.; Jiang, W.; Huang, W.; Yang, L. UAV-Based Oblique Photogrammetry for Outdoor Data Acquisition and Offsite Visual Inspection of Transmission Line. Remote Sens. 2017, 9, 278.

- Habib, A.; Han, Y.; Xiong, W.; He, F.; Zhang, Z.; Crawford, M. Automated Ortho-Rectification of UAV-Based Hyperspectral Data over an Agricultural Field Using Frame RGB Imagery. Remote Sens. 2016, 8, 796.

- Xu, Z.; Wu, L.; Shen, Y.; Li, F.; Wang, Q.; Wang, R. Tridimensional reconstruction applied to cultural heritage with the use of camera-equipped UAV and terrestrial laser scanner. Remote Sens. 2014, 6, 10413–10434.

- Jiang, S.; Jiang, C.; Jiang, W. Efficient structure from motion for large-scale UAV images: A review and a comparison of SfM tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251.

- Ye, Y.; Shan, J. A local descriptor based registration method for multispectral remote sensing images with non-linear intensity differences. ISPRS J. Photogramm. Remote Sens. 2014, 90, 83–95.

- Sedaghat, A.; Ebadi, H. Accurate affine invariant image matching using oriented least square. Photogramm. Eng. Remote Sens. 2015, 81, 733–743.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, pp. 10–5244.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Moravec, H.P. Rover Visual Obstacle Avoidance. IJCAI 1981, 81, 785–790.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Raguram, R.; Chum, O.; Pollefeys, M.; Matas, J.; Frahm, J.M. USAC: A universal framework for random sample consensus. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2022–2038.

- Lu, L.; Zhang, Y.; Tao, P. Geometrical Consistency Voting Strategy for Outlier Detection in Image Matching. Photogramm. Eng. Remote Sens. 2016, 82, 559–570.

- Li, X.; Larson, M.; Hanjalic, A. Pairwise geometric matching for large-scale object retrieval. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5153–5161.

- Schonberger, J.L.; Frahm, J.M. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Zhuo, X.; Koch, T.; Kurz, F.; Fraundorfer, F.; Reinartz, P. Automatic UAV Image Geo-Registration by Matching UAV Images to Georeferenced Image Data. Remote Sens. 2017, 9, 376.

- Aguilar, W.; Frauel, Y.; Escolano, F.; Martinez-Perez, M.E.; Espinosa-Romero, A.; Lozano, M.A. A robust graph transformation matching for non-rigid registration. Image Vis. Comput. 2009, 27, 897–910.

- Izadi, M.; Saeedi, P. Robust weighted graph transformation matching for rigid and nonrigid image registration. IEEE Trans. Image Process. 2012, 21, 4369–4382.

- Ma, J.; Zhao, J.; Tian, J.; Yuille, A.L.; Tu, Z. Robust point matching via vector field consensus. IEEE Trans. Image Process. 2014, 23, 1706–1721.

- Sattler, T.; Leibe, B.; Kobbelt, L. SCRAMSAC: Improving RANSAC’s efficiency with a spatial consistency filter. In Proceedings of the Computer Vision, 2009 IEEE 12th International Conference on IEEE, Kyoto, Japan, 29 September–2 October 2009; pp. 2090–2097.

- Hu, H.; Zhu, Q.; Du, Z.; Zhang, Y.; Ding, Y. Reliable spatial relationship constrained feature point matching of oblique aerial images. Photogramm. Eng. Remote Sens. 2015, 81, 49–58.

- Li, Y.; Tsin, Y.; Genc, Y.; Kanade, T. Object detection using 2D spatial ordering constraints. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 711–718.

- Alajlan, N.; El Rube, I.; Kamel, M.S.; Freeman, G. Shape retrieval using triangle-area representation and dynamic space warping. Pattern Recognit. 2007, 40, 1911–1920.

- Wang, Z.; Wu, F.; Hu, Z. MSLD: A robust descriptor for line matching. Pattern Recognit. 2009, 42, 941–953.

- Zhang, L.; Koch, R. An efficient and robust line segment matching approach based on LBD descriptor and pairwise geometric consistency. J. Vis. Commun. Image Represent. 2013, 24, 794–805.

- Liu, Z.; Marlet, R. Virtual Line Descriptor and Semi-Local Graph Matching Method for Reliable Feature Correspondence. In Proceedings of the British Machine Vision Conference, Surrey, UK, 3–7 September 2012; pp. 16.1–16.11.

- Li, J.; Hu, Q.; Ai, M.; Zhong, R. Robust feature matching via support-line voting and affine-invariant ratios. ISPRS J. Photogramm. Remote Sens. 2017, 132, 61–76.

- Li, J.; Hu, Q.; Ai, M. 4FP-Structure: A Robust Local Region Feature Descriptor. Photogramm. Eng. Remote Sens. 2017, 83, 813–826.

- Jiang, S.; Jiang, W. Reliable image matching via photometric and geometric constraints structured by Delaunay triangulation. ISPRS J. Photogramm. Remote Sens. 2019, 153, 1–20.

- Dominik, W.A. Exploiting the Redundancy of Multiple Overlapping Aerial Images for Dense Image Matching Based Digital Surface Model Generation. Remote Sens. 2017, 9, 490.

- Jiang, S.; Jiang, W. Hierarchical motion consistency constraint for efficient geometrical verification in UAV stereo image matching. ISPRS J. Photogramm. Remote Sens. 2018, 142, 222–242.

- Liu, J.; Xue, Y.; Ren, K.; Song, J.; Windmill, C.; Merritt, P. High-Performance Time-Series Quantitative Retrieval From Satellite Images on a GPU Cluster. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2810–2821.

- Wang, N.; Chen, F.; Yu, B.; Qin, Y. Segmentation of large-scale remotely sensed images on a Spark platform: A strategy for handling massive image tiles with the MapReduce model. ISPRS J. Photogramm. Remote Sens. 2020, 162, 137–147.

- Jiang, S.; Jiang, W. Efficient structure from motion for oblique UAV images based on maximal spanning tree expansion. ISPRS J. Photogramm. Remote Sens. 2017, 132, 140–161.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Guo, X.; Cao, X. Good match exploration using triangle constraint. Pattern Recognit. Lett. 2012, 33, 872–881.

- Wu, C. SiftGPU: A GPU Implementation of David Lowe’s Scale Invariant Feature Transform (SIFT). 2007. Available online: https://github.com/pitzer/SiftGPU (accessed on 19 June 2017).

- Boissonnat, J.D.; Devillers, O.; Pion, S.; Teillaud, M.; Yvinec, M. Triangulations in CGAL. Comput. Geom. 2002, 22, 5–19.

- Zhang, Z. Determining the epipolar geometry and its uncertainty: A review. Int. J. Comput. Vis. 1998, 27, 161–195.

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

| Item Name | Dataset 1 | Dataset 2 | Dataset 3 |
|---|---|---|---|
| UAV type | multi-rotor | multi-rotor | multi-rotor |
| Flight height (m) | 300 | 165 | 175 |
| Camera model | Sony ILCE-7R | Sony RX1R | Sony NEX-7 |
| Number of cameras | 1 | 1 | 5 |
| Focal length (mm) | 35 | 35 | nadir: 16; oblique: 35 |
| Camera angle (°) | nadir: 0; oblique: 45/−45 | front: 25, −15 | nadir: 0; oblique: 45/−45 |
| Number of images | 157 | 320 | 750 |
| Image size (pixel) | 7360 × 4912 | 6000 × 4000 | 6000 × 4000 |
| GSD (cm) | 4.20 | 5.05 | 4.27 |

| No. | LOSAC | SPRT | PROSAC | USAC | GC-RANSAC | KVLD-RANSAC | DTVLD-RANSAC | DTSAO-RANSAC |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.256 | 0.271 | 0.257 | 0.255 | 0.252 | 0.252 | 0.255 | 0.257 |
| 2 | 0.240 | 0.270 | 0.262 | 0.244 | 0.236 | 0.237 | 0.240 | 0.236 |
| 3 | 0.162 | 0.188 | 0.195 | 0.195 | 0.163 | 0.068 | 0.118 | 0.098 |
| 4 | 0.232 | 0.271 | 0.249 | 0.241 | 0.224 | - | 0.222 | 0.225 |

| Item | LOSAC | SPRT | PROSAC | USAC | GC-RANSAC (Filter) | GC-RANSAC (Verif) | KVLD-RANSAC (Filter) | KVLD-RANSAC (Verif) | DTVLD-RANSAC (Filter) | DTVLD-RANSAC (Verif) | DTSAO-RANSAC (Filter) | DTSAO-RANSAC (Verif) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (a) Mean (unit in seconds) | | | | | | | | | | | | |
| 1 | 0.913 | 1.608 | 1.244 | 1.239 | 0.056 | 0.096 | 14.857 | 0.011 | 4.634 | 0.012 | 0.049 | 0.011 |
| 2 | 0.071 | 0.127 | 0.287 | 0.291 | 0.114 | 0.012 | 5.816 | 0.007 | 2.883 | 0.009 | 0.055 | 0.009 |
| 3 | 0.924 | 1.335 | 0.983 | 0.982 | 0.041 | 0.115 | 8.390 | 0.005 | 2.759 | 0.007 | 0.051 | 0.005 |
| (b) Sum (unit in minutes) | | | | | | | | | | | | |
| 1 | 67.40 | 118.75 | 91.86 | 91.51 | 4.11 | 7.12 | 1096.95 | 0.78 | 342.15 | 0.92 | 3.65 | 0.83 |
| 2 | 6.12 | 10.86 | 24.67 | 25.00 | 9.81 | 1.05 | 499.48 | 0.63 | 247.59 | 0.75 | 4.76 | 0.74 |
| 3 | 281.49 | 406.84 | 299.49 | 299.25 | 12.59 | 34.93 | 2556.49 | 1.45 | 840.63 | 2.00 | 15.51 | 1.65 |

| Dataset | Item | LOSAC | SPRT | PROSAC | USAC | GC-RANSAC | KVLD-RANSAC | DTVLD-RANSAC | DTSAO-RANSAC |
|---|---|---|---|---|---|---|---|---|---|
| 1 | No. images | 157/157 | 157/157 | 157/157 | 157/157 | 157/157 | 157/157 | 157/157 | 157/157 |
| | No. points | 70,967 | 71,515 | 67,994 | 69,653 | 68,783 | 76,988 | 76,997 | 75,720 |
| | RMSE | 0.537 | 0.538 | 0.539 | 0.539 | 0.532 | 0.548 | 0.548 | 0.541 |
| 2 | No. images | 320/320 | 320/320 | 320/320 | 320/320 | 320/320 | 279/320 | 320/320 | 320/320 |
| | No. points | 136,131 | 140,475 | 132,005 | 139,766 | 138,829 | 118,425 | 163,370 | 163,245 |
| | RMSE | 0.591 | 0.599 | 0.598 | 0.595 | 0.596 | 0.428 | 0.618 | 0.618 |
| 3 | No. images | 750/750 | 750/750 | 750/750 | 750/750 | 750/750 | 450/750 | 750/750 | 750/750 |
| | No. points | 267,794 | 258,192 | 224,295 | 222,977 | 262,837 | 147,241 | 292,087 | 278,429 |
| | RMSE | 0.670 | 0.661 | 0.654 | 0.647 | 0.671 | 0.620 | 0.683 | 0.676 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, S.; Jiang, W.; Li, L.; Wang, L.; Huang, W. Reliable and Efficient UAV Image Matching via Geometric Constraints Structured by Delaunay Triangulation. Remote Sens. 2020, 12, 3390. https://doi.org/10.3390/rs12203390
