Can Commercial Low-Cost Drones and Open-Source GIS Technologies Be Suitable for Semi-Automatic Weed Mapping for Smart Farming? A Case Study in NE Italy

Abstract

1. Introduction

1.1. A Spatial Approach for Weed Management in Smart Farming

1.2. Unmanned Aerial Systems for Weed Detection

1.3. Aims of the Research

2. Materials and Methods

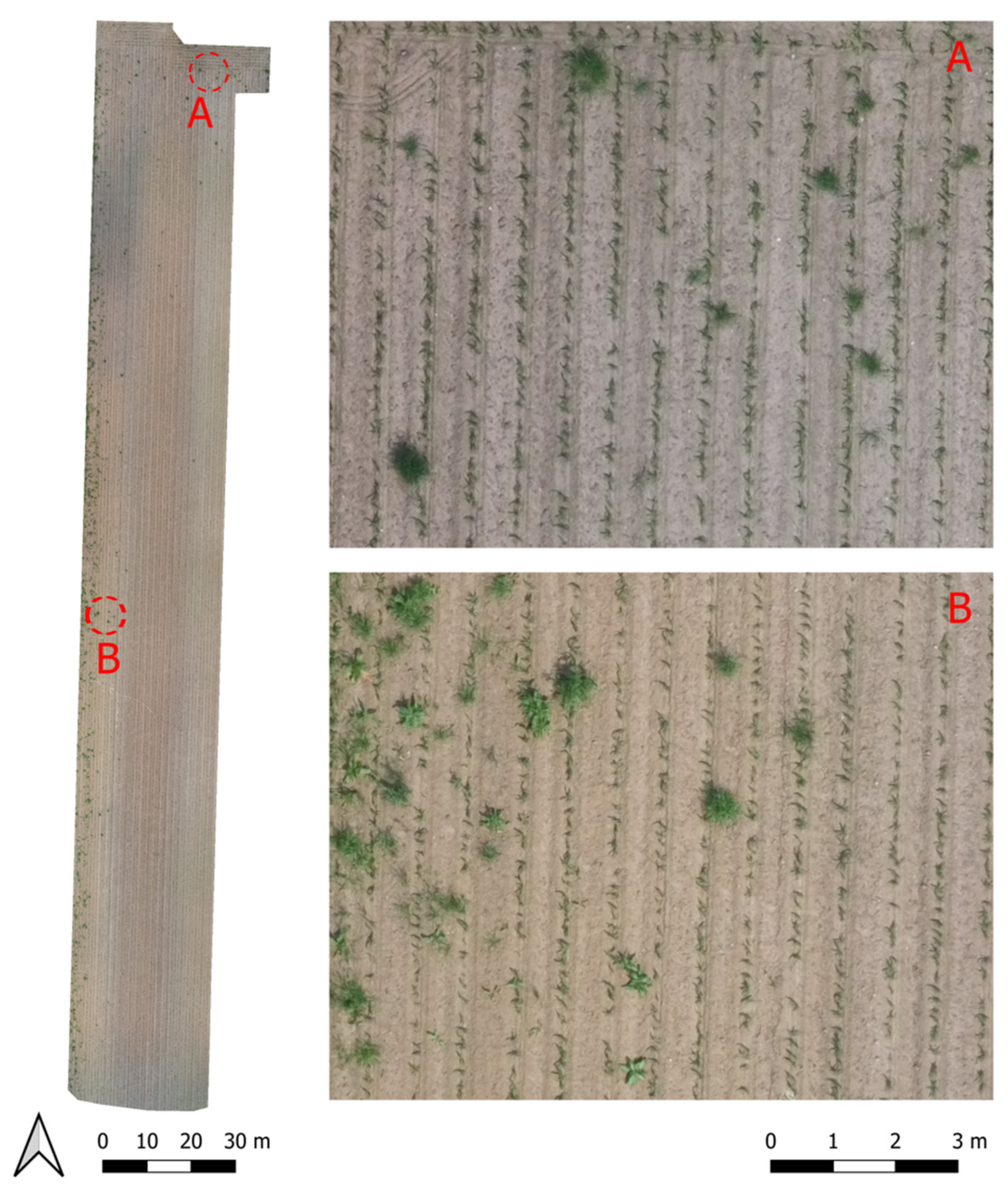

2.1. Study Site

2.2. Open-Source UAS Survey and Orthomosaic Generation with Open Drone Map

2.3. Weed Detection Methods

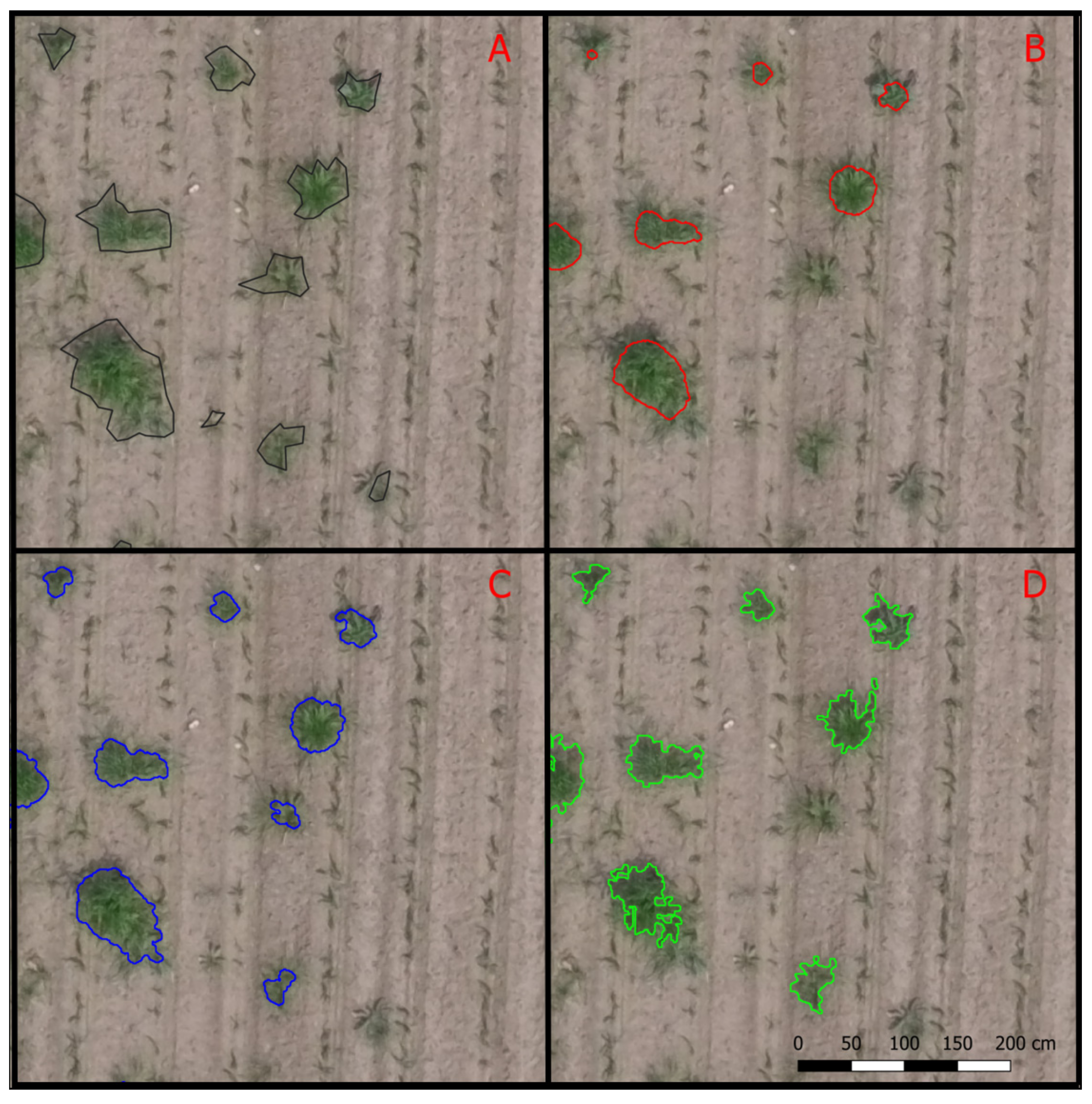

2.3.1. Maximum Likelihood Classifier—MLC

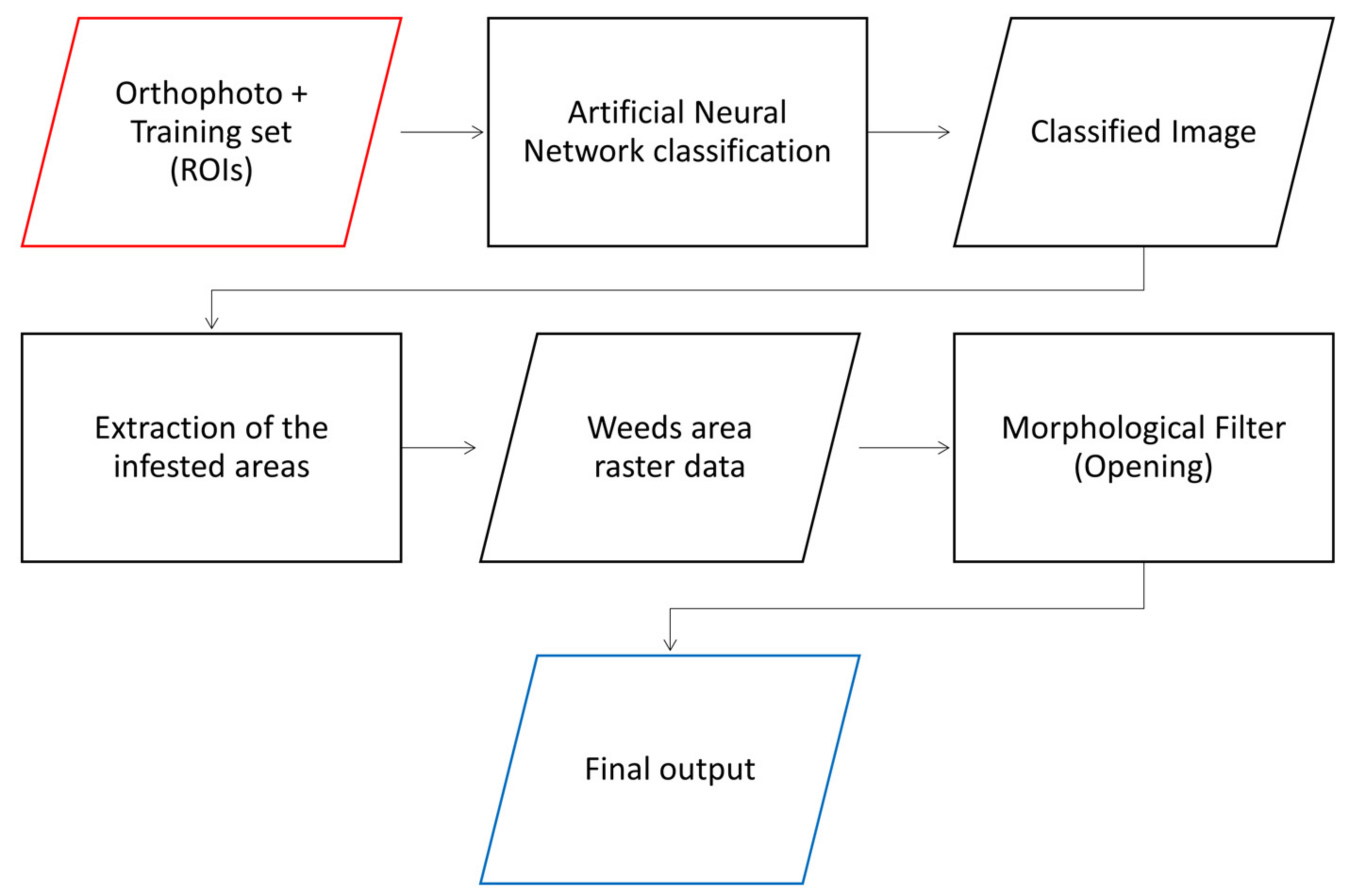

2.3.2. Artificial Neural Network (OpenCV)—ANN

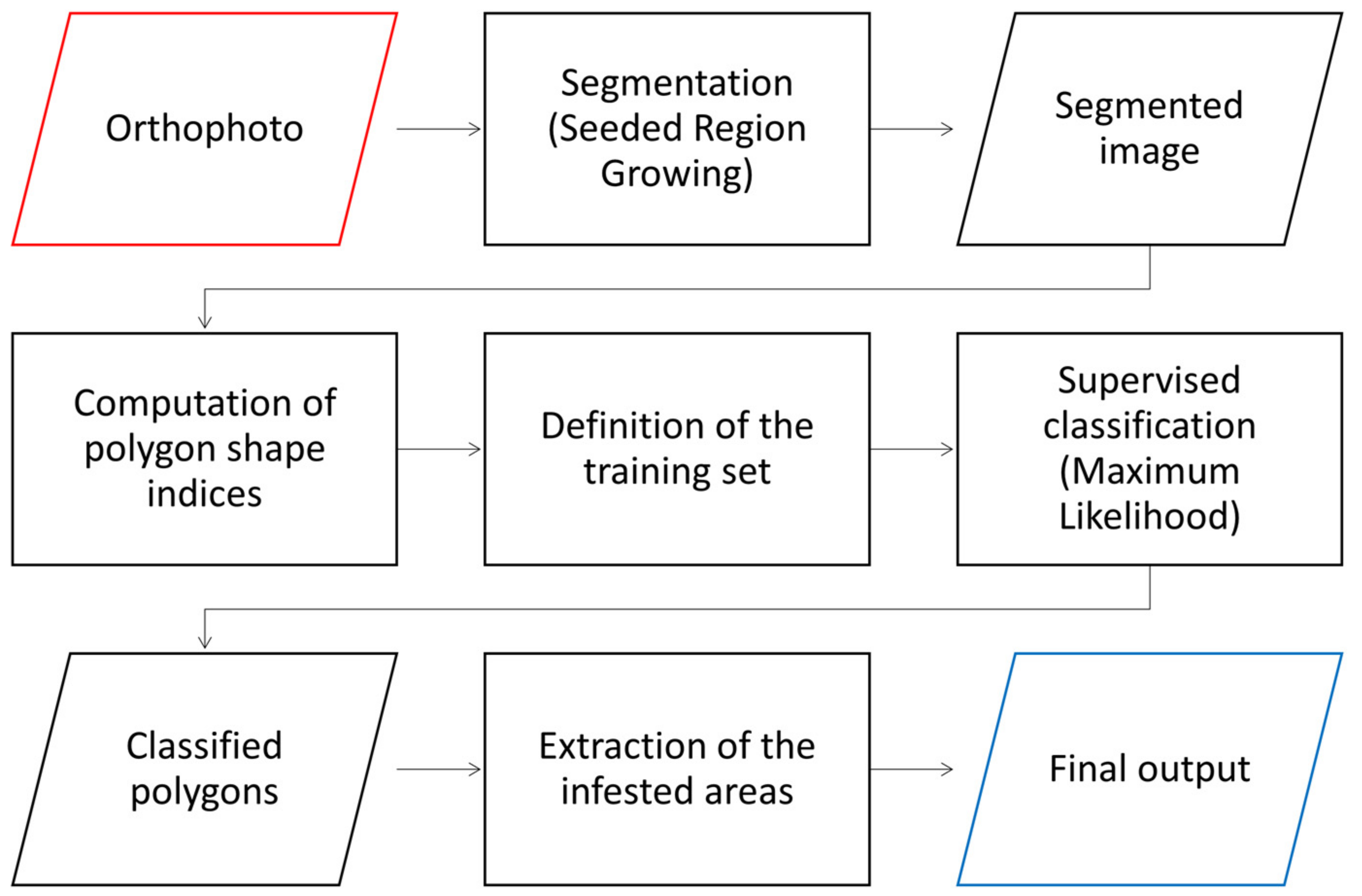

2.3.3. Object-Based Image Analysis—OBIA

2.3.4. Accuracy Assessment

2.4. Prescription Map Creation

3. Results

3.1. Very High Resolution Orthomosaic Generation and Weed Mapping by Photointerpretation

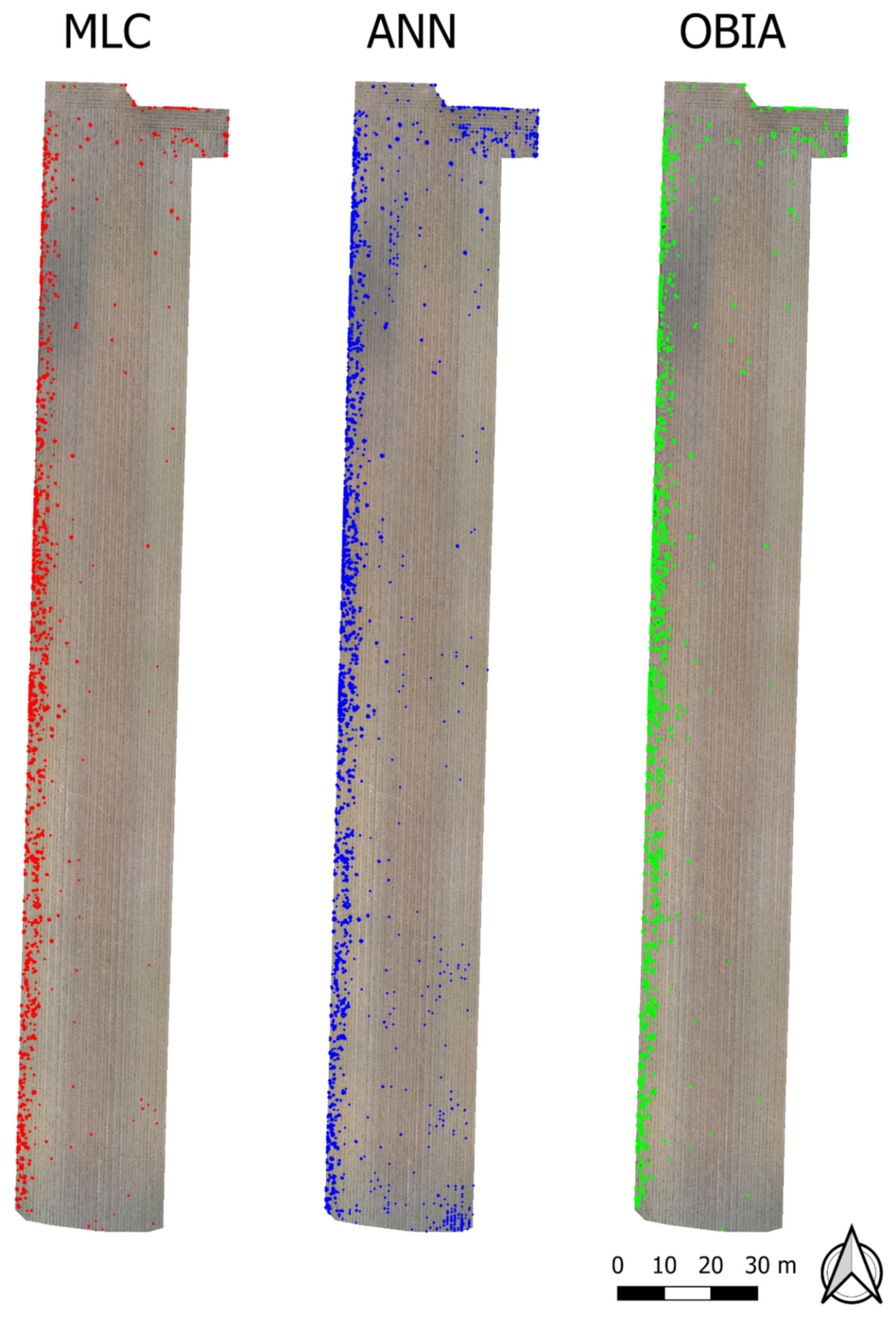

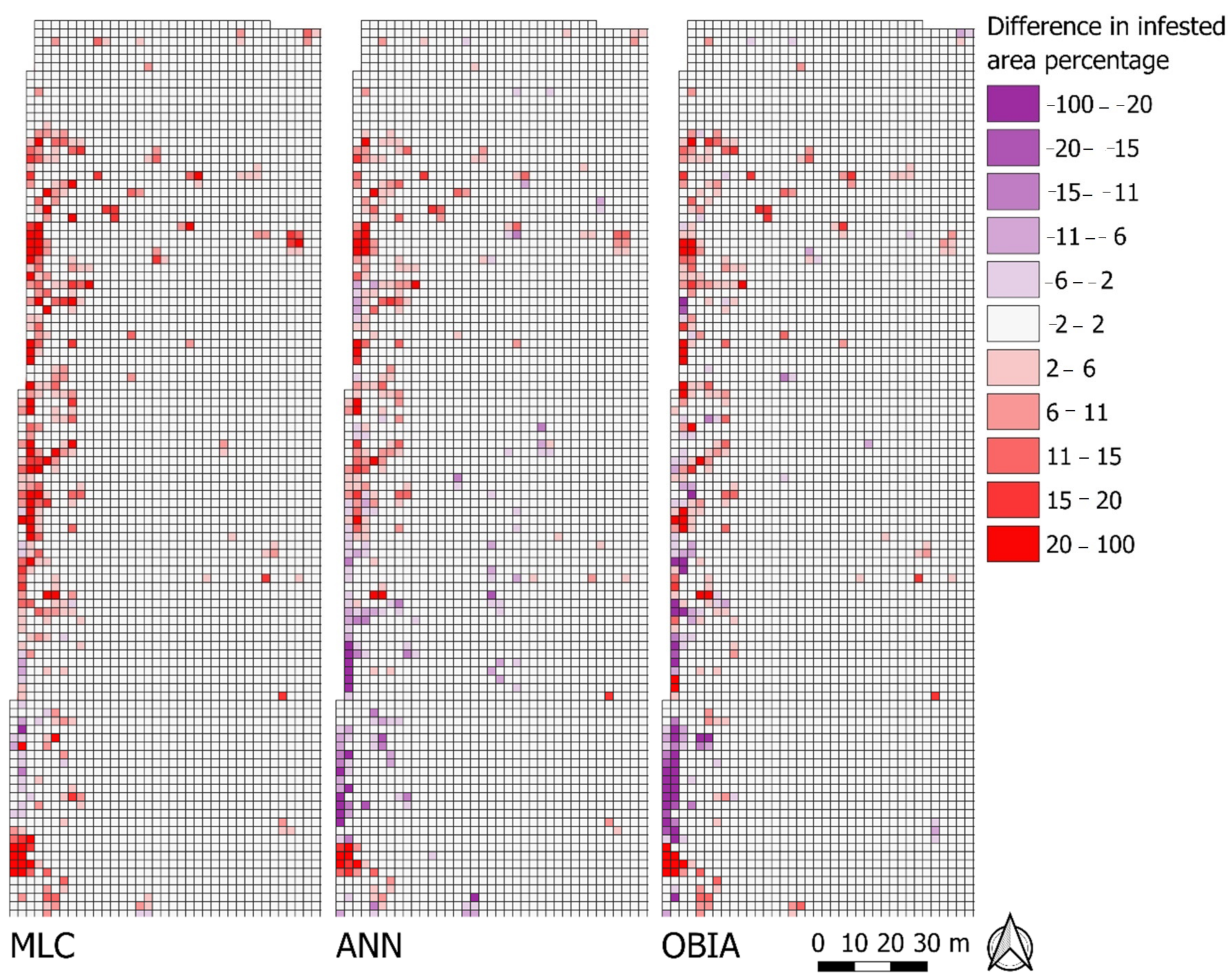

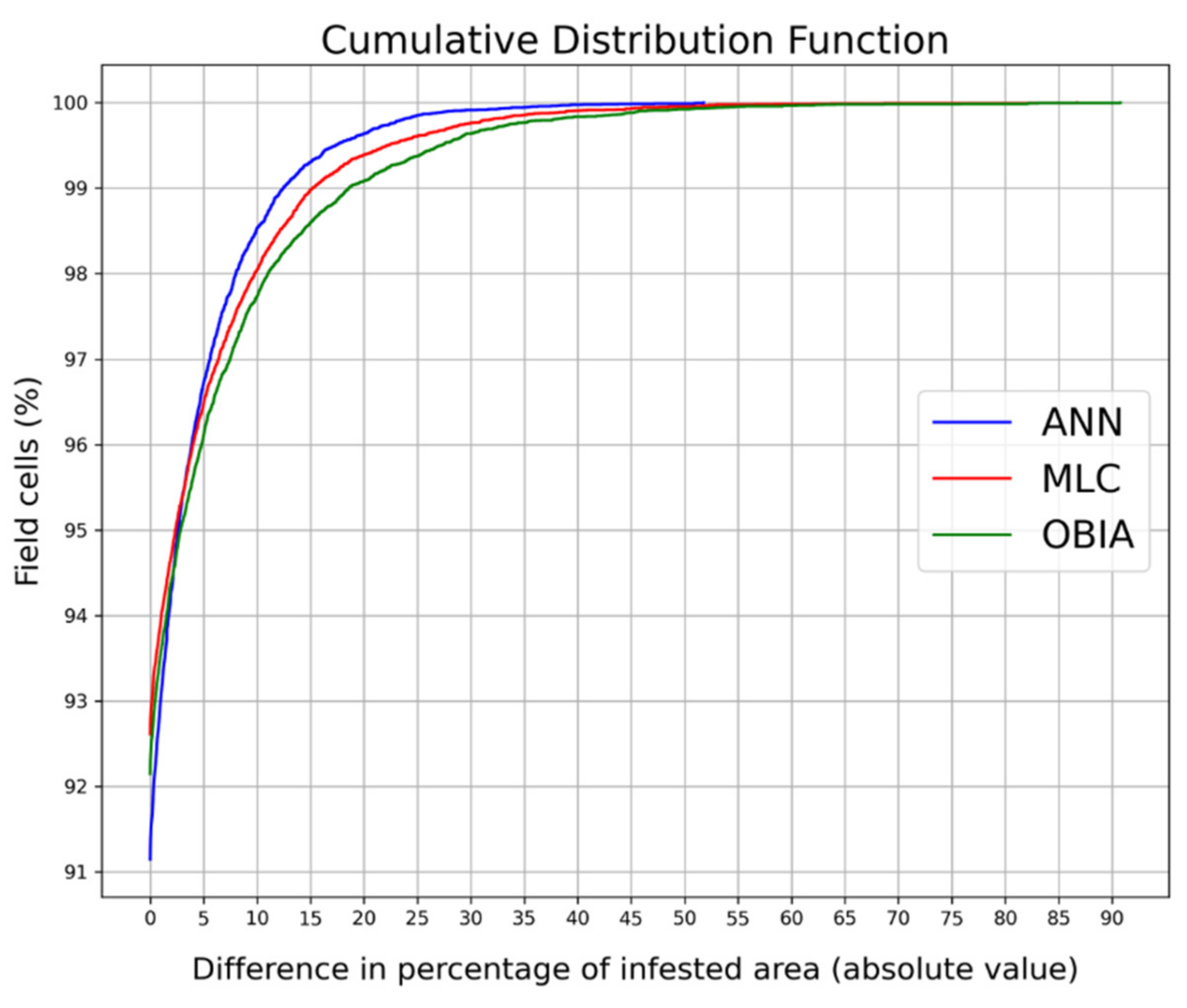

3.2. Semi-Automatic Weed Mapping and Prescription Map Creation

4. Discussion

4.1. From UAS to Prescription Maps for Site-Specific Weed Management: Opportunities and Limitations

4.2. Low-Cost UAS and GIS Open-Source: Towards a More Inclusive Smart Farming?

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| ANN parameter | Value |
|---|---|
| Number of Layers | 3 |
| Number of Neurons | 12 |
| Maximum Number of Iterations | 3000 |
| Error change (Epsilon) | 0.0000001192 |
| Activation Function | Sigmoid |
| Function’s Alpha | 1 |
| Function’s Beta | 1 |
| Training Method | Back propagation |
| Weight Gradient term | 0.1 |
| Moment term | 0.1 |
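As a reading aid for the parameters above, the following minimal Python sketch shows what the activation and training entries control. It assumes that "Sigmoid" refers to the symmetric sigmoid described in the OpenCV neural network documentation, and that the weight gradient and moment terms enter a textbook momentum update; the function names `symmetric_sigmoid` and `backprop_step` are hypothetical and are not part of OpenCV or SAGA.

```python
import math

# Values copied from the parameter table; everything else is illustrative.
ALPHA = 1.0          # "Function's Alpha"
BETA = 1.0           # "Function's Beta"
WEIGHT_SCALE = 0.1   # "Weight Gradient term"
MOMENTUM = 0.1       # "Moment term"


def symmetric_sigmoid(x, alpha=ALPHA, beta=BETA):
    """Symmetric sigmoid: beta * (1 - exp(-alpha*x)) / (1 + exp(-alpha*x))."""
    e = math.exp(-alpha * x)
    return beta * (1.0 - e) / (1.0 + e)


def backprop_step(weight, gradient, previous_delta,
                  weight_scale=WEIGHT_SCALE, momentum=MOMENTUM):
    """One momentum-based weight update (assumed textbook form).

    Returns the updated weight and the step that was applied, so the step
    can be fed back in as previous_delta on the next iteration.
    """
    delta = -weight_scale * gradient + momentum * previous_delta
    return weight + delta, delta
```

With alpha and beta both equal to 1, the activation reduces to tanh(x/2), so outputs lie between -1 and 1. Training would stop after 3000 iterations or once the error change falls below the epsilon listed in the table, whichever comes first.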

| OBIA segmentation parameter | Value |
|---|---|
| Band Width for Seed Point Generation | 22 |
| Neighborhood | 8 (Moore) |
| Distance | Feature space and position |
| Variance in Feature Space | 3 |
| Variance in Position Space | 10 |
| Generalization | 2 |
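The segmentation parameters above belong to a seeded region growing procedure. The sketch below illustrates the general technique in plain Python on a single-band grid with the 8-cell (Moore) neighborhood. It is a simplified illustration, not the SAGA implementation: similarity is assumed to be the absolute difference between a pixel and the running mean of the region, whereas the tool configured above combines feature-space and position-space variance. The function name `seeded_region_growing` and the input layout are hypothetical.

```python
import heapq

# 8-connected (Moore) neighborhood, as listed in the parameter table.
MOORE = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def seeded_region_growing(image, seeds):
    """Label every pixel of a 2-D grid by growing regions from seed pixels.

    image: list of rows of numbers; seeds: {(row, col): label}.
    Simplified similarity: absolute difference to the current region mean.
    """
    rows, cols = len(image), len(image[0])
    labels = [[None] * cols for _ in range(rows)]
    totals, counts = {}, {}   # running sum and pixel count per label
    heap = []                 # entries: (dissimilarity, tie_breaker, row, col, label)
    counter = 0

    def push_neighbors(r, c, label):
        nonlocal counter
        mean = totals[label] / counts[label]
        for dr, dc in MOORE:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] is None:
                diff = abs(image[nr][nc] - mean)
                heapq.heappush(heap, (diff, counter, nr, nc, label))
                counter += 1

    # Register all seeds first so region means exist before growing starts.
    for (r, c), label in seeds.items():
        labels[r][c] = label
        totals[label] = totals.get(label, 0.0) + image[r][c]
        counts[label] = counts.get(label, 0) + 1
    for (r, c), label in seeds.items():
        push_neighbors(r, c, label)

    # Always absorb the candidate pixel that is most similar to its region.
    while heap:
        _, _, r, c, label = heapq.heappop(heap)
        if labels[r][c] is not None:
            continue  # already claimed through a more similar candidate
        labels[r][c] = label
        totals[label] += image[r][c]
        counts[label] += 1
        push_neighbors(r, c, label)
    return labels
```

On a toy grid with dark values on the left and bright values on the right, one seed in each half is enough to split the grid into two regions. In a real workflow the seeds would come from the seed point generation step, and the resulting segments would then be classified as weed or non-weed.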

| Method | Overall Accuracy (%) | F1 Score (range 0 to 1) | MCC (range −1 to 1) | nMCC (range 0 to 1) | Omission Error (%) | Commission Error (%) |
|---|---|---|---|---|---|---|
| MLC | 99.50 | 0.6625 | 0.6817 | 0.8408 | 46.89 | 11.95 |
| ANN | 99.55 | 0.7452 | 0.7437 | 0.8718 | 28.74 | 21.89 |
| OBIA | 99.38 | 0.6779 | 0.6757 | 0.8378 | 28.44 | 35.58 |
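The metrics in this table can all be derived from a binary confusion matrix (weed versus non-weed). The Python sketch below assumes the standard definitions: omission error is the share of reference weed pixels that were missed, commission error is the share of pixels mapped as weed that are not weed, and nMCC is (MCC + 1) / 2. These assumptions agree with the table, since the nMCC column equals (MCC + 1) / 2 and the F1 scores are reproduced to within rounding from the two error columns. The function name `weed_map_metrics` and the pixel counts in the usage note are hypothetical.

```python
import math


def weed_map_metrics(tp, fp, fn, tn):
    """Accuracy metrics for a binary weed map, from pixel counts.

    tp: weed mapped as weed, fp: non-weed mapped as weed,
    fn: weed mapped as non-weed, tn: non-weed mapped as non-weed.
    """
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den
    return {
        "overall_accuracy_pct": 100.0 * (tp + tn) / total,
        "f1": 2 * precision * recall / (precision + recall),
        "mcc": mcc,
        "nmcc": (mcc + 1) / 2,                        # rescales MCC to 0..1
        "omission_error_pct": 100.0 * fn / (tp + fn),     # 100 * (1 - recall)
        "commission_error_pct": 100.0 * fp / (tp + fp),   # 100 * (1 - precision)
    }
```

For example, `weed_map_metrics(tp=80, fp=20, fn=20, tn=880)` gives an overall accuracy of 96% but an F1 score of only 0.8. This shows why overall accuracy stays close to 100% for all three methods when weeds cover a small share of the field, while F1 and MCC separate them more clearly.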

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mattivi, P.; Pappalardo, S.E.; Nikolić, N.; Mandolesi, L.; Persichetti, A.; De Marchi, M.; Masin, R. Can Commercial Low-Cost Drones and Open-Source GIS Technologies Be Suitable for Semi-Automatic Weed Mapping for Smart Farming? A Case Study in NE Italy. Remote Sens. 2021, 13, 1869. https://doi.org/10.3390/rs13101869
