HOLBP: Remote Sensing Image Registration Based on Histogram of Oriented Local Binary Pattern Descriptor

Abstract

1. Introduction

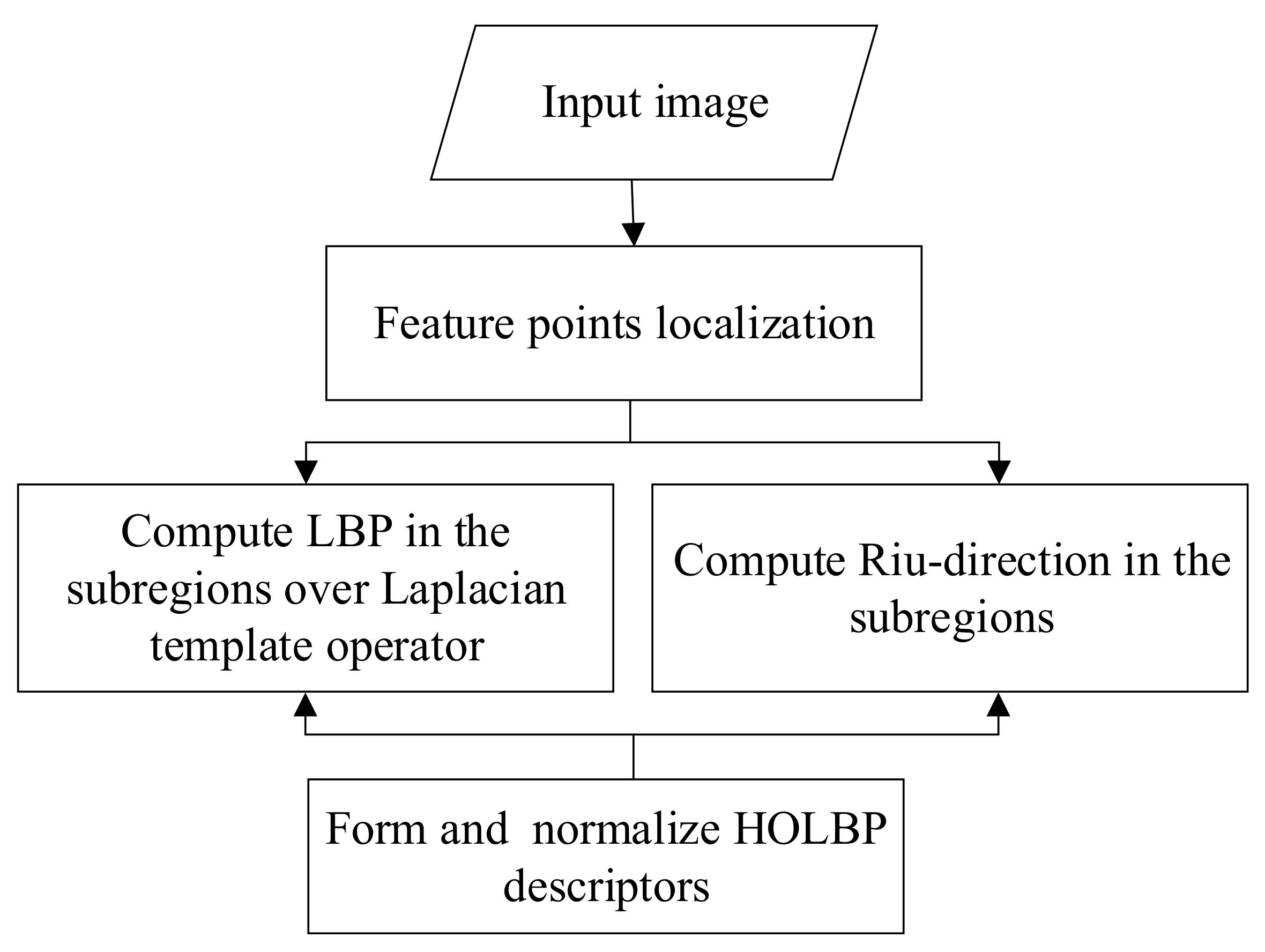

- Redefinition of gradient and orientation: Based on the Laplacian and Sobel operators, we improved the representation of edge information to increase robustness.

- Constructing the descriptor: Based on the local binary pattern (LBP) operator, we proposed a new descriptor, the histogram of oriented local binary pattern (HOLBP) descriptor, which builds its histograms from the gradient direction of the feature points and the LBP value. This preserves the texture information of the image and the rotation invariance of the descriptor as far as possible. To enhance matching, we applied the principle of the uniform rotation-invariant LBP [23] to add 10-dimensional gradient direction information to the 128-dimensional HOLBP descriptor. This enriched the descriptive information across the eight directions.

- Matching: After the coordinates of the matched points were initially obtained, we used rotation-invariant direction information to select among them, which mitigates the instability of the Random Sample Consensus (RANSAC) algorithm.
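To make the descriptor item above more concrete, the following is a minimal sketch of the uniform rotation-invariant LBP operator of [23] for eight neighbours at radius 1. It is not the HOLBP implementation: the function names `lbp_riu2` and `riu2_histogram`, the 3 × 3 neighbourhood, and the `>=` comparison are assumptions made for illustration. With eight neighbours the operator yields ten labels (0 to 9), which would be consistent with the 10-dimensional figure quoted above, although the paper's exact construction is not shown in this text.

```python
import numpy as np

def lbp_riu2(patch: np.ndarray) -> int:
    """Uniform rotation-invariant LBP label (P=8, R=1) for the centre of a 3x3 patch."""
    center = patch[1, 1]
    # Eight neighbours visited in circular order around the centre pixel.
    ring = [patch[0, 0], patch[0, 1], patch[0, 2], patch[1, 2],
            patch[2, 2], patch[2, 1], patch[2, 0], patch[1, 0]]
    bits = [1 if value >= center else 0 for value in ring]
    # U: number of 0/1 transitions when walking once around the circular pattern.
    transitions = sum(bits[i] != bits[(i + 1) % 8] for i in range(8))
    # Uniform patterns (U <= 2) are labelled by their count of ones (0..8);
    # every non-uniform pattern shares the single label 9.
    return int(sum(bits)) if transitions <= 2 else 9

def riu2_histogram(image: np.ndarray) -> np.ndarray:
    """10-bin histogram of riu2 labels over the interior pixels of a grey image."""
    hist = np.zeros(10)
    rows, cols = image.shape
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            hist[lbp_riu2(image[r - 1:r + 2, c - 1:c + 2])] += 1
    return hist
```

Because the label depends only on the number of ones in a uniform pattern, rotating the neighbourhood by a multiple of 45° leaves the label unchanged, which is the property the descriptor relies on.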

2. Methods

2.1. Scale-Space Pyramid and Key Point Localization

2.2. Gradient and Orientation Assignment

2.3. Construct HOLBP Descriptor

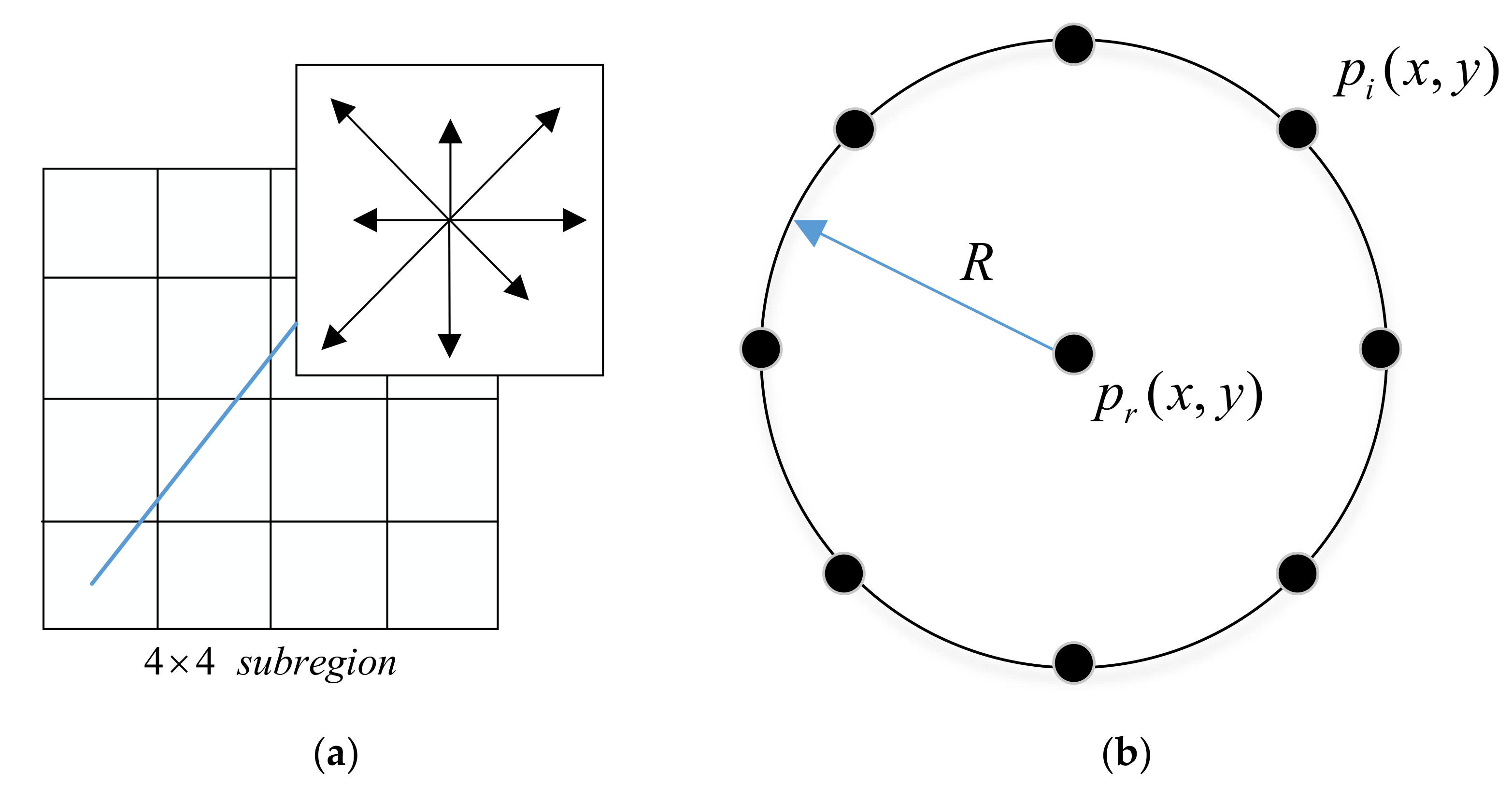

2.3.1. HOLBP

2.3.2. Riu-Direction

2.4. Matching Assignment

Algorithm 1 Proposed Algorithm

Input: The initial matching points obtained through the nearest-neighbor distance ratio.

Output: The final matching set updated by the proposed method.

Step 1: Obtain the sets by Equation (12), subject to a conditional test.

Step 2: Estimate the homography matrix by Equation (13).

Step 3: Obtain the sets by Equation (14), applying a conditional test inside a loop.

Step 4: Obtain the sets by repeating the iterations, again applying a conditional test inside a loop.
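Algorithm 1 starts from matches selected by the nearest-neighbor distance ratio and later uses an estimated homography. The sketch below illustrates those two generic building blocks only. It does not reproduce Equations (12) to (14), and the function names, the ratio of 0.8, and the 1-pixel threshold are hypothetical values chosen for illustration.

```python
import numpy as np

def nndr_matches(desc_a: np.ndarray, desc_b: np.ndarray, ratio: float = 0.8):
    """Return (i, j) index pairs that pass the nearest-neighbour distance ratio test.

    desc_b must contain at least two descriptors. The ratio is an assumed value.
    """
    # Pairwise Euclidean distances between every descriptor in A and every one in B.
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        # Keep the match only if the best candidate is clearly better than the runner-up.
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches

def reprojection_inliers(H: np.ndarray, src: np.ndarray, dst: np.ndarray,
                         threshold: float = 1.0) -> np.ndarray:
    """Boolean mask of matches whose reprojection error under H is below threshold."""
    ones = np.ones((len(src), 1))
    projected = np.hstack([src, ones]) @ H.T           # homogeneous projection
    projected = projected[:, :2] / projected[:, 2:3]   # back to pixel coordinates
    return np.linalg.norm(projected - dst, axis=1) < threshold
```

In this sketch, `src` and `dst` are N × 2 arrays of matched coordinates and `H` is a 3 × 3 homography obtained by any estimator.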

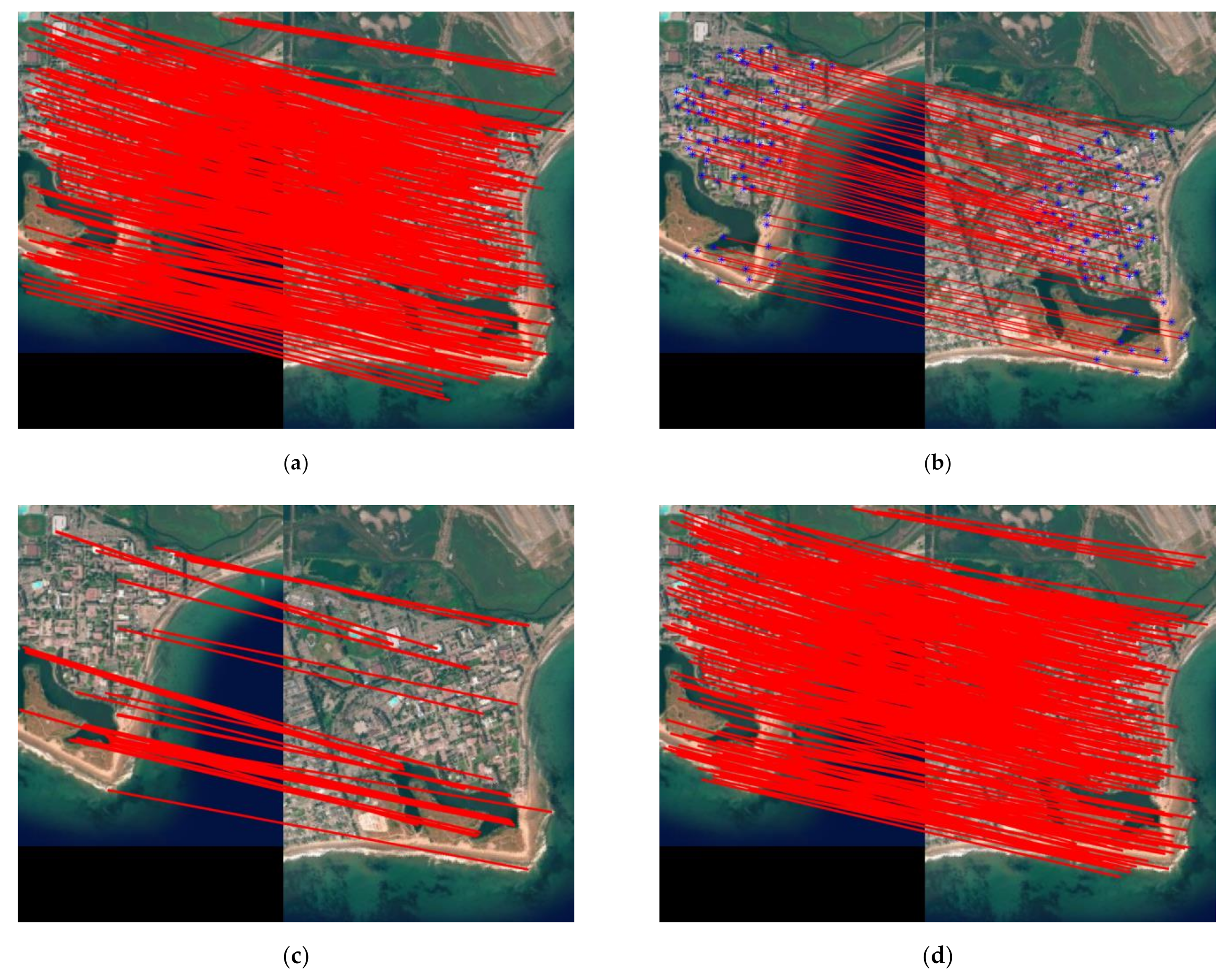

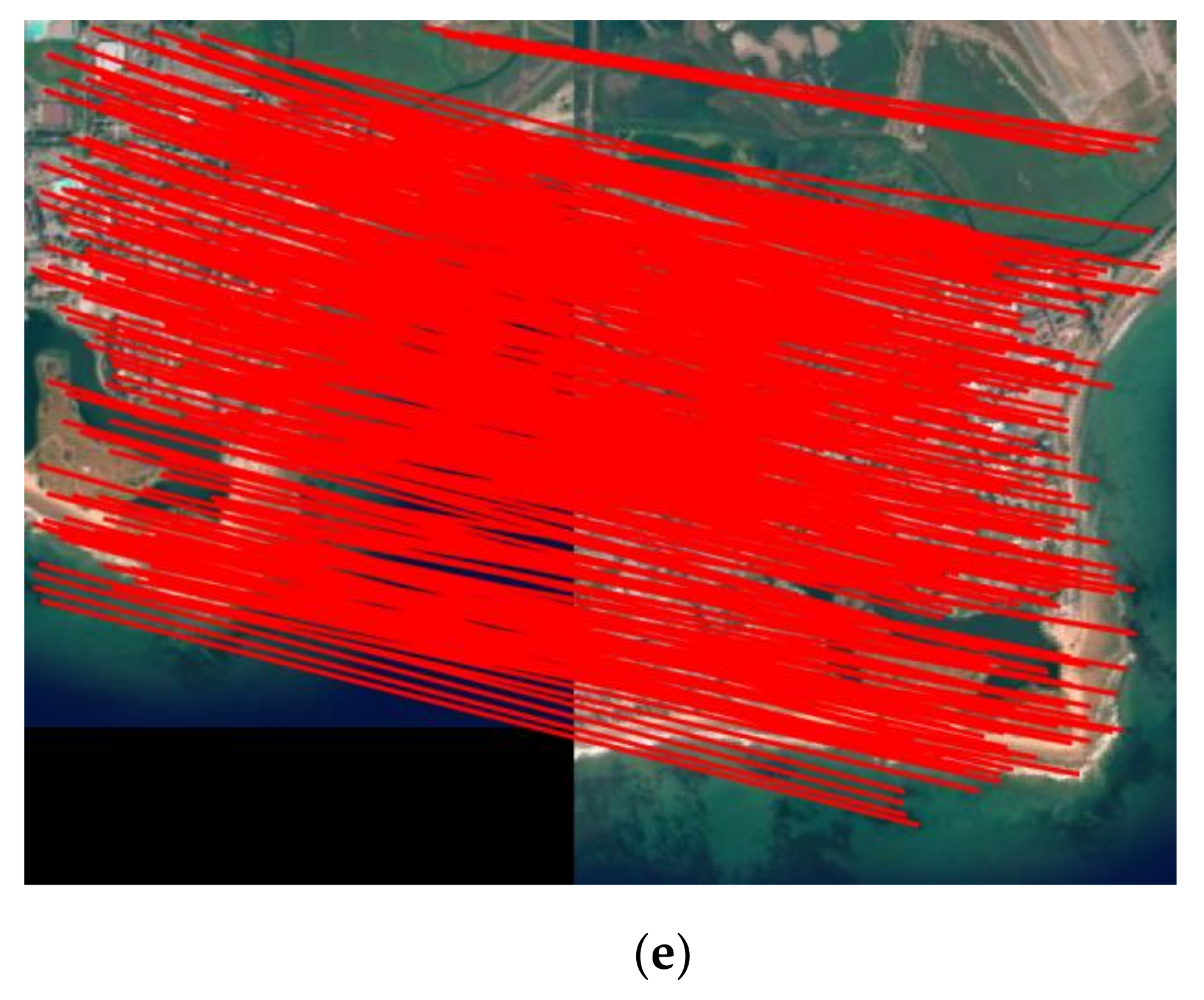

3. Experimental Results and Analysis

3.1. Data

3.2. Experimental Evaluations

3.2.1. Number of Correct Matches

3.2.2. Registration Accuracy

3.2.3. Total Time

3.3. Results Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zitová, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.
2. Xiong, B.; Jiang, W.; Li, D.; Qi, M. Voxel Grid-Based Fast Registration of Terrestrial Point Cloud. Remote Sens. 2021, 13, 1905.
3. Ayhan, B.; Dao, M.; Kwan, C.; Chen, H.-M.; Bell, J.F.; Kidd, R. A Novel Utilization of Image Registration Techniques to Process Mastcam Images in Mars Rover with Applications to Image Fusion, Pixel Clustering, and Anomaly Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4553–4564.
4. Lu, J.; Jia, H.; Li, T.; Li, Z.; Ma, J.; Zhu, R. An Instance Segmentation Based Framework for Large-Sized High-Resolution Remote Sensing Images Registration. Remote Sens. 2021, 13, 1657.
5. Wu, S.; Zhong, R.; Li, Q.; Qiao, K.; Zhu, Q. An Interband Registration Method for Hyperspectral Images Based on Adaptive Iterative Clustering. Remote Sens. 2021, 13, 1491.
6. Yu, N.; Wu, M.J.; Liu, J.X.; Zheng, C.H.; Xu, Y. Correntropy-based hypergraph regularized NMF for clustering and feature selection on multi-cancer integrated data. IEEE Trans. Cybern. 2020.
7. Cai, G.; Su, S.; Leng, C.; Wu, Y.; Lu, F. A Robust Transform Estimator Based on Residual Analysis and Its Application on UAV Aerial Images. Remote Sens. 2018, 10, 291.
8. Zhang, H.P.; Leng, C.C.; Yan, X.; Cai, G.R.; Pei, Z.; Yu, N.G.; Basu, A. Remote sensing image registration based on local affine constraint with circle descriptor. IEEE Geosci. Remote Sens. Lett. 2020.
9. Zhang, J.; Ma, W.P.; Wu, Y.; Jiao, L.C. Multimodal remote sensing image registration based on image transfer and local features. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1210–1214.
10. Li, Q.L.; Wang, G.Y.; Liu, J.G.; Chen, S.B. Robust scale-invariant feature matching for remote sensing image registration. IEEE Geosci. Remote Sens. Lett. 2009, 6, 287–291.
11. Ma, W.P.; Zhang, J.; Wu, Y.; Jiao, L.C.; Zhu, H.; Zhao, W. A novel two-step registration method for remote sensing images based on deep and local features. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4834–4843.
12. Awrangjeb, M.; Lu, G.J. Techniques for efficient and effective transformed image identification. J. Vis. Commun. Image Represent. 2009, 20, 511–520.
13. Ye, Y.X.; Shan, J.; Bruzzone, L.; Shen, L. Robust registration of multimodal remote sensing image based on structural similarity. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2941–2958.
14. Ye, Y.X.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and robust matching for multimodal remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.
15. Wu, Y.; Ma, W.P.; Gong, M.G.; Su, L.Z.; Jiao, L.C. A novel point-matching algorithm based on fast sample consensus for image registration. IEEE Geosci. Remote Sens. Lett. 2015, 12, 43–47.
16. Ma, J.Y.; Zhao, J.; Tian, J.W.; Yuille, A.L.; Tu, Z.W. Robust point matching via vector field consensus. IEEE Trans. Image Process. 2014, 23, 1706–1721.
17. Ma, J.Y.; Zhao, J.; Jiang, J.J.; Zhou, H.B.; Guo, X.J. Locality preserving matching. Int. J. Comput. Vis. 2019, 127, 512–531.
18. Ma, J.Y.; Jiang, J.J.; Zhou, H.B.; Zhao, J.; Guo, X.J. Guided locality preserving feature matching for remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4435–4447.
19. Jiang, X.Y.; Jiang, J.J.; Fan, A.X.; Wang, Z.Y.; Ma, J.Y. Multiscale locality and rank preservation for robust feature matching of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6462–6472.
20. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.
21. Ma, W.P.; Wen, Z.L.; Wu, Y.; Jiao, L.C.; Gong, M.G.; Zheng, Y.F.; Liu, L. Remote sensing image registration with modified SIFT and enhanced feature matching. IEEE Geosci. Remote Sens. Lett. 2017, 14, 3–7.
22. Chang, H.H.; Wu, G.L.; Chiang, M.H. Remote sensing image registration based on modified SIFT and feature slope grouping. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1363–1367.
23. Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.
24. Hong, W.W.J.; Tang, Y.P. Image matching for geomorphic measurement based on SIFT and RANSAC methods. In Proceedings of the 2008 International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; Volume 2, pp. 317–320.
25. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features. Comput. Vis. Image Underst. 2008, 110, 404–417.
26. Dellinger, F.; Delon, J.; Gousseau, Y.; Michel, J.; Tupin, F. SAR-SIFT: A SIFT-like algorithm for SAR images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 453–466.
27. Singh, M.; Mandal, M.; Basu, A. Visual gesture recognition for ground air traffic control using the Radon transform. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 2586–2591.
28. Singh, M.; Mandal, M.; Basu, A. Pose recognition using the Radon transform. In Proceedings of the IEEE Midwest Symposium on Circuits and Systems, Covington, KY, USA, 7–10 August 2005; pp. 1091–1094.

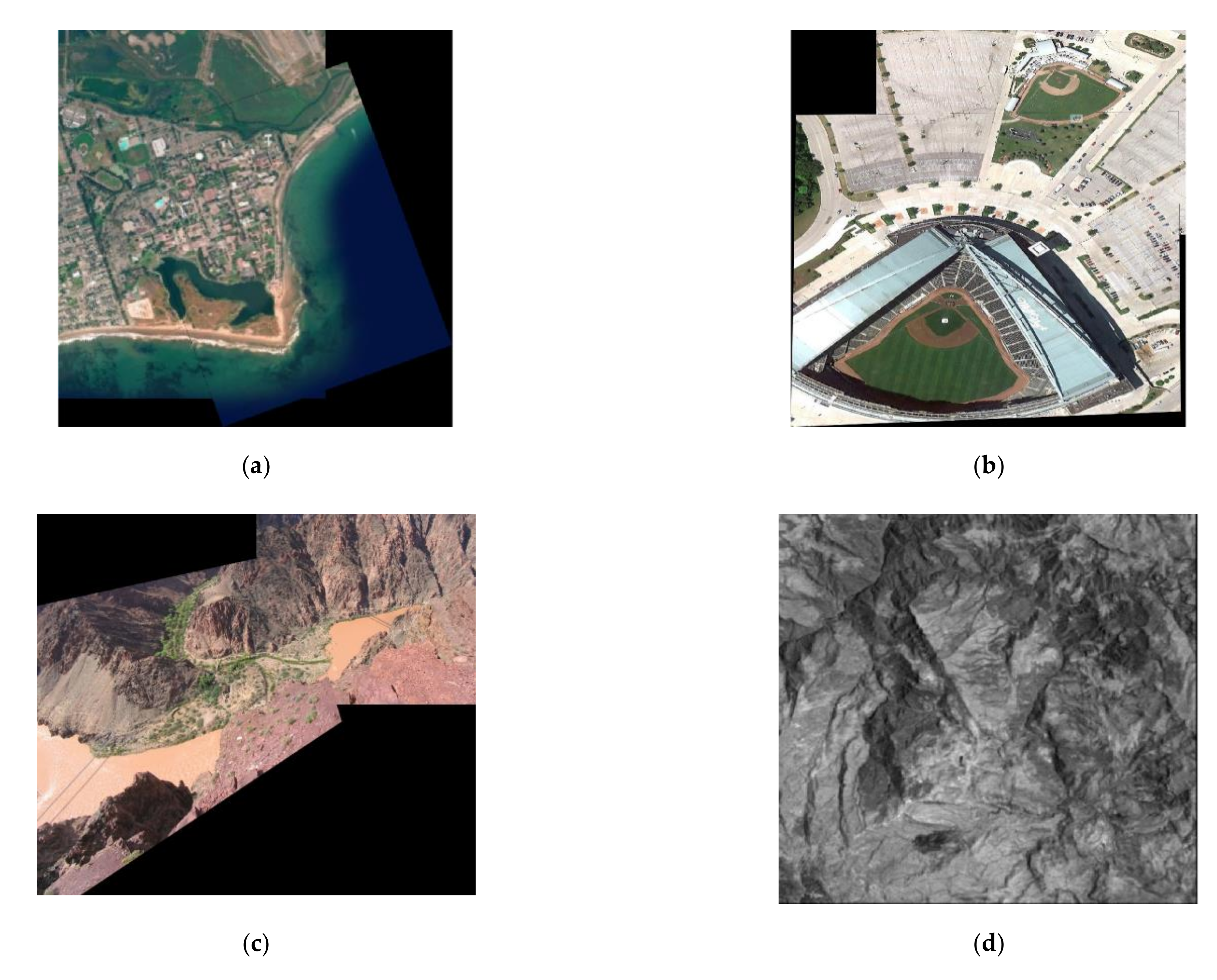

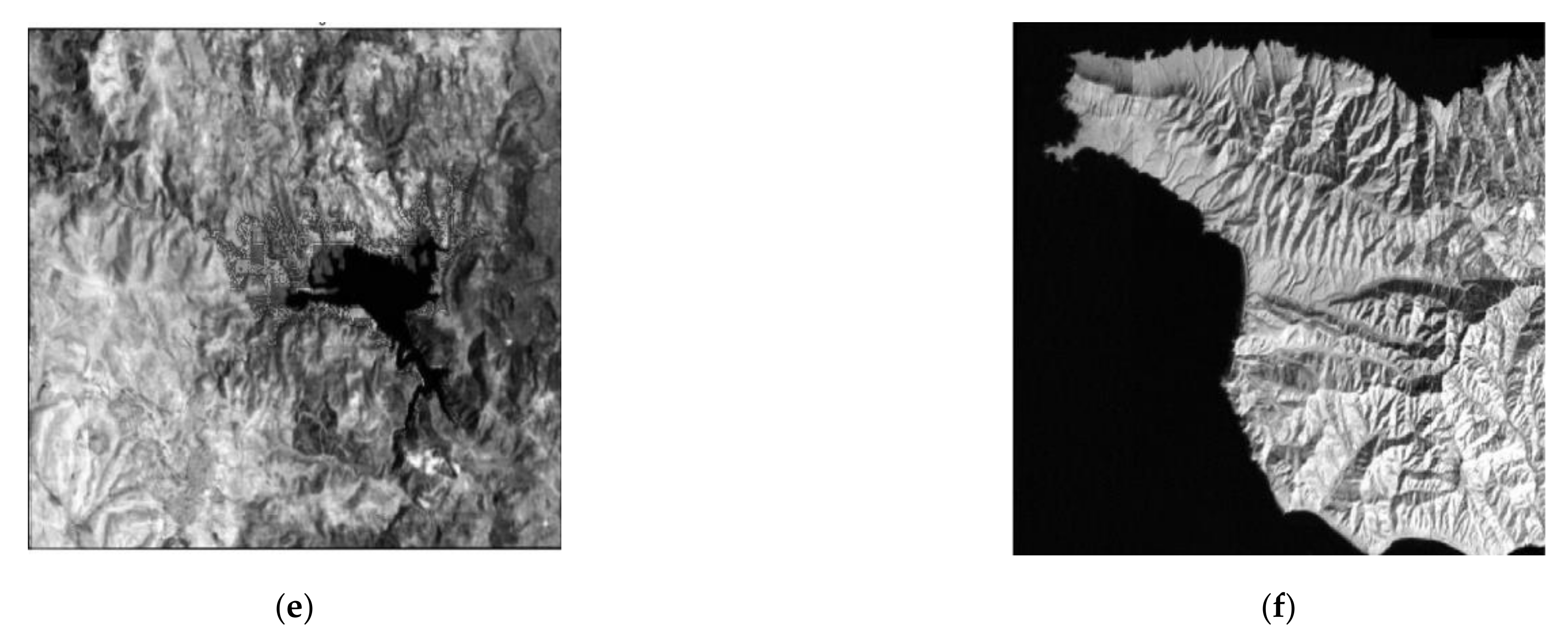

| Pair | Sensor and Data | Size | Image Characteristic |

|---|---|---|---|

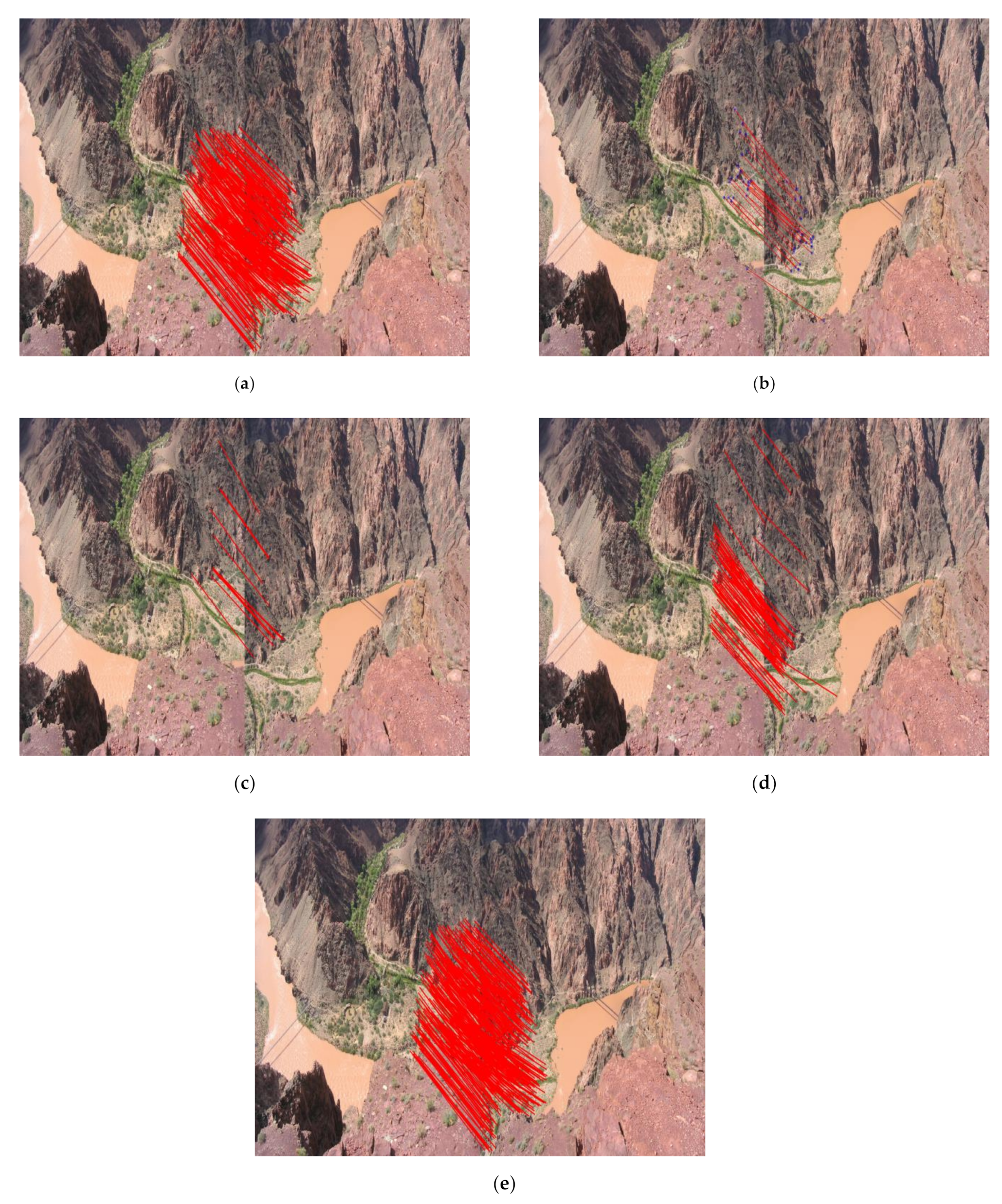

| Pair-A | Remote sensing image data set | 306 × 386 | Geographic images |

| Remote sensing image data set | 472 × 355 | ||

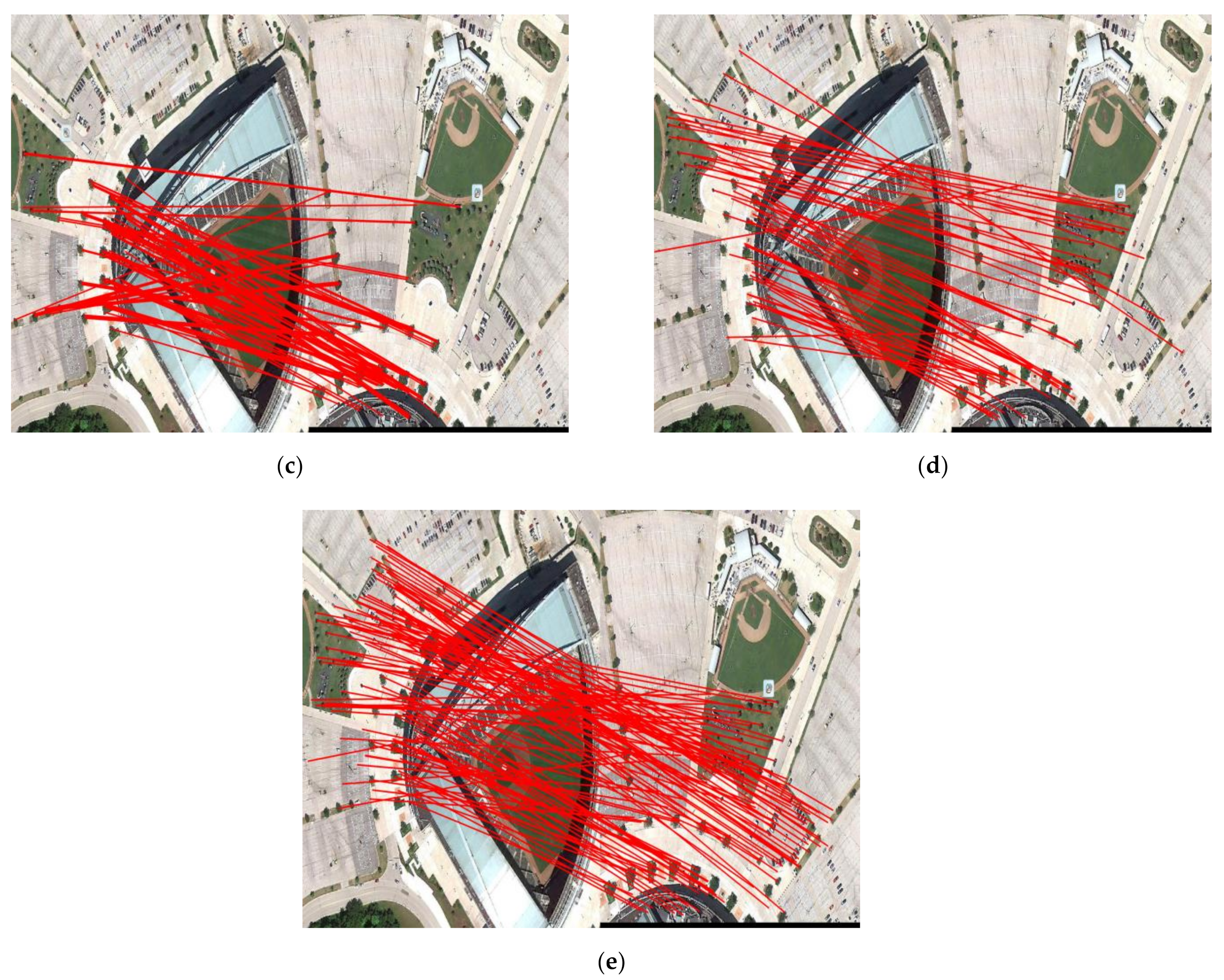

| Pair-B | ADS 40, SH52/August 6, 2008 | 705 | Stadium in Stuttgart, Germany |

| ADS 40, SH52/August 6, 2008 | 695 | ||

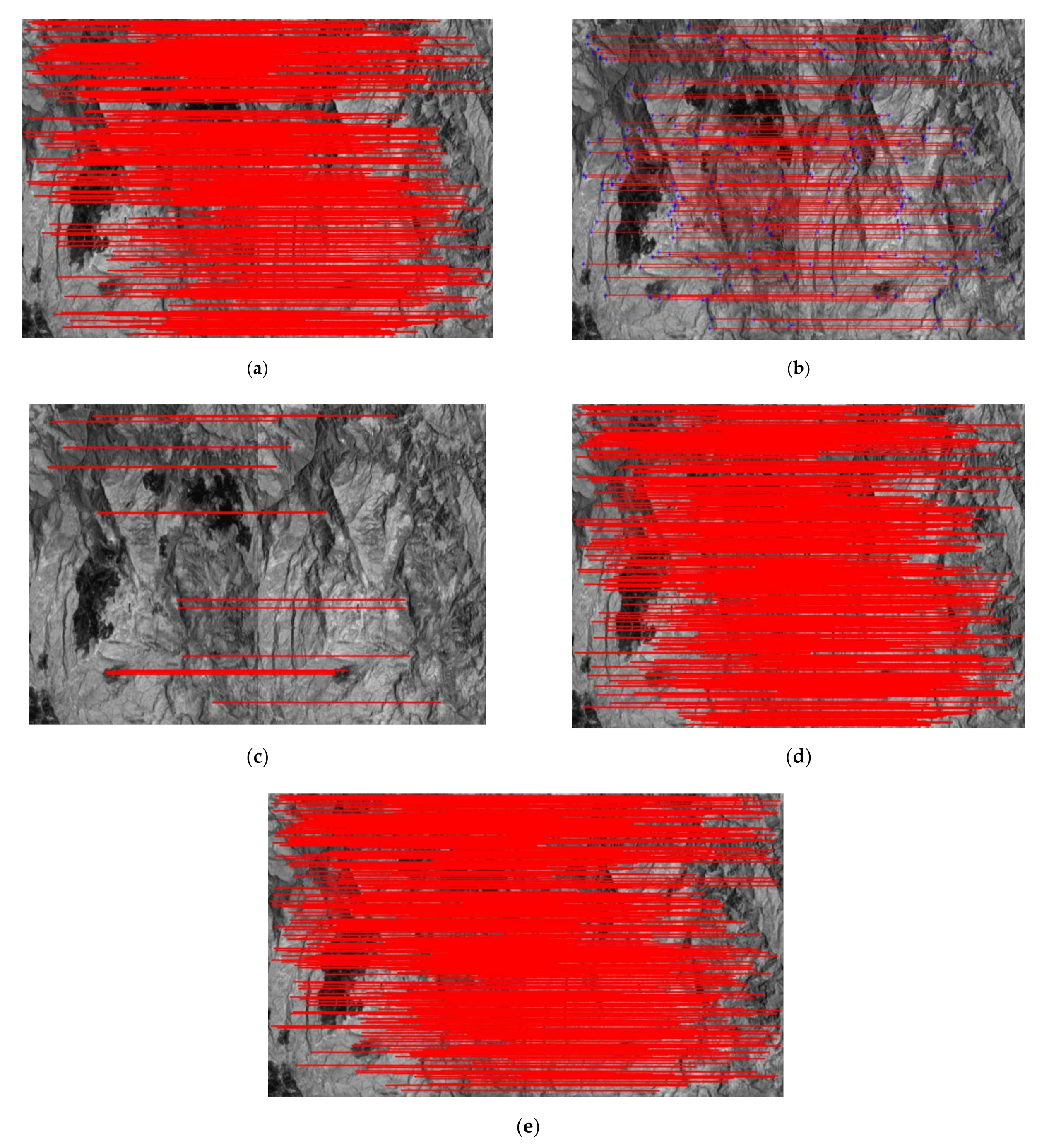

| Pair-C | Remote sensing image data set | 1024 | mountain chain |

| Remote sensing image data set | 1024 | ||

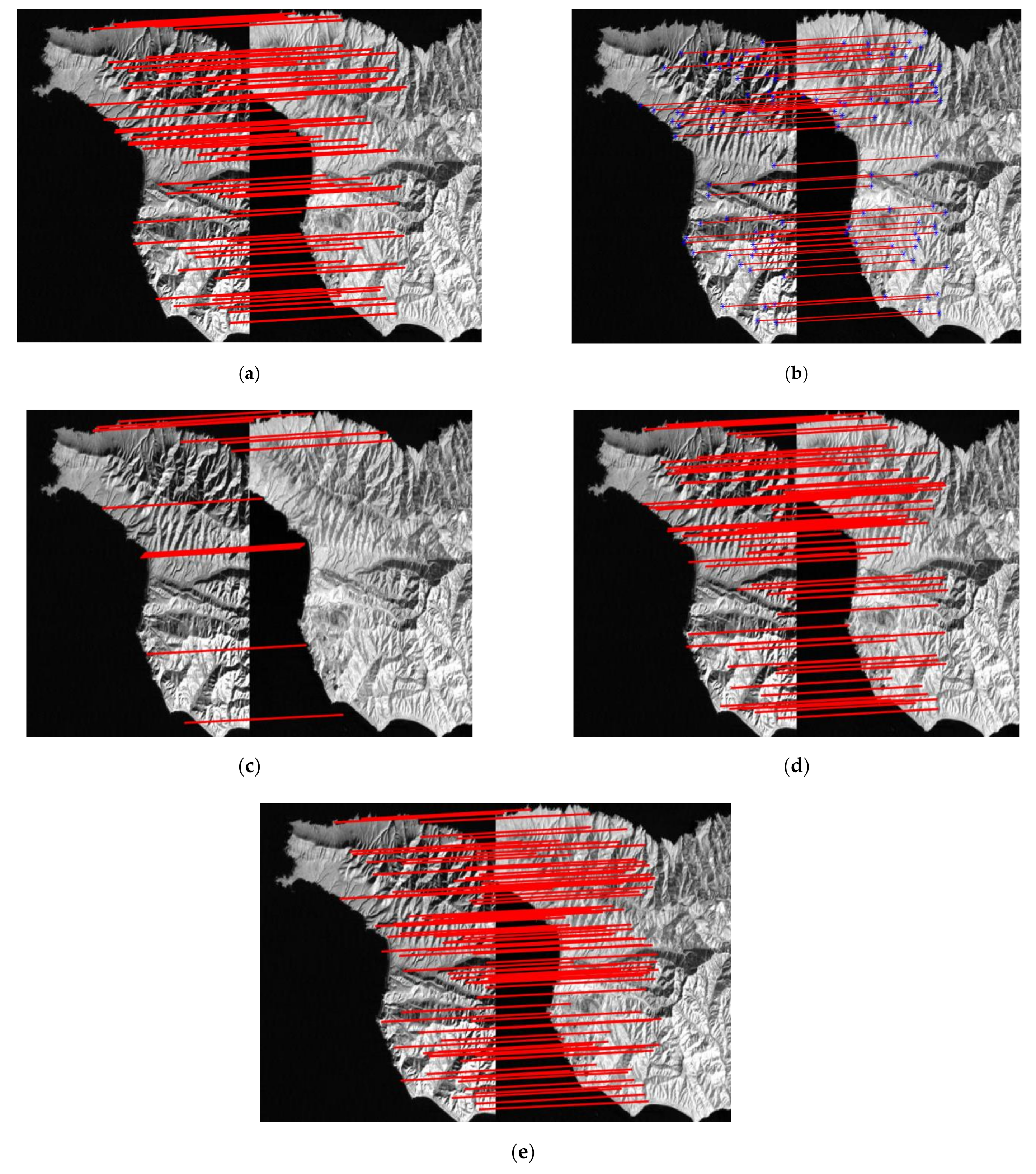

| Pair-D | Landsat-7/ April, 2000 | 512 | Mexico |

| Landsat-7/May, 2002 | 512 | ||

| Pair-E | Landsat-5/September, 1995 | 300 | Sardinia |

| Landsat-5/July, 1996 | 300 | ||

| Pair-F | Remote sensing image data set | 400 | Geographic images |

| Remote sensing image data set | 400 |

Results for Pair-A:

| Methods | SIFT+RANSAC | SURF | SAR-SIFT | PSO-SIFT | Our Method |
|---|---|---|---|---|---|
| Number of Matches/Key Points | 307/350 | 70/99 | 41/96 | 298/561 | 325/360 |
| Time (s) | 7.153 | 6.406 | 6.255 | 9.026 | 10.181 |
| RMSE | 0.3226 | 0.5057 | 0.6645 | 0.3217 | 0.3980 |

Results for Pair-B:

| Methods | SIFT+RANSAC | SURF | SAR-SIFT | PSO-SIFT | Our Method |
|---|---|---|---|---|---|
| Number of Matches/Key Points | 73/616 | 3/212 | 102/392 | 54/455 | 110/393 |
| Time (s) | 10.239 | 10.59 | 18.478 | 18.825 | 17.456 |
| RMSE | 0.5850 | * | 0.5997 | 0.6550 | 0.7608 |

Results for Pair-C:

| Methods | SIFT+RANSAC | SURF | SAR-SIFT | PSO-SIFT | Our Method |
|---|---|---|---|---|---|
| Number of Matches/Key Points | 283/917 | 22/280 | 14/132 | 77/883 | 329/762 |
| Time (s) | 32.701 | 12.058 | 22.894 | 169.662 | 51.385 |
| RMSE | 0.5024 | 0.5534 | 0.6440 | 0.6107 | 0.6143 |

Results for Pair-D:

| Methods | SIFT+RANSAC | SURF | SAR-SIFT | PSO-SIFT | Our Method |
|---|---|---|---|---|---|
| Number of Matches/Key Points | 449/971 | 122/237 | 18/117 | 542/1196 | 603/975 |
| Time (s) | 14.151 | 8.431 | 9.556 | 50.271 | 28.158 |
| RMSE | 0.5988 | 0.5909 | 0.5381 | 0.6121 | 0.7449 |

Results for Pair-E:

| Methods | SIFT+RANSAC | SURF | SAR-SIFT | PSO-SIFT | Our Method |
|---|---|---|---|---|---|
| Number of Matches/Key Points | 111/336 | 65/198 | 11/103 | 112/345 | 166/325 |
| Time (s) | 6.755 | 8.65 | 7.091 | 12.323 | 13.089 |
| RMSE | 0.6168 | 0.5535 | 0.5293 | 0.6444 | 0.8219 |

Results for Pair-F:

| Methods | SIFT+RANSAC | SURF | SAR-SIFT | PSO-SIFT | Our Method |
|---|---|---|---|---|---|
| Number of Matches/Key Points | 83/292 | 59/181 | 16/141 | 78/372 | 97/244 |
| Time (s) | 6.624 | 9.50 | 8.277 | 10.833 | 11.661 |
| RMSE | 0.5734 | 0.5602 | 0.4691 | 0.6388 | 0.7570 |

| Methods (matches/RMSE/time in s) | Pair-A | Pair-B | Pair-C | Pair-D | Pair-E | Pair-F |
|---|---|---|---|---|---|---|
| SIFT+RANSAC | 307/0.3226/7.153 | 73/0.5850/10.239 | 283/0.5024/32.701 | 449/0.5988/14.151 | 111/0.6168/6.755 | 83/0.5734/6.624 |
| SURF | 70/0.5057/6.406 | 3/*/10.59 | 22/0.5534/12.058 | 122/0.5909/8.431 | 65/0.5535/8.65 | 59/0.5602/9.50 |
| SAR-SIFT | 41/0.6645/6.255 | 102/0.5997/18.478 | 14/0.6440/22.894 | 18/0.5381/9.556 | 11/0.5293/7.091 | 16/0.4691/8.277 |
| PSO-SIFT | 298/0.3217/9.026 | 54/0.6550/18.825 | 77/0.5107/169.662 | 542/0.6121/50.271 | 112/0.6444/12.323 | 78/0.6388/10.833 |
| Our method | 325/0.3980/10.181 | 110/0.7608/17.456 | 329/0.6143/51.385 | 627/0.7463/26.51 | 176/0.8796/13.741 | 97/0.7570/11.661 |
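The RMSE figures in the tables summarise registration accuracy. As a rough illustration, the sketch below computes a root-mean-square error in the common way, as the residual distance between matched points after mapping one set through a homography. It assumes this standard definition rather than the paper's exact formula, which is not reproduced in this text, and the function name `registration_rmse` is hypothetical.

```python
import numpy as np

def registration_rmse(H: np.ndarray, src: np.ndarray, dst: np.ndarray) -> float:
    """RMSE (in pixels) between dst and src mapped through the 3x3 homography H.

    Assumed definition: sqrt(mean(||H(src_i) - dst_i||^2)) over all matched pairs.
    """
    ones = np.ones((len(src), 1))
    projected = np.hstack([src, ones]) @ H.T           # homogeneous projection
    projected = projected[:, :2] / projected[:, 2:3]   # back to pixel coordinates
    squared_residuals = np.sum((projected - dst) ** 2, axis=1)
    return float(np.sqrt(squared_residuals.mean()))
```

Under this definition a value below 1 means that, on average, matched points land within one pixel of each other after the transformation.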

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hong, Y.; Leng, C.; Zhang, X.; Pei, Z.; Cheng, I.; Basu, A. HOLBP: Remote Sensing Image Registration Based on Histogram of Oriented Local Binary Pattern Descriptor. Remote Sens. 2021, 13, 2328. https://doi.org/10.3390/rs13122328
