A Deep Learning Approach to an Enhanced Building Footprint and Road Detection in High-Resolution Satellite Imagery

Abstract

1. Introduction

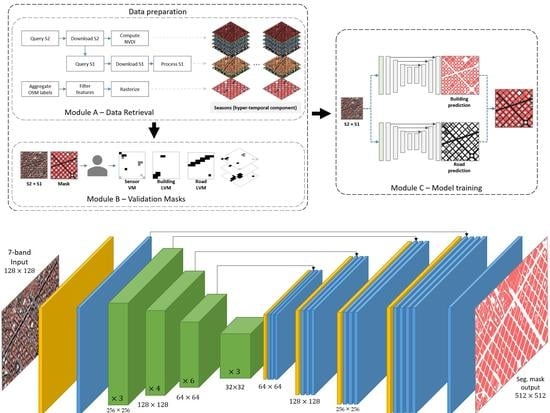

- S2 (multi-spectral) data are fused with S1 (SAR) data, making the most of both sensors for building and road detection at a higher resolution (2.5 m) than that of the input (10 m).

- A methodology to generate detailed remote sensing data-sets for building footprint and road network detection using OSM has been developed. Validation masks are proposed to deal with labeling errors.

- Taking advantage of the short revisit times of the Sentinel missions, a low-cost data augmentation technique is proposed.

- The standard U-Net [15] architecture has been slightly modified, giving rise to fine-grained segmentation masks. Accordingly, the resulting maps have four times the input's spatial resolution (2.5 m instead of 10 m).
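The fusion and temporal-augmentation ideas listed above can be illustrated with a small input pipeline. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes early fusion by channel concatenation of co-registered S2 and S1 tiles, and the band lists `S2_BANDS`/`S1_BANDS` and the helpers `fuse_s1_s2` and `sample_acquisition` are hypothetical names chosen for illustration.

```python
import numpy as np

# Hypothetical band selection (assumption): four 10 m S2 bands plus both S1 polarizations.
S2_BANDS = ("B2", "B3", "B4", "B8")
S1_BANDS = ("VV", "VH")


def fuse_s1_s2(s2: np.ndarray, s1: np.ndarray) -> np.ndarray:
    """Early fusion: stack co-registered S2 and S1 tiles along the channel axis.

    s2 has shape (len(S2_BANDS), H, W) and s1 has shape (len(S1_BANDS), H, W),
    both on the same 10 m grid. Returns a (C, H, W) float32 array.
    """
    if s2.shape[1:] != s1.shape[1:]:
        raise ValueError("S1 and S2 tiles must share the same spatial grid")
    return np.concatenate([s2, s1], axis=0).astype(np.float32)


def sample_acquisition(acquisitions: dict, rng: np.random.Generator):
    """Low-cost temporal augmentation: draw one available acquisition of a tile.

    `acquisitions` maps a date key (e.g. "2018/06") to an (s2, s1) pair, or to
    None when that date has no valid data. Repeated calls may return different
    dates, so the same location is seen under different conditions across epochs.
    """
    valid = sorted(key for key, value in acquisitions.items() if value is not None)
    if not valid:
        raise ValueError("tile has no valid acquisition")
    key = valid[rng.integers(len(valid))]
    s2, s1 = acquisitions[key]
    return key, fuse_s1_s2(s2, s1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tile = {
        "2018/06": (np.zeros((4, 64, 64)), np.zeros((2, 64, 64))),
        "2018/09": None,  # no valid data for this date
    }
    key, x = sample_acquisition(tile, rng)
    print(key, x.shape)  # 2018/06 (6, 64, 64)
```

Drawing a different date per epoch only requires storing the extra acquisitions of each location, which is what keeps this kind of augmentation cheap compared with labeling new areas.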

2. Related Works

2.1. Deep Learning, Convolutional Neural Networks, and Semantic Segmentation

2.2. Deep Learning in Remote Sensing: Applications Using Sentinel-1 and Sentinel-2

2.3. Semantic Segmentation of Buildings and Roads

2.4. Open Data for Deep Learning Labeling

- Omission noise: an object that is clearly visible in a satellite/aerial image but has not been labeled. This type of noise is particularly noticeable in the building labels, since many rural areas are not completely labeled in OSM. Small roads and alleys also suffer from omission noise, as they are commonly left out of maps.

- Registration noise: an object that has been labeled but whose location in the map is inaccurate. Labeling misalignments are more noticeable in aerial imagery because of its greater spatial resolution.
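Registration noise penalizes strict pixel-wise metrics even when an object is detected only a pixel away from its label, which is one reason to also report relaxed scores (the result tables include "Rlx." F-score and IoU columns). Their exact definition is not given in this section; the sketch below assumes a common buffer-based relaxation, in which a predicted pixel counts as correct if a labeled pixel lies within `radius` pixels, and a labeled pixel counts as recovered if a predicted pixel lies within `radius` pixels. The function names and the square (Chebyshev) neighbourhood are assumptions, and a relaxed IoU is not attempted because its definition varies between works.

```python
import numpy as np


def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation with a (2*radius+1) x (2*radius+1) square window, via shifted ORs."""
    h, w = mask.shape
    padded = np.pad(mask.astype(bool), radius, mode="constant", constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def f_score(pred: np.ndarray, gt: np.ndarray, radius: int = 0) -> float:
    """F-score of a binary mask; radius=0 is the strict score, radius>0 a relaxed one."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    # Precision: fraction of predicted pixels with a labeled pixel within `radius`.
    precision = (pred & dilate(gt, radius)).sum() / max(pred.sum(), 1)
    # Recall: fraction of labeled pixels with a predicted pixel within `radius`.
    recall = (gt & dilate(pred, radius)).sum() / max(gt.sum(), 1)
    if precision + recall == 0:
        return 0.0
    return float(2 * precision * recall / (precision + recall))


def iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Strict intersection over union, i.e. TP / (TP + FP + FN)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = (pred | gt).sum()
    return float((pred & gt).sum() / union) if union else 1.0


if __name__ == "__main__":
    gt = np.zeros((10, 10), dtype=bool)
    gt[:, 5] = True    # a 1-pixel-wide road in the labels
    pred = np.zeros_like(gt)
    pred[:, 6] = True  # the same road, detected one pixel to the right
    print(f_score(pred, gt), f_score(pred, gt, radius=1), iou(pred, gt))  # 0.0 1.0 0.0
```

The example shows the effect that motivates relaxed scores: a one-pixel misalignment drives the strict F-score and IoU to zero, while the relaxed F-score with a one-pixel buffer treats the detection as correct.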

3. Proposal: Enhanced Building Footprint and Road Network Detection

3.1. Data-Set Generation

3.2. Validation Masks

- Sensor validation masks: contain information about low-quality data caused by sensing issues such as clouds or shadows.

- Label-specific validation masks: address the omission noise derived from the use of open databases such as OSM. A separate validation mask is generated for each target label (building and road), so that the masks can be reused when building up different legends.
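One way the validation masks above can enter training is as per-pixel weights that remove unreliable pixels from the loss. The sketch below assumes a binary cross-entropy loss purely for illustration (this section does not state which loss the authors use) and assumes that a pixel is kept only if both the sensor mask and the label-specific mask accept it; `masked_bce` and `combined_mask` are hypothetical names.

```python
import numpy as np


def combined_mask(sensor_vm: np.ndarray, label_vm: np.ndarray) -> np.ndarray:
    """Keep a pixel only if both the sensor mask and the label-specific mask accept it."""
    return np.logical_and(sensor_vm, label_vm)


def masked_bce(prob: np.ndarray, target: np.ndarray, valid: np.ndarray, eps: float = 1e-7) -> float:
    """Binary cross-entropy averaged over valid pixels only.

    prob:   predicted probabilities, shape (H, W)
    target: binary labels, shape (H, W)
    valid:  boolean validity mask, shape (H, W); invalid pixels contribute nothing
    """
    prob = np.clip(prob, eps, 1 - eps)  # avoid log(0)
    per_pixel = -(target * np.log(prob) + (1 - target) * np.log(1 - prob))
    n_valid = valid.sum()
    if n_valid == 0:
        return 0.0
    return float(per_pixel[valid].sum() / n_valid)


if __name__ == "__main__":
    prob = np.full((2, 2), 0.9)             # the model predicts "building" everywhere
    target = np.array([[1, 1], [0, 0]])     # bottom row: buildings missing from OSM
    svm = np.ones((2, 2), dtype=bool)       # no clouds or shadows in this toy tile
    lvm = np.array([[True, True], [False, False]])  # bottom row flagged as unreliable
    print(masked_bce(prob, target, np.ones((2, 2), dtype=bool)))
    print(masked_bce(prob, target, combined_mask(svm, lvm)))
```

Because masked pixels are excluded rather than treated as background, buildings missing from OSM in a flagged area no longer push the network towards predicting "no building" there. Keeping the sensor and label-specific masks separate, as in the text, lets the same sensor mask be combined with whichever label mask a given legend needs.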

3.3. Network Implementation

- Deconvolution (up-sampling at the output): two extra deconvolutional layers are appended to the decoder (Figure 3a). Note that these layers cannot receive contextual information through skip connections, since there are no same-level layers on the contraction path. However, the computational cost is lower than that of increasing the resolution at the input.

- Input re-scaling (up-sampling at the input): the input resolution is increased before the feature extractor (Figure 3b). On the one hand, this approach keeps the network architecture unaltered, avoiding the loss of pattern information. On the other hand, the larger input has a negative effect on the computational cost. To enhance the input resolution, two classical interpolation algorithms (bicubic and nearest neighbor) have been considered, as well as a state-of-the-art S2 super-resolution approach (Galar et al. [63]).
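The resolution arithmetic behind both options can be checked with a few lines of NumPy. Going from 10 m to 2.5 m is a factor of 4 per axis, so input re-scaling makes the encoder process 16 times as many pixels, while two stride-2 deconvolutions appended to the decoder reach the same ×4 factor only at the output. This sketch implements nearest-neighbour up-sampling only (bicubic interpolation and the super-resolution network of Galar et al. [63] are not reproduced), and the kernel size 2 and stride 2 of the deconvolutions are assumptions; the helper names are hypothetical.

```python
import numpy as np


def nearest_upsample(x: np.ndarray, factor: int = 4) -> np.ndarray:
    """Nearest-neighbour up-sampling of a (C, H, W) array: each pixel becomes a factor x factor block."""
    return np.repeat(np.repeat(x, factor, axis=1), factor, axis=2)


def transposed_conv_size(size: int, kernel: int, stride: int,
                         padding: int = 0, output_padding: int = 0) -> int:
    """Output length of a transposed convolution along one axis (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def deconv_stack_size(size: int, n_layers: int = 2, kernel: int = 2, stride: int = 2) -> int:
    """Spatial size after `n_layers` transposed convolutions appended to the decoder."""
    for _ in range(n_layers):
        size = transposed_conv_size(size, kernel, stride)
    return size


if __name__ == "__main__":
    tile = np.zeros((6, 64, 64))            # a fused 10 m input tile
    print(nearest_upsample(tile).shape)     # (6, 256, 256): option (b), up-sampling at the input
    print(deconv_stack_size(64))            # 256: option (a), up-sampling at the output
```

Both paths produce a 256 × 256 map for a 64 × 64 input; the difference is where the extra pixels appear, which is what drives the trade-off between computational cost and access to skip connections described above.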

3.4. Network Training

4. Experimental Framework

4.1. Data-Set

4.2. Training Details

4.3. Performance Measures and Evaluation

4.4. Summary of Experiments

- What is the advantage of combining optical and SAR data for building and road detection?

- Can the output resolution be greater than the input resolution?

- How much can the performance be pushed with sensor and label-specific validation masks?

5. Experimental Study

- Experiment 1: On the goodness of S1 and S2 data fusion: assesses the suitability of optical (S2) and SAR (S1) data, as well as their fusion for building and road extraction tasks.

- Experiment 2: Enhancing the output resolution: evaluates different approaches for enhancing the output resolution up to 2.5 m.

- Experiment 3: Assessing the impact of validation masks: studies the impact of validation masks on model performance.

5.1. Experiment 1: On the Goodness of S1 and S2 Data Fusion

5.2. Experiment 2: Enhancing the Output Resolution

5.3. Experiment 3: Assessing the Impact of Validation Masks

5.4. Discussion

- Combining optical (S2) and radar (S1) data helps the network cope with complex scenarios, reducing the negative effect of color spectrum variations.

- Enhancing the resolution at the output gives the model more room to define object edges precisely. Moreover, with U-Net-based architectures, enhancing the resolution at the input lets the model take advantage of skip connections, resulting in more accurate segmentation masks.

- Validation masks are a simple yet effective artifact for increasing data quality, drastically reducing hand-labeling costs.

6. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| DEM | Digital Elevation Model |
| EO | Earth Observation |
| ESA | European Space Agency |
| FCN | Fully Convolutional Network |
| FN | False Negative |
| FP | False Positive |
| GRD | Ground Range Detected |
| IoU | Intersection over Union |
| IW | Interferometric Wide |
| LVM | Label-specific Validation Mask |
| mIoU | Mean Intersection over Union |
| NDVI | Normalized Difference Vegetation Index |
| OSM | OpenStreetMap |
| S1 | Sentinel-1 |
| S2 | Sentinel-2 |
| SAR | Synthetic Aperture Radar |
| SciHub | Sentinels Scientific Data Hub |
| SNAP | Sentinel Application Platform |
| SRTM | Shuttle Radar Topography Mission |
| SVM | Sensor Validation Mask |
| SWIR | Short-Wave Infrared |
| TN | True Negative |
| TP | True Positive |
| TTA | Test-Time Augmentation |
| vIoU | Visual Intersection over Union |
| VM | Validation Mask |
| VNIR | Visible/Near Infrared |

References

- Ball, J.; Anderson, D.; Chan, C.S. A Comprehensive Survey of Deep Learning in Remote Sensing: Theories, Tools and Challenges for the Community. J. Appl. Remote Sens. 2017, 11, 1–54.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Zhang, L.; Zhang, L.; Du, B. Deep Learning for Remote Sensing Data: A Technical Tutorial on the State of the Art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- European Space Agency. Copernicus Programme. Available online: https://www.copernicus.eu (accessed on 1 August 2021).

- Drusch, M. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.

- Potin, P.; Rosich, B.; Miranda, N.; Grimont, P.; Shurmer, I.; O’Connell, A.; Krassenburg, M.; Gratadour, J.-B. Copernicus Sentinel-1 Constellation Mission Operations Status. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Ienco, D.; Interdonato, R.; Gaetano, R.; Ho Tong Minh, D. Combining Sentinel-1 and Sentinel-2 Satellite Image Time Series for land cover mapping via a multi-source deep learning architecture. ISPRS J. Photogramm. Remote Sens. 2019, 158, 11–22.

- Li, X.; Lei, L.; Sun, Y.; Li, M.; Kuang, G. Collaborative Attention-Based Heterogeneous Gated Fusion Network for Land Cover Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3829–3845.

- Tu, Y. Improved Mapping Results of 10 m Resolution Land Cover Classification in Guangdong, China Using Multisource Remote Sensing Data with Google Earth Engine. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5384–5397.

- Zhu, X.X. So2Sat LCZ42: A Benchmark Data Set for the Classification of Global Local Climate Zones. IEEE Geosci. Remote Sens. Mag. 2020, 8, 76–89.

- Schmitt, M.; Hughes, L.; Qiu, C.; Zhu, X. SEN12MS—A Curated Dataset of Georeferenced Multi-Spectral Sentinel-1/2 Imagery for Deep Learning and Data Fusion. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W7, 153–160.

- Feng, Y.; Yang, C.; Sester, M. Multi-Scale Building Maps from Aerial Imagery. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B3-2020, 41–47.

- Bengio, Y. Deep learning of representations: Looking forward. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2013.

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2015.

- Tao, A.; Sapra, K.; Catanzaro, B. Hierarchical Multi-Scale Attention for Semantic Segmentation. arXiv 2020, arXiv:2005.10821.

- Li, J.; Roy, D.P. A Global Analysis of Sentinel-2A, Sentinel-2B and Landsat-8 Data Revisit Intervals and Implications for Terrestrial Monitoring. Remote Sens. 2017, 9, 902.

- Zhang, C. An object-based convolutional neural network (OCNN) for urban land use classification. Remote Sens. Environ. 2018, 216, 57–70.

- Mnih, V.; Hinton, G. Learning to Label Aerial Images from Noisy Data. In Proceedings of the 29th International Conference on International Conference on Machine Learning, ICML’12, Edinburgh, UK, 26 June–1 July 2012.

- Sumbul, G.; Charfuelan, M.; Demir, B.; Markl, V. Bigearthnet: A Large-Scale Benchmark Archive for Remote Sensing Image Understanding. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5901–5904.

- Alemohammad, H.; Booth, K. LandCoverNet: A global benchmark land cover classification training dataset. arXiv 2020, arXiv:2012.03111.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657.

- Khryashchev, V.; Pavlov, V.; Ostrovskaya, A.; Larionov, R. Forest Areas Segmentation on Aerial Images by Deep Learning. In Proceedings of the 2019 IEEE East-West Design Test Symposium (EWDTS), Batumi, Georgia, 13–16 September 2019.

- Dong, S.; Pang, L.; Zhuang, Y.; Liu, W.; Yang, Z.; Long, T. Optical Remote Sensing Water-Land Segmentation Representation Based on Proposed SNS-CNN Network. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Haklay, M.; Weber, P. OpenStreetMap: User-Generated Street Maps. IEEE Pervasive Comput. 2008, 7, 12–18.

- Xu, Y.; Goodacre, R. On Splitting Training and Validation Set: A Comparative Study of Cross-Validation, Bootstrap and Systematic Sampling for Estimating the Generalization Performance of Supervised Learning. J. Anal. Test. 2018, 2, 249–262.

- Dosovitskiy, A. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Wang, W.; Huang, Y.; Wang, Y.; Wang, L. Generalized Autoencoder: A Neural Network Framework for Dimensionality Reduction. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 496–503.

- Helber, P.; Bischke, B.; Hees, J.; Dengel, A. Towards a Sentinel-2 Based Human Settlement Layer. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Mendili, L.; Puissant, A.; Chougrad, M.; Sebari, I. Towards a Multi-Temporal Deep Learning Approach for Mapping Urban Fabric Using Sentinel 2 Images. Remote Sens. 2020, 12, 423.

- Lary, D.J.; Alavi, A.H.; Gandomi, A.H.; Walker, A.L. Machine learning in geosciences and remote sensing. Geosci. Front. 2016, 7, 3–10.

- Hoeser, T.; Kuenzer, C. Object detection and image segmentation with deep learning on Earth observation data: A review-part I: Evolution and recent trends. Remote Sens. 2020, 12, 1667.

- Helber, P.; Bischke, B.; Dengel, A.; Borth, D. Introducing Eurosat: A Novel Dataset and Deep Learning Benchmark for Land Use and Land Cover Classification. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018.

- Valentijn, T.; Margutti, J.; van den Homberg, M.; Laaksonen, J. Multi-Hazard and Spatial Transferability of a CNN for Automated Building Damage Assessment. Remote Sens. 2020, 12, 2839.

- Sun, P.; Chen, G.; Shang, Y. Adaptive Saliency Biased Loss for Object Detection in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7154–7165.

- Krishna Vanama, V.S.; Rao, Y.S. Change Detection Based Flood Mapping of 2015 Flood Event of Chennai City Using Sentinel-1 SAR Images. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Orlíková, L.; Horák, J. Land Cover Classification Using Sentinel-1 SAR Data. In Proceedings of the 2019 International Conference on Military Technologies (ICMT), Brno, Czech Republic, 30–31 May 2019.

- Wagner, F.H. U-Net-Id, an Instance Segmentation Model for Building Extraction from Satellite Images—Case Study in the Joanópolis City, Brazil. Remote Sens. 2020, 12, 1544.

- Hui, J.; Du, M.; Ye, X.; Qin, Q.; Sui, J. Effective Building Extraction from High-Resolution Remote Sensing Images with Multitask Driven Deep Neural Network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 786–790.

- Guo, H.; Shi, Q.; Du, B.; Zhang, L.; Wang, D.; Ding, H. Scene-Driven Multitask Parallel Attention Network for Building Extraction in High-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4287–4306.

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 192–1924.

- Fan, K.; Li, Y.; Yuan, L.; Si, Y.; Tong, L. New Network Based on D-LinkNet and ResNeXt for High Resolution Satellite Imagery Road Extraction. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2599–2602.

- Radoux, J.; Chomé, G.; Jacques, D.C.; Waldner, F.; Bellemans, N.; Matton, N.; Lamarche, C.; D’Andrimont, R.; Defourny, P. Sentinel-2’s Potential for Sub-Pixel Landscape Feature Detection. Remote Sens. 2016, 8, 488.

- Rapuzzi, A.; Nattero, C.; Pelich, R.; Chini, M.; Campanella, P. CNN-Based Building Footprint Detection from Sentinel-1 SAR Imagery. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1707–1710.

- Oehmcke, S.; Thrysøe, C.; Borgstad, A.; Salles, M.A.V.; Brandt, M.; Gieseke, F. Detecting Hardly Visible Roads in Low-Resolution Satellite Time Series Data. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 2403–2412.

- Abdelfattah, R.; Chokmani, K. A semi automatic off-roads and trails extraction method from Sentinel-1 data. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3728–3731.

- Kaiser, P.; Wegner, J.D.; Lucchi, A.; Jaggi, M.; Hofmann, T.; Schindler, K. Learning Aerial Image Segmentation from Online Maps. IEEE Trans. Geosci. Remote Sens. 2017.

- Li, Q.; Shi, Y.; Huang, X.; Zhu, X.X. Building Footprint Generation by Integrating Convolution Neural Network with Feature Pairwise Conditional Random Field (FPCRF). IEEE Trans. Geosci. Remote Sens. 2020, 58, 7502–7519.

- Geofabrik GmbH. Geofabrik. Available online: https://www.geofabrik.de/ (accessed on 1 August 2021).

- Wan, T.; Lu, H.; Lu, Q.; Luo, N. Classification of High-Resolution Remote-Sensing Image Using OpenStreetMap Information. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2305–2309.

- European Space Agency. Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu/ (accessed on 1 August 2021).

- Morales-Alvarez, P.; Perez-Suay, A.; Molina, R.; Camps-Valls, G. Remote Sensing Image Classification with Large-Scale Gaussian Processes. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1103–1114.

- Kuc, G.; Chormański, J. Sentinel-2 imagery for mapping and monitoring imperviousness in urban areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-1/W2, 43–47.

- Filipponi, F. Sentinel-1 GRD Preprocessing Workflow. Proceedings 2019, 18, 11.

- European Space Agency. SNAP—ESA Sentinel Application Platform. Available online: http://step.esa.int (accessed on 1 August 2021).

- Vuolo, F.; Neuwirth, M.; Immitzer, M.; Atzberger, C.; Ng, W.T. How much does multi-temporal Sentinel-2 data improve crop type classification? Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 122–130.

- Persson, M.; Lindberg, E.; Reese, H. Tree Species Classification with Multi-Temporal Sentinel-2 Data. Remote Sens. 2018, 10, 1794.

- QGIS.Org. QGIS Geographic Information System. QGIS Association. 2021. Available online: http://www.qgis.org (accessed on 1 August 2021).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Galar, M.; Sesma, R.; Ayala, C.; Albizua, L.; Aranda, C. Super-Resolution of Sentinel-2 Images Using Convolutional Neural Networks and Real Ground Truth Data. Remote Sens. 2020, 12, 2941.

- Lin, Y.; Xu, D.; Wang, N.; Shi, Z.; Chen, Q. Road Extraction from Very-High-Resolution Remote Sensing Images via a Nested SE-Deeplab Model. Remote Sens. 2020, 12, 2985.

- Abdollahi, A.; Pradhan, B.; Alamri, A. VNet: An End-to-End Fully Convolutional Neural Network for Road Extraction from High-Resolution Remote Sensing Data. IEEE Access 2020, 8, 179424–179436.

- Taghanaki, S.A. Combo Loss: Handling Input and Output Imbalance in Multi-Organ Segmentation. Comput. Med. Imaging Graph. 2019, 75, 24–33.

- Ma, Y.-D.; Liu, Q.; Qian, Z.-B. Automated image segmentation using improved PCNN model based on cross-entropy. In Proceedings of the 2004 International Symposium on Intelligent Multimedia, Video and Speech Processing, Hong Kong, China, 20–22 October 2004; pp. 743–746.

- Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2017.

- Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- Andrade, R.B. Evaluation of Semantic Segmentation Methods for Deforestation Detection in the Amazon. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B3-2020, 1497–1505.

Trimester columns: number of valid tiles (building/road).

| Zone | Dimensions | # Tiles | 2018/06 | 2018/09 | 2018/12 | 2019/03 | Set |
|---|---|---|---|---|---|---|---|
| A Coruña | 704 × 576 | 99 | 88/86 | 88/86 | 88/78 | 88/86 | Train |
| Albacete | 1280 × 1152 | 366 | 192/360 | 192/360 | 192/360 | 192/360 | Train |
| Alicante | 1216 × 1472 | 437 | 224/414 | 246/415 | 246/415 | 246/415 | Train |
| Barakaldo | 1088 × 896 | 238 | 214/231 | 215/231 | 215/231 | -/- | Train |
| Barcelona N. | 1152 × 1728 | 486 | 203/283 | 203/283 | 201/283 | 203/283 | Test |
| Barcelona S. | 896 × 1088 | 238 | 135/116 | 137/116 | 137/116 | 137/116 | Test |
| Bilbao | 576 × 832 | 117 | 88/110 | 88/111 | 88/111 | 88/111 | Train |
| Burgos | 512 × 704 | 88 | 54/70 | 54/70 | 54/70 | 53/69 | Train |
| Cáceres | 1024 × 896 | 224 | 110/126 | 110/127 | 110/126 | 110/127 | Test |
| Cartagena | 768 × 1216 | 228 | 132/226 | 133/226 | 132/226 | 132/226 | Train |
| Castellón | 1024 × 1024 | 256 | 134/254 | 135/254 | 134/254 | 134/254 | Train |
| Córdoba | 1088 × 1792 | 476 | 194/461 | 194/461 | 194/461 | 194/461 | Train |
| Denia | 640 × 768 | 120 | 83/115 | 83/116 | 83/116 | 83/116 | Train |
| San Sebastián | 512 × 768 | 96 | 38/53 | -/- | 38/53 | 38/52 | Test |
| Ferrol | 384 × 704 | 66 | 42/27 | 42/27 | 43/27 | 42/27 | Test |
| Gijón | 704 × 832 | 143 | 20/38 | 21/38 | 20/38 | 20/38 | Test |
| Girona | 1536 × 1216 | 456 | 287/443 | 287/445 | 287/443 | -/- | Train |
| Granada | 1664 × 1600 | 650 | 203/426 | 203/427 | 204/427 | 204/426 | Test |
| Huesca | 448 × 576 | 63 | 47/63 | 47/63 | 47/63 | -/- | Train |
| León | 1216 × 768 | 228 | 53/215 | 53/215 | 53/215 | 53/215 | Train |
| Lleida | 576 × 768 | 135 | 55/131 | 55/132 | 41/105 | -/- | Test |
| Logroño | 768 × 960 | 180 | 123/178 | 123/178 | 123/178 | 123/178 | Train |
| Lugo | 768 × 576 | 108 | 14/49 | 15/49 | 15/50 | 15/49 | Test |
| Madrid N. | 1920 × 2688 | 1260 | -/- | -/- | 860/1121 | 861/1121 | Train |
| Madrid S. | 1280 × 2624 | 820 | 455/776 | 459/776 | 459/776 | 459/776 | Train |
| Majadahonda | 1472 × 1344 | 483 | 200/313 | 202/316 | 202/316 | 202/316 | Test |
| Málaga | 1024 × 1472 | 368 | -/- | -/- | -/- | 269/359 | Train |
| Mérida | 512 × 640 | 80 | 20/62 | 21/63 | 21/62 | 21/62 | Train |
| Murcia | 1792 × 1600 | 700 | 130/681 | 130/681 | 130/681 | 130/681 | Train |
| Ourense | 960 × 704 | 165 | 36/164 | 36/164 | 36/164 | 36/164 | Train |
| Oviedo | 960 × 896 | 210 | 153/209 | 153/209 | 155/209 | 155/209 | Train |
| Palma | 1024 × 1344 | 336 | 94/212 | 94/212 | 94/213 | 94/212 | Test |
| Pamplona | 1600 × 1536 | 600 | 382/455 | 382/459 | 382/459 | 384/459 | Test |
| Pontevedra | 384 × 512 | 48 | 16/46 | 18/46 | 18/46 | 18/46 | Train |
| Rivas-vacía | 1088 × 1088 | 289 | 191/265 | 191/265 | 191/265 | 191/265 | Train |
| Salamanca | 832 × 960 | 195 | 130/181 | 130/181 | 130/181 | 130/181 | Train |
| Santander | 1152 × 1216 | 342 | 174/333 | 174/333 | 174/333 | 174/333 | Train |
| Sevilla | 2176 × 2368 | 1258 | 491/1159 | 492/1159 | 491/1159 | 492/1159 | Train |
| Teruel | 640 × 768 | 120 | 67/55 | 67/55 | 67/58 | 67/41 | Test |
| Valencia | 2304 × 1728 | 972 | 341/718 | 341/720 | 341/718 | 341/720 | Test |
| Valladolid | 1408 × 1408 | 484 | 193/265 | 164/265 | 194/265 | 193/265 | Test |
| Vigo | 704 × 1024 | 176 | 61/159 | 61/159 | 62/159 | 62/159 | Train |
| Vitoria | 576 × 896 | 126 | 124/126 | 124/126 | 124/126 | 124/126 | Train |
| Zamora | 512 × 576 | 72 | 15/72 | 17/72 | 17/72 | 17/72 | Train |
| Zaragoza | 2304 × 2752 | 1548 | 1217/1537 | 1217/1537 | 1217/1537 | 1217/1537 | Train |
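For most zones, the "# Tiles" column equals the number of non-overlapping 64 × 64 pixel tiles that fit in the zone's dimensions (for example, 704 × 576 gives 11 × 9 = 99 tiles for A Coruña). The 64-pixel tile size and the reading of "Dimensions" as pixels on the 10 m grid are inferred from the table rather than stated in this section, so the following sketch treats them as assumptions; `tile_count` and `output_tile_pixels` are hypothetical helpers.

```python
def tile_count(width: int, height: int, tile: int = 64) -> int:
    """Number of non-overlapping tile x tile patches that fit in a width x height zone."""
    return (width // tile) * (height // tile)


def output_tile_pixels(tile: int = 64, in_res_m: float = 10.0, out_res_m: float = 2.5) -> int:
    """Side length, in output pixels, of the map predicted for one input tile."""
    # The ground extent (tile * in_res_m metres) is unchanged; only the pixel size shrinks.
    return round(tile * in_res_m / out_res_m)


if __name__ == "__main__":
    # A few rows copied from the table: zone -> (dimensions, reported # Tiles).
    zones = {
        "A Coruña": ((704, 576), 99),
        "Sevilla": ((2176, 2368), 1258),
        "Zaragoza": ((2304, 2752), 1548),
    }
    for name, ((w, h), reported) in zones.items():
        print(name, tile_count(w, h), reported)
    print(output_tile_pixels())  # 256: each 640 m x 640 m tile is predicted on a 256 x 256 grid
```

Under these assumptions, each input tile covers 640 m × 640 m, and the 2.5 m output for that tile is a 256 × 256 mask.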

| Model | Label | F-Score | Rlx. F-Score | IoU | Rlx. IoU |
|---|---|---|---|---|---|
| S1 | Building | 0.6204 | 0.6559 | 0.4704 | 0.5133 |
| | Road | 0.3819 | 0.3884 | 0.2376 | 0.2430 |
| | Average | 0.5011 | 0.5221 | 0.3540 | 0.3781 |
| S2 | Building | 0.6870 | 0.7248 | 0.5389 | 0.5883 |
| | Road | 0.5966 | 0.6288 | 0.4331 | 0.4693 |
| | Average | 0.6418 | 0.6768 | 0.4860 | 0.5288 |
| S1 + S2 | Building | 0.7003 | 0.7400 | 0.5549 | 0.6078 |
| | Road | 0.6044 | 0.6385 | 0.4415 | 0.4805 |
| | Average | 0.6523 | 0.6892 | 0.4982 | 0.5441 |

| Model | Label | F-Score | Rlx. F-Score | IoU | Rlx. IoU |
|---|---|---|---|---|---|
| x1 | Building | 0.7003 | 0.7400 | 0.5549 | 0.6078 |
| | Road | 0.6044 | 0.6385 | 0.4415 | 0.4805 |
| | Average | 0.6523 | 0.6892 | 0.4982 | 0.5441 |
| Deconv x4 | Building | 0.7172 | 0.7849 | 0.5684 | 0.6611 |
| | Road | 0.7132 | 0.7810 | 0.5551 | 0.6420 |
| | Average | 0.7152 | 0.7829 | 0.5617 | 0.6515 |
| EDSR (Galar et al.) | Building | 0.7277 | 0.7980 | 0.5753 | 0.6791 |
| | Road | 0.7258 | 0.7965 | 0.5648 | 0.6557 |
| | Average | 0.7267 | 0.7972 | 0.5700 | 0.6674 |
| Bicubic x4 | Building | 0.7432 | 0.8162 | 0.5974 | 0.6994 |
| | Road | 0.7481 | 0.8236 | 0.5986 | 0.7015 |
| | Average | 0.7456 | 0.8199 | 0.5980 | 0.7004 |
| Nearest x4 | Building | 0.7469 | 0.8214 | 0.6027 | 0.7074 |
| | Road | 0.7478 | 0.8242 | 0.5982 | 0.7024 |
| | Average | 0.7473 | 0.8228 | 0.6004 | 0.7049 |

| Model | Label | F-Score | Rlx. F-Score | IoU | Rlx. IoU |
|---|---|---|---|---|---|
| VM 1000ep | Building | 0.7216 | 0.7869 | 0.5743 | 0.6645 |
| | Road | 0.7395 | 0.8116 | 0.5878 | 0.6846 |
| | Average | 0.7305 | 0.7992 | 0.5810 | 0.6745 |
| No VM 1000ep | Building | 0.7469 | 0.8214 | 0.6027 | 0.7074 |
| | Road | 0.7478 | 0.8242 | 0.5982 | 0.7024 |
| | Average | 0.7473 | 0.8228 | 0.6004 | 0.7049 |
| No VM 900ep → FT 100ep SVM | Building | 0.7448 | 0.8195 | 0.5998 | 0.7044 |
| | Road | 0.7536 | 0.8292 | 0.6054 | 0.7093 |
| | Average | 0.7492 | 0.8243 | 0.6026 | 0.7068 |
| No VM 900ep → FT 100ep LVM | Building | 0.7491 | 0.8245 | 0.6054 | 0.7117 |
| | Road | 0.7540 | 0.8297 | 0.6061 | 0.7102 |
| | Average | 0.7515 | 0.8271 | 0.6057 | 0.7109 |
| No VM 900ep → FT 100ep SVM + LVM | Building | 0.7503 | 0.8263 | 0.6071 | 0.7147 |
| | Road | 0.7555 | 0.8312 | 0.6080 | 0.7125 |
| | Average | 0.7529 | 0.8287 | 0.6075 | 0.7136 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ayala, C.; Sesma, R.; Aranda, C.; Galar, M. A Deep Learning Approach to an Enhanced Building Footprint and Road Detection in High-Resolution Satellite Imagery. Remote Sens. 2021, 13, 3135. https://doi.org/10.3390/rs13163135
