Impact of the Dates of Input Image Pairs on Spatio-Temporal Fusion for Time Series with Different Temporal Variation Patterns

Abstract

1. Introduction

2. Data and Study Area

2.1. Satellite Data

2.1.1. Fine Spatial Resolution Data

2.1.2. Coarse Spatial Resolution Data

2.2. Study Area

3. Methodology

3.1. ESTARFM Algorithm
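In the original ESTARFM formulation (Zhu et al., 2010), the fine-resolution image at the prediction date is predicted separately from each of the two input image pairs. The two predictions are then merged with temporal weights that are inversely proportional to the change in the coarse-resolution image, summed over a moving window, between each input date and the prediction date. The sketch below illustrates only that weighting step for a single band. The function names, the example window values and the `eps` guard are assumptions added for illustration; this is not the implementation used in this study.

```python
from typing import Sequence, Tuple

# A w x w window of coarse-resolution values resampled to the fine grid.
Window = Sequence[Sequence[float]]


def window_sum(window: Window) -> float:
    """Sum of the coarse-resolution values inside one moving window."""
    return sum(sum(row) for row in window)


def temporal_weights(coarse_m: Window, coarse_n: Window, coarse_p: Window,
                     eps: float = 1e-12) -> Tuple[float, float]:
    """Temporal weights (T_m, T_n) for input pairs at dates t_m and t_n.

    Each weight is proportional to the inverse of the absolute change in the
    windowed coarse sum between its input date and the prediction date t_p,
    so the pair whose coarse image changed least gets the larger weight.
    `eps` is an added guard against division by zero, not part of ESTARFM.
    """
    inv_m = 1.0 / (abs(window_sum(coarse_m) - window_sum(coarse_p)) + eps)
    inv_n = 1.0 / (abs(window_sum(coarse_n) - window_sum(coarse_p)) + eps)
    total = inv_m + inv_n
    return inv_m / total, inv_n / total


def combine(fine_from_m: float, fine_from_n: float, t_m: float, t_n: float) -> float:
    """Weighted sum of the two single-pair fine-resolution predictions at t_p."""
    return t_m * fine_from_m + t_n * fine_from_n


if __name__ == "__main__":
    # Hypothetical 2 x 2 windows in scaled reflectance (x 10000).
    c_m = [[3000, 3200], [3100, 3300]]  # sum 12600, differs from t_p by 2000
    c_n = [[5000, 5200], [5100, 5300]]  # sum 20600, differs from t_p by 6000
    c_p = [[3500, 3700], [3600, 3800]]  # sum 14600
    t_m, t_n = temporal_weights(c_m, c_n, c_p)
    print(round(t_m, 3), round(t_n, 3))  # 0.75 0.25
```

In this formulation the weight depends on how much the coarse image changed between the two dates, not on the number of days separating them.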

3.2. Selection of Input Image Pairs

3.3. Accuracy Assessment
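Fusion accuracy is assessed by comparing the fused image with the observed fine-resolution image at the PD. As an illustrative sketch, assuming root-mean-square error (RMSE) and Pearson's correlation coefficient (r) as example metrics and flattened single-band images as inputs, the comparison can be written as follows; the function names are hypothetical.

```python
import math
from typing import Sequence


def _check(predicted: Sequence[float], observed: Sequence[float]) -> int:
    """Validate inputs and return the number of pixels."""
    if len(predicted) != len(observed) or len(predicted) < 2:
        raise ValueError("need two equal-length images with at least 2 pixels")
    return len(predicted)


def rmse(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Root-mean-square error between fused and observed fine-resolution pixels."""
    n = _check(predicted, observed)
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / n)


def pearson_r(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Pearson correlation coefficient between fused and observed pixels."""
    n = _check(predicted, observed)
    mp = sum(predicted) / n
    mo = sum(observed) / n
    cov = sum((p - mp) * (o - mo) for p, o in zip(predicted, observed))
    sp = math.sqrt(sum((p - mp) ** 2 for p in predicted))
    so = math.sqrt(sum((o - mo) ** 2 for o in observed))
    if sp == 0 or so == 0:
        raise ValueError("r is undefined for a constant image")
    return cov / (sp * so)
```

A lower RMSE and an r closer to 1 indicate a fused image closer to the observation.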

4. Results and Analysis

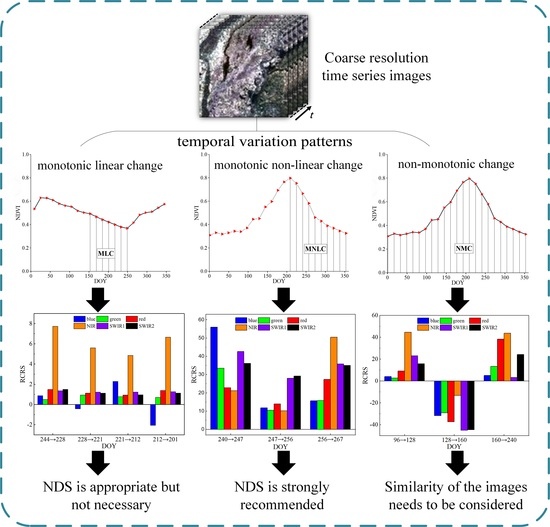

4.1. Impact of the CD on Fusion Accuracy for MLC Period

4.2. Impact of the CD on Fusion Accuracy for MNLC Period

4.3. Impact of the CD on Fusion Accuracy for NMC Period

5. Discussions

6. Conclusions

- (1) The impact of the input image date on the accuracy of spatio-temporal fusion depends on the temporal variation pattern of the land surface between the input image date and the prediction date.

- (2) For time periods with a monotonic linear change (MLC), a shorter time interval between the input image date (CD) and the prediction date (PD) improves the fusion accuracy, and the improvement in accuracy varies nearly linearly with the change in the interval between the CD and the PD. However, the differences between the fusion accuracies obtained with different input image dates are not significant, which implies that even a long time interval between the CD and the PD can yield high fusion accuracy for a period with the MLC.

- (3) For time periods with a monotonic nonlinear change (MNLC), a shorter time interval between the CD and the PD also improves the fusion accuracy, but the improvement varies nonlinearly with the change in the interval. The interval between the CD and the PD affects the fusion accuracy more strongly for the MNLC than for the MLC. Thus, to obtain accurate fusion results for the MNLC, selecting an input image whose date is close to the PD is strongly recommended.

- (4) For time periods with a non-monotonic change (NMC), in which a turning point lies between the input image date and the prediction date, the impact of the input image date on the fusion accuracy is complex: a shorter interval between the CD and the PD may lead to a lower fusion accuracy, whereas a longer interval may lead to a higher one. For the NMC, the input image date should therefore be chosen not by the time interval but by the similarity between the images at the CD and the PD.
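The selection rules in conclusions (2) to (4) can be sketched as a small decision procedure. This is a hypothetical illustration rather than a procedure used in the study: the series is assumed to be a per-date summary of the land surface (for example, mean NDVI) sampled between the CD and the PD, the split between linear and nonlinear change uses an arbitrary R² threshold of 0.95, and image similarity is measured, by assumption, as the RMSE between coarse-resolution images, since only coarse data are available at the PD.

```python
import math
from typing import Dict, Sequence


def classify_pattern(days: Sequence[float], values: Sequence[float],
                     r2_threshold: float = 0.95) -> str:
    """Label the change between the CD and the PD as 'MLC', 'MNLC' or 'NMC'.

    `values` is a hypothetical per-date summary (e.g. mean NDVI) ordered by
    `days`; `r2_threshold` is an arbitrary, illustrative cut-off.
    """
    if len(days) != len(values) or len(days) < 3:
        raise ValueError("need at least three dated observations")
    steps = [b - a for a, b in zip(values, values[1:])]
    if not (all(s >= 0 for s in steps) or all(s <= 0 for s in steps)):
        return "NMC"  # a turning point lies between the two dates
    n = len(days)
    mx, my = sum(days) / n, sum(values) / n
    sxx = sum((x - mx) ** 2 for x in days)
    syy = sum((y - my) ** 2 for y in values)
    if sxx == 0:
        raise ValueError("observation days must not all be equal")
    if syy == 0:
        return "MLC"  # a constant series is trivially linear
    sxy = sum((x - mx) * (y - my) for x, y in zip(days, values))
    r2 = sxy * sxy / (sxx * syy)  # R^2 of a least-squares straight line
    return "MLC" if r2 >= r2_threshold else "MNLC"


def coarse_rmse(a: Sequence[float], b: Sequence[float]) -> float:
    """Dissimilarity between two flattened coarse-resolution images."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def select_input_date(pd_doy: int, coarse_pd: Sequence[float],
                      candidates: Dict[int, Sequence[float]], pattern: str) -> int:
    """Pick a CD (DOY) from candidate coarse images, following conclusions (2) to (4)."""
    if pattern in ("MLC", "MNLC"):
        # Monotonic change: prefer the date closest to the PD (this matters
        # most for the MNLC; for the MLC the accuracy differences are small).
        return min(candidates, key=lambda doy: abs(doy - pd_doy))
    # NMC: prefer the coarse image most similar to the one at the PD,
    # regardless of how far its date is from the PD.
    return min(candidates, key=lambda doy: coarse_rmse(candidates[doy], coarse_pd))
```

For example, with hypothetical candidates `{96: [0.30, 0.32], 160: [0.70, 0.72], 267: [0.55, 0.57]}` and a PD image `[0.31, 0.33]` on DOY 272, the MLC/MNLC rule returns 267 (the nearest date), while the NMC rule returns 96, a date 176 days away whose coarse image is most similar to the PD.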

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| FD (DOY) | PD (DOY) | CD (DOY) | Time Interval between CD and PD (Days) |
|---|---|---|---|
| 148 | 196 | 244 (L) | 48 |
| 148 | 196 | 228 (L) | 32 |
| 148 | 196 | 221 (S) | 25 |
| 148 | 196 | 212 (L) | 16 |
| 148 | 196 | 201 (S) | 5 |

| CD (DOY) | PD (DOY) | FD (DOY) | Time Interval between CD and PD (Days) |
|---|---|---|---|
| 240 (L) | 272 | 336 | 32 |
| 247 (S) | 272 | 336 | 25 |
| 256 (L) | 272 | 336 | 16 |
| 267 (S) | 272 | 336 | 5 |

| CD (DOY) | PD (DOY) | FD (DOY) | Time Interval between CD and PD (Days) |
|---|---|---|---|
| 96 (L) | 272 | 336 | 176 |
| 128 (L) | 272 | 336 | 144 |
| 160 (L) | 272 | 336 | 112 |
| 240 (L) | 272 | 336 | 32 |
| 247 (S) | 272 | 336 | 25 |
| 256 (L) | 272 | 336 | 16 |
| 267 (S) | 272 | 336 | 5 |
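The time interval column in these tables is the absolute difference between the CD and the PD in days. A short check, transcribing the DOY values from the three tables, reproduces that column and confirms that in every configuration the two input image dates fall on opposite sides of the PD. The names `InputConfig`, `time_interval` and `pd_is_bracketed` are illustrative, and the sensor markers (L) and (S) are omitted.

```python
from typing import List, NamedTuple


class InputConfig(NamedTuple):
    fd: int  # DOY of the input pair that stays constant within a table (FD)
    pd: int  # DOY of the prediction date (PD)
    cd: int  # DOY of the input pair that varies within a table (CD)


def time_interval(cfg: InputConfig) -> int:
    """Days between the CD and the PD, regardless of which comes first."""
    return abs(cfg.cd - cfg.pd)


def pd_is_bracketed(cfg: InputConfig) -> bool:
    """True when the two input dates fall on opposite sides of the PD."""
    return min(cfg.fd, cfg.cd) < cfg.pd < max(cfg.fd, cfg.cd)


# DOY values transcribed from the three tables above.
FIRST: List[InputConfig] = [InputConfig(148, 196, cd) for cd in (244, 228, 221, 212, 201)]
SECOND: List[InputConfig] = [InputConfig(336, 272, cd) for cd in (240, 247, 256, 267)]
THIRD: List[InputConfig] = [InputConfig(336, 272, cd) for cd in (96, 128, 160)] + SECOND

if __name__ == "__main__":
    for name, table in (("first", FIRST), ("second", SECOND), ("third", THIRD)):
        intervals = [time_interval(c) for c in table]
        print(name, intervals, all(pd_is_bracketed(c) for c in table))
    # first [48, 32, 25, 16, 5] True
    # second [32, 25, 16, 5] True
    # third [176, 144, 112, 32, 25, 16, 5] True
```

In the first table the CD follows the PD, while in the second and third it precedes the PD; in all cases the FD lies on the other side.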

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, A.; Bo, Y.; Zhao, W.; Zhang, Y. Impact of the Dates of Input Image Pairs on Spatio-Temporal Fusion for Time Series with Different Temporal Variation Patterns. Remote Sens. 2022, 14, 2431. https://doi.org/10.3390/rs14102431


Chicago/Turabian Style: Shen, Aojie, Yanchen Bo, Wenzhi Zhao, and Yusha Zhang. 2022. "Impact of the Dates of Input Image Pairs on Spatio-Temporal Fusion for Time Series with Different Temporal Variation Patterns" Remote Sensing 14, no. 10: 2431. https://doi.org/10.3390/rs14102431