1. Introduction

Thanks to the rapid development of aerospace, communication, and information processing technologies, we have entered the era of remote sensing big data. How to fully mine the growing volume of remote sensing image information has become an urgent problem [1,2,3]. Landcover classification is a basic and important task in remote sensing big data processing [4,5,6], and it underpins ecological environment protection [7,8], urban planning [9], geological disaster monitoring [10,11], and other fields.

At present, landcover classification in remote sensing images mainly adopts machine learning methods, including shallow machine learning and deep learning. Shallow machine learning methods, mainly represented by Random Forest (RF) [12] and Support Vector Machine (SVM) [13], rely on manually extracted features of objects, such as color, texture, geometric shape, and spatial structure. Landcover classification is then achieved by learning classification rules from supervised information [14]. Deep learning methods build deep networks that automatically extract low-level image features of objects and combine them into high-level abstract features, which yields higher classification accuracy; they have become the mainstream methods in remote sensing image landcover classification research [15,16].

Remote sensing images exhibit both “inter-class similarity and intra-class variance” and large scale differences between objects of the same class. As a result, automatic classification of remote sensing images tends to confuse similar features and has difficulty identifying small-scale features [16]. To address the confusion of similar features, scholars [17,18,19,20] have used the Pyramid Pooling Module (PPM), the Attention Mechanism (AM), and other methods to model spatial location and channel relationships. These modules extract the contextual information of features and the mutual information between channels, improve the model’s ability to model and understand complex scenes, and reduce interference from confusing information. However, these methods are aimed at the feature extraction of the lowest level features, and do not make full use of the spatial position information contained in the top-level features. They are therefore better suited to recognizing large-scale objects, while their recognition of small-scale objects is not ideal.

To solve the problem that small-scale features are difficult to recognize, current methods [21,22,23,24] mostly combine feature extraction and feature fusion to recover the detailed information of images step by step; representative networks include U-Net [25], FPN [26], UperNet [27], Swin [28], and Twins [29]. In remote sensing image landcover classification research, these methods are often used to improve the recognition of multi-scale features. To improve feature extraction, Dalal AL-Alimi [30] proposed a method combining a pyramid extraction network with the SE attention mechanism, which reduces the loss of small objects by selectively retaining the useful information in the feature map through SE. However, the feature map is not fully mined, resulting in deviation of the detected anchor frame. Wenzhi Zhao [31] used graph convolution to extract the bottom features of the network, which captures long-range dependencies in the network and improves its ability to obtain context information. However, this approach ignores the spatial position information contained in the top features, resulting in inaccurate outlines of the recognized features. Jianda Cheng [32] used a capsule network instead of ResNet for feature extraction, which enhances the network’s hierarchical understanding of the whole and the parts of an object and is more conducive to modeling the object. However, the advantages of this global and local representation are more obvious for large-scale objects and have little impact on the recognition of small-scale objects. To improve feature fusion, Yong Liao [33] used the attention mechanism and residual connections to fuse multi-scale features, which improves the network’s ability to extract low-level feature information and high-level semantic information. However, when fusing features with the attention mechanism, the method mainly operates on the underlying features, ignoring the difference between the top-level and underlying features and increasing the risk that small-scale objects are ignored. Qinglie Yuan [34] used a residual branch network to assist the backbone network in feature transformation, which enhances the multi-modal data fusion ability of the network. However, realizing adjacent feature fusion through simple element-wise addition ignores the difference in information between feature maps, so low-level semantic information such as object position cannot be extracted accurately and small-scale targets are difficult to identify [22,35].

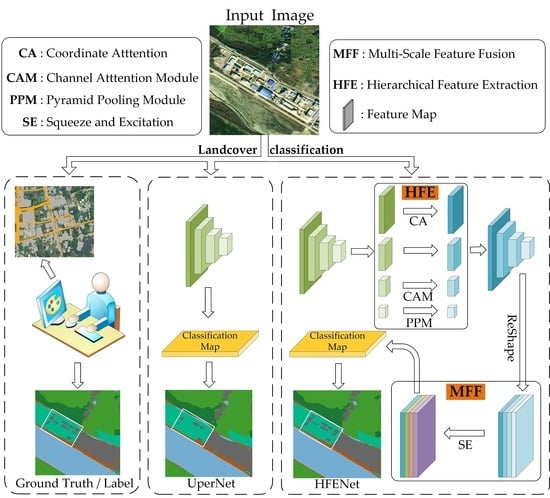

To solve the above-mentioned problems, this paper proposes a semantic segmentation network based on UperNet [27], named HFENet, that mines multi-level semantic information. It targets two problems in remote sensing image landcover classification, namely that similar features are easily confused and that small-scale features are difficult to identify, and thereby improves classification accuracy. The main contributions of this paper include:

- (1) A Hierarchical Feature Extraction (HFE) strategy is proposed. According to the difference in the information contained in the top-level and bottom-level network feature maps, the strategy adopts specific information-mining methods in different network layers to extract the spatial location information, channel information, and global information contained in the feature maps, so as to improve the information mining ability of the network.

- (2) A Multi-level Feature Fusion (MFF) method is proposed. To fuse multiple feature maps that differ in size and semantics, this method up-samples the input feature maps step by step and re-weights them by channel, so as to reduce the impact of differences in semantic information, improve the attention of the network to spatial location information, and enhance the feature expression ability of the network.

- (3) A Hierarchical Feature Extraction Network (HFENet) model is proposed, which includes the HFE and MFF modules. First, the HFE strategy is used to fully mine the information of the feature maps, and then the MFF method is used to enhance the expression of feature information, so as to improve the network’s recognition of easily confused and small-scale features and achieve accurate landcover classification.

- (4) The effectiveness of the two modules proposed in our framework is verified by ablation experiments; the effectiveness of the proposed HFENet is demonstrated by performing landcover classification/image segmentation on three remote sensing image datasets and comparing it with state-of-the-art models (PSPNet [17], DeepLabv3+ [36], DANet [18], etc.).

The rest of this paper is organized as follows. Section 2 introduces related work, reviewing the recent development of semantic segmentation for remote sensing image landcover classification with a focus on deep learning methods. Section 3 elaborates on the structure of the proposed HFENet and details the design of the HFE and MFF modules. Section 4 gives the experimental details and results on a self-labeled dataset (MZData) and two public datasets (LandCover.ai and the WHU Building dataset) [37,38]. Section 5 discusses the obtained results, and Section 6 concludes the paper.

4. Experiments and Results

In this section, we focus on the effectiveness of HFENet. First, we verify the roles of HFE and MFF on the self-labeled dataset MZData; then we verify the advantages of HFENet on MZData, LandCover.ai, and the WHU Building dataset. Below, we first introduce the datasets and the parameters used in the experiments, then explain the experimental design and analyze the experimental results in detail.

4.1. Experiments Settings

4.1.1. Datasets

MZData

This dataset is a land-use/landcover classification dataset produced by combining manual interpretation with field survey data, using fused Gaofen-2 (GF-2) satellite imagery. The spatial resolution of the imagery is 1 m, with three bands (RGB). The original satellite images cover Mianzhu City, Sichuan Province, China (Figure 4), located in the northwest of the Sichuan Basin between 31°09′N–31°42′N and 103°54′E–104°20′E, with an area of 1245.3 km². Mianzhu contains both mountainous and plain terrain. The mountainous areas are mainly woodland, grassland, and bare land, while the plain areas contain a rich variety of ground features, such as buildings, cropland, and roads. In the plain area, artificial features are abundant and the scale differences within the same feature class are large, especially for roads and buildings, which places high demands on the ability to recognize small target objects. The land-use/landcover classification of this dataset contains eight categories: cultivated land, garden land, forest land, grassland, buildings, roads, water bodies, and bare land. In addition, to handle the non-study area introduced in the cropping process, a background category is added with all pixel values set to 255, which does not have a significant impact on the classification results.

According to the input requirements of the experimental network, the remote sensing images and interpretation results are sliced into sample images of 512 × 512 pixels, and sample pairs that are entirely background or contain clouds are manually removed, yielding 10,000 sample images. The sample set is then divided into training, validation, and test sets in a 6:2:2 ratio to establish a sample library of land-use/landcover classification data (as shown in Figure 5).
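The slicing and splitting steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' preprocessing code: the function names `tile_pairs` and `split_indices`, the non-overlapping tiling, the random shuffle, and the seed are all hypothetical, and the manual removal of cloudy samples is not reproduced.

```python
import numpy as np

BACKGROUND = 255  # background label value stated in the text
TILE = 512        # tile edge length stated in the text


def tile_pairs(image, label, tile=TILE):
    """Cut an (H, W, 3) image and its (H, W) label map into aligned tiles.

    Tiles whose label is entirely background are dropped. Non-overlapping
    tiling is an assumption; cloud removal was manual and is not modeled.
    """
    pairs = []
    height, width = label.shape
    for top in range(0, height - tile + 1, tile):
        for left in range(0, width - tile + 1, tile):
            lab = label[top:top + tile, left:left + tile]
            if np.all(lab == BACKGROUND):
                continue  # skip sample pairs that are all background
            pairs.append((image[top:top + tile, left:left + tile], lab))
    return pairs


def split_indices(n_samples, ratios=(6, 2, 2), seed=0):
    """Shuffle sample indices and split them by the given ratio."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    total = sum(ratios)
    n_train = n_samples * ratios[0] // total
    n_val = n_samples * ratios[1] // total
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]
```

With 10,000 samples and the 6:2:2 ratio, `split_indices(10000)` yields 6000 training, 2000 validation, and 2000 test indices.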

LandCover.ai

The LandCover.ai dataset [37] is a dataset for landcover classification of aerial imagery. It covers an area of 216 km² and contains a total of 41 aerial images taken in Poland, Central Europe. All images have only three RGB bands. Of these, 33 are orthophotos with a resolution of 25 cm per pixel and eight are orthophotos with a resolution of 50 cm per pixel. The dataset provides a detailed, manually interpreted landcover classification for the main areas of all images, with three feature categories (buildings, woodlands, and water) and one “other” category. Due to the high spatial resolution, the image characteristics of different objects differ considerably and are easy to distinguish.

Following the dataset’s instructions, the images are divided into 10,674 tiles of 512 × 512 pixels, which are split into 7470 training, 1602 validation, and 1602 test images, as shown in Figure 6.

WHU Building Dataset

This dataset is an aerial image building dataset produced by Wuhan University [38]. The images contain three RGB channels, and the original ground resolution is 7.5 cm. By manually interpreting the building vector data of Christchurch, New Zealand, a dataset covering an area of about 450 km² and about 220,000 independent buildings was obtained. Due to the wide coverage and the large number of buildings, the size and type of buildings vary greatly.

The dataset contains 8188 images of 512 × 512 pixels and is divided into training, validation, and test sets. The training set contains 130,500 independent buildings in 4736 images, the validation set contains 14,500 independent buildings in 1036 images, and the test set contains 42,000 independent buildings in 2416 images, as shown in Figure 7.

4.1.2. Metrics

To quantitatively evaluate segmentation accuracy, this paper uses six metrics: Pixel Accuracy (PA), mean pixel accuracy (mP), mean Intersection over Union (mIoU), Frequency Weighted Intersection over Union (FWIoU), mean recall (mRecall), and mean F1 score (mF1). Among them, mIoU and FWIoU are region-level evaluation metrics, while PA, mP, mRecall, and mF1 are pixel-level evaluation metrics. They are calculated according to Formulas (1)–(6).
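For readers who want to reproduce the six metrics, the sketch below computes them from a confusion matrix using their commonly used definitions. It is an illustration under assumptions rather than the paper's Formulas (1)–(6): in particular, mP is assumed here to be the mean per-class precision (because mRecall is listed separately), classes that never occur are skipped when averaging, and the function names are hypothetical. Pixels carrying the background value 255 would need to be masked out before calling `confusion_matrix`.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are ground-truth classes, columns are predicted classes.

    Labels must lie in [0, num_classes); mask out ignored pixels first.
    """
    idx = num_classes * y_true.ravel().astype(np.int64) + y_pred.ravel().astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    """Compute PA, mP, mRecall, mF1, mIoU and FWIoU from a confusion matrix."""
    cm = cm.astype(np.float64)
    tp = np.diag(cm)
    gt = cm.sum(axis=1)    # pixels per ground-truth class
    pred = cm.sum(axis=0)  # pixels per predicted class
    union = gt + pred - tp
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = tp / pred  # assumed definition behind mP
        recall = tp / gt
        iou = tp / union
        f1 = 2 * precision * recall / (precision + recall)
    freq = gt / cm.sum()       # class frequency used to weight FWIoU
    return {
        "PA": tp.sum() / cm.sum(),
        "mP": np.nanmean(precision),
        "mRecall": np.nanmean(recall),
        "mF1": np.nanmean(f1),
        "mIoU": np.nanmean(iou),
        "FWIoU": np.nansum(freq * iou),
    }
```

Accumulating one confusion matrix over the whole test set and computing the metrics once at the end avoids averaging per-image scores, which would weight small and large images equally.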

4.1.3. Training Details

All experimental code is based on the PyTorch deep learning framework. All training samples are normalized with the mean and standard deviation of the training set, and online augmentation with random rotation ([−10°, 10°]) and Gaussian noise ( ) is used to enlarge the training set. In the three experiments, all networks use validation-based early stopping that monitors the loss value, the SGD optimizer with a momentum of 0.9 and a weight decay of 0.0001, and the cross-entropy loss function [28,29]. The learning rate is initialized to 0.001 and scheduled with the poly policy. The backbone and number of epochs for the three experimental datasets are listed in Table 1. For all backbone networks, models pretrained on the ImageNet dataset are used as the initial weights for training.
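The poly learning-rate schedule and the validation-based early stopping mentioned above can be sketched in plain Python as follows. The text does not give the poly exponent or the early-stopping patience, so `power=0.9`, `patience=10`, and `min_delta=0.0` are assumptions chosen only for illustration, and the names `poly_lr` and `EarlyStopping` are hypothetical.

```python
def poly_lr(base_lr, step, max_steps, power=0.9):
    """Polynomial ("poly") decay: base_lr * (1 - step / max_steps) ** power.

    The exponent 0.9 is an assumed value; the text only says "poly".
    """
    step = min(step, max_steps)
    return base_lr * (1.0 - step / max_steps) ** power


class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` checks."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience    # assumed value, not given in the text
        self.min_delta = min_delta  # minimum decrease that counts as progress
        self.best = float("inf")
        self.bad_checks = 0

    def step(self, val_loss):
        """Record one validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience
```

In PyTorch, the stated optimizer settings correspond to `torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9, weight_decay=0.0001)`, with the learning rate of each parameter group overwritten by `poly_lr` at every step or epoch.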

4.2. Ablation Studies

To verify the roles of the HFE and MFF modules, we designed a set of ablation experiments on MZData. In the experiments, UperNet was used as the baseline network. UperNet + HFE was obtained by replacing the feature extraction part of UperNet with an HFE module. UperNet + MFF was obtained by replacing the feature fusion part of UperNet with an MFF module. UperNet + HFE + MFF, namely HFENet, was obtained by replacing both the feature extraction part and the feature fusion part of UperNet with an HFE module and an MFF module. The backbone used for each model was ResNet101, the number of training epochs was 500, and the initial learning rate was 0.001.

Based on MZData, the evaluation metrics mIoU, FWIoU, PA, mP, mRecall, and mF1 were obtained for UperNet and for the variants produced by replacing its modules (Table 2).

As can be seen from Table 2, compared with UperNet, introducing either HFE for feature extraction or MFF for feature fusion improves all evaluation metrics to some extent. In the experiments, the improvement from HFE alone is larger than that from MFF alone, and the effect of using both improvements together (HFENet) is more pronounced. To examine the respective roles of the HFE and MFF modules in the network, the per-category IoU on the test set was computed (Table 3), and the results of the four experiments were visualized (Figure 8).

As can be seen from Table 3, introducing the HFE or MFF module alone significantly improves the IoU values of easily confused features such as cropland, garden plot, woodland, and grassland. Because MFF assigns weights to the fused multi-scale features and the HFE module mines the relationships between channels in high-level semantic information, each can improve the expression of specific semantic information and reduce the impact of interfering information on classification. However, for road and building, which have large scale differences, the IoU values do not improve and even decrease slightly. With MFF alone, this is due to the lack of extraction of precise image location information; with HFE alone, the network favors the expression of high-level semantic information in classification, resulting in incomplete representation of low-level semantic information such as spatial location. When both improvements are introduced together (HFENet), the IoU values improve significantly relative to UperNet for both confusable features and small-scale features. For small-scale features in particular (such as road and building), the IoU value increases by about 10%. This results from the complementary characteristics of the HFE and MFF modules. First, the HFE module effectively obtains the spatial location information of the images by adopting specific extraction methods for the characteristics of different feature maps. Then, MFF assigns weights to the different characteristics of the fused feature maps. By combining the two modules, the network can focus not only on high-level semantic information but also on low-level semantic information such as spatial location. These comparison experiments show that the HFE and MFF modules are effective in extracting semantic information at different levels and in fusing multi-level and multi-scale features.

It can be seen from Figure 8a–c that, during semantic segmentation, UperNet tends to ignore small-scale features, producing discontinuous or even missing detections (shown in the boxes in Figure 8). Introducing HFE (UperNet + HFE) or MFF (UperNet + MFF) alone not only fails to improve the network’s use of low-level semantic information such as spatial location, but makes the network more likely to ignore it; introducing both modules together (HFENet) significantly improves the recognition results. Long, narrow roads are not recognized at all by UperNet, whereas HFENet recognizes them well, although its results are still discontinuous in places. For small buildings, HFENet produces finer contours that are closer to the ideal.

From Figure 8d, it can be seen that UperNet cannot accurately handle phenomena such as interlacing between features and different objects sharing the same spectrum, which affects classification accuracy. Both UperNet + HFE and UperNet + MFF improve the network’s ability to mine high-level semantic information and enhance the recognition of confusing features such as different objects with the same spectrum. HFENet not only improves the recognition ability of the network, but also delineates the boundary contours of the features more accurately.

4.3. Comparing with the State-of-the-Art

To demonstrate the advantages of the proposed method, we conduct a set of comparative experiments on the LandCover.ai, MZData, and WHU Building datasets between HFENet and seven other state-of-the-art landcover classification methods, i.e., U-Net [25], DeepLabv3+ [36], PSPNet [17], FCN [39], UperNet [27], DANet [18], and SegNet [61], and analyze the parameters and FLOPs of each network together with the visual and quantitative results. U-Net, DeepLabv3+, and SegNet represent encoder-decoder networks. FCN represents fully convolutional networks. PSPNet and UperNet represent networks based on pyramid pooling. DANet represents networks based on attention mechanisms.

4.3.1. Experimental Results on MZData

The networks were trained and tested on MZData, and the results of the six quantitative evaluation metrics are shown in Table 4.

As can be seen from Table 4, HFENet outperforms the other methods on all six evaluation metrics. Compared with FCN, mIoU increases by 10.60 percentage points; compared with UperNet, mIoU increases by 7.41 percentage points. To verify whether HFENet is superior to other methods in identifying small-scale features, we further computed the per-class IoU values of the different networks, as shown in Table 5.

From the results in Table 5, it can be seen that, for relatively small-scale ground object categories such as building and road, the IoU value of HFENet is generally about 10 percentage points higher than that of the other six networks. For other easily confused categories, such as cultivated land, grassland, and forest land, the IoU value of HFENet is also significantly improved. To illustrate the superiority of HFENet more intuitively, we visually compare the classification results of the different networks, as shown in Figure 9.

It can be seen from Figure 9a–c that HFENet identifies narrow roads well, whereas the other networks cannot identify them. In Figure 9d, HFENet identifies small buildings very well, whereas UperNet, DANet, and other networks identify them incompletely, and FCN does not identify the building at all. In Figure 9e, the interlaced grassland and woodland have similar spectra but quite different texture information, and the classification result of HFENet is clearly better than that of the other networks. This shows that HFENet captures the low-level semantic information contained in the underlying network with the HFE module and then adjusts the weighting between high-level and low-level semantic information through MFF. The network can therefore separate the two categories using low-level semantic information and classify them correctly by mining high-level semantic information, which makes the classified objects more complete.

4.3.2. Experimental Results on WHU Building Dataset

To further confirm the advantages of HFENet in multi-scale feature recognition, we train and test our model and the other state-of-the-art models on the WHU Building dataset [38]. The dataset is dominated by buildings, which are representative multi-scale features. From the experimental results, we calculate the six evaluation metrics and the IoU values of the background and building classes for each model, as shown in Table 6.

It can be seen from Table 6 that HFENet is clearly superior to the other models in classifying buildings with large scale differences, both in the six overall metrics and in the IoU of each category. The mIoU of HFENet reaches 92.12%, about 2 percentage points higher than that of DANet, PSPNet, UperNet, and DeepLabv3+, about 6 percentage points higher than that of U-Net and SegNet, and about 12 percentage points higher than that of FCN. For the building category, the IoU values of all models except HFENet are below 85%, the highest being only 84.02%; the IoU of HFENet reaches 86.09%, which is 19.45 percentage points higher than that of FCN and 2.07 percentage points higher than that of PSPNet or DANet.
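As a quick arithmetic check on the building IoU figures quoted above, and assuming the quoted gaps are absolute differences in IoU, the implied baseline values and the corresponding relative gains work out as follows. The relative gains are derived here for illustration and are not reported in the paper.

```latex
\begin{aligned}
\mathrm{IoU}_{\text{best baseline}} &= 86.09 - 2.07 = 84.02, & \frac{2.07}{84.02} &\approx 2.5\%\ \text{(relative)},\\
\mathrm{IoU}_{\text{FCN}} &= 86.09 - 19.45 = 66.64, & \frac{19.45}{66.64} &\approx 29.2\%\ \text{(relative)}.
\end{aligned}
```

The first line reproduces the 84.02% stated for the best competing model, which supports reading the gaps as absolute differences rather than relative percentages.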

To illustrate the superiority of HFENet over the other models more intuitively, we visually compare the classification results of the different models, as shown in Figure 10.

In Figure 10a, all models except HFENet and SegNet show some degree of misclassification, with parts of the background classified as building; compared with SegNet, the outlines produced by HFENet are clearer. In Figure 10b,c, for large-scale buildings, the classification results of HFENet and PSPNet are more complete, while those of the other models have missing parts and are relatively fragmented. In Figure 10d, for small-scale buildings, it can be clearly seen that no model except HFENet classifies the buildings correctly. In Figure 10e, there is a colorful building that differs considerably from the other buildings, which increases the difficulty of classification. As a result, only HFENet classifies this building correctly, while the other models fail to do so. From Figure 10 and Table 6, it can be seen that HFENet not only recognizes targets correctly in complex situations, but also improves multi-scale feature recognition.

4.3.3. Experimental Results on LandCover.ai

To verify the generalization of the proposed method, we train and test on the aerial image dataset LandCover.ai and calculate the six evaluation metrics for the results of the different networks, as shown in Table 7.

From Table 7, it is clear that HFENet outperforms the other networks on all six metrics. The largest mIoU gain (over FCN) is 4.31 percentage points, and the smallest (over UperNet) is 0.93 percentage points. To further illustrate the advantages of HFENet in small-scale object recognition, we compute the per-class IoU values of the different networks, as shown in Table 8.

As can be seen from Table 8, for the building class, the IoU values of all networks are below 80%, but HFENet is 12.28 percentage points higher than FCN and 1.22 percentage points higher than UperNet. For all classes other than building, the IoU values are above 90% with one exception (DeepLabv3+ for the woodland class), and the differences between networks are small, but HFENet is still higher than all other networks. In general, the IoU value of HFENet in each class is higher than that of the other networks, and its mIoU value also has a clear advantage. The reason is that the design of HFENet pays more attention to the underlying information, which improves the recognition accuracy of small-scale objects.

To illustrate the superiority of HFENet over the other methods more intuitively, the classification results of the different networks are visualized and compared in Figure 11.

In Figure 11a, for objects of the “other” class, HFENet and PSPNet achieve better extraction than the other networks; U-Net, DeepLabv3+, and SegNet show both incomplete recognition and misclassification, and DANet shows obvious omissions. In Figure 11b, for the more prominent building features, HFENet identifies and segments them completely; the other networks can identify the buildings, but most of their segmentation results are incomplete, with missing, empty, or even fragmented areas. In Figure 11c, the building and the container (“other” class) differ little in color and shape and are therefore easily confused. HFENet distinguishes them well, whereas all other networks identify the container as a building. As can be seen from Figure 11d,e, for small-scale objects of the “other” and building classes, the other networks misidentify them or fail to recognize them at all, while HFENet identifies the small-scale targets correctly, although with minor problems of incomplete or discontinuous identification.

4.3.4. Comparison of Time and Space Complexity of the Models

To evaluate the usability of the model more comprehensively, we take an input image of 3 × 512 × 512 as an example and calculate, for HFENet and the other state-of-the-art models, the number of parameters as the measure of space complexity and the number of floating-point operations (FLOPs) as the measure of time complexity. The results are listed in Table 9.

It can be seen from Table 9 that, since HFENet is an improvement of UperNet, there is little difference between the two models in terms of parameter count and FLOPs. In terms of parameter count alone, the FCN model with VGG16 as the backbone has the most parameters among all models; among the networks with ResNet50 and ResNet101 as backbones, HFENet has the most parameters, but only 0.02 M more than UperNet. In terms of FLOPs, with either ResNet50 or ResNet101 as the backbone, the time complexity of DANet is significantly higher than that of the other models, followed by PSPNet. The time complexity of HFENet is very close to that of UperNet and similar to that of FCN and U-Net; it is significantly lower than that of DANet and PSPNet, but significantly higher than that of SegNet and DeepLabv3+.
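To make the two complexity measures concrete, the sketch below counts the parameters and multiply-accumulate operations (MACs) of a single 2D convolution layer. It is an illustration only, not the tool used to produce Table 9: the helper names are hypothetical, and whether FLOPs are reported as MACs or as 2 × MACs varies between tools and is not stated in the text.

```python
def conv_out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_params(c_in, c_out, kernel, bias=True):
    """Number of learnable parameters of a square-kernel 2D convolution."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def conv2d_macs(c_in, c_out, kernel, h_out, w_out):
    """Multiply-accumulate operations for one forward pass of the layer."""
    return kernel * kernel * c_in * c_out * h_out * w_out


# Example: the 7 x 7, stride-2 stem convolution of a ResNet (3 -> 64 channels,
# padding 3, no bias) applied to the 3 x 512 x 512 input used in the text.
out = conv_out_size(512, kernel=7, stride=2, padding=3)
stem_params = conv2d_params(3, 64, 7, bias=False)
stem_macs = conv2d_macs(3, 64, 7, out, out)
```

Summing these quantities over all layers gives the model totals; the parameter count is independent of the input size, whereas the operation count scales with the spatial size of each feature map.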

In view of the above, in terms of both quantitative evaluation results and visual effects, the HFENet method proposed in this paper achieves good results on all three datasets. When objects of the same class differ greatly in scale, HFENet can accurately identify small-scale objects by obtaining low-level semantic information such as spatial location, thereby improving the classification accuracy of small-scale objects. When ground objects are interlaced and different objects with the same spectrum are easily confused, HFENet can use low-level semantic information such as texture to distinguish different ground objects and then classify the categories correctly by mining high-level semantic information. In terms of algorithm complexity, HFENet has no obvious advantage over the other models in computation and storage efficiency; however, compared with UperNet, it greatly improves the classification of multi-scale objects without a significant change in time and space complexity, which reflects the significance and value of the improvements made in this paper.

5. Discussion

The experimental studies show that the deep learning remote sensing image segmentation framework proposed in this paper, HFENet, outperforms other state-of-the-art networks on three different datasets. Some phenomena observed in these experiments are worth discussing.

To solve the problem that UperNet makes insufficient use of low-level semantic information and has difficulty identifying small-scale features, this paper redesigns the network by applying a hierarchical feature extraction strategy (HFE module) to the backbone network of UperNet. First, the location attention mechanism is used to focus on the underlying information and enhance the feature extraction of detailed regions and small target objects. Then, at the higher layers of the network, the interrelationships between channels are mined through the channel attention mechanism to improve the expression of specific high-level semantic information. Finally, the multi-scale information of features is obtained through the pyramid pooling module at the highest level of the network to improve the network’s ability to use global information. However, the experiments show that the underlying semantic information is not well represented when only the hierarchical extraction strategy is used for feature extraction. This is mainly because, when fusing multi-level features, the network assigns more weight to high-level semantic information and therefore ignores the detailed regions and small target object information contained in the underlying layers. To address this, we strengthen the attention to the underlying network features with a feature fusion method based on the channel attention mechanism (MFF module), reducing the risk that the underlying information is ignored. The experiments show that HFENet, which uses both the HFE and MFF modules, achieves better performance for remote sensing image semantic segmentation.
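The idea of re-weighting fused features by channel can be illustrated with a small NumPy sketch. This is not the authors' MFF module: the squeeze-and-excitation style gate, the nearest-neighbour upsampling, the concatenation of the two maps, and all names (`upsample2x`, `channel_gate`, `fuse`, `w1`, `w2`) are assumptions chosen to show the general mechanism under simple conditions.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def channel_gate(x, w1, w2):
    """SE-style gate: global average pool -> FC -> ReLU -> FC -> sigmoid."""
    squeezed = x.mean(axis=(1, 2))               # (C,) one value per channel
    hidden = np.maximum(w1 @ squeezed, 0.0)      # (C // r,) bottleneck
    return 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # (C,) weights in (0, 1)


def fuse(low, high, w1, w2):
    """Upsample the coarser map, concatenate, and re-weight per channel.

    `low` is the finer-resolution map (C, H, W); `high` is the coarser,
    more semantic map (C, H / 2, W / 2).
    """
    stacked = np.concatenate([low, upsample2x(high)], axis=0)  # (2C, H, W)
    weights = channel_gate(stacked, w1, w2)
    return stacked * weights[:, None, None]
```

Because the gate produces one weight per channel, channels that come from the finer map can be kept strong even when the coarser, more semantic channels dominate in magnitude, which is the kind of rebalancing the paragraph above describes.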

Comparing the experimental results on the three datasets shows that, relative to the other methods, the improvements of HFENet on MZData and the WHU Building dataset are significant, while the improvement on LandCover.ai is relatively small. On MZData, the most obvious improvement in classification is on roads and buildings, and the visualization results show that it is concentrated on small-scale features (such as narrow roads and fragmented buildings). This is because HFENet pays more attention to extracting location information from the underlying network and attends strongly to small-scale objects. On the WHU Building dataset, HFENet classifies both large-scale and small-scale buildings completely. Even in some complex cases, it can classify buildings using low-level semantic information such as outline position. In LandCover.ai, however, only four categories of features are segmented, and most of them are larger-scale features, so the dataset cannot fully reflect the advantages of HFENet in small-scale target recognition. Therefore, in remote sensing image semantic segmentation, the proposed HFENet maintains the advantages of deep learning networks in recognizing ordinary-scale objects and shows better results in refined semantic segmentation tasks.

6. Conclusions

In this paper, we propose a deep learning framework, HFENet, for semantic segmentation in remote sensing image landcover classification. This framework is an improvement of UperNet that mainly addresses two problems: similar features in remote sensing images are easily confused, and small-scale features are difficult to identify. HFENet is based on a hierarchical feature extraction strategy and mainly includes two modules, HFE and MFF. The effects of the HFE and MFF modules are verified by ablation studies on the self-labeled dataset MZData. Comparisons with state-of-the-art image semantic segmentation models on MZData, LandCover.ai, and the WHU Building dataset show that HFENet has clear advantages in distinguishing interlaced features with similar image characteristics and in recognizing small-scale features.

Although the HFENet proposed in this paper provides a new choice for semantic segmentation of remote sensing images, the model has no advantage in terms of time and space complexity. In addition, hyperparameter selection for deep learning methods remains a major challenge. We spent a lot of time in the experiments selecting hyperparameters to ensure the performance of the model as far as possible. How to tune the parameters automatically to achieve the best model performance is still worth studying.