Cooperative Electromagnetic Data Annotation via Low-Rank Matrix Completion

Abstract

1. Introduction

2. Problem Formulation

3. The Rank-Minimization-Based Convex Approximation Algorithm

3.1. Updating X

3.2. Updating E

| Algorithm 1 The rank-minimization-based algorithm |

|

Initialization: D, , , , Repeat ; ; ; ; Until some stopping criteria satisfied; Return . |
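The symbols of Algorithm 1 did not survive in the listing above, so the following is only a minimal sketch of how an iteration of this kind is commonly organized, not the authors' exact procedure. It assumes the standard augmented-Lagrangian treatment of a low-rank matrix X plus a sparse error E fitted to the observed entries of D, with X updated by singular value thresholding and E by soft thresholding. The names `rank_min_completion`, `svt`, `soft`, and the parameters `lam`, `rho`, `tol`, and `max_iter` are illustrative assumptions.

```python
import numpy as np


def svt(M, tau):
    """Singular value thresholding: proximal operator of tau * nuclear norm."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def soft(M, tau):
    """Entrywise soft thresholding: proximal operator of tau * l1 norm."""
    return np.sign(M) * np.maximum(np.abs(M) - tau, 0.0)


def rank_min_completion(D, mask, lam=None, rho=1.2, tol=1e-7, max_iter=500):
    """Hypothetical ALM-style sketch (assumed model, not taken from the paper).

    D    : data matrix; values at unobserved positions are ignored
    mask : boolean matrix, True where D is observed
    Returns the low-rank estimate X and the error term E.
    """
    D = np.where(mask, D, 0.0)
    m, n = D.shape
    lam = 1.0 / np.sqrt(max(m, n)) if lam is None else lam
    norm_D = np.linalg.norm(D)            # Frobenius norm, used in the stopping test
    mu = 1.25 / np.linalg.norm(D, 2)      # initial penalty from the spectral norm
    mu_max = mu * 1e7
    E = np.zeros_like(D)
    Y = np.zeros_like(D)                  # Lagrange multiplier
    for _ in range(max_iter):
        # Update X: shrink the singular values of the current residual.
        X = svt(D - E + Y / mu, 1.0 / mu)
        # Update E: sparse on observed entries, unpenalized on missing ones.
        R = D - X + Y / mu
        E = np.where(mask, soft(R, lam / mu), R)
        # Update the multiplier and the penalty parameter.
        resid = D - X - E
        Y = Y + mu * resid
        mu = min(rho * mu, mu_max)
        if np.linalg.norm(resid) <= tol * norm_D:
            break
    return X, E
```

Letting E absorb the unobserved entries without penalty is one common way to reduce completion to a decomposition of the full matrix; other formulations constrain only the observed entries directly.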

4. The Maximum-Rank-Decomposition-Based Non-Convex Algorithm

4.1. Updating X

4.2. Updating U

4.3. Updating V

| Algorithm 2 The max-rank-decomposition-based algorithm |

|

Initialization: D, , , , k=0

Repeat: ; ; ; ; Until some stopping criteria satisfied; Return:. |
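Because the update formulas of Algorithm 2 are missing from the listing above, the following sketch only illustrates the general pattern suggested by the subsection titles (updating X, then U, then V). It assumes a fixed-rank factorization X ≈ UV fitted by alternating least squares, in the spirit of the low-rank factorization model of Wen, Yin, and Zhang cited in the references. The function name `factorized_completion` and its parameters are hypothetical, and the authors' actual updates may differ.

```python
import numpy as np


def factorized_completion(D, mask, rank, n_iter=500, seed=0):
    """Hypothetical alternating least-squares sketch (assumed, not the paper's method).

    D    : data matrix; values at unobserved positions are ignored
    mask : boolean matrix, True where D is observed
    rank : assumed rank of the factorization X ~ U @ V
    """
    rng = np.random.default_rng(seed)
    m, n = D.shape
    U = rng.standard_normal((m, rank))
    V = rng.standard_normal((rank, n))
    for _ in range(n_iter):
        # Update X: keep observed entries, fill the rest from the current factors.
        X = np.where(mask, D, U @ V)
        # Update U: least-squares fit of U @ V to X with V fixed.
        U = X @ np.linalg.pinv(V)
        # Update V: least-squares fit of U @ V to X with U fixed.
        V = np.linalg.pinv(U) @ X
    X = np.where(mask, D, U @ V)
    return X, U, V
```

A fixed iteration count stands in for the unspecified stopping criterion; in practice one would typically stop when the change in X between iterations falls below a tolerance.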

5. Numerical Experiments and Discussion

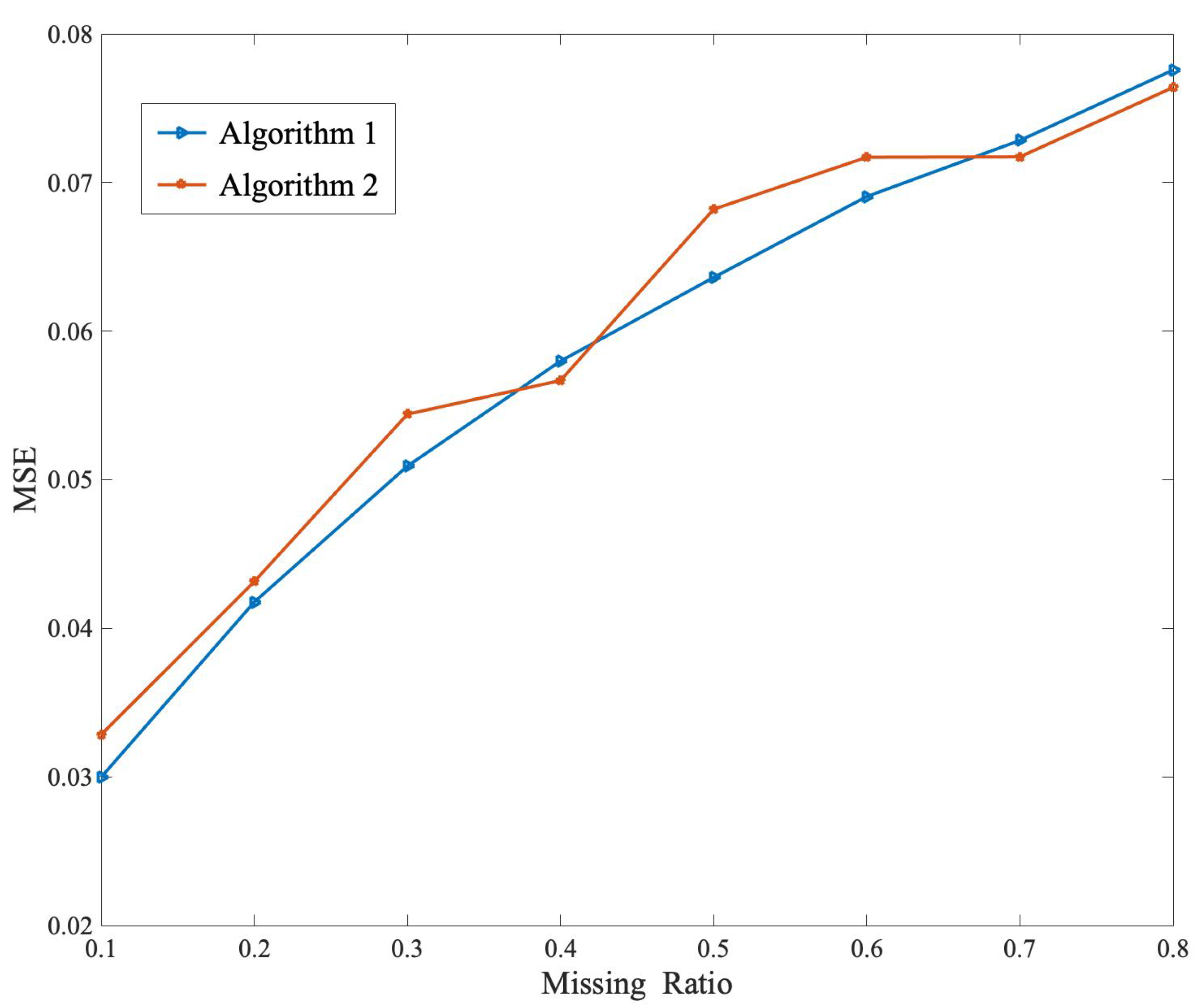

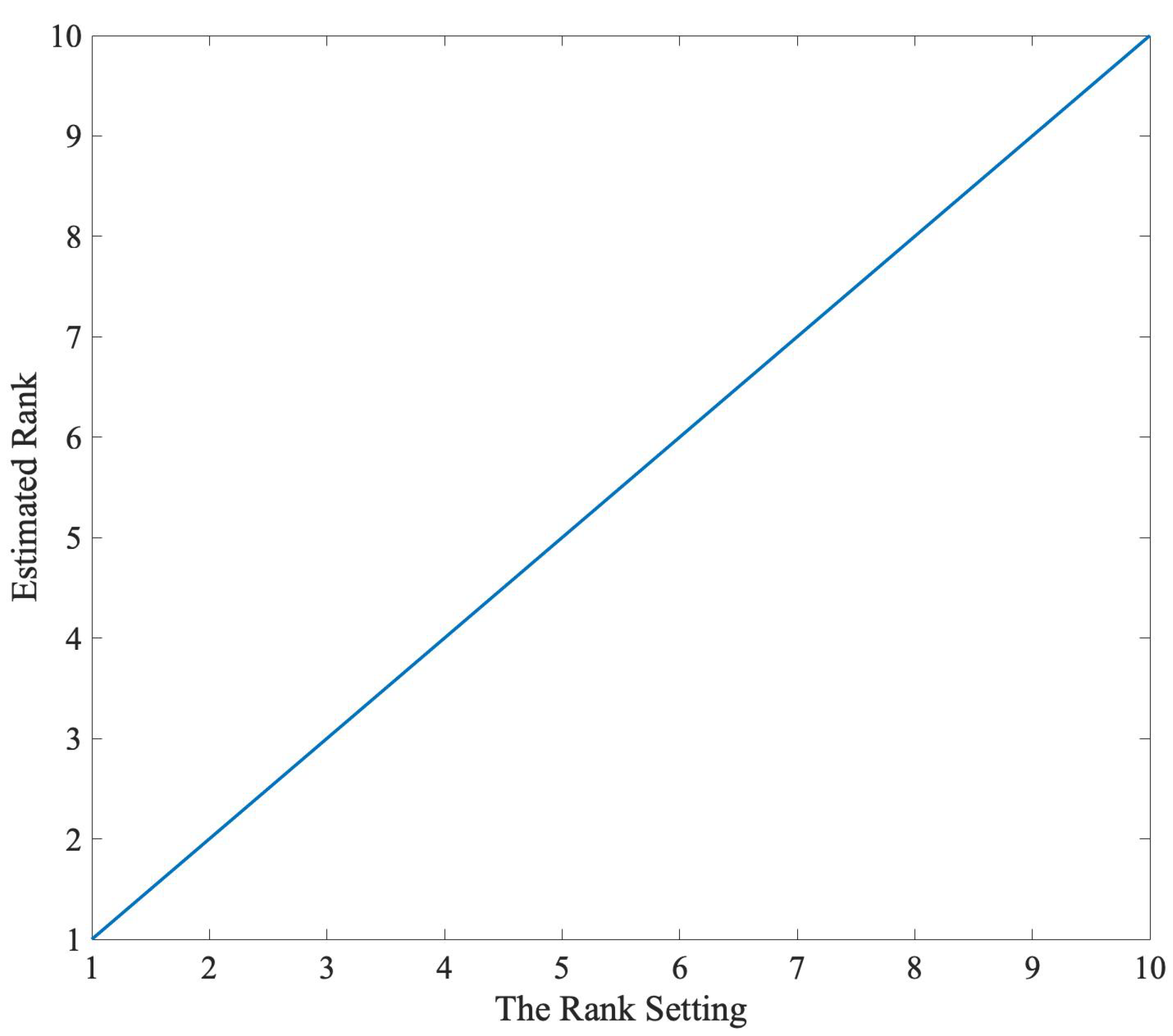

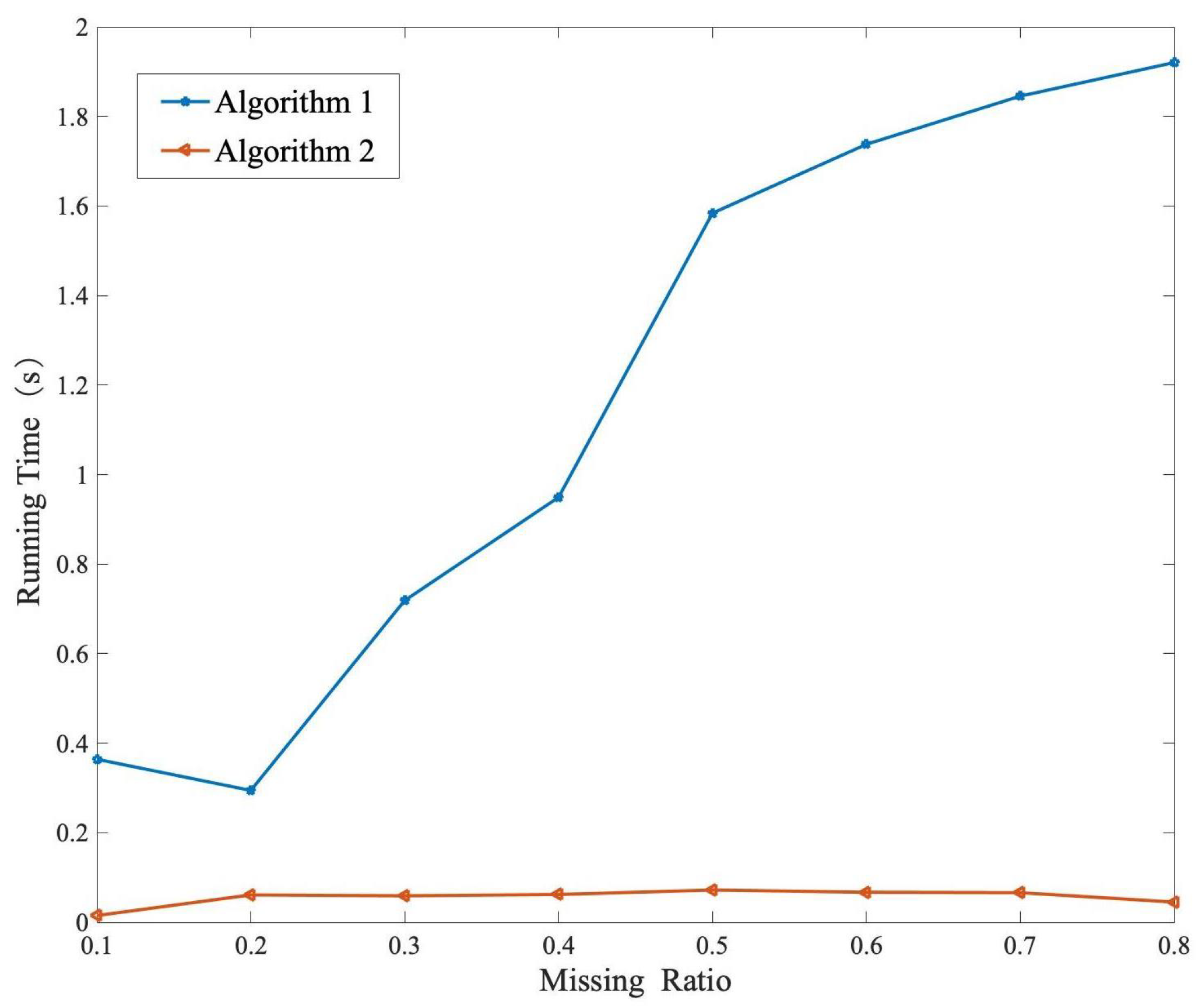

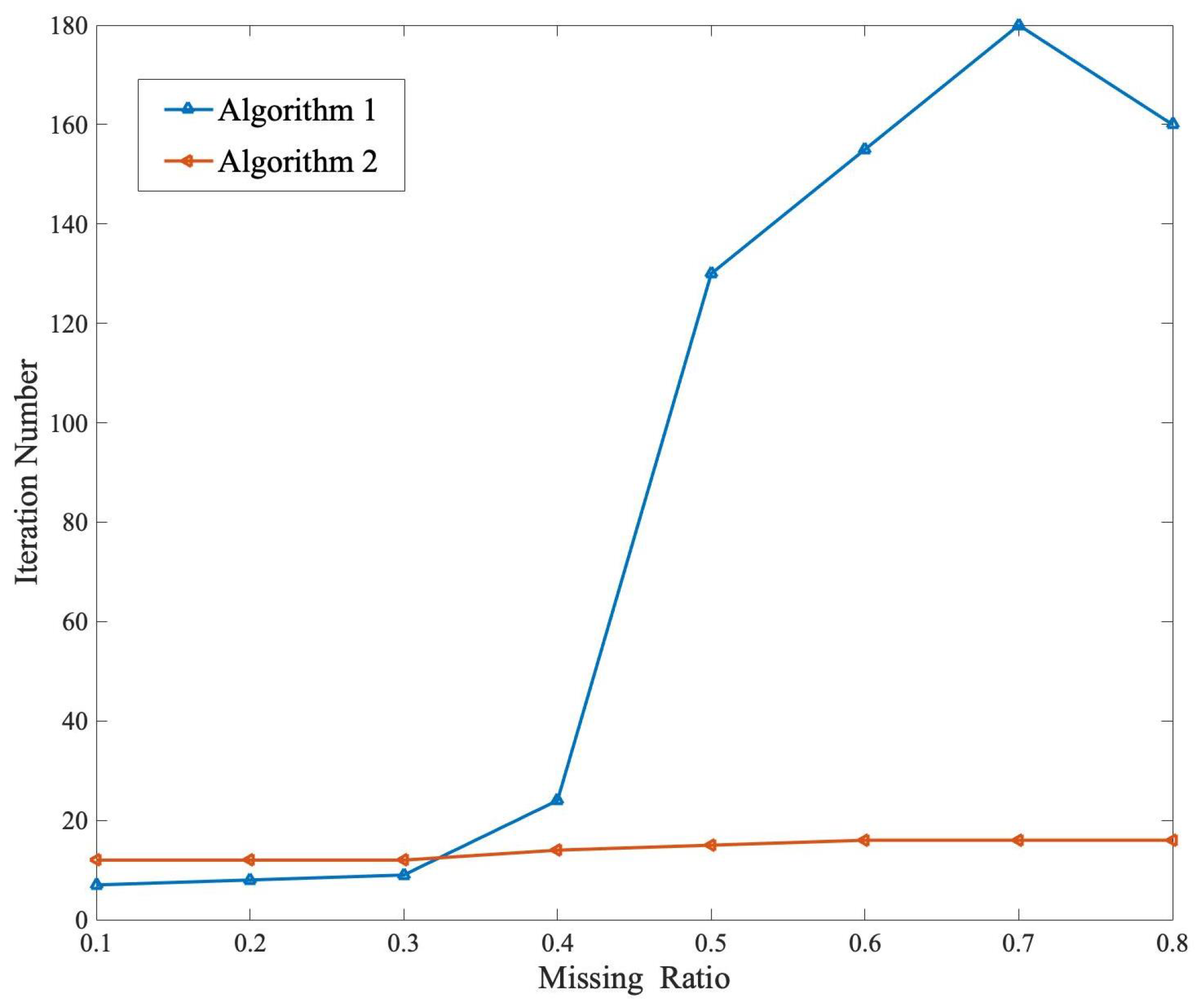

5.1. Synthetic Data Test of Proposed Methods

5.2. Real Data Test of Proposed Methods

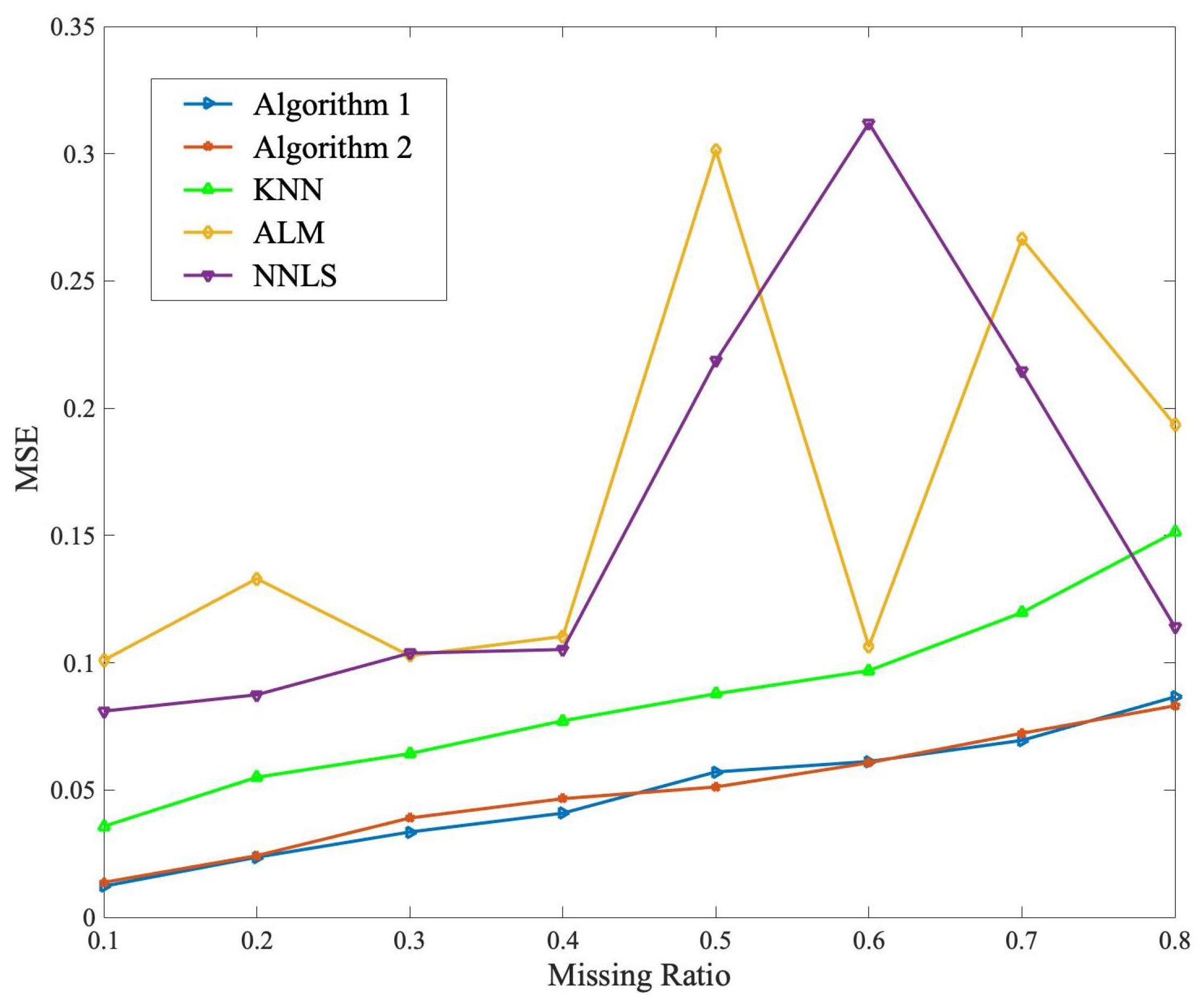

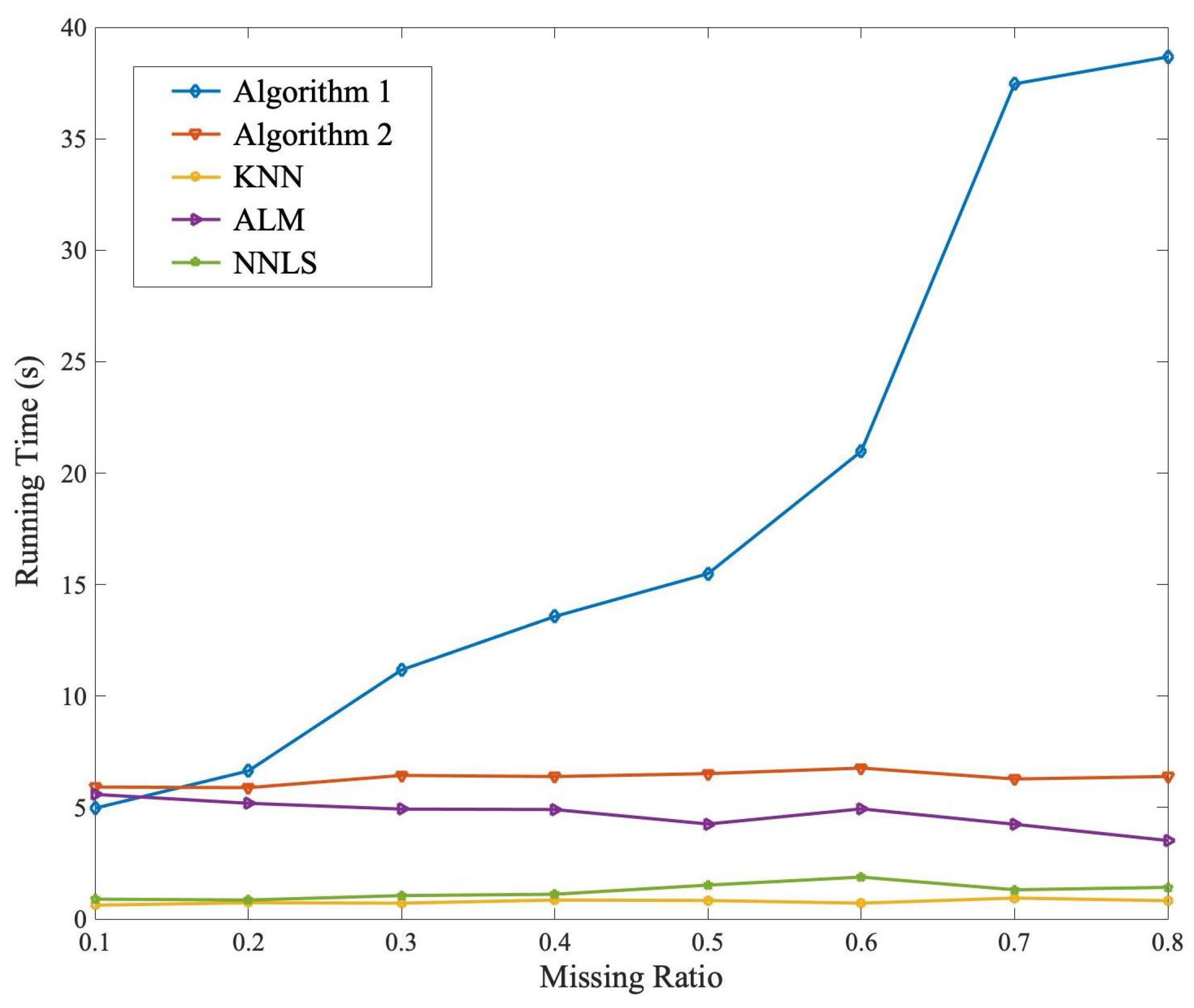

5.3. Comparison Test

- The K-nearest neighbor method (KNN) in [32], which predicts the missing annotation by its K nearest neighbors;

- The augmented Lagrange multiplier method for low-rank matrix recovery (ALM) in [27], where the annotation completion is formulated as a convex optimization model solved by the ALM algorithm;

- The nuclear norm regularized method for annotation completion (NNLS) in [28], where the annotation completion is formulated as an optimization model solved by the accelerated proximal gradient algorithm.
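To make the simplest of these baselines concrete, the following is a generic K-nearest-neighbor imputation sketch. It is an assumption about how such a baseline can be built, not the semi-supervised method of [32]: each missing entry is filled with the mean of that feature over the K closest rows, where closeness is measured on the features both rows have observed. The function name `knn_impute` and its fallback to the column mean are illustrative choices.

```python
import numpy as np


def knn_impute(D, mask, k=3):
    """Generic KNN imputation sketch (hypothetical helper, not the method of [32]).

    D    : data matrix; values at unobserved positions are ignored
    mask : boolean matrix, True where D is observed
    k    : number of neighboring rows averaged for each missing entry
    """
    D = np.asarray(D, dtype=float)
    X = np.where(mask, D, 0.0)
    m, _ = D.shape
    for i in range(m):
        missing_cols = np.flatnonzero(~mask[i])
        if missing_cols.size == 0:
            continue
        # Root-mean-square distance to every other row over shared observed features.
        dists = np.full(m, np.inf)
        for j in range(m):
            if j == i:
                continue
            shared = mask[i] & mask[j]
            if shared.any():
                dists[j] = np.sqrt(np.mean((D[i, shared] - D[j, shared]) ** 2))
        order = np.argsort(dists)
        for c in missing_cols:
            # Nearest rows that actually observe feature c.
            donors = [j for j in order if np.isfinite(dists[j]) and mask[j, c]][:k]
            if donors:
                X[i, c] = D[donors, c].mean()
            elif mask[:, c].any():
                X[i, c] = D[mask[:, c], c].mean()  # fallback: observed column mean
    return X
```

For example, if two rows agree on all shared features and only one of them is missing a value, `k=1` copies that value from the matching row.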

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sui, J.; Liu, Z.; Liu, L.; Li, X. Progress in Radar Emitter Signal Deinterleaving. J. Radars 2022, 11, 418–433.

2. Fu, Y.; Wang, X. Radar signal recognition based on modified semi-supervised SVM algorithm. In Proceedings of the 2017 IEEE 2nd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 25–26 March 2017; pp. 2336–2340.

3. Zhu, M.; Wang, S.; Li, Y. Model based Representation and Deinterleaving of Mixed Radar Pulse Sequences with Neural Machine Translation Network. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 1733–1752.

4. He, Y.; Zhu, Y.; Zhao, P. Panorama of national defense big data. Syst. Eng. Electron. 2016, 38, 1300–1305.

5. Li, A.; Zang, Q.; Sun, D.; Wang, M. A text feature-based approach for literature mining of lncRNA-protein interactions. Neurocomputing 2016, 206, 73–80.

6. Arlotta, L.; Crescenz, V.; Mecca, G.; Merialdo, P. Automatic annotation of data extracted from large web sites. In Proceedings of the 6th International Workshop on Web and Databases, San Diego, CA, USA, 12–13 June 2003; ACM: New York, NY, USA, 2003; pp. 7–12.

7. Li, M.; Li, X. Deep web data annotation method based on result schema. J. Comput. Appl. 2011, 31, 1733–1736.

8. Candes, E.J.; Li, X.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM (JACM) 2011, 58, 1–37.

9. Xu, H.; Caramanis, C.; Sanghavi, S. Robust PCA via Outlier Pursuit. IEEE Trans. Inf. Theory 2012, 58, 3047–3064.

10. Candes, E.J.; Tao, T. The power of convex relaxation: Near optimal matrix completion. IEEE Trans. Inf. Theory 2010, 56, 2053–2080.

11. Kulin, M.; Kazaz, T.; Moerman, I.; De Poorter, E. End to end learning from spectrum data: A deep learning approach for wireless signal identification in spectrum monitoring applications. IEEE Access 2018, 6, 18484–18501.

12. Recht, B.; Fazel, M.; Parrilo, P.A. Guaranteed minimum rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 2010, 52, 471–501.

13. Wen, Z.; Yin, W.; Zhang, Y. Solving a low rank factorization model for matrix completion by a nonlinear successive over relaxation algorithm. Math. Program. Comput. 2012, 4, 333–361.

14. Waters, A.; Sankaranarayanan, A.; Baraniuk, R. SpaRCS: Recovering low-rank and sparse matrices from compressive measurements. Neural Inf. Process. Syst. 2011, 24, 1089–1097.

15. Christodoulou, A.; Zhang, H.; Zhao, B.; Hitchens, T.K.; Ho, C.; Liang, Z.-P. High-Resolution Cardiovascular MRI by Integrating Parallel Imaging With Low-Rank and Sparse Modeling. IEEE Trans. Biomed. Eng. 2013, 60, 3083–3092.

16. Sykulski, M. RobustPCA: Decompose a Matrix into Low-Rank and Sparse Components. 2015. Available online: https://CRAN.R-project.org/package=rpca (accessed on 31 July 2015).

17. Chen, Y.; Xu, H.; Caramanis, C.; Sanghavi, S. Robust matrix completion and corrupted columns. In Proceedings of the 28th International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 873–880.

18. Negahban, S.; Wain, M. Restricted strong convexity and weighted matrix completion: Optimal bounds with noise. J. Mach. Learn. Res. 2012, 5, 1665–1697.

19. Dai, W.; Kerm, E.; Milenk, O. A geometric approach to low-rank matrix completion. IEEE Trans. Inf. Theory 2012, 58, 237–247.

20. Bai, H.; Ma, J.; Xiong, K.; Hu, F. Design of weighted matrix completion model in image inpainting. Syst. Eng. Electron. 2016, 38, 1703–1708.

21. Zhang, L.; Zhou, Z.; Gao, S.; Yin, J.; Lin, Z.; Ma, Y. Label information guided graph construction for semi-supervised learning. IEEE Trans. Image Process. 2017, 26, 4182–4192.

22. Keshavan, A.; Montanari, A.; Oh, S. Matrix completion from noisy entries. J. Mach. Learn. Res. 2010, 11, 2057–2078.

23. Recht, B. A simpler approach to matrix completion. J. Mach. Learn. Res. 2011, 12, 3413–3430.

24. Candes, E.; Plan, Y. Matrix completion with noise. Proc. IEEE 2010, 98, 925–936.

25. Wang, C.; Zhao, H.; Wang, J.; Li, X.; Huang, P. SAR image denoising via fast weighted nuclear norm minimization. Syst. Eng. Electron. 2019, 41, 1504–1508.

26. Hestenes, M. Multiplier and gradient methods. J. Optim. Theory Appl. 1969, 4, 303–320.

27. Lin, J.; Jiang, C.; Li, Q.; Shao, H.; Li, Y. Distributed method for joint power allocation and admission control based on ADMM Framework. J. Univ. Electron. Sci. Technol. China 2016, 45, 726–731.

28. Toh, K.; Yun, S. An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pac. J. Optim. 2010, 6, 615–640.

29. Shen, H.; Li, X.; Cheng, Q.; Zeng, C.; Yang, G.; Li, H.; Zhang, L. Missing information reconstruction of remote sensing data: A technical review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 61–85.

30. Lin, C.; Lai, K.; Chen, Z.; Chen, Z.-B.; Chen, J.-Y. Patch-based information reconstruction of cloud-contaminated multitemporal images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 163–174.

31. Parikh, N.; Boyd, S. Proximal Algorithms, Foundations and Trends in Optimization; Now Publishers Inc.: Delft, The Netherlands, 2014.

32. Chen, R. Semi-supervised k-nearest neighbor classification method. J. Image Graph. 2013, 18, 195–200.

33. Shi, Q.; Hong, M. Penalty dual Decomposition method for nonsmooth nonconvex optimization Part I: Algorithm and Convergence Analysis. IEEE Trans. Signal Process. 2020, 68, 4108–4122.

| Platform Label | Target Label | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | ⋯ | Feature |

|---|---|---|---|---|---|---|---|---|

| 1 | ⋯ | |||||||

| 1 | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |

| ⋯ | ||||||||

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋯ | ⋮ | |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋯ | ⋮ | |

| 1 | ⋯ | |||||||

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | |

| ⋯ |

| Notation | Explanation |

|---|---|

| X, D, E, , U, V | Matrix |

| Vector | |

| , , , , c | Scalar |

| Target Label () | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | Feature 6 | Feature 7 | Feature 8 | Feature 9 | Feature 10 | ⋯ |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.8331 | 0.9314 | 1.6636 | 1.1639 | 1.2384 | 1.0438 | 1.2527 | 1.0609 | 0.5221 | 0.8351 | ⋯ |

| 2 | 0.7860 | 1.3702 | 1.6861 | 1.6636 | 1.2148 | 0.8691 | 1.1024 | 1.7871 | 0.7318 | 1.1431 | ⋯ |

| 3 | 1.0400 | 0.9844 | 1.1685 | 1.1966 | 0.9242 | 0.6846 | 1.0263 | 1.0460 | 0.6473 | 0.8802 | ⋯ |

| 4 | 0.7558 | 1.1816 | 1.4044 | 1.5881 | 1.0996 | 0.7906 | 0.9751 | 1.7054 | 0.7131 | 0.9999 | ⋯ |

| 5 | 1.1372 | 1.5230 | 2.2505 | 2.0789 | 1.7841 | 1.4639 | 1.6405 | 2.1031 | 0.9132 | 1.3650 | ⋯ |

| 6 | 0.6587 | 0.8033 | 1.5688 | 1.2180 | 1.2643 | 1.1109 | 1.1562 | 1.2050 | 0.4966 | 0.7463 | ⋯ |

| 7 | 0.2884 | 0.5634 | 0.5258 | 0.6923 | 0.4723 | 0.4630 | 0.3422 | 0.7309 | 0.2969 | 0.5746 | ⋯ |

| 8 | 0.7313 | 0.8509 | 0.9388 | 1.1082 | 0.7031 | 0.3967 | 0.7450 | 1.1216 | 0.5658 | 0.6677 | ⋯ |

| 9 | 1.0431 | 1.3981 | 1.7029 | 1.7407 | 1.3799 | 1.1854 | 1.2670 | 1.7087 | 0.8046 | 1.3185 | ⋯ |

| 10 | 1.0597 | 1.3580 | 1.6473 | 1.9323 | 1.3356 | 0.9283 | 1.2509 | 2.0165 | 0.9090 | 1.1356 | ⋯ |

| 1 | 1.7201 | 1.7509 | 2.6238 | 2.1080 | 2.0970 | 1.9122 | 2.1279 | 1.7904 | 1.0234 | 1.7243 | ⋯ |

| 2 | 0.7612 | 1.3054 | 2.0316 | 1.5709 | 1.5117 | 1.3303 | 1.3453 | 1.6025 | 0.6299 | 1.2025 | ⋯ |

| 3 | 0.7386 | 0.9366 | 1.3114 | 1.1991 | 1.1835 | 1.2654 | 1.0069 | 1.0870 | 0.5154 | 1.0572 | ⋯ |

| 4 | 0.7747 | 1.0134 | 1.7442 | 1.3499 | 1.4382 | 1.4058 | 1.2767 | 1.2729 | 0.5502 | 1.0479 | ⋯ |

| 5 | 1.1288 | 1.1637 | 1.5743 | 1.2103 | 1.1755 | 1.0365 | 1.2772 | 0.9698 | 0.6280 | 1.1144 | ⋯ |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target Label () | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | Feature 6 | Feature 7 | Feature 8 | Feature 9 | Feature 10 | ⋯ |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.8331 | | 1.6636 | | | | 1.2527 | | 0.5221 | 0.8351 | ⋯ |

| 2 | 0.7860 | 1.3702 | | 1.6636 | 1.2148 | | | 1.7871 | 0.7318 | 1.1431 | ⋯ |

| 3 | | 0.9844 | 1.1685 | | 0.9242 | | 1.0263 | | | | ⋯ |

| 4 | 0.7558 | | 1.4044 | | | | | 1.7054 | 0.7131 | 0.9999 | ⋯ |

| 5 | 1.1372 | | | | 1.7841 | 1.4639 | | 2.1031 | 0.9132 | 1.3650 | ⋯ |

| 6 | | | 1.5688 | | 1.2643 | | | 1.2050 | 0.4966 | 0.7463 | ⋯ |

| 7 | 0.2884 | | 0.5258 | 0.6923 | 0.4723 | 0.4630 | 0.3422 | 0.7309 | 0.2969 | 0.5746 | ⋯ |

| 8 | 0.7313 | 0.8509 | | | | | 0.7450 | | 0.5658 | 0.6677 | ⋯ |

| 9 | 1.0431 | | | | 1.3799 | | 1.2670 | | | 1.3185 | ⋯ |

| 10 | | | 1.6473 | 1.9323 | | | 1.2509 | 2.0165 | 0.9090 | | ⋯ |

| 1 | | | 2.6238 | | 2.0970 | 1.9122 | 2.1279 | | | 1.7243 | ⋯ |

| 2 | 0.7612 | 1.3054 | | | 1.5117 | | | | 0.6299 | 1.2025 | ⋯ |

| 3 | | | 1.3114 | 1.1991 | | | 1.0069 | | 0.5154 | 1.0572 | ⋯ |

| 4 | | 1.0134 | | | 1.4382 | 1.4058 | | 1.2729 | 0.5502 | | ⋯ |

| 5 | | 1.1637 | | 1.2103 | 1.1755 | 1.0365 | 1.2772 | 0.9698 | 0.6280 | 1.1144 | ⋯ |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target Label () | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | Feature 6 | Feature 7 | Feature 8 | Feature 9 | Feature 10 | ⋯ |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.8331 | 0.9314 | 1.6636 | 1.1639 | 1.2384 | 1.0438 | 1.2527 | 1.0609 | 0.5221 | 0.8351 | ⋯ |

| 2 | 0.7860 | 1.3702 | 1.6861 | 1.6636 | 1.2148 | 1.1024 | 1.7871 | 1.7871 | 0.7318 | 1.1431 | ⋯ |

| 3 | 1.0400 | 0.9844 | 1.1685 | 1.1966 | 0.9242 | 0.6946 | 1.0263 | 1.0460 | 0.6473 | 0.8802 | ⋯ |

| 4 | 0.7558 | 1.1816 | 1.4044 | 1.5881 | 1.0996 | 0.7906 | 0.9751 | 1.7054 | 0.7131 | 0.9999 | ⋯ |

| 5 | 1.1372 | 1.5230 | 2.2505 | 2.0789 | 1.7841 | 1.4639 | 1.6405 | 2.1031 | 0.9132 | 1.3650 | ⋯ |

| 6 | 0.6587 | 0.8033 | 1.5688 | 1.2180 | 1.2643 | 1.1109 | 1.1562 | 1.2050 | 0.4966 | 0.7463 | ⋯ |

| 7 | 0.2884 | 0.5634 | 0.5258 | 0.6923 | 0.4723 | 0.4630 | 0.3422 | 0.7309 | 0.2969 | 0.5746 | ⋯ |

| 8 | 0.7313 | 0.8509 | 0.9388 | 1.1082 | 0.7031 | 0.3967 | 0.7450 | 1.1216 | 0.5658 | 0.6677 | ⋯ |

| 9 | 1.0431 | 1.3981 | 1.7029 | 1.7407 | 1.3799 | 1.1854 | 1.2670 | 1.7087 | 0.8046 | 1.3185 | ⋯ |

| 10 | 1.0597 | 1.3580 | 1.6473 | 1.9323 | 1.3356 | 0.9283 | 1.2509 | 2.0165 | 0.9090 | 1.1356 | ⋯ |

| 1 | 1.7201 | 1.7509 | 2.6238 | 2.1080 | 2.0970 | 1.9122 | 2.1279 | 1.7904 | 1.0234 | 1.7243 | ⋯ |

| 2 | 0.7612 | 1.3054 | 2.0316 | 1.5709 | 1.5117 | 1.3303 | 1.3453 | 1.6025 | 0.6299 | 1.2025 | ⋯ |

| 3 | 0.7386 | 0.9366 | 1.3114 | 1.1991 | 1.1835 | 1.2654 | 1.0069 | 1.0870 | 0.5154 | 1.0572 | ⋯ |

| 4 | 0.7747 | 1.0134 | 1.7442 | 1.3499 | 1.4382 | 1.4058 | 1.2767 | 1.2729 | 0.5502 | 1.0479 | ⋯ |

| 5 | 1.1288 | 1.1637 | 1.5743 | 1.2103 | 1.1755 | 1.0365 | 1.2772 | 0.9698 | 0.6280 | 1.1144 | ⋯ |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target Label () | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | Feature 6 | Feature 7 | Feature 8 | Feature 9 | Feature 10 | ⋯ |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.8331 | 0.9376 | 1.6636 | 1.1643 | 1.1978 | 1.0437 | 1.2527 | 1.0901 | 0.5221 | 0.8351 | ⋯ |

| 2 | 0.7860 | 1.3702 | 1.6875 | 1.6636 | 1.2148 | 0.8691 | 1.1693 | 1.7420 | 0.7318 | 1.1431 | ⋯ |

| 3 | 1.0413 | 0.9844 | 1.1685 | 1.1685 | 0.9242 | 0.6946 | 1.0263 | 1.1058 | 0.6496 | 0.8788 | ⋯ |

| 4 | 0.7558 | 1.0976 | 1.4044 | 1.5837 | 1.1597 | 0.7942 | 1.1111 | 1.7054 | 0.7131 | 0.9999 | ⋯ |

| 5 | 1.1372 | 1.6160 | 2.2437 | 2.0659 | 1.7841 | 1.4639 | 1.6631 | 2.1031 | 0.9132 | 1.3650 | ⋯ |

| 6 | 0.6683 | 0.8156 | 1.5688 | 1.2899 | 1.2643 | 1.1103 | 1.0857 | 1.2050 | 0.4966 | 0.7463 | ⋯ |

| 7 | 0.2884 | 0.5617 | 0.5258 | 0.6923 | 0.4723 | 0.4630 | 0.3422 | 0.7309 | 0.2969 | 0.5746 | ⋯ |

| 8 | 0.7313 | 0.8509 | 0.9844 | 1.1183 | 0.7166 | 0.6775 | 0.7450 | 1.1288 | 0.5658 | 0.6677 | ⋯ |

| 9 | 1.0431 | 1.3351 | 1.7504 | 1.6924 | 1.3799 | 0.4025 | 1.2670 | 1.5447 | 0.7677 | 1.3185 | ⋯ |

| 10 | 1.0970 | 1.3589 | 1.6473 | 1.9323 | 1.3597 | 0.2262 | 1.2509 | 2.0165 | 0.9090 | 1.2495 | ⋯ |

| 1 | 1.7189 | 1.8459 | 2.6238 | 2.1156 | 2.0970 | 1.9122 | 2.1279 | 1.7915 | 1.1190 | 1.7243 | ⋯ |

| 2 | 0.7612 | 1.3054 | 1.9913 | 1.5768 | 1.5117 | 1.2374 | 1.4113 | 1.5867 | 0.6299 | 1.2025 | ⋯ |

| 3 | 0.8057 | 0.9980 | 1.3114 | 1.1991 | 1.1721 | 1.2709 | 1.0069 | 1.1548 | 0.5154 | 1.0572 | ⋯ |

| 4 | 0.7834 | 1.0134 | 1.5256 | 1.4227 | 1.4382 | 1.4058 | 1.1975 | 1.2729 | 0.5502 | 1.0699 | ⋯ |

| 5 | 0.9414 | 1.1637 | 1.5289 | 1.2103 | 1.1755 | 1.0365 | 1.2772 | 0.9698 | 0.6280 | 1.1144 | ⋯ |

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target | Platf-1 | Platf-2 | ⋯ | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Label | FW | PW | PT | AM | AOA | FW | PW | PT | AM | AOA | ⋯ |

| 1 | 2.1200 | 780 | 95.2850 | 428.7933 | 1.7600 | 780 | 95.7800 | 428.7967 | ⋯ | ||

| 2 | 2.1400 | 800 | 95.5050 | 428.8067 | 1.8000 | 770 | 95.5050 | 428.7933 | ⋯ | ||

| 3 | 1.7200 | 820 | 95.5600 | 428.7933 | 1.9000 | 770 | 95.5050 | 428.7767 | ⋯ | ||

| 4 | 1.8000 | 830 | 95.5600 | 428.7867 | 1.9200 | 760 | 96.0750 | 428.8000 | ⋯ | ||

| 5 | 1.9200 | 820 | 95.5600 | 428.7767 | 2.1200 | 780 | 95.5050 | 428.7633 | ⋯ | ||

| 6 | 2.0800 | 830 | 95.6700 | 428.7700 | 2.1200 | 750 | 95.6700 | 428.7867 | ⋯ | ||

| 7 | 2.1200 | 840 | 95.6700 | 428.7900 | 1.7400 | 770 | 95.3950 | 428.7933 | ⋯ | ||

| 8 | 2.2000 | 840 | 95.6700 | 428.7833 | 1.7600 | 780 | 95.6700 | 428.7967 | ⋯ | ||

| 9 | 2.2000 | 850 | 95.6150 | 428.8000 | 1.9000 | 790 | 95.4500 | 428.7733 | ⋯ | ||

| 10 | 1.9000 | 840 | 95.6150 | 428.7700 | 1.9000 | 800 | 95.6150 | 428.8000 | ⋯ | ||

| 11 | 1.9200 | 870 | 95.5600 | 428.8000 | 1.9000 | 780 | 95.5050 | 428.8000 | ⋯ | ||

| 12 | 1.9000 | 870 | 95.5050 | 428.8000 | 1.7200 | 800 | 95.5600 | 428.8267 | ⋯ | ||

| 13 | 2.0600 | 880 | 95.5600 | 428.7700 | 1.9000 | 800 | 95.6700 | 428.7733 | ⋯ | ||

| 14 | 2.1200 | 880 | 95.5600 | 428.7833 | 1.8800 | 810 | 95.5050 | 428.8000 | ⋯ | ||

| 15 | 2.1400 | 890 | 95.5600 | 428.8000 | 1.7400 | 810 | 95.6700 | 428.7433 | ⋯ | ||

| 16 | 2.1600 | 890 | 95.6150 | 428.7900 | 1.8000 | 820 | 95.5600 | 428.7933 | ⋯ | ||

| 17 | 2.1600 | 900 | 95.5600 | 428.7933 | 1.9200 | 820 | 95.4500 | 428.7767 | ⋯ | ||

| 18 | 1.9200 | 890 | 95.6150 | 428.7767 | 1.8800 | 830 | 95.5600 | 428.8000 | ⋯ | ||

| 19 | 0.2200 | 730 | 95.3400 | 0.3533 | 1.8800 | 830 | 95.5600 | 428.8000 | ⋯ | ||

| 20 | 1.9000 | 900 | 95.5050 | 428.4467 | 2.1400 | 830 | 95.6150 | 428.7533 | ⋯ | ||

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target | Platf-1 | Platf-2 | ⋯ | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Label | FW | PW | PT | AM | AOA | FW | PW | PT | AM | DOA | ⋯ |

| 1 | 2.1200 | 95.2850 | 0 | 95.7800 | 428.7967 | ⋯ | |||||

| 2 | 800 | 95.5050 | 1.8000 | 95.5050 | 428.7933 | ⋯ | |||||

| 3 | 95.5600 | 428.7933 | 1.9000 | 95.5050 | 428.7767 | ⋯ | |||||

| 4 | 1.8000 | 830 | 428.7867 | 1.9200 | 760 | 96.0750 | 428.8000 | ⋯ | |||

| 5 | 95.5600 | 2.1200 | 780 | 95.5050 | 428.7633 | ⋯ | |||||

| 6 | 2.0800 | 830 | 428.7700 | 95.6700 | 428.7867 | ⋯ | |||||

| 7 | 95.6700 | 1.7400 | 770 | ⋯ | |||||||

| 8 | 2.2000 | 840 | 95.6700 | 428.7833 | 1.7600 | 95.6700 | ⋯ | ||||

| 9 | 2.2000 | 428.8000 | 1.9000 | 790 | 428.7733 | ⋯ | |||||

| 10 | 1.9000 | 840 | 95.6150 | 1.9000 | 800 | 95.6150 | 428.8000 | ⋯ | |||

| 11 | 1.9200 | 428.8000 | 1.9000 | 95.5050 | 428.8000 | ⋯ | |||||

| 12 | 870 | 428.8000 | 800 | 95.5600 | 428.8267 | ⋯ | |||||

| 13 | 95.5600 | 428.7700 | 428.7733 | ⋯ | |||||||

| 14 | 2.1200 | 880 | 428.7833 | 1.8800 | 810 | 95.5050 | 428.8000 | ⋯ | |||

| 15 | 2.1400 | 95.5600 | 428.8000 | 810 | 95.6700 | 428.7433 | ⋯ | ||||

| 16 | 2.1600 | 890 | 95.6150 | 428.7900 | 1.8000 | 428.7933 | ⋯ | ||||

| 17 | 2.1600 | 900 | 95.5600 | 428.7933 | 820 | 95.4500 | 428.7767 | ⋯ | |||

| 18 | 1.9200 | 890 | 428.7767 | 1.8800 | 830 | 95.5600 | 428.8000 | ⋯ | |||

| 19 | 730 | 830 | 95.5600 | 428.8000 | ⋯ | ||||||

| 20 | 1.9000 | 95.5050 | 2.1400 | 830 | 95.6150 | 428.7533 | ⋯ | ||||

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target | Platf-1 | Platf-2 | ⋯ | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Label | FW | PW | PT | AM | AOA | FW | PW | PT | AM | DOA | ⋯ |

| 1 | 2.1200 | 862.3198 | 95.2850 | 0 | 1.7002 | 769.1010 | 95.7800 | 428.7967 | ⋯ | ||

| 2 | 1.8810 | 800 | 95.5050 | 590.2473 | 1.8000 | 763.4213 | 95.5050 | 428.7933 | ⋯ | ||

| 3 | 1.7387 | 813.6904 | 95.5600 | 428.7933 | 1.9000 | 778.7780 | 95.5050 | 428.7767 | ⋯ | ||

| 4 | 1.8000 | 830 | 93.3096 | 428.7867 | 1.9200 | 760 | 96.0750 | 428.8000 | ⋯ | ||

| 5 | 2.0022 | 839.8333 | 95.5600 | 481.1485 | 2.1200 | 780 | 95.5050 | 428.7633 | ⋯ | ||

| 6 | 2.0800 | 830 | 93.5123 | 428.7700 | 1.7048 | 777.1674 | 95.6700 | 428.7867 | ⋯ | ||

| 7 | 2.0342 | 848.9644 | 95.6700 | 243.2014 | 1.7400 | 770 | 94.1180 | 543.5496 | ⋯ | ||

| 8 | 2.2000 | 840 | 95.6700 | 428.7833 | 1.7600 | 754.3832 | 95.6700 | 487.8920 | ⋯ | ||

| 9 | 2.2000 | 789.7436 | 93.3603 | 428.8000 | 1.9000 | 790 | 93.2589 | 428.7733 | ⋯ | ||

| 10 | 1.9000 | 840 | 95.6150 | 146.4476 | 1.9000 | 800 | 95.6150 | 428.8000 | ⋯ | ||

| 11 | 1.9200 | 811.1999 | 93.4410 | 428.8000 | 1.9000 | 756.8295 | 95.5050 | 428.8000 | ⋯ | ||

| 12 | 2.0224 | 870 | 94.6997 | 428.8000 | 1.7649 | 800 | 95.5600 | 428.8267 | ⋯ | ||

| 13 | 1.8753 | 837.7079 | 95.5600 | 428.7700 | 1.7816 | 784.7113 | 94.5026 | 428.7733 | ⋯ | ||

| 14 | 2.1200 | 880 | 94.4242 | 428.7833 | 1.8800 | 810 | 95.5050 | 428.8000 | ⋯ | ||

| 15 | 2.1400 | 847.0041 | 95.5600 | 428.8000 | 1.6587 | 810 | 95.6700 | 428.7433 | ⋯ | ||

| 16 | 2.1600 | 890 | 95.6150 | 428.7900 | 1.8000 | 784.9361 | 94.9520 | 428.7933 | ⋯ | ||

| 17 | 2.1600 | 900 | 95.5600 | 428.7933 | 1.7786 | 820 | 95.4500 | 428.7767 | ⋯ | ||

| 18 | 1.9200 | 890 | 94.8011 | 428.7767 | 1.8800 | 830 | 95.5600 | 428.8000 | ⋯ | ||

| 19 | 1.7863 | 730 | 95.2033 | 461.7466 | 1.8032 | 830 | 95.5600 | 428.8000 | ⋯ | ||

| 20 | 1.9000 | 813.5963 | 95.5050 | 423.7582 | 2.1400 | 830 | 95.6150 | 428.7533 | ⋯ | ||

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Target | Platf-1 | Platf-2 | ⋯ | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Label | FW | PW | PT | AM | AOA | FW | PW | PT | AM | DOA | ⋯ |

| 1 | 2.1200 | 826.1695 | 95.2850 | 0 | 1.7705 | 802.9382 | 95.7800 | 428.7967 | ⋯ | ||

| 2 | 1.8760 | 800 | 95.5050 | 480.4501 | 1.8000 | 800.3337 | 95.5050 | 428.7933 | ⋯ | ||

| 3 | 1.8730 | 822.1822 | 95.5600 | 428.7933 | 1.9000 | NaN | 95.5050 | 428.7767 | ⋯ | ||

| 4 | 1.8000 | 830 | 93.6472 | 428.7867 | 1.9200 | 760 | 96.0750 | 428.8000 | ⋯ | ||

| 5 | 1.8156 | 796.9825 | 95.5600 | 464.9850 | 2.1200 | 780 | 95.5050 | 428.7633 | ⋯ | ||

| 6 | 2.0800 | 830 | 93.6649 | 428.7700 | 1.7131 | 776.9018 | 95.6700 | 428.7867 | ⋯ | ||

| 7 | 1.8653 | 818.7611 | 95.6700 | 477.6912 | 1.7400 | 770 | 95.8159 | 470.2310 | ⋯ | ||

| 8 | 2.2000 | 840 | 95.6700 | 428.7833 | 1.7600 | 783.2492 | 95.6700 | 462.8508 | ⋯ | ||

| 9 | 2.2000 | 813.6863 | 95.3412 | 428.8000 | 1.9000 | 790 | 95.2221 | 428.7733 | ⋯ | ||

| 10 | 1.9000 | 840 | 95.6150 | 468.3993 | 1.9000 | 800 | 95.6150 | 428.8000 | ⋯ | ||

| 11 | 1.9200 | 807.3440 | 94.5981 | 428.8000 | 1.9000 | 784.6421 | 95.5050 | 428.8000 | ⋯ | ||

| 12 | 1.8600 | 870 | 95.6633 | 428.8000 | 1.7497 | 800 | 95.5600 | 428.8267 | ⋯ | ||

| 13 | 1.8397 | 807.5579 | 95.5600 | 428.7700 | 1.7306 | 784.8500 | 94.5049 | 428.7733 | ⋯ | ||

| 14 | 2.1200 | 880 | 95.4003 | 428.7833 | 1.8800 | 810 | 95.5050 | 428.8000 | ⋯ | ||

| 15 | 2.1400 | 811.4794 | 95.5600 | 428.8000 | 1.7390 | 810 | 95.6700 | 428.7433 | ⋯ | ||

| 16 | 2.1600 | 890 | 95.6150 | 428.7900 | 1.8000 | 794.3686 | 95.6510 | 428.7933 | ⋯ | ||

| 17 | 2.1600 | 900 | 95.5600 | 428.7933 | 1.7596 | 820 | 95.4500 | 428.7767 | ⋯ | ||

| 18 | 1.9200 | 890 | 95.7352 | 428.7767 | 1.8800 | 830 | 95.5600 | 428.8000 | ⋯ | ||

| 19 | 1.8809 | 730 | 96.7416 | 481.7037 | 1.7994 | 830 | 95.5600 | 428.8000 | ⋯ | ||

| 20 | 1.9000 | 826.6687 | 95.5050 | 482.3048 | 2.1400 | 830 | 95.6150 | 428.7533 | ⋯ | ||

| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋱ |

| Missing Ratio | Algorithm 1 | Algorithm 2 | KNN | ALM | NNLS |

|---|---|---|---|---|---|

| 0.1 | 0.0122 | 0.0137 | 0.0357 | 0.0810 | 0.1010 |

| 0.2 | 0.0236 | 0.0242 | 0.0550 | 0.0874 | 0.1330 |

| 0.3 | 0.0355 | 0.0390 | 0.0643 | 0.1038 | 0.1027 |

| 0.4 | 0.0409 | 0.0466 | 0.0772 | 0.1052 | 0.1104 |

| 0.5 | 0.0571 | 0.0512 | 0.0878 | 0.2186 | 0.3014 |

| 0.6 | 0.0607 | 0.0612 | 0.0969 | 0.3120 | 0.1064 |

| 0.7 | 0.0695 | 0.0723 | 0.1197 | 0.2144 | 0.2665 |

| 0.8 | 0.0866 | 0.0831 | 0.1513 | 0.1141 | 0.1934 |
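The comparison table above can be summarized programmatically. The sketch below transcribes its values and reports which method attains the smallest entry at each missing ratio, assuming the entries are recovery errors for which lower is better (the metric name is not stated in the table itself). The variable names are illustrative.

```python
import numpy as np

methods = ["Algorithm 1", "Algorithm 2", "KNN", "ALM", "NNLS"]
missing_ratios = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8])

# Rows follow missing_ratios, columns follow methods (values copied from the table).
errors = np.array([
    [0.0122, 0.0137, 0.0357, 0.0810, 0.1010],
    [0.0236, 0.0242, 0.0550, 0.0874, 0.1330],
    [0.0355, 0.0390, 0.0643, 0.1038, 0.1027],
    [0.0409, 0.0466, 0.0772, 0.1052, 0.1104],
    [0.0571, 0.0512, 0.0878, 0.2186, 0.3014],
    [0.0607, 0.0612, 0.0969, 0.3120, 0.1064],
    [0.0695, 0.0723, 0.1197, 0.2144, 0.2665],
    [0.0866, 0.0831, 0.1513, 0.1141, 0.1934],
])

# Method with the smallest error at each missing ratio.
best_per_ratio = [methods[i] for i in errors.argmin(axis=1)]

# Average error of each method across all missing ratios.
mean_error = dict(zip(methods, errors.mean(axis=0)))

for ratio, name in zip(missing_ratios, best_per_ratio):
    print(f"missing ratio {ratio:.1f}: lowest error from {name}")
```

Under that assumption, Algorithm 1 gives the smallest value at six of the eight missing ratios and Algorithm 2 at the remaining two (0.5 and 0.8), while KNN, ALM, and NNLS are never the best.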


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, W.; Yang, J.; Li, Q.; Lin, J.; Shao, H.; Sun, G. Cooperative Electromagnetic Data Annotation via Low-Rank Matrix Completion. Remote Sens. 2023, 15, 121. https://doi.org/10.3390/rs15010121
