1. Introduction

Yield, as the ultimate goal of agricultural cultivation, serves as the most direct economic parameter for evaluating field productivity [1]. Maize, one of the world’s major cereal crops, holds significant value in terms of food consumption, feed processing, and industrial production [2]. Monitoring the early stages of maize growth can improve crop fertility management. Accurate yield estimation using UAV remote sensing technology is not only valuable for economic decision-making and marketing planning for the maize crop in a given year but can also play a vital role in agricultural development [3,4]. Traditional monitoring of maize crops during reproductive stages typically involves destructive field sampling, while yield assessment relies on on-site surveys conducted during the harvest season. However, these conventional methods are time-consuming, labor-intensive, and costly, and the reliability of the resulting data must be assessed, particularly for multitemporal data. Consequently, optimizing maize monitoring methods and establishing highly accurate yield prediction models have emerged as pressing issues.

The advent of remote sensing technology has made the continuous monitoring of crop reproductive stages and yield assessment feasible [5]. Currently, remote sensing monitoring methods can be classified into satellite remote sensing and unmanned aerial vehicle (UAV) remote sensing. Satellite remote sensing provides broad coverage at low cost; however, it is susceptible to weather conditions and has a lower spatial resolution than UAV imagery, which restricts its capacity for the high-frequency monitoring of crops at smaller spatial scales [6]. UAVs equipped with diverse sensor types enable the rapid acquisition of crop growth information, leading to their extensive application in crop yield assessment, nutrient diagnosis, growth characteristic evaluation, and more [7]. Presently, various UAV sensors, including light detection and ranging (LIDAR), multispectral, and RGB cameras, have demonstrated immense potential in crop monitoring [8]. Multispectral imagery allows for the calculation of vegetation indices (VIs) that reflect crop growth conditions and facilitate yield estimation [9]. Onboard LIDAR systems accurately capture three-dimensional vegetation information, thereby improving the accuracy of vegetation parameter estimation during different crop reproductive stages. For instance, Liang et al. [10] predicted rice yield in small-scale fields in southern China by integrating RGB and multispectral imagery while monitoring leaf chlorophyll content (LCC) during reproductive stages using the normalized difference yellow index (NDYI). Patricia et al. [11] predicted grape yield using RGB spectral data and improved the linear relationship through spectral data integration. Gong et al. [12] estimated canola seed yield by utilizing canopy information and abundance data derived from UAV multispectral imagery, demonstrating that the product of normalized VI and short-stem leaf abundance provided the most accurate estimate of canola yield under varying nitrogen treatments. Yi et al. [13] collected multitemporal soybean remote sensing data using hyperspectral, LIDAR, and multispectral sensors, and estimated the leaf area index (LAI) through machine learning techniques. Luo et al. [14] predicted the height of maize and soybean crops using LIDAR point cloud data and compared the accuracy of height prediction at different point densities. The aforementioned studies confirm that, compared to other methods, UAV remote sensing enables more effective monitoring of crop growth and prediction of yield across diverse crop types.
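To make the idea of a vegetation index concrete, the following minimal sketch computes two normalized-difference indices from band reflectances. It assumes the commonly used definitions NDVI = (NIR − Red)/(NIR + Red) and NDYI = (Green − Blue)/(Green + Blue); the function names and the reflectance values are hypothetical and are not taken from the studies cited above.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index (assumed standard definition)."""
    return normalized_difference(nir, red)


def ndyi(green: float, blue: float) -> float:
    """Normalized difference yellowness index (assumed standard definition)."""
    return normalized_difference(green, blue)


def mean_index(index_fn, band_a, band_b) -> float:
    """Average a per-pixel index over all pixels of one plot."""
    values = [index_fn(a, b) for a, b in zip(band_a, band_b)]
    return sum(values) / len(values)


# Hypothetical reflectances for three pixels of a single plot.
nir_pixels = [0.50, 0.60, 0.40]
red_pixels = [0.10, 0.20, 0.10]
plot_ndvi = mean_index(ndvi, nir_pixels, red_pixels)
```

A plot-level mean such as `plot_ndvi` is one plausible way to turn an index image into a single predictor per plot and acquisition date; other aggregations (median, percentiles) would work equally well in this sketch.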

In recent research, remote sensing data have been integrated with various machine learning algorithms for crop yield prediction and analysis, taking into account the nonlinear growth characteristics of crops across multiple temporal phases [15]. Nonlinear machine learning models have demonstrated superior performance compared to traditional linear regression models. For instance, Tian et al. [16] integrated two remote sensing indicators with meteorological data using LSTM networks to estimate wheat yield. Tian et al. [17] developed an IPSO-BP neural network that assigned different weights to VIs and LAI during different reproductive stages to establish a regression model for yield estimation. Yang et al. [18] trained a convolutional neural network (CNN) classification model using hyperspectral imagery to extract spectral and RGB information relevant to maize characteristics, enabling maize yield estimation.

However, previous yield prediction models predominantly relied on UAV remote sensing data from a single sensor, failing to effectively utilize multimodal crop information. The fusion of multimodal data can overcome the limitations of unimodal features, which are primarily based on one-dimensional spectral information or two-dimensional RGB imagery. It allows for the effective extraction of multidimensional structural features of crops, thereby enhancing yield prediction accuracy. Multimodal data fusion has attracted significant attention and has been widely applied in various fields. For instance, Mou et al. [19] employed CNN and long short-term memory (LSTM) models to fuse nonintrusive data and develop a driver stress detection model. Multimodal data fusion has also been applied to yield prediction. Ma et al. [20] proposed a novel winter wheat yield prediction model based on multimodal imagery, demonstrating superior performance compared to individual modalities. Fei et al. [21] fused data from multiple sensors on UAVs using machine learning methods to enhance crop prediction accuracy. Although these studies effectively integrated multimodal remote sensing data, they did not fully incorporate the multitemporal information of crops.

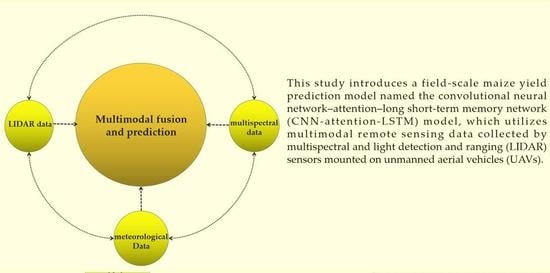

The main objective of this study is to predict maize yield through an innovative fusion model based on multitemporal and multimodal UAV data. To effectively capture the nonlinear features of different data modalities, this study combines the attention mechanism with the CNN-LSTM model to integrate multispectral, LIDAR, and meteorological data. Based on the above issues, the specific objectives of this study are as follows:

Build a CNN-attention-LSTM network model that fuses relevant growth parameters and climate data from multiple growth stages of maize to predict yield.

Compare the effects of different reproductive stages and sensor combinations on the yield prediction model, and identify the optimal multitemporal and multimodal combination of maize yield predictors.

Evaluate the robustness of the model using data collected in the test area, and verify the adaptability of the proposed CNN-attention-LSTM model for predicting maize yield under different fertilization treatments.
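The role of the attention step in such a network can be illustrated with a small sketch. This section does not specify the attention formulation used in the model, so the code below assumes plain scaled dot-product self-attention over a sequence of per-stage feature vectors, with queries, keys, and values all equal to the inputs (no learned projections). The feature values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Scaled dot-product self-attention without learned projections.

    sequence: list of T feature vectors, each of length d.
    Returns (outputs, weights), where weights[i][t] is the weight that
    time step i assigns to time step t.
    """
    d = len(sequence[0])
    scale = math.sqrt(d)
    outputs, weights = [], []
    for query in sequence:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in sequence]
        row = softmax(scores)
        weights.append(row)
        # Each output is the weighted sum of all time-step vectors.
        outputs.append([sum(w * vec[j] for w, vec in zip(row, sequence))
                        for j in range(d)])
    return outputs, weights


# Hypothetical features (e.g., a VI and a canopy height) at three growth stages.
stages = [[0.2, 0.1], [0.6, 0.4], [0.8, 0.7]]
attended, attention_weights = self_attention(stages)
```

Each row of `attention_weights` sums to one, so the output for a growth stage is a convex combination of the features from all stages. In a trained network the queries, keys, and values would normally come from learned linear projections, which this sketch omits.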

5. Conclusions

This study presents the development of a CNN-attention-LSTM network that integrates multitemporal and multimodal UAV data for field-scale maize yield prediction. This model exhibits high accuracy and robustness in predicting yield. Field experiments were conducted to validate the model’s performance, considering different nitrogen fertilizer treatments. The experimental results demonstrate that the CNN-attention-LSTM model outperforms existing models in maize yield prediction, achieving an R² value of 0.78 and an rRMSE of 8.27% [18,45]. The fusion of multimodal data also outperforms individual modalities. The self-attention mechanism in the model allows for the assignment of varying weights to different features. In comparison, the CNN-LSTM model without self-attention achieves an R² value of 0.73 and an rRMSE of 13.2%. These results indicate that self-attention effectively balances the information from multiple dimensions.
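For readers who want to reproduce this kind of comparison, the two reported metrics can be computed as in the sketch below. The definitions are assumptions, since they are not stated in this section: the coefficient of determination is taken as one minus the ratio of the residual to the total sum of squares, and rRMSE as the RMSE divided by the mean observed yield, expressed in percent. The yield values are hypothetical.

```python
import math


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot (assumed definition)."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rrmse(observed, predicted):
    """Relative RMSE in percent: 100 * RMSE / mean(observed) (assumed definition)."""
    n = len(observed)
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    return 100.0 * rmse / (sum(observed) / n)


# Hypothetical plot yields (t/ha) and model predictions.
observed_yield = [8.0, 10.0, 12.0]
predicted_yield = [9.0, 10.0, 11.0]
r2 = r_squared(observed_yield, predicted_yield)
relative_error = rrmse(observed_yield, predicted_yield)
```

Because rRMSE is normalized by the mean observed yield, it allows errors to be compared across fields or seasons with different yield levels, which is one reason it is often reported alongside the coefficient of determination.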

Based on these findings, this study enables the accurate monitoring and yield prediction of maize at the field scale, contributing to the advancement of precision agriculture. However, there are certain limitations in practical field operations to consider. Firstly, the field experiments focused on a single crop variety, and the collected data exhibited a degree of randomness. Secondly, this study utilized multispectral and LIDAR UAV sensors, neglecting the potential of hyperspectral technology, which has proven valuable in yield prediction [46].

Future research should focus on improving the training dataset to enhance the generalizability of the model. Additionally, given the demonstrated feasibility of multimodal fusion in yield prediction, further investigation into different fusion methods for various modalities is warranted. Despite its limitations, this study holds practical significance for field-scale maize yield prediction.