Design and Application of a UAV Autonomous Inspection System for High-Voltage Power Transmission Lines

Abstract

1. Introduction

- (1) Limited autonomy of inspection flights, which keeps inspection efficiency low: mainstream inspection flight robots still rely on combined human-machine inspection, in which an operator either flies the UAV manually to photograph the inspection targets, or first flies it manually to collect the photo waypoint locations and then re-flies the route to repeat the photography. This repeat photography requires manual participation, so both the degree of autonomy and the inspection efficiency remain low;

- (2) Flight control stability: in complex inspection environments, inspection flight robots have difficulty hovering stably and with high precision, which seriously affects accurate data collection. Flight control stability has therefore long been a difficulty for industrial applications;

- (3) Drone battery replacement: existing inspection flight robots generally lack the ability to recover quickly and accurately and to replace their power batteries, which prevents any significant improvement in inspection efficiency;

- (4) Fault detection in inspection data: inspection flight robots achieve low accuracy in intelligent recognition and generate inspection reports slowly.

- (1) A ground station system that automatically generates inspection plans, covering fine inspection, arc-chasing inspection, and channel inspection, is designed; the UAV operates autonomously according to these plans to achieve all-around inspection of high-voltage lines;

- (2) A self-developed flight control and navigation system provides highly robust, high-precision flight control for the UAV, solving the poor flight control stability of existing inspection robots;

- (3) A mechanical device for automatic battery replacement is designed, together with a mobile inspection scheme in which the equipment is transferred while the UAV performs its task, greatly improving inspection efficiency;

- (4) Several improvements to the YOLOX object detection model are proposed, and the improved YOLOX is deployed on a cloud server to improve detection accuracy.
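Contribution (2) concerns flight control stability, and Section 3.3 names a sliding mode control algorithm. To illustrate the general technique only, the sketch below simulates a single altitude axis under sliding mode control. The plant (a unit-mass double integrator with gravity already compensated), the constant disturbance, the boundary-layer saturation, and all gains (`lam`, `k`, `phi`) are assumptions made for illustration; this is not the authors' controller and does not use their parameters.

```python
def sat(s, phi):
    """Boundary-layer saturation, used in place of sign(s) to limit chattering."""
    return max(-1.0, min(1.0, s / phi))


def simulate_altitude_smc(z_ref=1.0, lam=2.0, k=2.0, phi=0.05,
                          disturbance=0.5, dt=0.001, t_end=10.0):
    """Simulate z'' = u + d under a sliding-mode altitude controller.

    Assumed plant: unit mass, gravity already compensated, constant
    disturbance d unknown to the controller with |d| < k.
    Returns the final (tracking error, vertical velocity).
    """
    z, v = 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        e = z_ref - z              # tracking error for a constant setpoint
        e_dot = -v                 # its time derivative
        s = e_dot + lam * e        # sliding surface s = e' + lambda * e
        # -lam * v cancels the known part of s'; the switching term drives s to 0.
        u = -lam * v + k * sat(s, phi)
        a = u + disturbance        # plant acceleration z'' = u + d
        v += a * dt                # semi-implicit Euler: update velocity first,
        z += v * dt                # then position with the new velocity
    return z_ref - z, v


err, vel = simulate_altitude_smc()
print(f"final error {err:.4f} m, final velocity {vel:.4f} m/s")
```

Because the controller only needs |d| < k rather than knowledge of d, the loop stays stable under the unmodeled disturbance; the price of the boundary layer is a small steady-state offset of about d * phi / (k * lam), which shrinks as `phi` is reduced at the cost of more aggressive switching.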

2. Related Work

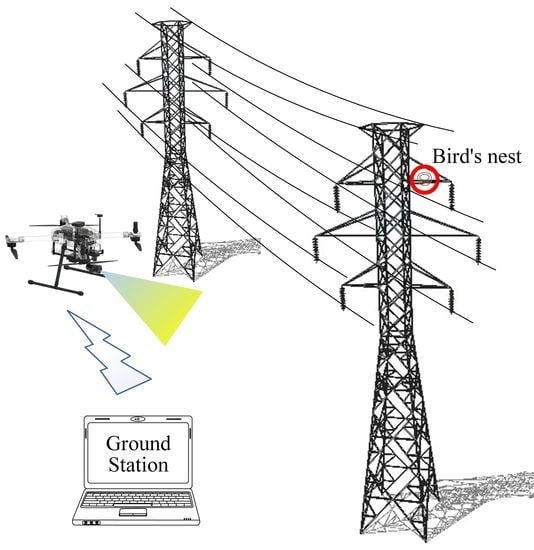

3. Structure of the System and Methods

3.1. Structure of the System

3.2. Path Planning

3.2.1. Fine Inspection

3.2.2. Arc-Chasing Inspection and Channel Inspection

3.3. Sliding Mode Control Algorithm

3.4. Intelligent Machine Nest

3.5. YOLOX

3.6. Improved YOLOX

3.6.1. Coordinate Attention

3.6.2. Varifocal Loss

3.6.3. SCYLLA-IoU

4. Experiments

4.1. Dataset Establishment

4.2. Evaluation Metrics

4.3. Model Training

4.4. Ablation Experiments

4.4.1. Attentional Mechanisms

4.4.2. Confidence Loss

4.4.3. Bounding Box Regression

4.5. System Validation

4.5.1. Flight Data

4.5.2. Inspection Data Collection

4.5.3. Results of the Bird’s Nest Detection

4.5.4. Comparison of Inspection Efficiency

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mao, T.; Huang, K.; Zeng, X.; Ren, L.; Wang, C.; Li, S.; Zhang, M.; Chen, Y. Development of Power Transmission Line Defects Diagnosis System for UAV Inspection based on Binocular Depth Imaging Technology. In Proceedings of the 2019 2nd International Conference on Electrical Materials and Power Equipment (ICEMPE), Guangzhou, China, 7–10 April 2019; pp. 478–481.

- Wu, C.; Song, J.G.; Zhou, H.; Yang, X.F.; Ni, H.Y.; Yan, W.X. Research on Intelligent Inspection System for HV Power Transmission Lines. In Proceedings of the 2022 IEEE International Conference on High Voltage Engineering and Application (ICHVE), Chongqing, China, 25–29 September 2022; pp. 1–4.

- Knapik, W.; Kowalska, M.K.; Odlanicka-Poczobutt, M.; Kasperek, M. The Internet of Things through Internet Access Using an Electrical Power Transmission System (Power Line Communication) to Improve Digital Competencies and Quality of Life of Selected Social Groups in Poland’s Rural Areas. Energies 2022, 15, 5018.

- Yong, Z.; Xiuxiao, Y.; Yi, F.; Shiyu, C. UAV Low Altitude Photogrammetry for Power Line Inspection. ISPRS Int. J. Geoinf. 2017, 6, 14.

- Chen, D.Q.; Guo, X.H.; Huang, P.; Li, F.H. Safety Distance Analysis of 500kV Transmission Line Tower UAV Patrol Inspection. IEEE Electromagn. Compat. Mag. 2020, 2, 124–128.

- Larrauri, J.I.; Sorrosal, G.; González, M. Automatic system for overhead power line inspection using an Unmanned Aerial Vehicle—RELIFO project. In Proceedings of the 2013 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 28–31 May 2013; pp. 244–252.

- Vemula, S.; Frye, M. Mask R-CNN Powerline Detector: A Deep Learning approach with applications to a UAV. In Proceedings of the 2020 AIAA/IEEE 39th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 11–15 October 2020; pp. 244–252.

- Zhao, Z.; Qi, H.; Qi, Y.; Zhang, K.; Zhai, Y.; Zhao, W. Detection method based on automatic visual shape clustering for pin-missing defect in transmission lines. IEEE Trans. Instrum. Meas. 2020, 69, 6080–6091.

- Debenest, P.; Guarnieri, M.; Takita, K.; Fukushima, E.F.; Hirose, S.; Tamura, K.; Kimura, A.; Kubokawa, H.; Iwama, N.; Shiga, F. Expliner—Robot for inspection of transmission lines. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 244–252.

- Matikainen, L.; Lehtomäki, M.; Ahokas, E.; Hyyppä, J.; Karjalainen, M.; Jaakkola, A.; Kukko, A.; Heinonen, T. Remote sensing methods for power line corridor surveys. ISPRS J. Photogramm. Remote Sens. 2016, 119, 10–31.

- Finotto, V.C.; Horikawa, O.; Hirakawa, A.; Chamas Filho, A. Pole type robot for distribution power line inspection. In Proceedings of the 2012 2nd International Conference on Applied Robotics for the Power Industry (CARPI), Zurich, Switzerland, 11–13 September 2012; pp. 244–252.

- Martinez, C.; Sampedro, C.; Chauhan, A.; Collumeau, J.F.; Campoy, P. The Power Line Inspection Software (PoLIS): A versatile system for automating power line inspection. Eng. Appl. Artif. Intell. 2018, 71, 293–314.

- Li, J.; Wang, L.; Shen, X. Unmanned aerial vehicle intelligent patrol-inspection system applied to transmission grid. In Proceedings of the 2018 2nd IEEE Conference on Energy Internet and Energy System Integration (EI2), Beijing, China, 20–22 October 2018; pp. 1–5.

- Calvo, A.; Silano, G.; Capitán, J. Mission Planning and Execution in Heterogeneous Teams of Aerial Robots supporting Power Line Inspection Operations. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems, Dubrovnik, Croatia, 21–24 June 2022.

- Luque-Vega, L.F.; Castillo-Toledo, B.; Loukianov, A.; Gonzalez-Jimenez, L.E. Power line inspection via an unmanned aerial system based on the quadrotor helicopter. In Proceedings of the MELECON 2014—2014 17th IEEE Mediterranean Electrotechnical Conference, Beirut, Lebanon, 13–16 April 2014; pp. 393–397.

- Li, Z.; Mu, S.; Li, J.; Wang, W.; Liu, Y. Transmission line intelligent inspection central control and mass data processing system and application based on UAV. In Proceedings of the 2016 4th International Conference on Applied Robotics for the Power Industry (CARPI), Jinan, China, 11–13 October 2016; pp. 1–5.

- Guan, H.; Sun, X.; Su, Y.; Hu, T.; Wang, H.; Wang, H.; Peng, C.; Guo, Q. UAV-lidar aids automatic intelligent powerline inspection. Int. J. Electr. Power Energy Syst. 2021, 130, 106987.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Xu, C.; Li, Q.; Zhou, Q.; Zhang, S.; Yu, D.; Ma, Y. Power Line-Guided Automatic Electric Transmission Line Inspection System. IEEE Trans. Instrum. Meas. 2022, 71, 1–18.

- Li, H.; Dong, Y.; Liu, Y.; Ai, J. Design and Implementation of UAVs for Bird’s Nest Inspection on Transmission Lines Based on Deep Learning. Drones 2022, 6, 252.

- Hao, J.; Wulin, H.; Jing, C.; Xinyu, L.; Xiren, M.; Shengbin, Z. Detection of bird nests on power line patrol using single shot detector. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; pp. 3409–3414.

- Jenssen, R.; Roverso, D. Intelligent Monitoring and Inspection of Power Line Components Powered by UAVs and Deep Learning. IEEE Power Energy Technol. Syst. J. 2019, 6, 11–21.

- Yang, L.; Fan, J.; Song, S.; Liu, Y. A light defect detection algorithm of power insulators from aerial images for power inspection. Neural. Comput. Appl. 2022, 34, 17951–17961.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

- Wang, W.; Ma, H.; Xia, M.; Weng, L.; Ye, X. Attitude and altitude controller design for quad-rotor type MAVs. Math. Probl. Eng. 2013, 2013, 587098.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 13713–13722.

- Zhang, H.; Wang, Y.; Dayoub, F.; Sunderhauf, N. Varifocalnet: An iou-aware dense object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 8514–8523.

- Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

- Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

- Li, Z.; Namiki, A.; Suzuki, S.; Wang, Q.; Zhang, T.; Wang, W. Application of Low-Altitude UAV Remote Sensing Image Object Detection Based on Improved YOLOv5. Appl. Sci. 2022, 12, 8314.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Chunxiang, Z.; Jiacheng, Q.; Wang, B. YOLOX on Embedded Device With CCTV & TensorRT for Intelligent Multicategories Garbage Identification and Classification. IEEE Sens. J. 2022, 22, 16522–16532.

- Rao, Y.; Zhao, W.; Tang, Y.; Zhou, J.; Lim, S.N.; Lu, J. Hornet: Efficient high-order spatial interactions with recursive gated convolutions. arXiv 2022, arXiv:2207.14284.

- Li, Y.; Yuan, G.; Wen, Y.; Hu, E.; Evangelidis, G.; Tulyakov, S.; Wang, Y.; Ren, J. EfficientFormer: Vision Transformers at MobileNet Speed. arXiv 2022, arXiv:2206.01191.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. Repvgg: Making vgg-style convnets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 13733–13742.

- Li, Y.; Wu, C.Y.; Fan, H.; Mangalam, K.; Xiong, B.; Malik, J.; Feichtenhofer, C. MViTv2: Improved Multiscale Vision Transformers for Classification and Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 4804–4814.

| No. | Inspection Target | Inspection Contents |
|---|---|---|
| 1 | High-voltage line towers | Detection of tower deformation or tilt, bird’s nests, etc. |
| 2 | Tower bases | Detection of the ground conditions near the tower base |
| 3 | Cross-arms | Detection of cross-arm tilt and other abnormalities |
| 4 | Insulators | Detection of damage to insulator skirts and grading rings |
| 5 | Bolts | Detection of installed bolts and nuts that have popped out or fallen off, etc. |
| 6 | Lightning rod and grounding device | Detection of significant changes in the discharge gap between them |
| 7 | Anti-vibration hammers | Detection of fractures in the anti-vibration hammer connections |
| 8 | Lead wire pegging point fixtures | Detection of small fittings such as wire pendant locking pins |
| 9 | Ground wire | Detection of loose strands and other ground wire defects |
| 10 | Ground wire pegging point fixtures | Detection of ground wire peg locking pins and similar parts |
| 11 | Power transmission lines | Detection of broken lines, foreign-object damage, etc. |
| 12 | Channel | Detection of over-height trees and illegal buildings in the line corridor |

| Methods | Size | Params | GFLOPs | (%) | (%) | | | |
|---|---|---|---|---|---|---|---|---|
| YOLOX_s | 768 × 1280 | 8.94 M | 64.22 | 97.7 | 70.05 | / | 77.6 | 69.6 |
| YOLOX_m | 768 × 1280 | 25.28 M | 176.94 | 97.8 | 70.63 | / | 75.6 | 70.5 |
| YOLOX_l | 768 × 1280 | 54.15 M | 373.61 | 97.9 | 70.65 | / | 74.9 | 70.6 |
| YOLOX_x | 768 × 1280 | 99.00 M | 676.87 | 97.8 | 70.37 | / | 77.3 | 70.0 |

| Methods | Size | Params | GFLOPs | (%) | (%) | | | |
|---|---|---|---|---|---|---|---|---|
| YOLOX_m | 768 × 1280 | 25.28 M | 176.94 | 97.8 | 70.63 | / | 75.6 | 70.5 |
| YOLOX_m + SENet | 768 × 1280 | 25.38 M | 176.96 | 97.8 | 70.30 | / | 75.2 | 70.0 |
| YOLOX_m + CBAM | 768 × 1280 | 25.47 M | 177.00 | 97.8 | 70.89 | / | 76.7 | 70.7 |
| YOLOX_m + ECA | 768 × 1280 | 25.28 M | 176.95 | 97.8 | 71.03 | / | 76.7 | 70.8 |
| YOLOX_m + CA | 768 × 1280 | 25.36 M | 177.03 | 97.8 | 71.25 | / | 76.8 | 71.0 |
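Section 3.6.1 adds Coordinate Attention (CA) to YOLOX_m, and the attention comparison above pits it against SENet, CBAM, and ECA. The dependency-free sketch below shows the CA forward pass following Hou et al.: average pooling along each spatial direction, a shared 1×1 convolution with a hard-swish non-linearity, and two sigmoid gates that reweight the input by row and by column. The random weights, the toy reduction ratio, and the omission of batch normalization and biases are simplifications for illustration; where the block sits inside the authors' network is not specified here.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def h_swish(x):
    # Hard-swish: x * ReLU6(x + 3) / 6
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0


def conv1x1(weights, feats):
    """1x1 convolution over a [C_in][L] map: out[o][p] = sum_c weights[o][c] * feats[c][p]."""
    length = len(feats[0])
    return [[sum(w * feats[c][p] for c, w in enumerate(row)) for p in range(length)]
            for row in weights]


def coordinate_attention(x, reduction=2, seed=0):
    """Coordinate-attention forward pass for a feature map x of shape [C][H][W].

    Weights are random placeholders (hypothetical); batch norm and biases are omitted.
    """
    c, h, w = len(x), len(x[0]), len(x[0][0])
    mid = max(1, c // reduction)
    rng = random.Random(seed)

    def rand_matrix(rows, cols):
        return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

    w_shared, w_h, w_w = rand_matrix(mid, c), rand_matrix(c, mid), rand_matrix(c, mid)

    # 1) Directional pooling: mean over width -> [C][H]; mean over height -> [C][W].
    z_h = [[sum(x[k][i]) / w for i in range(h)] for k in range(c)]
    z_w = [[sum(x[k][i][j] for i in range(h)) / h for j in range(w)] for k in range(c)]

    # 2) Concatenate along the spatial axis, then shared 1x1 conv + hard-swish.
    f = conv1x1(w_shared, [z_h[k] + z_w[k] for k in range(c)])
    f = [[h_swish(v) for v in row] for row in f]

    # 3) Split back into the H and W parts and build sigmoid gates in (0, 1).
    f_h = [row[:h] for row in f]
    f_w = [row[h:] for row in f]
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, f_h)]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, f_w)]

    # 4) Reweight every position by its row gate and its column gate.
    return [[[x[k][i][j] * g_h[k][i] * g_w[k][j] for j in range(w)]
             for i in range(h)] for k in range(c)]


x = [[[float(k + i - j) for j in range(4)] for i in range(3)] for k in range(4)]
y = coordinate_attention(x)
print(len(y), len(y[0]), len(y[0][0]))  # 4 3 4
```

Unlike SENet, which squeezes the whole spatial map into one value per channel, the two directional gates keep positional information along each axis, which is the property usually cited for CA on elongated or small targets.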

| Methods | (%) | (%) | | | |
|---|---|---|---|---|---|
| YOLOX_m + BCE | 97.8 | 70.63 | / | 75.6 | 70.5 |
| YOLOX_m + FL | 97.7 | 70.22 | 2.8 | 72.4 | 70.4 |
| YOLOX_m + VFL | 98.3 | 71.50 | 20.5 | 76.6 | 71.2 |
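The confidence-loss comparison above contrasts binary cross-entropy (BCE), focal loss (FL), and Varifocal Loss (VFL). The sketch below writes the three per-sample losses side by side, following Lin et al. for FL and Zhang et al. for VFL. The `alpha` and `gamma` defaults are those suggested in the original papers and are assumptions with respect to this work, as is treating `q` as the IoU between a predicted box and its matched ground truth.

```python
import math

EPS = 1e-12  # keeps log() away from 0


def _clip(p):
    return min(max(p, EPS), 1.0 - EPS)


def bce(p, t):
    """Binary cross-entropy for predicted probability p and (soft) target t."""
    p = _clip(p)
    return -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for a hard label y in {0, 1}; down-weights easy examples of both classes."""
    p = _clip(p)
    if y == 1:
        return -alpha * (1.0 - p) ** gamma * math.log(p)
    return -(1.0 - alpha) * p ** gamma * math.log(1.0 - p)


def varifocal_loss(p, q, alpha=0.75, gamma=2.0):
    """Varifocal loss with an IoU-aware target q in [0, 1].

    Positives (q > 0) are weighted by q instead of being down-weighted;
    only negatives (q == 0) receive the focal factor alpha * p**gamma.
    """
    p = _clip(p)
    if q > 0:
        return -q * (q * math.log(p) + (1.0 - q) * math.log(1.0 - p))
    return -alpha * p ** gamma * math.log(1.0 - p)


# An easy negative (low score, target 0) and an easy, well-localized positive.
print(bce(0.1, 0.0), focal_loss(0.1, 0), varifocal_loss(0.1, 0.0))
print(bce(0.9, 1.0), focal_loss(0.9, 1), varifocal_loss(0.9, 0.9))
```

The asymmetry is the design choice behind VFL: the many easy negatives are suppressed as in focal loss, while positives keep a BCE-style signal scaled by their localization quality, so well-localized boxes are pushed toward higher confidence.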

| Methods | (%) | (%) | | | |
|---|---|---|---|---|---|
| YOLOX_m + IoU | 97.8 | 70.63 | / | 75.6 | 70.5 |
| YOLOX_m + GIoU | 97.8 | 70.92 | / | 76.7 | 70.7 |
| YOLOX_m + DIoU | 97.8 | 71.12 | / | 76.7 | 70.8 |
| YOLOX_m + CIoU | 97.8 | 71.18 | / | 76.7 | 70.8 |
| YOLOX_m + SIoU | 97.9 | 71.36 | / | 76.7 | 71.1 |
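The bounding-box regression comparison above covers IoU, GIoU, DIoU, CIoU, and SIoU. The sketch below computes each similarity for axis-aligned boxes given as (x1, y1, x2, y2); the corresponding loss is 1 minus the value. The SIoU part follows Gevorgyan's angle, distance, and shape costs with shape exponent `theta = 4`; the `EPS` guards and the use of the smaller of the two angles between the center line and the axes are implementation assumptions, not details taken from this paper.

```python
import math

EPS = 1e-9


def _center_wh(b):
    """Center x, center y, width, height of a box (x1, y1, x2, y2)."""
    return (b[0] + b[2]) / 2, (b[1] + b[3]) / 2, b[2] - b[0], b[3] - b[1]


def _overlap(a, b):
    """Return IoU, union area, and the width/height of the smallest enclosing box."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    cw = max(a[2], b[2]) - min(a[0], b[0])
    ch = max(a[3], b[3]) - min(a[1], b[1])
    return inter / (union + EPS), union, cw, ch


def iou(a, b):
    return _overlap(a, b)[0]


def giou(a, b):
    value, union, cw, ch = _overlap(a, b)
    enclose = cw * ch
    return value - (enclose - union) / (enclose + EPS)


def diou(a, b):
    value, _, cw, ch = _overlap(a, b)
    ax, ay, _, _ = _center_wh(a)
    bx, by, _, _ = _center_wh(b)
    return value - ((ax - bx) ** 2 + (ay - by) ** 2) / (cw ** 2 + ch ** 2 + EPS)


def ciou(a, b):
    value = iou(a, b)
    _, _, aw, ah = _center_wh(a)
    _, _, bw, bh = _center_wh(b)
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = 4 / math.pi ** 2 * (math.atan(bw / (bh + EPS)) - math.atan(aw / (ah + EPS))) ** 2
    alpha = v / (1 - value + v + EPS)
    return diou(a, b) - alpha * v


def siou(a, b, theta=4.0):
    value, _, cw, ch = _overlap(a, b)
    ax, ay, aw, ah = _center_wh(a)
    bx, by, bw, bh = _center_wh(b)
    dx, dy = bx - ax, by - ay
    sigma = math.hypot(dx, dy) + EPS
    # Angle cost: sin(2 * angle) for the smaller angle between the center line and an axis.
    angle_cost = math.sin(2 * math.asin(min(abs(dx), abs(dy)) / sigma))
    gamma = 2 - angle_cost
    # Distance cost on center offsets normalized by the enclosing box.
    rho_x, rho_y = (dx / (cw + EPS)) ** 2, (dy / (ch + EPS)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))
    # Shape cost on relative width/height differences.
    omega_w = abs(aw - bw) / (max(aw, bw) + EPS)
    omega_h = abs(ah - bh) / (max(ah, bh) + EPS)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta
    return value - (distance_cost + shape_cost) / 2


pred, target = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
for fn in (iou, giou, diou, ciou, siou):
    print(fn.__name__, "loss:", round(1 - fn(pred, target), 4))
```

Each variant adds a penalty to plain IoU: GIoU for empty space in the enclosing box, DIoU for center distance, CIoU for aspect-ratio mismatch, and SIoU for center distance weighted by the direction of the offset plus a shape term.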

| YOLOX_m | CA | VFL | SIoU | Params | GFLOPs | (%) |
|---|---|---|---|---|---|---|
| ✓ | | | | 25.28 M | 176.94 | 70.63 |
| ✓ | ✓ | | | 25.36 M | 177.03 | 71.25 (+0.62) |
| ✓ | ✓ | ✓ | | 25.36 M | 177.03 | 72.12 (+0.87) |
| ✓ | ✓ | ✓ | ✓ | 25.36 M | 177.03 | 72.85 (+0.73) |
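The increments in the ablation table can be checked directly from the reported values. The snippet below recomputes the per-step gains, the total gain of the full model over the YOLOX_m baseline, and the parameter overhead; the relative gain is a derived figure computed here, not one reported in the table.

```python
# Values copied from the ablation table: YOLOX_m baseline, then + CA, + VFL, + SIoU.
steps = ["YOLOX_m", "+ CA", "+ VFL", "+ SIoU"]
metric = [70.63, 71.25, 72.12, 72.85]      # the (%) column of the table
params_m = [25.28, 25.36, 25.36, 25.36]    # parameters, millions

# Per-step gains (each row relative to the row above it).
deltas = [round(cur - prev, 2) for prev, cur in zip(metric, metric[1:])]
total_gain = round(metric[-1] - metric[0], 2)
relative_gain_pct = round(100 * (metric[-1] - metric[0]) / metric[0], 2)
extra_params_m = round(params_m[-1] - params_m[0], 2)

for name, d in zip(steps[1:], deltas):
    print(f"{name}: +{d:.2f}")
print(f"total: +{total_gain:.2f} points ({relative_gain_pct:.2f}% relative), "
      f"+{extra_params_m:.2f} M parameters")
```

Only CA adds parameters; VFL and SIoU change the training losses, so the last two rows improve the metric at no extra inference cost.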

| Technical Index | Our Inspection Scheme | Combined Human-Machine Inspection Scheme | Traditional Manual Inspection Scheme |
|---|---|---|---|
| Net time for fine inspection | 5 min | 10–20 min | 50 min |
| Average number of towers inspected per day | 40 | 20 | 5 |
| Maximum number of towers inspected in a single sortie | 6 | 2 | 1 |
| Endurance of a single inspection sortie | 42 min | 25 min | / |
| Maximum inspection distance of a single sortie | 5000 m | 2000 m | / |
| Maximum number of waypoints inspected | 1000 | 300 | / |
| Battery replacement time | <3 min | 5 min | / |
| Number of staff | 1 | 3 | 6 |
| Inspection report issuance time | 1 day | 2 days | 3–4 days |
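A per-person productivity figure helps put the staffing row of the comparison table in context. The snippet divides the average number of towers inspected per day by the number of staff for each scheme; this ratio is computed here for illustration, is not reported by the authors, and assumes every staff member counts equally.

```python
# Values copied from the comparison table: (average towers per day, number of staff).
schemes = {
    "ours": (40, 1),
    "human-machine": (20, 3),
    "manual": (5, 6),
}

# Derived metric (not in the table): towers inspected per staff member per day.
per_staff = {name: towers / staff for name, (towers, staff) in schemes.items()}

gain_vs_hm = per_staff["ours"] / per_staff["human-machine"]
gain_vs_manual = per_staff["ours"] / per_staff["manual"]

for name, value in per_staff.items():
    print(f"{name}: {value:.2f} towers per person per day")
print(f"ours vs human-machine: {gain_vs_hm:.1f}x; ours vs manual: {gain_vs_manual:.1f}x")
```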

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Zhang, Y.; Wu, H.; Suzuki, S.; Namiki, A.; Wang, W. Design and Application of a UAV Autonomous Inspection System for High-Voltage Power Transmission Lines. Remote Sens. 2023, 15, 865. https://doi.org/10.3390/rs15030865

Li Z, Zhang Y, Wu H, Suzuki S, Namiki A, Wang W. Design and Application of a UAV Autonomous Inspection System for High-Voltage Power Transmission Lines. Remote Sensing. 2023; 15(3):865. https://doi.org/10.3390/rs15030865

Chicago/Turabian Style: Li, Ziran, Yanwen Zhang, Hao Wu, Satoshi Suzuki, Akio Namiki, and Wei Wang. 2023. "Design and Application of a UAV Autonomous Inspection System for High-Voltage Power Transmission Lines" Remote Sensing 15, no. 3: 865. https://doi.org/10.3390/rs15030865