Remote Sensing Object Detection in the Deep Learning Era—A Review

Abstract

1. Introduction

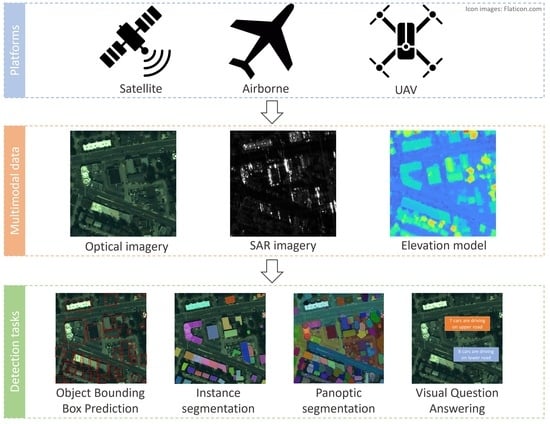

2. Overview of EO Sensors and Data

2.1. 2D Passive Data: Optical Sensors and Images

2.2. 2D Active Data: Synthetic Aperture Radar Data

2.3. 3D Data: LiDAR and Photogrammetry Data

3. An Overview of Remote Sensing Object Detection and Segmentation

3.1. Common Pipeline for Object Detection in EO Data

3.2. Deep Learning Methods for Object Detection in EO

3.2.1. Object Bounding Box Prediction

Two-Stage Object Detection Methods

Single-Stage Object Detection Methods

3.2.2. Instance Segmentation in EO Data

Detection-and-Segmentation Methods

Segmentation-and-Detection Methods

Single-Stage Methods

Self-Attention Methods

3.2.3. Panoptic Segmentation in EO Data

3.3. Object Detection with Multi-Modal Data

3.4. Meta-Learning for X-Shot Problem and Unsupervised Learning in Object Detection

3.5. Language and Foundational Models in EO Object Detection

3.5.1. General Language Model for Object Detection

3.5.2. Foundational Model for Object Detection

4. An Overview of Commonly Used Public EO Datasets

4.1. Object Detection Datasets

4.2. Instance Segmentation, Panoptic Segmentation, and Multi-Task Datasets

5. Applications of EO Object Detection

5.1. Urban and Civilian Applications

5.2. Environmental–Ecological Monitoring and Management

5.3. Agricultural and Forestry Applications

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Sirmacek, B.; Unsalan, C. A Probabilistic Framework to Detect Buildings in Aerial and Satellite Images. IEEE Trans. Geosci. Remote Sens. 2010, 49, 211–221. [Google Scholar] [CrossRef]

- Cheng, G.; Han, J. A Survey on Object Detection in Optical Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28. [Google Scholar] [CrossRef]

- Yao, Q.; Hu, X.; Lei, H. Multiscale Convolutional Neural Networks for Geospatial Object Detection in VHR Satellite Images. IEEE Geosci. Remote Sens. Lett. 2020, 18, 23–27. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–5 December 2012; Curran Associates: New York, NY, USA, 2012; Volume 25. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef]

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136. [Google Scholar] [CrossRef]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016. [Google Scholar]

- Zhou, B.; Zhao, H.; Puig, X.; Fidler, S.; Barriuso, A.; Torralba, A. Scene Parsing Through ADE20K Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9404–9413. [Google Scholar]

- Wang, Y.; Bashir, S.M.A.; Khan, M.; Ullah, Q.; Wang, R.; Song, Y.; Guo, Z.; Niu, Y. Remote Sensing Image Super-Resolution and Object Detection: Benchmark and State of the Art. Expert Syst. Appl. 2022, 197, 116793. [Google Scholar] [CrossRef]

- Sumbul, G.; Cinbis, R.G.; Aksoy, S. Multisource Region Attention Network for Fine-Grained Object Recognition in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4929–4937. [Google Scholar] [CrossRef]

- Li, X.; Du, Z.; Huang, Y.; Tan, Z. A Deep Translation (GAN) Based Change Detection Network for Optical and SAR Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2021, 179, 14–34. [Google Scholar] [CrossRef]

- Li, H.; Zhu, F.; Zheng, X.; Liu, M.; Chen, G. MSCDUNet: A Deep Learning Framework for Built-up Area Change Detection Integrating Multispectral, SAR, and VHR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5163–5176. [Google Scholar] [CrossRef]

- Biffi, C.; McDonagh, S.; Torr, P.; Leonardis, A.; Parisot, S. Many-Shot from Low-Shot: Learning to Annotate Using Mixed Supervision for Object Detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 35–50. [Google Scholar]

- Majee, A.; Agrawal, K.; Subramanian, A. Few-Shot Learning for Road Object Detection. In Proceedings of the AAAI Workshop on Meta-Learning and MetaDL Challenge, PMLR, Virtual, 9 February 2021; pp. 115–126. [Google Scholar]

- Sumbul, G.; Cinbis, R.G.; Aksoy, S. Fine-Grained Object Recognition and Zero-Shot Learning in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2017, 56, 770–779. [Google Scholar] [CrossRef]

- Kemker, R.; Luu, R.; Kanan, C. Low-Shot Learning for the Semantic Segmentation of Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6214–6223. [Google Scholar] [CrossRef]

- Li, A.; Lu, Z.; Wang, L.; Xiang, T.; Wen, J.-R. Zero-Shot Scene Classification for High Spatial Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4157–4167. [Google Scholar] [CrossRef]

- Pal, D.; Bundele, V.; Banerjee, B.; Jeppu, Y. SPN: Stable Prototypical Network for Few-Shot Learning-Based Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2021, 19, 5506905. [Google Scholar] [CrossRef]

- Zhu, D.; Xia, S.; Zhao, J.; Zhou, Y.; Niu, Q.; Yao, R.; Chen, Y. Spatial Hierarchy Perception and Hard Samples Metric Learning for High-Resolution Remote Sensing Image Object Detection. Appl. Intell. 2022, 52, 3193–3208. [Google Scholar] [CrossRef]

- Gong, P.; Li, X.; Zhang, W. 40-Year (1978–2017) Human Settlement Changes in China Reflected by Impervious Surfaces from Satellite Remote Sensing. Sci. Bull. 2019, 64, 756–763. [Google Scholar] [CrossRef]

- Zhang, X.; Han, L.; Han, L.; Zhu, L. How Well Do Deep Learning-Based Methods for Land Cover Classification and Object Detection Perform on High Resolution Remote Sensing Imagery? Remote Sens. 2020, 12, 417. [Google Scholar] [CrossRef]

- Kadhim, N.; Mourshed, M. A Shadow-Overlapping Algorithm for Estimating Building Heights from VHR Satellite Images. IEEE Geosci. Remote Sens. Lett. 2017, 15, 8–12. [Google Scholar] [CrossRef]

- Zhang, Y.; Mishra, R.K. A Review and Comparison of Commercially Available Pan-Sharpening Techniques for High Resolution Satellite Image Fusion. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 182–185. [Google Scholar]

- Pettorelli, N.; Vik, J.O.; Mysterud, A.; Gaillard, J.-M.; Tucker, C.J.; Stenseth, N.C. Using the Satellite-Derived NDVI to Assess Ecological Responses to Environmental Change. Trends Ecol. Evol. 2005, 20, 503–510. [Google Scholar] [CrossRef]

- Park, H.G.; Yun, J.P.; Kim, M.Y.; Jeong, S.H. Multichannel Object Detection for Detecting Suspected Trees with Pine Wilt Disease Using Multispectral Drone Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8350–8358. [Google Scholar] [CrossRef]

- Zhang, B.; Wu, Y.; Zhao, B.; Chanussot, J.; Hong, D.; Yao, J.; Gao, L. Progress and Challenges in Intelligent Remote Sensing Satellite Systems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1814–1822. [Google Scholar] [CrossRef]

- Yu, J.-Y.; Huang, D.; Wang, L.-Y.; Guo, J.; Wang, Y.-H. A Real-Time on-Board Ship Targets Detection Method for Optical Remote Sensing Satellite. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 204–208. [Google Scholar]

- Yang, J.; Li, D.; Jiang, X.; Chen, S.; Hanzo, L. Enhancing the Resilience of Low Earth Orbit Remote Sensing Satellite Networks. IEEE Netw. 2020, 34, 304–311. [Google Scholar] [CrossRef]

- Soumekh, M. Reconnaissance with Slant Plane Circular SAR Imaging. IEEE Trans. Image Process. 1996, 5, 1252–1265. [Google Scholar] [CrossRef]

- Lee, J.-S.; Grunes, M.R.; Pottier, E. Quantitative Comparison of Classification Capability: Fully Polarimetric versus Dual and Single-Polarization SAR. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2343–2351. [Google Scholar]

- Leigh, S.; Wang, Z.; Clausi, D.A. Automated Ice–Water Classification Using Dual Polarization SAR Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 5529–5539. [Google Scholar] [CrossRef]

- Wang, J.; Lin, Y.; Guo, J.; Zhuang, L. SSS-YOLO: Towards More Accurate Detection for Small Ships in SAR Image. Remote Sens. Lett. 2021, 12, 93–102. [Google Scholar] [CrossRef]

- Chang, Y.-L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.-Y.; Lee, W.-H. Ship Detection Based on YOLOv2 for SAR Imagery. Remote Sens. 2019, 11, 786. [Google Scholar] [CrossRef]

- Fingas, M.; Brown, C. Review of Oil Spill Remote Sensing. Mar. Pollut. Bull. 2014, 83, 9–23. [Google Scholar] [CrossRef] [PubMed]

- Hasimoto-Beltran, R.; Canul-Ku, M.; Díaz Méndez, G.M.; Ocampo-Torres, F.J.; Esquivel-Trava, B. Ocean Oil Spill Detection from SAR Images Based on Multi-Channel Deep Learning Semantic Segmentation. Mar. Pollut. Bull. 2023, 188, 114651. [Google Scholar] [CrossRef] [PubMed]

- Domg, Y.; Milne, A.; Forster, B. Toward Edge Sharpening: A SAR Speckle Filtering Algorithm. IEEE Trans. Geosci. Remote Sens. 2001, 39, 851–863. [Google Scholar] [CrossRef]

- Lee, J.-S.; Wen, J.-H.; Ainsworth, T.L.; Chen, K.-S.; Chen, A.J. Improved Sigma Filter for Speckle Filtering of SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2008, 47, 202–213. [Google Scholar]

- Fang, Q.; Wang, Z. Cross-Modality Attentive Feature Fusion for Object Detection in Multispectral Remote Sensing Imagery. Pattern Recognit. 2022, 130, 108786. [Google Scholar] [CrossRef]

- Sun, W.; Wang, R. Fully Convolutional Networks for Semantic Segmentation of Very High Resolution Remotely Sensed Images Combined with DSM. IEEE Geosci. Remote Sens. Lett. 2018, 15, 474–478. [Google Scholar] [CrossRef]

- Lee, B.; Wei, Y.; Guo, I.Y. Automatic Parking of Self-Driving Car Based on Lidar. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 241–246. [Google Scholar] [CrossRef]

- Khodadadzadeh, M.; Li, J.; Prasad, S.; Plaza, A. Fusion of Hyperspectral and LiDAR Remote Sensing Data Using Multiple Feature Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2971–2983. [Google Scholar] [CrossRef]

- Zhang, J. Multi-Source Remote Sensing Data Fusion: Status and Trends. Int. J. Image Data Fusion 2010, 1, 5–24. [Google Scholar] [CrossRef]

- Qin, R. Rpc Stereo Processor (Rsp)–a Software Package for Digital Surface Model and Orthophoto Generation from Satellite Stereo Imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 77. [Google Scholar] [CrossRef]

- Qin, R. A Critical Analysis of Satellite Stereo Pairs for Digital Surface Model Generation and a Matching Quality Prediction Model. ISPRS J. Photogramm. Remote Sens. 2019, 154, 139–150. [Google Scholar] [CrossRef]

- Rupnik, E.; Pierrot-Deseilligny, M.; Delorme, A. 3D Reconstruction from Multi-View VHR-Satellite Images in MicMac. ISPRS J. Photogramm. Remote Sens. 2018, 139, 201–211. [Google Scholar] [CrossRef]

- Liu, J.; Gao, J.; Ji, S.; Zeng, C.; Zhang, S.; Gong, J. Deep Learning Based Multi-View Stereo Matching and 3D Scene Reconstruction from Oblique Aerial Images. ISPRS J. Photogramm. Remote Sens. 2023, 204, 42–60. [Google Scholar] [CrossRef]

- Qin, R.; Huang, X.; Liu, W.; Xiao, C. Semantic 3D Reconstruction Using Multi-View High-Resolution Satellite Images Based on U-Net and Image-Guided Depth Fusion. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5057–5060. [Google Scholar]

- Huang, X.; Qin, R. Multi-View Large-Scale Bundle Adjustment Method for High-Resolution Satellite Images. arXiv 2019, arXiv:1905.09152. [Google Scholar]

- Chen, M.; Qin, R.; He, H.; Zhu, Q.; Wang, X. A Local Distinctive Features Matching Method for Remote Sensing Images with Repetitive Patterns. Photogramm. Eng. Remote Sens. 2018, 84, 513–524. [Google Scholar] [CrossRef]

- Xu, N.; Huang, D.; Song, S.; Ling, X.; Strasbaugh, C.; Yilmaz, A.; Sezen, H.; Qin, R. A Volumetric Change Detection Framework Using UAV Oblique Photogrammetry—A Case Study of Ultra-High-Resolution Monitoring of Progressive Building Collapse. Int. J. Digit. Earth 2021, 14, 1705–1720. [Google Scholar] [CrossRef]

- Zhang, W.; Wang, W.; Chen, L. Constructing DEM Based on InSAR and the Relationship between InSAR DEM’s Precision and Terrain Factors. Energy Procedia 2012, 16, 184–189. [Google Scholar] [CrossRef]

- Arnab, A.; Torr, P.H.S. Pixelwise Instance Segmentation with a Dynamically Instantiated Network. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017. [Google Scholar]

- Gualtieri, J.A.; Cromp, R.F. Support Vector Machines for Hyperspectral Remote Sensing Classification. In Proceedings of the 27th AIPR Workshop: Advances in Computer-Assisted Recognition; International Society for Optics and Photonics, Washington, DC, USA, 14–16 October 1999; Volume 3584, pp. 221–232. [Google Scholar]

- Friedl, M.A.; Brodley, C.E. Decision Tree Classification of Land Cover from Remotely Sensed Data. Remote Sens. Environ. 1997, 61, 399–409. [Google Scholar] [CrossRef]

- Toschi, I.; Remondino, F.; Rothe, R.; Klimek, K. Combining Airborne Oblique Camera and Lidar Sensors: Investigation and New Perspectives. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 437–444. [Google Scholar] [CrossRef]

- Gyongy, I.; Hutchings, S.W.; Halimi, A.; Tyler, M.; Chan, S.; Zhu, F.; McLaughlin, S.; Henderson, R.K.; Leach, J. High-Speed 3D Sensing via Hybrid-Mode Imaging and Guided Upsampling. Optica 2020, 7, 1253–1260. [Google Scholar] [CrossRef]

- Kufner, M.; Kölbl, J.; Lukas, R.; Dekorsy, T. Hybrid Design of an Optical Detector for Terrestrial Laser Range Finding. IEEE Sens. J. 2021, 21, 16606–16612. [Google Scholar] [CrossRef]

- Haklay, M.; Weber, P. Openstreetmap: User-Generated Street Maps. IEEE Pervasive Comput. 2008, 7, 12–18. [Google Scholar] [CrossRef]

- Haklay, M. How Good Is Volunteered Geographical Information? A Comparative Study of OpenStreetMap and Ordnance Survey Datasets. Environ. Plan. B Plan. Des. 2010, 37, 682–703. [Google Scholar] [CrossRef]

- Han, W.; Chen, J.; Wang, L.; Feng, R.; Li, F.; Wu, L.; Tian, T.; Yan, J. Methods for Small, Weak Object Detection in Optical High-Resolution Remote Sensing Images: A Survey of Advances and Challenges. IEEE Geosci. Remote Sens. Mag. 2021, 9, 8–34. [Google Scholar] [CrossRef]

- Yao, X.; Feng, X.; Han, J.; Cheng, G.; Guo, L. Automatic Weakly Supervised Object Detection from High Spatial Resolution Remote Sensing Images via Dynamic Curriculum Learning. IEEE Trans. Geosci. Remote Sens. 2020, 59, 675–685. [Google Scholar] [CrossRef]

- Fischler, M.A.; Elschlager, R.A. The Representation and Matching of Pictorial Structures. IEEE Trans. Comput. 1973, 100, 67–92. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Viola, P.; Jones, M. Rapid Object Detection Using a Boosted Cascade of Simple Features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 1, pp. I-511–I-518. [Google Scholar]

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up Robust Features. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I 9. Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417. [Google Scholar]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2564–2571. [Google Scholar]

- Qin, R. Change Detection on LOD 2 Building Models with Very High Resolution Spaceborne Stereo Imagery. ISPRS J. Photogramm. Remote Sens. 2014, 96, 179–192. [Google Scholar] [CrossRef]

- Safavian, S.R.; Landgrebe, D. A Survey of Decision Tree Classifier Methodology. IEEE Trans. Syst. Man Cybern. 1991, 21, 660–674. [Google Scholar] [CrossRef]

- Ho, T.K. Random Decision Forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 1, pp. 278–282. [Google Scholar]

- Belgiu, M.; Drăguţ, L. Random Forest in Remote Sensing: A Review of Applications and Future Directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31. [Google Scholar] [CrossRef]

- Dollár, P.; Babenko, B.; Belongie, S.; Perona, P.; Tu, Z. Multiple Component Learning for Object Detection. In Proceedings of the Computer Vision–ECCV 2008: 10th European Conference on Computer Vision, Marseille, France, 12–18 October 2008; Proceedings, Part II 10. Springer: Berlin/Heidelberg, Germany, 2008; pp. 211–224. [Google Scholar]

- Erhan, D.; Szegedy, C.; Toshev, A.; Anguelov, D. Scalable Object Detection Using Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2147–2154. [Google Scholar]

- Hartigan, J.A.; Wong, M.A. A K-Means Clustering Algorithm. JSTOR Appl. Stat. 1979, 28, 100–108. [Google Scholar] [CrossRef]

- Comaniciu, D.; Meer, P. Mean Shift Analysis and Applications. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–25 September 1999; IEEE: Piscataway, NJ, USA, 1999; Volume 2, pp. 1197–1203. [Google Scholar]

- Reynolds, D.A. Gaussian Mixture Models. Encycl. Biom. 2009, 741, 659–663. [Google Scholar]

- Hoeser, T.; Kuenzer, C. Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review-Part i: Evolution and Recent Trends. Remote Sens. 2020, 12, 1667. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-Cnn: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 7–12 December 2015; Curran Associates: New York, NY, USA, 2015; Volume 28. [Google Scholar]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-Fcn: Object Detection via Region-Based Fully Convolutional Networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Curran Associates: New York, NY, USA, 2016; Volume 29. [Google Scholar]

- Ren, Y.; Zhu, C.; Xiao, S. Small Object Detection in Optical Remote Sensing Images via Modified Faster R-CNN. Appl. Sci. 2018, 8, 813. [Google Scholar] [CrossRef]

- Bai, T.; Pang, Y.; Wang, J.; Han, K.; Luo, J.; Wang, H.; Lin, J.; Wu, J.; Zhang, H. An Optimized Faster R-CNN Method Based on DRNet and RoI Align for Building Detection in Remote Sensing Images. Remote Sens. 2020, 12, 762. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Jocher, G. YOLOv5 by Ultralytics 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 16 July 2020).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475. [Google Scholar]

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 10 October 2023).

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single Shot Multibox Detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Pham, M.-T.; Courtrai, L.; Friguet, C.; Lefèvre, S.; Baussard, A. YOLO-Fine: One-Stage Detector of Small Objects under Various Backgrounds in Remote Sensing Images. Remote Sens. 2020, 12, 2501. [Google Scholar] [CrossRef]

- Sun, Z.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. BiFA-YOLO: A Novel YOLO-Based Method for Arbitrary-Oriented Ship Detection in High-Resolution SAR Images. Remote Sens. 2021, 13, 4209. [Google Scholar] [CrossRef]

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. Yolact: Real-Time Instance Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9157–9166. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229. [Google Scholar]

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer v2: Scaling up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019. [Google Scholar]

- Chen, L.-C.; Hermans, A.; Papandreou, G.; Schroff, F.; Wang, P.; Adam, H. Masklab: Instance Segmentation by Refining Object Detection with Semantic and Direction Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4013–4022. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-Cnn: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: High Quality Object Detection and Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498. [Google Scholar] [CrossRef]

- Chen, K.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Shi, J.; Ouyang, W.; et al. Hybrid Task Cascade for Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4974–4983. [Google Scholar]

- Zhao, K.; Kang, J.; Jung, J.; Sohn, G. Building Extraction from Satellite Images Using Mask R-CNN with Building Boundary Regularization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 247–251. [Google Scholar]

- Yekeen, S.T.; Balogun, A.-L.; Yusof, K.B.W. A Novel Deep Learning Instance Segmentation Model for Automated Marine Oil Spill Detection. ISPRS J. Photogramm. Remote Sens. 2020, 167, 190–200. [Google Scholar] [CrossRef]

- Mou, L.; Zhu, X.X. Vehicle Instance Segmentation from Aerial Image and Video Using a Multitask Learning Residual Fully Convolutional Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6699–6711. [Google Scholar] [CrossRef]

- Su, H.; Wei, S.; Liu, S.; Liang, J.; Wang, C.; Shi, J.; Zhang, X. HQ-ISNet: High-Quality Instance Segmentation for Remote Sensing Imagery. Remote Sens. 2020, 12, 989. [Google Scholar] [CrossRef]

- Li, Q.; Arnab, A.; Torr, P.H.S. Weakly- and Semi-Supervised Panoptic Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Gao, N.; Shan, Y.; Wang, Y.; Zhao, X.; Yu, Y.; Yang, M.; Huang, K. Ssap: Single-Shot Instance Segmentation with Affinity Pyramid. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 642–651. [Google Scholar]

- Wang, X.; Kong, T.; Shen, C.; Jiang, Y.; Li, L. Solo: Segmenting Objects by Locations. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XVIII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 649–665. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Cheng, B.; Schwing, A.; Kirillov, A. Per-Pixel Classification Is Not All You Need for Semantic Segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 17864–17875. [Google Scholar]

- Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-Attention Mask Transformer for Universal Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299. [Google Scholar]

- Xu, X.; Feng, Z.; Cao, C.; Li, M.; Wu, J.; Wu, Z.; Shang, Y.; Ye, S. An Improved Swin Transformer-Based Model for Remote Sensing Object Detection and Instance Segmentation. Remote Sens. 2021, 13, 4779. [Google Scholar] [CrossRef]

- Fan, F.; Zeng, X.; Wei, S.; Zhang, H.; Tang, D.; Shi, J.; Zhang, X. Efficient Instance Segmentation Paradigm for Interpreting SAR and Optical Images. Remote Sens. 2022, 14, 531. [Google Scholar] [CrossRef]

- Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic Feature Pyramid Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6399–6408. [Google Scholar]

- Zhang, D.; Song, Y.; Liu, D.; Jia, H.; Liu, S.; Xia, Y.; Huang, H.; Cai, W. Panoptic Segmentation with an End-to-End Cell R-CNN for Pathology Image Analysis. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, 16–20 September 2018; Proceedings, Part II 11. Springer: Berlin/Heidelberg, Germany, 2018; pp. 237–244. [Google Scholar]

- De Carvalho, O.L.; de Carvalho Júnior, O.A.; de Albuquerque, A.O.; Santana, N.C.; Borges, D.L. Rethinking Panoptic Segmentation in Remote Sensing: A Hybrid Approach Using Semantic Segmentation and Non-Learning Methods. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3512105. [Google Scholar] [CrossRef]

- Garnot, V.S.F.; Landrieu, L. Panoptic Segmentation of Satellite Image Time Series with Convolutional Temporal Attention Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 4872–4881. [Google Scholar]

- Qin, R. Automated 3D Recovery from Very High Resolution Multi-View Images Overview of 3D Recovery from Multi-View Satellite Images. In Proceedings of the ASPRS Conference (IGTF) 2017, Baltimore, MD, USA, 12–16 March 2017; pp. 12–16. [Google Scholar]

- Liu, W.; Qin, R.; Su, F.; Hu, K. An Unsupervised Domain Adaptation Method for Multi-Modal Remote Sensing Image Classification. In Proceedings of the 2018 26th International Conference on Geoinformatics, Kunming, China, 28–30 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5. [Google Scholar]

- Albanwan, H.; Qin, R.; Lu, X.; Li, M.; Liu, D.; Guldmann, J.-M. 3D Iterative Spatiotemporal Filtering for Classification of Multitemporal Satellite Data Sets. Photogramm. Eng. Remote Sens. 2020, 86, 23–31. [Google Scholar] [CrossRef]

- Mäyrä, J.; Keski-Saari, S.; Kivinen, S.; Tanhuanpää, T.; Hurskainen, P.; Kullberg, P.; Poikolainen, L.; Viinikka, A.; Tuominen, S.; Kumpula, T.; et al. Tree Species Classification from Airborne Hyperspectral and LiDAR Data Using 3D Convolutional Neural Networks. Remote Sens. Environ. 2021, 256, 112322. [Google Scholar] [CrossRef]

- Xiao, C.; Qin, R.; Huang, X. Treetop Detection Using Convolutional Neural Networks Trained through Automatically Generated Pseudo Labels. Int. J. Remote Sens. 2020, 41, 3010–3030. [Google Scholar] [CrossRef]

- Dunteman, G.H. Principal Components Analysis; Sage: Thousand Oaks, CA, USA, 1989; Volume 69. [Google Scholar]

- Chen, C.; He, X.; Guo, B.; Zhao, X.; Chu, Y. A Pixel-Level Fusion Method for Multi-Source Optical Remote Sensing Image Combining the Principal Component Analysis and Curvelet Transform. Earth Sci. Inform. 2020, 13, 1005–1013. [Google Scholar] [CrossRef]

- Wu, X.; Li, W.; Hong, D.; Tian, J.; Tao, R.; Du, Q. Vehicle Detection of Multi-Source Remote Sensing Data Using Active Fine-Tuning Network. ISPRS J. Photogramm. Remote Sens. 2020, 167, 39–53. [Google Scholar] [CrossRef]

- Albanwan, H.; Qin, R. A Novel Spectrum Enhancement Technique for Multi-Temporal, Multi-Spectral Data Using Spatial-Temporal Filtering. ISPRS J. Photogramm. Remote Sens. 2018, 142, 51–63. [Google Scholar] [CrossRef]

- Soh, J.W.; Cho, S.; Cho, N.I. Meta-Transfer Learning for Zero-Shot Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3516–3525. [Google Scholar]

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-Learning in Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169. [Google Scholar] [CrossRef] [PubMed]

- Yoon, J.; Kim, T.; Dia, O.; Kim, S.; Bengio, Y.; Ahn, S. Bayesian Model-Agnostic Meta-Learning. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 4–5 December 2018; Curran Associates: New York, NY, USA, 2018; Volume 31. [Google Scholar]

- Cheng, G.; Yan, B.; Shi, P.; Li, K.; Yao, X.; Guo, L.; Han, J. Prototype-CNN for Few-Shot Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 3078507. [Google Scholar] [CrossRef]

- Ishtiak, T.; En, Q.; Guo, Y. Exemplar-FreeSOLO: Enhancing Unsupervised Instance Segmentation With Exemplars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 15424–15433. [Google Scholar]

- Wang, X.; Girdhar, R.; Yu, S.X.; Misra, I. Cut and Learn for Unsupervised Object Detection and Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 3124–3134. [Google Scholar]

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 9650–9660. [Google Scholar]

- Wang, W.; Dai, J.; Chen, Z.; Huang, Z.; Li, Z.; Zhu, X.; Hu, X.; Lu, T.; Lu, L.; Li, H.; et al. InternImage: Exploring Large-Scale Vision Foundation Models with Deformable Convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 14408–14419. [Google Scholar]

- Chappuis, C.; Zermatten, V.; Lobry, S.; Le Saux, B.; Tuia, D. Prompt-RSVQA: Prompting Visual Context to a Language Model for Remote Sensing Visual Question Answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 1372–1381. [Google Scholar]

- Chen, K.; Liu, C.; Chen, H.; Zhang, H.; Li, W.; Zou, Z.; Shi, Z. RSPrompter: Learning to Prompt for Remote Sensing Instance Segmentation Based on Visual Foundation Model. arXiv 2023, arXiv:2306.16269. [Google Scholar]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643. [Google Scholar]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763. [Google Scholar]

- Wang, W.; Bao, H.; Dong, L.; Bjorck, J.; Peng, Z.; Liu, Q.; Aggarwal, K.; Mohammed, O.K.; Singhal, S.; Som, S.; et al. Image as a Foreign Language: BEiT Pretraining for Vision and Vision-Language Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 19175–19186. [Google Scholar]

- Zhang, X.; Wang, X.; Tang, X.; Zhou, H.; Li, C. Description Generation for Remote Sensing Images Using Attribute Attention Mechanism. Remote Sens. 2019, 11, 612. [Google Scholar] [CrossRef]

- Sumbul, G.; Nayak, S.; Demir, B. SD-RSIC: Summarization-Driven Deep Remote Sensing Image Captioning. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6922–6934. [Google Scholar] [CrossRef]

- Osco, L.P.; de Lemos, E.L.; Gonçalves, W.N.; Ramos, A.P.M.; Marcato Junior, J. The Potential of Visual ChatGPT for Remote Sensing. Remote Sens. 2023, 15, 3232. [Google Scholar] [CrossRef]

- Yuan, Z.; Mou, L.; Wang, Q.; Zhu, X.X. From Easy to Hard: Learning Language-Guided Curriculum for Visual Question Answering on Remote Sensing Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 3173811. [Google Scholar] [CrossRef]

- Zhang, J.; Zhou, Z.; Mai, G.; Mu, L.; Hu, M.; Li, S. Text2Seg: Remote Sensing Image Semantic Segmentation via Text-Guided Visual Foundation Models. arXiv 2023, arXiv:2304.10597. [Google Scholar]

- Wang, D.; Zhang, J.; Du, B.; Xu, M.; Liu, L.; Tao, D.; Zhang, L. SAMRS: Scaling-up Remote Sensing Segmentation Dataset with Segment Anything Model. In Proceedings of the Thirty-Seventh Conference on Neural Information Processing Systems Datasets and Benchmarks Track, New Orleans, LA, USA, 10–16 December 2023. [Google Scholar]

- Liu, K.; Mattyus, G. Fast Multiclass Vehicle Detection on Aerial Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1938–1942. [Google Scholar]

- Xia, G.-S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983. [Google Scholar]

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307. [Google Scholar] [CrossRef]

- Lam, D.; Kuzma, R.; McGee, K.; Dooley, S.; Laielli, M.; Klaric, M.; Bulatov, Y.; McCord, B. xView: Objects in Context in Overhead Imagery. arXiv 2018, arXiv:1802.07856. [Google Scholar]

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254. [Google Scholar] [CrossRef]

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690. [Google Scholar] [CrossRef]

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386. [Google Scholar]

- Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A Benchmark Dataset for Fine-Grained Object Recognition in High-Resolution Remote Sensing Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130. [Google Scholar] [CrossRef]

- Waqas Zamir, S.; Arora, A.; Gupta, A.; Khan, S.; Sun, G.; Shahbaz Khan, F.; Zhu, F.; Shao, L.; Xia, G.-S.; Bai, X. iSAID: A Large-Scale Dataset for Instance Segmentation in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019; pp. 28–37. [Google Scholar]

- Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415. [Google Scholar] [CrossRef]

- Weir, N.; Lindenbaum, D.; Bastidas, A.; Van Etten, A.; McPherson, S.; Shermeyer, J.; Kumar, V.; Tang, H. SpaceNet MVOI: A Multi-View Overhead Imagery Dataset. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 992–1001. [Google Scholar]

- Roscher, R.; Volpi, M.; Mallet, C.; Drees, L.; Wegner, J.D. SemCity Toulouse: A Benchmark for Building Instance Segmentation in Satellite Images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 5, 109–116. [Google Scholar] [CrossRef]

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–181. [Google Scholar]

- Brown, M.; Goldberg, H.; Foster, K.; Leichtman, A.; Wang, S.; Hagstrom, S.; Bosch, M.; Almes, S. Large-Scale Public Lidar and Satellite Image Data Set for Urban Semantic Labeling. In Proceedings of the Laser Radar Technology and Applications XXIII, Orlando, FL, USA, 17–18 April 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10636, pp. 154–167. [Google Scholar]

- Mohanty, S.P.; Czakon, J.; Kaczmarek, K.A.; Pyskir, A.; Tarasiewicz, P.; Kunwar, S.; Rohrbach, J.; Luo, D.; Prasad, M.; Fleer, S.; et al. Deep Learning for Understanding Satellite Imagery: An Experimental Survey. Front. Artif. Intell. 2020, 3, 534696. [Google Scholar] [CrossRef]

- Persello, C.; Hansch, R.; Vivone, G.; Chen, K.; Yan, Z.; Tang, D.; Huang, H.; Schmitt, M.; Sun, X. 2023 IEEE GRSS Data Fusion Contest: Large-Scale Fine-Grained Building Classification for Semantic Urban Reconstruction [Technical Committees]. IEEE Geosci. Remote Sens. Mag. 2023, 11, 94–97. [Google Scholar] [CrossRef]

- Chen, Y.; Qin, R.; Zhang, G.; Albanwan, H. Spatial Temporal Analysis of Traffic Patterns during the COVID-19 Epidemic by Vehicle Detection Using Planet Remote-Sensing Satellite Images. Remote Sens. 2021, 13, 208. [Google Scholar] [CrossRef]

- Dolloff, J.; Settergren, R. An Assessment of WorldView-1 Positional Accuracy Based on Fifty Contiguous Stereo Pairs of Imagery. Photogramm. Eng. Remote Sens. 2010, 76, 935–943. [Google Scholar] [CrossRef]

- Bar, D.E.; Raboy, S. Moving Car Detection and Spectral Restoration in a Single Satellite WorldView-2 Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2077–2087. [Google Scholar] [CrossRef]

- Zhou, H.; Wei, L.; Lim, C.P.; Nahavandi, S. Robust Vehicle Detection in Aerial Images Using Bag-of-Words and Orientation Aware Scanning. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7074–7085. [Google Scholar] [CrossRef]

- Drouyer, S. VehSat: A Large-Scale Dataset for Vehicle Detection in Satellite Images. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 268–271. [Google Scholar]

- Chen, L.; Shi, W.; Deng, D. Improved YOLOv3 Based on Attention Mechanism for Fast and Accurate Ship Detection in Optical Remote Sensing Images. Remote Sens. 2021, 13, 660. [Google Scholar] [CrossRef]

- Pi, Y.; Nath, N.D.; Behzadan, A.H. Convolutional Neural Networks for Object Detection in Aerial Imagery for Disaster Response and Recovery. Adv. Eng. Inform. 2020, 43, 101009. [Google Scholar] [CrossRef]

- Tijtgat, N.; Van Ranst, W.; Goedeme, T.; Volckaert, B.; De Turck, F. Embedded Real-Time Object Detection for a UAV Warning System. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) Workshops, Venice, Italy, 22–29 October 2017. [Google Scholar]

- Dong, J.; Ota, K.; Dong, M. UAV-Based Real-Time Survivor Detection System in Post-Disaster Search and Rescue Operations. IEEE J. Miniaturization Air Space Syst. 2021, 2, 209–219. [Google Scholar] [CrossRef]

- Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A.; Zhang, L. Building Damage Assessment for Rapid Disaster Response with a Deep Object-Based Semantic Change Detection Framework: From Natural Disasters to Man-Made Disasters. Remote Sens. Environ. 2021, 265, 112636. [Google Scholar] [CrossRef]

- Gui, S.; Qin, R. Automated LoD-2 Model Reconstruction from Very-High-Resolution Satellite-Derived Digital Surface Model and Orthophoto. ISPRS J. Photogramm. Remote Sens. 2021, 181, 1–19. [Google Scholar] [CrossRef]

- Müller Arisona, S.; Zhong, C.; Huang, X.; Qin, R. Increasing Detail of 3D Models through Combined Photogrammetric and Procedural Modelling. Geo-Spat. Inf. Sci. 2013, 16, 45–53. [Google Scholar] [CrossRef]

- Gruen, A.; Schubiger, S.; Qin, R.; Schrotter, G.; Xiong, B.; Li, J.; Ling, X.; Xiao, C.; Yao, S.; Nuesch, F. Semantically Enriched High Resolution LoD 3 Building Model Generation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 11–18. [Google Scholar] [CrossRef]

- Park, M.J.; Kim, J.; Jeong, S.; Jang, A.; Bae, J.; Ju, Y.K. Machine Learning-Based Concrete Crack Depth Prediction Using Thermal Images Taken under Daylight Conditions. Remote Sens. 2022, 14, 2151. [Google Scholar] [CrossRef]

- Bai, Y.; Gao, C.; Singh, S.; Koch, M.; Adriano, B.; Mas, E.; Koshimura, S. A Framework of Rapid Regional Tsunami Damage Recognition from Post-Event TerraSAR-X Imagery Using Deep Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 43–47. [Google Scholar] [CrossRef]

- Hu, Y.; Liu, K. Inspection and Monitoring Technologies of Transmission Lines with Remote Sensing; Academic Press: Cambridge, MA, USA, 2017; ISBN 978-0-12-812645-5. [Google Scholar]

- Kim, S.; Kim, D.; Jeong, S.; Ham, J.-W.; Lee, J.-K.; Oh, K.-Y. Fault Diagnosis of Power Transmission Lines Using a UAV-Mounted Smart Inspection System. IEEE Access 2020, 8, 149999–150009. [Google Scholar] [CrossRef]

- Deng, X.D.; Zheng, K.; Wei, G.; Tang, J.H.; Zhang, Z.P. The Infrared Diagnostic Technology of Power Transmission Devices and Experimental Study. Appl. Mech. Mater. 2013, 423–426, 2372–2375. [Google Scholar] [CrossRef]

- Xue, Y.; Wang, T.; Skidmore, A.K. Automatic Counting of Large Mammals from Very High Resolution Panchromatic Satellite Imagery. Remote Sens. 2017, 9, 878. [Google Scholar] [CrossRef]

- Berger-Wolf, T.Y.; Rubenstein, D.I.; Stewart, C.V.; Holmberg, J.A.; Parham, J.; Menon, S.; Crall, J.; Van Oast, J.; Kiciman, E.; Joppa, L. Wildbook: Crowdsourcing, Computer Vision, and Data Science for Conservation. arXiv 2017, arXiv:1710.08880. [Google Scholar]

- Catlin, J.; Jones, T.; Norman, B.; Wood, D. Consolidation in a Wildlife Tourism Industry: The Changing Impact of Whale Shark Tourist Expenditure in the Ningaloo Coast Region. Int. J. Tour. Res. 2010, 12, 134–148. [Google Scholar] [CrossRef]

- Araujo, G.; Agustines, A.; Tracey, B.; Snow, S.; Labaja, J.; Ponzo, A. Photo-ID and Telemetry Highlight a Global Whale Shark Hotspot in Palawan, Philippines. Sci. Rep. 2019, 9, 17209. [Google Scholar] [CrossRef]

- Blount, D.; Gero, S.; Van Oast, J.; Parham, J.; Kingen, C.; Scheiner, B.; Stere, T.; Fisher, M.; Minton, G.; Khan, C.; et al. Flukebook: An Open-Source AI Platform for Cetacean Photo Identification. Mamm. Biol. 2022, 102, 1005–1023. [Google Scholar] [CrossRef]

- Watanabe, J.-I.; Shao, Y.; Miura, N. Underwater and Airborne Monitoring of Marine Ecosystems and Debris. J. Appl. Remote Sens. 2019, 13, 044509. [Google Scholar] [CrossRef]

- Akar, S.; Süzen, M.L.; Kaymakci, N. Detection and Object-Based Classification of Offshore Oil Slicks Using ENVISAT-ASAR Images. Environ. Monit. Assess. 2011, 183, 409–423. [Google Scholar] [CrossRef]

- Gao, Y.; Skutsch, M.; Paneque-Gálvez, J.; Ghilardi, A. Remote Sensing of Forest Degradation: A Review. Environ. Res. Lett. 2020, 15, 103001. [Google Scholar] [CrossRef]

- Lobell, D.B.; Thau, D.; Seifert, C.; Engle, E.; Little, B. A Scalable Satellite-Based Crop Yield Mapper. Remote Sens. Environ. 2015, 164, 324–333. [Google Scholar] [CrossRef]

- Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep Learning Based Oil Palm Tree Detection and Counting for High-Resolution Remote Sensing Images. Remote Sens. 2017, 9, 22. [Google Scholar] [CrossRef]

- Huang, C.; Asner, G.P. Applications of Remote Sensing to Alien Invasive Plant Studies. Sensors 2009, 9, 4869–4889. [Google Scholar] [CrossRef]

- Papp, L.; van Leeuwen, B.; Szilassi, P.; Tobak, Z.; Szatmári, J.; Árvai, M.; Mészáros, J.; Pásztor, L. Monitoring Invasive Plant Species Using Hyperspectral Remote Sensing Data. Land 2021, 10, 29. [Google Scholar] [CrossRef]

- Wang, D. Unsupervised Semantic and Instance Segmentation of Forest Point Clouds. ISPRS J. Photogramm. Remote Sens. 2020, 165, 86–97. [Google Scholar] [CrossRef]

- Briechle, S.; Krzystek, P.; Vosselman, G. Classification of Tree Species and Standing Dead Trees by Fusing UAV-Based Lidar Data and Multispectral Imagery in the 3D Deep Neural Network PointNet++. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 2, 203–210. [Google Scholar] [CrossRef]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates: New York, NY, USA, 2017; Volume 30. [Google Scholar]

- Song, X.-P.; Potapov, P.V.; Krylov, A.; King, L.; Di Bella, C.M.; Hudson, A.; Khan, A.; Adusei, B.; Stehman, S.V.; Hansen, M.C. National-Scale Soybean Mapping and Area Estimation in the United States Using Medium Resolution Satellite Imagery and Field Survey. Remote Sens. Environ. 2017, 190, 383–395. [Google Scholar] [CrossRef]

- Zhao, W.; Yamada, W.; Li, T.; Digman, M.; Runge, T. Augmenting Crop Detection for Precision Agriculture with Deep Visual Transfer Learning—A Case Study of Bale Detection. Remote Sens. 2021, 13, 23. [Google Scholar] [CrossRef]

- Yamada, W.; Zhao, W.; Digman, M. Automated Bale Mapping Using Machine Learning and Photogrammetry. Remote Sens. 2021, 13, 4675. [Google Scholar] [CrossRef]

- Dong, T.; Liu, J.; Shang, J.; Qian, B.; Ma, B.; Kovacs, J.M.; Walters, D.; Jiao, X.; Geng, X.; Shi, Y. Assessment of Red-Edge Vegetation Indices for Crop Leaf Area Index Estimation. Remote Sens. Environ. 2019, 222, 133–143. [Google Scholar] [CrossRef]

- Mateo-Sanchis, A.; Piles, M.; Muñoz-Marí, J.; Adsuara, J.E.; Pérez-Suay, A.; Camps-Valls, G. Synergistic Integration of Optical and Microwave Satellite Data for Crop Yield Estimation. Remote Sens. Environ. 2019, 234, 111460. [Google Scholar] [CrossRef]

- Lin, Y.-C.; Habib, A. Quality Control and Crop Characterization Framework for Multi-Temporal UAV LiDAR Data over Mechanized Agricultural Fields. Remote Sens. Environ. 2021, 256, 112299. [Google Scholar] [CrossRef]

- Huang, Y.; Lee, M.A.; Thomson, S.J.; Reddy, K.N. Ground-Based Hyperspectral Remote Sensing for Weed Management in Crop Production. Int. J. Agric. Biol. Eng. 2016, 9, 98–109. [Google Scholar]

| Sensor | Spatial Resolution (GSD), finest to coarsest available mode among Staring SpotLight, SpotLight, Ultrafine, StripMap | Band Information |
|---|---|---|
| TerraSAR-X | 0.25 m, 2 m, 3 m | X-band: 9.65 GHz |
| COSMO-SkyMed | 1 m, 3 m | X-band: 9.6 GHz |
| Sentinel-1 | 5 m | C-band: 5.405 GHz |
| Gaofen-3 | 1 m, 3 m, 5 m | C-band: 5.4 GHz |
| RadarSat-2 | 1 m, 3 m | C-band: 5.405 GHz |
| ALOS PALSAR-2 | 1–3 m, 3 m | L-band: 1.27 GHz |
| ICEYE | 1 m, 3 m | X-band: 9.75 GHz |
| Capella-2 | 0.5 m, 1 m, 1.2 m | X-band: 9.4–9.9 GHz |

| Dataset Name | Sensor Type | Image Spatial Resolution | Task | Year | Domain (Number of Categories) | Number of Images (Instances) |
|---|---|---|---|---|---|---|
| DLR 3K Vehicle [152] | Optical | 0.02 m | Object detection | 2015 | Vehicle (2) | 20 (14,235) |
| DOTA [153] | Optical | 0.3–1 m | Object detection | 2018 | Multi-class (14) | 2806 (188,282) |
| DIOR [154] | Optical | 0.5–30 m | Object detection | 2020 | Multi-class (20) | 23,463 (192,472) |
| xView [155] | Optical | 0.3 m | Object detection | 2018 | Multi-class (60) | 1127 (1 million) |
| HRSID [156] | SAR | 1–5 m | Object detection and instance segmentation | 2020 | Ship | 5604 (16,951) |
| SSDD [157] | SAR | 1–15 m | Object detection | 2021 | Ship | 1160 (2456) |
| UAVDT [158] | Optical | (UAV) | Object detection | 2018 | Multi-class (14) | 80,000 (841,500) |
| FAIR1M [159] | Optical | 0.3–0.8 m | Object detection | 2022 | Multi-class (37) | 42,796 (1.02 million) |
| iSAID [160] | Optical | (aerial) | Instance segmentation | 2019 | Multi-class (15) | 2806 (655,451) |
| NWPU VHR-10 [161] | Optical | 0.08–2 m | Object detection and instance segmentation | 2016 | Multi-class (10) | 800 (3775) |
| SpaceNet MVOI [162] | Optical | 0.46–1.67 m | Object detection and instance segmentation | 2019 | Building | 60,000 (126,747) |
| SemCity Toulouse [163] | Optical | 0.5 m | Instance segmentation | 2020 | Building | 4 (9455) |
| DeepGlobe 2018 (road) [164] | Optical | 0.5 m | Instance segmentation | 2018 | Road | 8570 |
| DeepGlobe 2018 (building) [164] | Optical | 0.3 m | Instance segmentation | 2018 | Building | 24,586 (302,701) |
| IARPA CORE3D [165] | Optical + DSM + DTM | 0.3 m | Instance segmentation | 2018 | Building | 154 multi-stereo |
| CrowdAI mapping challenge [166] | Optical | 0.3 m | Instance segmentation | 2018 | Building | 341,058 |
| 2023 IEEE GRSS DFC [167] | Optical + SAR | 0.5 m | Instance segmentation | 2023 | Building roof (12) | 3720 × 2 (194,263) |
| PASTIS [123] | Optical + SAR | 10 m | Object detection and panoptic segmentation | 2021 | Agricultural parcels (time series) | 2433 (124,422) |
| SAMRS [151] | Optical | 0.3–30 m | Object detection and panoptic segmentation | 2023 | Multi-class (37) | 105,090 (1,668,241) |

| Domain | Application Area of Focus | Sensors and Data |
|---|---|---|
| Urban and civilian applications | Traffic density, urban mobility | PlanetScope multispectral images |
| Urban and civilian applications | Maritime security, ship detection | SAR |
| Urban and civilian applications | Impact assessment of hurricanes, floods, and infrastructure | Optical satellite imagery |
| Urban and civilian applications | Power line monitoring and management | UAV, airborne LiDAR data |
| Urban and civilian applications | Structural health monitoring (SHM) of bridges and buildings | UAVs, satellites, infrared, ultraviolet |
| Environmental–ecological monitoring and management | Wildlife migration, animal counting | GeoEye-1 panchromatic satellite imagery |
| Environmental–ecological monitoring and management | Wildlife conservation, species identification | Camera traps, UAV-mounted cameras, social media |
| Environmental–ecological monitoring and management | Marine ecosystem, debris monitoring | UAVs, AUVs (autonomous underwater vehicles), IR cameras |
| Environmental–ecological monitoring and management | Ocean oil spill tracking and response | ENVISAT ASAR, hyperspectral imaging |
| Agricultural and forestry applications | Bale counting and monitoring | UAVs |
| Agricultural and forestry applications | Tree counting, invasive plant detection | Satellites, hyperspectral imaging |
| Agricultural and forestry applications | Locust migration routes, outbreak prediction | Satellite data, DEM |
| Agricultural and forestry applications | Tree species classification (pine, birch, alder) and identification of standing dead trees | UAVs, LiDAR, multispectral imaging |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gui, S.; Song, S.; Qin, R.; Tang, Y. Remote Sensing Object Detection in the Deep Learning Era—A Review. Remote Sens. 2024, 16, 327. https://doi.org/10.3390/rs16020327
