Evaluating a Novel Approach to Detect the Vertical Structure of Insect Damage in Trees Using Multispectral and Three-Dimensional Data from Drone Imagery in the Northern Rocky Mountains, USA

Abstract

1. Introduction

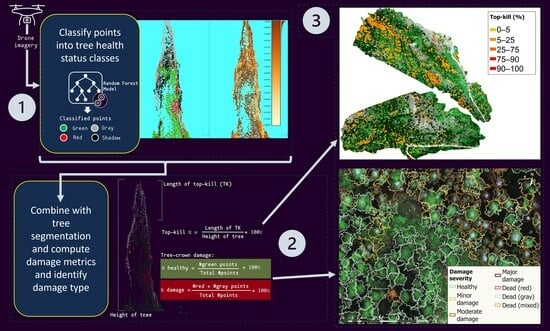

- Segment individual trees within the study area using a multispectral structure-from-motion (SfM) point cloud;

- Develop, evaluate, and apply a classification of points into healthy (green) or damaged (gray and red) classes based on their multispectral reflectances (point-level classification);

- Develop, evaluate, and apply an algorithm for identifying the percent damage, damage severity, and top-kill metrics of individual trees using the 3D classification of reflectances (tree-level damage algorithm).
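As a rough illustration of the tree-level metrics in the third objective, the Python sketch below summarizes one segmented tree from its classified points. Everything specific in it is an assumption for illustration, not the authors' published algorithm: the hypothetical `tree_damage` function, dropping shadow points, 0.5 m height bins, and the rule that top-kill is the run of predominantly damaged (gray or red) bins at the crown top, measured from the treetop down to the lower edge of the last damaged bin.

```python
from collections import Counter
from dataclasses import dataclass

DAMAGED = {"gray", "red"}  # damaged point classes; "green" is healthy


@dataclass
class TreeDamage:
    pct_green: float
    pct_gray: float
    pct_red: float
    pct_damage: float
    top_kill: bool
    top_kill_length_m: float


def tree_damage(points, bin_size=0.5, damaged_share=0.5):
    """Summarize one tree from (height_m, label) points.

    Hypothetical sketch: shadow points are dropped, and top-kill is the
    contiguous run of height bins at the crown top in which at least
    `damaged_share` of the points are gray or red.
    """
    pts = [(z, lab) for z, lab in points if lab != "shadow"]
    if not pts:
        raise ValueError("tree has no green, gray, or red points")
    counts = Counter(lab for _, lab in pts)
    pct = {c: 100.0 * counts[c] / len(pts) for c in ("green", "gray", "red")}

    # Bin points by height; empty bins are skipped (treated as no evidence).
    bins = {}
    for z, lab in pts:
        bins.setdefault(int(z // bin_size), []).append(lab)

    # Walk down from the highest bin while bins stay predominantly damaged.
    run_bottom = None
    for b in sorted(bins, reverse=True):
        labels = bins[b]
        if sum(lab in DAMAGED for lab in labels) / len(labels) < damaged_share:
            break
        run_bottom = b * bin_size  # lower edge of this damaged bin

    top = max(z for z, _ in pts)
    length = 0.0 if run_bottom is None else top - run_bottom
    return TreeDamage(pct["green"], pct["gray"], pct["red"],
                      pct["gray"] + pct["red"], run_bottom is not None, length)


# Toy tree: green lower crown, red and gray points in the top metre or so.
tree = [(h, "green") for h in (1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0)]
tree += [(9.1, "red"), (9.6, "red"), (10.1, "gray"), (10.4, "shadow")]
result = tree_damage(tree)  # roughly 27.3% damage and about 1.1 m of top-kill
```

Binning by height is what lets the same point labels yield both a whole-tree percentage and a vertical (top-down) damage measure.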

2. Materials and Methods

2.1. Study Area

2.2. Drone Imagery Collection

2.3. Drone Imagery Pre-Processing

2.4. Reference Data

2.5. Tree Segmentation

2.5.1. Ground and Non-Ground Classification and Height Normalization of Point Cloud

2.5.2. Point Cloud Segmentation into Unique Tree Objects

2.6. Point-Level Classification with Random Forest Models

2.7. Tree-Level Damage Algorithm

2.8. Characterization of the Extent of Tree Damage across the UAV Scene

3. Results

3.1. Tree Segmentation

3.2. Point-Level Classification into Health Status Classes

3.3. Tree-Level Damage

3.4. Tree Damage across the Study Site

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Anderegg, W.R.L.; Trugman, A.T.; Badgley, G.; Anderson, C.M.; Bartuska, A.; Ciais, P.; Cullenward, D.; Field, C.B.; Freeman, J.; Goetz, S.J.; et al. Climate-Driven Risks to the Climate Mitigation Potential of Forests. Science 2020, 368, eaaz7005.

- Anderegg, W.R.L.; Hicke, J.A.; Fisher, R.A.; Allen, C.D.; Aukema, J.; Bentz, B.; Hood, S.; Lichstein, J.W.; Macalady, A.K.; McDowell, N.; et al. Tree Mortality from Drought, Insects, and Their Interactions in a Changing Climate. New Phytol. 2015, 208, 674–683.

- Arneth, A.; Denton, F.; Agus, F.; Elbehri, A.; Erb, K.H.; Elasha, B.O.; Rahimi, M.; Rounsevell, M.; Spence, A.; Valentini, R.; et al. Framing and Context. In Climate Change and Land: An IPCC Special Report on Climate Change, Desertification, Land Degradation, Sustainable Land Management, Food Security, and Greenhouse Gas Fluxes in Terrestrial Ecosystems; Intergovernmental Panel on Climate Change (IPCC): Geneva, Switzerland, 2019; pp. 1–98.

- Pearce, D.W. The Economic Value of Forest Ecosystems. Ecosyst. Health 2001, 7, 284–296.

- Hartmann, H.; Bastos, A.; Das, A.J.; Esquivel-Muelbert, A.; Hammond, W.M.; Martínez-Vilalta, J.; McDowell, N.G.; Powers, J.S.; Pugh, T.A.M.; Ruthrof, K.X.; et al. Climate Change Risks to Global Forest Health: Emergence of Unexpected Events of Elevated Tree Mortality Worldwide. Annu. Rev. Plant Biol. 2022, 73, 673–702.

- Hicke, J.A.; Meddens, A.J.H.; Kolden, C.A. Recent Tree Mortality in the Western United States from Bark Beetles and Forest Fires. For. Sci. 2016, 62, 141–153.

- Pureswaran, D.S.; Roques, A.; Battisti, A. Forest Insects and Climate Change. Curr. For. Rep. 2018, 4, 35–50.

- Cohen, W.B.; Yang, Z.; Stehman, S.V.; Schroeder, T.A.; Bell, D.M.; Masek, J.G.; Huang, C.; Meigs, G.W. Forest Disturbance across the Conterminous United States from 1985–2012: The Emerging Dominance of Forest Decline. For. Ecol. Manag. 2016, 360, 242–252.

- Aukema, B.H.; Carroll, A.L.; Zheng, Y.; Zhu, J.; Raffa, K.F.; Dan Moore, R.; Stahl, K.; Taylor, S.W. Movement of Outbreak Populations of Mountain Pine Beetle: Influences of Spatiotemporal Patterns and Climate. Ecography 2008, 31, 348–358.

- Bentz, B.J.; Régnière, J.; Fettig, C.J.; Hansen, E.M.; Hayes, J.L.; Hicke, J.A.; Kelsey, R.G.; Negrón, J.F.; Seybold, S.J. Climate Change and Bark Beetles of the Western United States and Canada: Direct and Indirect Effects. BioScience 2010, 60, 602–613.

- Buotte, P.C.; Hicke, J.A.; Preisler, H.K.; Abatzoglou, J.T.; Raffa, K.F.; Logan, J.A. Climate Influences on Whitebark Pine Mortality from Mountain Pine Beetle in the Greater Yellowstone Ecosystem. Ecol. Appl. 2016, 26, 2507–2524.

- Hall, R.J.; Castilla, G.; White, J.C.; Cooke, B.J.; Skakun, R.S. Remote Sensing of Forest Pest Damage: A Review and Lessons Learned from a Canadian Perspective. Can. Entomol. 2016, 148, S296–S356.

- Hicke, J.A.; Lucatello, S.; Mortsch, L.D.; Dawson, J.; Aguilar, M.D.; Enquist, C.A.F.; Gilmore, E.A.; Gutzler, D.S.; Harper, S.; Holsman, K.; et al. North America. In Climate Change 2022: Impacts, Adaptation and Vulnerability; Intergovernmental Panel on Climate Change: Geneva, Switzerland, 2022; pp. 1929–2042.

- Weed, A.S.; Ayres, M.P.; Hicke, J.A. Consequences of Climate Change for Biotic Disturbances in North American Forests. Ecol. Monogr. 2013, 83, 441–470.

- Lausch, A.; Erasmi, S.; King, D.; Magdon, P.; Heurich, M. Understanding Forest Health with Remote Sensing-Part I—A Review of Spectral Traits, Processes and Remote-Sensing Characteristics. Remote Sens. 2016, 8, 1029.

- Rhodes, M.W.; Bennie, J.J.; Spalding, A.; ffrench-Constant, R.H.; Maclean, I.M.D. Recent Advances in the Remote Sensing of Insects. Biol. Rev. 2022, 97, 343–360.

- Senf, C.; Seidl, R.; Hostert, P. Remote Sensing of Forest Insect Disturbances: Current State and Future Directions. Int. J. Appl. Earth Obs. Geoinf. 2017, 60, 49–60.

- Luo, Y.; Huang, H.; Roques, A. Early Monitoring of Forest Wood-Boring Pests with Remote Sensing. Annu. Rev. Entomol. 2023, 68, 277–298.

- Ciesla, W.M.; Stephens, S.S.; Brian, E.H.; Backsen, J.C. Aerial Signatures of Forest Damage in Colorado and Adjoining States; Colorado State Forest Service: Fort Collins, CO, USA, 2015.

- Wickman, B. How to Estimate Defoliation and Predict Tree Damage. In Douglas-Fir Tussock Moth Handbook; Combined Forest Pest Research and Development Program, Agriculture Handbook No. 550; US Department of Agriculture (USDA): Washington, DC, USA, 1979.

- Pederson, L.; Eckberg, T.; Lowrey, L.; Bulaon, B. Douglas-Fir Tussock Moth. In Forest Insect & Disease Leaflet 86 (Revised); USDA Forest Service: Washington, DC, USA, 2020.

- Fellin, D.G.; Dewey, J.E. Western Spruce Budworm Forest Insect & Disease Leaflet. In Forest Insect & Disease Leaflet 53 (Revised); USDA Forest Service: Washington, DC, USA, 1986.

- Ferrell, G.T. Fir Engraver Forest Insect & Disease Leaflet. In Forest Insect & Disease Leaflet 13 (Revised); USDA Forest Service: Washington, DC, USA, 1986.

- Hall, R.J.; Skakun, R.S.; Arsenault, E.J. Remotely Sensed Data in the Mapping of Insect Defoliation. In Understanding Forest Disturbance and Spatial Pattern: Remote Sensing and GIS Approaches; CRC Press: Boca Raton, FL, USA, 2006; pp. 85–111.

- Hall, R.J.; Volney, W.; Wang, Y. Using a Geographic Information System (GIS) to Associate Forest Stand Characteristics with Top Kill Due to Defoliation by the Jack Pine Budworm. Can. J. For. Res. 1998, 28, 1317–1327.

- Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicles Forest Remote Sensing—A Systematic Review. Part II: Research Applications. Forests 2021, 12, 397.

- Ecke, S.; Dempewolf, J.; Frey, J.; Schwaller, A.; Endres, E.; Klemmt, H.-J.; Tiede, D.; Seifert, T. UAV-Based Forest Health Monitoring: A Systematic Review. Remote Sens. 2022, 14, 3205.

- Aber, J.S. Small-Format Aerial Photography and UAS Imagery: Principles, Techniques, and Geoscience Applications, 2nd ed.; Elsevier: Burlington, VT, USA, 2019; ISBN 0-12-812942-5.

- Duarte, A.; Borralho, N.; Cabral, P.; Caetano, M. Recent Advances in Forest Insect Pests and Diseases Monitoring Using UAV-Based Data: A Systematic Review. Forests 2022, 13, 911.

- Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ Photogrammetry: A Low-Cost, Effective Tool for Geoscience Applications. Geomorphology 2012, 179, 300–314.

- Mohan, M.; Leite, R.V.; Broadbent, E.N.; Jaafar, W.S.W.M.; Srinivasan, S.; Bajaj, S.; Corte, A.P.D.; do Amaral, C.H.; Gopan, G.; Saad, S.N.M.; et al. Individual Tree Detection Using UAV-Lidar and UAV-SfM Data: A Tutorial for Beginners. Open Geosci. 2021, 13, 1028–1039.

- Cardil, A.; Otsu, K.; Pla, M.; Silva, C.A.; Brotons, L. Quantifying Pine Processionary Moth Defoliation in a Pine-Oak Mixed Forest Using Unmanned Aerial Systems and Multispectral Imagery. PLoS ONE 2019, 14, e0213027.

- Abdollahnejad, A.; Panagiotidis, D. Tree Species Classification and Health Status Assessment for a Mixed Broadleaf-Conifer Forest with UAS Multispectral Imaging. Remote Sens. 2020, 12, 3722.

- Cessna, J.; Alonzo, M.G.; Foster, A.C.; Cook, B.D. Mapping Boreal Forest Spruce Beetle Health Status at the Individual Crown Scale Using Fused Spectral and Structural Data. Forests 2021, 12, 1145.

- Lin, Q.; Huang, H.; Wang, J.; Chen, L.; Du, H.; Zhou, G. Early Detection of Pine Shoot Beetle Attack Using Vertical Profile of Plant Traits through UAV-Based Hyperspectral, Thermal, and Lidar Data Fusion. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103549.

- NOAA NCEI, U.S. Climate Normals Quick Access. Available online: https://www.ncei.noaa.gov/access/us-climate-normals/#dataset=normals-annualseasonal&timeframe=30&location=MT&station=USW00024153 (accessed on 28 September 2023).

- MicaSense, Inc. MicaSense RedEdge MX Processing Workflow (Including Reflectance Calibration) in Agisoft Metashape Professional. Available online: https://agisoft.freshdesk.com/support/solutions/articles/31000148780-micasense-rededge-mx-processing-workflow-including-reflectance-calibration-in-agisoft-metashape-pro (accessed on 14 January 2023).

- Agisoft LLC. Agisoft Metashape User Manual—Professional Edition, Version 2.0; Agisoft LLC: St. Petersburg, Russia, 2023.

- Tinkham, W.T.; Swayze, N.C. Influence of Agisoft Metashape Parameters on UAS Structure from Motion Individual Tree Detection from Canopy Height Models. Forests 2021, 12, 250.

- Young, D.J.N.; Koontz, M.J.; Weeks, J. Optimizing Aerial Imagery Collection and Processing Parameters for Drone-based Individual Tree Mapping in Structurally Complex Conifer Forests. Methods Ecol. Evol. 2022, 13, 1447–1463.

- James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV Topographic Surveys Processed with Structure-from-Motion: Ground Control Quality, Quantity and Bundle Adjustment. Geomorphology 2017, 280, 51–66.

- Otsu, K.; Pla, M.; Duane, A.; Cardil, A.; Brotons, L. Estimating the Threshold of Detection on Tree Crown Defoliation Using Vegetation Indices from UAS Multispectral Imagery. Drones 2019, 3, 80.

- Otsu, K.; Pla, M.; Vayreda, J.; Brotons, L. Calibrating the Severity of Forest Defoliation by Pine Processionary Moth with Landsat and UAV Imagery. Sensors 2018, 18, 3278.

- Zhang, N.; Wang, Y.; Zhang, X. Extraction of Tree Crowns Damaged by Dendrolimus tabulaeformis Tsai et Liu via Spectral-Spatial Classification Using UAV-Based Hyperspectral Images. Plant Methods 2020, 16, 135.

- Zhang, K.; Chen, S.-C.; Whitman, D.; Shyu, M.-L.; Yan, J.; Zhang, C. A Progressive Morphological Filter for Removing Nonground Measurements from Airborne LIDAR Data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 872–882.

- Roussel, J.-R.; Auty, D. Airborne LiDAR Data Manipulation and Visualization for Forestry Applications. 2023. Available online: https://cran.r-project.org/package=lidR (accessed on 22 October 2022).

- Silva, C.A.; Hudak, A.T.; Vierling, L.A.; Loudermilk, E.L.; O’Brien, J.J.; Hiers, J.K.; Jack, S.B.; Gonzalez-Benecke, C.; Lee, H.; Falkowski, M.J.; et al. Imputation of Individual Longleaf Pine (Pinus palustris Mill.) Tree Attributes from Field and LiDAR Data. Can. J. Remote Sens. 2016, 42, 554–573.

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A New Method for Segmenting Individual Trees from the Lidar Point Cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

- Legendre, P.; Legendre, L. Numerical Ecology: Developments in Environmental Modelling. In Developments in Environmental Modelling; Elsevier: Amsterdam, The Netherlands, 1998; Volume 20.

- Gamon, J.A.; Surfus, J.S. Assessing Leaf Pigment Content and Activity with a Reflectometer. New Phytol. 1999, 143, 105–117.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS; Third Earth Resources Technology Satellite-1 Symposium. Volume 1: Technical Presentations, section A; NASA: Washington, DC, USA, 1974.

- Hunt, E.R., Jr.; Daughtry, C.S.T.; Eitel, J.U.H.; Long, D.S. Remote Sensing Leaf Chlorophyll Content Using a Visible Band Index. Agron. J. 2011, 103, 1090–1099.

- Perez, D.; Lu, Y.; Kwan, C.; Shen, Y.; Koperski, K.; Li, J. Combining Satellite Images with Feature Indices for Improved Change Detection. In Proceedings of the 2018 9th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 8–10 November 2018; pp. 438–444.

- Clay, G.R.; Marsh, S.E. Spectral Analysis for Articulating Scenic Color Changes in a Coniferous Landscape. Photogramm. Eng. Remote Sens. 1997, 63, 1353–1362.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Qi, Y. Random Forest for Bioinformatics. In Ensemble Machine Learning: Methods and Applications; Zhang, C., Ma, Y., Eds.; Springer: New York, NY, USA, 2012; pp. 307–323. ISBN 978-1-4419-9326-7.

- Stahl, A.T.; Andrus, R.; Hicke, J.A.; Hudak, A.T.; Bright, B.C.; Meddens, A.J.H. Automated Attribution of Forest Disturbance Types from Remote Sensing Data: A Synthesis. Remote Sens. Environ. 2023, 285, 113416.

- Graham, M.H. Confronting Multicollinearity in Ecological Multiple Regression. Ecology 2003, 84, 2809–2815.

- Breiman, L.; Cutler, A.; Liaw, A.; Wiener, M. randomForest: Breiman and Cutler’s Random Forests for Classification and Regression. 2022. Available online: https://CRAN.R-project.org/package=randomForest (accessed on 30 March 2022).

- Malley, J.D.; Kruppa, J.; Dasgupta, A.; Malley, K.G.; Ziegler, A. Probability Machines. Methods Inf. Med. 2012, 51, 74–81.

- Ahmadi, S.A.; Ghorbanian, A.; Golparvar, F.; Mohammadzadeh, A.; Jamali, S. Individual Tree Detection from Unmanned Aerial Vehicle (UAV) Derived Point Cloud Data in a Mixed Broadleaf Forest Using Hierarchical Graph Approach. Eur. J. Remote Sens. 2022, 55, 520–539.

- Minařík, R.; Langhammer, J.; Lendzioch, T. Automatic Tree Crown Extraction from UAS Multispectral Imagery for the Detection of Bark Beetle Disturbance in Mixed Forests. Remote Sens. 2020, 12, 4081.

- Sparks, A.M.; Corrao, M.V.; Smith, A.M.S. Cross-Comparison of Individual Tree Detection Methods Using Low and High Pulse Density Airborne Laser Scanning Data. Remote Sens. 2022, 14, 3480.

- Gertner, G.; Köhl, M. Correlated Observer Errors and Their Effects on Survey Estimates of Needle-Leaf Loss. For. Sci. 1995, 41, 758–776.

- Metzger, J.M.; Oren, R. The Effect of Crown Dimensions on Transparency and the Assessment of Tree Health. Ecol. Appl. 2001, 11, 1634–1640.

- Cardil, A.; Vepakomma, U.; Brotons, L. Assessing Pine Processionary Moth Defoliation Using Unmanned Aerial Systems. Forests 2017, 8, 402.

- Auerbach, D.S.; Fremier, A.K. Identification of Salmon Redds Using RPV-Based Imagery Produces Comparable Estimates to Ground Counts with High Inter-Observer Variability. River Res. Appl. 2023, 39, 35–45.

- Jemaa, H.; Bouachir, W.; Leblon, B.; LaRocque, A.; Haddadi, A.; Bouguila, N. UAV-Based Computer Vision System for Orchard Apple Tree Detection and Health Assessment. Remote Sens. 2023, 15, 3558.

- Guerra-Hernández, J.; Díaz-Varela, R.A.; Álvarez-González, J.G.; Rodríguez-González, P.M. Assessing a Novel Modelling Approach with High Resolution UAV Imagery for Monitoring Health Status in Priority Riparian Forests. For. Ecosyst. 2021, 8, 61.

- Leidemer, T.; Gonroudobou, O.B.H.; Nguyen, H.T.; Ferracini, C.; Burkhard, B.; Diez, Y.; Lopez Caceres, M.L. Classifying the Degree of Bark Beetle-Induced Damage on Fir (Abies mariesii) Forests, from UAV-Acquired RGB Images. Computation 2022, 10, 63.

- Meng, R.; Dennison, P.E.; Zhao, F.; Shendryk, I.; Rickert, A.; Hanavan, R.P.; Cook, B.D.; Serbin, S.P. Mapping Canopy Defoliation by Herbivorous Insects at the Individual Tree Level Using Bi-Temporal Airborne Imaging Spectroscopy and LiDAR Measurements. Remote Sens. Environ. 2018, 215, 170–183.

- Naseri, M.H.; Shataee Jouibary, S.; Habashi, H. Analysis of Forest Tree Dieback Using UltraCam and UAV Imagery. Scand. J. For. Res. 2023, 38, 392–400.

- Puliti, S.; Astrup, R. Automatic Detection of Snow Breakage at Single Tree Level Using YOLOv5 Applied to UAV Imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102946.

- Bechtold, W.A.; Randolph, K. FIA Crown Analysis Guide; U.S. Department of Agriculture Forest Service, Southern Research Station: Asheville, NC, USA, 2018.

- Luo, L.; Zhai, Q.; Su, Y.; Ma, Q.; Kelly, M.; Guo, Q. Simple Method for Direct Crown Base Height Estimation of Individual Conifer Trees Using Airborne LiDAR Data. Opt. Express 2018, 26, A562–A578.

- Vauhkonen, J. Estimating Crown Base Height for Scots Pine by Means of the 3D Geometry of Airborne Laser Scanning Data. Int. J. Remote Sens. 2010, 31, 1213–1226.

- Fassnacht, F.E.; White, J.C.; Wulder, M.A.; Næsset, E. Remote Sensing in Forestry: Current Challenges, Considerations and Directions. For. Int. J. For. Res. 2023, 97, 11–37.

- Masek, J.G.; Hayes, D.J.; Joseph Hughes, M.; Healey, S.P.; Turner, D.P. The Role of Remote Sensing in Process-Scaling Studies of Managed Forest Ecosystems. For. Ecol. Manag. 2015, 355, 109–123.

- Lausch, A.; Erasmi, S.; King, D.; Magdon, P.; Heurich, M. Understanding Forest Health with Remote Sensing-Part II—A Review of Approaches and Data Models. Remote Sens. 2017, 9, 129.

- Ritz, A.L.; Thomas, V.A.; Wynne, R.H.; Green, P.C.; Schroeder, T.A.; Albaugh, T.J.; Burkhart, H.E.; Carter, D.R.; Cook, R.L.; Campoe, O.C.; et al. Assessing the Utility of NAIP Digital Aerial Photogrammetric Point Clouds for Estimating Canopy Height of Managed Loblolly Pine Plantations in the Southeastern United States. Int. J. Appl. Earth Obs. Geoinf. 2022, 113, 103012.

- Schroeder, T.A.; Obata, S.; Papeş, M.; Branoff, B. Evaluating Statewide NAIP Photogrammetric Point Clouds for Operational Improvement of National Forest Inventory Estimates in Mixed Hardwood Forests of the Southeastern U.S. Remote Sens. 2022, 14, 4386.

- Budei, B.C.; St-Onge, B.; Fournier, R.A.; Kneeshaw, D. Effect of Variability of Normalized Differences Calculated from Multi-Spectral Lidar on Individual Tree Species Identification. In Proceedings of the SilviLaser Conference 2021, Vienna, Austria, 28–30 September 2021; pp. 188–190.

- Ekhtari, N.; Glennie, C.; Fernandez-Diaz, J.C. Classification of Airborne Multispectral Lidar Point Clouds for Land Cover Mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2068–2078.

- Russell, M.; Eitel, J.U.H.; Link, T.E.; Silva, C.A. Important Airborne Lidar Metrics of Canopy Structure for Estimating Snow Interception. Remote Sens. 2021, 13, 4188.

- Storck, P.; Lettenmaier, D.P.; Bolton, S.M. Measurement of Snow Interception and Canopy Effects on Snow Accumulation and Melt in a Mountainous Maritime Climate, Oregon, United States. Water Resour. Res. 2002, 38, 5-1–5-16.

- Dial, R.; Bloodworth, B.; Lee, A.; Boyne, P.; Heys, J. The Distribution of Free Space and Its Relation to Canopy Composition at Six Forest Sites. For. Sci. 2004, 50, 312–325.

- Leiterer, R.; Furrer, R.; Schaepman, M.E.; Morsdorf, F. Forest Canopy-Structure Characterization: A Data-Driven Approach. For. Ecol. Manag. 2015, 358, 48–61.

- Di Gennaro, S.F.; Matese, A. Evaluation of Novel Precision Viticulture Tool for Canopy Biomass Estimation and Missing Plant Detection Based on 2.5 D and 3D Approaches Using RGB Images Acquired by UAV Platform. Plant Methods 2020, 16, 91.

- Bright, B.C.; Hicke, J.A.; Hudak, A.T. Estimating Aboveground Carbon Stocks of a Forest Affected by Mountain Pine Beetle in Idaho Using Lidar and Multispectral Imagery. Remote Sens. Environ. 2012, 124, 270–281.

- ASPRS LAS Specification 1.4-R15; The American Society for Photogrammetry & Remote Sensing: Bethesda, MD, USA, 2019.

- Barrett, T.; Dowle, M.; Srinivasan, A. data.table: Extension of ‘data.frame’. 2024. Available online: https://r-datatable.com (accessed on 21 March 2023).

- Roussel, J.-R.; Auty, D.; Coops, N.C.; Tompalski, P.; Goodbody, T.R.H.; Meador, A.S.; Bourdon, J.-F.; de Boissieu, F.; Achim, A. lidR: An R Package for Analysis of Airborne Laser Scanning (ALS) Data. Remote Sens. Environ. 2020, 251, 112061.

- Hijmans, R.J. terra: Spatial Data Analysis. 2024. Available online: https://rspatial.org/ (accessed on 1 September 2023).

- Schloerke, B.; Cook, D.; Larmarange, J.; Briatte, F.; Marbach, M.; Thoen, E.; Elberg, A.; Toomet, O.; Crowley, J.; Hofmann, H.; et al. GGally: Extension to ‘ggplot2’. 2023. Available online: https://cran.r-project.org/package=GGally (accessed on 4 April 2023).

- Hardin, P.J.; Shumway, J.M. Statistical Significance and Normalized Confusion Matrices. Photogramm. Eng. Remote Sens. 1997, 63, 735–739.

| Name (Abbreviation) | Equation | Reference |
|---|---|---|
| Red–green index (RGI) | | Gamon and Surfus [51] |
| Simple ratio (SR) | | Woebbecke et al. [52] |
| Normalized difference vegetation index (NDVI) | | Rouse et al. [53] |
| Normalized difference red edge (NDRE) index | | Hunt Jr. et al. [54] |
| Green leaf index (GLI) | | Hunt Jr. et al. [54] |
| Excess green (ExG) index | | Woebbecke et al. [52] |
| Red–blue index (RBI) | | Perez et al. [55] |
| Mean red–green–blue (meanRGB) index | | Clay and Marsh [56] |
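The Equation column of the vegetation index table did not survive extraction, so the exact formulas used in the study cannot be restored here. As a hedged stand-in, this Python sketch computes widely used forms of six of the listed indices from one point's reflectances: RGI = R/G, NDVI = (NIR - R)/(NIR + R), NDRE = (NIR - RE)/(NIR + RE), GLI = (2G - R - B)/(2G + R + B), ExG = 2g - r - b on chromatic coordinates (each band divided by R + G + B), and meanRGB = (R + G + B)/3. SR and RBI are left out because their definitions in the paper are uncertain. The `Bands` class and `vegetation_indices` function are hypothetical names, and the five-band layout is assumed to match the MicaSense RedEdge-MX camera named in the references.

```python
from dataclasses import dataclass


@dataclass
class Bands:
    """Reflectance (0-1) of one point; the five-band layout is an assumption."""
    blue: float
    green: float
    red: float
    red_edge: float
    nir: float


def vegetation_indices(b: Bands) -> dict:
    """Common textbook forms of several tabulated indices (not necessarily the paper's).

    Assumes nonzero denominators; a production pipeline would guard against zeros.
    """
    rgb_sum = b.red + b.green + b.blue
    # Chromatic coordinates used by the excess green index.
    r, g, bl = b.red / rgb_sum, b.green / rgb_sum, b.blue / rgb_sum
    return {
        "RGI": b.red / b.green,
        "NDVI": (b.nir - b.red) / (b.nir + b.red),
        "NDRE": (b.nir - b.red_edge) / (b.nir + b.red_edge),
        "GLI": (2 * b.green - b.red - b.blue) / (2 * b.green + b.red + b.blue),
        "ExG": 2 * g - r - bl,
        "meanRGB": rgb_sum / 3,
    }


leaf = Bands(blue=0.05, green=0.10, red=0.05, red_edge=0.30, nir=0.50)
print({name: round(value, 3) for name, value in vegetation_indices(leaf).items()})
```

Because each index is a per-point function of band values, the same function can be applied to every point in the multispectral cloud before classification.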

| Class | | Reference: Tree | Reference: Not Tree | Total | Commission Error (%) | User Accuracy (%) |
|---|---|---|---|---|---|---|
| Prediction | Tree | 248 | 35 (31: tree segmentation issue; 4: ground issue) | 283 | 12.4 | 87.6 |
| | Not tree | 352 (352: tree segmentation issue) | 365 | 717 | 49.1 | 50.9 |
| Total | | 600 | 400 | 1000 | | |
| Omission error (%) | | 58.7 | 8.8 | Overall accuracy: 61.3% | | |
| Producer accuracy (%) | | 41.3 | 91.2 | | | |
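The derived columns in these confusion matrices follow the usual remote-sensing definitions: commission error is the off-diagonal share of a predicted (row) class, omission error is the off-diagonal share of a reference (column) class, user's and producer's accuracy are their complements, and overall accuracy is the diagonal sum over the grand total. This short Python sketch, with an illustrative `accuracy_report` name, recomputes the tree segmentation figures from the four cell counts.

```python
def accuracy_report(matrix, labels):
    """Per-class and overall accuracy (%) from a square confusion matrix.

    matrix[i][j] counts samples predicted as labels[i] whose reference class is labels[j].
    """
    n = len(labels)
    grand_total = sum(sum(row) for row in matrix)
    report = {"overall": 100 * sum(matrix[i][i] for i in range(n)) / grand_total}
    for k, name in enumerate(labels):
        row_total = sum(matrix[k])                       # all predicted as this class
        col_total = sum(matrix[i][k] for i in range(n))  # all truly in this class
        correct = matrix[k][k]
        report[name] = {
            "commission": 100 * (row_total - correct) / row_total,
            "user": 100 * correct / row_total,
            "omission": 100 * (col_total - correct) / col_total,
            "producer": 100 * correct / col_total,
        }
    return report


# Tree segmentation matrix: rows = prediction, columns = reference.
seg = accuracy_report([[248, 35], [352, 365]], ["tree", "not tree"])
print(round(seg["overall"], 1))             # 61.3
print(round(seg["tree"]["commission"], 1))  # 12.4
print(round(seg["tree"]["omission"], 1))    # 58.7
```

The same function applies unchanged to the other square matrices, such as the four-class point-level matrix or the two-class healthy/damaged and top-kill matrices.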

| Class | | Reference: Green | Reference: Gray | Reference: Red | Reference: Shadow | Total | Commission Error (%) | User Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Prediction | Green | 199 | 1 | 2 | 2 | 204 | 2.0 | 98.0 |
| | Gray | 0 | 199 | 5 | 0 | 204 | 2.0 | 98.0 |
| | Red | 1 | 0 | 193 | 0 | 194 | 1.0 | 99.0 |
| | Shadow | 0 | 0 | 0 | 198 | 198 | 0 | 100 |
| Total | | 200 | 200 | 200 | 200 | 800 | | |
| Omission error (%) | | 0.5 | 0.5 | 3.5 | 1.0 | Overall accuracy: 98.6% | | |
| Producer accuracy (%) | | 99.5 | 99.5 | 96.5 | 99.0 | Out-of-bag error rate: 1.4% | | |

| Tree Condition | | Reference: Healthy | Reference: Damaged | Total | Commission Error (%) | User Accuracy (%) |
|---|---|---|---|---|---|---|
| Prediction | Healthy | 196 | 22 | 218 | 10.1 | 89.9 |
| | Damaged | 4 | 178 | 182 | 2.2 | 97.8 |
| Total | | 200 | 200 | 400 | | |
| Omission error (%) | | 2.0 | 11.0 | Overall accuracy: 93.5% | | |
| Producer accuracy (%) | | 98.0 | 89.0 | | | |

| Damage Severity | | Ref.: Healthy | Ref.: Minor Damage | Ref.: Moderate Damage | Ref.: Major Damage | Ref.: Dead (Red) | Ref.: Dead (Gray) | Ref.: Dead (Mixed) | Total | Comm. Err. (%) | User Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Prediction | Healthy | 74 | 4 | 6 | 0 | 0 | 0 | 0 | 84 | 11.9 | 88.1 |
| | Minor damage | 1 | 21 | 14 | 1 | 0 | 0 | 0 | 37 | 43.2 | 56.8 |
| | Moderate damage | 0 | 0 | 42 | 5 | 6 | 11 | 4 | 68 | 38.2 | 61.8 |
| | Major damage | 0 | 0 | 0 | 10 | 2 | 7 | 4 | 23 | 56.5 | 43.5 |
| | Dead (red) | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0.0 | 100.0 |
| | Dead (mixed) | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 5 | 0.0 | 100.0 |
| Total | | 75 | 25 | 62 | 16 | 9 | 18 | 13 | 218 | | |
| Omis. Err. (%) | | 1.3 | 16.0 | 32.3 | 37.5 | 88.9 | 100.0 | 61.5 | Overall accuracy: 70.2% | | |
| Prod. Acc. (%) | | 98.7 | 84.0 | 67.7 | 62.5 | 11.1 | 0.0 | 38.5 | | | |
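The damage severity matrix is not square: no tree was predicted as Dead (gray), so that row is absent. Producer's accuracy for the class is therefore 0% (none of its 18 reference trees was labeled correctly), while its user's accuracy would require dividing by zero predicted trees, consistent with the row being omitted. The Python sketch below stores the matrix as counts keyed by (predicted, reference) pair so an absent row simply reads as zeros; the class keys and function names are hypothetical.

```python
from collections import defaultdict

REFERENCE = ["healthy", "minor", "moderate", "major", "dead_red", "dead_gray", "dead_mixed"]

# One entry per predicted class; values follow REFERENCE order.
# No tree was predicted as dead_gray, so that row is absent.
PREDICTED_ROWS = {
    "healthy": [74, 4, 6, 0, 0, 0, 0],
    "minor": [1, 21, 14, 1, 0, 0, 0],
    "moderate": [0, 0, 42, 5, 6, 11, 4],
    "major": [0, 0, 0, 10, 2, 7, 4],
    "dead_red": [0, 0, 0, 0, 1, 0, 0],
    "dead_mixed": [0, 0, 0, 0, 0, 0, 5],
}


def pair_counts(rows):
    """Map (predicted, reference) -> count; pairs never seen read as 0."""
    counts = defaultdict(int)
    for predicted, row in rows.items():
        for reference, n in zip(REFERENCE, row):
            counts[(predicted, reference)] += n
    return counts


def producer_accuracy(counts, cls):
    """% of reference trees of `cls` that were also predicted as `cls`."""
    reference_total = sum(counts[(p, cls)] for p in REFERENCE)
    return 100 * counts[(cls, cls)] / reference_total


def overall_accuracy(counts):
    correct = sum(counts[(c, c)] for c in REFERENCE)
    return 100 * correct / sum(counts.values())


counts = pair_counts(PREDICTED_ROWS)
print(round(overall_accuracy(counts), 1))                # 70.2
print(round(producer_accuracy(counts, "dead_gray"), 1))  # 0.0
```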

| Damage Type | | Reference: Non-Top-Kill | Reference: Top-Kill | Total | Commission Error (%) | User Accuracy (%) |
|---|---|---|---|---|---|---|
| Prediction | Non-top-kill | 18 | 2 | 20 | 10.0 | 90.0 |
| | Top-kill | 3 | 38 | 41 | 7.3 | 92.7 |
| Total | | 21 | 40 | 61 | | |
| Omission error (%) | | 14.3 | 5.0 | Overall accuracy: 91.8% | | |
| Producer accuracy (%) | | 85.7 | 95.0 | | | |

| Tree Type | No. of Trees | Mean % Green | Mean % Gray | Mean % Red | Mean % Damage | No. of Non-Top-Kill Trees | No. of Top-Kill Trees | Mean Top-Kill Length (m) | Mean % Top-Kill |
|---|---|---|---|---|---|---|---|---|---|
| Healthy | 12,143 | 99.4 | 0.4 | 0.3 | 0.7 | - | - | - | - |
| Damaged | 3376 | 72.1 | 8.3 | 19.6 | 27.9 | 713 | 2663 | 1.5 | 17.8 |
| Minor damage | 1980 | 87.6 | 4.1 | 8.4 | 12.4 | 541 | 1439 | 0.9 | 11.1 |
| Moderate damage | 1192 | 56.3 | 11.9 | 31.9 | 43.8 | 169 | 1023 | 1.7 | 19.7 |
| Major damage | 154 | 18.3 | 27.3 | 54.4 | 81.7 | 3 | 151 | 4.7 | 54.7 |
| Dead (red) | 5 | 5.3 | 91.2 | 3.5 | 94.7 | 0 | 5 | 4.8 | 39.8 |
| Dead (gray) | 19 | 4.6 | 7.5 | 87.9 | 95.4 | 0 | 19 | 4.0 | 66.3 |
| Dead (mixed) | 26 | 5.0 | 41.9 | 53.1 | 95.0 | 0 | 26 | 5.8 | 57.9 |
| Total (all trees) | 15,519 | 93.4 | 4.5 | 2.1 | 6.6 | - | - | - | - |
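In the Mean % Damage column, the Damaged and Total (all trees) rows are consistent with tree-count-weighted means of the rows that feed them. This Python sketch, with hypothetical names, recomputes both from the class counts and class means; because the inputs are already rounded, agreement is only expected to one decimal place. It also derives the share of segmented trees classed as damaged.

```python
# (number of trees, mean % damage) per predicted damage class, from the table rows.
DAMAGED_CLASSES = [
    (1980, 12.4),  # minor damage
    (1192, 43.8),  # moderate damage
    (154, 81.7),   # major damage
    (5, 94.7),     # dead (red)
    (19, 95.4),    # dead (gray)
    (26, 95.0),    # dead (mixed)
]
HEALTHY = (12143, 0.7)


def weighted_mean(groups):
    """Return (tree-count-weighted mean of group means, total tree count)."""
    groups = list(groups)
    n_trees = sum(n for n, _ in groups)
    return sum(n * value for n, value in groups) / n_trees, n_trees


damaged_mean, n_damaged = weighted_mean(DAMAGED_CLASSES)
all_mean, n_all = weighted_mean([HEALTHY, (n_damaged, damaged_mean)])
print(n_damaged, round(damaged_mean, 1))  # 3376 27.9
print(n_all, round(all_mean, 1))          # 15519 6.6
print(round(100 * n_damaged / n_all, 1))  # 21.8
```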

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shrestha, A.; Hicke, J.A.; Meddens, A.J.H.; Karl, J.W.; Stahl, A.T. Evaluating a Novel Approach to Detect the Vertical Structure of Insect Damage in Trees Using Multispectral and Three-Dimensional Data from Drone Imagery in the Northern Rocky Mountains, USA. Remote Sens. 2024, 16, 1365. https://doi.org/10.3390/rs16081365

Shrestha A, Hicke JA, Meddens AJH, Karl JW, Stahl AT. Evaluating a Novel Approach to Detect the Vertical Structure of Insect Damage in Trees Using Multispectral and Three-Dimensional Data from Drone Imagery in the Northern Rocky Mountains, USA. Remote Sensing. 2024; 16(8):1365. https://doi.org/10.3390/rs16081365

Chicago/Turabian Style: Shrestha, Abhinav, Jeffrey A. Hicke, Arjan J. H. Meddens, Jason W. Karl, and Amanda T. Stahl. 2024. "Evaluating a Novel Approach to Detect the Vertical Structure of Insect Damage in Trees Using Multispectral and Three-Dimensional Data from Drone Imagery in the Northern Rocky Mountains, USA" Remote Sensing 16, no. 8: 1365. https://doi.org/10.3390/rs16081365