Automatic Detection of Buildings and Changes in Buildings for Updating of Maps

Abstract

1. Introduction

1.1. Motivation

1.2. Previous Studies

1.3. Contribution of Our Study

2. Study Area and Data

2.1. Study Area

2.2. Data

2.2.1. Laser Scanner Data

2.2.2. Aerial Image Data

2.2.3. Map Data

3. Methods

3.1. Building Detection

3.1.1. Building Detection Method

3.1.2. Building Detection Experiments

| Data source | Attributes for segments |
|---|---|
| Minimum DSM | Standard deviation, GLCM homogeneity, MSE obtained when fitting a plane to the height values |
| Maximum DSM | Standard deviation, GLCM homogeneity |
| DSM difference | Mean, standard deviation |
| Slope image | Mean |
| Aerial image | Separately for all channels: mean, standard deviation, GLCM homogeneity |
| | Normalized Difference Vegetation Index (NDVI) calculated from the mean values in the red and near-infrared channels |
| Segments and shape polygons [55] derived from the segments | 26 shape attributes [56]: *) area, area excluding inner polygons (p.), area including inner polygons (p.), asymmetry, average length of edges (p.), border index, border length, compactness, compactness (p.), density, edges longer than 20 pixels (p.), elliptic fit, length, length of longest edge (p.), length/width, number of edges (p.), number of inner objects (p.), number of right angles with edges longer than 20 pixels (p.), perimeter (p.), radius of largest enclosed ellipse, radius of smallest enclosing ellipse, rectangular fit, roundness, shape index, standard deviation of length of edges (p.), width |
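Several attributes in the table above have simple closed forms. The Python sketch below computes three of them for a single segment:

- NDVI, from the segment mean values in the red and near-infrared channels.
- GLCM homogeneity.
- The MSE of a least-squares plane fitted to height values.

The function names are assumptions made for illustration. So are the single-offset, symmetric co-occurrence matrix and the Haralick-style homogeneity formula sum p(i, j) / (1 + (i − j)²). The segmentation software used in the study may compute GLCM texture differently, for example by combining several directions.

```python
def ndvi(mean_red, mean_nir):
    """NDVI = (NIR - red) / (NIR + red), computed from segment mean values."""
    denom = mean_nir + mean_red
    return 0.0 if denom == 0 else (mean_nir - mean_red) / denom


def glcm_homogeneity(img, levels, dx=1, dy=0):
    """Haralick-style homogeneity: sum over (i, j) of p(i, j) / (1 + (i - j)^2).

    img is a 2-D list of integer grey levels in [0, levels). The co-occurrence
    matrix uses one pixel offset (dx, dy) and is made symmetric by counting
    every pixel pair in both directions (an assumption of this sketch).
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r][c], img[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        return 0.0
    return sum(counts[i][j] / total / (1 + (i - j) ** 2)
               for i in range(levels) for j in range(levels))


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def plane_fit_mse(points):
    """Fit z = a*x + b*y + c by least squares and return the mean squared residual.

    points is a list of (x, y, z) tuples, e.g. cell centres with DSM heights.
    The 3x3 normal equations are solved with Cramer's rule, so at least three
    non-collinear points are required.
    """
    n = len(points)
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(normal)
    coeffs = []
    for k in range(3):
        mk = [row[:] for row in normal]   # replace column k with the right-hand side
        for r in range(3):
            mk[r][k] = rhs[r]
        coeffs.append(_det3(mk) / det)
    a, b, c = coeffs
    return sum((z - (a * x + b * y + c)) ** 2 for x, y, z in points) / n
```

A planar roof segment gives a plane-fit MSE close to zero. An irregular surface such as a tree crown gives a larger value, which is one plausible reason this attribute helps separate buildings from vegetation.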

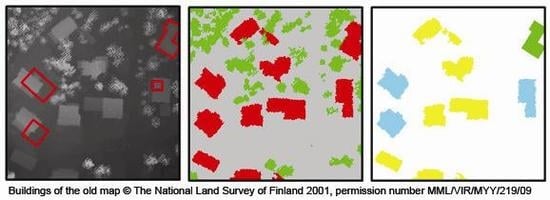

3.2. Change Detection

3.2.1. Change Detection Method

- One building on the map corresponds to one building in the building detection results (1-1). The building is either unchanged (OK, class 1) or changed (class 2).

- No building on the map, one in the building detection results (0-1). This is a new building (class 3).

- One building on the map, no buildings in the building detection results (1-0). This is possibly a demolished building (class 4).

- One building on the map, more than one in the building detection results (1-n), or vice versa (n-1). This can be a real change (e.g., one building demolished and several new buildings constructed), or it can result from generalization or inaccuracy of the map, or from problems in building detection. These buildings are assigned to class 5 (1-n/n-1).

Typical errors in building detection, with the change detection classes in which they appear, include:

- Missing buildings or building parts due to tree cover (demolished or changed buildings in change detection).

- Missing buildings or building parts due to their low height (demolished or changed buildings).

- Enlarged buildings due to their connection with nearby vegetation (changed buildings).

- Misclassification of other objects as buildings (new buildings).
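The correspondence cases listed above can be sketched as a matching step between map buildings and detected buildings. This minimal Python sketch rests on several illustrative assumptions, not on the criteria used in the study:

- Both data sets are rasterised as sets of (row, col) cells.
- Two buildings correspond if they share at least one cell.
- A 1-1 pair is labelled OK (class 1) when the shared cells make up at least a hypothetical fraction `ok_ratio` of each building. Otherwise it is labelled changed (class 2).

```python
# Class numbers as defined in the list above.
CLASS_OK, CLASS_CHANGED, CLASS_NEW, CLASS_DEMOLISHED, CLASS_1N = 1, 2, 3, 4, 5


def classify_correspondences(map_bldgs, det_bldgs, ok_ratio=0.8):
    """Assign change classes from overlaps between map and detected buildings.

    map_bldgs, det_bldgs: dict mapping a building id to a set of (row, col)
    cells. ok_ratio is a hypothetical threshold that separates class 1 (OK)
    from class 2 (changed) in 1-1 cases.
    Returns (map_class, det_class): dicts mapping building ids to classes.
    """
    # For each building, collect the overlapping buildings of the other data set.
    m2d = {m: {d for d, dc in det_bldgs.items() if mc & dc}
           for m, mc in map_bldgs.items()}
    d2m = {d: {m for m, mc in map_bldgs.items() if mc & dc}
           for d, dc in det_bldgs.items()}
    map_class, det_class = {}, {}
    for m, ds in m2d.items():
        if not ds:                                      # 1-0: possibly demolished
            map_class[m] = CLASS_DEMOLISHED
        elif len(ds) == 1 and len(d2m[next(iter(ds))]) == 1:   # 1-1
            d = next(iter(ds))
            inter = len(map_bldgs[m] & det_bldgs[d])
            # Share of each building covered by the other; the smaller one decides.
            ratio = min(inter / len(map_bldgs[m]), inter / len(det_bldgs[d]))
            cls = CLASS_OK if ratio >= ok_ratio else CLASS_CHANGED
            map_class[m] = det_class[d] = cls
        else:                                           # 1-n, or part of an n-1 group
            map_class[m] = CLASS_1N
    for d, ms in d2m.items():
        if not ms:                                      # 0-1: new building
            det_class[d] = CLASS_NEW
        elif d not in det_class:                        # n-1, or part of a 1-n group
            det_class[d] = CLASS_1N
    return map_class, det_class
```

In a real workflow the cell sets would come from the rasterised map and from the building detection output. Polygon geometries with an area-based overlap measure would be a natural alternative to cell sets.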

3.2.2. Change Detection Experiments

3.3. Accuracy Estimation

3.3.1. Accuracy Estimation of Building Detection Results

3.3.2. Accuracy Estimation of Change Detection Results

4. Results and Discussion

4.1. Building Detection Results

| | Low-rise area | High-rise area | New residential area | Industrial area | All areas |
|---|---|---|---|---|---|
| Completeness | 89.7% | 90.0% | 89.2% | 96.9% | 91.3% |
| Correctness | 83.8% | 89.3% | 77.7% | 90.6% | 87.1% |
| Mean accuracy | 86.6% | 89.6% | 83.1% | 93.7% | 89.1% |
| Buildings classified as trees | 3.9% | 2.5% | 2.5% | 0.8% | 2.5% |
| Buildings classified as ground | 6.4% | 7.5% | 8.3% | 2.3% | 6.2% |
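Completeness and correctness, as commonly defined, are tp / (tp + fn) and tp / (tp + fp), where tp, fn and fp are true positive, false negative and false positive counts (or areas). Completeness measures how much of the reference was found. Correctness measures how much of the detection result is right.

The mean accuracy values in the table above agree, to within rounding of the published percentages, with the harmonic mean of the two. For the low-rise area, 2 × 89.7 × 83.8 / (89.7 + 83.8) ≈ 86.6%. The sketch below assumes that definition.

```python
def completeness(tp, fn):
    """Share of the reference (area or objects) found by the detection."""
    return tp / (tp + fn)


def correctness(tp, fp):
    """Share of the detection results (area or objects) that is correct."""
    return tp / (tp + fp)


def mean_accuracy(comp, corr):
    """Harmonic mean of completeness and correctness (same units as the inputs)."""
    return 2 * comp * corr / (comp + corr)


# Recompute part of the mean accuracy row from the published percentages.
table = {
    "Low-rise area": (89.7, 83.8),
    "High-rise area": (90.0, 89.3),
    "New residential area": (89.2, 77.7),
}
for area, (comp, corr) in table.items():
    print(f"{area}: {mean_accuracy(comp, corr):.1f}%")
```

The loop prints 86.6%, 89.6% and 83.1%, matching the table. For the industrial area and all areas, the recomputed values differ from the table by 0.1 percentage points, which is consistent with rounding of the published inputs. An arithmetic mean would deviate more; for the new residential area it would give 83.45%.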

| Building size (m²) | Number of buildings in the reference map | Completeness (overlap requirement 50% / 1%) | Number of buildings in the building detection results | Correctness (overlap requirement 50% / 1%) |
|---|---|---|---|---|
| ≥ 20 | 1,128 | 88.9% / 91.6% | 1,210 | 86.3% / 87.9% |
| ≥ 40 | 1,012 | 94.0% / 96.4% | 1,060 | 92.7% / 94.2% |
| ≥ 60 | 949 | 95.9% / 98.0% | 974 | 96.0% / 96.7% |
| ≥ 80 | 896 | 96.5% / 98.7% | 916 | 97.5% / 98.1% |
| ≥ 100 | 854 | 96.5% / 98.7% | 861 | 98.4% / 98.7% |
| ≥ 200 | 452 | 96.7% / 99.1% | 534 | 98.9% / 99.1% |
| ≥ 300 | 318 | 95.9% / 99.1% | 355 | 99.4% / 99.4% |
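The table above evaluates buildings as objects. One plausible reading of the overlap requirement is as follows. A reference building of at least the given size counts as detected if detected buildings cover at least 50% (or 1%) of its area. Correctness is computed the same way with the roles of the two data sets swapped.

The sketch below follows that reading. It also makes two further assumptions: buildings are sets of raster cells of known area, and overlap is measured against the union of all cells of the other data set. The helper names are hypothetical.

```python
def object_rate(objects, other_cells, overlap_req, min_area_m2, cell_area_m2=1.0):
    """Share of objects (of at least min_area_m2) that overlap other_cells enough.

    objects: dict id -> set of (row, col) cells of one data set.
    other_cells: set of all cells covered by the other data set.
    overlap_req: required overlapping fraction of the object's own area (0-1).
    Returns nan if no object passes the size filter.
    """
    selected = [cells for cells in objects.values()
                if len(cells) * cell_area_m2 >= min_area_m2]
    if not selected:
        return float("nan")
    hits = sum(1 for cells in selected
               if len(cells & other_cells) >= overlap_req * len(cells))
    return hits / len(selected)


def union_cells(objects):
    """All cells covered by any object of a data set."""
    return set().union(*objects.values()) if objects else set()


def completeness_correctness(reference, detected, overlap_req, min_area_m2,
                             cell_area_m2=1.0):
    """Completeness: reference buildings checked against the detection results.
    Correctness: detected buildings checked against the reference map."""
    comp = object_rate(reference, union_cells(detected), overlap_req,
                       min_area_m2, cell_area_m2)
    corr = object_rate(detected, union_cells(reference), overlap_req,
                       min_area_m2, cell_area_m2)
    return comp, corr
```

Lowering the overlap requirement from 50% to 1% can only raise both rates, because any building that passes the stricter test also passes the looser one. This matches the direction of every pair of values in the table.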

4.2. Change Detection Results

| Change detection results (rows) vs. reference results (columns) | OK | Change | New | Demolished*) | 1-n/n-1 | Not analyzed | Not new building | Sum | % of buildings in c.d. results**) |
|---|---|---|---|---|---|---|---|---|---|
| OK | 645 | 2 | – | 13 | 22 | 0 | – | 682 | 51.0% |
| Change | 19 | 29 | – | 4 | 1 | 1 | – | 54 | 4.0% |
| New | – | – | 172 | – | – | Excluded | 139 | 311 | 23.3% |
| Demolished | 5 | 1 | – | 13 | 0 | 0 | – | 19 | 1.4% |
| 1-n/n-1 | 82 | 1 | – | 3 | 95 | 0 | – | 181 | 13.5% |
| Not analyzed | 2 | 1 | Excluded | 0 | 0 | 87 | – | 90 | 6.7% |
| Not new building | – | – | 79 | – | – | – | – | 79 | – |
| Sum | 753 | 34 | 251 | 33 | 118 | 88 | 139 | 1,416 | |
| % of buildings in ref. results***) | 59.0% | 2.7% | 19.7% | 2.6% | 9.2% | 6.9% | – | 100% | |

- *) 10 of the reference buildings for class 4 (demolished) were not really demolished (see text).

- **) Percentage of buildings in the change detection results (total: 1,416 − 79 = 1,337).

- ***) Percentage of buildings in the reference results (total: 1,416 − 139 = 1,277).
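The percentages in the last column and last row of the table follow from its sums. As the footnotes state, the "Not new building" row and column are left out of the respective bases. The short Python check below uses only figures from the table. It also confirms that each base equals the sum of the remaining rows or columns.

```python
# Row sums (change detection results) and column sums (reference results),
# copied from the table; the "Not new building" row and column are left out.
cd_row_sums = {"OK": 682, "Change": 54, "New": 311, "Demolished": 19,
               "1-n/n-1": 181, "Not analyzed": 90}
ref_col_sums = {"OK": 753, "Change": 34, "New": 251, "Demolished": 33,
                "1-n/n-1": 118, "Not analyzed": 88}

TOTAL = 1416          # grand total of the table
NOT_NEW_ROW = 79      # "Not new building" row
NOT_NEW_COL = 139     # "Not new building" column


def percentages(sums, base):
    """Percentage of each class relative to base, rounded to one decimal."""
    return {name: round(100 * n / base, 1) for name, n in sums.items()}


cd_base = TOTAL - NOT_NEW_ROW     # 1,337 buildings in the change detection results
ref_base = TOTAL - NOT_NEW_COL    # 1,277 buildings in the reference results
# Each base equals the sum of the rows/columns that remain.
assert cd_base == sum(cd_row_sums.values())
assert ref_base == sum(ref_col_sums.values())

cd_pct = percentages(cd_row_sums, cd_base)
ref_pct = percentages(ref_col_sums, ref_base)
print(cd_pct)
print(ref_pct)
```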

| Class and building size (m²) | Change detection approach | Number of buildings in the reference results | Completeness | Number of buildings in the change detection results | Correctness |
|---|---|---|---|---|---|
| Class 1 (OK) | | | | | |
| ≥ 20 | Overlap | 751 / 669 | 85.9% / 96.4% | 682 / 660 | 94.6% / 97.7% |
| | Buffers | 655 / 584 | 71.8% / 80.5% | 525 / 506 | 89.5% / 92.9% |
| ≥ 100 | Overlap | 581 / 516 | 87.3% / 98.3% | 534 / 513 | 94.9% / 98.8% |
| | Buffers | 509 / 452 | 71.9% / 81.0% | 407 / 389 | 89.9% / 94.1% |
| ≥ 300 | Overlap | 204 / 188 | 91.2% / 98.9% | 201 / 191 | 92.5% / 97.4% |
| | Buffers | 177 / 165 | 75.7% / 81.2% | 155 / 145 | 86.5% / 92.4% |
| Class 2 (Change) | | | | | |
| ≥ 20 | Overlap | 33 / 32 | 87.9% / 90.6% | 53 / 52 | 54.7% / 55.8% |
| | Buffers | 118 / 108 | 69.5% / 75.9% | 201 / 197 | 40.8% / 41.6% |
| ≥ 100 | Overlap | 16 / 15 | 87.5% / 93.3% | 27 / 26 | 51.9% / 53.8% |
| | Buffers | 88 / 79 | 69.3% / 77.2% | 153 / 149 | 39.9% / 40.9% |
| ≥ 300 | Overlap | 5 / 5 | 80.0% / 80.0% | 8 / 7 | 50.0% / 57.1% |
| | Buffers | 32 / 28 | 65.6% / 75.0% | 54 / 53 | 38.9% / 39.6% |
| Class 3 (New) | | | | | |
| ≥ 20 | Overlap | 251 / 250 | 68.5% / 68.8% | 311 / 311 | 55.3% / 55.3% |
| | Buffers | 250 / 249 | 68.4% / 68.7% | 310 / 310 | 55.2% / 55.2% |
| ≥ 100 | Overlap | 124 / 124 | 90.3% / 90.3% | 120 / 120 | 93.3% / 93.3% |
| | Buffers | 123 / 123 | 90.2% / 90.2% | 119 / 119 | 93.3% / 93.3% |
| ≥ 300 | Overlap | 50 / 50 | 88.0% / 88.0% | 44 / 44 | 100.0% / 100.0% |
| | Buffers | 49 / 49 | 87.8% / 87.8% | 43 / 43 | 100.0% / 100.0% |
| Class 4 (Demolished) *) | | | | | |
| ≥ 20 | Overlap | 33 / 30 | 39.4% / 43.3% | 19 / 19 | 68.4% / 68.4% |
| | Buffers | 31 / 28 | 41.9% / 46.4% | 17 / 17 | 76.5% / 76.5% |
| ≥ 100 | Overlap | 14 / 12 | 28.6% / 33.3% | 4 / 4 | 100.0% / 100.0% |
| | Buffers | 13 / 11 | 30.8% / 36.4% | 4 / 4 | 100.0% / 100.0% |
| ≥ 300 | Overlap | 8 / 7 | 25.0% / 28.6% | 2 / 2 | 100.0% / 100.0% |
| | Buffers | 8 / 7 | 25.0% / 28.6% | 2 / 2 | 100.0% / 100.0% |
| Class 5 (1-n/n-1) | | | | | |
| ≥ 20 | Overlap | 118 | 80.5% | 181 | 52.5% |
| | Buffers | 118 | 80.5% | 179 | 53.1% |
| ≥ 100 | Overlap | 94 | 76.6% | 140 | 51.4% |
| | Buffers | 94 | 76.6% | 140 | 51.4% |
| ≥ 300 | Overlap | 31 | 64.5% | 37 | 54.1% |
| | Buffers | 31 | 64.5% | 37 | 54.1% |
| All classes | | | | | |
| ≥ 20 | Overlap | 1,186 / 981 | 80.4% / 87.6% | 1,246 / 1,042 | 76.6% / 82.4% |
| | Buffers | 1,172 / 969 | 70.9% / 76.0% | 1,232 / 1,030 | 67.5% / 71.5% |
| ≥ 100 | Overlap | 829 / 667 | 85.5% / 95.5% | 825 / 663 | 85.9% / 96.1% |
| | Buffers | 827 / 665 | 74.2% / 81.5% | 823 / 661 | 74.6% / 82.0% |
| ≥ 300 | Overlap | 298 / 250 | 85.9% / 94.4% | 292 / 244 | 87.7% / 96.7% |
| | Buffers | 297 / 249 | 74.1% / 80.3% | 291 / 243 | 75.6% / 82.3% |

- *) 10 of the reference buildings for class 4 (demolished) were not really demolished (see text).
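The first values of the "≥ 20, Overlap" rows in the table above can be reproduced from the change detection confusion matrix given earlier. Per-class completeness and correctness are computed directly from that matrix. Buildings labelled "Not analyzed" on the other side are left out. For class 1, completeness = 645 / 751 ≈ 85.9% and correctness = 645 / 682 ≈ 94.6%.

The Python sketch below reproduces these figures under that reading. Cells shown as "–" or "Excluded" are entered as 0, which is an assumption. The second values after the slash are not reproduced, since their basis is not stated in this section.

```python
LABELS = ["OK", "Change", "New", "Demolished", "1-n/n-1",
          "Not analyzed", "Not new building"]
CLASSES = LABELS[:5]
NA = LABELS.index("Not analyzed")

# Rows: change detection results; columns: reference results, both in LABELS order.
# Cells shown as "–" or "Excluded" in the table are entered as 0 here.
MATRIX = [
    [645, 2, 0, 13, 22, 0, 0],     # OK
    [19, 29, 0, 4, 1, 1, 0],       # Change
    [0, 0, 172, 0, 0, 0, 139],     # New
    [5, 1, 0, 13, 0, 0, 0],        # Demolished
    [82, 1, 0, 3, 95, 0, 0],       # 1-n/n-1
    [2, 1, 0, 0, 0, 87, 0],        # Not analyzed
    [0, 0, 79, 0, 0, 0, 0],        # Not new building
]


def class_accuracy(matrix, k):
    """Return (n_ref, completeness, n_cd, correctness) for class index k.

    Buildings labelled "Not analyzed" on the other side are left out of
    the counts.
    """
    n_ref = sum(matrix[i][k] for i in range(len(matrix)) if i != NA)
    n_cd = sum(matrix[k][j] for j in range(len(matrix[k])) if j != NA)
    hits = matrix[k][k]
    return n_ref, hits / n_ref, n_cd, hits / n_cd


def overall_accuracy(matrix):
    """Pooled completeness and correctness over the five change classes."""
    results = [class_accuracy(matrix, k) for k in range(len(CLASSES))]
    hits = sum(matrix[k][k] for k in range(len(CLASSES)))
    n_ref = sum(r[0] for r in results)
    n_cd = sum(r[2] for r in results)
    return n_ref, hits / n_ref, n_cd, hits / n_cd


for k, name in enumerate(CLASSES):
    n_ref, comp, n_cd, corr = class_accuracy(MATRIX, k)
    print(f"{name:10s} {n_ref:5d} {comp:6.1%} {n_cd:5d} {corr:6.1%}")
```

With this reading, the pooled figures come out as 1,186 reference buildings, 80.4% completeness, 1,246 buildings in the change detection results and 76.6% correctness. These match the first values of the "All classes, ≥ 20, Overlap" row.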

| Change detection results (rows) vs. reference results (columns) | OK | Change | New | Demolished | 1-n/n-1 | Not analyzed |
|---|---|---|---|---|---|---|
| OK after examining tree cover | 15 / 34 | 0 / 1 | 0 / 0 | 2 / 2 | 0 / 0 | 0 / 1 |
| OK after examining DSM | 16 / 14 | 1 / 1 | 0 / 0 | 7 / 7*) | 0 / 0 | 0 / 2 |

5. Conclusions and Further Development

Acknowledgements

References

- Petzold, B.; Walter, V. Revision of topographic databases by satellite images. In Proceedings of the ISPRS Workshop: Sensors and Mapping from Space 1999, Hannover, Germany, September 27–30, 1999; Available online: http://www.ipi.uni-hannover.de/fileadmin/institut/pdf/petzold.pdf (accessed on April 15, 2010).

- Eidenbenz, C.; Käser, C.; Baltsavias, E. ATOMI—automated reconstruction of topographic objects from aerial images using vectorized map information. Amsterdam, The Netherlands, July 16–23, 2000; Vol. XXXIII, Part B3, pp. 462–471.

- Armenakis, C.; Leduc, F.; Cyr, I.; Savopol, F.; Cavayas, F. A comparative analysis of scanned maps and imagery for mapping applications. ISPRS J. Photogramm. Remote Sensing 2003, 57, 304–314.

- Knudsen, T.; Olsen, B.P. Automated change detection for updates of digital map databases. Photogramm. Eng. Remote Sensing 2003, 69, 1289–1296.

- Busch, A.; Gerke, M.; Grünreich, D.; Heipke, C.; Liedtke, C.-E.; Müller, S. Automated verification of a topographic reference dataset: System design and practical results. Istanbul, Turkey, July 12–23, 2004; Vol. XXXV, Part B2, pp. 735–740.

- Steinnocher, K.; Kressler, F. Change detection (Final report of a EuroSDR project). EuroSDR Official Publication No 50; EuroSDR: Frankfurt, Germany, 2006; pp. 111–182.

- Champion, N. 2D building change detection from high resolution aerial images and correlation digital surface models. Munich, Germany, September 19–21, 2007; Vol. XXXVI, Part 3/W49A, pp. 197–202.

- Holland, D.A.; Sanchez-Hernandez, C.; Gladstone, C. Detecting changes to topographic features using high resolution imagery. Beijing, China, July 3–11, 2008; Vol. XXXVII, Part B4, pp. 1153–1158.

- Rottensteiner, F. Building change detection from digital surface models and multi-spectral images. Munich, Germany, September 19–21, 2007; Vol. XXXVI, Part 3/W49B, pp. 145–150.

- Champion, N.; Rottensteiner, F.; Matikainen, L.; Liang, X.; Hyyppä, J.; Olsen, B.P. A test of automatic building change detection approaches. Paris, France, September 3–4, 2009; Vol. XXXVIII, Part 3/W4, pp. 145–150.

- Murakami, H.; Nakagawa, K.; Hasegawa, H.; Shibata, T.; Iwanami, E. Change detection of buildings using an airborne laser scanner. ISPRS J. Photogramm. Remote Sensing 1999, 54, 148–152.

- Vögtle, T.; Steinle, E. Detection and recognition of changes in building geometry derived from multitemporal laserscanning data. Istanbul, Turkey, July 12–23, 2004; Vol. XXXV, Part B2, pp. 428–433.

- Jung, F. Detecting building changes from multitemporal aerial stereopairs. ISPRS J. Photogramm. Remote Sensing 2004, 58, 187–201.

- Vu, T.T.; Matsuoka, M.; Yamazaki, F. LIDAR-based change detection of buildings in dense urban areas. In Proceedings of IGARSS’04, Anchorage, AK, USA, September 20–24, 2004; Volume 5, pp. 3413–3416.

- Butkiewicz, T.; Chang, R.; Wartell, Z.; Ribarsky, W. Visual analysis and semantic exploration of urban LIDAR change detection. Comput. Graph. Forum 2008, 27, 903–910.

- Nakagawa, M.; Shibasaki, R. Building change detection using 3-D texture model. Beijing, China, July 3–11, 2008; Vol. XXXVII, Part B3A, pp. 173–178.

- Hoffmann, A.; Van der Vegt, J.W.; Lehmann, F. Towards automated map updating: Is it feasible with new digital data-acquisition and processing techniques? Amsterdam, The Netherlands, July 16–23, 2000; Vol. XXXIII, Part B2, pp. 295–302.

- Knudsen, T.; Olsen, B.P. Detection of buildings in colour and colour-infrared aerial photos for semi-automated revision of topographic databases. Graz, Austria, September 9–13, 2002; Vol. XXXIV, Part 3B, pp. 120–125.

- Olsen, B.P. Automatic change detection for validation of digital map databases. Istanbul, Turkey, July 12–23, 2004; Vol. XXXV, Part B2, pp. 569–574.

- Matikainen, L.; Hyyppä, J.; Hyyppä, H. Automatic detection of buildings from laser scanner data for map updating. Dresden, Germany, October 8–10, 2003; Vol. XXXIV, Part 3/W13, pp. 218–224.

- Matikainen, L.; Hyyppä, J.; Kaartinen, H. Automatic detection of changes from laser scanner and aerial image data for updating building maps. Istanbul, Turkey, July 12–23, 2004; Vol. XXXV, Part B2, pp. 434–439.

- Vosselman, G.; Gorte, B.G.H.; Sithole, G. Change detection for updating medium scale maps using laser altimetry. Istanbul, Turkey, July 12–23, 2004; Vol. XXXV, Part B3, pp. 207–212.

- Vosselman, G.; Kessels, P.; Gorte, B. The utilisation of airborne laser scanning for mapping. Int. J. Appl. Earth Obs. Geoinf. 2005, 6, 177–186.

- Rottensteiner, F. Automated updating of building data bases from digital surface models and multi-spectral images: Potential and limitations. Beijing, China, July 3–11, 2008; Vol. XXXVII, Part B3A, pp. 265–270.

- Ceresola, S.; Fusiello, A.; Bicego, M.; Belussi, A.; Murino, V. Automatic updating of urban vector maps. In Image Analysis and Processing—ICIAP 2005; Roli, F., Vitulano, S., Eds.; Springer-Verlag: Berlin / Heidelberg, Germany, 2005; Lecture Notes in Computer Science Series; Volume 3617/2005, pp. 1133–1139.

- Bouziani, M.; Goïta, K.; He, D.-C. Automatic change detection of buildings in urban environment from very high spatial resolution images using existing geodatabase and prior knowledge. ISPRS J. Photogramm. Remote Sensing 2010, 65, 143–153.

- Hug, C. Extracting artificial surface objects from airborne laser scanner data. In Automatic Extraction of Man-Made Objects from Aerial and Space Images (II); Gruen, A., Baltsavias, E.P., Henricsson, O., Eds.; Birkhäuser Verlag: Basel, Switzerland, 1997; pp. 203–212.

- Brunn, A.; Weidner, U. Hierarchical Bayesian nets for building extraction using dense digital surface models. ISPRS J. Photogramm. Remote Sensing 1998, 53, 296–307.

- Haala, N.; Brenner, C. Extraction of buildings and trees in urban environments. ISPRS J. Photogramm. Remote Sensing 1999, 54, 130–137.

- Morgan, M.; Tempfli, K. Automatic building extraction from airborne laser scanning data. Amsterdam, The Netherlands, July 16–23, 2000; Vol. XXXIII, Part B3, pp. 616–623.

- Oude Elberink, S.; Maas, H.-G. The use of anisotropic height texture measures for the segmentation of airborne laser scanner data. Amsterdam, The Netherlands, July 16–23, 2000; Vol. XXXIII, Part B3, pp. 678–684.

- Vögtle, T.; Steinle, E. 3D modelling of buildings using laser scanning and spectral information. Amsterdam, The Netherlands, July 16–23, 2000; Vol. XXXIII, Part B3, pp. 927–934.

- Alharthy, A.; Bethel, J. Heuristic filtering and 3D feature extraction from lidar data. Graz, Austria, September 9–13, 2002; Vol. XXXIV, Part 3A, pp. 29–34.

- Tóvári, D.; Vögtle, T. Classification methods for 3D objects in laserscanning data. Istanbul, Turkey, July 12–23, 2004; Vol. XXXV, Part B3, pp. 408–413.

- Ma, R. DEM generation and building detection from lidar data. Photogramm. Eng. Remote Sensing 2005, 71, 847–854.

- Rottensteiner, F.; Trinder, J.; Clode, S.; Kubik, K. Using the Dempster-Shafer method for the fusion of LIDAR data and multi-spectral images for building detection. Inf. Fusion 2005, 6, 283–300.

- Forlani, G.; Nardinocchi, C.; Scaioni, M.; Zingaretti, P. Complete classification of raw LIDAR data and 3D reconstruction of buildings. Pattern Anal. Appl. 2006, 8, 357–374.

- Zhang, K.; Yan, J.; Chen, S.-C. Automatic construction of building footprints from airborne LIDAR data. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2523–2533.

- Miliaresis, G.; Kokkas, N. Segmentation and object-based classification for the extraction of the building class from LIDAR DEMs. Comput. Geosci. 2007, 33, 1076–1087.

- Sohn, G.; Dowman, I. Data fusion of high-resolution satellite imagery and LiDAR data for automatic building extraction. ISPRS J. Photogramm. Remote Sensing 2007, 62, 43–63.

- Lee, D.H.; Lee, K.M.; Lee, S.U. Fusion of lidar and imagery for reliable building extraction. Photogramm. Eng. Remote Sensing 2008, 74, 215–225.

- Meng, X.; Wang, L.; Currit, N. Morphology-based building detection from airborne lidar data. Photogramm. Eng. Remote Sensing 2009, 75, 437–442.

- Vu, T.T.; Yamazaki, F.; Matsuoka, M. Multi-scale solution for building extraction from LiDAR and image data. Int. J. Appl. Earth Obs. Geoinf. 2009, 11, 281–289.

- ISPRS. Beijing, China, July 3–11, 2008; Vol. XXXVII. Available online: http://www.isprs.org/publications/archives.aspx (accessed on April 15, 2010).

- Mallet, C.; Bretar, F.; Soergel, U. Analysis of full-waveform lidar data for classification of urban areas. Photogrammetrie–Fernerkundung–Geoinformation 2008, No. 5/2008, 337–349.

- Pfeifer, N.; Rutzinger, M.; Rottensteiner, F.; Muecke, W.; Hollaus, M. Extraction of building footprints from airborne laser scanning: Comparison and validation techniques. In Proceedings of the 2007 IEEE Urban Remote Sensing Joint Event, URBAN 2007-URS 2007, Paris, France, April 11–13, 2007.

- Rottensteiner, F.; Trinder, J.; Clode, S.; Kubik, K. Building detection by fusion of airborne laser scanner data and multi-spectral images: Performance evaluation and sensitivity analysis. ISPRS J. Photogramm. Remote Sensing 2007, 62, 135–149.

- Khoshelham, K.; Nardinocchi, C.; Frontoni, E.; Mancini, A.; Zingaretti, P. Performance evaluation of automated approaches to building detection in multi-source aerial data. ISPRS J. Photogramm. Remote Sensing 2010, 65, 123–133.

- Rutzinger, M.; Rottensteiner, F.; Pfeifer, N. A comparison of evaluation techniques for building extraction from airborne laser scanning. IEEE J. Selected Topics Appl. Earth Obs. Remote Sens. 2009, 2, 11–20.

- Terrasolid. Terrasolid Ltd.: Jyväskylä, Finland. Available online: http://www.terrasolid.fi/ (accessed on April 15, 2010).

- Axelsson, P. DEM generation from laser scanner data using adaptive TIN models. Amsterdam, The Netherlands, July 16–23, 2000; Vol. XXXIII, Part B4, pp. 110–117.

- Intergraph. Intergraph Corporation: Huntsville, AL, USA. Available online: http://www.intergraph.com/sgi/products/default.aspx (accessed on April 15, 2010).

- ERDAS. ERDAS Inc.: Norcross, GA, USA. Available online: http://www.erdas.com/ (accessed on April 15, 2010).

- NLSF (Maanmittauslaitos, National Land Survey of Finland). Maastotietojen laatumalli (Quality model for topographic data). NLSF: Helsinki, Finland, 1995. (in Finnish). Available online: http://www.maanmittauslaitos.fi/Tietoa_maasta/Digitaaliset_tuotteet/Maastotietokanta/ (accessed on April 15, 2010).

- Definiens. Definiens AG: München, Germany; Available online: http://www.definiens.com/ (accessed on April 15, 2010).

- Definiens. Definiens Professional 5 Reference Book; Definiens AG: München, Germany, 2006.

- Definiens. Available online: http://www.definiens.com/ (accessed on April 15, 2010).

- Baatz, M.; Schäpe, A. Multiresolution segmentation—An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informationsverarbeitung XII: Beiträge zum AGIT-Symposium Salzburg 2000; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Wichmann: Heidelberg, Germany, 2000; pp. 12–23.

- The MathWorks. The MathWorks, Inc.: Natick, MA, USA. Available online: http://www.mathworks.com/ (accessed on April 15, 2010).

- Matikainen, L.; Hyyppä, J.; Kaartinen, H. Comparison between first pulse and last pulse laser scanner data in the automatic detection of buildings. Photogramm. Eng. Remote Sensing 2009, 75, 133–146.

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wadsworth, Inc.: Belmont, CA, USA, 1984.

- Friedl, M.A.; Brodley, C.E. Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 1997, 61, 399–409.

- Lawrence, R.L.; Wright, A. Rule-based classification systems using classification and regression tree (CART) analysis. Photogramm. Eng. Remote Sensing 2001, 67, 1137–1142.

- Thomas, N.; Hendrix, C.; Congalton, R.G. A comparison of urban mapping methods using high-resolution digital imagery. Photogramm. Eng. Remote Sensing 2003, 69, 963–972.

- Hodgson, M.E.; Jensen, J.R.; Tullis, J.A.; Riordan, K.D.; Archer, C.M. Synergistic use of lidar and color aerial photography for mapping urban parcel imperviousness. Photogramm. Eng. Remote Sensing 2003, 69, 973–980.

- Ducic, V.; Hollaus, M.; Ullrich, A.; Wagner, W.; Melzer, T. 3D vegetation mapping and classification using full-waveform laser scanning. In Proceedings of the International Workshop: 3D Remote Sensing in Forestry, Vienna, Austria, February 14–15, 2006; pp. 222–228. Available online: http://www.rali.boku.ac.at/fileadmin/_/H85/H857/workshops/3drsforestry/Proceedings_3D_Remote_Sensing_2006_rev_20070129.pdf (accessed on April 15, 2010).

- Matikainen, L. Improving automation in rule-based interpretation of remotely sensed data by using classification trees. Photogramm. J. Fin. 2006, 20, 5–20.

- Zingaretti, P.; Frontoni, E.; Forlani, G.; Nardinocchi, C. Automatic extraction of LIDAR data classification rules. In Proceedings of the 14th IEEE International Conference on Image Analysis and Processing, ICIAP 2007, Modena, Italy, September 10–14, 2007.

- Im, J.; Jensen, J.R.; Hodgson, M.E. Object-based land cover classification using high-posting-density LiDAR data. GISci. Remote Sens. 2008, 45, 209–228.

- The MathWorks. Online documentation for Statistics Toolbox, Version 4.0; The MathWorks, Inc.: Natick, MA, USA, 2003.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Matikainen, L.; Kaartinen, H.; Hyyppä, J. Classification tree based building detection from laser scanner and aerial image data. Espoo, Finland, September 12–14, 2007; Vol. XXXVI, Part 3/W52, pp. 280–287.

- Matikainen, L.; Hyyppä, J.; Ahokas, E.; Markelin, L.; Kaartinen, H. An improved approach for automatic detection of changes in buildings. Paris, France, September 1–2, 2009; Vol. XXXVIII, Part 3/W8, pp. 61–67.

- Uitermark, H.; van Oosterom, P.; Mars, N.; Molenaar, M. Propagating updates: Finding corresponding objects in a multi-source environment. In Proceedings of the 8th International Symposium on Spatial Data Handling, Vancouver, Canada, July 11–15, 1998; pp. 580–591.

- Badard, T. On the automatic retrieval of updates in geographic databases based on geographic data matching tools. In Proceedings of the 19th International Cartographic Conference and 11th General Assembly of ICA, Ottawa, Canada, August 14–21, 1999; Volume 2, pp. 1291–1300.

- Winter, S. Location similarity of regions. ISPRS J. Photogramm. Remote Sensing 2000, 55, 189–200.

- Song, W.; Haithcoat, T.L. Development of comprehensive accuracy assessment indexes for building footprint extraction. IEEE Trans. Geosci. Remote Sens. 2005, 43, 402–404.

- Zhan, Q.; Molenaar, M.; Tempfli, K.; Shi, W. Quality assessment for geo-spatial objects derived from remotely sensed data. Int. J. Remote Sens. 2005, 26, 2953–2974.

- Helldén, U. A test of Landsat-2 imagery and digital data for thematic mapping, illustrated by an environmental study in northern Kenya; University of Lund: Lund, Sweden, 1980; Lunds Universitets Naturgeografiska Institution, Rapporter och Notiser 47.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; Lewis Publishers: Boca Raton, FL, USA, 1999.

- Kareinen, J. Maastotietokannan ajantasaistus laserkeilausaineistosta (Updating NLS Topographic Database from laser scanner data). Master’s thesis, Helsinki University of Technology, Espoo, Finland, 2008.

© 2010 by the authors; licensee MDPI, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Matikainen, L.; Hyyppä, J.; Ahokas, E.; Markelin, L.; Kaartinen, H. Automatic Detection of Buildings and Changes in Buildings for Updating of Maps. Remote Sens. 2010, 2, 1217-1248. https://doi.org/10.3390/rs2051217
