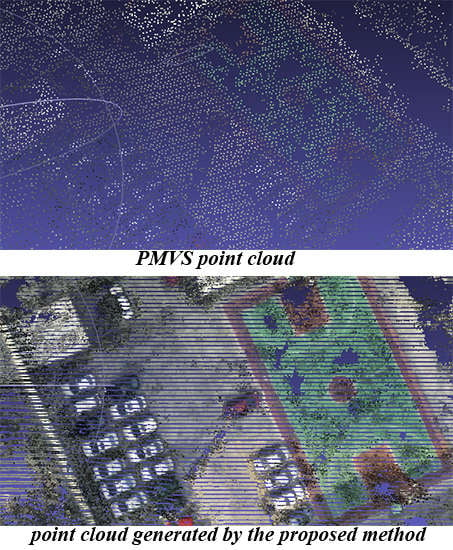

The proposed method can be divided into four steps: (1) PMVS point cloud generation; (2) patch-based point cloud expansion; (3) point cloud optimization; and (4) outlier filtering. This section introduces the details of the proposed method. The principle of the algorithm is illustrated in Figure 1. As shown in Figure 1, the proposed method derives from a technique in which regions grow from a set of seed points or patches [36]. The result of PMVS is a set of patches, each of which is geometrically a local tangent plane of the object. The proposed algorithm uses these patches as seeds and exploits the projection relationship between image pixels and patches to subdivide each patch into a denser set of patches. Then, a patch-based Multi-photo Geometrically Constrained Matching (MPGC) algorithm optimizes the expanded patches to obtain a more accurate result. Finally, a density constraint [37] is employed to filter the outliers.

3.2. Patch-Based Point Cloud Expansion

The goal of the expansion step is to expand the seed patches and increase the point cloud density. PMVS grows patches from seeds obtained by matching pixels, expanding into the neighboring image cells of the visible images until at least one point has been reconstructed in each corresponding image cell. The proposed method instead uses the projection rules to segment each patch into small pieces, each containing one center point. The seed points thus grow within the patch itself, and the point cloud becomes denser.

The result of PMVS records each point in the point cloud with its coordinates (Xc, Yc, Zc), color (R, G, B) and normal vector (a, b, c). By projecting the object point P(Xc, Yc, Zc) onto each image, the image point coordinates pi(xi, yi) (where i is the image index) are calculated. The shorter the distance between the image point and the origin of the image coordinate system, the smaller the projection distortion; the proposed method therefore takes image I(R) as the reference image when I(R) satisfies:

Supposing (Xc, Yc, Zc) is the center of the patch and (a, b, c) is the normal vector, the local tangent plane (the patch in PMVS) at P(Xc, Yc, Zc) is:

$$a(X - X_c) + b(Y - Y_c) + c(Z - Z_c) = 0$$

As illustrated in Figure 2a, the image point pi(xi, yi) is the center of an image window of μ × μ pixels. Projecting the image window onto the patch yields μ × μ object points, so the density of the point cloud can theoretically increase by a factor of μ × μ. The overall algorithm for this step is given in Figure 2b. Each resulting patch P′ consists of the coordinates (X, Y, Z), the normal vector (a, b, c) and the reference image index R.
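The expansion step described in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the camera is an idealized pinhole stored as a dictionary with hypothetical keys `S` (projection center), `R` (world-to-camera rotation) and `f` (focal length in pixels), image coordinates are measured from the principal point, the computer-vision sign convention is used, and μ is assumed to be odd. The function names are illustrative only.

```python
import math

def matvec(M, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(M[r][k] * v[k] for k in range(3)) for r in range(3)]

def transpose(M):
    return [[M[c][r] for c in range(3)] for r in range(3)]

def project(cam, P):
    """Project object point P; returns (x, y) relative to the principal point."""
    d = [P[k] - cam["S"][k] for k in range(3)]
    u = matvec(cam["R"], d)
    return cam["f"] * u[0] / u[2], cam["f"] * u[1] / u[2]

def select_reference(cams, P):
    """Index of the image in which P projects closest to the image origin
    (assumed reading of the reference-image rule in the text)."""
    dists = [math.hypot(*project(cam, P)) for cam in cams]
    return dists.index(min(dists))

def backproject_to_plane(cam, x, y, center, normal):
    """Intersect the viewing ray through pixel (x, y) with the patch plane
    normal . (X - center) = 0. Returns None if the ray is parallel to it."""
    ray = matvec(transpose(cam["R"]), [x, y, cam["f"]])
    denom = sum(normal[k] * ray[k] for k in range(3))
    if abs(denom) < 1e-12:
        return None
    t = sum(normal[k] * (center[k] - cam["S"][k]) for k in range(3)) / denom
    return [cam["S"][k] + t * ray[k] for k in range(3)]

def expand_patch(cams, center, normal, mu=3):
    """Expand one seed patch into up to mu x mu patches P' = (XYZ, normal, R)."""
    r = select_reference(cams, center)
    x0, y0 = project(cams[r], center)
    half = mu // 2
    patches = []
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            X = backproject_to_plane(cams[r], x0 + dx, y0 + dy, center, normal)
            if X is not None:
                patches.append({"xyz": X, "normal": list(normal), "ref": r})
    return patches
```

Each returned entry carries the same three fields as the result patch P′ above: the object coordinates, the inherited normal vector, and the reference image index. The center pixel of the window maps back to the seed point itself, so the seed is retained among the expanded points.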

3.3. Patch-Based MPGC to Optimize the Point Cloud

PMVS utilized the projection relationship between the patch and the corresponding images to build a function to find the optimal matching pixel:

In the function above, i is the index of the visible images (in PMVS, if patch p is visible in image i, then i is considered a visible image of p); n is the number of visible images; fi is a function that denotes the Normalized Cross-correlation Coefficient (NCC) between the corresponding image windows obtained by projecting the patch onto the reference image (I0) and the visible image (Ii); z is the distance by which the patch center moves along the ray; and (α, β) are the direction angles of the normal vector (a, b, c). The optimization process employs the Nelder-Mead method [39] to calculate the minimum value of Function (3). From the result of the calculation, the optimal patch (denoted by its center point P′ and normal vector (a, b, c)) is obtained:

As with the optimization method in PMVS, the proposed method also introduces a patch in the optimization step to obtain a better initial value for the optimization function. In the 1990s, Baltsavias [40,41] introduced epipolar line constraints (the collinearity equations) into Least Squares Image Matching (LSM) [42,43] and proposed an extremely useful technique named Multi-photo Geometrically Constrained Matching (MPGC). This approach simultaneously derives accurate coordinates of the corresponding object points in the object space coordinate system during the image matching process. It has been widely applied to refine matching results in three-dimensional reconstruction [25,26,44,45]. The proposed method uses a modified MPGC algorithm to optimize the point cloud.

In the traditional LSM method, each pixel in the matching image window is used to build an error equation:

In the error equation above, v is the projection error; h0i and h1i are the radiometric distortion coefficients between the reference image and search image i. In the experiments, the initial values of h0i and h1i are usually 0 and 1, respectively. Further, dh0i and dh1i are the corrections to the parameters h0i and h1i; g0(x0, y0) is the pixel intensity at point (x0, y0) in the image window of the reference image; gi(xi, yi) is the pixel intensity at image point (xi, yi) in the search image window; (∂gi/∂xi, ∂gi/∂yi) are the derivatives of the pixel intensity in the x and y directions; and (dxi, dyi) are the corrections to the image point (xi, yi). Therefore, when matching an image window of μ × μ pixels, μ × μ error equations can be listed; if μ × μ exceeds the number of unknowns, the corresponding pixel (xi, yi) can be calculated by least squares adjustment.
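For orientation, a linearized error equation consistent with the symbols defined above can be derived under one assumption: that the radiometric relation between the two windows is linear, i.e., g0(x0, y0) ≈ h0i + h1i · gi(xi, yi). The authors' exact form and sign convention may differ. Applying the corrections to all four unknowns gives

$$
g_0(x_0, y_0) + v = (h_{0i} + dh_{0i}) + (h_{1i} + dh_{1i})\, g_i(x_i + dx_i,\; y_i + dy_i)
$$

and a first-order Taylor expansion, dropping products of corrections, yields

$$
v = h_{1i}\frac{\partial g_i}{\partial x_i}\,dx_i + h_{1i}\frac{\partial g_i}{\partial y_i}\,dy_i + dh_{0i} + g_i(x_i, y_i)\,dh_{1i} + h_{0i} + h_{1i}\,g_i(x_i, y_i) - g_0(x_0, y_0)
$$

In this sketch there are four unknowns per search image (dxi, dyi, dh0i, dh1i), so even a 3 × 3 window, with nine equations, is already overdetermined.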

MPGC applies epipolar line constraints to the LSM method, so that the coordinates (xi, yi) can be expressed in terms of the interior orientation parameters (xs, ys, f) and exterior orientation parameters (projection center S(Xs, Ys, Zs) and rotation matrix (a1, a2, a3; b1, b2, b3; c1, c2, c3)) of image i and the corresponding object point (X, Y, Z):

Substituting the collinearity Equation (8) into the LSM error Equation (7), the optimal object point coordinates can be obtained directly during the least squares adjustment.
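In the notation used here, the collinearity condition is commonly written in the following textbook photogrammetric form. This is an assumed standard form: the ordering of the rotation matrix elements and the sign in front of f depend on how the rotation and the image axes are defined.

$$
x_i - x_s = -f\,\frac{a_1 (X - X_s) + b_1 (Y - Y_s) + c_1 (Z - Z_s)}{a_3 (X - X_s) + b_3 (Y - Y_s) + c_3 (Z - Z_s)}, \qquad
y_i - y_s = -f\,\frac{a_2 (X - X_s) + b_2 (Y - Y_s) + c_2 (Z - Z_s)}{a_3 (X - X_s) + b_3 (Y - Y_s) + c_3 (Z - Z_s)}
$$

With the orientation parameters held fixed, the image corrections become functions of the object point corrections alone:

$$
dx_i = \frac{\partial x_i}{\partial X}\,dX + \frac{\partial x_i}{\partial Y}\,dY + \frac{\partial x_i}{\partial Z}\,dZ, \qquad
dy_i = \frac{\partial y_i}{\partial X}\,dX + \frac{\partial y_i}{\partial Y}\,dY + \frac{\partial y_i}{\partial Z}\,dZ
$$

Replacing (dxi, dyi) in the error equation by these expressions makes (dX, dY, dZ) the geometric unknowns shared by all search images, which is why the object point coordinates come out of the adjustment directly.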

However, although MPGC performs well in matching refinement, selecting the initial matching window remains a challenge, because both the accuracy of the result and the efficiency of the process depend on the quality of the initial value. The proposed method introduces the patch into MPGC to refine the point cloud. The patch set obtained in Section 3.2 is used as the initial value, and each patch is projected onto the visible images to obtain the initial matching image windows. These initial matching windows have two advantages:

(1) Pixels at the same position in the matching windows of the reference and search images are approximately corresponding pixels.

(2) The normal vectors from the PMVS results are used as the initial normal vectors of the patch planes, so projecting the patch points onto the images significantly decreases the projection deformation.

As with PMVS, the optimization algorithm in the proposed method operates on individual patches, and each patch P′ is optimized separately in the following steps: (1) a matching window is selected in the reference image R; (2) the matching window is projected onto the patch plane to calculate the corresponding object points V(P′) on patch P′; (3) V(P′) is projected onto each image except image R to obtain the corresponding points w(pi′) on the search images; (4) if the matching window w(pi′) lies within the bounds of image i and the Normalized Cross-correlation Coefficient (NCC) is larger than 0.6, image i is added to the image set I(p′); (5) an error equation is built for each pair of corresponding points in the image windows of the reference image R and the search image set I(p′); (6) a least squares adjustment is applied to calculate the optimal solution. The overall algorithm for this step is illustrated in Figure 3.
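Step (4) above, the selection of the search image set by an NCC threshold, can be sketched as follows. This is a minimal sketch, not the authors' code. It assumes the matching windows have already been resampled into equally sized grids of intensities, and that a window falling outside the image bounds is represented by `None`. The function names and the dictionary layout are hypothetical.

```python
import math

def ncc(w0, w1):
    """Normalized cross-correlation of two equally sized windows (lists of rows)."""
    a = [v for row in w0 for v in row]
    b = [v for row in w1 for v in row]
    if len(a) != len(b) or not a:
        raise ValueError("windows must be non-empty and of equal size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) *
                    sum((y - mean_b) ** 2 for y in b))
    # A textureless (constant) window has zero variance; treat it as uncorrelated.
    return num / den if den > 0 else 0.0

def select_search_images(ref_window, candidate_windows, threshold=0.6):
    """Return the indices of images whose window passes the NCC test.

    candidate_windows maps image index -> window, or None when the projected
    window lies outside the image bounds (assumed representation).
    """
    selected = []
    for idx, window in sorted(candidate_windows.items()):
        if window is None:
            continue  # window not fully inside the image: skip
        if ncc(ref_window, window) > threshold:
            selected.append(idx)
    return selected
```

Because NCC subtracts the window means and normalizes by the standard deviations, it is unaffected by a linear brightness and contrast change between the windows. This makes it a reasonable gate before the least squares adjustment, which then estimates h0i and h1i explicitly.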

The proposed method uses this patch-based MPGC algorithm to optimize the point cloud instead of the PMVS optimization method for the following reasons:

(1) The epipolar line constraint is the strictest constraint for a single-center projection, especially when the camera parameters are known;

(2) Least squares adjustment can use redundant pixels to reduce the influence of noise, and it converges faster in iteration;

(3) Radiometric distortion is taken into account.