LiCHy: The CAF’s LiDAR, CCD and Hyperspectral Integrated Airborne Observation System

Abstract

1. Introduction

2. LiCHy Instrumentation

2.1. GNSS and Inertial Navigation System (INS)

2.2. Airborne Scanning Waveform LiDAR

2.3. CCD Sensor

2.4. Hyperspectral Sensor

2.5. Multi-Angular Hyperspectral Module (MAM)

3. LiCHy System Integration

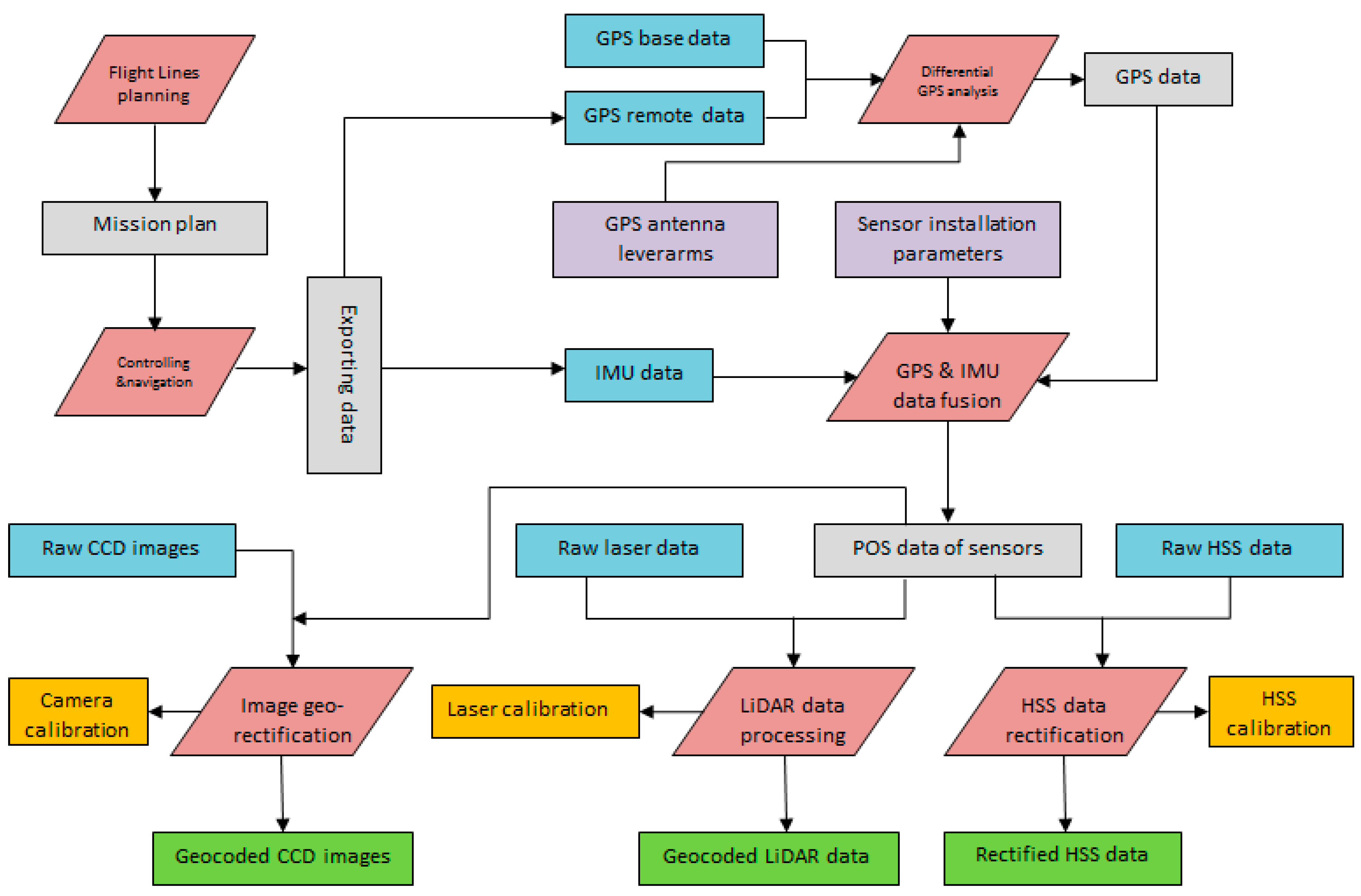

4. Sensor Calibration and Data Processing

4.1. GNSS and INS Data Processing

4.2. LiDAR Data Processing

- (1) Full Waveform Decomposition. LiCHy always records the full waveform signal, so the raw waveform samples are the starting point for all higher-level LiDAR products; separating the individual pulse returns also reduces the volume of data to be stored. Three decomposition methods (Center of Gravity Estimation, Gaussian Pulse Fitting, and Gaussian Pulse Estimation) are implemented in the RiProcess processing software [32]; all of them provide pulse ranging and additional waveform properties (e.g., pulse width and amplitude).

- (2) Geocoding. The discrete returns extracted in the first step carry no geographic information, so every pulse must first be assigned a correct geolocation. Because the pulse repetition rate (PRR) of the laser is much higher than the data rate of the INS, the POS data are interpolated to provide position and orientation information for each laser pulse. Once the geometric model is established, the origin and direction vector of every pulse can be recovered from the POS data. LiDAR range ambiguity is resolved by a processing strategy driven by a prior coarse-resolution DEM [35].

- (3) Boresight Calibration. Limitations in integrating the laser and the INS introduce misalignment errors between the axes of the LiDAR and POS coordinate systems. Correcting these misalignments requires boresight calibration with well-planned flight lines and careful post-processing. The boresight angles (roll, pitch and yaw) are estimated by identifying the same solid targets in the overlapping areas of different flight lines, and are then applied back to the laser instrument parameters. Because the boresight angles directly change the earlier geocoding results, the flight lines are geocoded again to obtain a better solution. These two steps are normally repeated several times over the data from the calibration flight lines, until the residual errors of the identical targets no longer decrease significantly.
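
The three steps above can be illustrated with a minimal Python sketch. It is not the RiProcess implementation: the function names, the record layout `(t, n, e, d, roll, pitch, yaw)`, the local north-east-down (NED) frame, and the rotation order are all assumptions made for illustration, and lever arms and range ambiguity are ignored. The sketch estimates a range with a simple center-of-gravity rule, interpolates the lower-rate POS records to the pulse time, and then projects the range along the beam direction, with an optional boresight misalignment.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def cog_range(samples, dt_ns=1.0, noise=0.0):
    """Center-of-gravity estimate for a single echo.

    `samples` are amplitudes at 0, dt, 2*dt, ... after pulse emission
    (a hypothetical layout). Returns (range_m, amplitude, width_ns).
    """
    w = [max(a - noise, 0.0) for a in samples]
    total = sum(w)
    if total == 0.0:
        raise ValueError("no signal above the noise level")
    t_cog = sum(i * dt_ns * a for i, a in enumerate(w)) / total
    var = sum(a * (i * dt_ns - t_cog) ** 2 for i, a in enumerate(w)) / total
    # Two-way travel time in ns converted to a one-way range in metres.
    return 0.5 * C * t_cog * 1e-9, max(w), math.sqrt(var)


def interpolate_pos(records, t):
    """Linearly interpolate POS records (t, n, e, d, roll, pitch, yaw) at time t.

    Assumes strictly increasing times and angles in radians that do not
    wrap around between neighbouring records.
    """
    for a, b in zip(records, records[1:]):
        if a[0] <= t <= b[0]:
            f = (t - a[0]) / (b[0] - a[0])
            return tuple(u + f * (v - u) for u, v in zip(a[1:], b[1:]))
    raise ValueError("pulse time outside the POS record span")


def rotation(roll, pitch, yaw):
    """Body-to-NED rotation matrix, R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(R, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def geolocate(state, scan_angle, rng, boresight=(0.0, 0.0, 0.0)):
    """Return the NED coordinates of one return.

    state: (n, e, d, roll, pitch, yaw) interpolated at the pulse time.
    scan_angle: cross-track angle in radians, positive to the right.
    boresight: (roll, pitch, yaw) misalignment of the scanner vs. the INS.
    """
    n, e, d, roll, pitch, yaw = state
    beam = [0.0, math.sin(scan_angle), math.cos(scan_angle)]  # scanner frame
    beam = rotate(rotation(*boresight), beam)                 # to INS body frame
    beam = rotate(rotation(roll, pitch, yaw), beam)           # to local NED frame
    return (n + rng * beam[0], e + rng * beam[1], d + rng * beam[2])
```

Under these assumptions, a boresight roll error of 1 mrad shifts a nadir return from 1000 m above ground by about 1 m across track. This is why the geocoding has to be rerun each time the boresight angles are updated.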

4.3. CCD Data Processing

4.4. Hyperspectral Data Processing

4.5. Data Products Accuracy

5. Data Collection Using LiCHy System

6. Potential Forest Applications of Data from LiCHy System

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Wulder, M.A.; Bater, C.W.; Coops, N.C.; Hilker, T.; White, J.C. The role of LiDAR in sustainable forest management. For. Chron. 2008, 84, 807–826.

2. Asner, G.P.; Martin, R.E. Airborne spectranomics: Mapping canopy chemical and taxonomic diversity in tropical forests. Front. Ecol. Environ. 2009, 7, 269–276.

3. Riedler, B.; Pernkopf, L.; Strasser, T.; Lang, S.; Smith, G. A composite indicator for assessing habitat quality of riparian forests derived from Earth observation data. Int. J. Appl. Earth Obs. Geoinform. 2015, 37, 114–123.

4. Ip, A.; El-Sheimy, N.; Mostafa, M. Performance analysis of integrated sensor orientation. Photogramm. Eng. Remote Sens. 2007, 73, 89–97.

5. Næsset, E.; Gobakken, T.; Holmgren, J.; Hyyppä, H.; Hyyppä, J.; Maltamo, M.; Nilsson, M.; Olsson, H.; Persson, Å.; Söderman, U. Laser scanning of forest resources: The Nordic experience. Scand. J. For. Res. 2004, 19, 482–499.

6. Vierling, K.T.; Vierling, L.A.; Gould, W.A.; Martinuzzi, S.; Clawges, R.M. Lidar: Shedding new light on habitat characterization and modeling. Front. Ecol. Environ. 2008, 6, 90–98.

7. Wulder, M.A.; White, J.C.; Bater, C.W.; Coops, N.C.; Hopkinson, C.; Chen, G. Lidar plots—A new large-area data collection option: Context, concepts, and case study. Can. J. Remote Sens. 2012, 38, 600–618.

8. Zhao, D.; Pang, Y.; Li, Z.; Liu, L. Isolating individual trees in a closed coniferous forest using small footprint lidar data. Int. J. Remote Sens. 2014, 35, 7199–7218.

9. Duncanson, L.I.; Dubayah, R.O.; Cook, B.D.; Rosette, J.; Parker, G. The importance of spatial detail: Assessing the utility of individual crown information and scaling approaches for lidar-based biomass density estimation. Remote Sens. Environ. 2015, 168, 102–112.

10. Hansen, E.H.; Gobakken, T.; Bollandsås, O.M.; Zahabu, E.; Næsset, E. Modeling aboveground biomass in dense tropical submontane rainforest using airborne laser scanner data. Remote Sens. 2015, 7, 788–807.

11. Zolkos, S.G.; Goetz, S.J.; Dubayah, R. A meta-analysis of terrestrial aboveground biomass estimation using lidar remote sensing. Remote Sens. Environ. 2013, 128, 289–298.

12. Asner, G.P.; Knapp, D.E.; Martin, R.E.; Tupayachi, R.; Anderson, C.B.; Mascaro, J.; Sinca, F.; Chadwick, K.D.; Higgins, M.; Farfan, W.; et al. Targeted carbon conservation at national scales with high-resolution monitoring. Proc. Natl. Acad. Sci. USA 2014, 111, E5016–E5022.

13. Hilker, T.; Coops, N.C.; Nesic, Z.; Wulder, M.A.; Brown, M.; Black, A.T. Instrumentation and approach for unattended year round tower based measurements of spectral reflectance. Comput. Electron. Agric. 2007, 56, 72–84.

14. Tortini, R.; Hilker, T.; Coops, N.C.; Nesic, Z. Technological advancement in tower-based canopy reflectance monitoring: The AMSPEC-III system. Sensors 2015, 15, 32020–32030.

15. Simic, A.; Chen, J.M.; Freemantle, J.; Miller, J.R.; Pisek, J. Improving clumping and LAI algorithms based on multi-angle airborne imagery and ground measurements. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1742–1759.

16. Koetz, B.; Morsdorf, F.; Van der Linden, S.; Curt, T.; Allgöwer, B. Multi-source land cover classification for forest fire management based on imaging spectrometry and LiDAR data. For. Ecol. Manag. 2008, 256, 263–271.

17. Geerling, G.W.; Abrador-Garcia, M.; Clevers, J.G.P.W.; Ragas, A.M.J.; Smits, A.J.M. Classification of floodplain vegetation by data fusion of spectral (CASI) and LiDAR data. Int. J. Remote Sens. 2007, 28, 4263–4284.

18. Liu, L.J.; Pang, Y.; Fan, W.Y.; Li, Z.Y.; Zhang, D.R.; Li, M.Z. Fused airborne LiDAR and hyperspectral data for tree species identification in a natural temperate forest. J. Remote Sens. 2013, 17, 679–695.

19. Pang, Y.; Tan, B.; Solberg, S.; Li, Z. Forest LAI estimation comparison using LiDAR and hyperspectral data in boreal and temperate forests. SPIE Opt. Eng. Appl. 2009, 7454.

20. Asner, G.P.; Knapp, D.E.; Kennedy-Bowdoin, T.; Jones, M.O.; Martin, R.E.; Boardman, J.; Field, C.B. Carnegie airborne observatory: In-flight fusion of hyperspectral imaging and waveform light detection and ranging for three-dimensional studies of ecosystems. J. Appl. Remote Sens. 2007, 1, 013536.

21. Kampe, T.U.; Johnson, B.R.; Kuester, M.; Keller, M. NEON: The first continental-scale ecological observatory with airborne remote sensing of vegetation canopy biochemistry and structure. J. Appl. Remote Sens. 2010, 4, 043510.

22. National Ecological Observatory Network (NEON). Available online: http://www.neoninc.org/science-design/collection-methods/airborne-remote-sensing (accessed on 12 October 2015).

23. Asner, G.P.; Knapp, D.E.; Boardman, J.; Green, R.O.; Kennedy-Bowdoin, T.; Eastwood, M.; Martin, R.E.; Anderson, C.; Field, C.B. Carnegie Airborne Observatory-2: Increasing science data dimensionality via high-fidelity multi-sensor fusion. Remote Sens. Environ. 2012, 124, 454–465.

24. Cook, B.D.; Nelson, R.F.; Middleton, E.M.; Morton, D.C.; McCorkel, J.T.; Masek, J.G.; Ranson, K.J.; Ly, V.; Montesano, P.M. NASA Goddard’s LiDAR, hyperspectral and thermal (G-LiHT) airborne imager. Remote Sens. 2013, 5, 4045–4066.

25. Hese, S.; Lucht, W.; Schmullius, C.; Barnsley, M.; Dubayah, R.; Knorr, D.; Neumann, K.; Riedel, T.; Schröter, K. Global biomass mapping for an improved understanding of the CO2 balance—The Earth observation mission Carbon-3D. Remote Sens. Environ. 2005, 94, 94–104.

26. Chopping, M.; Moisen, G.G.; Su, L.; Laliberte, A.; Rango, A.; Martonchik, J.V.; Peters, D.P. Large area mapping of southwestern forest crown cover, canopy height and biomass using the NASA Multiangle Imaging Spectro-Radiometer. Remote Sens. Environ. 2008, 112, 2051–2063.

27. Chopping, M.; Nolin, A.W.; Moisen, G.G.; Martonchik, J.V.; Bull, M. Forest canopy height from Multiangle Imaging Spectro-Radiometer (MISR) assessed with high resolution discrete return lidar. Remote Sens. Environ. 2009, 113, 2172–2185.

28. Schlerf, M.; Atzberger, C. Vegetation structure retrieval in beech and spruce forests using spectrodirectional satellite data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 8–17.

29. AEROcontrol & AEROoffice. Available online: http://www.igi.eu/aerocontrol.html (accessed on 11 October 2015).

30. Mallet, C.; Bretar, F. Full-waveform topographic LiDAR: State-of-the-art. ISPRS J. Photogramm. Remote Sens. 2009, 64, 1–16.

31. Anderson, K.; Hancock, S.; Disney, M.; Gaston, K.J. Is waveform worth it? A comparison of LiDAR approaches for vegetation and landscape characterization. Remote Sens. Ecol. Conserv. 2015, 2, 5–15.

32. RIEGL LMS-Q680i. Available online: http://www.riegl.com/products/airborne-scanning/produktdetail/product/scanner/23/ (accessed on 9 October 2015).

33. DigiCAM-Digital Aerial Camera. Available online: http://www.igi.eu/digicam.html (accessed on 9 October 2015).

34. AISA Eagle II. Available online: http://www.specim.fi/index.php/products/airborne (accessed on 9 October 2015).

35. Lu, H.; Pang, Y.; Li, Z.; Chen, B. An automatic range ambiguity solution in high-repetition-rate airborne laser scanner using priori terrain prediction. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2232–2236.

36. Terrasolid Ltd., 2015. TerraPhoto User’s Guide. Available online: http://www.terrasolid.com/download/tphoto.pdf (accessed on 9 October 2015).

37. Richter, R.; Schläpfer, D. Atmospheric/Topographic Correction for Airborne Imagery. ATCOR-4 User Guide Version 7.0.3; ReSe Applications Schläpfer: Wil SG, Switzerland, 2016.

38. Hudak, A.T.; Evans, J.S.; Stuart Smith, A.M. LiDAR utility for natural resource managers. Remote Sens. 2009, 1, 934–951.

39. Tompalski, P.; Coops, N.C.; White, J.C.; Wulder, M.A. Simulating the impacts of error in species and height upon tree volume derived from airborne laser scanning data. For. Ecol. Manag. 2014, 327, 167–177.

40. Simard, M.; Pinto, N.; Fisher, J.B.; Baccini, A. Mapping forest canopy height globally with spaceborne lidar. J. Geophys. Res. Biogeosci. 2011, 116.

41. Lefsky, M.A.; Ramond, T.; Weimer, C.S. Alternate spatial sampling approaches for ecosystem structure inventory using spaceborne lidar. Remote Sens. Environ. 2011, 115, 1361–1368.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| LiDAR: Riegl LMS-Q680i | | | |
| Wavelength | 1550 nm | Laser beam divergence | 0.5 mrad |
| Laser pulse length | 3 ns | Cross-track FOV | ±30° |
| Maximum laser pulse repetition rate | 400 kHz | Maximum scanning speed | 200 lines/s |
| Waveform sampling interval | 1 ns | Vertical resolution | 0.15 m |
| Point density @1000 m altitude | 3.6 pts/m² | | |
| CCD: DigiCAM-60 | | | |
| Frame size | 8956 × 6708 | Pixel size | 6 μm |
| Imaging sensor size | 40.30 mm × 53.78 mm | Bit depth | 16 bits |
| FOV | 56.2° | Focal length | 50 mm |
| Ground resolution @1000 m altitude | 0.12 m | | |
| Hyperspectral: AISA Eagle II | | | |
| Spectral range | 400–970 nm | Spatial pixels | 1024 |
| Focal length | 18.1 mm | Spectral resolution | 3.3 nm |
| FOV | 37.7° | IFOV | 0.037° |
| Maximum bands | 488 | Frame rate | 160 frames/s |
| Bit depth | 12 bits | View zenith angle range of multi-angular module (MAM) | 5–55° |
| Ground resolution (cross-track) @1000 m altitude, nadir view | 0.68 m | | |
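
Several of the tabulated values follow from simple geometry, and the short Python sketch below recomputes them as a sanity check. The function names are hypothetical, and the formulas assume flat terrain, a nadir view, and small angles; they are textbook approximations, not the manufacturers' definitions.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_resolution(sampling_interval_s):
    """One-way range spacing between consecutive waveform samples."""
    return 0.5 * C * sampling_interval_s


def frame_camera_gsd(altitude_m, pixel_size_m, focal_length_m):
    """Nadir ground sampling distance of a frame camera (similar triangles)."""
    return altitude_m * pixel_size_m / focal_length_m


def swath_width(altitude_m, fov_deg):
    """Ground swath of a sensor with total field of view `fov_deg`."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg / 2.0))


def footprint_diameter(altitude_m, divergence_rad):
    """Laser footprint diameter at nadir (small-angle approximation)."""
    return altitude_m * divergence_rad


lidar_dz = range_resolution(1e-9)                    # 1 ns sampling interval
ccd_gsd = frame_camera_gsd(1000.0, 6e-6, 0.050)      # 6 um pixels, 50 mm lens
hyper_gsd = swath_width(1000.0, 37.7) / 1024         # 1024 spatial pixels
footprint = footprint_diameter(1000.0, 0.5e-3)       # 0.5 mrad divergence

print(f"LiDAR vertical sample spacing: {lidar_dz:.2f} m")
print(f"CCD ground resolution:         {ccd_gsd:.2f} m")
print(f"Hyperspectral pixel (approx.): {hyper_gsd:.2f} m")
print(f"Laser footprint (derived):     {footprint:.2f} m")
```

With these approximations the 0.15 m vertical resolution and the 0.12 m CCD ground resolution are reproduced. The hyperspectral estimate comes out near 0.67 m, close to but not identical with the tabulated 0.68 m; the difference may come from rounding or from a different definition of cross-track resolution. The footprint diameter is a derived value that does not appear in the table.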

| No. | Site Name with Central Coordinates | Date, Area and Vegetation Type | Objectives |
|---|---|---|---|
| 1 | Genhe, Inner Mongolia (121°35′ E, 50°56′ N) | August 2015; 120 km²; temperate forest | LiDAR and multi-angle hyperspectral fusion |
| 2 | Maoershan, Heilongjiang Prov. (127°36′ E, 45°21′ N) | September 2015; 360 km²; temperate forest | Forest inventory, productivity, carbon accounting |
| 3 | Ejina, Gansu Prov. (101°07′ E, 42°00′ N) | July 2014; 220 km²; dry land forest | Watershed application |
| 4 | Beijing (115°58′ E, 40°27′ N) | September 2013; 80 km²; temperate forest | Urban forestry, biomass |
| 5 | Anyang, Henan Prov. (114°20′ E, 36°07′ N) | June 2013; 50 km²; temperate forest | System calibration and testing, urban forestry, land cover |
| 6 | Yancheng, Jiangsu Prov. (120°49′ E, 32°52′ N) | November 2014; 320 km²; coastal forest and wetland low vegetation | Wetland and coastal region application, plantation forest, land cover, habitat |
| 7 | Huangshan, Anhui Prov. (118°14′ E, 29°32′ N) | September to October 2014; 2900 km²; subtropical forest | Regional scale forest biomass mapping, biodiversity application, change detection |
| 8 | Changshu, Jiangsu Prov. (120°42′ E, 31°40′ N) | August 2013; 60 km²; subtropical forest | Forest parameter estimation, fuel mapping |
| 9 | Puer, Yunnan Prov. (100°56′ E, 22°44′ N) | December 2013 to April 2014; 4060 km²; subtropical and tropical forest | Regional scale forest biomass mapping, productivity, biodiversity application |

| Sensor | Data Products | Potential Applications |
|---|---|---|
| Waveform LiDAR | Waveform and point cloud | Topography, hydrology, watershed |
| | DTM | Forest height, density, volume, biomass estimation |
| | DSM | |
| | CHM | |
| | LiDAR metrics (height, density, structural) | Structural estimation, biodiversity |
| CCD camera | Aerial photo | Forest distribution, gap |
| | Texture | Horizontal information |
| Hyperspectral sensor | Reflectance | Forest composition |
| | Vegetation indices | Health, vigor |
| | Species | Biodiversity |
| | Pigments | |
| With multi-angle module | BRDF | Structural estimation, biodiversity |
| | Structural indices | Forest height, volume, biomass |
| Fusion | Improved species classification with structural information integrated | Carbon accounting |
| | Improved forest parameter estimation with species stratification | Forest inventory and management |
| | Forest management unit segmentation | Biodiversity, ecosystem services |
| | Data extrapolation among sensors | Satellite mission concept development |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Pang, Y.; Li, Z.; Ju, H.; Lu, H.; Jia, W.; Si, L.; Guo, Y.; Liu, Q.; Li, S.; Liu, L.; et al. LiCHy: The CAF’s LiDAR, CCD and Hyperspectral Integrated Airborne Observation System. Remote Sens. 2016, 8, 398. https://doi.org/10.3390/rs8050398
